Numeigen

Uploaded by

Muhammad Mahfud Rosyidi

Eigenvalues

In addition to solving linear equations, another important task of linear algebra is finding eigenvalues. Let $F$ be some operator and $x$ a vector. If $F$ does not change the direction of the vector $x$, then $x$ is an eigenvector of the operator, satisfying the equation

$$F(x) = \lambda x, \tag{1}$$

where $\lambda$ is a real or complex number, the eigenvalue corresponding to the eigenvector. Thus the operator will only change the length of the vector by a factor given by the eigenvalue. If $F$ is a linear operator, $F(ax) = aF(x) = a\lambda x$, and hence $ax$ is an eigenvector, too. Eigenvectors are not uniquely determined, since they can be multiplied by any nonzero constant.

In the following only eigenvalues of matrices are discussed. A matrix can be considered an operator mapping a vector to another one. For eigenvectors this mapping is a mere scaling. Let $A$ be a square matrix. If there is a real or complex number $\lambda$ and a nonzero vector $x$ such that

$$Ax = \lambda x, \tag{2}$$

then $\lambda$ is an eigenvalue of the matrix and $x$ an eigenvector. Equation (2) can also be written as $(A - \lambda I)x = 0$. If the equation is to have a nontrivial solution, we must have

$$\det(A - \lambda I) = 0. \tag{3}$$
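As a quick numerical illustration of equations (2) and (3), the following sketch uses NumPy's `numpy.linalg.eig`. The 2 × 2 matrix is a hypothetical example chosen here for illustration; it does not come from the text.

```python
import numpy as np

# Hypothetical symmetric example matrix (an assumption, not from the text).
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# w holds the eigenvalues, the columns of V the matching eigenvectors.
w, V = np.linalg.eig(A)

dets = []
for lam, x in zip(w, V.T):
    # Equation (2): A x = lambda x
    assert np.allclose(A @ x, lam * x)
    # A nonzero multiple of an eigenvector is an eigenvector, too.
    assert np.allclose(A @ (5.0 * x), lam * (5.0 * x))
    # Equation (3): det(A - lambda I) vanishes up to rounding.
    dets.append(np.linalg.det(A - lam * np.eye(2)))

print("eigenvalues:", w)
print("det(A - lambda I):", dets)
```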

When the determinant is expanded, we get the characteristic polynomial, the zeros of which are the eigenvalues. If the matrix is symmetric and real valued, the eigenvalues are real. Otherwise at least some of them may be complex. Complex eigenvalues of a real matrix always appear as pairs of complex conjugates. For example, the characteristic polynomial of the matrix

$$\begin{pmatrix} 1 & 1 \\ 4 & 1 \end{pmatrix}$$

is

$$\det \begin{pmatrix} 1-\lambda & 1 \\ 4 & 1-\lambda \end{pmatrix} = \lambda^2 - 2\lambda - 3.$$
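The characteristic polynomial of this small example can be cross-checked numerically. The sketch below relies on `numpy.poly`, which returns the coefficients of the characteristic polynomial when given a square matrix, and on `numpy.roots`; it is only a check of this 2 × 2 case, not a recommended way to compute eigenvalues.

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [4.0, 1.0]])

# Coefficients of det(lambda*I - A), highest power first.
coeffs = np.poly(A)

# Zeros of the characteristic polynomial.
zeros = np.roots(coeffs)

print("coefficients:", coeffs)
print("zeros:", zeros)
```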

The zeros of this are the eigenvalues $\lambda_1 = 3$ and $\lambda_2 = -1$. Finding eigenvalues using the characteristic polynomial is very laborious if the matrix is big. Even determining the coefficients of the equation is difficult. The method is not suitable for numerical calculations.

To find eigenvalues the matrix must be transformed to a more suitable form. Gaussian elimination would transform it into a triangular matrix, but unfortunately the eigenvalues are not conserved in the transform. Another kind of transform, called a similarity transform, will not affect the eigenvalues. Similarity transforms have the form

$$A \to A' = S^{-1} A S,$$

where $S$ is any nonsingular matrix. The matrices $A$ and $A'$ are called similar.

QR decomposition

A commonly used method for finding eigenvalues is known as the QR method. The method is an iteration that repeatedly computes a decomposition of the matrix known as its QR decomposition. The decomposition is obtained in a finite number of steps, and it has some other uses, too. We'll first see how to compute this decomposition. The QR decomposition of a matrix $A$ is $A = QR$, where $Q$ is an orthogonal matrix and $R$ an upper triangular matrix. This decomposition is possible for all matrices. There are several methods for finding the decomposition:

1) Householder transform
2) Givens rotations
3) Gram-Schmidt orthogonalisation
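Before any decomposition is built by hand, the defining properties can be checked with a library routine. The sketch below uses `numpy.linalg.qr` on the 2 × 2 matrix of the earlier example. Which of the methods listed above the library uses internally is not assumed here, and the signs of its factors may differ from a hand calculation.

```python
import numpy as np

A = np.array([[1.0, 1.0],
              [4.0, 1.0]])

# Library QR decomposition: A = Q R.
Q, R = np.linalg.qr(A)

print(Q @ R)     # reproduces A up to rounding
print(Q.T @ Q)   # identity matrix up to rounding, so Q is orthogonal
print(R)         # upper triangular factor
```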

In the following we discuss the first two methods with examples. They will probably be easier to understand than the formal algorithm.

Householder transform

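The idea of the Householder transform is to find a set of transforms that will make all elements in one column below the diagonal vanish. Assume that we have to decompose a matrix

$$A = \begin{pmatrix} 3 & 2 & 1 \\ 1 & 1 & 2 \\ 2 & 1 & 3 \end{pmatrix}.$$

We begin by taking the first column of this matrix,

$$x_1 = a(:,1) = \begin{pmatrix} 3 \\ 1 \\ 2 \end{pmatrix},$$

and compute the vector

$$u_1 = x_1 - \|x_1\| \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.7416574 \\ 1 \\ 2 \end{pmatrix}.$$

This is used to create a Householder transformation

$$P_1 = I - 2\,\frac{u_1 u_1^T}{\|u_1\|^2} = \begin{pmatrix} 0.8017837 & 0.2672612 & 0.5345225 \\ 0.2672612 & 0.6396433 & -0.7207135 \\ 0.5345225 & -0.7207135 & -0.4414270 \end{pmatrix}.$$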

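It can be shown that this is an orthogonal matrix. It is easy to see this by calculating the scalar product of any two different columns. The products are zero and each column has unit length, and thus the column vectors of the matrix are orthonormal. When the original matrix is multiplied by this transform the result is a matrix with zeros in the first column below the diagonal:

$$A_1 = P_1 A = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4534522 & -0.6155927 \\ 0 & -0.0930955 & -2.2311854 \end{pmatrix}.$$

Then we use the second column of $A_1$ to create a vector

$$x_2 = a_1(2:3, 2) = \begin{pmatrix} 0.4534522 \\ -0.0930955 \end{pmatrix},$$

from which

$$u_2 = x_2 - \|x_2\| \begin{pmatrix} 1 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.0094578 \\ -0.0930955 \end{pmatrix}.$$

This will give the second transformation matrix, in which the $2 \times 2$ Householder matrix is embedded in the lower right corner of an identity matrix:

$$P_2 = \begin{pmatrix} 1 & 0 \\ 0 & I - 2\,\dfrac{u_2 u_2^T}{\|u_2\|^2} \end{pmatrix} = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 0.9795688 & -0.2011093 \\ 0 & -0.2011093 & -0.9795688 \end{pmatrix}.$$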

The product of $A_1$ and the transformation matrix will be a matrix with zeros in the second column below the diagonal:

$$A_2 = P_2 A_1 = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4629100 & -0.1543033 \\ 0 & 0 & 2.3094011 \end{pmatrix}.$$

Thus the matrix has been transformed to an upper triangular matrix. If the matrix is bigger, repeat the same procedure for each column until all the elements below the diagonal vanish. The matrices of the decomposition are now obtained as

$$Q = P_1 P_2 = \begin{pmatrix} 0.8017837 & 0.1543033 & -0.5773503 \\ 0.2672612 & 0.7715167 & 0.5773503 \\ 0.5345225 & -0.6172134 & 0.5773503 \end{pmatrix},$$

$$R = A_2 = P_2 P_1 A = \begin{pmatrix} 3.7416574 & 2.4053512 & 2.9398737 \\ 0 & 0.4629100 & -0.1543033 \\ 0 & 0 & 2.3094011 \end{pmatrix}.$$

The matrix $R$ is in fact the $A_k$ calculated in the last transformation; thus the original matrix $A$ is not needed. If memory must be saved, each of the matrices $A_i$ can be stored in the area of the previous one. Also, there is no need to keep the earlier matrices $P_i$, but $P_1$ will be used as the initial value of $Q$, and at each step $Q$ is multiplied by the new transformation matrix $P_i$. As a check, we can calculate the product of the factors of the decomposition to see that we will restore the original matrix:

$$QR = \begin{pmatrix} 3 & 2 & 1 \\ 1 & 1 & 2 \\ 2 & 1 & 3 \end{pmatrix}.$$

Orthogonality of the matrix $Q$ can be seen e.g. by calculating the product $QQ^T$:

$$QQ^T = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix}.$$

In the general case the matrices of the decomposition are

$$Q = P_1 P_2 \cdots P_n, \qquad R = P_n \cdots P_2 P_1 A.$$
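The column-by-column procedure of the worked example can be sketched in a few lines of NumPy. The function name `householder_qr` is hypothetical, and the sketch assumes a square real matrix. It uses $u = x - \|x\| e_1$ exactly as in the example. Production codes usually choose the sign in this formula so as to avoid cancellation, which this sketch does not attempt.

```python
import numpy as np

def householder_qr(A):
    """QR decomposition of a square matrix by Householder transforms."""
    R = np.array(A, dtype=float)
    n = R.shape[0]
    Q = np.eye(n)
    for k in range(n - 1):
        x = R[k:, k]                      # column k from the diagonal down
        u = x.copy()
        u[0] -= np.linalg.norm(x)         # u = x - ||x|| e_1
        norm_u_sq = u @ u
        if norm_u_sq < 1e-30:             # column is already in the desired form
            continue
        P = np.eye(n)                     # embed the small Householder matrix
        P[k:, k:] -= 2.0 * np.outer(u, u) / norm_u_sq
        R = P @ R                         # R = P_k ... P_1 A
        Q = Q @ P                         # Q = P_1 ... P_k
    return Q, R

A = np.array([[3.0, 2.0, 1.0],
              [1.0, 1.0, 2.0],
              [2.0, 1.0, 3.0]])
Q, R = householder_qr(A)
print(np.round(R, 7))
print(np.round(Q @ R, 7))
```

Overwriting `R` in place of the previous matrix and accumulating `Q` as a running product mirrors the remark above that the earlier matrices need not be stored.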

Givens rotations

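Another commonly used method is based on Givens rotation matrices:

$$P_{kl}(\theta) = \begin{pmatrix} 1 & & & & & & \\ & \ddots & & & & & \\ & & \cos\theta & \cdots & \sin\theta & & \\ & & \vdots & \ddots & \vdots & & \\ & & -\sin\theta & \cdots & \cos\theta & & \\ & & & & & \ddots & \\ & & & & & & 1 \end{pmatrix}$$

Here the entries $\cos\theta$ are in positions $(k,k)$ and $(l,l)$ and the entries $\pm\sin\theta$ in positions $(k,l)$ and $(l,k)$; all other entries equal those of the identity matrix. This is an orthogonal matrix.

Finding the eigenvalues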

Eigenvalues can be found by applying the QR algorithm iteratively; it is based on the QR decomposition described above. If we started with the original matrix, the task would be computationally very time consuming. Therefore we start by transforming the matrix to a more suitable form. A square matrix is in block triangular form if it is

$$\begin{pmatrix} T_{11} & T_{12} & T_{13} & \cdots & T_{1n} \\ 0 & T_{22} & T_{23} & \cdots & T_{2n} \\ 0 & 0 & \ddots & & \vdots \\ 0 & 0 & 0 & \cdots & T_{nn} \end{pmatrix},$$

where the diagonal submatrices $T_{ii}$ are square matrices. It can be shown that the eigenvalues of such a matrix are the eigenvalues of the diagonal blocks $T_{ii}$.
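The statement about diagonal blocks can be illustrated numerically. In the sketch below the blocks `T11`, `T12` and `T22` are hypothetical values chosen for illustration, with real eigenvalues so that sorting is unambiguous.

```python
import numpy as np

# Hypothetical blocks (assumptions, not taken from the text).
T11 = np.array([[2.0, 1.0], [1.0, 2.0]])    # eigenvalues 1 and 3
T22 = np.array([[6.0, 1.0], [2.0, 7.0]])    # eigenvalues 5 and 8
T12 = np.array([[9.0, -4.0], [0.5, 3.0]])   # arbitrary off-diagonal block

# Block upper triangular matrix with a zero block below the diagonal.
T = np.block([[T11, T12],
              [np.zeros((2, 2)), T22]])

eig_full = np.sort(np.linalg.eigvals(T).real)
eig_blocks = np.sort(np.concatenate([np.linalg.eigvals(T11),
                                     np.linalg.eigvals(T22)]).real)

print(eig_full)
print(eig_blocks)
```

The off-diagonal block `T12` can be changed freely without affecting the printed eigenvalues.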

If the matrix is a diagonal or triangular matrix, the eigenvalues are the diagonal elements. If such a form can be found, the problem is solved. Usually such a form cannot be obtained by a finite number of similarity transformations. If the original matrix is symmetric, it can be transformed to a tridiagonal form without affecting its eigenvalues. In the case of a general matrix the result is a Hessenberg matrix, which has the form

$$H = \begin{pmatrix} x & x & x & x & x \\ x & x & x & x & x \\ 0 & x & x & x & x \\ 0 & 0 & x & x & x \\ 0 & 0 & 0 & x & x \end{pmatrix}$$

The transformations required can be accomplished with Householder transforms or Givens rotations. The method is now slightly modified so that the elements immediately below the diagonal are not zeroed.

Transformation using the Householder transforms

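As a first example, consider a symmetric matrix

$$A = \begin{pmatrix} 4 & 3 & 2 & 1 \\ 3 & 4 & -1 & 2 \\ 2 & -1 & 1 & -2 \\ 1 & 2 & -2 & 2 \end{pmatrix}.$$

We begin to transform this using the Householder transform. Now we construct a vector $x_1$ by taking only the elements of the first column that are below the diagonal:

$$x_1 = \begin{pmatrix} 3 \\ 2 \\ 1 \end{pmatrix}.$$

Using these we form the vector

$$u_1 = x_1 - \|x_1\| \begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} -0.7416574 \\ 2 \\ 1 \end{pmatrix},$$

and from this the matrix

$$p_1 = I - 2\,\frac{u_1 u_1^T}{\|u_1\|^2} = \begin{pmatrix} 0.8017837 & 0.5345225 & 0.2672612 \\ 0.5345225 & -0.4414270 & -0.7207135 \\ 0.2672612 & -0.7207135 & 0.6396433 \end{pmatrix},$$

and finally the Householder transformation matrix

$$P_1 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 0.8017837 & 0.5345225 & 0.2672612 \\ 0 & 0.5345225 & -0.4414270 & -0.7207135 \\ 0 & 0.2672612 & -0.7207135 & 0.6396433 \end{pmatrix}.$$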

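Now we can make the similarity transform of the matrix $A$. The transformation matrix is symmetric, so there is no need for transposing it:

$$A_1 = P_1 A P_1 = \begin{pmatrix} 4 & 3.7416574 & 0 & 0 \\ 3.7416574 & 2.4285714 & 1.2977396 & 2.1188066 \\ 0 & 1.2977396 & 0.0349563 & 0.2952113 \\ 0 & 2.1188066 & 0.2952113 & 4.5364723 \end{pmatrix}.$$

The second column is handled in the same way. First we form the vector $x_2$,

$$x_2 = \begin{pmatrix} 1.2977396 \\ 2.1188066 \end{pmatrix},$$

and from this

$$u_2 = x_2 - \|x_2\| \begin{pmatrix} 1 \\ 0 \end{pmatrix} = \begin{pmatrix} -1.1869072 \\ 2.1188066 \end{pmatrix}$$

and

$$p_2 = \begin{pmatrix} 0.5223034 & 0.8527597 \\ 0.8527597 & -0.5223034 \end{pmatrix},$$

and the final transformation matrix

$$P_2 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0.5223034 & 0.8527597 \\ 0 & 0 & 0.8527597 & -0.5223034 \end{pmatrix}.$$

Making the transform we get

$$A_2 = P_2 A_1 P_2 = \begin{pmatrix} 4 & 3.7416574 & 0 & 0 \\ 3.7416574 & 2.4285714 & 2.4846467 & 0 \\ 0 & 2.4846467 & 3.5714286 & -1.8708287 \\ 0 & 0 & -1.8708287 & 1 \end{pmatrix}.$$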

Thus we obtained a tridiagonal matrix, as we should in the case of a symmetric matrix.
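The reduction carried out above can be sketched as a short NumPy routine. The function name `householder_hessenberg` is hypothetical. The entries of the symmetric matrix, including their signs, are an assumption: they are the values that reproduce the intermediate results quoted in the text. The same routine applied to a general square matrix yields a Hessenberg matrix instead of a tridiagonal one.

```python
import numpy as np

def householder_hessenberg(A):
    """Reduce a square matrix to Hessenberg form by similarity transforms."""
    H = np.array(A, dtype=float)
    n = H.shape[0]
    for k in range(n - 2):
        x = H[k + 1:, k]                  # elements below the diagonal
        u = x.copy()
        u[0] -= np.linalg.norm(x)         # u = x - ||x|| e_1
        norm_u_sq = u @ u
        if norm_u_sq < 1e-30:             # nothing to eliminate in this column
            continue
        P = np.eye(n)
        P[k + 1:, k + 1:] -= 2.0 * np.outer(u, u) / norm_u_sq
        H = P @ H @ P                     # P is symmetric and orthogonal
    return H

# Symmetric example; the signs are an assumption consistent with the text.
A = np.array([[4.0, 3.0, 2.0, 1.0],
              [3.0, 4.0, -1.0, 2.0],
              [2.0, -1.0, 1.0, -2.0],
              [1.0, 2.0, -2.0, 2.0]])

H = householder_hessenberg(A)
print(np.round(H, 7))
```

Because each step is a similarity transform, `np.linalg.eigvalsh(A)` and the eigenvalues of `H` agree up to rounding.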

As another example, we take an asymmetric matrix:

$$A = \begin{pmatrix} 4 & 2 & 3 & 1 \\ 3 & 4 & -2 & 1 \\ 2 & -1 & 1 & -2 \\ 1 & 2 & -2 & 2 \end{pmatrix}.$$

The transformation proceeds as before, and the result is

$$A_2 = \begin{pmatrix} 4 & 3.4743961 & -1.3039935 & -0.4776738 \\ 3.7416574 & 1.7857143 & 2.9123481 & 1.1216168 \\ 0 & 2.0934787 & 4.2422252 & -1.0307759 \\ 0 & 0 & -1.2980371 & 0.9720605 \end{pmatrix},$$

which is of the Hessenberg form.

QR-algorithm

We now have a simpler tridiagonal or Hessenberg matrix, which still has the same eigenvalues as the original matrix. Let this transformed matrix be $H$. Then we can begin to search for the eigenvalues. This is done by iteration. As an initial value, take $A_1 = H$. Then repeat the following steps:

1) Find the QR decomposition $Q_i R_i = A_i$.
2) Calculate a new matrix $A_{i+1} = R_i Q_i$.

The matrix $Q$ of the QR decomposition is orthogonal and $A = QR$, and so $R = Q^T A$. Therefore $RQ = Q^T A Q$ is a similarity transform that will conserve the eigenvalues. The sequence of matrices $A_i$ converges towards an upper triangular or block triangular matrix, from which the eigenvalues can be picked up. In the last example the limiting matrix after 50 iterations is

$$\begin{pmatrix} 7.0363389 & 0.7523758 & 0.7356716 & 0.3802631 \\ 4.265 \times 10^{-8} & 4.9650342 & 0.8892339 & 0.4538061 \\ 0 & 0 & -1.9732687 & 1.3234202 \\ 0 & 0 & 0 & 0.9718955 \end{pmatrix}$$

The diagonal elements are now the eigenvalues. If the eigenvalues are complex numbers, the matrix is a block triangular matrix, and the eigenvalues are the eigenvalues of the diagonal $2 \times 2$ submatrices.
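The two-step iteration can be sketched as follows. The function name `qr_algorithm` is hypothetical and `numpy.linalg.qr` stands in for the decomposition step. For brevity the sketch starts from the asymmetric example matrix itself rather than from its Hessenberg form, which leaves the eigenvalues unchanged. The signs of the matrix entries are an assumption consistent with the Hessenberg form quoted earlier. No shifts or deflation are used, so this is an illustration rather than a practical solver.

```python
import numpy as np

def qr_algorithm(H, iterations=50):
    """Unshifted QR iteration: A_{i+1} = R_i Q_i with Q_i R_i = A_i."""
    A_i = np.array(H, dtype=float)
    for _ in range(iterations):
        Q, R = np.linalg.qr(A_i)   # step 1: QR decomposition of A_i
        A_i = R @ Q                # step 2: similarity transform Q^T A_i Q
    return A_i

# Asymmetric example; the signs are an assumption consistent with the text.
A = np.array([[4.0, 2.0, 3.0, 1.0],
              [3.0, 4.0, -2.0, 1.0],
              [2.0, -1.0, 1.0, -2.0],
              [1.0, 2.0, -2.0, 2.0]])

T = qr_algorithm(A, iterations=50)
eigenvalues = np.diag(T)

print(np.round(T, 7))
print(eigenvalues)
```

The signs of the entries above the diagonal of `T` depend on the sign convention of the QR routine, so only the diagonal should be compared with other results.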

You might also like

- Problems & Solutions On Electric DrivesDocument11 pagesProblems & Solutions On Electric DrivesMohammad Umar Rehman67% (18)

- Introduction To Partial Differential Equations in Fluid Mechanics: Their Types and Some Analytical SolutionsDocument20 pagesIntroduction To Partial Differential Equations in Fluid Mechanics: Their Types and Some Analytical Solutions刘伟轩100% (1)

- Understanding Analysis - Solution of Exercise ProblemsDocument272 pagesUnderstanding Analysis - Solution of Exercise Problemsgt0084e1No ratings yet

- Solution Real Analysis Folland Ch6Document24 pagesSolution Real Analysis Folland Ch6ks0% (1)

- HW 4Document4 pagesHW 4api-379689650% (2)

- Integrals of Bessel FunctionsDocument13 pagesIntegrals of Bessel FunctionsCleber Pereira de SousaNo ratings yet

- Lecture 08 - Spectra and Perturbation Theory (Schuller's Lectures On Quantum Theory)Document13 pagesLecture 08 - Spectra and Perturbation Theory (Schuller's Lectures On Quantum Theory)Simon Rea100% (1)

- (ADVANCE ABSTRACT ALGEBRA) Pankaj Kumar and Nawneet HoodaDocument82 pages(ADVANCE ABSTRACT ALGEBRA) Pankaj Kumar and Nawneet HoodaAnonymous RVVCJlDU6No ratings yet

- Teaching Dossier: Andrew W. H. HouseDocument9 pagesTeaching Dossier: Andrew W. H. HouseMohammad Umar RehmanNo ratings yet

- Solution Manual - Control Systems by GopalDocument0 pagesSolution Manual - Control Systems by Gopalsaggu199185% (27)

- Bowling Performance TaskDocument2 pagesBowling Performance Taskapi-250392239No ratings yet

- KREYSZIG 10E (update版) -Errata CH1~CH18 小本 PDFDocument18 pagesKREYSZIG 10E (update版) -Errata CH1~CH18 小本 PDF李傑No ratings yet

- Math 138 Functional Analysis Notes PDFDocument159 pagesMath 138 Functional Analysis Notes PDFAidan HolwerdaNo ratings yet

- MATH2045: Vector Calculus & Complex Variable TheoryDocument50 pagesMATH2045: Vector Calculus & Complex Variable TheoryAnonymous 8nJXGPKnuW100% (2)

- Existence Results For Gradient Elliptic Systems With Nonlinear Boundary Conditions - Julian Fernandez BONDERDocument27 pagesExistence Results For Gradient Elliptic Systems With Nonlinear Boundary Conditions - Julian Fernandez BONDERJefferson Johannes Roth FilhoNo ratings yet

- Oscillation of Nonlinear Neutral Delay Differential Equations PDFDocument20 pagesOscillation of Nonlinear Neutral Delay Differential Equations PDFKulin DaveNo ratings yet

- Hadamard TransformDocument4 pagesHadamard TransformLucas Gallindo100% (1)

- ADOMIAN Decomposition Method For Solvin1Document18 pagesADOMIAN Decomposition Method For Solvin1Susi SusilowatiNo ratings yet

- Solution Set 3Document11 pagesSolution Set 3HaseebAhmadNo ratings yet

- Basel Problem ProofDocument7 pagesBasel Problem ProofCody JohnsonNo ratings yet

- Introduction To SupersymmetryDocument190 pagesIntroduction To Supersymmetryweiss13No ratings yet

- Mathtut2 PDFDocument120 pagesMathtut2 PDFChi PA JucaNo ratings yet

- 8.1 Convergence of Infinite ProductsDocument25 pages8.1 Convergence of Infinite ProductsSanjeev Shukla100% (1)

- Sample ProblemsDocument166 pagesSample ProblemsCeejhay PanganibanNo ratings yet

- Fact Sheet Functional AnalysisDocument9 pagesFact Sheet Functional AnalysisPete Jacopo Belbo CayaNo ratings yet

- Ee263 Ps1 SolDocument11 pagesEe263 Ps1 SolMorokot AngelaNo ratings yet

- Midterm Exam SolutionsDocument26 pagesMidterm Exam SolutionsShelaRamos100% (1)

- 263 HomeworkDocument153 pages263 HomeworkHimanshu Saikia JNo ratings yet

- 3.1 Least-Squares ProblemsDocument28 pages3.1 Least-Squares ProblemsGabo GarcíaNo ratings yet

- Numerical Linear Algebra With Applications SyllabusDocument2 pagesNumerical Linear Algebra With Applications SyllabusSankalp ChauhanNo ratings yet

- Mathematica PDFDocument3 pagesMathematica PDFAsanka AmarasingheNo ratings yet

- Vector and Matrix NormDocument17 pagesVector and Matrix NormpaivensolidsnakeNo ratings yet

- Generating FunctionsDocument8 pagesGenerating FunctionsRafael M. Rubio100% (1)

- Ordinary Differential Equations: y Xyyy yDocument15 pagesOrdinary Differential Equations: y Xyyy yAdrian GavrilaNo ratings yet

- Analysis On Manifold Via Laplacian CanzaniDocument114 pagesAnalysis On Manifold Via Laplacian CanzaniACCNo ratings yet

- Hilbert-Spaces MATH231B Appendix1of1Document28 pagesHilbert-Spaces MATH231B Appendix1of1Chernet TugeNo ratings yet

- 116 hw1Document5 pages116 hw1thermopolis3012No ratings yet

- Analysis2011 PDFDocument235 pagesAnalysis2011 PDFMirica Mihai AntonioNo ratings yet

- Expander Graphs: 4.1 Measures of ExpansionDocument51 pagesExpander Graphs: 4.1 Measures of ExpansionJasen liNo ratings yet

- Laplace EquationDocument4 pagesLaplace EquationRizwan Samor100% (1)

- The History of The Basel ProblemDocument13 pagesThe History of The Basel ProblemJohn SmithNo ratings yet

- Sobolev SpaceDocument15 pagesSobolev Spaceconmec.crplNo ratings yet

- Assignment 1Document6 pagesAssignment 1Matkabhai Bhai100% (1)

- Differential Geometry Syed Hassan Waqas PDFDocument69 pagesDifferential Geometry Syed Hassan Waqas PDFAHSAN JUTT100% (1)

- 1 S5 PDFDocument85 pages1 S5 PDF너굴No ratings yet

- Q A X X: Definition of Quadratic FormsDocument8 pagesQ A X X: Definition of Quadratic FormshodanitNo ratings yet

- Sylvester Criterion For Positive DefinitenessDocument4 pagesSylvester Criterion For Positive DefinitenessArlette100% (1)

- Histogram EqualizationDocument38 pagesHistogram Equalizationmanjudhas_5100% (1)

- Hopf Bifurcation Normal FormDocument3 pagesHopf Bifurcation Normal FormJohnv13100% (2)

- CHAPTER 6 NUmerical DifferentiationDocument6 pagesCHAPTER 6 NUmerical DifferentiationEdbert TulipasNo ratings yet

- Functions of Several Variables PDFDocument21 pagesFunctions of Several Variables PDFsuntararaajanNo ratings yet

- Linear Dynamical Systems - Course ReaderDocument414 pagesLinear Dynamical Systems - Course ReaderTraderCat SolarisNo ratings yet

- MargulisDocument7 pagesMargulisqbeecNo ratings yet

- Delay Differential EquationsDocument42 pagesDelay Differential EquationsPonnammal KuppusamyNo ratings yet

- Quantum Field Theory Solution To Exercise Sheet No. 9: Exercise 9.1: One Loop Renormalization of QED A)Document20 pagesQuantum Field Theory Solution To Exercise Sheet No. 9: Exercise 9.1: One Loop Renormalization of QED A)julian fischerNo ratings yet

- Module 2 Vector Spaces FundamentalsDocument33 pagesModule 2 Vector Spaces FundamentalsG MahendraNo ratings yet

- LusinDocument6 pagesLusinM ShahbazNo ratings yet

- Center Manifold ReductionDocument8 pagesCenter Manifold Reductionsunoval2013100% (2)

- Real Analysis - Homework Solutions: Chris Monico, May 2, 2013Document37 pagesReal Analysis - Homework Solutions: Chris Monico, May 2, 2013gustavoNo ratings yet

- Putnam Linear AlgebraDocument6 pagesPutnam Linear AlgebrainfinitesimalnexusNo ratings yet

- Engineering Optimization: An Introduction with Metaheuristic ApplicationsFrom EverandEngineering Optimization: An Introduction with Metaheuristic ApplicationsNo ratings yet

- BrakingDocument19 pagesBrakingMohammad Umar RehmanNo ratings yet

- Drives SolutionsDocument4 pagesDrives SolutionsMohammad Umar RehmanNo ratings yet

- Power Distribution IITKDocument5 pagesPower Distribution IITKMohammad Umar RehmanNo ratings yet

- EEE-282N - S&S Quiz, U-1,2 - SolutionsDocument2 pagesEEE-282N - S&S Quiz, U-1,2 - SolutionsMohammad Umar RehmanNo ratings yet

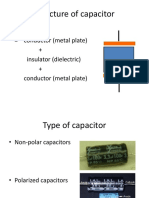

- Structure of Capacitor: - Capacitor Conductor (Metal Plate) + Insulator (Dielectric) + Conductor (Metal Plate)Document11 pagesStructure of Capacitor: - Capacitor Conductor (Metal Plate) + Insulator (Dielectric) + Conductor (Metal Plate)Mohammad Umar RehmanNo ratings yet

- Numerical Methods in ElectromagneticsDocument9 pagesNumerical Methods in ElectromagneticsMohammad Umar Rehman100% (1)

- Matrix YMDocument17 pagesMatrix YMOhwil100% (3)

- Ee 6365 Electrical Engineering Laboratory Manual: (Type Text)Document59 pagesEe 6365 Electrical Engineering Laboratory Manual: (Type Text)Mohammad Umar RehmanNo ratings yet

- Fem Notes Me623Document182 pagesFem Notes Me623Hirday SidhuNo ratings yet

- TOS Gen - Math2022Document4 pagesTOS Gen - Math2022LAARNI REBONGNo ratings yet

- Microsoft Word - 01 02 Circles 2Document21 pagesMicrosoft Word - 01 02 Circles 2madhuNo ratings yet

- Discrete Distributions: Bernoulli Random VariableDocument27 pagesDiscrete Distributions: Bernoulli Random VariableThapelo SebolaiNo ratings yet

- Formula Derivation: Stefan'S LawDocument7 pagesFormula Derivation: Stefan'S LawRitesh BhoiteNo ratings yet

- Water Is Flowing Into A Spherical Tank 24 M in Diameter at A Rate of 2 CuDocument18 pagesWater Is Flowing Into A Spherical Tank 24 M in Diameter at A Rate of 2 CuNicole SesbreñoNo ratings yet

- Gce Ms Mathematics Jan10 eDocument35 pagesGce Ms Mathematics Jan10 eJon RoweNo ratings yet

- Simplifying and Evaluating Algebraic Expressions: Looking BackDocument27 pagesSimplifying and Evaluating Algebraic Expressions: Looking BackthebtcircleNo ratings yet

- Calculus NotesDocument7 pagesCalculus Notesjistoni tanNo ratings yet

- Mathematics With A Calculator PDFDocument126 pagesMathematics With A Calculator PDFJoffrey MontallaNo ratings yet

- Ejercicios Transformaciones ElementalesDocument2 pagesEjercicios Transformaciones ElementalesMarco Alquicira BalderasNo ratings yet

- M - SC - Physics - 345 11 - Classical MechanicsDocument290 pagesM - SC - Physics - 345 11 - Classical MechanicsMubashar100% (1)

- Lab Work 1,2Document18 pagesLab Work 1,2Shehzad khanNo ratings yet

- 9.4 MCQ+FRQDocument14 pages9.4 MCQ+FRQmalikdoo5alNo ratings yet

- Quantum Formula SheetDocument47 pagesQuantum Formula SheetShailja Pujani100% (1)

- Work Sheet 03 - Edexcel - Pure 1Document5 pagesWork Sheet 03 - Edexcel - Pure 1Thaviksha BulathsinhalaNo ratings yet

- Eigenvalues and Eigenvectors - MATLAB EigDocument10 pagesEigenvalues and Eigenvectors - MATLAB EigAkash RamannNo ratings yet

- CCP Lab Manual Vtu 1semDocument20 pagesCCP Lab Manual Vtu 1semChetan Hiremath0% (1)

- Control of Mobile Robots: Linear SystemsDocument65 pagesControl of Mobile Robots: Linear Systemssonti11No ratings yet

- Differential Equations: A Dynamical Systems Approach To Theory and PracticeDocument553 pagesDifferential Equations: A Dynamical Systems Approach To Theory and PracticeNoe MartinezNo ratings yet

- STAT 5383 - Lab 1: Exploratory Tools For Time Series AnalysisDocument7 pagesSTAT 5383 - Lab 1: Exploratory Tools For Time Series AnalysisHofidisNo ratings yet

- X y X Y: Conic Sections/ConicsDocument2 pagesX y X Y: Conic Sections/ConicsVlad VizcondeNo ratings yet

- FINAL BOOK For Cal1Document260 pagesFINAL BOOK For Cal1Abdul Halil AbdullahNo ratings yet

- Kiran Kedlaya - A Is Less Than BDocument37 pagesKiran Kedlaya - A Is Less Than BDijkschneierNo ratings yet

- Littoral Mathematics Teachers Association: Multiple Choice Question Paper Instructions To CandidatesDocument6 pagesLittoral Mathematics Teachers Association: Multiple Choice Question Paper Instructions To CandidatesAlphonsius WongNo ratings yet

- The Matrix Cook BookDocument71 pagesThe Matrix Cook BookChristien MarieNo ratings yet

- All Chapters NoteDocument272 pagesAll Chapters NoteMike BelayNo ratings yet

- Optimization Toolbox: User's GuideDocument305 pagesOptimization Toolbox: User's GuideDivungoNo ratings yet

- 65 (B) Mathematics For ViDocument23 pages65 (B) Mathematics For Vi18-Mir Rayhan AlliNo ratings yet

- Geometri Ruang File 1Document4 pagesGeometri Ruang File 1Muhammad Isna SumaatmajaNo ratings yet