© 2008 IBM Corporation

Three courses of DataStage, with a side order of Teradata

Stewart Hanna

Product Manager


Information Management Software

Agenda

Platform Overview

Appetizer - Productivity

First Course - Extensibility

Second Course - Scalability

Dessert - Teradata

Platform Overview


Accelerate to the Next Level

Unlocking the Business Value of Information for Competitive Advantage

[Figure: business value plotted against maturity of information use]


An Industry Unique Information Platform

Simplify the delivery of Trusted Information

Accelerate client value

Promote collaboration

Mitigate risk

Modular but Integrated

Scalable Project to Enterprise

The IBM InfoSphere Vision


IBM InfoSphere Information Server

Delivering information you can trust


InfoSphere DataStage

Provides codeless visual design of data flows with hundreds of built-in transformation functions

Optimized reuse of integration objects

Supports batch & real-time operations

Produces reusable components that can be shared across projects

Complete ETL functionality with metadata-driven productivity

Supports team-based development and collaboration

Provides integration from across the broadest range of sources

Transform

Transform and aggregate any volume of information in batch or real time through visually designed logic

Hundreds of Built-in Transformation Functions

[Figure: InfoSphere DataStage, used by Architects and Developers, within the Deliver function]

Appetizer - Productivity


DataStage Designer

Complete development environment

Graphical, drag-and-drop, top-down design metaphor

Develop sequentially, deploy in parallel

Component-based architecture

Reuse capabilities


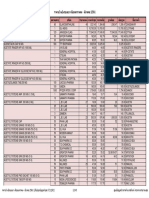

Transformation Components

Over 60 pre-built components available, including:

- Files

- Database

- Lookup

- Sort, Aggregation, Transformer

- Pivot, CDC

- Join, Merge

- Filter, Funnel, Switch, Modify

- Remove Duplicates

- Restructure stages

[Figure: sample of stages available]


Some Popular Stages

Usual ETL Sources & Targets:

- RDBMS, Sequential File, Data Set

Combining Data:

- Lookup, Joins, Merge

- Aggregator

Transform Data:

- Transformer, Remove Duplicates

Ancillary:

- Row Generator, Peek, Sort


Deployment

Easy and integrated job movement from one environment to another

A deployment package can be created with any first-class objects from the repository. New objects can be added to an existing package.

The description of this package is stored in the metadata repository.

Associated objects that live outside DataStage (DS) and QualityStage (QS), such as files and scripts, can also be added.

Audit and Security Control


Role-Based Tools with Integrated Metadata

Simplify Integration

Increase trust and confidence in information

Increase compliance with standards

Facilitate change management & reuse

[Figure: Design and Operational metadata shared by Developers, Subject Matter Experts, Data Analysts, Business Users, Architects, and DBAs]

Unified Metadata Management


Business analysts and IT collaborate in context to create project specification

Leverages source analysis, target models, and metadata to facilitate mapping process

Auto-generation of data transformation jobs & reports

Generate historical documentation for tracking

Supports data governance

Flexible Reporting

[Figure: a FastTrack specification auto-generates DataStage jobs]

InfoSphere FastTrack

To reduce Costs of Integration Projects through Automation

First Course - Extensibility


Extending DataStage - Defining Your Own Stage Types

Define your own stage type to be integrated into data flow

Stage Types

- Wrapped: specify an OS command or script (existing routines, logic, apps)

- BuildOp: wizard / macro driven development

- Custom: API development

Available to all jobs in the project

All metadata is captured


Building Wrapped Stages

In a nutshell:

You can wrap a legacy executable (binary, Unix command, or shell script) and turn it into a bona fide DataStage stage capable, among other things, of parallel execution, as long as the legacy executable is:

- amenable to data-partition parallelism (no dependencies between rows)

- pipe-safe (can read rows sequentially; no random access to data, e.g., use of fseek())
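
To make these constraints concrete, here is a minimal sketch of the kind of program that would be a reasonable candidate for wrapping. It is written in Python purely for illustration; the comma-delimited row layout and the `transform_row` rule are hypothetical and not taken from the presentation. Each row is handled independently, and the input is only ever read forward.

```python
import io


def transform_row(line: str) -> str:
    """Upper-case the second comma-separated field of one row (hypothetical rule)."""
    fields = line.rstrip("\n").split(",")
    if len(fields) > 1:
        fields[1] = fields[1].upper()
    return ",".join(fields) + "\n"


def run(stream_in, stream_out) -> int:
    """Stream rows from input to output and return the number of rows handled."""
    count = 0
    for line in stream_in:                     # sequential read only, never seek()
        stream_out.write(transform_row(line))  # no state is carried between rows
        count += 1
    return count


# Demonstration with in-memory streams standing in for the pipes.
demo_in = io.StringIO("1,smith\n2,jones\n")
demo_out = io.StringIO()
rows = run(demo_in, demo_out)
print(rows, repr(demo_out.getvalue()))
```

Used as a real command, the same `run` function would be called with `sys.stdin` and `sys.stdout`. Because no row depends on another, any number of copies could run side by side, each on its own partition of the data.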


Building BuildOp Stages

In a nutshell:

The user performs the fun, glamorous tasks: encapsulate business logic in a custom operator

The DataStage wizard called buildop automatically performs the unglamorous, tedious, error-prone tasks: invoke needed header files, build the necessary plumbing for a correct and efficient parallel execution.


Layout interfaces describe what columns the stage:

- needs for its inputs

- creates for its outputs

Two kinds of interfaces: dynamic and static

- Dynamic: adjusts to its inputs automatically

- Static: expects input to contain columns with specific names and types

Custom Stages can be Dynamic or Static

IBM DataStage supplied Stages are dynamic

BuildOp stages are only Static

This is the major difference between BuildOp and Custom Stages
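
The difference between the two kinds of interface can be sketched in a few lines. This is only a conceptual illustration in Python, not DataStage code; the column names, the `REQUIRED` layout, and both stage functions are hypothetical.

```python
from typing import Dict, List

Row = Dict[str, object]

# Hypothetical static layout: exact column names and types the stage insists on.
REQUIRED = {"customer_id": int, "amount": float}


def static_stage(rows: List[Row]) -> List[Row]:
    """Static interface: reject input unless the required columns are present and typed."""
    out = []
    for row in rows:
        for name, typ in REQUIRED.items():
            if not isinstance(row.get(name), typ):
                raise TypeError(f"column {name!r} must be of type {typ.__name__}")
        out.append({"customer_id": row["customer_id"], "amount": row["amount"] * 2})
    return out


def dynamic_stage(rows: List[Row]) -> List[Row]:
    """Dynamic interface: accept whatever columns arrive and pass them all through."""
    return [dict(row, row_seen=True) for row in rows]


print(static_stage([{"customer_id": 1, "amount": 2.0}]))
print(dynamic_stage([{"anything": "goes"}]))
```

The static version fails fast on an unexpected layout, while the dynamic version adapts to any input, which mirrors the trade-off described above.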

Second Course - Scalability


Scalability is important everywhere

It takes me 4 1/2 hours to wash, dry and fold 3 loads of laundry (1/2 hour for each operation)

Sequential Approach (4 1/2 Hours)

Wash a load, dry the load, fold it, then start the next load

Pipeline Approach (2 1/2 Hours)

Wash a load; when it is done, put it in the dryer, etc.

One load is washing while another load is drying, etc.

Partitioned Parallelism Approach (1 1/2 Hours)

Divide the laundry into different loads (whites, darks, linens)

Work on each piece independently

3 times faster with 3 washing machines; continue with 3 dryers and so on

[Figure: Wash, Dry, and Fold stages with partitioning before Wash and repartitioning between stages. Example groupings: Whites, Darks, Linens; Workclothes, Delicates, Lingerie, Outside Linens; Shirts on Hangers, pants folded]
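
The three durations in the laundry example follow from simple arithmetic, sketched below in Python. The function names are hypothetical, and the partitioned case assumes each set of machines works through its own loads one stage after another without pipelining.

```python
def sequential_hours(loads: int, stages: int, hours_per_stage: float) -> float:
    """Every load goes through every stage, one operation at a time."""
    return loads * stages * hours_per_stage


def pipelined_hours(loads: int, stages: int, hours_per_stage: float) -> float:
    """The first load needs all stages; each further load finishes one step later."""
    return (stages + loads - 1) * hours_per_stage


def partitioned_hours(loads: int, stages: int, hours_per_stage: float, machines: int) -> float:
    """Loads are split across independent sets of machines (ceiling division)."""
    loads_per_machine = -(-loads // machines)
    return loads_per_machine * stages * hours_per_stage


print(sequential_hours(3, 3, 0.5))      # 4.5
print(pipelined_hours(3, 3, 0.5))       # 2.5
print(partitioned_hours(3, 3, 0.5, 3))  # 1.5
```

With 3 loads, 3 operations, and half an hour per operation, this gives 4 1/2, 2 1/2, and 1 1/2 hours respectively.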


Data Partitioning

[Figure: Source Data is split by key range (A-F, G-M, N-T, U-Z); each partition feeds its own Transform on Processor 1 to Processor 4]

Break up big data into partitions

Run one partition on each processor

4X faster on 4 processors; 100X faster on 100 processors

Partitioning is specified per stage, meaning partitioning can change between stages

[Figure: types of partitioning options available]
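
The A-F / G-M / N-T / U-Z split in the figure is a range partition on the first letter of a key. A minimal Python sketch of that idea follows; the function names and the sample names are hypothetical, and this is not how the DataStage engine itself is implemented.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Key ranges taken from the slide; partition index 0..3 maps to Processor 1..4.
RANGES = [("A", "F"), ("G", "M"), ("N", "T"), ("U", "Z")]


def range_partition(last_name: str) -> int:
    """Return the index of the range that contains the name's first letter."""
    first = last_name[0].upper()
    for index, (low, high) in enumerate(RANGES):
        if low <= first <= high:
            return index
    raise ValueError(f"no range covers {last_name!r}")


def partition(names: Iterable[str]) -> Dict[int, List[str]]:
    """Group names by partition index, preserving arrival order in each group."""
    parts: Dict[int, List[str]] = defaultdict(list)
    for name in names:
        parts[range_partition(name)].append(name)
    return dict(parts)


print(partition(["Adams", "Garcia", "Nguyen", "Young", "Baker"]))
```

Each resulting group can then be handed to its own worker. Note that range partitioning only balances the work if the keys are spread evenly across the ranges.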


Data Pipelining

[Figure: archived data flows from Source to Target in chunks of 1,000 records (first through eighth chunk shown, followed by records 9,001 to 100,000,000) and is loaded as it arrives]

Eliminate the write to disk and the read from disk between processes

Start a downstream process while an upstream process is still running.

This eliminates intermediate staging to disk, which is critical for big data.

This also keeps the processors busy.
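
The pipelining idea can be sketched with Python generators: each row moves on to the next stage as soon as it is produced, and nothing is staged in between. This is an analogy only. It runs in a single process, whereas the slide describes separate processes running concurrently, and all names here are hypothetical.

```python
trace = []  # records the order in which the stages touch each row


def extract(n):
    """Upstream stage: produce rows one at a time."""
    for i in range(n):
        trace.append(("extract", i))
        yield {"id": i}


def transform(rows):
    """Middle stage: enrich each row as soon as it arrives."""
    for row in rows:
        yield {**row, "doubled": row["id"] * 2}


def load(rows, target):
    """Downstream stage: consume rows while upstream is still producing."""
    for row in rows:
        trace.append(("load", row["id"]))
        target.append(row)
    return len(target)


target = []
loaded = load(transform(extract(3)), target)
print(loaded, trace)
```

The trace interleaves extract and load events row by row, which shows that the downstream stage starts before the upstream stage has finished.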


Parallel Dataflow

[Figure: Source Data is partitioned once, then flows through Transform, Aggregate, and Load to the Target]

Parallel processing achieved in a data flow

Still limiting

Partitioning remains constant throughout flow

Not realistic for any real jobs

For example, what if transformations are based on customer ID and enrichment is a householding task (i.e., based on post code)?


Parallel Data Flow with Auto Repartitioning

[Figure: Source Data is pipelined through Transform, Aggregate, and Load to the Target. It is partitioned by customer last name (A-F, G-M, N-T, U-Z), then repartitioned by customer postcode and by credit card number between stages]

Record repartitioning occurs automatically

No need to repartition data as you

- add processors

- change hardware architecture

Broad range of partitioning methods

- Entire, hash, modulus, random, round robin, same, DB2 range
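
A few of the partitioning methods named above, and the act of repartitioning on a new key between stages, can be sketched as follows. This is an illustrative Python sketch only. The CRC32-based hash and all function names are assumptions made for the example and do not describe the hashing DataStage actually uses.

```python
import zlib
from itertools import cycle
from typing import Callable, List


def hash_partition(key: str, n: int) -> int:
    """Hash: equal keys always map to the same partition."""
    return zlib.crc32(key.encode("utf-8")) % n


def modulus_partition(key: int, n: int) -> int:
    """Modulus: integer key modulo the number of partitions."""
    return key % n


def round_robin(rows: list, n: int) -> List[list]:
    """Round robin: deal rows out evenly, ignoring their content."""
    parts: List[list] = [[] for _ in range(n)]
    for part, row in zip(cycle(parts), rows):
        part.append(row)
    return parts


def repartition(parts: List[list], key_fn: Callable[[dict], str], n: int) -> List[list]:
    """Redistribute already-partitioned rows by hashing a new key."""
    out: List[list] = [[] for _ in range(n)]
    for part in parts:
        for row in part:
            out[hash_partition(key_fn(row), n)].append(row)
    return out


rows = [
    {"name": "Adams", "postcode": "AB1"},
    {"name": "Baker", "postcode": "CD2"},
    {"name": "Clark", "postcode": "AB1"},
    {"name": "Davis", "postcode": "CD2"},
    {"name": "Evans", "postcode": "AB1"},
]
by_arrival = round_robin(rows, 2)
by_postcode = repartition(by_arrival, lambda r: r["postcode"], 4)
print(by_postcode)
```

After the repartition step, all rows sharing a postcode sit in the same partition, which is what a householding-style aggregation needs regardless of how the rows were distributed upstream.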


Application Assembly: One Dataflow Graph Created With the DataStage GUI

Application Execution: Sequential or Parallel

[Figure: the same dataflow graph, from Source Data to the Data Warehouse target, partitioned by customer last name (A-F, G-M, N-T, U-Z) and repartitioned by customer zip code and credit card number, shown executing three ways: sequential, 4-way parallel, and 128-way parallel on 32 4-way nodes]


Data Set stage: allows you to read data from or write data to a data set. Parallel Extender data sets hide the complexities of handling and storing large collections of records in parallel across the disks of a parallel computer.

File Set stage: allows you to read data from or write data to a file set. It only executes in parallel mode.

Sequential File stage: reads data from or writes data to one or more flat files. It usually executes in parallel, but can be configured to execute sequentially.

External Source stage: allows you to read data that is output from one or more source programs.

External Target stage: allows you to write data to one or more target programs.

Robust mechanisms for handling big data


Parallel Data Sets

[Figure: what you see is one persistent data set object, x.ds, used by Component A; what gets processed is multiple data files per partition of x.ds, with an instance of Component A running on each of CPU 1 to CPU 4]

Hides Complexity of Handling Big Data

Replicates RDBMS Support Outside of Database

Consists of Partitioned Data and Schema

Maintains Parallel Processing even when Data is staged for a checkpoint, point of reference or persisted between jobs.


DataStage SAS Stages

Parallel SAS Data Set stage:

Allows you to read data from or write data to a parallel SAS data set in conjunction with a SAS stage.

A parallel SAS Data Set is a set of one or more sequential SAS data sets, with a header file specifying the names and locations of all of the component files.

SAS stage:

Executes part or all of a SAS application in parallel by processing parallel streams of data with parallel instances of SAS DATA and PROC steps.


The Configuration File

{
    node "n1" {
        fastname "s1"
        pool "" "n1" "s1" "app2" "sort"
        resource disk "/orch/n1/d1" {}
        resource disk "/orch/n1/d2" {"bigdata"}
        resource scratchdisk "/temp" {"sort"}
    }
    node "n2" {
        fastname "s2"
        pool "" "n2" "s2" "app1"
        resource disk "/orch/n2/d1" {}
        resource disk "/orch/n2/d2" {"bigdata"}
        resource scratchdisk "/temp" {}
    }
    node "n3" {
        fastname "s3"
        pool "" "n3" "s3" "app1"
        resource disk "/orch/n3/d1" {}
        resource scratchdisk "/temp" {}
    }
    node "n4" {
        fastname "s4"
        pool "" "n4" "s4" "app1"
        resource disk "/orch/n4/d1" {}
        resource scratchdisk "/temp" {}
    }
}

[Figure: the four nodes, numbered 1 to 4]

Two key aspects:

1. Number of nodes declared

2. Defining a subset of resources (a "pool") for execution under "constraints," i.e., using a subset of resources
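
The two aspects can be illustrated with a small Python model of the node and pool declarations shown in the configuration file. The dictionary layout and the `nodes_in_pool` helper are hypothetical and exist only for this sketch; they are not part of any DataStage API.

```python
from typing import Dict, List

# Node names, fastnames, and pool memberships copied from the sample configuration.
CONFIG: Dict[str, dict] = {
    "n1": {"fastname": "s1", "pools": ["", "n1", "s1", "app2", "sort"]},
    "n2": {"fastname": "s2", "pools": ["", "n2", "s2", "app1"]},
    "n3": {"fastname": "s3", "pools": ["", "n3", "s3", "app1"]},
    "n4": {"fastname": "s4", "pools": ["", "n4", "s4", "app1"]},
}


def nodes_in_pool(config: Dict[str, dict], pool: str = "") -> List[str]:
    """Return the nodes eligible to run work constrained to the given pool.

    The empty string is the default pool that every node above belongs to.
    """
    return [name for name, node in config.items() if pool in node["pools"]]


print(len(nodes_in_pool(CONFIG)))      # number of nodes in the default pool
print(nodes_in_pool(CONFIG, "app1"))   # nodes available under an "app1" constraint
print(nodes_in_pool(CONFIG, "sort"))   # nodes available under a "sort" constraint
```

With the sample file, unconstrained work can spread across all four nodes, work constrained to "app1" runs on n2 to n4, and work constrained to "sort" runs only on n1.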


Dynamic GRID Capabilities

GRID tab to help manage execution across a grid

Automatically reconfigures parallelism to fit GRID resources

Managed through an external grid resource manager

Available for Red Hat and Tivoli Workload Scheduler LoadLeveler

Locks resources at execution time to ensure SLAs


Job Monitoring & Logging

Job Performance Analysis

Detailed job monitoring information available during and after job execution

- Start and elapsed times

- Record counts per link

- % CPU used by each process

- Data skew across partitions

Also available from command line

dsjob -report <project> <job> [<type>]

type = BASIC, DETAIL, XML
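
For scripted monitoring, the report command can be driven from a small wrapper. The Python sketch below assumes that `dsjob` is on the PATH and that the option is spelled `-report`, which the garbled slide text does not show clearly. The project and job names are hypothetical, and `fetch_report` is only defined here, not called.

```python
import subprocess
from typing import List

REPORT_TYPES = ("BASIC", "DETAIL", "XML")  # the three types listed on the slide


def report_command(project: str, job: str, report_type: str = "BASIC") -> List[str]:
    """Build the argument list for a job report request."""
    if report_type not in REPORT_TYPES:
        raise ValueError(f"report type must be one of {REPORT_TYPES}")
    return ["dsjob", "-report", project, job, report_type]


def fetch_report(project: str, job: str, report_type: str = "BASIC") -> str:
    """Run the command and return its standard output (requires a DataStage install)."""
    result = subprocess.run(
        report_command(project, job, report_type),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


# Hypothetical project and job names, shown only to illustrate the argument order.
print(report_command("dstage1", "LoadCustomers", "XML"))
```

Keeping the command construction separate from its execution makes the argument order easy to check without access to a DataStage server.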


Provides automatic optimization of data flows, mapping transformation logic to SQL

Leverages investments in DBMS hardware by executing data integration tasks with and within the DBMS

Optimizes job run-time by allowing the developer to control where the job or various parts of the job will execute.

Transform and aggregate any volume of information in batch or real time through visually designed logic

Optimizing run time through intelligent use of DBMS hardware

[Figure: Data Transformation, used by Architects and Developers]

InfoSphere DataStage Balanced Optimization


Using Balanced Optimization

[Figure: workflow. Design the original DataStage job in DataStage Designer, compile & run it, and verify the job results. Optimize the job with Balanced Optimization to produce a rewritten, optimized job, then compile & run it and verify the results. Optionally choose different options and reoptimize, or manually review/edit the optimized job.]


Leveraging best-of-breed systems

Optimization is not constrained to a single implementation style such as ETL or ELT

InfoSphere DataStage Balanced Optimization fully harnesses available capacity and computing power in Teradata and DataStage

Delivering unlimited scalability and performance through parallel execution everywhere, all the time

Dessert - Teradata


InfoSphere Rich Connectivity

Shared, easy to use connectivity infrastructure

Event-driven, real-time, and batch connectivity

High volume, parallel connectivity to databases and file systems

Best-of-breed, metadata-driven connectivity to enterprise applications

Frictionless Connectivity


Teradata Connectivity

7+ highly optimized interfaces for Teradata leveraging Teradata Tools and Utilities: FastLoad, FastExport, TPump, MultiLoad, Teradata Parallel Transporter, CLI and ODBC

You use the best interfaces specifically designed for your integration requirements:

- Parallel/bulk extracts/loads with various/optimized data partitioning options

- Table maintenance

- Real-time/transactional trickle feeds without table locking

[Figure: FastLoad, FastExport, MultiLoad, TPump, TPT, CLI, and ODBC interfaces connecting to the Teradata EDW]


Teradata Connectivity

RequestedSessions determines the total number of distributed connections to the Teradata source or target

- When not specified, it equals the number of Teradata VPROCs (AMPs)

- Can be set between 1 and the number of VPROCs

SessionsPerPlayer determines the number of connections each player will have to Teradata. Indirectly, it also determines the number of players (degree of parallelism).

- Default is 2 sessions / player

- The number selected should be such that SessionsPerPlayer * number of nodes * number of players per node = RequestedSessions

- Setting the value of SessionsPerPlayer too low on a large system can result in so many players that the job fails due to insufficient resources. In that case, the value for SessionsPerPlayer should be increased.
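
The sizing rule above is plain arithmetic, sketched here in Python. The function names are hypothetical, and the example values assume a Teradata system with 16 AMPs.

```python
def requested_sessions(sessions_per_player: int, nodes: int, players_per_node: int = 1) -> int:
    """Total sessions implied by SessionsPerPlayer and the node layout."""
    return sessions_per_player * nodes * players_per_node


def players_needed(requested: int, sessions_per_player: int) -> int:
    """Number of players implied by a session target (must divide evenly)."""
    if requested % sessions_per_player:
        raise ValueError("RequestedSessions must be a multiple of SessionsPerPlayer")
    return requested // sessions_per_player


# 16 AMPs with the default of 2 sessions per player implies 8 players.
print(players_needed(16, 2))
# Raising SessionsPerPlayer to 4 halves the number of players to 4.
print(players_needed(16, 4))
# 8 nodes, 1 player each, 2 sessions per player gives 16 sessions in total.
print(requested_sessions(2, 8))
```

This makes the trade-off visible: for a fixed session target, a lower SessionsPerPlayer value means more players, and therefore more processes competing for resources.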


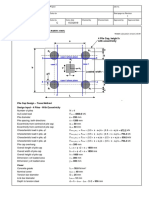

Teradata SessionsPerPlayer Example

Teradata Server: MPP with 4 TPA nodes, 4 AMPs per TPA node

DataStage Server: example settings

| Configuration File | Sessions Per Player | Total Sessions |
|---|---|---|
| 16 nodes | 1 | 16 |
| 8 nodes | 1 | 8 |
| 8 nodes | 2 | 16 |
| 4 nodes | 4 | 16 |


Customer Example: Massive Throughput

[Figure: CSA Production Linux Grid. Shows 12 Teradata nodes, a Linux grid (Head Node and Compute A to Compute E), two stacked 24-port switches, and a 32 Gb interconnect]


The IBM InfoSphere Information Server Advantage

A Complete Information Infrastructure

A comprehensive, unified foundation for enterprise information architectures, scalable to any volume and processing requirement

Auditable data quality as a foundation for trusted information across the enterprise

Metadata-driven integration, providing breakthrough productivity and flexibility for integrating and enriching information

Consistent, reusable information services along with application services and process services, an enterprise essential

Accelerated time to value with proven, industry-aligned solutions and expertise

Broadest and deepest connectivity to information across diverse sources: structured, unstructured, mainframe, and applications
