Professional Documents

Culture Documents

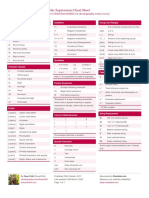

Task Assignment and Scheduling

Uploaded by

ablog165

Copyright

© Attribution Non-Commercial (BY-NC)

TASK ASSIGNMENT AND SCHEDULING

The purpose of real-time computing is to execute, by the appropriate deadlines, its critical control tasks. In this chapter, we will look at techniques for allocating and scheduling tasks on processors to ensure that deadlines are met.

There is an enormous literature on scheduling: indeed, the field appears to have grown exponentially since the mid-1970s! We present here the subset of the results that relates to the meeting of deadlines.

The allocation/scheduling problem can be stated as follows. Given a set of tasks, task precedence constraints, resource requirements, task characteristics, and deadlines, we are asked to devise a feasible allocation/schedule on a given computer. Let us define some of these terms.

Formally speaking, a task consumes resources (e.g., processor time, memory, and input data), and puts out one or more results. Tasks may have precedence constraints, which specify whether any task(s) must precede other tasks. If task T_i's output is needed as input by task T_j, then task T_j is constrained to be preceded by task T_i. The precedence constraints can be most conveniently represented by means of a precedence graph. We show an example of this in Figure 3.1. The arrows indicate which task has precedence over which other task. We denote the precedent-task set of task T by ≺(T); that is, ≺(T) indicates which tasks must be completed before T can begin.

FIGURE 3.1 Example of a precedence graph.

Example 3.1. In Figure 3.1, we have [...]. Commonly, for economy of representation, we only list the immediate ancestors in the precedence set; for example, we can write ≺(5) = {2, 3} since ≺(2) = {1}. The precedence operator is transitive. That is,

i ≺ j and j ≺ k ⇒ i ≺ k

In some cases, ≻ and ≺ are used to denote which task has higher priority; that is, i ≻ j can mean that T_i has higher priority than T_j. The meaning of these symbols should be clear from context.
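
As a minimal sketch of how the immediate-ancestor lists relate to the full precedent-task set, the following Python expands ≺(T) by following ancestors transitively. The graph used here is hypothetical: it agrees with ≺(2) = {1} and with the immediate ancestors {2, 3} of task 5 mentioned above, but the remaining edges, and the name `precedent_set`, are assumptions made for illustration.

```python
def precedent_set(task, immediate):
    """Return every task that must complete before `task` can begin."""
    result = set()
    stack = list(immediate.get(task, ()))
    while stack:
        t = stack.pop()
        if t not in result:
            result.add(t)
            # Transitivity: the ancestors of an ancestor also precede `task`.
            stack.extend(immediate.get(t, ()))
    return result


# Hypothetical immediate-ancestor lists (not the actual edges of Figure 3.1).
immediate = {1: set(), 2: {1}, 3: {1}, 5: {2, 3}}

print(sorted(precedent_set(5, immediate)))  # [1, 2, 3]
```

Storing only immediate ancestors keeps the representation small; the full set can always be recovered because the precedence operator is transitive.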

Each task has resource requirements. All tasks require some execution time on a processor. Also, a task may require a certain amount of memory or access to a bus. Sometimes, a resource must be exclusively held by a task (i.e., the task must have sole possession of it). In other cases, resources are nonexclusive. The same physical resource may be exclusive or nonexclusive depending on the operation to be performed on it. For instance, a memory object (or anything else to which writing is not atomic) that is being read is nonexclusive. However, while it is being written into, it must be held exclusively by the writing task.

The release time of a task is the time at which all the data that are required to begin executing the task are available, and the deadline is the time by which the task must complete its execution. The deadline may be hard or soft, depending on the nature of the corresponding task. The relative deadline of a task is the absolute deadline minus the release time. That is, if task T_i has a relative deadline d_i and is released at time t, it must be executed by time t + d_i. The absolute deadline is the time by which the task must be completed. In this example, the absolute deadline of T_i is t + d_i.

A task may be periodic, sporadic, or aperiodic. A task T_i is periodic if it is released periodically, say every P_i seconds. P_i is called the period of task T_i. The periodicity constraint requires the task to run exactly once every period; it does not require that the tasks be run exactly one period apart. Quite commonly, the period of a task is also its deadline. The task is sporadic if it is not periodic, but may be invoked at irregular intervals. Sporadic tasks are characterized by an upper bound on the rate at which they may be invoked. This is commonly specified by requiring that successive invocations of a sporadic task T_i be separated in time by at least r(i) seconds. Sporadic tasks are sometimes also called aperiodic. However, some people define aperiodic tasks to be those tasks which are not periodic and which also have no upper bound on their invocation rate.
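
The two timing models can be illustrated with a short Python sketch. It lists the release times of a periodic task and checks whether a sequence of sporadic invocations respects a minimum separation. The function names, the `horizon` parameter, and the sample numbers are assumptions made for this illustration.

```python
def periodic_releases(period, horizon, first_release=0):
    """Release times of a periodic task in [first_release, horizon)."""
    releases = []
    k = 0
    while first_release + k * period < horizon:
        releases.append(first_release + k * period)
        k += 1
    return releases


def respects_min_separation(invocations, min_gap):
    """True if successive invocations are at least `min_gap` apart."""
    ordered = sorted(invocations)
    return all(b - a >= min_gap for a, b in zip(ordered, ordered[1:]))


print(periodic_releases(10, 35))                  # [0, 10, 20, 30]
print(respects_min_separation([0, 25, 40], 15))   # True
print(respects_min_separation([0, 10, 40], 15))   # False
```

The minimum separation is what bounds the invocation rate of a sporadic task: with a gap of at least `min_gap` time units, at most one invocation can occur per `min_gap` interval.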

Example 3.2. Consider a pressure vessel whose pressure is measured every 10 ms. If the pressure exceeds a critical value, an alarm is sounded. The task that measures the pressure is periodic, with a period of 10 ms. The task that turns on the alarm is sporadic. What is the maximum rate at which this task can be invoked?

A task assignment/schedule is said to be feasible if all the tasks start after their release times and complete before their deadlines. We say that a set of tasks is A-feasible if an assignment/scheduling algorithm A, when run on that set of tasks, results in a feasible schedule. The bulk of the work in real-time scheduling deals with obtaining feasible schedules.
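
The feasibility condition can be written as a direct check, sketched below in Python. The `Job` record and the sample values are hypothetical, and the sketch assumes that starting exactly at the release time and finishing exactly at the deadline both count as acceptable.

```python
from dataclasses import dataclass


@dataclass
class Job:
    release: float   # earliest time the job may start
    deadline: float  # absolute deadline
    start: float     # start time assigned by the schedule
    finish: float    # completion time under the schedule


def is_feasible(jobs):
    """True if every job starts no earlier than its release and ends by its deadline."""
    # Assumption: boundary cases (start == release, finish == deadline) are allowed.
    return all(j.start >= j.release and j.finish <= j.deadline for j in jobs)


on_time = [Job(release=0, deadline=5, start=0, finish=3),
           Job(release=1, deadline=6, start=3, finish=5)]
late = [Job(release=0, deadline=5, start=0, finish=3),
        Job(release=1, deadline=4, start=3, finish=5)]

print(is_feasible(on_time))  # True
print(is_feasible(late))     # False
```

A task set is then A-feasible when the schedule produced by algorithm A passes this check.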

Given these task characteristics and the execution times and deadlines associated with the tasks, the tasks are allocated or assigned to the various processors and scheduled on them. The schedule can be formally defined as a function

S : Set of processors × Time → Set of tasks    (3.2)

S(i, t) is the task scheduled to be running on processor i at time t. Most of the time, we will depict schedules graphically.
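
One way to make the function S concrete is as a lookup over time slots, sketched here in Python. The slot layout `(processor, start, end, task)`, the half-open interval convention, and the use of `None` for an idle processor are all assumptions of this sketch; the definition above does not say how idle time is represented.

```python
def make_schedule(slots):
    """Build S(i, t) from (processor, start, end, task) slots; `end` is exclusive."""
    def S(i, t):
        for proc, start, end, task in slots:
            if proc == i and start <= t < end:
                return task
        return None  # assumption: None marks processor i as idle at time t
    return S


# Hypothetical two-processor schedule.
slots = [(0, 0, 3, "T1"), (0, 3, 5, "T2"), (1, 1, 4, "T3")]
S = make_schedule(slots)

print(S(0, 2))  # T1
print(S(0, 3))  # T2
print(S(1, 0))  # None
```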

A schedule may be precomputed (offline scheduling), or obtained dynamically (online scheduling). Offline scheduling involves scheduling in advance of the operation, with specifications of when the periodic tasks will be run and slots for the sporadic/aperiodic tasks in the event that they are invoked. In online scheduling, the tasks are scheduled as they arrive in the system. The algorithms used in online scheduling must be fast; an online scheduling algorithm that takes so long that it leaves insufficient time for tasks to meet their deadlines is clearly useless.

The relative priorities of tasks are a function of the nature of the tasks themselves and the current state of the controlled process. For example, a task that controls stability in an aircraft and that has to be run at a high frequency can reasonably be assigned a higher priority than one that controls cabin pressure. Also, a mission can consist of different phases or modes and the priority of the same task can vary from one phase to another.

Example 3.3. The flight of an aircraft can be broken down into phases such as takeoff, climb, cruise, descend, and land. In each phase, the task mix, task priorities, and task deadlines may be different.

There are algorithms that assume that the task priority does not change within a mode; these are called static-priority algorithms. By contrast, dynamic-priority algorithms assume that priority can change with time. The best known examples of static- and dynamic-priority algorithms are the rate-monotonic (RM) algorithm and the earliest deadline first (EDF) algorithm, respectively. We shall discuss each in considerable detail.
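
The difference between the two priority rules can be sketched in a few lines of Python. The sketch uses the standard selection rules (RM favors the shortest period, EDF favors the nearest absolute deadline), not a full scheduler; the dictionary layout and the sample tasks are hypothetical.

```python
def rm_pick(ready):
    """Rate-monotonic: the ready task with the shortest period runs."""
    return min(ready, key=lambda task: task["period"])


def edf_pick(ready):
    """Earliest deadline first: the ready task with the nearest absolute deadline runs."""
    return min(ready, key=lambda task: task["deadline"])


# Hypothetical ready queue at some instant.
ready = [
    {"name": "A", "period": 10, "deadline": 18},
    {"name": "B", "period": 25, "deadline": 12},
]

print(rm_pick(ready)["name"])   # A
print(edf_pick(ready)["name"])  # B
```

The RM choice depends only on the periods, which are fixed, so the ordering never changes within a mode. The EDF choice depends on the current absolute deadlines, so the same pair of tasks can be ordered differently at different times.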

The schedule may be preemptive or nonpreemptive. A schedule is preemptive if tasks can be interrupted by other tasks (and then resumed). By contrast, once a task is begun in a nonpreemptive schedule, it must be run to completion or until it gets blocked over a resource. We shall mostly be concerned with preemptive schedules in this book; wherever possible, critical tasks must be allowed to interrupt less critical ones when it is necessary to meet deadlines.

Preemption allows us the flexibility of not committing the processor to run a task through to completion once we start executing it. Committing the processor in a nonpreemptive schedule can cause anomalies.

Example 3.4. Consider a two-task system. Let the release times of tasks T_1 and T_2 be 1 and 2, respectively; the deadlines be 9 and 6; and the execution times be 3.25 and 2. Schedule S_1 in Figure 3.2 meets both deadlines. However, suppose we follow the perfectly sensible policy of not keeping the processor idle whenever there is a task waiting to be run; then, we will have schedule S_2. Under S_2, T_1 occupies the processor from time 1 to time 4.25, so T_2 cannot finish before time 6.25. This results in task T_2 missing its deadline!

FIGURE 3.2 Preemptive and nonpreemptive schedules. (Time axis from 0 to 8, with the deadline of task 2 marked.)
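
The arithmetic of Example 3.4 can be replayed with a small Python sketch that runs the two tasks nonpreemptively in a given order. It assumes that S_1 idles until T_2 is released and runs T_2 first, and that S_2 is the never-idle order (T_1 first, since it is the only task ready at time 1). The function names are hypothetical.

```python
def finish_times(order, tasks):
    """Run tasks back to back, nonpreemptively, in the given order."""
    now = 0.0
    finish = {}
    for name in order:
        release, exec_time, _deadline = tasks[name]
        start = max(now, release)  # wait (idle) if the task is not yet released
        now = start + exec_time
        finish[name] = now
    return finish


def missed(order, tasks):
    """Names of the tasks that finish after their deadlines."""
    finish = finish_times(order, tasks)
    return [name for name in order if finish[name] > tasks[name][2]]


# (release time, execution time, deadline) from Example 3.4.
tasks = {"T1": (1, 3.25, 9), "T2": (2, 2, 6)}

print(missed(["T2", "T1"], tasks))  # []      assumed S_1: T2 ends at 4, T1 at 7.25
print(missed(["T1", "T2"], tasks))  # ['T2']  S_2: T1 ends at 4.25, T2 at 6.25
```

With preemption the anomaly disappears: T_1 can start at time 1, yield to T_2 from 2 to 4, and resume to finish at 6.25, which meets both deadlines without idling the processor.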

IRIS tasks: IRIS stands for increased reward with increased service. Many algorithms have the property that they can be stopped early and still provide useful output. The quality of the output is a monotonically nondecreasing function of the execution time. Iterative algorithms (e.g., algorithms that compute π or e) are one example of this. In Section 3.3, we provide algorithms suitable for scheduling such tasks.
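
The IRIS property can be illustrated with a series approximation of e in Python. This is only an illustrative sketch, not an algorithm from Section 3.3: the iteration count stands in for execution time, and the function name `approximate_e` is an assumption.

```python
import math


def approximate_e(iterations):
    """Sum the first `iterations` terms of e = 1/0! + 1/1! + 1/2! + ..."""
    total, term = 0.0, 1.0
    for k in range(iterations):
        total += term
        term /= (k + 1)  # next term is 1/(k+1)!
    return total


# Stopping after more iterations never gives a worse answer.
errors = [abs(math.e - approximate_e(n)) for n in range(1, 8)]
for n, err in enumerate(errors, start=1):
    print(n, err)
```

Each extra iteration adds a positive term, so the partial sum moves closer to e and the error shrinks; a scheduler can therefore cut such a task short and still obtain a usable, if less accurate, result.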
