
Queueing Analysis of High-Speed Multiplexers Including Long-Range Dependent Arrival Processes


You might also like

- Mobile Forensic Investigations A Guide To Evidence Collection Analysis and Presentation 1St Edition Reiber Full ChapterDocument67 pagesMobile Forensic Investigations A Guide To Evidence Collection Analysis and Presentation 1St Edition Reiber Full Chapterrita.smith780100% (19)

- Split Operator MethodDocument13 pagesSplit Operator MethodLucian S. VélezNo ratings yet

- Meetings in A Company.Document37 pagesMeetings in A Company.armaanNo ratings yet

- Lecture 8 Beam Deposition TechnologyDocument24 pagesLecture 8 Beam Deposition Technologyshanur begulajiNo ratings yet

- Averaging Oscillations With Small Fractional Damping and Delayed TermsDocument20 pagesAveraging Oscillations With Small Fractional Damping and Delayed TermsYogesh DanekarNo ratings yet

- Unsupervised Clustering in Streaming DataDocument5 pagesUnsupervised Clustering in Streaming DataNguyễn DũngNo ratings yet

- ) W !"#$%&' +,-./012345 Ya - Fi Mu: Discounted Properties of Probabilistic Pushdown AutomataDocument33 pages) W !"#$%&' +,-./012345 Ya - Fi Mu: Discounted Properties of Probabilistic Pushdown Automatasmart_gaurav3097No ratings yet

- Path-Integral Evolution of Multivariate Systems With Moderate NoiseDocument15 pagesPath-Integral Evolution of Multivariate Systems With Moderate NoiseLester IngberNo ratings yet

- TPWL IeeeDocument6 pagesTPWL Ieeezhi.han1091No ratings yet

- Lecture Notes Stochastic Optimization-KooleDocument42 pagesLecture Notes Stochastic Optimization-Koolenstl0101No ratings yet

- Queueing System 3Document20 pagesQueueing System 3ujangketul62No ratings yet

- ReportDocument18 pagesReportapi-268494780No ratings yet

- Joint Optimization of Transceivers With Fractionally Spaced EqualizersDocument4 pagesJoint Optimization of Transceivers With Fractionally Spaced EqualizersVipin SharmaNo ratings yet

- Stochastic ProcessesDocument6 pagesStochastic ProcessesmelanocitosNo ratings yet

- 半稳定随机过程Document18 pages半稳定随机过程wang qingboNo ratings yet

- Hoffman 2017Document12 pagesHoffman 2017Jonathan TeixeiraNo ratings yet

- Discrete-Event Simulation of Fluid Stochastic Petri NetsDocument9 pagesDiscrete-Event Simulation of Fluid Stochastic Petri NetsssfofoNo ratings yet

- Discrete-Time Multiserver Queues With Geometric Service TimesDocument30 pagesDiscrete-Time Multiserver Queues With Geometric Service Timeschege ng'ang'aNo ratings yet

- AMR Juniper Hanifi Theofilis 2014Document43 pagesAMR Juniper Hanifi Theofilis 2014TomNo ratings yet

- A Quantitative Landauer's PrincipleDocument16 pagesA Quantitative Landauer's PrinciplelupinorionNo ratings yet

- Sess 6 Tuenbaeva NazarovDocument7 pagesSess 6 Tuenbaeva NazarovParvez KhanNo ratings yet

- CHP 1curve FittingDocument21 pagesCHP 1curve FittingAbrar HashmiNo ratings yet

- IOSR JournalsDocument7 pagesIOSR JournalsInternational Organization of Scientific Research (IOSR)No ratings yet

- R SimDiffProcDocument25 pagesR SimDiffProcschalteggerNo ratings yet

- Coleman Dcc08Document10 pagesColeman Dcc08Remberto SandovalNo ratings yet

- Nonlinear Filtering For Observations On A Random Vector Field Along A Random Path. Application To Atmospheric Turbulent VelocitiesDocument25 pagesNonlinear Filtering For Observations On A Random Vector Field Along A Random Path. Application To Atmospheric Turbulent VelocitiesleifpersNo ratings yet

- 1 s2.0 S0304414918301418 MainDocument44 pages1 s2.0 S0304414918301418 MainMahendra PerdanaNo ratings yet

- Optimal Control of Stochastic Delay Equations and Time-Advanced Backward Stochastic Differential EquationsDocument25 pagesOptimal Control of Stochastic Delay Equations and Time-Advanced Backward Stochastic Differential EquationsIdirMahroucheNo ratings yet

- Explaining Convolution Using MATLABDocument10 pagesExplaining Convolution Using MATLABRupesh VermaNo ratings yet

- Massively Parallel Semi-Lagrangian Advection: SimulationDocument16 pagesMassively Parallel Semi-Lagrangian Advection: SimulationSandilya KambampatiNo ratings yet

- Heavy Tailed Time SeriesDocument28 pagesHeavy Tailed Time Seriesarun_kejariwalNo ratings yet

- Simulation of Stopped DiffusionsDocument22 pagesSimulation of Stopped DiffusionssupermanvixNo ratings yet

- Bayesian AnalysisDocument20 pagesBayesian AnalysisbobmezzNo ratings yet

- SilveyDocument20 pagesSilveyEzy VoNo ratings yet

- Constrained Stabilization of Continuous-Time Linear Systems: $) RST - , Qlts F. Conlltot. UtnitsDocument8 pagesConstrained Stabilization of Continuous-Time Linear Systems: $) RST - , Qlts F. Conlltot. UtnitsNeetha PrafaNo ratings yet

- Hybrid SchemeDocument35 pagesHybrid SchemeBryan DilamoreNo ratings yet

- 10 1 1 87 2049 PDFDocument23 pages10 1 1 87 2049 PDFPatrick MugoNo ratings yet

- Deep Gaussian Covariance NetworkDocument14 pagesDeep Gaussian Covariance NetworkaaNo ratings yet

- Scale Resolved Intermittency in Turbulence: Siegfried Grossmann and Detlef Lohse October 30, 2018Document13 pagesScale Resolved Intermittency in Turbulence: Siegfried Grossmann and Detlef Lohse October 30, 2018cokbNo ratings yet

- Car Act A Bat ADocument17 pagesCar Act A Bat Amariusz19781103No ratings yet

- Itô's Stochastic Calculus and Its ApplicationsDocument61 pagesItô's Stochastic Calculus and Its Applicationskim haksongNo ratings yet

- Stochastic Processes 2Document11 pagesStochastic Processes 2Seham RaheelNo ratings yet

- Bregni, 2004Document5 pagesBregni, 2004عمار طعمةNo ratings yet

- Physics-Informed Deep Generative ModelsDocument8 pagesPhysics-Informed Deep Generative ModelsJinhan KimNo ratings yet

- Time-Frequency Analysis of Locally Stationary Hawkes ProcessesDocument31 pagesTime-Frequency Analysis of Locally Stationary Hawkes ProcessesPerson PersonsNo ratings yet

- An Adaptive Nonlinear Least-Squares AlgorithmDocument21 pagesAn Adaptive Nonlinear Least-Squares AlgorithmOleg ShirokobrodNo ratings yet

- 16 Aap1257Document43 pages16 Aap1257Guifré Sánchez SerraNo ratings yet

- 16 Fortin1989 PDFDocument16 pages16 Fortin1989 PDFHassan ZmourNo ratings yet

- Delay Differential Equation With Application in PoDocument13 pagesDelay Differential Equation With Application in Pobias sufiNo ratings yet

- Feshbach Projection Formalism For Open Quantum SystemsDocument5 pagesFeshbach Projection Formalism For Open Quantum Systemsdr_do7No ratings yet

- A Brief Introduction To Some Simple Stochastic Processes: Benjamin LindnerDocument28 pagesA Brief Introduction To Some Simple Stochastic Processes: Benjamin Lindnersahin04No ratings yet

- The WSSUS Pulse Design Problem in Multi Carrier TransmissionDocument24 pagesThe WSSUS Pulse Design Problem in Multi Carrier Transmissionhouda88atNo ratings yet

- Heuristic Contraction Hierarchies With Approximation GuaranteeDocument7 pagesHeuristic Contraction Hierarchies With Approximation Guaranteemadan321No ratings yet

- Kuang Delay DEsDocument12 pagesKuang Delay DEsLakshmi BurraNo ratings yet

- A Study of Linear Combination of Load EfDocument16 pagesA Study of Linear Combination of Load EfngodangquangNo ratings yet

- Input Window Size and Neural Network Predictors: XT D FXTXT XT N XT D F T T XDocument8 pagesInput Window Size and Neural Network Predictors: XT D FXTXT XT N XT D F T T Xtamas_orban4546No ratings yet

- MIT - Stochastic ProcDocument88 pagesMIT - Stochastic ProccosmicduckNo ratings yet

- MAT 3103: Computational Statistics and Probability Chapter 7: Stochastic ProcessDocument20 pagesMAT 3103: Computational Statistics and Probability Chapter 7: Stochastic ProcessSumayea SaymaNo ratings yet

- Ku Satsu 160225Document11 pagesKu Satsu 160225LameuneNo ratings yet

- Whitepaper Kihm Rizzi Ferguson Halfpenny RASD2013Document16 pagesWhitepaper Kihm Rizzi Ferguson Halfpenny RASD2013Felipe Dornellas SilvaNo ratings yet

- 2 2 Queuing TheoryDocument18 pages2 2 Queuing TheoryGilbert RozarioNo ratings yet

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- Casio Service ManualDocument8 pagesCasio Service Manualysf19910% (1)

- Introduction To Mainframe HardwareDocument64 pagesIntroduction To Mainframe Hardwareysf1991No ratings yet

- Natural Language ProcessingDocument31 pagesNatural Language Processingysf1991No ratings yet

- Strath Cis Publication 320Document38 pagesStrath Cis Publication 320ysf1991No ratings yet

- Context-Aware Language Modeling For Conversational Speech TranslationDocument8 pagesContext-Aware Language Modeling For Conversational Speech Translationysf1991No ratings yet

- DocuDocument44 pagesDocuysf19910% (1)

- 3 Layer Dynamic CaptchaDocument7 pages3 Layer Dynamic Captchaysf1991No ratings yet

- Sun Fire X4100 M2 and X4200 M2 Server Architectures: A Technical White Paper October 2006 Sunwin Token # 481905Document45 pagesSun Fire X4100 M2 and X4200 M2 Server Architectures: A Technical White Paper October 2006 Sunwin Token # 481905ysf1991No ratings yet

- Development of Interpersonal Skills: Short-Term CourseDocument4 pagesDevelopment of Interpersonal Skills: Short-Term Courseysf1991No ratings yet

- 2021 1 Eau p2 Estereotomía Programa de CursoDocument6 pages2021 1 Eau p2 Estereotomía Programa de CursoDixom Javier Monastoque RomeroNo ratings yet

- Thermodrain FRP Manhole Cover Price ListDocument1 pageThermodrain FRP Manhole Cover Price Listmitesh20281No ratings yet

- Q & A ReliablityDocument6 pagesQ & A ReliablitypkcdubNo ratings yet

- Strategic Foresight For Innovation Management: A Review and Research AgendaDocument34 pagesStrategic Foresight For Innovation Management: A Review and Research AgendaTABAH RIZKINo ratings yet

- Objectives Overview: Discovering Computers Fundamentals Fundamentals, 2012 EditionDocument17 pagesObjectives Overview: Discovering Computers Fundamentals Fundamentals, 2012 Editionkeith magakaNo ratings yet

- Journal of Safety Research: Bruce J. Ladewski, Ahmed Jalil Al-BayatiDocument8 pagesJournal of Safety Research: Bruce J. Ladewski, Ahmed Jalil Al-BayatiAyoub SOUAINo ratings yet

- K FactorsDocument7 pagesK Factorsjose_alberto2No ratings yet

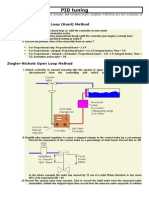

- PID TuningDocument4 pagesPID TuningJitendra Kumar100% (1)

- CL7103 SystemTheoryquestionbankDocument11 pagesCL7103 SystemTheoryquestionbanksyed1188No ratings yet

- P9RyanGauvreau PortfolioDocument21 pagesP9RyanGauvreau PortfolioRyan GauvreauNo ratings yet

- Microsoft Project Exercise 4, School of Business Costs, Levels, Reallocations, 3 Oct 2010Document3 pagesMicrosoft Project Exercise 4, School of Business Costs, Levels, Reallocations, 3 Oct 2010Lam HoangNo ratings yet

- Recruitment & Selection TASKSDocument6 pagesRecruitment & Selection TASKSMarcus McGowanNo ratings yet

- 9 Data Entry Interview Questions and AnswersDocument2 pages9 Data Entry Interview Questions and AnswersBIRIGITA AUKANo ratings yet

- Skrill Quick Checkout Guide v7.10Document118 pagesSkrill Quick Checkout Guide v7.10IbrarAsadNo ratings yet

- Bài Tập Thì Hiện Tại Tiếp DiễnDocument9 pagesBài Tập Thì Hiện Tại Tiếp DiễnHÀ PHAN HOÀNG NGUYỆTNo ratings yet

- Bafna Pharmaceuticals: Regulated GrowthDocument8 pagesBafna Pharmaceuticals: Regulated GrowthpradeepchoudharyNo ratings yet

- Fixed Term Employment Agreement - MLJDRDocument11 pagesFixed Term Employment Agreement - MLJDRrizalyn perezNo ratings yet

- ATA 00 Abbreviation PDFDocument52 pagesATA 00 Abbreviation PDFDiego DeferrariNo ratings yet

- Adel Henna: Education Personal ProjectsDocument2 pagesAdel Henna: Education Personal ProjectsSma ilNo ratings yet

- Safe Implementation Roadmap Series, 12Document2 pagesSafe Implementation Roadmap Series, 12deepak sadanandanNo ratings yet

- Origin Search (DINT) : - NCCPU051 - Home - DINT: EN ON OFFDocument2 pagesOrigin Search (DINT) : - NCCPU051 - Home - DINT: EN ON OFFbobNo ratings yet

- Indian Standard: Door Shutters - Methods of Tests (Third Revision)Document23 pagesIndian Standard: Door Shutters - Methods of Tests (Third Revision)Marc BarmerNo ratings yet

- Service Letter: 1.0 Issue - Incorrectly Located Boom Lifting HolesDocument5 pagesService Letter: 1.0 Issue - Incorrectly Located Boom Lifting HolesedwinNo ratings yet

- Network Layer Routing in Packet Networks Shortest Path RoutingDocument45 pagesNetwork Layer Routing in Packet Networks Shortest Path RoutingHalder SubhasNo ratings yet

- Ariens Sno Thro 924 Series Snow Blower Parts ManualDocument28 pagesAriens Sno Thro 924 Series Snow Blower Parts ManualBradCommander409100% (1)

- BT Quiz - 2Document8 pagesBT Quiz - 2Navdha KapoorNo ratings yet

- How Many of You Remember The 1975 NFC Divisional Playoff Game Between The Dallas Cowboys and The MN VikingsDocument5 pagesHow Many of You Remember The 1975 NFC Divisional Playoff Game Between The Dallas Cowboys and The MN VikingsBillieJean DanielsNo ratings yet


Queueing Analysis of High-Speed Multiplexers including Long-Range Dependent Arrival Processes¹

Jinwoo Choe, Department of Electrical and Computer Engineering, University of Toronto, jinwoo@comm.toronto.edu

Ness B. Shroff², School of Electrical and Computer Engineering, Purdue University, shroff@ecn.purdue.edu

Abstract: With the advent of high-speed networks, a single link will carry hundreds or even thousands of applications. This makes it natural to invoke the Central Limit Theorem and model the network traffic by Gaussian stochastic processes. In this paper we study the tail probability $P(\{Q > x\})$ of a queueing system when the input process is assumed to belong to a very general class of Gaussian processes, which includes a large class of self-similar and other long-range dependent Gaussian processes. For example, the processes considered in past work on fractional Brownian motion, and variations thereof, form but a small subset of those covered in this paper. Our study is based on Extreme Value Theory, and we show that $\log P(\{Q > x\}) + m_x/2$ grows at most on the order of $\log x$, where $m_x$ corresponds to the reciprocal of the maximum (normalized) variance of a Gaussian process directly related to the aggregate input process. Our result is considerably stronger than the existing results in the literature based on Large Deviation Theory, and we show theoretically that this improvement can be quite important in characterizing the asymptotic behavior of $P(\{Q > x\})$. Through numerical examples, we also demonstrate that $\exp[-m_x/2]$ provides a very accurate estimate for a variety of long-range and short-range dependent input processes over the entire buffer range.

I. INTRODUCTION

In this paper, we study the supremum distribution of a Gaussian process having stationary increments. In general, a stochastic process $\{X_t : t \ge 0\}$ is said to have stationary increments if the distribution of $X_{t+\tau} - X_t$ depends only on the time difference $\tau$, and not on $t$. The study of the supremum distribution (i.e., the distribution of $\sup_t X_t$) of stochastic processes with stationary increments has received a lot of interest, in large part because of its direct relation to the steady-state queue length distribution of a queueing system (e.g., see [11], [15]). Consider a queueing system such as the one shown in Fig. 1. Let $\lambda_t$ be defined in such a way that $\lambda_t - \lambda_s$ is the amount of fluid that arrives into the system during the time interval $(s, t]$. Similarly, we define $M_t$ to be a function of $t$ such that $M_t - M_s$ is the maximum amount of fluid that can be served during the time interval $(s, t]$. Assuming that the queue is empty at $t = 0$, $Q_t$, the amount of fluid in the system (workload) at time $t$, can be expressed as

$$Q_t = \sup_{0 \le s \le t} (N_t - N_s),$$

where $N_t := \lambda_t - M_t$ (e.g., see [11]).
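The workload formula has a familiar discrete-time counterpart. As a minimal sketch, assuming unit time slots with `arrivals[k]` units of fluid arriving in slot k and a constant service capacity `capacity` per slot (both names are illustrative and not from the paper), the supremum formula $Q_k = \sup_{0 \le j \le k}(N_k - N_j)$ agrees with the Lindley recursion $Q_k = \max(0, Q_{k-1} + a_k - c)$ started from an empty queue:

```python
import random


def workload_sup(arrivals, capacity):
    """Q_k = sup_{0<=j<=k} (N_k - N_j), where N_k = sum_{i<=k} (a_i - c) and N_0 = 0."""
    n_k, running_min, q = 0.0, 0.0, []
    for a in arrivals:
        n_k += a - capacity                  # N_k: cumulative arrivals minus service
        running_min = min(running_min, n_k)  # min over N_0, ..., N_k
        q.append(n_k - running_min)
    return q


def workload_lindley(arrivals, capacity):
    """Lindley recursion Q_k = max(0, Q_{k-1} + a_k - c), with Q_0 = 0."""
    cur, q = 0.0, []
    for a in arrivals:
        cur = max(0.0, cur + a - capacity)
        q.append(cur)
    return q


if __name__ == "__main__":
    random.seed(1)
    arrivals = [random.expovariate(1.0) for _ in range(10_000)]  # mean 1 per slot
    q_sup = workload_sup(arrivals, 1.1)
    q_lin = workload_lindley(arrivals, 1.1)
    print(max(abs(u - v) for u, v in zip(q_sup, q_lin)))  # floating-point round-off only
```

The two forms coincide by a one-line induction ($Q_k = N_k - \min_{j \le k} N_j$); the recursion is the one usually used in simulation because it needs constant memory per step.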

If we assume that $\lambda_t$ and $M_t$ are independent stochastic processes with stationary increments, then

$$
\begin{aligned}
P(\{Q_t > x\}) &= P\Big(\Big\{\sup_{0 \le s \le t} (N_t - N_s) > x\Big\}\Big) \\
&= P\Big(\Big\{\sup_{-t \le s \le 0} (N_0 - N_s) > x\Big\}\Big) \\
&\xrightarrow[t \to \infty]{} P\Big(\Big\{\sup_{s \le 0} (N_0 - N_s) > x\Big\}\Big). \qquad (1)
\end{aligned}
$$

[Fig. 1. A fluid queueing system with an infinite buffer and a server.]

¹ This research was supported in part by NSF CAREER Grant NCR-9624525, NSF Grant CDA-9422250, and the Purdue Research Foundation grant 690-1285-2479.

² Please address all correspondence to this author, Tel. +1 765 494-3471, Fax. +1 765 494-3358.

Hence,

$$P(\{Q > x\}) := \lim_{t \to \infty} P(\{Q_t > x\}) = P\Big(\Big\{\sup_{t \ge 0} (N_0 - N_{-t}) > x\Big\}\Big).$$

So if we define $X_t := N_0 - N_{-t}$, then $\{X_t : t \ge 0\}$ is a stochastic process with stationary increments, and $P(\{Q > x\}) = P(\{\sup_{t \ge 0} X_t > x\})$.

For notational simplicity, henceforth we define $\bar{w}_{\mathcal{I}} := \sup_{\tau \in \mathcal{I}} w_\tau$, where the index set $\mathcal{I}$ will be omitted when it covers the entire domain on which $w_\tau$ is defined. Hence, for example,

$$P(\{Q > x\}) = P(\{\bar{X} > x\}),$$

and throughout this paper we will use $P(\{Q > x\})$ and $P(\{\bar{X} > x\})$ interchangeably.

A large body of the work devoted to the study of the supre-

mum distribution has focused on the asymptotic tail behavior

of this distribution; i.e., the asymptotic behavior of P({X >

x}) (or equivalently P({Q > x})). The theory of Large Devia-

tions has been widely used providing very general and elegant

results on the asymptotic behavior of log P({X > x}) [3],

[10], [11] (see [9] for more details about general Large Devia-

tion techniques). For example, in [11], using Large Deviation

techniques, it has been shown for a large class of stochastic

processes that

log P({X > x})

x

x, (2)

where the asymptotic decay rate, , is a positive constant that

can usually be calculated with accuracy, and the similarity re-

lation f(x)

x

g(x) means that for any > 0, there exists

an x

o

such that for all x > x

o

, f(x) lies in the (closed) in-

terval enclosed by (1 )g(x) and (1 + )g(x).

However,

This is a more general denition of similarity () than the typical denition

given by limx g(x)/ f(x) = 1.

0-7803-5420-6/99/$10.00 (c) 1999 IEEE

for many important types of processes, such as self-similar or

other long-range dependent processes [2], [14], the tail prob-

ability may not be exponential, and more generally, even the

above result may not hold. To address this problem, in [10],

the tremendous generality of Large Deviation techniques was

exploited, and the above result was extended through an ele-

gant scaling technique to obtain

log P({X > x})

x

q(x), (3)

where q(x) is some increasing function of x, which may not

be linear in x. Since q(x) can be obtained in a simple form

for many different types of processes, including many self-

similar processes, this result has accelerated recent progress in

studying the queueing behavior of network trafc. However,

the great generality of the results based on the Large Devia-

tion techniques do come at a cost: poor resolution. This is

because the similarity relation given by (3) captures only the

leading (most rapidly growing) termof log P({X > x}). For

example, if q(x) = x satises (3), then so does q(x) = x+

x,

even though it is a very different function of x. Therefore,

approximations for P({X > x}), based on (3), should be

used with some caution, since (3) provides relatively weak

theoretical support to the accuracy of these approximations.
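A small numeric illustration of this loss of resolution, using only the two exponents named above: the ratio $(x + \sqrt{x})/x$ tends to 1, so (3) cannot distinguish them, yet the corresponding tail estimates $e^{-x}$ and $e^{-x-\sqrt{x}}$ differ by the factor $e^{-\sqrt{x}}$, which vanishes as $x$ grows. The function name is illustrative.

```python
import math


def compare_exponents(x):
    """Contrast q1(x) = x with q2(x) = x + sqrt(x), two exponents allowed by (3)."""
    q1, q2 = x, x + math.sqrt(x)
    ratio_of_exponents = q2 / q1            # tends to 1: (3) cannot tell them apart
    ratio_of_tails = math.exp(-(q2 - q1))   # e^{-q2} / e^{-q1} = e^{-sqrt(x)} -> 0
    return ratio_of_exponents, ratio_of_tails


if __name__ == "__main__":
    for x in (1e2, 1e4, 1e6):
        print(x, *compare_exponents(x))
```

The tail ratio is computed from the difference of exponents rather than as a quotient of two exponentials, which would underflow to 0/0 for large $x$.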

In order to address this difficulty, our objective in this paper is to focus on Gaussian processes, including many types of long-range dependent processes, and develop a considerably stronger asymptotic relation.

Recently, Gaussian processes have received a lot of attention for the modeling and analysis of queueing behavior in high-speed networks [1], [5], [7], [8], [17]. There are many reasons for this. Due to the huge link capacity of high-speed networks, hundreds or even thousands of network applications are likely to be served by a multiplexer. For example, an OC-3 line (155.52 Mbps) can accommodate over 7700 typical voice calls at a link utilization of 0.8, and an OC-12 line (622.08 Mbps) can accommodate over 300 MPEG-1 (1.5 Mbps) video calls at the same link utilization. Many companies sell commercial switches that support OC-12 lines, and ATM networks with OC-24 (1.2 Gbps) lines are already operational (at Cambridge University, for example). Also, switches supporting link capacities of several gigabits per second (and higher) are on the horizon. All of this suggests that, by appealing to the Central Limit Theorem, we can accurately characterize the input process as a Gaussian process.
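The capacity figures can be checked with simple arithmetic. In the sketch below (ignoring cell and packet overhead), the video case uses the stated 1.5 Mbps per stream: $0.8 \times 622.08 / 1.5 \approx 331$ streams. For voice the per-call rate is not stated in the text, so the snippet only backs out the average rate implied by 7700 calls, roughly 16 kbps per call; that value is our inference, not a figure from the paper. Function names are illustrative.

```python
def max_calls(link_mbps, per_call_mbps, utilization=0.8):
    """Whole number of constant-rate calls that fit within utilization * link rate."""
    return int(link_mbps * utilization // per_call_mbps)


def implied_rate_kbps(link_mbps, calls, utilization=0.8):
    """Average per-call rate (kbps) implied by carrying `calls` at `utilization`."""
    return link_mbps * utilization / calls * 1000.0


if __name__ == "__main__":
    print(max_calls(622.08, 1.5))           # OC-12 carrying 1.5 Mbps MPEG-1 streams
    print(implied_rate_kbps(155.52, 7700))  # OC-3: average rate implied by 7700 calls
```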

Moreover, when a large number of sources are multiplexed, characterizing the input process with traditional Markovian models leads to computational infeasibility [19], a problem that is not encountered with Gaussian processes. Finally, a Gaussian process is completely specified by its first two moments, the mean and the autocovariance function. Therefore, to model the aggregate traffic as a Gaussian process, we need only the first two moments of either the aggregate traffic or the individual packet streams.

We assume that $\{X_t : t \ge 0\}$ is a Gaussian process with stationary increments such that $X_0 = 0$. We define $\kappa := -E\{X_t\}/t$ and $v_t := \mathrm{Var}\{X_t\}$. Since $X_t$ is Gaussian, $P(\{X_t > x\})$ can be expressed in terms of $v_t$, $\kappa$, and the standard Gaussian tail function $\Psi(w) := \int_w^\infty \exp(-z^2/2)\,dz/\sqrt{2\pi}$, as

$$P(\{X_t > x\}) = \Psi\left(\frac{x + \kappa t}{\sqrt{v_t}}\right).$$

† In earlier studies with traffic modeling, we have empirically found that typically a couple of hundred multiplexed sources are sufficient for the traffic to be modeled as a Gaussian process [5], [7].
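A minimal sketch of this formula: $\Psi$ is computed from the complementary error function, since $\Psi(w) = \tfrac12 \operatorname{erfc}(w/\sqrt{2})$. The drift $\kappa = 0.5$ and the variance function $v_t = St$ (Brownian motion with drift) are hypothetical choices for illustration only; for them the printed probabilities peak at $t = x/\kappa = 20$.

```python
import math


def gaussian_tail(w):
    """Standard Gaussian tail Psi(w) = P(Z > w) = erfc(w / sqrt(2)) / 2."""
    return 0.5 * math.erfc(w / math.sqrt(2.0))


def marginal_tail(x, t, kappa, v):
    """P(X_t > x) for Gaussian X_t with mean -kappa * t and variance v(t) > 0."""
    return gaussian_tail((x + kappa * t) / math.sqrt(v(t)))


if __name__ == "__main__":
    kappa, S = 0.5, 1.0             # hypothetical drift and variance scale
    v = lambda t: S * t              # hypothetical variance function v_t = S t
    for t in (1.0, 10.0, 20.0, 40.0, 80.0):
        print(t, marginal_tail(10.0, t, kappa, v))
```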

Assuming that $v_t/t^2 \to 0$ as $t \to \infty$, it is not difficult to see that $v_t/(x+\kappa t)^2$ should attain its maximum value at some finite $t = t_x$; since $\Psi$ is decreasing, the probability $P(\{X_t > x\})$ is then also maximized at $t = t_x$. Because $P(\{\bar{X} > x\}) \ge P(\{X_t > x\})$ for every $t$, the qualitative statement "rare events take place only in the most probable way" (e.g., see [10]) suggests that $P(\{X_{t_x} > x\}) = \sup_t P(\{X_t > x\})$ should be a good lower-bound approximation for $P(\{\bar{X} > x\})$. In fact, similar ideas have already been used in different ways to analyze and approximate the tail probability [7], [10], [11]. In [7], [8], we have provided a rigorous asymptotic result that theoretically supports the above qualitative statement (this result is both generalized and strengthened by Theorem 3 in this paper). Also, in those papers, for a fairly large class of Gaussian processes where the tail probability is asymptotically exponential, we have found that (i) $m_x := (x + \kappa t_x)^2/v_{t_x}$ (the reciprocal of the maximum value of $v_t/(x+\kappa t)^2$) contains important information about the shape of the tail probability curve, (ii) $\exp[-m_x/2]$ asymptotically bounds the tail probability from above, and (iii) $\exp[-m_x/2]$ provides a very accurate estimate of the tail probability over a wide range of queue lengths $x$, including small values of $x$.
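To make $t_x$ and $m_x$ concrete, the following sketch maximizes $v_t/(x+\kappa t)^2$ numerically with a plain golden-section search and then evaluates the lower bound $P(\{X_{t_x} > x\}) = \Psi(\sqrt{m_x})$ next to the estimate $\exp[-m_x/2]$. The variance function $v_t = t^{1.6}$, the drift $\kappa = 0.5$ and the search bracket are hypothetical choices; golden-section search assumes the objective is unimodal on the bracket, which holds for this power law but should be checked for other $v_t$.

```python
import math


def gaussian_tail(w):
    """Standard Gaussian tail Psi(w) = P(Z > w)."""
    return 0.5 * math.erfc(w / math.sqrt(2.0))


def golden_max(f, lo, hi, tol=1e-10):
    """Maximize a unimodal function f on [lo, hi] by golden-section search."""
    r = (math.sqrt(5.0) - 1.0) / 2.0          # 1/phi, about 0.618
    a, b = lo, hi
    c, d = b - r * (b - a), a + r * (b - a)
    while b - a > tol * max(1.0, abs(a) + abs(b)):
        if f(c) > f(d):                       # maximum lies in [a, d]
            b, d = d, c
            c = b - r * (b - a)
        else:                                 # maximum lies in [c, b]
            a, c = c, d
            d = a + r * (b - a)
    return 0.5 * (a + b)


def dominant_time_scale(x, kappa, v, t_max):
    """Return (t_x, m_x): the maximizer of v(t)/(x + kappa t)^2 on [0, t_max] and m_x."""
    t_x = golden_max(lambda t: v(t) / (x + kappa * t) ** 2, 0.0, t_max)
    return t_x, (x + kappa * t_x) ** 2 / v(t_x)


if __name__ == "__main__":
    kappa = 0.5                        # hypothetical drift
    v = lambda t: t ** 1.6             # hypothetical variance function, alpha = 1.6
    for x in (1.0, 10.0, 100.0):
        t_x, m_x = dominant_time_scale(x, kappa, v, t_max=1e3 * x)
        print(x, t_x, m_x, gaussian_tail(math.sqrt(m_x)), math.exp(-m_x / 2.0))
```

Since $\Psi(w) \le \tfrac12 e^{-w^2/2}$ for $w \ge 0$, the printed lower bound always sits below $\exp[-m_x/2]$.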

In this paper, we consider a more general class of Gaussian processes, including a large class of long-range dependent processes, and show that

$$\log P(\{\bar{X} > x\}) + \frac{m_x}{2} \in O(\log x), \qquad (4)$$

where $O(f(x))$ denotes the set of functions $g(x)$ such that $\limsup_{x \to \infty} |g(x)/f(x)| < \infty$. Observe that (4) characterizes $\log P(\{\bar{X} > x\})$ in much more detail than (3). Further, (4) suggests that the asymptotic behavior of $\log P(\{\bar{X} > x\})$ is very similar to that of $-m_x/2$, and that the difference between them is asymptotically either a constant (as found in [7] and [8] in a more restrictive setting) or a very slowly growing function of $x$. Therefore, (4) provides more information on the asymptotic behavior of $P(\{\bar{X} > x\})$ than (3), and suggests that the simple approximation $\exp[-m_x/2]$ can be used to estimate $P(\{\bar{X} > x\})$ even for long-range dependent $X_t$. In Section IV, we will show that the improvement from (3) to (4) can be critical for the accurate characterization (or estimation) of the tail probability.
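One case that can be checked entirely by hand is Brownian motion with drift, $v_t = St$ (so $\alpha = 1$). Maximizing $St/(x+\kappa t)^2$ gives $t_x = x/\kappa$ and $m_x = 4\kappa x/S$, while the classical result for Brownian motion with negative drift gives $P(\{\bar{X} > x\}) = \exp(-2\kappa x/S)$ exactly, so $\log P(\{\bar{X} > x\}) + m_x/2 = 0$, which trivially lies in $O(\log x)$. The sketch below, with hypothetical parameter values, confirms these closed forms numerically; it is a sanity check of the notation, not an illustration of the long-range dependent case.

```python
import math


def bm_quantities(x, kappa, S):
    """Closed forms for v_t = S t: t_x, m_x, and the exact tail P(sup_t X_t > x)."""
    t_x = x / kappa                                   # maximizer of S t/(x+kappa t)^2
    m_x = (x + kappa * t_x) ** 2 / (S * t_x)          # equals 4 kappa x / S
    exact_tail = math.exp(-2.0 * kappa * x / S)       # Brownian motion with drift -kappa
    return t_x, m_x, exact_tail


def grid_argmax(x, kappa, S, n=20_000):
    """Brute-force check that t_x maximizes S t/(x + kappa t)^2 on (0, 4 x/kappa]."""
    t_hi = 4.0 * x / kappa
    grid = [t_hi * (i + 1) / n for i in range(n)]
    return max(grid, key=lambda t: S * t / (x + kappa * t) ** 2)


if __name__ == "__main__":
    kappa, S = 0.5, 2.0                               # hypothetical parameters
    for x in (1.0, 10.0, 100.0):
        t_x, m_x, p = bm_quantities(x, kappa, S)
        print(x, t_x, grid_argmax(x, kappa, S), math.log(p) + m_x / 2.0)
```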

Here we should distinguish the work in this paper from some results in the literature. All of the above discussion (including the work in this paper) concerns x-asymptotics, i.e., the asymptotic behavior of $P(\{Q > x\})$ as the queue length $x$ increases. There has been recent work that focuses on the asymptotic behavior of $P(\{Q > x\})$ when the number of sources, the queue length, and the service rate are all proportionally sent to infinity (e.g., [3], [16]). We classify these studies as M-asymptotics, where $M$ represents the number of sources in the system. In particular, Montgomery and De Veciana [16] have significantly strengthened the corresponding log-similarity relation in [3] using Bahadur-Rao asymptotics, and obtained asymptotic bounds for the tail probability. Note, however, that M-asymptotics considers a limit in a different direction from that in x-asymptotics. Therefore, results in M-asymptotics cannot be extended to x-asymptotics (and vice versa) unless very strong properties such as uniformity of convergence hold (which is usually not the case). Hence, the results in this paper belong to a different category from those in M-asymptotics. However, as a side result of one of our propositions, we show in Section III that we can further strengthen the M-asymptotic result in [16] for Gaussian input processes.

As a final note, due to space limitations, we do not provide any proofs for the theoretical results in this paper. Interested readers are referred to our technical report [6].

II. PRELIMINARIES

Throughout this paper we assume that $\kappa = -E\{X_t\}/t > 0$ and that $v_t = \mathrm{Var}\{X_t\}$ is twice differentiable. Note that if the input process $\lambda_t$ is a Gaussian process with stationary increments (which, as mentioned before, is a good characterization of the aggregate process to a high-speed multiplexer), and the service process $M_t$ increases linearly with $t$ at a fixed rate $\mu$ (i.e., a constant service rate, which is typical in many high-speed network models), then $X_t = \lambda_0 - \lambda_{-t} - \mu t$ is also a Gaussian process with stationary increments.

We define $\nu(t) := \log v_t$ and $\alpha := \lim_{t \to \infty} \nu(t)/\log t$ (we assume that the limit exists). It should be noted that, from the stationary increments property, $\alpha$ cannot be greater than 2. We assume that $\alpha \in [1, 2)$, which covers the majority of non-trivial Gaussian processes with stationary increments. We next list a few conditions on $v_t$ (and $\nu(t)$) which will frequently be referred to throughout this paper.

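$$\lim_{t \to \infty} t\,\nu'(t) = \alpha. \qquad \text{(c1)}$$

$$\lim_{t \to \infty} t^2\,\nu''(t) = -\alpha. \qquad \text{(c2)}$$

$$v_t \sim_t S\,t^\alpha \quad \text{for some } S > 0. \qquad \text{(c3)}$$

$$\limsup_{t \to 0} \frac{v_t}{t^\alpha} < \infty. \qquad \text{(c4)}$$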

Conditions (c1) and (c2) are a direct consequence of the definition of $\alpha$ (i.e., $\alpha := \lim_{t \to \infty} \nu(t)/\log t$) as long as L'Hospital's rule can be applied. To elaborate, differentiating both the numerator ($\nu(t)$) and the denominator ($\log t$), we get $\nu'(t)/(1/t) = t\,\nu'(t)$, the left-hand side of (c1). Now, if the limit in (c1) exists, it must equal $\alpha$. Similarly, differentiating once more (i.e., applying L'Hospital's rule to $\nu'(t)/(1/t)$), we get $\nu''(t)/(-1/t^2) = -t^2\nu''(t)$, the negative of the left-hand side of (c2). Hence, if the limit in (c2) exists, it must equal $-\alpha$. Condition (c3) is closely related to the self-similarity of $X_t$, i.e., if $X_t$ is (asymptotically) self-similar, then (c3) holds for some $\alpha > 1$ (for more about self-similarity and its origins, see [14] and references therein). Also, a fairly general class of long-range dependent $X_t$ satisfies (c3) for some $\alpha > 1$. Here it should be noted that strictly second-order self-similar Gaussian processes, i.e., fractional Brownian motion processes, constitute but a very small subset of the long-range dependent processes covered by our conditions with $\alpha > 1$. Condition (c4) concerns the behavior of $v_t$ around $t = 0$, and will be satisfied if $v_t$ decreases as fast as, or faster than, $t^\alpha$ as $t \to 0$. In particular, when $X_t$ can be expressed as the integral of a stationary Gaussian process, (c4) holds for any $\alpha \le 2$.
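Conditions (c1) and (c2) can be checked numerically for a given variance function. Writing $g(s) = \nu(e^s)$, one has $g'(s) = t\,\nu'(t)$ and $g''(s) = t\,\nu'(t) + t^2\nu''(t)$, so both limits can be estimated with central differences in $s = \log t$. The variance $v_t = t + t^{1.6}$ below is a hypothetical mixture of a short-range ($\alpha = 1$) and a long-range ($\alpha = 1.6$) component, chosen so that $\alpha = 1.6$ but the limits are approached only gradually; the step size is likewise an illustrative choice.

```python
import math


def log_scale_derivatives(nu, t, h=1e-3):
    """Estimate (t nu'(t), t^2 nu''(t)) by central differences in s = log t.

    With g(s) = nu(e^s): g'(s) = t nu'(t) and g''(s) = t nu'(t) + t^2 nu''(t).
    """
    s = math.log(t)
    g = lambda u: nu(math.exp(u))
    g1 = (g(s + h) - g(s - h)) / (2.0 * h)
    g2 = (g(s + h) - 2.0 * g(s) + g(s - h)) / h ** 2
    return g1, g2 - g1


def nu_mixed(t):
    """nu(t) = log v_t for the hypothetical variance v_t = t + t**1.6 (alpha = 1.6)."""
    return math.log(t + t ** 1.6)


if __name__ == "__main__":
    for t in (1e1, 1e4, 1e8):
        print(t, *log_scale_derivatives(nu_mixed, t))  # tend to 1.6 and -1.6 as t grows
```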

The parameter $\alpha$ in our definition is directly related to the well-known Hurst (or self-similarity) parameter $H$ by $\alpha = 2H$. Also, the empirical estimate of $\alpha$ has been widely used to observe self-similarity in various types of network traffic and to calculate the corresponding Hurst parameters [2], [14].

We begin with an important property of the time instant $t_x$ at which $v_t/(x+\kappa t)^2$ attains its maximum value. More specifically, we show that $t_x$ is asymptotically a linear function of $x$.

Proposition 1 (Proposition 1 of [6]): Under hypothesis (c1),

$$t_x \sim_x \frac{\alpha x}{\kappa(2-\alpha)}.$$
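Proposition 1 can be illustrated numerically. The first-order condition for maximizing $\log v_t - 2\log(x+\kappa t)$ is $t\,\nu'(t) = 2\kappa t/(x+\kappa t)$. For a pure power law $v_t = S t^\alpha$, $t\,\nu'(t) = \alpha$ and the solution is exactly $t_x = \alpha x/(\kappa(2-\alpha))$; for the hypothetical mixed variance $v_t = t + t^{1.6}$ the ratio $t_x/x$ only approaches $\alpha/(\kappa(2-\alpha)) = 8$ (with $\kappa = 0.5$) as $x$ grows. The sketch solves the condition by bisection; the bracket and parameter values are illustrative assumptions.

```python
def dominant_time_bisect(x, kappa, t_nu_prime, lo=1e-9, hi=None, iters=200):
    """Solve t nu'(t) = 2 kappa t / (x + kappa t), the first-order condition for
    maximizing log v_t - 2 log(x + kappa t), by bisection.

    Assumes the difference (left side minus right side) is positive at `lo`,
    negative at `hi`, and changes sign only once in between.
    """
    if hi is None:
        hi = 1e3 * x / kappa   # here 2 kappa t/(x + kappa t) is close to 2 > alpha
    h = lambda t: t_nu_prime(t) - 2.0 * kappa * t / (x + kappa * t)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if h(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def t_nu_prime_mixed(t):
    """t * d/dt log(t + t**1.6) for the hypothetical variance v_t = t + t**1.6."""
    u = t ** 0.6
    return (1.0 + 1.6 * u) / (1.0 + u)


if __name__ == "__main__":
    kappa, alpha = 0.5, 1.6
    limit = alpha / (kappa * (2.0 - alpha))           # Proposition 1 slope, about 8
    for x in (1e1, 1e3, 1e5, 1e7):
        print(x, dominant_time_bisect(x, kappa, t_nu_prime_mixed) / x, limit)
    # Pure power law v_t = S t**alpha: t nu'(t) = alpha exactly, so t_x = limit * x.
    print(dominant_time_bisect(10.0, kappa, lambda t: alpha) / 10.0)
```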

It should be noted that even if there are multiple indices at which $v_t/(x+\kappa t)^2$ attains its maximum, Proposition 1 holds for any choice of $t_x$ among these indices. In fact, all the subsequent results in this paper are independent of the choice of $t_x$. Also, as will be shown in the following section, under certain conditions $t_x$ becomes unique as $x$ increases.

The next proposition concerns the asymptotic behavior of

$$m_x = \frac{(x+\kappa t_x)^2}{v_{t_x}} = 1 \Big/ \left[\sup_{t \ge 0} \frac{v_t}{(x+\kappa t)^2}\right],$$

and can easily be derived using Proposition 1.

Proposition 2 (Proposition 2 of [6]): Under hypotheses (c1) and (c3),

$$m_x \sim_x \frac{4\kappa^\alpha x^{2-\alpha}}{S\,\alpha^\alpha (2-\alpha)^{2-\alpha}}.$$
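For a pure power law $v_t = S t^\alpha$ the asymptotic relation $m_x \sim_x 4\kappa^\alpha x^{2-\alpha}/(S\alpha^\alpha(2-\alpha)^{2-\alpha})$ holds with equality for every $x$, which gives an easy consistency check: the sketch compares this closed form with $m_x$ computed directly from $t_x = \alpha x/(\kappa(2-\alpha))$. Parameter values are hypothetical; for $\alpha = 1$ both reduce to $4\kappa x/S$.

```python
import math


def m_x_direct(x, kappa, S, alpha):
    """m_x = (x + kappa t_x)^2 / v_{t_x} for v_t = S t**alpha, using the exact t_x."""
    t_x = alpha * x / (kappa * (2.0 - alpha))
    return (x + kappa * t_x) ** 2 / (S * t_x ** alpha)


def m_x_closed_form(x, kappa, S, alpha):
    """4 kappa^alpha x^(2-alpha) / (S alpha^alpha (2-alpha)^(2-alpha))."""
    return (4.0 * kappa ** alpha * x ** (2.0 - alpha)
            / (S * alpha ** alpha * (2.0 - alpha) ** (2.0 - alpha)))


if __name__ == "__main__":
    for alpha in (1.0, 1.3, 1.6, 1.9):
        for x in (1.0, 1e2, 1e4):
            a = m_x_direct(x, 0.5, 2.0, alpha)
            b = m_x_closed_form(x, 0.5, 2.0, alpha)
            print(alpha, x, a, b, math.isclose(a, b, rel_tol=1e-12))
```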

For convenience, we define a stochastic process $\{Y_t^{(x)} : t \ge 0\}$ for each $x > 0$ as

$$Y_t^{(x)} := \frac{\sqrt{m_x}\,(X_{xt} + \kappa x t)}{x(\kappa t + 1)}.$$

From the definition of $Y_t^{(x)}$, it directly follows that for all $x > 0$ and $t \ge 0$,

$$X_t > x \quad \text{if and only if} \quad Y_{t/x}^{(x)} > \sqrt{m_x}. \qquad (5)$$

Therefore, $P(\{\bar{X} > x\})$ is equal to $P(\{\bar{Y}^{(x)} > \sqrt{m_x}\})$.

This is important because we will study the supremum distribution of $X_t$ through $Y_t^{(x)}$. One can easily verify that $Y_t^{(x)}$ is a centered (zero-mean) Gaussian process, and its variance can be obtained in terms of $v_t$ as

$$\sigma_{x,t}^2 := \mathrm{Var}\{Y_t^{(x)}\} = \frac{m_x\, v_{xt}}{x^2(\kappa t + 1)^2}.$$

From the definition of $m_x$, note that $\sigma_{x,t}^2$ attains its maximum value of 1 at $\hat{t}_x := t_x/x$ (substituting $xt = t_x$ gives $m_x v_{t_x}/(x + \kappa t_x)^2 = 1$).
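The normalization can be checked numerically. With the hypothetical choices $v_t = t^{1.6}$, $\kappa = 0.5$ and $x = 10$ (so that $t_x = 80$ and $\hat{t}_x = 8$), the sketch evaluates $\sigma^2_{x,t} = m_x v_{xt}/(x^2(\kappa t+1)^2)$ on a grid and locates its maximum, which should be 1 at $\hat{t}_x$.

```python
def sigma2(x, t, kappa, v, m_x):
    """Variance of Y_t^(x): m_x * v(x t) / (x^2 (kappa t + 1)^2)."""
    return m_x * v(x * t) / (x ** 2 * (kappa * t + 1.0) ** 2)


if __name__ == "__main__":
    x, kappa, alpha = 10.0, 0.5, 1.6                  # hypothetical parameters
    v = lambda t: t ** alpha                          # hypothetical variance v_t = t^1.6
    t_x = alpha * x / (kappa * (2.0 - alpha))         # exact maximizer for a power law
    m_x = (x + kappa * t_x) ** 2 / v(t_x)
    grid = [0.01 * k for k in range(1, 3001)]         # t in (0, 30]
    best = max(grid, key=lambda t: sigma2(x, t, kappa, v, m_x))
    print(t_x / x, best, sigma2(x, best, kappa, v, m_x))
```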

We next present the main results in the paper.

III. MAIN RESULTS

We first demonstrate the importance of the time instant $t_x$ at which $v_t/(x+\kappa t)^2$ attains its maximum value.


A. Importance of the Dominant Time Scale $t_x$

The first theorem in this section is an improved version of Theorem 4 in [8]. Informally, this theorem shows how the tail probability $P(\{\bar{Y}^{(x)} > \sqrt{m_x}\})$ becomes concentrated around $\hat{t}_x = t_x/x$ as $x$ increases.

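Theorem 3 (Theorem 1 of [6]): Let $\epsilon > 0$ and $E_x := \big[\hat{t}_x - x^{-(2-\alpha)/2+\epsilon},\ \hat{t}_x + x^{-(2-\alpha)/2+\epsilon}\big]$. Then, under hypotheses (c1) through (c4),

$$\lim_{x \to \infty} \frac{P(\{\bar{Y}^{(x)}_{E_x} > \sqrt{m_x}\})}{P(\{\bar{Y}^{(x)} > \sqrt{m_x}\})} = 1.$$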

Since the event $\{\bar{Y}^{(x)}_{E_x} > \sqrt{m_x}\}$ is contained in $\{\bar{Y}^{(x)} > \sqrt{m_x}\}$, we can rewrite Theorem 3 in terms of conditional probability as

$$\lim_{x \to \infty} P\big(\{\bar{Y}^{(x)}_{E_x} > \sqrt{m_x}\} \,\big|\, \{\bar{Y}^{(x)} > \sqrt{m_x}\}\big) = 1, \qquad (6)$$

and its implication then becomes more evident. In other words, (6) tells us that, conditioned on the event that the process $Y^{(x)}$ eventually exceeds the threshold $\sqrt{m_x}$, the probability that it exceeds this threshold within a relatively small interval around $\hat{t}_x$ goes to 1 as $x$ goes to infinity. Note that if we choose $\epsilon$ small enough, the size of the interval $E_x$ around $\hat{t}_x$ decreases to 0 as $x$ increases. Hence, (6) tells us that the rarer the event becomes, the more it concentrates around the most likely time $\hat{t}_x$.

Therefore, Theorem 3 can be interpreted as a rigorous verification of the statement "rare events take place only in the most probable way." Further, Theorem 3 more directly explains why the lower bound $P(\{X_{t_x} > x\}) = \Psi(\sqrt{m_x})$ so tightly bounds the tail probability $P(\{Y^{(x)} > \sqrt{m_x}\}) = P(\{X > x\})$.

In our previous research [5], [7], we numerically investigated the accuracy of this lower bound as an approximation to $P(\{X > x\})$ in a more restrictive setting, i.e., when $X_t$ can be expressed as

$$X_t = \int_0^t \lambda_\tau \, d\tau, \tag{7}$$

where $\lambda_t$ is a stationary Gaussian process with negative mean $-\kappa$ and absolutely integrable autocovariance. There, we found that the lower bound is fairly accurate and matches the curve of $P(\{X > x\})$ over a wide range of values of $x$. Further, in [7], [8], we showed that $\exp[-m_x/2]$ provides an asymptotic upper bound to $P(\{X > x\})$. Interestingly, empirical results show that this asymptotic upper bound behaves like a global upper bound. It approximates the tail probability from above as accurately as the lower bound does from below.

Note that it can easily be verified that the absolute integrability of the autocovariance of $\lambda_t$ in (7) implies that the corresponding value of $\alpha$ must be equal to 1. In other words, our previous results do not cover the cases when $X_t$ exhibits long-range dependence. However, Theorem 3 is valid for a more general class of processes $X_t$, including those exhibiting long-range dependence. We therefore expect that both the lower bound $\Psi(\sqrt{m_x})$ and the asymptotic upper bound $\exp[-m_x/2]$ will accurately approximate the tail probability, even for long-range dependent $X_t$. We will next provide an important asymptotic property of $P(\{X > x\})$ that supports this conjecture.

Footnote: The empirical results above make sense because $\exp[-m_x/2]$ is not very different from $\Psi(\sqrt{m_x}) \sim \exp[-m_x/2]/\sqrt{2\pi m_x}$ as $x \to \infty$.
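Once $m_x$ is known, both bounds are easy to evaluate. The sketch below computes $\Psi(\sqrt{m_x})$ through the complementary error function and compares it with $\exp[-m_x/2]$; the function names are hypothetical. By the standard Mills-ratio inequalities, the ratio of the two lies between $\sqrt{2\pi m_x}$ and $\sqrt{2\pi m_x}\,(1 + 1/m_x)$. Because this ratio grows only like a power of $m_x$, the two curves stay close on a logarithmic scale.

```python
import math
from typing import Tuple

# Hypothetical helpers (illustrative sketch only).

def gaussian_tail(w: float) -> float:
    """Psi(w) = P(N(0,1) > w), evaluated via the complementary error function."""
    return 0.5 * math.erfc(w / math.sqrt(2.0))

def tail_bounds(m_x: float) -> Tuple[float, float]:
    """Return (Psi(sqrt(m_x)), exp(-m_x/2)): the lower bound P({X_{t_x} > x})
    and the asymptotic upper bound that also serves as approximation (11)."""
    lower = gaussian_tail(math.sqrt(m_x))
    upper = math.exp(-m_x / 2.0)
    return lower, upper
```

For example, `tail_bounds(50.0)` gives two values whose ratio, divided by $\sqrt{2\pi \cdot 50}$, falls between 1 and 1.02.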

B. A Strong Asymptotic Result for P({X > x})

We begin this section with a theorem showing that the log of the tail probability, $\log P(\{X > x\})$ (or equivalently $\log P(\{Q > x\})$), diverges from $-m_x/2$ at most logarithmically.

Theorem 4 (Theorem 2 of [6]) Under hypotheses (c1)–(c4),

$$-\infty < \liminf_{x\to\infty} \frac{1}{\log x}\Big[\log P(\{X > x\}) + \frac{m_x}{2}\Big] \le \limsup_{x\to\infty} \frac{1}{\log x}\Big[\log P(\{X > x\}) + \frac{m_x}{2}\Big] < \infty.$$

Note that (4) in the introduction is a compact form of Theorem 4. The theorem suggests that $-m_x/2$ is a good estimate of $\log P(\{X > x\}) = \log P(\{Y^{(x)} > \sqrt{m_x}\})$, in the sense that the error can grow at most on the order of $\log x$. In other words,

$$P(\{X > x\}) = \exp\Big[-\frac{m_x}{2} + r(x)\Big], \tag{8}$$

where $r(x) := \log P(\{X > x\}) + m_x/2 \in O(\log x)$.

We next relate our result to existing Large Deviation results. It should be observed that the leading term of $m_x$, by itself, satisfies the Large Deviation relation (3). That is, from Proposition 2 and Theorem 4, we have

$$\log P(\{X > x\}) \underset{x\to\infty}{\sim} -\frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}. \tag{9}$$

The right-hand side of the above relation has been obtained for the specific case of Fractal Brownian motion [17], and we believe it can also be obtained in greater generality by using the results in [10]. Define $R(x) := \log P(\{X > x\}) + 2\kappa^\alpha x^{2-\alpha}/\big(S\alpha^\alpha(2-\alpha)^{2-\alpha}\big)$. The tail probability can then also be written as

$$P(\{X > x\}) = \exp\Big[-\frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}} + R(x)\Big]. \tag{10}$$

Further, it follows from (9) that $R(x) \in o(x^{2-\alpha})$, where $o(f(x))$ denotes the set of functions $g(x)$ such that $\lim_{x\to\infty} |g(x)/f(x)| = 0$. Since $o(x^{2-\alpha})$ is a much larger set than $O(\log x)$, (4) characterizes the asymptotic tail behavior in much more detail than (9). It therefore significantly improves upon the resolution of (9). As will be illustrated in the following section, this improvement can be critical for accurately characterizing the asymptotic behavior of the tail probability. For example, consider the two approximations

$$P(\{X > x\}) \approx \exp\Big[-\frac{m_x}{2}\Big] \quad\text{and} \tag{11}$$

$$P(\{X > x\}) \approx \exp\Big[-\frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}\Big], \tag{12}$$

naturally suggested by (8) and (10), respectively. From the definitions of $r(x)$ and $R(x)$, $\exp[r(x)]$ and $\exp[R(x)]$ can be viewed as the multiplicative factors that cause the error of the approximations (11) and (12), respectively. The deviation of each factor from 1 reflects the inaccuracy of the corresponding approximation. Note that the multiplicative factor of (11) can increase (or decrease) at most as a power of $x$ (e.g., on the order of $x^{3/2}$ or $1/x^2$). In contrast, the factor of (12) can increase (or decrease) as an exponential function of $x$ (e.g., on the order of $\exp[\sqrt{x}]$ or $\exp[-x^{2/3}]$). This indicates that, for Gaussian $X_t$, we can significantly reduce the possible error by using (11) instead of (12).

Interestingly, the approximation for $P(\{Q > x\})$ based on the large deviation M-asymptotics result of Botvich and Duffield [3] yields the same expression as $\exp[-m_x/2]$ when applied to Gaussian fluid queues. Recall from the introduction that the M-asymptotics result in [16] improved upon the result in [3] (from log-similarity to near-similarity). An approximation equivalent to the lower bound $\Psi(\sqrt{m_x})$ was suggested based on these stronger asymptotics.

Both the lower bound and the approximation (11) provide accurate estimates of the tail probability. However, [7] shows that when the tail is asymptotically exponential (i.e., the input is not long-range dependent), the (logarithmic) error of the lower bound diverges to $-\infty$, while that of (11) is bounded. Thus an approximation that satisfies only the weaker asymptotics in the M-asymptotic sense [3] may perform better in the x-asymptotic sense (and vice versa). As mentioned in Section I, this is because x-asymptotics and M-asymptotics consider asymptotic properties of $P(\{Q > x\})$ in different limiting regimes.

As mentioned earlier, the uniqueness of $t_x$ (or $\tilde t_x$) is not a major issue in this paper. When there are multiple indices at which $v_t/(x+\kappa t)^2$ attains its maximum, all the results are valid for any choice of $t_x$. However, from a practical viewpoint, it may be important to know whether $t_x$ is unique, and how easily we can find $t_x$ and compute the value of $m_x$. For example, when $\exp[-m_x/2]$ is computed to approximate $P(\{X > x\})$, its accuracy and computation time depend on how fast and accurately we can calculate $m_x$. Since neither $t_x$ nor $m_x$ can generally be obtained in a simple closed form, search algorithms are likely to be used to compute them. The following proposition guarantees that, for large $x$, such a search computes $t_x$ and $m_x$ accurately and quickly.

Proposition 5 (Proposition 3 of [6]) Under hypotheses (c1) and (c2), for all sufficiently large $x$, $\log\big(v_t/(x+\kappa t)^2\big)$ is strictly concave on $\big[\alpha x/(2\kappa),\ (\alpha + \sqrt{\alpha/2})\,x/(\kappa(2-\alpha))\big]$. Moreover, there is a unique index $t_x$ at which $v_t/(x+\kappa t)^2$ attains its maximum.

Proposition 5 tells us that when $x$ is large, $t_x$ and $m_x$ can be computed by a simple local search algorithm starting at $\alpha x/(\kappa(2-\alpha))$. The proposition is valid only for large enough $x$. Nevertheless, in our numerical studies, local search algorithms usually find $t_x$ and $m_x$ accurately within a small number of iterations, even for fairly small values of $x$. This is because $v_t/(x+\kappa t)^2$ usually has a distinctly unimodal shape even for small $x$.
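A minimal sketch of such a local search is given below. It assumes $v_t$ is available as a Python callable and that the objective is unimodal near the starting point. The search hill-climbs on $g(t) = \log v_t - 2\log(x+\kappa t)$, whose maximizer is $t_x$, starting from the leading-order guess $\alpha x/(\kappa(2-\alpha))$ and halving the step size whenever no move improves $g$. The function name, step rule and tolerance are hypothetical illustrative choices, not the algorithm used in [6].

```python
import math
from typing import Callable, Tuple

# Hypothetical helper (illustrative sketch only, not the procedure of [6]).

def local_search_tx(x: float, kappa: float, alpha: float,
                    variance: Callable[[float], float],
                    rel_tol: float = 1e-10,
                    max_iter: int = 10_000) -> Tuple[float, float]:
    """Return (t_x, m_x) by maximizing g(t) = log v_t - 2 log(x + kappa t).

    1-D pattern search: try t + step and t - step, move if g improves,
    otherwise halve the step. Assumes g is unimodal near the start.
    """
    def g(t: float) -> float:
        v = variance(t)
        if t <= 0.0 or v <= 0.0:
            return -math.inf          # outside the admissible region
        return math.log(v) - 2.0 * math.log(x + kappa * t)

    t = alpha * x / (kappa * (2.0 - alpha))   # leading-order starting point
    g_t = g(t)
    step = 0.5 * t
    for _ in range(max_iter):
        if step <= rel_tol * t:
            break
        for cand in (t + step, t - step):
            g_c = g(cand)
            if g_c > g_t:
                t, g_t = cand, g_c
                break
        else:                                 # no improvement in either direction
            step *= 0.5
    m_x = (x + kappa * t) ** 2 / variance(t)
    return t, m_x
```

With $v_t = St^\alpha$ the starting point is already the exact maximizer, so the search stays put. With the variance (22) of Section IV-B, it moves to the right of the leading-order guess.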

It is also worth noting that Proposition 5 can strengthen the M-asymptotics result in [16] to a similarity relation. In [16, Theorem A.1], it has been shown for general fluid queues that

$$1 \le \liminf_{M\to\infty} c_M\, P(\{Q_M > Mx\}) \le \limsup_{M\to\infty} c_M\, P(\{Q_M > Mx\}) \le K.$$

Here $Q_M$ is the steady-state queue length of a queue that serves $M$ identical input processes at a service rate proportional to $M$; for the definition of $c_M$, refer to [16]. When the input processes are stationary Gaussian, it turns out that $K$ is the number of points $t$ at which $\sigma^2_{Mx,t}$ of the queue attains its maximum. Therefore, Proposition 5 implies that when $x$ is sufficiently large, we have $K = 1$. The above relation can then be rewritten in the stronger form

$$P(\{Q_M > Mx\}) \underset{M\to\infty}{\sim} \frac{1}{c_M}.$$

In the next section, we study the asymptotic properties of $m_x$ in more detail, as well as the effect of its secondary terms on the asymptotic behavior of $P(\{X > x\})$.

IV. THE IMPACT OF $\mathrm{Var}\{X_t\}$ ON THE ASYMPTOTIC BEHAVIOR OF $m_x$ AND $P(\{X > x\})$

In this section, we introduce our third theorem, which relates the asymptotic behavior of $\mathrm{Var}\{X_t\}$ to that of $m_x$ and $P(\{X > x\})$. We begin with a simple example: Fractal Brownian motion processes, a well-known and well-studied class of self-similar processes [17].

A. Fractal Brownian Motion Process

The standard (normalized) Fractal Brownian motion process $\{B^{(H)}_t : t \ge 0\}$ with Hurst parameter $H \in [1/2, 1)$ is a centered Gaussian process with stationary increments that possesses the following properties [17]:

(a) $B^{(H)}_0 = 0$,

(b) $\mathrm{Var}\{B^{(H)}_t\} = t^{2H}$,

(c) $B^{(H)}_t$ is sample-path continuous.

We first study the supremum distribution of $X_t := \sqrt{S}\,B^{(H)}_t - \kappa t$, which is often called Fractal Brownian motion with negative linear drift.

From the above properties of Fractal Brownian motion processes, one can easily verify that $X_t$ satisfies all conditions (c1)–(c4) with $\alpha = 2H$. Also, in this case we can compute $m_x$ explicitly as

$$m_x = \frac{4\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}. \tag{13}$$
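For completeness, (13) can be checked directly, assuming $v_t = St^\alpha$ exactly (which holds here, since $\mathrm{Var}\{\sqrt{S}\,B^{(H)}_t\} = St^{2H}$). Setting the derivative of $\log\big((x+\kappa t)^2/(St^\alpha)\big)$ to zero locates $t_x$, and substituting back gives $m_x$:

$$\frac{d}{dt}\Big[2\log(x+\kappa t) - \alpha\log t\Big] = \frac{2\kappa}{x+\kappa t} - \frac{\alpha}{t} = 0 \;\Longrightarrow\; t_x = \frac{\alpha x}{\kappa(2-\alpha)}, \qquad x + \kappa t_x = \frac{2x}{2-\alpha},$$

$$m_x = \frac{(x+\kappa t_x)^2}{S\,t_x^{\alpha}} = \frac{4x^2}{(2-\alpha)^2}\cdot\frac{\kappa^\alpha(2-\alpha)^\alpha}{S\,\alpha^\alpha x^\alpha} = \frac{4\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}.$$

In particular, $m_x/2$ coincides with the exponent in (12) for this process.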

Therefore, for Fractal Brownian motion processes with negative linear drift, (9) can be strengthened (by substituting (13) into (4)) to

$$\log P(\{X > x\}) + \frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}} \in O(\log x). \tag{14}$$

In other words, for Fractal Brownian motion with negative linear drift, Theorem 4 in fact gives stronger support to the approximation based on Large Deviation techniques. Note that in this case (11) and (12) result in the same approximation. Given this fact, and our numerical studies [7] on the accuracy of the approximation (11), we expect that (12) will be an accurate approximation over a wide range of $x$.

[Figure 2: Tail Probability $P(\{X > x\})$ versus Supremum Value $x$; curves: Simulation, and $e^{-m_x/2} = e^{-2\kappa^\alpha x^{2-\alpha}/(S\alpha^\alpha(2-\alpha)^{2-\alpha})}$.]

Fig. 2. The tail probability $P(\{X > x\})$ for $X_t = B^{(0.8)}_t - 2t$ computed by simulations and (12). Since $X_t$ is a Fractal Brownian motion process with drift, (11) and (12) result in the same approximation.

As an example, see Fig. 2, where we plot $P(\{X > x\})$ for $X_t = B^{(0.8)}_t - 2t$. Since the exact tail probability cannot be computed, it has been estimated through simulations. To improve the reliability of the simulation results, we used the Importance Sampling technique described in [12]. To show the accuracy of the estimates, 99% confidence intervals of the simulation results have been computed using the method of batch means [4]. However, the simulation results are so accurate that the confidence intervals are virtually invisible in the figure.

Since the simulation has been performed in discrete time, the tail probability estimated via simulations is actually the probability that $X_t$ exceeds $x$ at some integer $t$. Hence, the simulation results provide an accurate lower bound for the tail probability $P(\{X > x\})$. Nevertheless, one can see that the approximation (12) accurately captures the tail probability over the entire range of $x$ shown in the figure.

It should be noted that (9) can be strengthened to (14) for Fractal Brownian motion processes with negative linear drift because $m_x/2$ does not differ from the leading term captured by (9). In fact, since we know that $r(x) \in O(\log x)$, one can easily see that (14) holds if and only if

$$r(x) - R(x) = \frac{m_x}{2} - \frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}} \in O(\log x). \tag{15}$$

Therefore, if (15) holds, (14) supports the approximation (12) just as strongly as (4) supports the approximation (11).

We next provide our third theorem. It shows, with more precision than Proposition 2, how the asymptotic behavior of $\mathrm{Var}\{X_t\}$ impacts the asymptotic behavior of $m_x$. The theorem leads to a corollary that provides a sufficient condition for (15) to hold.

Footnote: Similar observations have been made in [17]. In that paper, the author used the lower bound $\Psi\big(\sqrt{4\kappa^\alpha x^{2-\alpha}/(S\alpha^\alpha(2-\alpha)^{2-\alpha})}\big)$ to approximate the tail probability. However, since the Gaussian tail function $\Psi(w)$ was again approximated by $\exp[-w^2/2]$, the resulting approximation actually corresponds to (12).

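Theorem 6 (Theorem 3 of [6]) Define $h(t) := v_t/(St^\alpha) - 1$. Under hypotheses (c1)–(c4), if there exists a positive constant $C$ such that

$$t\,|h'(t)| \le C\,|h(t)| \quad\text{for all large } t, \tag{16}$$

then

$$\frac{m_x}{2} - \frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}} \underset{x\to\infty}{\sim} -\frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}\, h(t_x).$$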

Corollary 7 (Corollary to Theorem 6) Under hypotheses (c1)–(c4), suppose there exists a positive constant $C$ that satisfies (16), and $h(t) = v_t/(St^\alpha) - 1 \in O(t^{\alpha-2}\log t)$. Then (15) holds.

The additional condition that Theorem 6 requires beyond (c1)–(c4) is not very restrictive: as the following section illustrates, we can usually find a constant $C > 0$ that satisfies (16). Therefore, Theorem 6 not only yields a sufficient condition (Corollary 7) for (15) to hold, but also tells us, in considerable generality, when (15) will not hold.

Let $u_t$ include all auxiliary terms of $v_t$ (i.e., terms other than the leading term $St^\alpha$), so that we can write $v_t = St^\alpha + u_t$.

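Further assume that $u_t \underset{t\to\infty}{\sim} c\,t^\beta$ for some $\beta \in (0, \alpha)$ and $c \ne 0$. From the definitions of $h(t)$ and $u_t$, we can then see that $h(t) = u_t/(St^\alpha) \underset{t\to\infty}{\sim} c\,t^{\beta-\alpha}/S$. Hence, provided that (16) holds for some $C > 0$, Theorem 6 implies that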

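$$\frac{m_x}{2} - \frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}} \underset{x\to\infty}{\sim} -\frac{2c\,\kappa^{2\alpha-\beta}x^{2+\beta-2\alpha}}{S^2\alpha^{2\alpha-\beta}(2-\alpha)^{2+\beta-2\alpha}}. \tag{17}$$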
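The right-hand side of (17) follows from Theorem 6 by direct substitution. The worked step below additionally assumes $t_x \sim \alpha x/(\kappa(2-\alpha))$, i.e., that $t_x$ is asymptotically the maximizer for the leading term $St^\alpha$ alone:

$$\frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}\, h(t_x) \sim \frac{2\kappa^\alpha x^{2-\alpha}}{S\alpha^\alpha(2-\alpha)^{2-\alpha}}\cdot\frac{c}{S}\Big(\frac{\alpha x}{\kappa(2-\alpha)}\Big)^{\beta-\alpha} = \frac{2c\,\kappa^{2\alpha-\beta}x^{2+\beta-2\alpha}}{S^2\alpha^{2\alpha-\beta}(2-\alpha)^{2+\beta-2\alpha}}.$$

So the exponent of $x$ in the correction term is $2 + \beta - 2\alpha$, which is positive exactly when $\beta > 2\alpha - 2$.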

In other words, Theorem 6 tells us that, asymptotically, the more slowly the auxiliary term $u_t$ grows, the more closely $m_x/2$ behaves like $2\kappa^\alpha x^{2-\alpha}/\big(S\alpha^\alpha(2-\alpha)^{2-\alpha}\big)$. In this sense, the Fractal Brownian motion process with negative linear drift that we have just studied is an extreme case: $u_t = 0$ for all $t$, and (14) trivially holds. Hence, we next consider cases when $u_t$ is not identically equal to 0, and show that (14) does not hold in general.

B. Other Gaussian Processes

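From (17), if $\beta \in (2\alpha - 2, \alpha)$, then $|r(x) - R(x)|$ increases as a power of $x$. Since $r(x) \in O(\log x)$, it follows that

$$R(x) \underset{x\to\infty}{\sim} \frac{2c\,\kappa^{2\alpha-\beta}x^{2+\beta-2\alpha}}{S^2\alpha^{2\alpha-\beta}(2-\alpha)^{2+\beta-2\alpha}}. \tag{18}$$

Therefore, if $\beta \in (2\alpha - 2, \alpha)$, then (14) will not hold. Through a simple example, we now show that $\beta$ can, in fact, be greater than $2\alpha - 2$.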

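Assume that $1 < \alpha < 3/2$, and consider a stationary Gaussian process $\lambda_t$ with mean and autocovariance given by

$$E\{\lambda_t\} = -\kappa \quad\text{and} \tag{19}$$

$$\mathrm{Cov}\{\lambda_t, \lambda_{t+\tau}\} = \frac{S\alpha(\alpha-1)}{2(|\tau|+1)^{2-\alpha}}. \tag{20}$$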


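[Figure 3: Tail Probability $P(\{X > x\})$ versus Supremum Value $x$; curves: Simulation, $e^{-2\kappa^\alpha x^{2-\alpha}/(S\alpha^\alpha(2-\alpha)^{2-\alpha})}$, and $e^{-m_x/2}$.]

Fig. 3. Two approximations based on (4) and (9) for $P(\{X > x\})$, when $X_t$ is defined by (21), and $\lambda_t$ is a stationary Gaussian process with $E\{\lambda_t\} = -1$ and $\mathrm{Cov}\{\lambda_t, \lambda_{t+\tau}\} = 5(|\tau|+1)^{-3/4}/8$.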

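If we define

$$X_t := \int_0^t \lambda_\tau\, d\tau \quad \text{for } t \ge 0, \tag{21}$$

then one can easily verify that $X_t$ is a Gaussian process with stationary increments that satisfies (c1)–(c4), and that

$$E\{X_t\} = -\kappa t \quad\text{and}\quad \mathrm{Var}\{X_t\} = S\big((t+1)^\alpha - 1\big) - S\alpha t. \tag{22}$$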
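As a worked check of (22), substitute (20) into $v_t = 2\int_0^t (t-\tau)\,\mathrm{Cov}\{\lambda_0, \lambda_\tau\}\,d\tau$ (the single-input case of (23) below) and change variables to $u = \tau + 1$:

$$v_t = S\alpha(\alpha-1)\int_1^{t+1}(t+1-u)\,u^{\alpha-2}\,du = S\alpha(\alpha-1)\Big[\frac{(t+1)\big((t+1)^{\alpha-1}-1\big)}{\alpha-1} - \frac{(t+1)^{\alpha}-1}{\alpha}\Big] = S\big((t+1)^{\alpha}-1\big) - S\alpha t.$$

Since $(t+1)^\alpha - t^\alpha \sim \alpha t^{\alpha-1} = o(t)$ for $\alpha < 2$, this also shows that $u_t = v_t - St^\alpha \sim -S\alpha t$.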

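From (22), it can easily be verified that $u_t = v_t - St^\alpha \underset{t\to\infty}{\sim} -S\alpha t$ and that $t\,h'(t) \underset{t\to\infty}{\sim} (1-\alpha)\,h(t)$ (a sufficient condition for (16) to hold for some $C$). Therefore, we actually have $\beta = 1 > 2\alpha - 2$ in this case, and it follows from (18) that

$$R(x) \underset{x\to\infty}{\sim} -\frac{2\kappa^{2\alpha-1}x^{3-2\alpha}}{S\alpha^{2\alpha-2}(2-\alpha)^{3-2\alpha}}.$$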

Further, from (10), note that the approximation (12) will involve an error (for large $x$) of roughly a multiplicative factor $\exp\big[2\kappa^{2\alpha-1}x^{3-2\alpha}/\big(S\alpha^{2\alpha-2}(2-\alpha)^{3-2\alpha}\big)\big]$. It may therefore significantly overestimate $P(\{X > x\})$.
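The size of this effect can be checked numerically. The sketch below computes $m_x$ for the variance (22) and reports $\log_{10}$ of the ratio of approximation (12) to approximation (11), i.e., $(r(x) - R(x))/\ln 10$. It uses a golden-section search, which assumes the objective is unimodal (cf. Proposition 5); the function names are hypothetical. With $S = 4$, $\kappa = 1$, $\alpha = 5/4$ (the parameters of Fig. 3), this quantity is positive and grows with $x$, consistent with (12) overestimating the tail. For $v_t = St^\alpha$ it vanishes, as (13) predicts.

```python
import math
from typing import Callable

# Hypothetical helpers (illustrative sketch only).

def m_x_golden(x: float, kappa: float, variance: Callable[[float], float],
               t_hi_factor: float = 50.0, iters: int = 100) -> float:
    """m_x = min_t (x + kappa t)^2 / v_t via golden-section search on
    (0, t_hi_factor * x]; assumes the objective is unimodal there."""
    f = lambda t: (x + kappa * t) ** 2 / variance(t)
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = 0.0, t_hi_factor * x
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc < fd:                      # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = f(c)
        else:                            # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = f(d)
    return f(0.5 * (a + b))

def log10_ratio_12_over_11(x: float, S: float, kappa: float, alpha: float,
                           variance: Callable[[float], float]) -> float:
    """log10 of approximation (12) divided by approximation (11)."""
    lead = 2.0 * kappa ** alpha * x ** (2.0 - alpha) / (
        S * alpha ** alpha * (2.0 - alpha) ** (2.0 - alpha))
    return (m_x_golden(x, kappa, variance) / 2.0 - lead) / math.log(10.0)
```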

To illustrate the error involved in (12), Fig. 3 shows numerical results of approximations (11) and (12) for $P(\{X > x\})$. Here the stationary Gaussian process $\lambda_t$ in (21) is given by (19) and (20) with $S = 4$, $\kappa = 1$, and $\alpha = 5/4$. Again, the exact tail probability $P(\{X > x\})$ is estimated through discrete-time simulations and compared to the approximations. As Theorem 4 and our studies in [7] suggest, the approximation (11) accurately matches the tail probability estimated via simulations. On the other hand, the approximation (12) diverges from the simulation results quite fast. The difference between them grows by several orders of magnitude as $x$ increases from 10 to 400. This numerical result shows that the approximation (12) based on (9) will eventually (as $x$ increases) result in a serious error.

As the preceding simple example illustrates, the auxiliary term $u_t$ can be quite arbitrary. Now consider another example: a fluid queue model of a high-speed multiplexer. It has an infinite buffer and a constant service rate $\mu$, and serves $L$ independent stationary Gaussian inputs with instantaneous input rates $\lambda^{(n)}_t$ ($n = 1, 2, \ldots, L$). Here, $\mu$ represents the bandwidth of the output link, and $\lambda^{(n)}_t$ ($n = 1, 2, \ldots, L$) models the packet arrivals from the $L$ different streams being multiplexed. Hence, the net amount of input during the interval $(s, t]$, $N_t - N_s$, can be expressed as

$$N_t - N_s = \int_s^t \Big(\sum_{n=1}^{L} \lambda^{(n)}_\tau - \mu\Big)\, d\tau.$$

Also, from (1), the tail $P(\{Q > x\})$ of the steady-state queue length distribution is the same as $P(\{X > x\})$, where $\{X_t : t \ge 0\}$ is defined by $X_t := \int_{-t}^{0} \big(\sum_{n=1}^{L} \lambda^{(n)}_\tau - \mu\big)\, d\tau$. Note that the variance of $X_t$ can be expressed in terms of the autocovariances $C_n(\tau) := \mathrm{Cov}\{\lambda^{(n)}_t, \lambda^{(n)}_{t+\tau}\}$ of the $L$ input processes as

$$v_t = \sum_{n=1}^{L} 2\int_0^t (t-\tau)\, C_n(\tau)\, d\tau. \tag{23}$$
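Equation (23) is straightforward to evaluate numerically when only the autocovariances $C_n$ are known, for instance when they are measured from traces as in Section V. The sketch below uses the composite trapezoidal rule and assumes each $C_n$ is smooth on $[0, t]$; the function names and the default step count are hypothetical choices. The autocovariance (20) serves as a convenient test input, since its exact variance is given by (22).

```python
from typing import Callable, Sequence

# Hypothetical helpers (illustrative sketch only).

def variance_from_autocov(t: float,
                          autocovs: Sequence[Callable[[float], float]],
                          n_steps: int = 20_000) -> float:
    """Approximate v_t = sum_n 2 * int_0^t (t - tau) C_n(tau) dtau  (eq. 23)
    with the composite trapezoidal rule on n_steps subintervals."""
    h = t / n_steps
    total = 0.0
    for C in autocovs:
        # Endpoint terms: the integrand is t*C(0) at tau = 0 and 0 at tau = t.
        s = 0.5 * t * C(0.0)
        for k in range(1, n_steps):
            tau = k * h
            s += (t - tau) * C(tau)
        total += 2.0 * h * s
    return total

def autocov_eq20(S: float, alpha: float) -> Callable[[float], float]:
    """Autocovariance (20): S*alpha*(alpha-1) / (2*(|tau|+1)^(2-alpha))."""
    return lambda tau: S * alpha * (alpha - 1.0) / (2.0 * (abs(tau) + 1.0) ** (2.0 - alpha))
```

Because (23) is a sum over inputs, the same routine directly gives $v_t$ for a heterogeneous mix of sources, e.g. one input with $\alpha_1 = 1.25$ and another with $\alpha_2 = 1.5$.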

In other words, $v_t$ is composed of $L$ terms, each of which is determined by the autocovariance of the corresponding input process. Assume that $2\int_0^t (t-\tau) C_n(\tau)\, d\tau \underset{t\to\infty}{\sim} S_n t^{\alpha_n}$ for some $S_n > 0$ and $\alpha_n \in [1, 2)$. One can then easily see that $v_t \underset{t\to\infty}{\sim} \bar S_1 t^{\bar\alpha_1}$, where $\bar\alpha_1 = \max\{\alpha_1, \alpha_2, \ldots, \alpha_L\}$ and $\bar S_1 = \sum_{\{n : \alpha_n = \bar\alpha_1\}} S_n$. Therefore, if the values of $\alpha_n$ are not identical, the leading term $\bar S_1 t^{\bar\alpha_1}$ of $v_t$ captures only the terms in (23) that increase on the order of $t^{\bar\alpha_1}$. Hence $u_t$ is likely to increase on the order of $t^{\bar\alpha_2}$, where $\bar\alpha_2$ is the second largest value among $\{\alpha_1, \alpha_2, \ldots, \alpha_L\}$. If $\bar\alpha_2$ is greater than $2\bar\alpha_1 - 2$ in this case, then from (18), $R(x)$ will grow on the order of $x^{2+\bar\alpha_2-2\bar\alpha_1}$, and the approximation (12) will be poor for large $x$.

V. NON-GAUSSIAN INPUT PROCESSES

As mentioned in Section I, Gaussian fluid queueing models for high-speed networks are motivated by the large number of network applications served by a single network link. The aggregate traffic at a high-speed multiplexer is the superposition of a large number of packet (or information) streams, each from a different application. The Central Limit Theorem therefore suggests that the aggregate traffic should be well characterized by a Gaussian process.

Modeling the traffic in a high-speed network as a Gaussian process is an intuitive and natural approach, but the error this modeling may introduce is usually difficult to analyze. For this reason, the validity of Gaussian traffic modeling has been investigated numerically in [5], [7]. That study found that the behavior of a fluid queue fed by several hundred independent input processes can typically be captured by the Gaussian model: the original aggregate traffic and its Gaussian model result in very similar queueing behavior. As a result, the approximation (11), which was originally derived for Gaussian fluid queues, is also accurate when applied to the analysis of high-speed networks. Next, through a numerical example, we demonstrate the accuracy of the approximation (11) for long-range dependent non-Gaussian inputs.


[Figure 4: Tail Probability $P(\{Q > x\})$ versus Queue Length $x$; curves: Simulation and $e^{-m_x/2}$, for 66 sources and 69 sources.]

Fig. 4. The approximation (11) for 66 and 69 MPEG video sources served by a 45 Mbps link compared to the simulation results.

In [2], video applications have been found to be typical sources of long-range dependent network traffic. In the following example, we compare the approximation (11) with simulation results for the tail probability at a multiplexer that serves video applications over a 45 Mbps link. For the experiment, we use the real trace of an MPEG-encoded action movie (007 series) used in [13]. The trace is the sequence of frame sizes measured in bits, and its entire length corresponds to about 30 minutes of playing time. Each frame is assumed to be transmitted at a uniform rate over one frame period (about 1/24 sec). Two sets of curves are obtained in Fig. 4: one for 66 video sources and the other for 69 sources. The approximations are computed from the mean rate and the autocovariance function measured directly from the trace.

To obtain the simulation curves, we perform exactly the same simulation as described in [13]. To simulate multiple video traffic sources from one trace, we assign each source a random starting point in the trace and advance its pointer as the simulation progresses. When the pointer hits the end of the trace, it moves back to the start. The simulation continues until the entire trace has been read out twice. The first cycle brings the system to steady state, and the tail probability is estimated during the second cycle. Since we cannot apply the importance sampling technique in this case, the 99% confidence intervals are larger than in the previous examples.

Nevertheless, the approximation (11) matches the tail probability as accurately as when the input is Gaussian. This indicates that the aggregate input can, in fact, be well characterized by a Gaussian process. Also, note that the approximation accurately captures the shape of the simulation curve, which reflects the multiple time-scale burstiness of the traffic [13].
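The trace-driven procedure described above can be sketched as follows. This is a simplified, hypothetical illustration, not the simulator of [13]. Time is slotted at the frame period, and the buffer content is updated once per slot with the Lindley recursion $Q_{k+1} = \max(0, Q_k + A_k - c)$. Here $A_k$ is the total number of bits arriving in slot $k$, and $c$ is the link capacity times the frame period. $P(\{Q > x\})$ is estimated as the fraction of slots, during the second pass through the trace, at which $Q$ exceeds $x$.

```python
import random
from typing import List, Sequence

# Hypothetical helper (simplified illustrative sketch, not the simulator of [13]).

def simulate_trace_multiplexer(trace: Sequence[float], n_sources: int,
                               capacity_per_slot: float,
                               thresholds: Sequence[float],
                               seed: int = 0) -> List[float]:
    """Estimate P(Q > x) for each x in `thresholds` when n_sources copies of one
    frame-size trace, each started at a random offset and wrapped around at the
    end of the trace, share a link serving capacity_per_slot bits per slot."""
    rng = random.Random(seed)
    n = len(trace)
    offsets = [rng.randrange(n) for _ in range(n_sources)]
    q = 0.0
    exceed = [0] * len(thresholds)
    for k in range(2 * n):                                 # read the trace out twice
        arrivals = sum(trace[(o + k) % n] for o in offsets)
        q = max(0.0, q + arrivals - capacity_per_slot)     # Lindley recursion
        if k >= n:                                         # second cycle: measure
            for i, x in enumerate(thresholds):
                if q > x:
                    exceed[i] += 1
    return [e / n for e in exceed]
```

For the setting of Fig. 4, `capacity_per_slot` would be $45 \times 10^6 / 24$ bits per frame period, and `trace` would be the MPEG frame-size sequence (not reproduced here).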

VI. CONCLUSION

The fast growth of network bandwidth has led to the proliferation of new network applications. Together with classical network applications, they generate many different types of network traffic. Further, empirical studies on these various types of network traffic suggest that many of them exhibit different kinds of long-range dependence and/or self-similar behavior. As the previous example illustrates, under this heterogeneity the Large Deviation result (9) may not be precise enough to capture the queueing behavior of the network traffic. An approximation like (12) should therefore be used with caution.

Footnote: In this paper, the MPEG trace used in Section V has been found to exhibit long-range dependence. For more details about the trace, refer to [13] or [18].

In contrast, (4) considerably improves the resolution of (9) and naturally leads to the approximation (11), which will not rapidly diverge from $P(\{X > x\})$. We therefore hope that the results in this paper will help in better understanding the supremum distribution of Gaussian processes with stationary increments, and in analyzing the queue length distribution for heterogeneous types of network traffic.

REFERENCES

[1] R. G. Addie and M. Zukerman, "An Approximation for Performance Evaluation of Stationary Single Server Queues," IEEE Transactions on Communications, vol. 42, pp. 3150-3160, Dec. 1994.

[2] J. Beran, R. Sherman, M. S. Taqqu, and W. Willinger, "Long-range dependence in variable-bit-rate video traffic," IEEE Transactions on Communications, vol. 43, pp. 1566-1579, Feb.-Apr. 1995.

[3] D. D. Botvich and N. G. Duffield, "Large deviations, the shape of the loss curve, and economies of scale in large multiplexers," Queueing Systems, vol. 20, pp. 293-320, 1995.

[4] P. Bratley, B. L. Fox, and L. E. Schrage, A Guide to Simulation. New York: Springer-Verlag, second ed., 1987.

[5] J. Choe and N. B. Shroff, "A New Method to Determine the Queue Length Distribution at an ATM Multiplexer," in Proceedings of IEEE INFOCOM, (Kobe, Japan), pp. 550-557, 1997.

[6] J. Choe and N. B. Shroff, "Supremum Distribution of Gaussian Processes and Queueing Analysis including Long-Range Dependence and Self-Similarity," tech. rep., Purdue University, West Lafayette, IN, 1997, submitted to Stochastic Models.

[7] J. Choe and N. B. Shroff, "A Central Limit Theorem Based Approach for Analyzing Queue Behavior in High-Speed Networks," IEEE/ACM Transactions on Networking, vol. 6, pp. 659-671, Oct. 1998.

[8] J. Choe and N. B. Shroff, "On the supremum distribution of integrated stationary Gaussian processes with negative linear drift," Advances in Applied Probability, March 1999, to appear.

[9] A. Dembo and O. Zeitouni, Large Deviations Techniques and Applications. Boston: Jones and Bartlett, 1993.

[10] N. G. Duffield and N. O'Connell, "Large deviations and overflow probabilities for the general single server queue, with application," Proc. Cambridge Philos. Soc., vol. 118, pp. 363-374, 1995.

[11] P. W. Glynn and W. Whitt, "Logarithmic asymptotics for steady-state tail probabilities in a single-server queue," Journal of Applied Probability, pp. 131-155, 1994.

[12] C. Huang, M. Devetsikiotis, I. Lambadaris, and A. R. Kaye, "Fast Simulation for Self-Similar Traffic in ATM Networks," in Proceedings of IEEE INFOCOM, pp. 438-444, 1995.

[13] E. W. Knightly, "Second Moment Resource Allocation in Multi-Service Networks," in Proceedings of ACM SIGMETRICS, (Seattle, WA), 1997.

[14] W. E. Leland, M. Taqqu, W. Willinger, and D. V. Wilson, "On the Self-Similar Nature of Ethernet Traffic (Extended Version)," IEEE/ACM Transactions on Networking, vol. 2, pp. 1-15, Feb. 1994.

[15] R. M. Loynes, "The Stability of a Queue with Non-independent Inter-arrival and Service Times," Proc. Cambridge Philos. Soc., vol. 58, pp. 497-520, 1962.

[16] M. Montgomery and G. De Veciana, "On the Relevance of Time Scales in Performance Oriented Traffic Characterization," in Proceedings of IEEE INFOCOM, (San Francisco, CA), pp. 513-520, 1996.

[17] I. Norros, "On the Use of Fractional Brownian Motion in the Theory of Connectionless Networks," IEEE Journal on Selected Areas in Communications, vol. 13, pp. 953-962, Aug. 1995.

[18] O. Rose, "Statistical properties of MPEG video traffic and their impact on traffic modeling in ATM systems," in Proceedings of the 20th Conference on Local Computer Networks, (Minneapolis, MN), pp. 397-406, Oct. 1995.

[19] N. B. Shroff and M. Schwartz, "Improved Loss Calculations at an ATM Multiplexer," IEEE/ACM Transactions on Networking, vol. 6, pp. 411-422, Aug. 1998.


- MIT - Stochastic ProcDocument88 pagesMIT - Stochastic ProccosmicduckNo ratings yet

- MAT 3103: Computational Statistics and Probability Chapter 7: Stochastic ProcessDocument20 pagesMAT 3103: Computational Statistics and Probability Chapter 7: Stochastic ProcessSumayea SaymaNo ratings yet

- Ku Satsu 160225Document11 pagesKu Satsu 160225LameuneNo ratings yet

- Whitepaper Kihm Rizzi Ferguson Halfpenny RASD2013Document16 pagesWhitepaper Kihm Rizzi Ferguson Halfpenny RASD2013Felipe Dornellas SilvaNo ratings yet

- 2 2 Queuing TheoryDocument18 pages2 2 Queuing TheoryGilbert RozarioNo ratings yet

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- Casio Service ManualDocument8 pagesCasio Service Manualysf19910% (1)

- Introduction To Mainframe HardwareDocument64 pagesIntroduction To Mainframe Hardwareysf1991No ratings yet

- Natural Language ProcessingDocument31 pagesNatural Language Processingysf1991No ratings yet

- Strath Cis Publication 320Document38 pagesStrath Cis Publication 320ysf1991No ratings yet

- Context-Aware Language Modeling For Conversational Speech TranslationDocument8 pagesContext-Aware Language Modeling For Conversational Speech Translationysf1991No ratings yet

- DocuDocument44 pagesDocuysf19910% (1)

- 3 Layer Dynamic CaptchaDocument7 pages3 Layer Dynamic Captchaysf1991No ratings yet

- Sun Fire X4100 M2 and X4200 M2 Server Architectures: A Technical White Paper October 2006 Sunwin Token # 481905Document45 pagesSun Fire X4100 M2 and X4200 M2 Server Architectures: A Technical White Paper October 2006 Sunwin Token # 481905ysf1991No ratings yet

- Development of Interpersonal Skills: Short-Term CourseDocument4 pagesDevelopment of Interpersonal Skills: Short-Term Courseysf1991No ratings yet

- 2021 1 Eau p2 Estereotomía Programa de CursoDocument6 pages2021 1 Eau p2 Estereotomía Programa de CursoDixom Javier Monastoque RomeroNo ratings yet

- Thermodrain FRP Manhole Cover Price ListDocument1 pageThermodrain FRP Manhole Cover Price Listmitesh20281No ratings yet

- Q & A ReliablityDocument6 pagesQ & A ReliablitypkcdubNo ratings yet