LDPC Codes

Alexios Balatsoukas-Stimming and Athanasios P. Liavas

Technical University of Crete

Dept. of Electronic and Computer Engineering

Telecommunications Laboratory

December 16, 2011

Intracom Telecom, Peania

A. Balatsoukas-Stimming and A. P. Liavas () LDPC Codes December 16, 2011 1 / 61

Outline

1. Introduction to LDPC Codes

2. Iterative Decoding of LDPC Codes
   Belief Propagation
   Density Evolution
   Gaussian Approximation
   EXIT Charts

3. Efficient Encoding of LDPC and QC-LDPC Codes

4. Construction of QC-LDPC Codes
   Deterministic
   Random

5. RA and Structured RA Codes

LDPC Codes

When?

Discovered in 1962 by Gallager.

Rediscovered by MacKay and others in late 1990s.

Why?

Capacity approaching on many channels.

Linear decoding complexity using Belief Propagation.

Linear complexity encoding, under certain conditions.


LDPC Codes

We can define a linear block code $C$ as the nullspace of its $m \times n$ parity check matrix $H$:

$$C = \{c \in \{0, 1\}^n : Hc = 0\}.$$

We can also define a linear block code $C$ through its $k \times n$ generator matrix $G$ as follows:

$$C = \{c = G^T u : u \in \{0, 1\}^k\}.$$

LDPC codes are linear block codes with a sparse parity check matrix.
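As a small illustration of the nullspace definition, the sketch below enumerates all binary vectors of length $n$ and keeps those with $Hc = 0$ over GF(2). The matrix is the (7, 4) Hamming parity-check matrix, chosen here only as an example; the helper names `syndrome` and `codewords` are hypothetical, and the brute-force search is only feasible for very short codes.

```python
from itertools import product

# Parity-check matrix of the (7, 4) Hamming code (illustrative choice).
H = [
    [1, 0, 0, 1, 0, 1, 1],
    [0, 1, 0, 1, 1, 1, 0],
    [0, 0, 1, 0, 1, 1, 1],
]

def syndrome(H, c):
    """Return Hc over GF(2) as a list of bits."""
    return [sum(h * x for h, x in zip(row, c)) % 2 for row in H]

def codewords(H):
    """Brute-force nullspace of H: all c in {0,1}^n with Hc = 0."""
    n = len(H[0])
    return [c for c in product((0, 1), repeat=n) if not any(syndrome(H, c))]

C = codewords(H)
print(len(C))                                # number of codewords, 2^k
print(min(sum(c) for c in C if any(c)))      # minimum nonzero weight
```

Since the three rows of this $H$ are linearly independent, the code has $k = 7 - 3 = 4$ information bits.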


Factor Graph Representation

An LDPC code can be associated with a bipartite graph, also called a

factor graph or Tanner graph.

Variable nodes correspond to codeword bits and check nodes

correspond to the parity-check equations enforced by the code.

Variable node $j$ is connected with check node $i$ if and only if $H_{ij} = 1$.

Factor Graph Representation and Degree Distributions

Example ((7, 4) Hamming Code)

$$H = \begin{bmatrix} 1 & 0 & 0 & 1 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 1 & 1 & 0 \\ 0 & 0 & 1 & 0 & 1 & 1 & 1 \end{bmatrix}$$

[Figure: Tanner graph of the code, showing variable nodes and check nodes.]

Degree Distributions

The variable node degree distribution from an edge perspective is denoted as:

$$\lambda(x) = \sum_i \lambda_i x^{i-1}$$

The check node degree distribution from an edge perspective is denoted as:

$$\rho(x) = \sum_i \rho_i x^{i-1}$$

The $\lambda_i$'s and $\rho_i$'s determine the fraction of edges that are connected to variable and check nodes of degree $i$, respectively.

They also determine the code's design rate:

$$R = 1 - \frac{\sum_i \rho_i / i}{\sum_i \lambda_i / i},$$

as well as its average performance, as we will see.

Factor Graph Representation and Degree Distributions

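Example ((7, 4) Hamming Code)

$$H = \begin{bmatrix} 1 & 0 & 0 & 1 & 0 & 1 & 1 \\ 0 & 1 & 0 & 1 & 1 & 1 & 0 \\ 0 & 0 & 1 & 0 & 1 & 1 & 1 \end{bmatrix}$$

$$\lambda(x) = \frac{3}{12} + \frac{6}{12} x + \frac{3}{12} x^2, \qquad \rho(x) = x^3$$

[Figure: Tanner graph of the code, showing variable nodes and check nodes.]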
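The degree distributions and design rate of the Hamming example can be checked mechanically. The following is a minimal sketch that counts edges per node degree directly from $H$; the function names `edge_degree_distributions` and `design_rate` are hypothetical, and exact fractions are used so the output can be compared with the coefficients of $\lambda(x)$ and $\rho(x)$.

```python
from collections import Counter
from fractions import Fraction

H = [
    [1, 0, 0, 1, 0, 1, 1],
    [0, 1, 0, 1, 1, 1, 0],
    [0, 0, 1, 0, 1, 1, 1],
]

def edge_degree_distributions(H):
    """Return (lam, rho): dicts mapping degree i to the fraction of edges
    attached to variable (resp. check) nodes of degree i."""
    col_deg = [sum(row[j] for row in H) for j in range(len(H[0]))]
    row_deg = [sum(row) for row in H]
    edges = sum(row_deg)
    lam = {d: Fraction(d * k, edges) for d, k in sorted(Counter(col_deg).items())}
    rho = {d: Fraction(d * k, edges) for d, k in sorted(Counter(row_deg).items())}
    return lam, rho

def design_rate(lam, rho):
    """R = 1 - (sum_i rho_i / i) / (sum_i lambda_i / i)."""
    num = sum(r / i for i, r in rho.items())
    den = sum(l / i for i, l in lam.items())
    return 1 - num / den

lam, rho = edge_degree_distributions(H)
print(lam)                    # coefficient lam[i] multiplies x^(i-1)
print(rho)
print(design_rate(lam, rho))  # 4/7 for this matrix
```

A degree-$i$ entry of `lam` is the coefficient of $x^{i-1}$, so degrees 1, 2, 3 correspond to the constant, linear and quadratic terms of $\lambda(x)$.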


Sum-Product Marginalization

Consider a function which can be factorized as follows:

$$f(x_1, \ldots, x_6) = f_1(x_1, x_2, x_3)\, f_2(x_1, x_4, x_6)\, f_3(x_4)\, f_4(x_4, x_5)$$

Brute force calculation of the marginal $f(x_1) = \sum_{\sim x_1} f(x_1, \ldots, x_6)$, where $\sum_{\sim x_1}$ denotes summation over all variables except $x_1$, requires $O(|X|^6)$ operations.

Using the factorization and the distributive law, we can write

$$f(x_1) = \left[ \sum_{\sim x_1} f_1(x_1, x_2, x_3) \right] \left[ \sum_{\sim x_1} \underbrace{f_2(x_1, x_4, x_6)}_{\text{Kernel}} \, \underbrace{f_3(x_4)\, f_4(x_4, x_5)}_{\text{Can be further expanded.}} \right]$$

reducing complexity to $O(|X|^4)$.
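The saving from the distributive law can be checked numerically. The sketch below fills the four factors with arbitrary random tables over a ternary alphabet (both the alphabet and the tables are assumptions made only for illustration) and compares the brute-force marginal with a factored evaluation. The factored version here is expanded all the way, so it goes one step further than the bracketed expression above.

```python
import random
from itertools import product

X = (0, 1, 2)            # illustrative alphabet
random.seed(0)

def random_table(arity):
    """Arbitrary nonnegative factor, stored as a dict keyed by argument tuples."""
    return {args: random.random() for args in product(X, repeat=arity)}

f1 = random_table(3)     # f1(x1, x2, x3)
f2 = random_table(3)     # f2(x1, x4, x6)
f3 = random_table(1)     # f3(x4)
f4 = random_table(2)     # f4(x4, x5)

def marginal_brute(x1):
    """Sum the full product over x2..x6."""
    total = 0.0
    for x2, x3, x4, x5, x6 in product(X, repeat=5):
        total += f1[x1, x2, x3] * f2[x1, x4, x6] * f3[(x4,)] * f4[x4, x5]
    return total

def marginal_factored(x1):
    """Push each sum inside as far as the factorization allows."""
    left = sum(f1[x1, x2, x3] for x2, x3 in product(X, repeat=2))
    right = 0.0
    for x4 in X:
        from_f3_f4 = f3[(x4,)] * sum(f4[x4, x5] for x5 in X)
        right += from_f3_f4 * sum(f2[x1, x4, x6] for x6 in X)
    return left * right

for x1 in X:
    print(x1, marginal_brute(x1), marginal_factored(x1))
```

The inner sums of `marginal_factored` correspond to the messages that the sum-product algorithm passes along the edges of the factor graph.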


Sum-Product Marginalization

By further expanding $f(x_1)$ we get the following factor graph:

[Figure: factor graph of the expanded $f(x_1)$.]

Sum-Product Marginalization Rules

(a) Variable node rules. (b) Function node rules.

[Figures: Sum-Product Marginalization, Steps 1 to 4 and Final Step.]

Sum-Product Decoding

Transmission of a codeword $x = [x_1 \cdots x_n] \in C$ over an AWGN channel:

$$y_i = x_i + w_i, \quad w_i \sim N(0, \sigma_w^2), \quad i = 1, 2, \ldots, n.$$

The MAP decoding rule for $x_i$ reads

$$\begin{aligned}
\hat{x}_i &= \arg\max_{x_i \in \{\pm 1\}} p(x_i | y) \\
&= \arg\max_{x_i \in \{\pm 1\}} \sum_{\sim x_i} p(x | y) \\
&= (\text{Bayes rule, conditional independence}) \\
&= \arg\max_{x_i \in \{\pm 1\}} \underbrace{\sum_{\sim x_i} \left( \prod_{j=1}^{n} p(y_j | x_j) \right) 1_{[x \in C]}}_{\text{Sum-Product form!}}
\end{aligned}$$

Belief Propagation

Log-domain decoding simplifies the sum-product rules and guarantees numerical stability of the calculations.

LLRs are used as messages, i.e. each variable node aims to calculate

$$L_i = \log \frac{p(x_i = +1 | y)}{p(x_i = -1 | y)}$$

If $L_i > 0$, then $\hat{x}_i = +1$; if $L_i < 0$, then $\hat{x}_i = -1$.

Belief Propagation

(a) Variable node rules. (b) Check node rules.


Belief Propagation Decoding - Initialization

$p(x_i)$ and $p(y_i)$ are known. So, given $p(y_i | x_i)$, we can calculate $p(x_i | y_i)$, i.e. the a posteriori probability of $x_i$ given the channel observation $y_i$.

Belief Propagation

If the graph is cycle-free, after running BP, we get the marginal $p(x_1 | y)$.

The same holds for all $p(x_i | y)$, $i = 1, 2, \ldots$

Belief Propagation Decoding

If the Tanner graph is cycle-free, we can calculate all $p(x_i | y)$, $i = 1, 2, \ldots$, simultaneously.

Bad news about cycle-free codes: they necessarily contain many

low-weight codewords and, hence, have a high probability of error.

However, BP still performs very well in practice, even when cycles

are present.

On graphs with cycles, decoding halts when the current hard decision $\hat{x}$ satisfies $H\hat{x} = 0$ or a maximum number of iterations is reached.
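A minimal sketch of log-domain BP with this stopping rule is given below. It assumes the standard formulation (sum of incoming LLRs at variable nodes, the tanh rule at check nodes) and a flooding schedule, neither of which is spelled out in the text; the function name `bp_decode` is hypothetical, and the (7, 4) Hamming matrix is used only as a toy example even though its graph contains cycles.

```python
import math

H = [
    [1, 0, 0, 1, 0, 1, 1],
    [0, 1, 0, 1, 1, 1, 0],
    [0, 0, 1, 0, 1, 1, 1],
]

def bp_decode(H, llr_ch, max_iter=50):
    """Sum-product decoding in the LLR domain; returns hard-decision bits.
    Convention assumed here: bit 0 <-> symbol +1 <-> positive LLR."""
    m, n = len(H), len(H[0])
    edges = [(i, j) for i in range(m) for j in range(n) if H[i][j]]
    c2v = {e: 0.0 for e in edges}            # check-to-variable messages
    x_hat = [0 if L > 0 else 1 for L in llr_ch]
    for _ in range(max_iter):
        # Variable-to-check: channel LLR plus all *other* incoming check messages.
        total = list(llr_ch)
        for (i, j), msg in c2v.items():
            total[j] += msg
        v2c = {(i, j): total[j] - c2v[i, j] for (i, j) in edges}
        # Check-to-variable: tanh rule over the other edges of the check.
        for i in range(m):
            cols = [j for j in range(n) if H[i][j]]
            for j in cols:
                prod = 1.0
                for k in cols:
                    if k != j:
                        prod *= math.tanh(v2c[i, k] / 2.0)
                prod = max(min(prod, 1 - 1e-12), -1 + 1e-12)  # keep atanh finite
                c2v[i, j] = 2.0 * math.atanh(prod)
        # Posterior LLRs, hard decision, and the H x_hat = 0 stopping test.
        post = list(llr_ch)
        for (i, j), msg in c2v.items():
            post[j] += msg
        x_hat = [0 if L > 0 else 1 for L in post]
        if all(sum(H[i][j] * x_hat[j] for j in range(n)) % 2 == 0 for i in range(m)):
            break
    return x_hat

# All-zero codeword sent; the sixth channel LLR is weakly wrong.
print(bp_decode(H, [2.0, 2.0, 2.0, 2.0, 2.0, -0.5, 2.0]))
```

In this example the three checks attached to the sixth bit each contribute a positive message that outweighs the weak negative channel LLR, so the syndrome test passes after the first iteration.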


Other Iterative Decoding Algorithms

Wide variety of performance-complexity trade-offs.

In order of increasing complexity and performance:

1. Bit-flipping
2. Majority Logic Decoding
3. Min-Sum Decoding
4. Belief Propagation Decoding

Density Evolution

In the limit of infinite blocklength, the performance of the $(\lambda, \rho)$-ensemble of LDPC codes under Belief Propagation decoding can be predicted by Density Evolution.

Due to the concentration theorem, performance of individual codes in

the ensemble is close to the ensemble average performance

(exponential convergence w.r.t. the codeword length).

Density Evolution tracks the evolution of message probability density

functions throughout the decoding procedure, under the assumption

that the all-zero codeword is transmitted.

For the BI-AWGNC, probability density functions must be tracked

since the received LLR messages are continuous random variables

(computationally very demanding).


Density Evolution - BI-AWGN Channel

Initial LLR based only on the channel output is:

$$\mathrm{LLR}(y) = \frac{2y}{\sigma^2} \sim N\left(\frac{2}{\sigma^2}, \frac{4}{\sigma^2}\right).$$
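The channel LLR and its Gaussian statistics can be checked with a short simulation. The sketch below assumes that the all-zero codeword is mapped to the symbol $+1$ and uses $\sigma^2 = 0.7692$ only as an example value; the function names are hypothetical.

```python
import random

def channel_llrs(y, sigma2):
    """LLR(y_i) = 2 y_i / sigma^2 for +1/-1 signalling over AWGN."""
    return [2.0 * yi / sigma2 for yi in y]

def hard_decision(llrs):
    """Positive LLR -> +1, otherwise -1 (ties are broken towards -1 here)."""
    return [+1 if L > 0 else -1 for L in llrs]

random.seed(1)
sigma2 = 0.7692
x = [+1] * 10000                                   # all-zero codeword as +1 symbols
y = [xi + random.gauss(0.0, sigma2 ** 0.5) for xi in x]
llr = channel_llrs(y, sigma2)

mean = sum(llr) / len(llr)
var = sum((L - mean) ** 2 for L in llr) / len(llr)
print(mean, 2.0 / sigma2)    # empirical vs. predicted mean
print(var, 4.0 / sigma2)     # empirical vs. predicted variance
```

The variance is twice the mean, which is the symmetry property that the Gaussian approximation relies on.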

Density Evolution - BI-AWGN Channel

Figure: (3, 6)-regular code, $\sigma^2 = 0.7692$.

Density Evolution - Gaussian Approximation

Gaussian Approximation (GA): we assume the messages are symmetric Gaussian random variables, i.e. variance $\sigma^2$ and mean $\sigma^2/2$ (Central Limit Theorem).

We must track only the mean.

Variable node of degree $i$ at iteration $\ell$:

$$m_{v_i}^{(\ell)} = m_{ch} + \sum_{k} m_{k}^{(\ell-1)},$$

where the sum runs over the $i - 1$ incoming check-node messages from the previous iteration.

Average over $\lambda(x)$:

$$m_{v}^{(\ell)} = \sum_{i} \lambda_i m_{v_i}^{(\ell)}.$$

Density Evolution - Gaussian Approximation

Check node of degree $i$ at iteration $\ell$:

$$m_{u,i}^{(\ell)} = \phi^{-1}\left(1 - \left[1 - \phi\left(m_{v}^{(\ell)}\right)\right]^{i-1}\right)$$

Average over $\rho(x)$:

$$m_{u}^{(\ell)} = \sum_{i} \rho_i m_{u,i}^{(\ell)}.$$

Gaussian Approximation - Convergence

Under the assumption that the message densities are symmetric Gaussian and that the all-zero codeword was transmitted, the average probability of bit error is:

$$P_e^{(\ell)} = Q\left(\sqrt{\frac{m_v^{(\ell)}}{2}}\right) \xrightarrow{\; m_v^{(\ell)} \to \infty \;} 0.$$

If

$$m_v^{(\ell)} > m_v^{(\ell-1)}, \quad \ell = 1, 2, \ldots$$

then the BP decoder converges to a vanishingly small probability of error.
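The convergence test can be tried out on the (3, 6)-regular ensemble. The sketch below is only an illustration under several assumptions: it uses the mean recursion $m_v = m_{ch} + (d_v - 1) m_u$, $m_u = \phi^{-1}(1 - [1 - \phi(m_v)]^{d_c - 1})$ with $m_{ch} = 2/\sigma^2$, it replaces the integral definition of $\phi$ by the exponential curve fit $e^{-0.4527 x^{0.86} + 0.0218}$ known from the Gaussian-approximation literature (applied here for all $x$ for simplicity), and it declares convergence once the mean exceeds an arbitrary cap `m_stop`. All function names are hypothetical.

```python
import math

def phi(x):
    """Exponential curve-fit approximation of phi(x), clipped to at most 1."""
    if x <= 0.0:
        return 1.0
    return min(1.0, math.exp(-0.4527 * x ** 0.86 + 0.0218))

def phi_inv(y):
    """Closed-form inverse of the approximation above."""
    if y >= 1.0:
        return 0.0
    if y <= 0.0:
        return float("inf")
    t = (0.0218 - math.log(y)) / 0.4527
    return max(t, 0.0) ** (1.0 / 0.86)

def ga_converges(sigma, dv=3, dc=6, max_iter=1000, m_stop=50.0):
    """True if the variable-node mean grows past m_stop for noise std sigma."""
    m_ch = 2.0 / sigma ** 2            # mean of the channel LLR
    m_u = 0.0
    for _ in range(max_iter):
        m_v = m_ch + (dv - 1) * m_u
        if m_v > m_stop:
            return True
        m_u = phi_inv(1.0 - (1.0 - phi(m_v)) ** (dc - 1))
    return False

def ga_threshold(lo=0.5, hi=1.5, iters=30):
    """Bisection for the largest sigma at which the recursion still converges."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ga_converges(mid):
            lo = mid
        else:
            hi = mid
    return lo

print(ga_converges(0.7), ga_converges(1.1))
print(ga_threshold())
```

Below the threshold the mean keeps increasing from one iteration to the next; above it the recursion stalls at a finite fixed point, so the error probability does not vanish.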


EXIT Charts

Message: extrinsic LLR of codebit $i$:

$$L_i = \log \frac{p(x_i = +1 | y_{\sim i})}{p(x_i = -1 | y_{\sim i})}.$$

Message Density: density of extrinsic LLR assuming that the

all-zero codeword was transmitted.

BP at a variable node acts as a decoder of a repetition code.

BP at a check node acts as a decoder of a parity-check code.


EXIT Charts - Repetition Code

Consider an $[n, 1, n]$ repetition code. Transmit $X = \pm 1$ over AWGNC:

$$y_i = x + w_i, \quad w_i \sim N(0, \sigma_w^2), \quad i = 1, \ldots, n.$$

$$p(x | y) = \frac{p(x, y)}{p(y)} = \frac{p(y | x) p(x)}{p(y)} \propto p(y | x) = \underbrace{\prod_{i=1}^{n} p(y_i | x)}_{\text{variable node rule}}.$$

Before decoding: $p(x | y_i) = a$ and $I(X; Y_i) = 1 - H(a)$.

After decoding: $I(X; Y) = 1 - H(a^{\circledast n})$, where $\circledast n$ denotes $n$-fold convolution.

EXIT Charts - Parity-Check Code

Consider an $[n, n-1, 2]$ parity-check code. Transmit codeword $x = [x_1 \; x_2 \; \ldots \; x_n]$, $x_i = \pm 1$, over AWGNC:

$$y_i = x_i + w_i, \quad w_i \sim N(0, \sigma_w^2), \quad i = 1, \ldots, n.$$

$$p(x_i | y) = \frac{p(x_i, y)}{p(y)} \propto \sum_{\sim x_i} p(x) p(y | x) \propto \underbrace{\sum_{\sim x_i} 1_{[x_1 x_2 \cdots x_n = 1]} \prod_{j=1}^{n} p(y_j | x_j)}_{\text{check node rule}}$$

Before decoding: $p(x_i | y_i) = b$ and $I(X_i; Y_i) = 1 - H(b)$.

After decoding: $I(X_i; Y) = 1 - H(b^{\boxast n})$, where $\boxast n$ denotes $n$-fold convolution in the G-domain.

EXIT Charts

Now, if we consider the repetition and parity-check codes as being part of a large system that performs message passing decoding, we have:

For the variable node, if $I(X; L_i(X)) = 1 - H(a)$, then:

$$I(X; L(X)) = 1 - H(a^{\circledast n}).$$

For the check node, if $I(X_i; L_i(X_i)) = 1 - H(b)$, then:

$$I(X; L(X)) = 1 - H(b^{\boxast n}).$$

EXIT Charts

We define:

$$J(\sigma) = 1 - \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} \, e^{-\frac{(x - \sigma^2/2)^2}{2\sigma^2}} \log_2(1 + e^{-x}) \, dx.$$

$J(\sigma)$ is the mutual information between a codeword bit and the corresponding message, assuming that the message is a symmetric Gaussian random variable with standard deviation $\sigma$.

$J^{-1}(I)$ is the standard deviation of the symmetric Gaussian distributed message which has mutual information $I$ with a codeword bit.

EXIT Charts - Variable Nodes

$I_{EV}$ (resp. $I_{AV}$) is the average mutual information between the outgoing (resp. incoming) messages of variable nodes and the codeword bits.

The EXIT chart $I_{EV}$ describing the variable node function of degree $i$:

$$I_{EV}^{i}(I_{AV}, \sigma_{ch}^2) = J\left(\sqrt{(i-1)\left[J^{-1}(I_{AV})\right]^2 + \sigma_{ch}^2}\right)$$

Average over $\lambda(x)$:

$$I_{EV}(I_{AV}, \sigma_{ch}^2) = \sum_{i} \lambda_i I_{EV}^{i}(I_{AV}, \sigma_{ch}^2),$$

with $\sigma_{ch}^2 = 4/\sigma_w^2$, where $\sigma_w^2$ is the noise variance.

EXIT Charts - Check Nodes

$I_{EC}$ (resp. $I_{AC}$) is the average mutual information between the outgoing (resp. incoming) messages of check nodes and the codeword bits.

The EXIT chart $I_{EC}$ describing the check node function of degree $i$:

$$I_{EC}^{i}(I_{AC}) \approx 1 - J\left(\sqrt{i-1}\; J^{-1}(1 - I_{AC})\right)$$

Average over $\rho(x)$:

$$I_{EC}(I_{AC}) \approx \sum_{i} \rho_i I_{EC}^{i}(I_{AC}).$$

EXIT Charts - Code Optimization

For every possible incoming average mutual information at variable nodes, we want the outgoing average mutual information to be larger:

$$I_{EC}(I_{EV}(I_{AV})) > I_{AV}$$

If the EXIT chart of the variable nodes lies above the inverse of the EXIT chart for the check nodes, i.e.

$$I_{EV}(I_{AV}) > I_{EC}^{-1}(I_{AV})$$

then the decoding converges to a vanishingly small probability of error.
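The condition $I_{EC}(I_{EV}(I_{AV})) > I_{AV}$ can be tested numerically on a grid. The sketch below is a rough illustration for a regular ensemble: it evaluates $J(\sigma)$ with the trapezoidal rule over $\pm 10\sigma$ around the mean, inverts it by bisection, and uses the variable-node and check-node EXIT functions with $\sigma_{ch}^2 = 4/\sigma_w^2$. The integration range, step counts, grid size and all function names are assumptions chosen for brevity, not tuned values.

```python
import math

def J(sigma, steps=200):
    """Mutual information of a symmetric Gaussian message with std sigma."""
    if sigma <= 0.0:
        return 0.0
    mean = sigma ** 2 / 2.0
    lo, hi = mean - 10.0 * sigma, mean + 10.0 * sigma
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * h
        pdf = math.exp(-(x - mean) ** 2 / (2.0 * sigma ** 2)) / math.sqrt(2.0 * math.pi * sigma ** 2)
        # log2(1 + e^{-x}), written so that exp() never overflows
        loss = (max(-x, 0.0) + math.log1p(math.exp(-abs(x)))) / math.log(2.0)
        weight = 0.5 if k in (0, steps) else 1.0
        total += weight * pdf * loss
    return 1.0 - h * total

def J_inv(I, lo=0.0, hi=40.0, iters=40):
    """Invert J by bisection (J is increasing in sigma)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if J(mid) < I:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def I_EV(I_A, sigma_w, dv):
    sigma_ch2 = 4.0 / sigma_w ** 2
    return J(math.sqrt((dv - 1) * J_inv(I_A) ** 2 + sigma_ch2))

def I_EC(I_A, dc):
    return 1.0 - J(math.sqrt(dc - 1) * J_inv(1.0 - I_A))

def tunnel_open(sigma_w, dv=3, dc=6, points=19):
    """Check I_EC(I_EV(I_A)) > I_A on a uniform grid inside (0, 1)."""
    for k in range(1, points + 1):
        I_A = k / (points + 1)
        if I_EC(I_EV(I_A, sigma_w, dv), dc) <= I_A:
            return False
    return True

print(tunnel_open(0.7), tunnel_open(1.1))
```

A finite grid can miss a very narrow pinch-off between the two curves, so near the threshold a finer grid would be needed before drawing conclusions.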


EXIT Charts - Code Rate Optimization

For given $\lambda(x)$ and $\rho(x)$, the code design rate is:

$$R = 1 - \frac{\sum_i \rho_i / i}{\sum_i \lambda_i / i}.$$

A common approach is to maximize $R$ over $\lambda(x)$ for fixed $\rho(x)$. This gives rise to a continuous linear program:

$$\begin{aligned}
\max_{\lambda} \quad & \sum_{i=2}^{v_{\max}} \lambda_i / i \\
\text{s.t.} \quad & I_{EC}^{-1}(I_{AV}) < I_{EV}(I_{AV}) = \sum_{i=2}^{v_{\max}} \lambda_i I_{EV}^{i}(I_{AV}), \quad \forall I_{AV} \in (0, 1) \\
& \sum_{i=2}^{v_{\max}} \lambda_i = 1, \quad \lambda_i \ge 0, \quad i = 2, 3, \ldots, v_{\max}.
\end{aligned}$$

We solve it by discretizing $I_{AV} \in (0, 1)$.

EXIT Charts - Code Optimization

(a) (3, 6)-regular ensemble, r = 0.5. (b) Optimized ensemble, r = 0.58.

Figure: Noise variance $\sigma^2 = 0.76$.


Efficient Encoding of LDPC Codes

In the general case, encoding has complexity $O(n^2)$.

If the parity-check matrix has an approximate upper triangular form, it can be shown that encoding can be achieved with complexity $O(n + g^2)$, where the gap $g$ measures how far the matrix is from being exactly triangular.

QC-LDPC Codes - Motivation

In general, storage of the parity check matrix requires $O(n^2)$ memory.

In Quasi-Cyclic (QC) LDPC codes, the parity check matrix consists of $q \times q$ sparse circulant submatrices (usually, permutation matrices).

Each circulant is fully characterized by its first row or column.

Required memory becomes $O(n^2/q)$, i.e. reduction by a factor of $q$.

Efficient Encoding of QC-LDPC Codes

The generator matrix $G$ can be obtained in systematic form from the $cq \times tq$ parity-check matrix: $G = [\, I \;\; G_P \,]$.

Encoding is done as follows

$$c = aG = [\, a \;\; p \,],$$

where $G_P$ has the form:

$$G_P = \begin{bmatrix} G_{1,1} & G_{1,2} & \cdots & G_{1,c} \\ G_{2,1} & G_{2,2} & \cdots & G_{2,c} \\ \vdots & \vdots & \ddots & \vdots \\ G_{t-c,1} & G_{t-c,2} & \cdots & G_{t-c,c} \end{bmatrix}.$$

The $G_{i,j}$'s are circulants, e.g.

$$G_{i,j} = \begin{bmatrix} 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ 0 & 0 & 0 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \\ 1 & 0 & 0 & \cdots & 0 \end{bmatrix}.$$

Efficient Encoding of QC-LDPC Codes

For $p$, the $j$-th parity $q$-tuple can be obtained as follows:

$$p_j = a_1 G_{1,j} + a_2 G_{2,j} + \ldots + a_{t-c} G_{t-c,j},$$

where $a_i$ is the $i$-th $q$-tuple of the input.

The $G_{i,j}$'s are circulants which can be fully described by their first row, $g_{i,j}$.

Thus, we can calculate each term of $p_j$ as follows:

$$a_i G_{i,j} = a_{(i-1)q+1}\, g_{i,j}^{(0)} + a_{(i-1)q+2}\, g_{i,j}^{(1)} + \ldots + a_{iq}\, g_{i,j}^{(q-1)}, \quad (1)$$

where $a_{(i-1)q+1}, \ldots, a_{iq}$ are the individual bits of the $i$-th input $q$-tuple and $g_{i,j}^{(l)}$ denotes the $l$-th right cyclic shift of $g_{i,j}$.
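The shift-and-add form of a vector-by-circulant product can be sketched in a few lines. The code below computes one term $a_i G_{i,j}$ over GF(2) using only cyclic shifts of the first row and compares it with an explicit vector-matrix product; the first row and input block are arbitrary illustrative values, and the function names are hypothetical.

```python
def cyclic_shift_right(row, l):
    """Return row cyclically shifted to the right by l positions."""
    l %= len(row)
    return list(row[-l:] + row[:-l]) if l else list(row)

def circulant(first_row):
    """Full circulant whose l-th row is the l-th right shift of first_row."""
    return [cyclic_shift_right(first_row, l) for l in range(len(first_row))]

def block_times_circulant(a_block, first_row):
    """a_i G_{i,j} over GF(2): XOR together the shifts selected by the 1-bits."""
    acc = [0] * len(first_row)
    for l, bit in enumerate(a_block):
        if bit:
            shifted = cyclic_shift_right(first_row, l)
            acc = [x ^ y for x, y in zip(acc, shifted)]
    return acc

def vec_mat_gf2(a, M):
    """Reference implementation: explicit vector-matrix product mod 2."""
    return [sum(a[r] * M[r][c] for r in range(len(a))) % 2 for c in range(len(M[0]))]

g = [0, 1, 0, 0, 1]          # first row of a 5 x 5 circulant (illustrative)
a = [1, 0, 1, 1, 0]          # one input q-tuple (illustrative)
print(block_times_circulant(a, g))
print(vec_mat_gf2(a, circulant(g)))
```

Only the first row `g` has to be stored; in hardware the shifts map naturally onto a shift register with feedback.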

Efficient Encoding of QC-LDPC Codes

Some other schemes have been proposed. Wide variety of throughput-size trade-offs.

Table: Various encoder architectures and their complexity.

Scheme   Encoding speed   FFs         2-input XOR     2-input AND
1        (t - c)q         2cq         cq              cq
2        cq               (t - c)q    (t - c)q - 1    (t - c)q
3        q                tq          O(c^2 q)        0

q: Size of circulants.
t: Number of horizontally stacked circulants.
c: Number of vertically stacked circulants.


Deterministic QC-LDPC Codes (Array Codes)

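For a prime $q$ and positive integer $m \le q$, we create the matrix:

$$H = \begin{bmatrix} I & I & \cdots & I & \cdots & I \\ I & P^{1} & \cdots & P^{(m-1)} & \cdots & P^{(q-1)} \\ I & P^{2} & \cdots & P^{2(m-1)} & \cdots & P^{2(q-1)} \\ \vdots & \vdots & & \vdots & & \vdots \\ I & P^{(m-1)} & \cdots & P^{(m-1)(m-1)} & \cdots & P^{(m-1)(q-1)} \end{bmatrix}, \qquad P = \begin{bmatrix} 0 & 1 & 0 & \cdots & 0 \\ 0 & 0 & 1 & \cdots & 0 \\ 0 & 0 & 0 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & 0 & \cdots & 1 \\ 1 & 0 & 0 & \cdots & 0 \end{bmatrix}.$$

$P^i$ is the $q \times q$ identity matrix with all columns cyclically shifted to the right by $i$ positions, i.e. the $i$-th power of $P$.

By convention, $P^0 = I$ and $P^{\infty} = 0$.

$H$ represents an $(m, q)$-regular QC-LDPC code.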
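The array-code construction is easy to reproduce for small parameters. The sketch below builds $H$ block by block, with block $(i, j)$ equal to $P^{ij}$ for $i = 0, \ldots, m-1$ and $j = 0, \ldots, q-1$, and then checks the row and column weights. The values $q = 5$, $m = 3$ and the function names are illustrative assumptions.

```python
def shifted_identity(q, s):
    """q x q identity with columns cyclically shifted right by s, i.e. P^s."""
    return [[1 if c == (r + s) % q else 0 for c in range(q)] for r in range(q)]

def array_code_H(q, m):
    """Parity-check matrix with m block rows and q block columns of P^(i*j)."""
    H = []
    for i in range(m):
        blocks = [shifted_identity(q, (i * j) % q) for j in range(q)]
        for r in range(q):
            H.append([bit for blk in blocks for bit in blk[r]])
    return H

q, m = 5, 3
H = array_code_H(q, m)
row_weights = {sum(row) for row in H}
col_weights = {sum(row[j] for row in H) for j in range(len(H[0]))}
# Largest number of columns shared by any two rows (1 means no length-4 cycles).
max_overlap = max(
    sum(a & b for a, b in zip(H[r1], H[r2]))
    for r1 in range(len(H)) for r2 in range(r1 + 1, len(H))
)
print(len(H), len(H[0]), row_weights, col_weights, max_overlap)
```

For prime $q$ no two rows share more than one column, which is why these codes have no cycles of length four in their Tanner graph.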


Deterministic QC-LDPC Codes (Modified Array Codes)

For a prime $q$ and positive integer $m \le q$, we create the matrix:

$$H = \begin{bmatrix} I & I & I & \cdots & I & \cdots & I \\ 0 & I & P^{1} & \cdots & P^{(m-2)} & \cdots & P^{(q-2)} \\ 0 & 0 & I & \cdots & P^{2(m-3)} & \cdots & P^{2(q-3)} \\ \vdots & \vdots & \vdots & \ddots & \vdots & & \vdots \\ 0 & 0 & 0 & \cdots & I & \cdots & P^{(m-1)(q-m)} \end{bmatrix}.$$

Efficient encoding possible due to structure. Slightly irregular code.

Random Construction of QC-LDPC Codes (Regular Codes)

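The $mq \times nq$ parity-check matrix is constructed as follows:

$$H = \begin{bmatrix} P^{a_{11}} & P^{a_{12}} & \cdots & P^{a_{1n}} \\ P^{a_{21}} & P^{a_{22}} & \cdots & P^{a_{2n}} \\ \vdots & \vdots & \ddots & \vdots \\ P^{a_{m1}} & P^{a_{m2}} & \cdots & P^{a_{mn}} \end{bmatrix}$$

where $a_{ij} \in \{0, 1, 2, \ldots, q-1\}$.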

If $a_{ij} \in \{0, 1, 2, \ldots, q-1, \infty\}$, i.e. some blocks are allowed to be all-zero, then irregular codes can also be constructed.

Random Construction of QC-LDPC Codes (Irreg. Codes)

The number of non-zero blocks in each column of the block parity

check matrix can be chosen according to a degree distribution.

Permutation matrices $P^i$ are used; $i$ is usually chosen at random and, if some constraints (e.g. cycle girth, check node distribution) are violated, another value for $i$ is chosen.

Very similar to one of Gallager's constructions of irregular codes.
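The choose-and-retry procedure can be sketched for one particular constraint, the absence of length-4 cycles. The code below relies on the standard condition that two block rows $i_1, i_2$ and two block columns $j_1, j_2$ of circulant permutation matrices close a 4-cycle exactly when $a_{i_1 j_1} - a_{i_1 j_2} + a_{i_2 j_2} - a_{i_2 j_1} \equiv 0 \pmod{q}$. The parameters ($m = 3$, $n = 6$, $q = 31$), the use of `None` for entries not yet assigned, and the function names are assumptions made for illustration.

```python
import random

def has_4cycle(A, q):
    """True if some 2 x 2 sub-array of assigned shifts closes a length-4 cycle."""
    m, n = len(A), len(A[0])
    for i1 in range(m):
        for i2 in range(i1 + 1, m):
            for j1 in range(n):
                for j2 in range(j1 + 1, n):
                    vals = (A[i1][j1], A[i1][j2], A[i2][j2], A[i2][j1])
                    if None in vals:
                        continue          # entry not chosen yet
                    if (vals[0] - vals[1] + vals[2] - vals[3]) % q == 0:
                        return True
    return False

def random_shifts(m, n, q, rng, max_tries=1000):
    """Draw shifts one by one, redrawing whenever the constraint is violated."""
    A = [[None] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            for _ in range(max_tries):
                A[i][j] = rng.randrange(q)
                if not has_4cycle(A, q):
                    break
            else:
                raise RuntimeError("no admissible shift found")
    return A

rng = random.Random(0)
A = random_shifts(3, 6, 31, rng)
for row in A:
    print(row)
```

Other constraints, such as a target check node degree distribution or a larger girth, would be added as further tests inside the retry loop.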


Other Constructions of QC-LDPC Codes

Constructions based on Finite Geometries.

Constructions based on Reed-Solomon codes.

Constructions based on masking of existing LDPC codes.


Repeat-Accumulate Codes

RA codes: subclass of LDPC codes.

Encoding:

1. A frame of information symbols of length $N$ is repeated $q$ times.
2. A random (but fixed) permutation is applied to the resulting frame.
3. The permuted frame is fed to a rate-1 accumulator with transfer function $1/(1 + D)$.

For Irregular RA codes, each information bit is repeated according to a repetition profile.
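The three encoding steps can be written down directly. The sketch below assumes that each information bit is repeated $q$ times in place (one common reading of the repetition step) and realizes the accumulator $1/(1 + D)$ as a running XOR; the function name `ra_encode` and the example inputs are hypothetical.

```python
import random

def ra_encode(u, q, perm):
    """Repeat-accumulate encoding of the bit list u.
    perm[k] is the index of the repeated bit that is sent to position k."""
    repeated = [b for b in u for _ in range(q)]       # step 1: repetition
    permuted = [repeated[p] for p in perm]            # step 2: fixed permutation
    out, acc = [], 0
    for b in permuted:                                # step 3: y_k = x_k + y_{k-1}
        acc ^= b
        out.append(acc)
    return out

u = [1, 0, 1]
print(ra_encode(u, 3, list(range(9))))   # identity permutation, for checking by hand

rng = random.Random(0)
perm = list(range(9))
rng.shuffle(perm)                        # a random but fixed interleaver
print(ra_encode(u, 3, perm))
```

For an irregular RA code the constant `q` would be replaced by a per-bit repetition count taken from the repetition profile.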


Structured Repeat-Accumulate Codes

The parity-check matrix of an RA code can be written as follows:

$$H = [\, H_1 \;\; H_2 \,],$$

where:

$$H_2 = \begin{bmatrix} 1 & 0 & 0 & 0 & \cdots & 0 \\ 1 & 1 & 0 & 0 & \cdots & 0 \\ 0 & 1 & 1 & 0 & \cdots & 0 \\ \vdots & & \ddots & \ddots & & \vdots \\ 0 & 0 & \cdots & 1 & 1 & 0 \\ 0 & 0 & \cdots & 0 & 1 & 1 \end{bmatrix}.$$

We can make RA codes even simpler by enforcing a QC structure on $H_1$.
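The dual-diagonal shape of $H_2$ is what makes encoding simple: writing the codeword as $[u \; p]$, the condition $H_1 u + H_2 p = 0$ gives $p_1 = s_1$ and $p_k = s_k + p_{k-1}$ with $s = H_1 u$, which is again an accumulator. The sketch below illustrates this under the assumption of a systematic codeword layout; the small $H_1$ is an arbitrary toy matrix and the function names are hypothetical.

```python
def parity_from_syndrome(s):
    """Solve H2 p = s over GF(2) by forward substitution (running XOR)."""
    p, prev = [], 0
    for sk in s:
        prev ^= sk
        p.append(prev)
    return p

def encode_systematic(H1, u):
    """Return the codeword [u p] satisfying H1 u + H2 p = 0."""
    s = [sum(h * x for h, x in zip(row, u)) % 2 for row in H1]
    return list(u) + parity_from_syndrome(s)

def dual_diagonal(r):
    """r x r matrix with ones on the main diagonal and the first subdiagonal."""
    return [[1 if c in (k, k - 1) else 0 for c in range(r)] for k in range(r)]

H1 = [[1, 0], [1, 1], [0, 1]]            # toy 3 x 2 matrix, not from the text
H2 = dual_diagonal(3)
H = [a + b for a, b in zip(H1, H2)]      # H = [H1 H2]

c = encode_systematic(H1, [1, 1])
print(c)
print([sum(h * x for h, x in zip(row, c)) % 2 for row in H])  # syndrome of c
```

No generator matrix is needed: the cost is one sparse matrix-vector product followed by a running XOR.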


Structured IRA Codes

We construct the matrix $H_1$ as follows:

$$H_1 = \begin{bmatrix} P^{a_{11}} & P^{a_{12}} & \cdots & P^{a_{1n}} \\ P^{a_{21}} & P^{a_{22}} & \cdots & P^{a_{2n}} \\ \vdots & \vdots & \ddots & \vdots \\ P^{a_{m1}} & P^{a_{m2}} & \cdots & P^{a_{mn}} \end{bmatrix}$$

where $P$ is a right cyclic shift $q \times q$ permutation matrix and $a_{i,j} \in \{0, 1, \ldots, q-1, \infty\}$ are the corresponding exponents.

Structured IRA Codes

If we use $H_1$ as is, the resulting code has a poor minimum distance.

We use a permuted version of $H_1$ instead, where the permutation is chosen so that the minimum codeword weight for low-weight inputs is increased.

So, the final parity-check matrix will be:

$$H = [\, H_1 \;\; H_2 \,],$$

with $H_1$ replaced by its permuted version.

The resulting code is regular unless we choose to mask out some entries (by choosing $a_{ij} = \infty$) in the $H_1$ matrix in accordance with a targeted repetition profile.

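Appendix - $\phi(x)$ and $J(\sigma)$

The function $\phi(x)$ is defined as follows:

$$\phi(x) = \begin{cases} 1 - \dfrac{1}{\sqrt{4\pi x}} \displaystyle\int_{-\infty}^{\infty} \tanh\left(\frac{u}{2}\right) \exp\left(-\frac{(u - x)^2}{4x}\right) du, & x > 0 \\ 1, & x = 0. \end{cases}$$

The function $J(\sigma)$ is defined as follows:

$$J(\sigma) = 1 - \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi\sigma^2}} \, e^{-\frac{(x - \sigma^2/2)^2}{2\sigma^2}} \log_2(1 + e^{-x}) \, dx.$$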

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5820)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (845)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (898)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Wjec A2 Chemistry Study and Revision Guide PDFDocument125 pagesWjec A2 Chemistry Study and Revision Guide PDFPakorn WinayanuwattikunNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- 01 - Lineberry - Propulsion Fundamentals - 2019Document95 pages01 - Lineberry - Propulsion Fundamentals - 2019Николай СидоренкоNo ratings yet

- Drugs and Substance Abuse PDFDocument8 pagesDrugs and Substance Abuse PDFMaria Aminta CacerecesNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- IogDocument21 pagesIogCharcravorti AyatollahsNo ratings yet

- Determining The Susceptibility of Stainless Steels and Related Nickel-Chromium-Iron Alloys To Stress-Corrosion Cracking in Polythionic AcidsDocument3 pagesDetermining The Susceptibility of Stainless Steels and Related Nickel-Chromium-Iron Alloys To Stress-Corrosion Cracking in Polythionic AcidsIvan AlanizNo ratings yet

- Hanwa Injection Substation Maintainance Ceck ListDocument18 pagesHanwa Injection Substation Maintainance Ceck ListMubarak Aleem100% (1)

- NBRK0200 Installation Configuration ManualDocument32 pagesNBRK0200 Installation Configuration ManualGoran JovanovicNo ratings yet

- Lesson Plan Solar System (Year 4)Document5 pagesLesson Plan Solar System (Year 4)j_sha93No ratings yet

- Latihan SoalDocument5 pagesLatihan SoalWizka Mulya JayaNo ratings yet

- Application of Molecular Absorption SpectrosDocument52 pagesApplication of Molecular Absorption SpectrosVeliana Teta100% (1)

- Presentare AgroclimberDocument89 pagesPresentare Agroclimberaritmetics0% (1)

- Taiwan: and Its Economic DevelopmentDocument30 pagesTaiwan: and Its Economic DevelopmentBimsara WijayarathneNo ratings yet

- Maximizing The Sharpe RatioDocument3 pagesMaximizing The Sharpe RatioDat TranNo ratings yet

- 17 InterferometersDocument84 pages17 InterferometersAnirban PaulNo ratings yet

- Lecture 7 Adjusted Present ValueDocument19 pagesLecture 7 Adjusted Present ValuePraneet Singavarapu100% (1)

- Report FormatDocument3 pagesReport FormatAditya BorborahNo ratings yet

- Track 1Document6 pagesTrack 1rolli c. badeNo ratings yet

- Gibberellins: Regulators of Plant HeightDocument17 pagesGibberellins: Regulators of Plant HeightPratiwi DwiNo ratings yet

- Recipe Book Part 1Document19 pagesRecipe Book Part 1Justine Keira AyoNo ratings yet

- Assignment 1 2 PumpDocument2 pagesAssignment 1 2 PumpAnkit0% (1)

- SWHUSP401Document55 pagesSWHUSP401leonardo.roslerNo ratings yet

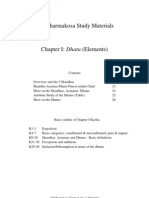

- Chapter 1 DhatuDocument6 pagesChapter 1 Dhatusbec96860% (1)

- Verification of Algorithm and Equipment For Electrochemical Impedance MeasurementsDocument11 pagesVerification of Algorithm and Equipment For Electrochemical Impedance MeasurementsMaria Paulina Holguin PatiñoNo ratings yet

- Experiment N 5: Surface RoughnessDocument3 pagesExperiment N 5: Surface RoughnessG. Dancer GhNo ratings yet

- Fleet MaintenanceDocument36 pagesFleet MaintenanceRery Dwi SNo ratings yet

- Ielts MockDocument40 pagesIelts MockkhalidNo ratings yet

- Hotel Minimum ReqDocument28 pagesHotel Minimum ReqAnusha AshokNo ratings yet

- Explanation Text 2023Document4 pagesExplanation Text 2023NursalinaNo ratings yet

- Mezzanine Floor GuideDocument3 pagesMezzanine Floor GuidedraftingNo ratings yet

- Categorization PCABDocument2 pagesCategorization PCABleonard dela cruzNo ratings yet