Int J Adv Manuf Technol (2008) 38:536–542
DOI 10.1007/s00170-007-1024-x

ORIGINAL PAPER

Development of autonomous mobile robot with manipulator for manufacturing environment

S. Datta & R. Ray & D. Banerji

Received: 10 January 2007 / Accepted: 22 March 2007 / Published online: 23 May 2007
© Springer-Verlag London Limited 2007

Abstract This paper describes the developmental effort involved in prototyping the first indigenous autonomous mobile robot, AMR, with a manipulator for carrying out tasks related to manufacturing. The objective is to design and develop a vehicle that can navigate autonomously and transport jobs and tools in a manufacturing environment. Proprioceptive and exteroceptive sensors are mounted on AMR for navigation. Among the exteroceptive sensors, a stereovision camera is mounted in front of AMR for mobile robot perception of the environment. Using the widely supported JPEG image file format, full high-resolution color images are transmitted frame by frame from the mobile robot to multiple viewers located within the robot work area, where fast reconstruction of these images enables remote viewing. A CMOS camera mounted on the manipulator identifies jobs for pick-and-place operation. A variation of correlation based adaptive predictive search (CAPS) method, a fast search algorithm in template matching, is used for job identification. The CAPS method justifiably selects a set of search steps rather than consecutive point-to-point search for faster job identification. Search steps, i.e., either coarse search or fine search, are selected by calculating the correlation coefficient between template and the image. Adaptive thresholding is used for image segmentation for parametric calculations required for proper gripping of the object. Communication with the external world allowing remote operation is maintained through wireless connectivity. It is shown that autonomous navigation requires synchronization of different processes in a distributed architecture, while concurrently maintaining the integrity of the network.

Keywords Mobile robot · Autonomous navigation · Manufacturing environment · Distributed architecture · AMR

S. Datta (*) · R. Ray · D. Banerji
Robotics & Automation, Central Mechanical Engineering Research Institute, M.G. Avenue, Durgapur, West Bengal 713209, India
e-mail: sdatta@cmeri.res.in

R. Ray
e-mail: ranjitray@cmeri.res.in

D. Banerji
e-mail: dbanerji@cmeri.res.in

1 Introduction

Autonomous mobile robots are becoming an integral part of flexible manufacturing systems designed for material transport, cleaning, and assembly purposes. The advantage of this type of robot is that the existing manufacturing environment does not have to be altered or modified, as it does for conventional AGVs, where permanent cable layouts or markers are required for navigation. These robots are also used extensively for survey, inspection, surveillance, bomb and mine disposal, underwater inspection, and space robotics. This paper describes the indigenous effort in prototyping India's first autonomous mobile robot, AMR, with a manipulator for transporting jobs and tools in a manufacturing environment.

2 Physical description and design specification Worldwide, efforts are being made to use autonomous mobile robots to relieve human operators of tedious, repetitive, and hazardous tasks. One such area where mobile robots are used for supplementing human tasks is in manufacturing. The University of Massachusetts in Amherst is developing a

Int J Adv Manuf Technol (2008) 38:536542

537

mobile robot with a comprehensive suite of sensors that includes LRF and vision along with a dexterous manipulator, as mobility extends the workspace of the manipulator by permitting the robot to be operated in unstructured environments [5]. The Bundeswehr University in Munich is developing vision-guided intelligent robots for automating manufacturing, materials handling, and services, where vision-guided mobile robots ATHENE I and II navigate in structured environments based on the recognition of its current situation and a manipulator handles various objects using a stereo-vision system [1]. In India, mobile robots are being developed in some research institutes in collaboration with academic institutes and private sectors. One such mobile robot is the SmartNav, designed and built by Zenn Systems, Ahmedabad, in collaboration with IIT, Kanpur, and BARC [10]. Our mobile robot, AMR, also consists of a vehicle with a battery bank and a fleet of sensors, but it is autonomous and has a manipulator, which is required in a manufacturing environment for material handling. The physical dimension of AMR is 1,260 mm (L) 955 mm (W) 625 mm (H) and the chassis is made of aluminum channel IS: 3921-1986. The entire structure is wrapped with 3-mm aluminum sheets, bent to proper shape and joined together using argon welding. The structure is powder coated to ensure proper aesthetics as well as environment toughness. The absence of any screw or rivet ensures a very rigid structure with the required mechanical strength. Internal braces that compartmentalize the robot interior ensure additional strength. Couplers are used to ensure minimal transverse load on the motor shafts and bearings. The main load-bearing frame rests on two wheels and castors. The wheels are made of aluminum hubs 397 mm in diameter with rubber tires 69.73 mm wide. 
As proper dimension of the wheels determines accuracy and performance of the robot, the wheel hub and wheel cap are designed to allow changes of the tires in case they wear out. Further, to avoid slippage, the tire profile is designed to ensure that the contact points of the wheel with the ground are maintained under various turning conditions. There are three compartments inside AMR. The central compartment houses four 12-V dry-type sealed lead acid batteries that generate 48 V basic power supply. The rear compartment houses the motor controller and the drivers, the main micro-controller board, and the power-conditioning circuits as well as the sonar modulator and switching circuitry, two single board PC 104+ computers (SBCs), and the manipulator controller box. The front compartment houses the two Maxon motors of 48 V, 250 W each along with the SICK laser range finder (LRF) and Inteliteks Scorbot-ER-4u manipulator, which is mounted in front of AMR. The total weight of the system is around 125 kg and the payload of the

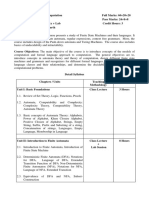

Fig. 1 AMR with manipulator

system is around 25 kg. AMRs speed is 40 m/min with a mission time of 1 h. The distribution of the weight ensures proper traction and stability during robot motion. As shown in Fig. 1, an array of 16 bumper switches and Polaroids 24 ultrasonic sensors circumscribe AMR and a BumbleBee stereovision camera is mounted in front of AMR to capture the navigation scene. Power distribution to various equipment is shown in Fig. 2, which enables the AMR to be operationally fully loaded for 1 h. The manipulator runs on 220 V AC. Hence a DC/AC converter is placed to convert 48 V DC to 220 V AC. The AMR comes in two configurations: in the threewheeled configuration, the two front wheels are the driving wheels and the rear wheel is of a castor-type. In the fourwheeled configuration, one castor is in the front and one

Fig. 2 Power distribution of the AMR

538

Int J Adv Manuf Technol (2008) 38:536542

Fig. 3 Architecture of the AMR

castor is in the rear, and the two driving wheels are in the center. All the wheels are mounted with the vehicle body with suitably designed suspension system. The design is flexible enough to convert from one configuration to another without affecting the stability of the system.

Fig. 4 Block diagram of vehicle control unit

3 AMR architecture

Navigation of AMR rests on a distributed architecture comprising two SBCs residing inside AMR. The work area of AMR includes a remote host with a joystick and multiple display units. Figure 3 shows the AMR architecture. The SBCs are PC104 and PC 104+ compatible motherboards with a Celeron processor running at 400 MHz. Each motherboard has a built-in 10/100 BaseT Ethernet card and supports 128 MB RAM, a 40 GB hard disk, and a compact flash card of 1 GB. Each SBC has two USB ports, one RS232 serial port, and one RS232/485 port. Each SBC requires only a single 5-V supply, although the hard drive needs an additional 12-V supply. SBC1 runs on Linux 7.3, whereas SBC2 runs on the Windows 2000 platform. The SBCs inside AMR are physically connected over Ethernet, but AMR communicates with the outside world through a Wireless Access Point using the 802.11b protocol. The navigational algorithm resides in the remote host, which communicates with the SBCs. The display units show the work area during vehicle navigation, as grabbed by the stereo-vision camera mounted in front of AMR.

3.1 Vehicle control unit

The vehicle control unit is the heart of AMR and is detailed in the block diagram given in Fig. 4. The micro-controller-based main board controls the motors, monitors the battery voltage of AMR, takes inputs from the sonars, bumper switches, and wheel encoders, and maintains serial communication with SBC1. The micro-controller features 64 Kbytes of on-chip flash program memory and 8 Kbytes of on-chip RAM. It provides 32 bi-directional I/O lines. The two main motors are driven through two DC servo-amplifiers, EPOS 70/10, from Maxon Motor AG, Switzerland. These take 11–70 V DC inputs as the reference that sets the motor speeds. On-board dual 12-bit D/A converters provide the servo-amplifiers with the necessary inputs at the required resolution for effective control of AMR's motion. Thus, the micro-controller can set each wheel's rotation speed independently by writing to the relevant port to implement a turn or change AMR's acceleration. Motive power is provided by two graphite-brushed, 24-V DC, 250-W servo motors that have integrated high-efficiency planetary gears with a 93:1 gear ratio. These provide each wheel with a torque of 35 Nm at 32.3 rpm. The motors have integrated incremental shaft encoders with 500 counts per turn that give the necessary feedback to both the servo-amplifiers and the micro-controller. While the servo-amplifiers maintain the required speed using the encoder feedback, the micro-controller uses the same feedback through its internal counters to keep track of motor velocities and position for total motion control.

Moreover, the I/O port of the vehicle control unit takes input from 16 bumper switches and 24 sonars. There are eight sonars each in the front and rear arrays and four on each side, providing 360 degrees of nearly seamless sensing. The sonars provide range information and, based on their placement, bearing information. One modulator board, the Polaroid series 6500, is used to interface all eight sonar transducers in the front, one for all the rear sonars, and one for the side sonars. The modules interface to the main board through the I/O lines. Cyclic excitation of the sonars starts with sonar 1 from the front array, sonar 1 from the side array, and sonar 1 from the rear array, which are fired simultaneously. The sonar configuration and firing scheme of AMR are shown in Fig. 5. The switching time is on the order of 40 ms, so that all 24 transducers are scanned within 320 ms, as three are switched simultaneously. The sonar range is 150 mm to 10 m, with an accuracy of 0.1%.

Fig. 5 Firing sequence of AMR sonars

3.2 LRF, manipulator, and camera interface

The LRF, mounted on AMR, provides more accurate mapping for reliable navigation. The LRF runs on 24 V DC and has a range of up to 8 m with a resolution of 10 mm and a scanning angle of 180°. The LRF communicates directly with SBC1 through its serial port using the RS-232 protocol at 38.4 Kbaud, as shown in Fig. 3. The manipulator and the CMOS camera mounted on the manipulator communicate with SBC2 through USB port 1 and USB port 2, respectively. The compass communicates through COM1 of SBC2, while the stereovision camera communicates with SBC2 over the IEEE-1394 interface.
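The drive-train and sonar figures quoted in Sects. 2 and 3.1 can be cross-checked with a few lines of arithmetic. The sketch below is only a sanity check, not part of the AMR software. It assumes that the 397-mm hub diameter is the effective rolling diameter of the wheel and that encoder counts are used without quadrature multiplication; neither assumption is stated in the text, and the function names are illustrative.

```python
import math

# Figures quoted in Sects. 2 and 3.1 of the text.
WHEEL_DIAMETER_M = 0.397         # hub diameter, taken as the rolling diameter (assumption)
WHEEL_SPEED_RPM = 32.3           # wheel speed at the quoted torque
ENCODER_COUNTS_PER_TURN = 500    # incremental encoder on the motor shaft
GEAR_RATIO = 93                  # planetary gear, 93:1
SONAR_COUNT = 24
SONARS_PER_FIRING = 3            # one each from the front, side, and rear arrays
SWITCHING_TIME_MS = 40


def linear_speed_m_per_min():
    """Vehicle speed when both wheels turn at WHEEL_SPEED_RPM."""
    return math.pi * WHEEL_DIAMETER_M * WHEEL_SPEED_RPM


def travel_per_encoder_count_m():
    """Distance covered per encoder count, without quadrature decoding (assumption)."""
    counts_per_wheel_turn = ENCODER_COUNTS_PER_TURN * GEAR_RATIO
    return math.pi * WHEEL_DIAMETER_M / counts_per_wheel_turn


def sonar_scan_time_ms():
    """Time to cycle through all sonars when three fire per switching slot."""
    return (SONAR_COUNT // SONARS_PER_FIRING) * SWITCHING_TIME_MS


if __name__ == "__main__":
    print(f"speed: {linear_speed_m_per_min():.1f} m/min")
    print(f"travel per count: {travel_per_encoder_count_m() * 1e6:.1f} micrometres")
    print(f"sonar scan: {sonar_scan_time_ms()} ms")
```

Under these assumptions the wheel speed of 32.3 rpm corresponds to roughly 40.3 m/min, which agrees with the quoted vehicle speed of 40 m/min, and eight firing slots of 40 ms give the quoted 320-ms scan time.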

Fig. 7 AMR software architecture

3.3 Communication between AMR and remote host

SBC1 of AMR, acting as the server, is set in the listening mode with COM1 for the vehicle control unit and COM2 for the laser, as shown in Fig. 3. The navigational algorithm of the whole mission resides in the remote host. During the initialization phase, the remote host, acting as the client, binds with the server for communicating with the vehicle control unit and the laser. The AMR Operating System (AMROS) resides in the vehicle control unit (i.e., in the micro-controller flash memory); it supervises motor feedback and keeps track of robot position, orientation, overall robot velocity, acceleration, angle of turn, etc. The idea is that high-level software residing in the remote host issues commands to AMROS in order to perform end-level application tasks. The remote host controls the AMR through the AMR GUI, shown in Fig. 6. Hence, for autonomous navigation, COM1 is used for sending motion commands, incorporated in the navigational algorithm, to the vehicle control unit and for receiving sonar data, encoder data, and other parametric and status feedback from the vehicle. Figure 7 shows the AMR software architecture. The data to and from the host is sent in packets. On powering up the AMR, AMROS activates all onboard hardware and awaits an initiation packet from the remote host. Once the connection is initiated, the host sends a packet at least every 100 ms to ensure that connectivity is maintained. Similarly, the server sends a packet back to the host every 100 ms. Failing this, the application program assumes loss of contact and either initiates a reconnection or exits the application. Data packets typically consist of low-level commands [10].
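The keep-alive behavior described above (a packet at least every 100 ms, otherwise reconnect or exit) can be sketched as a small watchdog. This is a minimal illustration and not the AMROS implementation: the class `LinkWatchdog`, the function `supervise`, the retry count, and the choice of a timeout equal to one packet period are all assumptions made for the example.

```python
import time

HEARTBEAT_PERIOD_S = 0.100  # from the text: one packet at least every 100 ms


class LinkWatchdog:
    """Remembers when the last packet arrived and reports loss of contact."""

    def __init__(self, timeout_s=HEARTBEAT_PERIOD_S, clock=time.monotonic):
        self._timeout_s = timeout_s   # assumed equal to one packet period
        self._clock = clock           # injectable so the logic can be tested
        self._last_seen = clock()

    def packet_received(self):
        """Call whenever any packet arrives from the peer."""
        self._last_seen = self._clock()

    def link_alive(self):
        """True while the last packet is no older than the timeout."""
        return (self._clock() - self._last_seen) <= self._timeout_s


def supervise(watchdog, max_reconnects, reconnect):
    """Return 'ok', 'reconnected', or 'exit' for one supervision step.

    `reconnect` is a hypothetical callable that returns True on success.
    """
    if watchdog.link_alive():
        return "ok"
    for _ in range(max_reconnects):
        if reconnect():
            watchdog.packet_received()
            return "reconnected"
    return "exit"
```

In practice a timeout somewhat longer than one period would tolerate jitter on the wireless link; the value used here simply mirrors the 100-ms figure in the text.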

Fig. 6 AMR GUI showing laser-based map of navigational work area


WLAN set at different monitoring points within the AMR work area in a manufacturing environment, and for reconstructing these images for viewing almost without any perceptible delay [4]. Thus, the operator can track the AMR and, in case of emergency, abort the mission.
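Sending JPEG images frame by frame over a stream connection requires the receiver to know where one frame ends and the next begins. The sketch below shows one common way to do this, a 4-byte length prefix in front of each JPEG payload. The framing scheme and the names `pack_frame` and `FrameReader` are assumptions for illustration; the paper does not describe the wire format actually used on AMR.

```python
import struct

HEADER = struct.Struct("!I")   # 4-byte big-endian payload length (assumed framing)
JPEG_SOI = b"\xff\xd8"         # JPEG start-of-image marker
JPEG_EOI = b"\xff\xd9"         # JPEG end-of-image marker


def pack_frame(jpeg_bytes):
    """Prefix one JPEG image with its length so it can be sent on a stream."""
    return HEADER.pack(len(jpeg_bytes)) + jpeg_bytes


class FrameReader:
    """Reassembles complete JPEG frames from arbitrarily sized chunks."""

    def __init__(self):
        self._buffer = bytearray()

    def feed(self, chunk):
        """Add received bytes and return the list of frames completed so far."""
        self._buffer.extend(chunk)
        frames = []
        while len(self._buffer) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._buffer)
            end = HEADER.size + length
            if len(self._buffer) < end:
                break  # wait for the rest of this frame
            frame = bytes(self._buffer[HEADER.size:end])
            del self._buffer[:end]
            # Keep only payloads that look like a whole JPEG image.
            if frame.startswith(JPEG_SOI) and frame.endswith(JPEG_EOI):
                frames.append(frame)
        return frames
```

With this scheme the sender can write the same packed frame to each connected viewer, and each viewer decodes a frame as soon as `feed` returns it.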

4 AMR navigation

Given an a priori layout of the work area as shown in Fig. 8, AMR carries out its mission by navigating from one workstation to another, transporting jobs and tools in the order set by the operator. In case of any obstacle, AMR automatically bypasses the obstacle and reorients itself to reach its given destination. Localization is also an integral part of autonomous navigation, as at every instant the vehicle should know where it is with respect to its surroundings, how far it has traversed from its starting position, and how far it needs to travel to reach its goal. This is where the role of exteroceptive sensors becomes dominant. As all proprioceptive sensors accrue errors due to slippage, inherent sensor bias, etc., for accurate localization their data needs to be fused with comparable data from exteroceptive sensors, i.e., compass, GPS, and beacons. AMR's compass gives a better estimate of its orientation than can be obtained solely from encoder feedback.

4.1 Obstacle avoidance and AMR localization through map building

The objective of mobile robot localization with simultaneous map building is to enable a mobile robot to build a map of an unknown environment while concurrently using that map to localize itself. This is one of the important topics in the field of mobile robot navigation, as this method allows a mobile robot to be deployed easily with very little preparation. The SICK laser rangefinder scans a semicircle

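Fig. 8 Workspace of AMR (labelled elements: Warehouse, Raw Material Store, Tool Store, Machine Tool, Pallet Box, Operator, Work Stations 1–5, R1–R3, Goal (Target))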

Similarly, COM2 is used for laser commands from the host and laser data feedback from AMR, as shown in Fig. 7. As is also shown in Fig. 7, SBC2 of AMR acts as a server for manipulator control, with USB port 1 connected to the manipulator and USB port 2 connected to the CMOS camera mounted on the manipulator. Once AMR reaches the given workstation, the remote host activates SBC2 to carry on with the manipulator pick-and-place operation. During navigation, a Bumblebee stereovision camera, mounted in front of AMR and connected through the IEEE-1394 interface for image transmission from SBC2, continuously sends images of the navigation scene to the display units so that the operator can monitor the vehicle remotely and, in case of emergency, stop the vehicle in its tracks. The remote host also has a joystick mode for added flexibility. The operator can use the 2-DOF joystick attached to the USB port of the remote host to maneuver the robot with ease. The operator can always switch between autonomous mode and joystick mode, either to retrieve AMR after a mission or after aborting a mission for emergency reasons. The operator can also bring the AMR back for recharging or set it at a desired location to start a mission.

3.3.1 JPEG image transfer from AMR to image display units over WLAN

Reliable transmission and reception of images is imperative for mobile robot perception. Most transmission schemes take advantage of the popular JPEG image format [11, 13], as the responsiveness gained from rapid image transmission is more important than perfect image fidelity: images are already distorted by lossy compression, and relatively few images are closely examined. Robustness is therefore vital for rapid image transmission and reconstruction in a mobile robot network. Hence, we also take advantage of the most popular and widely supported JPEG image file format for transmitting full-color images of 1024 × 768 resolution, as grabbed by a stereovision camera mounted in front of AMR, frame by frame from AMR to multiple clients over

Fig. 9 Scheme for obstacle avoidance using a fuzzy logic controller (input variables: obstacle distance d and obstacle angle; output variable: deviation angle)


Fig. 10 Manipulator setup for material handling using template matching

at about 1 Hz and returns the range of the closest laser reflection within every degree. The LRF is used to build the map of the workspace of AMR [3]. AMR localizes itself in that workspace during navigation using a feature extraction algorithm. By examining typical scans of the laser rangefinder, the desired features are segregated into small clusters. In order for the sensor data to be used by the map-building routine, the range and bearing of these small clusters are extracted. The feature extraction algorithm relies on the size of the clusters and the free space around them. A threshold defines how large the free space around a cluster has to be while AMR navigates through the workspace avoiding obstacles [2]. Ultrasonic range finders are used for detecting obstacles by finding the range and bearing of the obstacles. For safe and effective navigation, a fuzzy logic controller (FLC) is used for obstacle avoidance by detecting the most critical obstacle. In our case, the FLC is a two-input, one-output system. The antecedents are the nearest obstacle distance and the obstacle angle from the robot. The FLC processes the obstacle data and finds the angle by which the robot should deviate from its current trajectory, as illustrated in Fig. 9. A Mamdani-type fuzzy inference engine is used for determining the deviation angle for avoiding the critical obstacle [6, 7].
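A two-input, one-output Mamdani controller of the kind described above can be sketched in a few lines. The membership functions, the four rules, the sign convention (positive angles to the left), and the centroid defuzzification over 1° samples are all illustrative assumptions; the paper does not give the rule base or membership functions used on AMR.

```python
def trapezoid(x, a, b, c, d):
    """Membership that is 0 outside [a, d], 1 on [b, c], and linear in between."""
    if x < a or x > d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


# Hypothetical output sets for the deviation angle in degrees (positive = turn left).
TURN_RIGHT = (-90, -60, -30, 0)
KEEP = (-30, 0, 0, 30)
TURN_LEFT = (0, 30, 60, 90)


def deviation_angle(distance_m, obstacle_angle_deg):
    """Mamdani inference: min for AND, clipped consequents, max aggregation, centroid."""
    d = min(max(distance_m, 0.0), 10.0)            # sonar range tops out at 10 m
    a = min(max(obstacle_angle_deg, -90.0), 90.0)  # obstacle bearing, positive = left

    # Fuzzify the two antecedents (hypothetical membership functions).
    near = trapezoid(d, 0, 0, 0.5, 1.5)
    far = trapezoid(d, 0.5, 1.5, 10, 10)
    on_right = trapezoid(a, -90, -90, -30, 0)
    ahead = trapezoid(a, -30, 0, 0, 30)
    on_left = trapezoid(a, 0, 30, 90, 90)

    # Rule base: (firing strength, consequent set).
    rules = [
        (min(near, on_left), TURN_RIGHT),
        (min(near, on_right), TURN_LEFT),
        (min(near, ahead), TURN_LEFT),   # arbitrary tie-break for a head-on obstacle
        (far, KEEP),
    ]

    # Aggregate the clipped consequents and take the centroid over 1-degree samples.
    numerator = denominator = 0.0
    for y in range(-90, 91):
        mu = max(min(strength, trapezoid(y, *shape)) for strength, shape in rules)
        numerator += y * mu
        denominator += mu
    return numerator / denominator if denominator else 0.0
```

With these assumed sets, a distant obstacle leaves the heading unchanged, while a close obstacle on the left yields a deviation of about 45° to the right, and vice versa.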

correlation-based adaptive predictive search (CAPS) method, a fast search algorithm in template matching, is used for job identification [12]. The CAPS method justifiably selects a set of search steps rather than a consecutive point-to-point search for faster job identification. Search steps, i.e., either coarse search or fine search, are selected by calculating the correlation coefficient between the template and the image. In our implementation, as the arm moves over the worktable, the camera scans the worktable. As it approaches the job, with each scan, a coarse search through pre-calculated vertical and horizontal steps determines the correlation coefficient with respect to the stored template in order to find the tentative pose of the job; the pose information is transformed first with respect to the camera and then with respect to the manipulator base. Next, the camera is moved to this position. When the correlation coefficient is greater than the matching threshold value, which is based on the statistics of the template, the actual pose of the job is calculated through the fine search technique [4]. The manipulator gripper is then moved to that position for picking up the job. Adaptive thresholding is used for the dynamic image segmentation required for the parametric calculations for proper gripping of the object. Using the gray-level distribution of an image, the neighborhood around the highest peak of the histogram is chosen as the threshold region. For real-time processing, a variation of Otsu's method [9], which chooses the optimal thresholds by maximizing the between-class variance with a heuristic search method, is used in this region for adaptive thresholding [8]. Once the job is identified through parametric calculations, the gripper picks the job and puts it on the platform. In this way, the jobs are stacked on the vehicle and transported to the next workstation, where they are unloaded using the reverse operation, and AMR continues with its next mission.
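The coarse-then-fine idea described above can be illustrated with a simplified two-pass search. This sketch is not the CAPS algorithm of [12], which derives its step sizes from the statistics of the template; here the step and the matching threshold are fixed, hypothetical parameters, and images are plain lists of rows so that the example needs no external libraries.

```python
import math


def correlation(image, template, top, left):
    """Correlation coefficient between the template and the image window at (top, left)."""
    h, w = len(template), len(template[0])
    window = [image[top + r][left + c] for r in range(h) for c in range(w)]
    pattern = [value for row in template for value in row]
    mean_w = sum(window) / len(window)
    mean_p = sum(pattern) / len(pattern)
    num = sum((a - mean_w) * (b - mean_p) for a, b in zip(window, pattern))
    den = math.sqrt(sum((a - mean_w) ** 2 for a in window)
                    * sum((b - mean_p) ** 2 for b in pattern))
    return num / den if den else 0.0   # flat windows carry no match information


def coarse_to_fine_search(image, template, step=2, threshold=0.3):
    """Return (score, top, left) of the best match, or None if nothing passes the threshold."""
    h, w = len(template), len(template[0])
    max_top, max_left = len(image) - h, len(image[0]) - w

    # Coarse pass: evaluate only every `step`-th row and column.
    coarse = max(
        (correlation(image, template, t, l), t, l)
        for t in range(0, max_top + 1, step)
        for l in range(0, max_left + 1, step)
    )
    if coarse[0] < threshold:
        return None

    # Fine pass: point-to-point search around the best coarse position.
    _, top, left = coarse
    reach = step - 1
    return max(
        (correlation(image, template, t, l), t, l)
        for t in range(max(0, top - reach), min(max_top, top + reach) + 1)
        for l in range(max(0, left - reach), min(max_left, left + reach) + 1)
    )
```

The coarse pass only works if the correlation falls off gradually around the true position, so the step has to be small relative to the template; a smooth template tolerates larger steps than a finely textured one.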

6 Conclusion To fabricate a vehicle, incorporating various equipments as described above and making sure that it is balanced, is a challenge by itself. In addition, special care is taken to prevent electro-magnetic interference amongst various subassemblies such as sonar subsystem, manipulator subsystem, motor-controllers, etc. Moreover, as described above, autonomous navigation in an unstructured environment requires synchronization of different processes in a distributed architecture, while maintaining the integrity of the network. But this project also proves the advantages of a distributed architecture, which includes performing various complex tasks without any perceptible delay and safeguarding the total system

5 AMR material handling system AMR material handling system consists of an Inteliteks 5 DOF Scorbot-ER-4u manipulator with a CMOS camera, mounted on the wrist of the manipulator as shown in Fig. 10. Once the AMR navigates its way to the target workstation as depicted in Fig. 8, the manipulator routine is invoked. SBC2, acting as the server, turns on the control of the manipulator control box, which in turn activates the manipulator. The camera grabs the scene and a variation of

542

Int J Adv Manuf Technol (2008) 38:536542 3. Datta S, Banerji D, Mukherjee R (2006) Mobile robot localization with map building and obstacle avoidance for indoor navigation. IEEE International Conference on Industrial Technology (ICIT 06), Mumbai, December 1517 4. Datta S, Ray R (2007) AMR vision system for perception and job identification in a manufacturing environment. Chapter 27 Int J Adv Robotic Syst. Special Issue on Vision Systems, April 5. Katz et al (2006) The UMass mobile manipulator uman: an experimental platform for autonomous mobile manipulation. Workshop on manipulation for human environments at robotics: science and systems. Philadelphia, USA, August 6. Klir GJ, Yuan B (1995) Fuzzy sets and fuzzy logic-theory and applications. Prentice Hall, Upper Saddle River, NJ 7. Kosko B (1991) Neural networks and fuzzy systems. Prentice Hall, Upper Saddle River, NJ 8. Liao P, Chen T, Chung P (2001) A fast algorithm for multilevel thresholding. J Inf Sci Eng 17:713727 9. Otsu N (1979) A threshold selection method from gray-level histogram. SMC-9(1)6266 10. Sen S, Taktawala PK, Pal PK (2004) Development of a rangesensing, indoor, mobile robot with wireless Ethernet connectivity. In: Shome SN (ed) Proc Nat Conf on Adv Manuf Robotics. ISBN 81-7764-671-0, CMERI, January 2004. Allied Publishers Pvt. Ltd., Durgapur, pp 310 11. Schaefer G (2001) JPEG image retrieval by simple operators. CBM 01, Brescia, Italy, September 1921 12. Shijun S et al (2003) Fast template matching using correlationbased adaptive predictive search. Int J Imaging Technol 13:169 178, 2004 13. Wallace GK (1991) The JPEG still picture compression standard. Communications of the ACM 34(4):3044

against major failure that may occur when the total burden rests on a single point of operation. Overall, AMRs architecture is an open architecture with a user-friendly GUI, so that it can be deployed easily without worrying too much about its internal operations. This is particularly suitable in a shop floor or warehouse, where the operator can deploy it for material handling with minimal knowledge and preparation.

Acknowledgments The authors would like to thank the members of the project team on Autonomous Mobile Robot under CSIR network project on Advanced Manufacturing Technology for their help and support. The authors would like to express their sincere gratitude to the director of the institute for his assent in publishing this work.

References

1. Bischoff R, Volker G (1998) Vision-guided intelligent robots for automating manufacturing, materials handling and services. WESIC98 Workshop on European Scientific and Industrial Collaboration on Promoting Advanced Technologies in Manufacturing, Girona, June 2. Csorba M (1997) Simultaneous localization and map building. PhD Thesis, Oxford University Robotics Research Group, Department of Engineering Science
