International Journal of Computer Trends and Technology - Volume 3, Issue 2 - 2012

ISSN: 2231-2803 http://www.internationaljournalssrg.org Page 258

Performance analysis of Linear appearance based

algorithms for Face Recognition

Steven Lawrence Fernandes

Asst. Professor,

Dept of E & C,

Sree Devi Institute of Technology,

Mangalore, India

Abstract: Analysing the face recognition rate of various current face recognition algorithms is critical in developing new robust algorithms. In this paper we present a performance analysis of Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA) and Locality Preserving Projections (LPP) for face recognition. This analysis was carried out on various current PCA, LDA and LPP based face recognition algorithms using standard public databases. Among the PCA algorithms analyzed, manual face localization used on the ORL and SHEFFIELD databases with 100 components gives the best face recognition rate of 100%; the next best is 99.70% using PCA based Immune Networks (PCA-IN) on the ORL database. Among the LDA algorithms analyzed, Illumination Adaptive Linear Discriminant Analysis (IALDA) gives the best face recognition rate of 98.9% on the CMU PIE database; the next best is 98.125% using Fuzzy Fisherface through genetic algorithm on the ORL database. Among the LPP algorithms analyzed, Subspace Discriminant LPP (SDLPP) provides the best face recognition rate of 98.38% on the ORL database; the next best is 97.5% using Contourlet-based Locality Preserving Projection (CLPP) on the ORL database.

Keywords: face recognition; Principal Component Analysis; Linear Discriminant Analysis; Locality Preserving Projections; PCA-Immune Network; Illumination Adaptive LDA; Fisher Discriminant; Subspace Discriminant LPP; Contourlet-based Locality Preserving Projection.

I. INTRODUCTION

Facial recognition methods can be divided into appearance-based and model-based algorithms. Appearance-based methods represent a face in terms of several raw intensity images. An image is considered as a high-dimensional vector. Statistical techniques are usually used to derive a feature space from the image distribution. The sample image is then compared to the training set.

Appearance methods can be classified as linear or non-linear. Linear appearance-based methods perform a linear dimension reduction. The face vectors are projected onto the basis vectors, and the projection coefficients are used as the feature representation of each face image; examples are PCA, LDA, and LPP. Non-linear appearance methods are more complicated. Linear subspace analysis is an approximation of a nonlinear manifold. Kernel PCA (KPCA) [23] is a widely used non-linear method.

Model-based approaches can be 2-dimensional or 3-dimensional. These algorithms try to build a model of a human face. These models are often morphable. A morphable model allows faces to be classified even when pose changes are present; examples are Elastic Bunch Graph Matching [24] and 3D Morphable Models [25].

Important information may be contained in the high-order relationships among pixels, so Independent Component Analysis (ICA) [26-27] appears to be a promising face feature extraction method. However, ICA algorithms are iterative and time consuming, and they have difficulty converging.

PCA is a transformation that chooses a new coordinate system for the data set such that the greatest variance by any projection of the data set comes to lie on the first axis (the first principal component), the second axis corresponds to the maximum remaining variance in the direction orthogonal to the first axis, and so on. The fundamental idea behind PCA is that if there is a series of multidimensional data vectors representing objects which have similarities, it is possible to use a transformation matrix to create a reduced space that accurately describes the original multidimensional vectors.

In LDA the original data is transformed into a lower dimensional space (feature space) such that the ratio of the between-class scatter to the within-class scatter is maximized, i.e. the between-class scatter is maximized while the within-class scatter is minimized, and thus maximum discrimination is achieved.

LPP, proposed by He and Niyogi, is an alternative to PCA. LPP is a linear manifold learning approach. It can be viewed as a linear approximation of Laplacian eigenmaps. The first step of LPP is to generate an unsupervised neighborhood graph on the training data; it then finds an optimal locality preserving projection matrix under a certain criterion.

In this paper we report a performance analysis of various current PCA, LDA and LPP based algorithms for face recognition. The evaluation parameter for the study is the face recognition rate on various standard public databases. The remainder of the paper is organized as follows: Section II provides a brief overview of PCA, Section III presents the PCA algorithms analysed, Section IV provides a brief overview of LDA, Section V presents the LDA algorithms analysed, Section VI provides a brief overview of LPP, and Section VII presents the LPP algorithms analysed. Section VIII presents the performance analysis of the various PCA, LDA and LPP based algorithms, and finally Section IX draws the conclusion.


II. PRINCIPAL COMPONENT ANALYSIS (PCA)

Consider the training sample set of face images $F = \{x_1, x_2, \ldots, x_M\}$, where $x_i \in R^n$, $i = 1, \ldots, M$, corresponds to the lexicographically ordered pixels of the $i$th face image, and where there are $M$ face images. PCA tries to map the original $n$-dimensional image space into an $m$-dimensional feature space, where $m \ll n$. The new feature vectors $y_k \in R^m$ are defined by the following linear transform:

$$y_k = W^T x_k, \quad k = 1, \ldots, M$$

where $W = [w_1, w_2, \ldots, w_m]$, $w_i \in R^n$. The columns $w_i$ are orthogonal to each other, and $w_i$ is the eigenvector of the total scatter matrix $S_T$ corresponding to the $i$th largest eigenvalue. The total scatter matrix is defined as

$$S_T = \sum_{k=1}^{M} (x_k - \mu)(x_k - \mu)^T$$

where $\mu$ is the mean value of all training samples.
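The definitions above can be illustrated with a minimal NumPy sketch. It is not taken from any of the surveyed papers: the function name `pca_fit`, the random stand-in data and the sizes (64 pixels, 10 images, 3 components) are assumptions chosen only for illustration. Images are stored as columns of `X`, the total scatter matrix $S_T$ is formed explicitly, and $W$ collects the eigenvectors of its $m$ largest eigenvalues.

```python
import numpy as np

def pca_fit(X, m):
    """Return W (n x m): eigenvectors of the total scatter matrix S_T
    for the m largest eigenvalues. X is (n x M), one image per column."""
    mu = X.mean(axis=1, keepdims=True)        # mean of all training samples
    Xc = X - mu
    S_T = Xc @ Xc.T                           # total scatter matrix (n x n)
    eigvals, eigvecs = np.linalg.eigh(S_T)    # symmetric solver, ascending order
    order = np.argsort(eigvals)[::-1][:m]     # indices of the m largest eigenvalues
    return eigvecs[:, order]

# Hypothetical stand-in data: M = 10 "images" of n = 64 pixels each.
rng = np.random.default_rng(0)
X = rng.normal(size=(64, 10))

W = pca_fit(X, m=3)
Y = W.T @ X                                   # y_k = W^T x_k for every column k
```

For real face images $n$ is large, so forming the $n \times n$ matrix directly is costly; the sketch keeps the explicit form only so that it mirrors the equations.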

III. PCA ALGORITHMS ANALYZED

A. PCA and Support Vector Machine (SVM)

PCA is used to extract the essential characteristics of face images, and SVM is used as the classifier. A one-against-one classification strategy for multi-class pattern recognition is used, based on 2D static face images [1].

B. Incremental Two-Dimensional Two-Directional

Principal Component Analysis (I(2D)2PCA)

Feature extraction method that combines the advantages of Two-Directional Principal Component Analysis ((2D)2PCA) and Incremental PCA (IPCA). I(2D)2PCA has a lower computational load than IPCA and wastes less memory than (2D)2PCA [2].

C. Infrared face recognition based on the Compressive

Sensing (CS) and PCA

The facial image is normalized and fast compressive sensing is then applied to the normalized image. PCA is applied to the non-adaptive linear projections obtained from CS, and the image is then classified using the 3-nearest neighbor method [3].

D. Symmetrical Weighted Principal Component

Analysis (SWPCA)

SWPCA applies a mirror transform to facial images and obtains the odd and even symmetrical images based on the odd-even decomposition theory. Weighted Principal Component Analysis (WPCA) is performed on the odd and even symmetrical training sample sets respectively to extract facial image features, and a nearest neighbor classifier is employed for classification [4].
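The odd-even decomposition step can be sketched as follows. The survey does not give the formulas used in [4], so this assumes the standard decomposition: the mirror transform is a left-right flip, the even image is half the sum of the image and its mirror, and the odd image is half their difference. The function name `odd_even_decompose` and the toy 3 x 4 "image" are hypothetical.

```python
import numpy as np

def odd_even_decompose(image):
    """Split an image into even and odd symmetrical parts about its
    vertical axis (assumed form of the odd-even decomposition)."""
    mirror = image[:, ::-1]            # mirror transform: left-right flip
    even = (image + mirror) / 2.0      # unchanged by mirroring
    odd = (image - mirror) / 2.0       # changes sign under mirroring
    return even, odd

# Toy stand-in for a face image.
image = np.arange(12, dtype=float).reshape(3, 4)
even, odd = odd_even_decompose(image)
```

The two parts sum back to the original image, so each training image yields an even and an odd sample for the subsequent WPCA step.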

E. PCA based Immune Networks (PCA-IN)

PCA is used to obtain the eigenvalues and eigenvectors of the face images, and a randomly selected single training sample is then input into the immune networks, which are optimized using genetic algorithms [5]. The experiment is repeated 30 times and the Average Recognition Rate (ARR) is obtained.

F. Manual face localization

This method localizes the face and eliminates the background information from the image so that the majority of the cropped image consists of the facial pattern. The curvelet transform is used to transform the image into a new domain and to calculate initial feature vectors. The feature vectors are then dimensionally reduced using Bidirectional Two-Dimensional Principal Component Analysis (B2DPCA) and classified using an Extreme Learning Machine (ELM) [9].

G. Fractional Fourier transform (FRFT) and PCA

The face images are transformed into the FRFT domain. PCA is used to reduce the dimension of the face images, and the Mahalanobis distance is used for classification [10].

H. Supervised learning framework for PCA-based face

recognition using Genetic Network Programming (GNP)

fuzzy data mining (GNP-FDM)

Genetic based Clustering Algorithm (GCA) is used to

reduce the number of classes. A Fuzzy Class Association

Rules (FCARs) based classifier is applied to mine the

inherent relationships between eigen-vectors [11].

I. PCA and minimum distance classifier

Different facial images of a single human face are taken together as a cluster. PCA is applied for feature extraction. A minimum distance classifier is used for recognition, which avoids the use of a threshold value that changes under different distance classifiers [12].

IV. LINEAR DISCRIMINANT ANALYSIS (LDA)

Let us consider a set of $N$ sample images $\{x_1, x_2, \ldots, x_N\}$ taking values in an $n$-dimensional image space, and assume that each image belongs to one of $c$ classes $\{C_1, C_2, \ldots, C_c\}$. Let $N_i$ be the number of samples in class $C_i$ $(i = 1, 2, \ldots, c)$, let $\mu$ be the mean of all samples, and let

$$\mu_i = \frac{1}{N_i} \sum_{x \in C_i} x$$

be the mean of the samples in class $C_i$. Then the between-class scatter matrix $S_b$ is defined as

$$S_b = \frac{1}{N} \sum_{i=1}^{c} N_i (\mu_i - \mu)(\mu_i - \mu)^T$$

The within-class scatter matrix $S_w$ is defined as

$$S_w = \frac{1}{N} \sum_{i=1}^{c} \sum_{x_k \in C_i} (x_k - \mu_i)(x_k - \mu_i)^T$$

In LDA, the projection $W_{opt}$ is chosen to maximize the ratio of the determinant of the between-class scatter matrix of the projected samples to the determinant of the within-class scatter matrix of the projected samples:

$$W_{opt} = \arg\max_{W} \frac{|W^T S_b W|}{|W^T S_w W|} = [w_1, w_2, \ldots, w_d]$$

where $\{w_i \mid i = 1, 2, \ldots, d\}$ is the set of generalized eigenvectors of $S_b$ and $S_w$ corresponding to the $d$ largest generalized eigenvalues $\{\lambda_i \mid i = 1, 2, \ldots, d\}$, i.e.,

$$S_b w_i = \lambda_i S_w w_i, \quad i = 1, 2, \ldots, d.$$
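A minimal NumPy sketch of these definitions is given below. It is an illustration under stated assumptions rather than the implementation of any surveyed method: the function name `lda_fit`, the small ridge term `reg` (added so that $S_w$ can be inverted) and the synthetic two-class data are all hypothetical. Samples are stored as columns of `X`.

```python
import numpy as np

def lda_fit(X, labels, d, reg=1e-6):
    """Return W (n x d): generalized eigenvectors of (S_b, S_w) for the
    d largest eigenvalues. X is (n x N), labels has length N."""
    n, N = X.shape
    mu = X.mean(axis=1, keepdims=True)             # mean of all samples
    S_b = np.zeros((n, n))
    S_w = np.zeros((n, n))
    for c in np.unique(labels):
        Xc = X[:, labels == c]                     # samples of class c
        N_i = Xc.shape[1]
        mu_i = Xc.mean(axis=1, keepdims=True)      # class mean
        S_b += N_i * (mu_i - mu) @ (mu_i - mu).T
        S_w += (Xc - mu_i) @ (Xc - mu_i).T
    S_b /= N
    S_w /= N
    # Solve S_b w = lambda S_w w via S_w^{-1} S_b; reg * I is an assumed
    # regularizer that keeps S_w invertible when samples are scarce.
    M = np.linalg.solve(S_w + reg * np.eye(n), S_b)
    eigvals, eigvecs = np.linalg.eig(M)
    order = np.argsort(eigvals.real)[::-1][:d]     # d largest eigenvalues
    return eigvecs[:, order].real

# Synthetic data: two classes separated along the first axis, with
# large within-class spread along the second axis.
rng = np.random.default_rng(0)
class0 = rng.normal(loc=[0.0, 0.0], scale=[0.1, 1.0], size=(50, 2))
class1 = rng.normal(loc=[3.0, 0.0], scale=[0.1, 1.0], size=(50, 2))
X = np.vstack([class0, class1]).T                  # shape (2, 100)
labels = np.array([0] * 50 + [1] * 50)

W = lda_fit(X, labels, d=1)
```

On this data the recovered direction lies almost entirely along the first axis, where the class means differ and the within-class scatter is small.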

V. LDA ALGORITHMS ANALYZED

A. Regularized-LDA (R-LDA)

R-LDA is used to extract low-dimensional discriminant features from high-dimensional training images, and these features are then used by a Probabilistic Reasoning Model (PRM) for classification [13].

B. Multi-Feature Discriminant Analysis (MFDA)

Feature extraction method that combines the advantages of Two-Directional Principal Component Analysis ((2D)2PCA) and Incremental PCA (IPCA). I(2D)2PCA has a lower computational load than IPCA and wastes less memory than (2D)2PCA [2].

C. Rearranged Modular 2DLDA (Rm2DLDA)

Two-dimensional linear discriminant analysis (2DLDA) has lower time complexity and implicitly avoids the small sample size problem encountered in classical LDA. Rm2DLDA was developed on this basis. It is based on the idea of dividing an image into sub-images and then concatenating them to form a wide image matrix [15].

D. Illumination Adaptive Linear Discriminant Analysis

(IALDA)

Images of many subjects under different lighting conditions are used to train an illumination direction classifier and a set of LDA projection matrices. The illumination direction of a test sample is then estimated by the illumination direction classifier, and the corresponding LDA feature, which is robust to the illumination variation between images under the standard lighting conditions and the estimated lighting conditions, is extracted [16].

E. Fuzzy Fisherface (FLDA) through genetic algorithm

A genetic algorithm searches for the optimal parameters of the membership function. The optimal number of nearest neighbors to be considered during training is also found through the use of genetic algorithms [17].

F. Semi-supervised face recognition algorithm based on LDA self-training

This method augments a manually labeled training set with new data from an unlabeled auxiliary set to improve recognition performance [18]. Because it carries no manual labeling cost, such auxiliary data is often easily acquired, but it is not normally useful for learning.

G. Random sampling LDA

Random sampling LDA was introduced to reduce the influence of unimportant or redundant features on the variables generated by PCA. By incorporating feature selection, Feature Selection Random Sampling LDA for face recognition (FS_RSLDA) was introduced. In this algorithm the unimportant or redundant features are removed first, which improves the weak classifiers obtained [19].

H. Revised Non-negative Matrix Factorization (NMF) with LDA based color face recognition

A block diagonal constraint is imposed on the base image matrix and the coefficient matrix in addition to the constraints of traditional NMF. LDA is then applied to the factorization coefficients to fuse class information [20].

I. Layered Linear Discriminant Analysis (L-LDA)

L-LDA decreases the False Acceptance Rate (FAR) by reducing the face dataset to a very small size. It is insensitive to both small sample size (SSS) and large face variations due to light or facial expressions, because it optimizes the separability criteria. Hence it provides a significant performance gain, especially on databases of similar faces and on small sample size (SSS) problems [21].

VI. LOCALITY PRESERVING PROJECTIONS

LPP is an unsupervised manifold dimensionality reduction approach, which aims to find the optimal projection matrix $W$ by minimizing the following criterion function:

$$J_1(W) = \sum_{i,j} \| y_i - y_j \|^2 S_{ij}$$

where $S_{ij}$ is the weight between samples $x_i$ and $x_j$ in the neighborhood graph. Substituting $y_i = W^T x_i$ into the above objective function yields, by direct computation,

$$J_1(W) = 2\,\mathrm{trace}(W^T X L X^T W)$$

where $L = D - S$ is called the Laplacian matrix and $D$ is a diagonal matrix defined by

$$D = \mathrm{diag}\{D_{11}, D_{22}, \ldots, D_{NN}\}, \quad D_{ii} = \sum_{j} S_{ij}.$$

Matrix $D$ provides a natural measure on the data points. The bigger the value $D_{ii}$ (corresponding to $y_i$) is, the more important $y_i$ is. Therefore, the projection matrix $W$ should simultaneously maximize the following constraint objective function:

$$J_2(W) = \sum_{i=1}^{N} y_i^T D_{ii} y_i = \mathrm{trace}(Y^T D Y) = \mathrm{trace}(W^T X D X^T W)$$

with $Y = X^T W$. Solving the problems $\min J_1(W)$ and $\max J_2(W)$ simultaneously is equivalent to minimizing the following criterion function:

$$J(W) = \frac{\mathrm{trace}(W^T X L X^T W)}{\mathrm{trace}(W^T X D X^T W)}$$

The optimal locality preserving projection matrix

$$W_{LPP} = \arg\min_{W \in R^{d \times l}} J(W)$$

can be obtained by solving the following generalized eigenvalue problem:

$$X L X^T W = (X D X^T) W \Lambda$$

where $\Lambda$ is a diagonal eigenvalue matrix. In most cases, however, the number of training samples is smaller than the dimension of the feature vector, i.e. $N \ll d$. When this small sample size (3S) problem occurs, the matrix $X D X^T$ is not full rank.
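The procedure can be sketched in NumPy as below. This is a hedged illustration, not the algorithm of any surveyed paper: the function name `lpp_fit`, the k-nearest-neighbor graph with heat-kernel weights, the parameters `k` and `t`, and the ridge term `reg` are assumptions (the text above does not fix how $S$ is built). Samples are columns of `X`, and the projection is formed from the eigenvectors with the smallest generalized eigenvalues.

```python
import numpy as np

def lpp_fit(X, l, k=3, t=1.0, reg=1e-6):
    """Return (W, eigenvalues): W is (d x l), built from the l smallest
    generalized eigenvalues of X L X^T w = lambda X D X^T w.
    X is (d x N), one sample per column."""
    d, N = X.shape
    sq = np.sum(X ** 2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2 * X.T @ X, 0.0)
    np.fill_diagonal(dist2, np.inf)            # a point is not its own neighbor
    S = np.zeros((N, N))
    for i in range(N):
        nbrs = np.argsort(dist2[i])[:k]        # k nearest neighbors of sample i
        S[i, nbrs] = np.exp(-dist2[i, nbrs] / t)   # assumed heat-kernel weights
    S = np.maximum(S, S.T)                     # symmetrize the graph
    D = np.diag(S.sum(axis=1))                 # D_ii = sum_j S_ij
    L = D - S                                  # Laplacian matrix
    A = X @ L @ X.T
    B = X @ D @ X.T + reg * np.eye(d)          # reg * I guards against rank deficiency
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(B, A))
    order = np.argsort(eigvals.real)[:l]       # l smallest eigenvalues
    return eigvecs[:, order].real, eigvals.real[order]

# Hypothetical stand-in data: N = 30 samples of dimension d = 5.
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 30))

W, vals = lpp_fit(X, l=2)
Y = W.T @ X                                    # y_i = W^T x_i
```

Here $N > d$, so $X D X^T$ is full rank; in the small sample size case discussed above the ridge term only papers over the singularity, which is the issue the regularized and subspace variants in Section VII address.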


VII. LPP ALGORITHMS ANALYZED

A. Bilateral Two-Dimensional LPP

B2DLPP is based on 2D image matrices rather than column vectors, so the image matrix does not need to be transformed into a long vector before feature extraction. The advantage is that 2D image matrices can be effectively compressed in both the horizontal and vertical directions. Classification uses the F-norm measure [30].

B. Regularized LPP (RLPP)

RLPP uses a supervised graph and a regularization technique, which not only overcomes the singularity problem of LPP but also finds the optimal LPP projection matrix in the entire input space [31].

C. Support Vector Machines (SVM) and LPP

This is a combined semi-supervised face recognition method, which constructs a data model on a video sequence to unearth the space-time information. It then uses semi-supervised LPP to build a nearest neighbour graph which models the inherent geometrical structure of the face space. SVM is applied to find a separating hyperplane for the training data set and to predict the labels of the testing face data [32].

D. Subspace Discriminant LPP (SDLPP)

Based on a discriminant graph and a modified LPP criterion, the SDLPP method tackles the 3S issue of LPP and gives an optimal algorithm by introducing corresponding eigen-systems [33]. The learned projection feature vectors are linearly independent and make the modified LPP criterion function reach its maximum.

E. Discrete sine transform (DST)

A DST-feature based LPP algorithm is used for face recognition. To obtain the 2D-DST facial feature, 1D-DST is performed along the two directions of a facial image matrix, namely the horizontal and vertical directions. A column DST-feature vector is then formed by scanning the 2D-DST coefficients along a zigzag route starting from the top left corner, and LPP can subsequently be performed directly in the DST domain [34].

F. Contourlet-based Locality Preserving Projection

(CLPP)

CLPP is used for dimensionality reduction and feature extraction. The main advantage of CLPP over LPP is that the former realizes dimensionality reduction more effectively than the latter. This can greatly reduce the complexity and avoid the disadvantages of the PCA pre-processing step [35].

G. Enhanced Supervised Locality Preserving

Projections (ESLPP)

An extension to LPP named ESLPP allows both locality

and class label information to be incorporated. This improves

the performance of classification. ESLPP uses similarity

based on robust path instead of Gaussian heat kernel

similarity. It can capture the underlying geometric

distribution of samples even when there are noise and outliers

[36].

H. Two-Dimensional Discriminant Locality Preserving

Projection (2DDLPP)

2DDLPP is a 2D-based feature extraction method, which can directly process the 2D image matrix without PCA pre-processing. Hence the valid information of human face images can be retained. 2DDLPP aims to preserve both the local manifold structure information and the discriminant information [37].

I. Fractal Locality Preserving Projections (FLPP)

FLPP calculates the fractal codes of face images, then uses the LPP method to analyse the manifold structure and perform face recognition [38].

J. Multilinear Principal Component Analysis (MPCA) and LPP

This method involves preprocessing to improve the face image, after which feature extraction is achieved by combining MPCA with LPP. Once the feature projection matrices have been calculated, the test face sample is mapped onto a feature matrix with the help of MPCA and LPP, and face recognition is then done by comparing the test feature matrix with the enrolled face features in the database using the L2 distance [39].

K. Regularised Generalised Discriminant Locality

Preserving Projections (RGDLPP)

In RGDLPP, the locality preserving within-class scatter of the DLPP approach is replaced by the locality preserving total scatter. To alleviate the problem of unreliable small and zero eigenvalues caused by noise and the limited number of training samples, the small and zero eigenvalues of the locality preserving within-class scatter are regularised. This enables RGDLPP to be executed in the full sample space and alleviates the over-fitting problem [40].

L. Tensor Locality Preserving Projections (TLPP)

TLPP is a natural extension of LPP to the multilinear case. It can take data directly in the form of tensors of arbitrary order as input, hence there is a significant reduction in both space complexity and time complexity [41].

VIII. PERFORMANCE ANALYSIS

A. Performance analysis of various PCA based algorithms

Illumination invariant face recognition based on DCT and

PCA on YALE Database B gives accuracy of 94.2% [28].

TABLE I. PERFORMANCE COMPARISON BETWEEN PCA+NN, SVM AND PCA+SVM ON ORL DATABASE

Class number   Training samples   Test samples   Method    Recognition rate (%)
200 C          60                 140            PCA+NN    90
200 C          60                 140            SVM       85.71
200 C          60                 140            PCA+SVM   94.29
40 C           120                280            PCA+NN    80.36
40 C           120                280            SVM       78.93
40 C           120                280            PCA+SVM   81.10

As Table I shows, the face recognition rate of the PCA+SVM method under small-sample conditions is better than that of PCA+NN and SVM [1].


TABLE II. PERFORMANCE COMPARISON BETWEEN PCA, 2DPCA, (2D)2PCA, IPCA, I(2D)PCA AND I(2D)2PCA ON YALE DATABASE

Method       Recognition rate (%)
PCA          80.80
2DPCA        82.05
(2D)2PCA     82.13
IPCA         78.47
I(2D)PCA     81.19
I(2D)2PCA    81.39

TABLE III. PERFORMANCE COMPARISON BETWEEN PCA, 2DPCA, (2D)2PCA, IPCA, I(2D)PCA AND I(2D)2PCA ON ORL DATABASE

Method       Recognition rate (%)
PCA          85.14
2DPCA        86.29
(2D)2PCA     86.64
IPCA         84.75
I(2D)PCA     86.16
I(2D)2PCA    86.28

Table II and Table III show that the face recognition rate of I(2D)2PCA is better than that of PCA, IPCA and I(2D)PCA on the YALE and ORL databases, while the non-incremental 2DPCA and (2D)2PCA remain slightly higher on both [2].

TABLE IV. PERFORMANCE COMPARISON BETWEEN

EIGENFACE, EIGEN-GEFES AND EIGEN-GEFEW ON FRGC

DATABASE

Methods Recognition rate (%)

Eigenface 87.14

Eigen-GEFeS 86.67

Eigen-GEFeW 91.42

Table IV shows that Eigen-GEFeW performs best when compared with Eigenface and Eigen-GEFeS on the Face Recognition Grand Challenge (FRGC) dataset [29].

Infrared face recognition based on the compressive sensing

and PCA is invariant to variations in facial expressions and

viewpoint, and is computationally efficient [3].

TABLE V. PERFORMANCE COMPARISON BETWEEN PCA, SPCA, WPCA AND SWPCA ON ORL DATABASE

Method   Training samples/class   Recognition rate (%)
PCA      6                        92.50
SPCA     6                        94.37
WPCA     6                        94.37
SWPCA    6                        96.00

TABLE VI. PERFORMANCE COMPARISON BETWEEN PCA, SPCA, WPCA AND SWPCA ON YALE DATABASE

Method   Training samples/class   Recognition rate (%)
PCA      4                        85.71
SPCA     4                        88.57
WPCA     4                        89.52
SWPCA    4                        93.33

Table V and Table VI show that the recognition accuracy of SWPCA improves on PCA by 3.5 percentage points on the ORL database and by 7.62 percentage points on the YALE database. The SWPCA method performs better than the other conventional algorithms because it not only uses the natural symmetry of the human face to enlarge the number of training samples, but also employs the weighted PCA space to improve robustness against variations in illumination and expression [4].

TABLE VII. PERFORMANCE COMPARISON BETWEEN 2DPCA, DDCT AND 2PCA, MODULAR WEIGHTED (2D)2PCA AND PCA-IN ON ORL DATABASE

Methods                         Recognition rate (%)
2DPCA [6]                       76.70
DDCT and 2PCA [7]               76.22
Modular Weighted (2D)2PCA [8]   72.22
PCA-IN                          99.70

Table VII compares the best performance (99.70%) of the PCA-IN classifiers with the results reported in [6-8]. The PCA-IN method outperformed all other methods [5]. The face recognition rate of manual face localization on the ORL and SHEFFIELD databases with 100 components is 100% [9].

In the FRFT approach, face images are transformed into the FRFT domain, and the characteristics at several transform angles are used for classification. Experiments on the FERET database show that FRFT provides new insights into the role that pre-processing methods play in dealing with images [10].

GNP-FDM successfully prevents the accuracy loss caused by a large number of classes in the Multiple Training Images per Person Complicated Illumination Database (MTIP-CID). GCA reduces the overlaps in the PCA domain [11].

PCA with a minimum distance classifier gives a recognition rate of 96.7% on the ORL database [12].

B. Performance analysis of various LDA based algorithms

TABLE VIII. PERFORMANCE COMPARISON BETWEEN R-LDA AND R-LDA USING PRM ON YALE DATABASE

Number of features   Methods           Recognition rate (%)
32                   R-LDA             95
32                   R-LDA using PRM   97.5

TABLE IX. PERFORMANCE COMPARISON BETWEEN R-LDA AND R-LDA USING PRM ON UMIST DATABASE

Number of features   Methods           Recognition rate (%)
12                   R-LDA             88.50
12                   R-LDA using PRM   98.48

Table VIII and Table IX show that R-LDA using PRM gives better recognition than R-LDA on the YALE and UMIST databases. Further, it is observed that with the larger number of features (32) the recognition rate reaches 97.5% on the YALE database, and with 12 features on the UMIST database the recognition rate is 98.48% [13].

Compared to LDA, MFDA significantly boosts the

recognition performance. The accuracy for LDA is 60%

compared to the 83.9% accuracy of MFDA [14].

TABLE X. PERFORMANCE COMPARISON BETWEEN

2DLDA, RM2DLDA (2X2) AND RM2DLDA (4X4) ON ORL DATABASE

Methods Recognition rate (%)

2DLDA 95.65

Rm2DLDA(2 x 2) 96.65

Rm2DLDA(4 x 4) 97.1

TABLE XI. PERFORMANCE COMPARISON BETWEEN

2DLDA, RM2DLDA (2X2) AND RM2DLDA (4X4) ON YALEB

DATABASE

Methods Recognition rate (%)

2DLDA 88.68

Rm2DLDA(2 x 2) 90.75

Rm2DLDA(4 x 4) 91.55

TABLE XII. PERFORMANCE COMPARISON BETWEEN

2DLDA, RM2DLDA (2X2) AND RM2DLDA (4X4) ON PIE DATABASE

Methods Recognition rate (%)

2DLDA 90.58

Rm2DLDA(2 x 2) 93.0

Rm2DLDA(4 x 4) 95.04

Table X, Table XI and Table XII show that Rm2DLDA gives better recognition than 2DLDA on the ORL, YALEB and PIE databases [15].

TABLE XIII. PERFORMANCE COMPARISON BETWEEN LDA AND IALDA ON B1 DATABASE

Method   Training samples/class   Test samples/class   Recognition rate (%)
LDA      2                        43                   59.38
IALDA    1                        44                   85.52

TABLE XIV. PERFORMANCE COMPARISON BETWEEN LDA AND IALDA ON CMU PIE DATABASE

Method   Training samples/class   Test samples/class   Recognition rate (%)
LDA      2                        19                   74.25
IALDA    1                        20                   98.9

Table XIII and Table XIV show that IALDA gives better recognition than LDA on the B1 and CMU PIE databases [16]. The recognition rate increases from 94.12% using Fuzzy Fisherface (FLDA) to 98.125% using Fuzzy Fisherface through genetic algorithm on the ORL database [17].

Experiments on the ORL, AR and CMU PIE databases show that the semi-supervised face recognition algorithm based on LDA is robust to variations in illumination, pose and expression, and that it outperforms related approaches in both transductive and semi-supervised configurations [18].

RSLDA is an effective random sampling LDA method. The 1-NN classifier in the feature subspace obtained by RSLDA has better classification performance than that induced by BaseLDA on the AR, ORL, YALE and YALEB face datasets [19]. For ORL, the classification accuracy increases by about 15.1%.

Experimental results on the CVL and CMU PIE databases show that the revised NMF with LDA algorithm improves the recognition rate effectively [20]. L-LDA is insensitive to both large datasets and small sample size; it provided 93% accuracy and reduced the False Acceptance Rate (FAR) to 0.42 on the BANCA face database [21].

C. Performance analysis of various LPP based algorithms

B2DLPP can better avoid the interference of light, noise and other factors by extracting discriminative information. In addition, dimension reduction in two directions costs less space and time than reduction in one direction, so the eigenvector space is far smaller than the original space and the SSS problem can be avoided [30].

TABLE XV. PERFORMANCE COMPARISON BETWEEN DLPP AND RLPP ON ORL DATABASE

Method   Training samples   Recognition rate (%)
DLPP     5                  89.65
RLPP     5                  96.80

TABLE XVI. PERFORMANCE COMPARISON BETWEEN DLPP AND RLPP ON FERET DATABASE

Method   Training samples   Recognition rate (%)
DLPP     5                  78.17
RLPP     5                  92.00

Table XV shows that the recognition accuracy of DLPP and RLPP with 5 training samples is 89.65% and 96.80% respectively on the ORL database. Table XVI shows that the recognition accuracy of DLPP and RLPP with 5 training samples is 78.17% and 92.00% respectively on the FERET database [31].

The combination of Support Vector Machines and Locality Preserving Projections can not only discover the spatio-temporal connection between video faces, but also take advantage of the strengths of semi-supervised learning and manifold learning [32].

TABLE XVII. PERFORMANCE COMPARISON BETWEEN DLPP, PCA+LPP AND SDLPP ON ORL DATABASE

Method     Training samples   Recognition rate (%)
DLPP       8                  92.75
PCA+LPP    8                  95.63
SDLPP      8                  98.38

TABLE XVIII. PERFORMANCE COMPARISON BETWEEN DLPP, PCA+LPP AND SDLPP ON FERET DATABASE

Method     Training samples   Recognition rate (%)
DLPP       8                  81.75
PCA+LPP    8                  79.92
SDLPP      8                  91.58

Table XVII and Table XVIII show that SDLPP provides better recognition than DLPP and PCA+LPP on the ORL and FERET databases [33].

TABLE XIX. PERFORMANCE COMPARISON BETWEEN LPP, OLPP, WPCA AND CLPP ON ORL DATABASE

Method   Recognition rate (%)
LPP      92.0
OLPP     95.5
CLPP     97.5

Table XIX shows that CLPP provides better recognition than LPP and OLPP on the ORL database, which contains images from 40 individuals, each providing 10 different images [34].

TABLE XX. PERFORMANCE COMPARISON BETWEEN LPP, OLPP, WPCA AND CLPP ON YALE DATABASE

Method   Recognition rate (%)
LPP      90.5
OLPP     92.0
CLPP     94.1

Table XX shows that CLPP provides better recognition than LPP and OLPP on the YALE database, which contains 165 images of 15 individuals [34].

TABLE XXI. PERFORMANCE COMPARISON BETWEEN LPP, OLPP, WPCA AND CLPP ON PIE DATABASE

Method   Recognition rate (%)
LPP      90.5
OLPP     90.5
CLPP     95.8

Table XXI shows that CLPP provides better recognition than LPP and OLPP on the PIE database of 41,368 images of 68 people [34].

TABLE XXII. PERFORMANCE COMPARISON BETWEEN LAPLACIANFACE AND DST-LPP ON ORL DATABASE

Method          Training samples   Recognition rate (%)
Laplacianface   5                  96.30
DST-LPP         5                  96.60

TABLE XXIII. PERFORMANCE COMPARISON BETWEEN LAPLACIANFACE AND DST-LPP ON FERET DATABASE

Method          Training samples   Recognition rate (%)
Laplacianface   5                  89.67
DST-LPP         5                  92.33

Table XXII and Table XXIII show that DST-LPP provides better recognition than Laplacianface on the ORL and FERET databases respectively with 5 training samples [35].

TABLE XXIV. PERFORMANCE COMPARISON BETWEEN LPP, OLPP AND ESLPP ON ORL DATABASE

Method   Training samples   Recognition rate (%)
LPP      5                  90.50
OLPP     5                  91.00
ESLPP    5                  92.45

TABLE XXV. PERFORMANCE COMPARISON BETWEEN LPP, OLPP AND ESLPP ON YALE DATABASE

Method   Training samples   Recognition rate (%)
LPP      5                  88
OLPP     5                  91.50
ESLPP    5                  93.50

Table XXIV and Table XXV show that ESLPP provides better recognition than LPP and OLPP on the ORL and YALE databases respectively with 5 training samples [36].

TABLE XXVI. PERFORMANCE COMPARISON BETWEEN LPP AND 2DDLPP ON ORL DATABASE

Method    Training samples   Recognition rate (%)
LPP       5                  90.58
2DDLPP    5                  96.45

Table XXVI shows that 2DDLPP provides better recognition than LPP on the ORL database with 5 training samples [37].

TABLE XXVII. PERFORMANCE COMPARISON BETWEEN LPP AND 2DDLPP ON YALE DATABASE

Method    Training samples   Recognition rate (%)
LPP       8                  93.33
2DDLPP    8                  96.56

Table XXVII shows that 2DDLPP provides better recognition than LPP on the YALE database with 8 training samples [37].

TABLE XXVIII. PERFORMANCE COMPARISON BETWEEN LPP

AND FLPP ON ORL DATABASE

Method Recognition rate (%)

LPP 94.3

FLPP 95.4

TABLE XXIX. PERFORMANCE COMPARISON BETWEEN LPP

AND FLPP ON YALE DATABASE

Method Recognition rate (%)

LPP 86.2

FLPP 86.5


Table XXVIII and Table XXIX show that FLPP provides better recognition than LPP on the ORL and YALE databases, respectively, with 5 training samples [38].

TABLE XXX. PERFORMANCE COMPARISON BETWEEN

MPCA+LDA AND MPCA+LPP ON FERET DATABASE

Methods Recognition rate (%)

MPCA+LDA 93.75

MPCA+LPP 96.5

TABLE XXXI. PERFORMANCE COMPARISON BETWEEN

MPCA+LDA AND MPCA+LPP ON AT&T DATABASE

Method Recognition rate (%)

MPCA+LDA 95

MPCA+LPP 96.5

Table XXX and Table XXXI show that MPCA+LPP provides better recognition than MPCA+LDA on the FERET and AT&T databases, respectively [39].

RGDLPP outperforms the LPP and DLPP algorithms on the PIE database by a statistically significant margin [40].

TABLE XXXII. PERFORMANCE COMPARISON BETWEEN LPP AND TLPP ON ORL DATABASE

Method Recognition rate (%)

LPP 96.6

TLPP 97.1

Table XXXII shows that TLPP provides better recognition than LPP on the ORL database [41].

D. Performance comparison between PCA and LDA based algorithms

TABLE XXXIII. PERFORMANCE COMPARISON BETWEEN

EIGENFACES AND FISHERFACES ON YALE DATABASE

Number of features Methods Recognition rate (%)

32 Eigenfaces 90.5

32 Fisherfaces 93.5

Table XXXIII shows that LDA (Fisherfaces) gives better recognition than PCA (Eigenfaces) when 32 features are considered on the YALE database [13].

TABLE XXXIV. PERFORMANCE COMPARISON BETWEEN

EIGENFACES AND FISHERFACES ON UMIST DATABASE

Number of features Methods Recognition rate (%)

12 Eigenfaces 90.62

12 Fisherfaces 94.45

Table XXXIV shows that LDA (Fisherfaces) gives better recognition than PCA (Eigenfaces) when 12 features are considered on the UMIST database [13].

TABLE XXXV. PERFORMANCE COMPARISON BETWEEN

2DPCA AND RLDA ON ORL DATABASE

Methods Recognition rate (%)

2DPCA 77.86

RLDA 73.89

Table XXXV shows that 2DPCA gives better recognition than RLDA on the ORL database [15].

TABLE XXXVI. PERFORMANCE COMPARISON BETWEEN

2DPCA AND RLDA ON YALEB DATABASE

Methods Recognition rate (%)

2DPCA 77.86

RLDA 73.89

Table XXXVI shows that 2DPCA gives better recognition than RLDA on the YALEB database [15].

TABLE XXXVII. PERFORMANCE COMPARISON BETWEEN

2DPCA AND RLDA ON PIE DATABASE

Methods Recognition rate (%)

2DPCA 87.74

RLDA 92.22

Table XXXVII shows that RLDA gives better recognition than 2DPCA on the PIE database [15].

TABLE XXXVIII. PERFORMANCE COMPARISON BETWEEN PCA

AND LDA ON B1 DATABASE

Method Training samples/class Test samples/class Recognition rate (%)

PCA 1 44 57.2

LDA 2 43 59.38

TABLE XXXIX. PERFORMANCE COMPARISON BETWEEN PCA

AND LDA ON CMU PIE DATABASE

Method Training samples/class Test samples/class Recognition rate (%)

PCA 1 20 64.56

LDA 2 19 74.25

Table XXXVIII and Table XXXIX show that LDA gives better recognition than PCA on the B1 and CMU PIE databases, respectively [16].

TABLE XXXX. PERFORMANCE COMPARISON BETWEEN PCA AND LDA ON ATT, CROPPED YALE, FACES94, FACES95, FACES96, JAFE DATABASES

Database Name LDA PCA

ATT 94.40 91.30

CROPPED YALE 93.80 90.30

FACES95 90.80 87.00

FACES96 97.20 94.00

From Table XXXX and [22], PCA is the best algorithm for recognizing images without disturbance because, at the same recognition rate, it takes less time than LDA. For images with disturbances, however, LDA is the better choice because it achieves a higher recognition rate [22]. In terms of time taken, PCA tends to be much faster than LDA, especially when recognizing images with background disturbance [22].
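The size of the gap between LDA and PCA in Table XXXX can be read off with a few lines of arithmetic. The following is only an illustrative sketch: the dictionary layout and the helper name `lda_margin` are chosen here for convenience and do not come from [22], and only the four rows actually listed in the table are used.

```python
# Recognition rates (%) copied from the rows listed in Table XXXX.
# FACES94 and JAFE appear in the caption but have no rows, so they are omitted.
rates = {
    "ATT": {"LDA": 94.40, "PCA": 91.30},
    "CROPPED YALE": {"LDA": 93.80, "PCA": 90.30},
    "FACES95": {"LDA": 90.80, "PCA": 87.00},
    "FACES96": {"LDA": 97.20, "PCA": 94.00},
}


def lda_margin(table):
    """Return LDA minus PCA recognition rate (percentage points) per database."""
    return {name: round(row["LDA"] - row["PCA"], 2) for name, row in table.items()}


margins = lda_margin(rates)
for name, gap in margins.items():
    print(f"{name}: LDA - PCA = {gap:.2f} percentage points")
```

On the four listed databases the margin in favour of LDA lies between 3.1 and 3.8 percentage points. These figures cover recognition rate only; the timing comparison attributed to [22] is not represented in the table.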


IX. CONCLUSION

In this paper, we have analysed various current PCA, LDA and LPP based algorithms for face recognition. This analysis is vital in developing new robust algorithms for face recognition. Among the PCA algorithms analysed, the best result was obtained when manual face localization was used on the ORL and SHEFFIELD databases with 100 components; the face recognition rate in this case was 100%. The next best was a 99.70% face recognition rate using PCA-IN on the ORL database. Among the LDA algorithms analysed, IALDA gives the best face recognition rate of 98.9% when 20 test samples and 1 training sample were considered on the CMU PIE database. The next best was 98.125% using Fuzzy Fisherface through genetic algorithm on the ORL database. Among the LPP algorithms analysed, Subspace Discriminant LPP (SDLPP) provides the best face recognition rate of 98.38% on the ORL database; the next best was 97.5% using Contourlet-based Locality Preserving Projection (CLPP) on the ORL database.

REFERENCES

[1] Chengliang Wang, Libin Lan, Yuwei Zhang, and Minjie Gu, "Face Recognition Based on Principle Component Analysis and Support Vector Machine," 2011 3rd International Workshop on Intelligent Systems and Applications, pp. 1-4, May 2011.

[2] Yonghwa Choi, Tokumoto, T., Minho Lee, and Ozawa, S., "Incremental two-dimensional two-directional principal component analysis (I(2D)2PCA) for face recognition," 2011 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 1493-1496, May 2011.

[3] Zhang Lin, Zhang Wenrui, Sun Li, and Fang Zhijun, "Infrared face recognition based on compressive sensing and PCA," 2011 IEEE International Conference on Computer Science and Automation Engineering, vol. 2, pp. 51-54, June 2011.

[4] Guoxia Sun, Liangliang Zhang, and Huiqiang Sun, "Face Recognition Based on Symmetrical Weighted PCA," 2011 International Conference on Computer Science and Service System, pp. 2249-2252, August 2011.

[5] Guan-Chun Luh, "Face recognition using PCA based immune networks with single training sample per person," 2011 International Conference on Machine Learning and Cybernetics, vol. 4, pp. 1773-1779, July 2011.

[6] Jian Yang, David Zhang, Alejandro Frangi, and Jing-yu Yang, "Two-dimensional PCA: a new approach to appearance-based face representation and recognition," IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 26, no. 1, pp. 131-137, Jan. 2004.

[7] Jun-ying Gan, Jian-hu Gao, and Chun-zhi Li, "Face recognition based on diagonal discrete cosine transform and two dimensional principal component analysis," Computer Engineering and Applications, vol. 43, no. 31, pp. 210-213, 2007.

[8] Dashun Que, Bi Chen, and Jin Hu, "A Novel Single Training Sample Face Recognition Algorithm Based on Modular Weighted (2D)2PCA," Proceedings of ICSP Conference, Beijing, pp. 1552-1555, Oct. 2008.

[9] A. Adeel Mohammed, Rashid Minhas, Q. M. Jonathan Wu, and M. A. Sid-Ahmed, "A face portion based recognition system using multidimensional PCA," 2011 IEEE 54th International Midwest Symposium on Circuits and Systems, pp. 1-4, August 2011.

[10] Zhoufeng Liu, and Xiangyang Lu, "Face recognition based on fractional Fourier transform and PCA," 2011 Cross Strait Quad-Regional Radio Science and Wireless Technology Conference, vol. 2, pp. 1406-1409, July 2011.

[11] Deng Zhang, Shingo Mabu, Feng Wen, and Kotaro Hirasawa, "A Supervised Learning Framework for PCA-based Face Recognition using GNP Fuzzy Data Mining," 2011 IEEE International Conference on Systems, Man, and Cybernetics, pp. 516-520, October 2011.

[12] Bag Soumen, and Sanyal Goutam, "An Efficient Face Recognition Approach using PCA and Minimum Distance Classifier," 2011 International Conference on Image Information Processing, pp. 1-6, November 2011.

[13] Lingraj Dora, N. P. Rath, "Face Recognition by Regularized-LDA Using PRM," 2010 International Conference on Advances in Recent Technologies in Communication and Computing, pp. 140-145, October 2010.

[14] Zhifeng Li, Unsang Park, and Jain, A.K., "A Discriminative Model for Age Invariant Face Recognition," IEEE Transactions on Information Forensics and Security, vol. 6, no. 3, pp. 1028-1037, September 2011.

[15] Huxidan Jumahong, Wanquan Liu, and Chong Lu, "A new rearrange modular two-dimensional LDA for face recognition," 2011 International Conference on Machine Learning and Cybernetics, vol. 1, pp. 361-366, July 2011.

[16] Zhonghua Liu, Jingbo Zhou, and Zhong Jin, "Face recognition based on illumination adaptive LDA," 2010 20th International Conference on Pattern Recognition, pp. 894-897, August 2010.

[17] Amar Khoukhi, Syed Faraz Ahmed, "Fuzzy LDA for Face Recognition with GA Based Optimization," 2010 Annual Meeting of the North American Fuzzy Information Processing Society, pp. 1-6, July 2010.

[18] Xuran Zhao, Nicholas Evans, and Jean-Luc Dugelay, "Semi-supervised face recognition with LDA self-training," 2011 18th IEEE International Conference on Image Processing, pp. 3041-3044, September 2011.

[19] Ming Yang, Jian-Wu Wan, and Gen-Lin Ji, "Random sampling LDA incorporating feature selection for face recognition," 2010 International Conference on Wavelet Analysis and Pattern Recognition, pp. 180-185, July 2010.

[20] Xiaoming Bai, and Chengzhang Wang, "Revised NMF with LDA based color face recognition," 2010 2nd International Conference on Networking and Digital Society, vol. 1, pp. 156-159, May 2010.

[21] Muhammad Imran Razzak, Muhammad Khurram Khan, Khaled Alghathbar, and Rubiyah Yousaf, "Face Recognition using Layered Linear Discriminant Analysis and Small Subspace," 2010 IEEE 10th International Conference on Computer and Information Technology, pp. 1407-1412, July 2010.

[22] Erwin Hidayat, Fajrian Nur A., Azah Kamilah Muda, Choo Yun Huoy, and Sabrina Ahmad, "A Comparative Study of Feature Extraction Using PCA and LDA for Face Recognition," 2011 7th International Conference on Information Assurance and Security, pp. 354-359, December 2011.

[23] Chandar K.P., Chandra M.M., Kumar M.R., Latha, B.S., "Multi scale feature extraction and enhancement using SVD towards secure Face Recognition system," 2011 International Conference on Signal Processing, Communication, Computing and Networking Technologies, pp. 64-69, July 2011.

[24] Pervaiz, A.Z., "Real Time Face Recognition System Based on EBGM Framework," 2010 12th International Conference on Computer Modelling and Simulation, pp. 262-266, March 2010.

[25] Weyrauch, B., Heisele, B., Huang, J., and Blanz, V., "Component-Based Face Recognition with 3D Morphable Models," 2004 CVPRW'04 Conference on Computer Vision and Pattern Recognition Workshop, pp. 85, June 2004.

[26] Zhao Lihong, Wang Ye, and Teng Hongfeng, "Face Recognition based on Independent Component Analysis," 2011 Chinese Control and Decision Conference, pp. 426-429, May 2011.

[27] Jiajin Lei, Chao Lu, and Zhenkuan Pan, "Enhancement of Components in ICA for Face Recognition," 2011 9th International Conference on Software Engineering Research, Management and Applications, pp. 33-38, August 2011.

[28] Shermina J, "Illumination invariant face recognition using Discrete Cosine Transform and Principal Component Analysis," 2011 International Conference on Emerging Trends in Electrical and Computer Technology, pp. 826-830, March 2011.

[29] Abegaz, T., Dozier, G., Bryant, K., Adams, J., Popplewell, K., Shelton, J., Ricanek, K., and Woodard, D.L., "Hybrid GAs for Eigen-based facial recognition," 2011 IEEE Workshop on Computational Intelligence in Biometrics and Identity Management, pp. 127-130, April 2011.

[30] Jiadong Song, Xiaojuan Li, Jinhua Zhong, Pengfei Xu, and Mingquan Zhou, "A Novel Face Recognition Method: Bilateral Two Dimensional Locality Preserving Projections (B2DLPP)," IEEE-Pattern Recognition (CCPR), 2010 Chinese Conference on Pattern Recognition, Oct. 2010, pp. 1-5.

[31] Wen-Sheng Chen, Wei Wang, and Jian-Wei Yang, "A Novel Regularized Locality Preserving Projections for Face Recognition," IEEE-Information and Computing (ICIC), 2011 Fourth International Conference on Intelligent Computing, April 2011, pp. 110-113.

[32] Ke Lu, Zhengming Ding, Jidong Zhao, and Yue Wu, "A novel Semi-supervised Face Recognition for Video," IEEE-Intelligent Control and Information Processing (ICICIP), 2010 International Conference on Intelligent Control and Information Processing, Aug 2010, pp. 313-316.

[33] Wei He, Wen-Sheng Chen, and Bin Fang, "A Novel Subspace Discriminant Locality Preserving Projections for Face Recognition," IEEE-Computational Intelligence and Security (CIS), 2011 Seventh International Conference on Computational Intelligence and Security, Dec 2011, pp. 1057-1061.

[34] Yanqi Tan, Yaying Zhao, and Xiaohu Ma, "Contourlet-Based Feature Extraction with LPP for Face Recognition," IEEE-International Conference on Multimedia and Signal Processing (CMSP), May 2011, pp. 122-125.

[35] Wei Wang and Wen-Sheng Chen, "DST Feature based Locality Preserving Projections for Face Recognition," IEEE-International Conference on Computational Intelligence and Security (CIS), Dec 2010, pp. 288-292.

[36] Xian-Fa Cai, Gui-Hua Wen, Jia Wei, and Jie Li, "Enhanced supervised locality preserving projections for face recognition," IEEE-International Conference on Machine Learning and Cybernetics (ICMLC), July 2011, pp. 1762-1766.

[37] Xiajiong Shen, Qing Cong, and Sheng Wang, "Face Recognition Based on Two-Dimensional Discriminant Locality Preserving Projection," IEEE-3rd International Conference on Advanced Computer Theory and Engineering (ICACTE), Aug 2010, pp. V4-403-V4-407.

[38] Yang Liu and Jin-guang Sun, "Face Recognition Method Based on FLPP," IEEE-Third International Symposium on Electronic Commerce and Security (ISECS), July 2010, pp. 298-301.

[39] Shermina J, "Face recognition system using Multilinear Principal Component Analysis and Locality Preserving Projection," IEEE-GCC Conference and Exhibition (GCC), Feb 2011, pp. 283-286.

[40] Lu, G.-F., Lin, Z., and Jin, Z., "Face recognition using regularised generalised discriminant locality preserving projections," IEEE-Computer Vision, IET, March 2011, pp. 107-116.

[41] Dazhao Zheng, Xiufeng Du, and Limin Cui, "Tensor Locality Preserving Projections for Face Recognition," IEEE-International Conference on Systems Man and Cybernetics (SMC), Oct 2010, pp. 2347-2350.

You might also like

- Face Recognition Using Radial Basis Neural NetworkDocument6 pagesFace Recognition Using Radial Basis Neural NetworkIOSRjournalNo ratings yet

- Review Module 1-Algebra 1-Part 1Document1 pageReview Module 1-Algebra 1-Part 1Andrea MagtutoNo ratings yet

- SAP FICO OverviewDocument31 pagesSAP FICO OverviewRakesh BarapatreNo ratings yet

- Ivanna K. Timotius 2010Document9 pagesIvanna K. Timotius 2010Neeraj Kannaugiya0% (1)

- Design of Radial Basis Function Network As Classifier in Face Recognition Using EigenfacesDocument6 pagesDesign of Radial Basis Function Network As Classifier in Face Recognition Using EigenfacessamimranNo ratings yet

- Face Recognition Using Pca ThesisDocument6 pagesFace Recognition Using Pca Thesistiarichardsonlittlerock100% (2)

- Performance Improvement For 2-D Face Recognition Using Multi-Classifier and BPNDocument7 pagesPerformance Improvement For 2-D Face Recognition Using Multi-Classifier and BPNWadakam AnnaNo ratings yet

- Face Recognition Based On LBP of GLCM Symmetrical Local RegionsDocument17 pagesFace Recognition Based On LBP of GLCM Symmetrical Local RegionschidambaramNo ratings yet

- Development of An Efficient Face Recognition System Based On Linear and Nonlinear AlgorithmsDocument9 pagesDevelopment of An Efficient Face Recognition System Based On Linear and Nonlinear AlgorithmsIAES IJAINo ratings yet

- Manisha Satone 2014Document14 pagesManisha Satone 2014Neeraj KannaugiyaNo ratings yet

- Compression Based Face Recognition Using DWT and SVMDocument18 pagesCompression Based Face Recognition Using DWT and SVMsipijNo ratings yet

- Learning A Locality Preserving Subspace For Visual RecognitionDocument8 pagesLearning A Locality Preserving Subspace For Visual Recognitionprashanth30sgrNo ratings yet

- Face Detection and Recognition Using Viola-Jones Algorithm and Fusion of LDA and ANNDocument6 pagesFace Detection and Recognition Using Viola-Jones Algorithm and Fusion of LDA and ANNErcherio MarpaungNo ratings yet

- Study of Different Techniques For Face RecognitionDocument5 pagesStudy of Different Techniques For Face RecognitionInternational Journal of Multidisciplinary Research and Explorer (IJMRE)No ratings yet

- A MATLAB Based Face Recognition Using PCA With Back Propagation Neural Network - Research and Reviews - International Journals PDFDocument6 pagesA MATLAB Based Face Recognition Using PCA With Back Propagation Neural Network - Research and Reviews - International Journals PDFDeepak MakkarNo ratings yet

- Application of Soft Computing To Face RecognitionDocument68 pagesApplication of Soft Computing To Face RecognitionAbdullah GubbiNo ratings yet

- Stratified Multiscale Local Binary Patterns: Application To Face RecognitionDocument6 pagesStratified Multiscale Local Binary Patterns: Application To Face RecognitionIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Lap Lac Ian FaceDocument34 pagesLap Lac Ian Faceaditya_kumar_meNo ratings yet

- Independent Component Analysis of Edge Information For Face RecognitionDocument11 pagesIndependent Component Analysis of Edge Information For Face RecognitionnikgovNo ratings yet

- Face Recognition Using Combined Multiscale Anisotropic and Texture Features Based On Sparse CodingDocument15 pagesFace Recognition Using Combined Multiscale Anisotropic and Texture Features Based On Sparse CodingVania V. EstrelaNo ratings yet

- 7 A SVM Based Method For Face Recognition Using A Wavelet PCADocument4 pages7 A SVM Based Method For Face Recognition Using A Wavelet PCAvenkata rao RampayNo ratings yet

- Face Recognition Using PCA and Wavelet Method: IjgipDocument4 pagesFace Recognition Using PCA and Wavelet Method: IjgipAhmed GhilaneNo ratings yet

- Masoud Mazloom Shohreh Kasaei: Face Recognition Using Wavelet, PCA, and Neural NetworksDocument6 pagesMasoud Mazloom Shohreh Kasaei: Face Recognition Using Wavelet, PCA, and Neural NetworkssvijiNo ratings yet

- A Gender Recognition System From Facial ImageDocument10 pagesA Gender Recognition System From Facial Imageahtasham hussainNo ratings yet

- Ieee Conference Paper TemplateDocument4 pagesIeee Conference Paper TemplateMati ur rahman khanNo ratings yet

- A New Face Recognition Method Using PCA, LDA and Neural NetworkDocument6 pagesA New Face Recognition Method Using PCA, LDA and Neural NetworkSujith KumarNo ratings yet

- Face Feature Extraction For Recognition Using Radon TransformDocument6 pagesFace Feature Extraction For Recognition Using Radon TransformAJER JOURNALNo ratings yet

- Robust Face Recognition Approaches Using PCA, ICA, LDA Based On DWT, and SVM AlgorithmsDocument5 pagesRobust Face Recognition Approaches Using PCA, ICA, LDA Based On DWT, and SVM AlgorithmsКдйікі КциNo ratings yet

- Neural Networks: Sung-Hoon Yoo, Sung-Kwun Oh, Witold PedryczDocument15 pagesNeural Networks: Sung-Hoon Yoo, Sung-Kwun Oh, Witold PedryczMario CalderonNo ratings yet

- Face Recognition Based On SVM and 2DPCADocument10 pagesFace Recognition Based On SVM and 2DPCAbmwliNo ratings yet

- Facial Features ExtractionDocument12 pagesFacial Features ExtractionSohaib Lucky CharmNo ratings yet

- Face Recognition Using Laplacian Faces: IEEE Transactions On Pattern Analysis and Machine Intelligence April 2005Document14 pagesFace Recognition Using Laplacian Faces: IEEE Transactions On Pattern Analysis and Machine Intelligence April 2005divya kathareNo ratings yet

- Annual Report 07 Edit BPT 3Document10 pagesAnnual Report 07 Edit BPT 3Cong Jie CjNo ratings yet

- An Efficient Approach Based On LTP and SVM For 3D Face RecognitionDocument12 pagesAn Efficient Approach Based On LTP and SVM For 3D Face RecognitionNasser Al MusalhiNo ratings yet

- (IJCST-V3I2P42) :ms. Gayatri K. Bhole, Mr. M. D. JakheteDocument4 pages(IJCST-V3I2P42) :ms. Gayatri K. Bhole, Mr. M. D. JakheteEighthSenseGroupNo ratings yet

- A Survey: Face Recognition by Sparse Representation: Jyoti Reddy, Rajesh Kumar Gupta, Dr. Mohan AwasthyDocument3 pagesA Survey: Face Recognition by Sparse Representation: Jyoti Reddy, Rajesh Kumar Gupta, Dr. Mohan AwasthyerpublicationNo ratings yet

- A Face Recognition Scheme Based On Principle Component Analysis and Wavelet DecompositionDocument5 pagesA Face Recognition Scheme Based On Principle Component Analysis and Wavelet DecompositionInternational Organization of Scientific Research (IOSR)No ratings yet

- School of Biomedical Engineering, Southern Medical University, Guangzhou 510515, ChinaDocument4 pagesSchool of Biomedical Engineering, Southern Medical University, Guangzhou 510515, ChinaTosh JainNo ratings yet

- Robust Face Verification Using Sparse RepresentationDocument7 pagesRobust Face Verification Using Sparse RepresentationSai Kumar YadavNo ratings yet

- 3 Conf 2009 Icccp7Document5 pages3 Conf 2009 Icccp7ZahirNo ratings yet

- Garkani Nejad2011Document10 pagesGarkani Nejad2011nabilNo ratings yet

- A Method of Face Recognition Based On Fuzzy C-Means Clustering and Associated Sub-NnsDocument11 pagesA Method of Face Recognition Based On Fuzzy C-Means Clustering and Associated Sub-Nnsnadeemq_0786No ratings yet

- Hybrid Features Based Face Recognition Method Using Artificial Neural NetworkDocument8 pagesHybrid Features Based Face Recognition Method Using Artificial Neural NetworkmariauxiliumNo ratings yet

- Performance Comparison of Various Face Detection TechniquesDocument9 pagesPerformance Comparison of Various Face Detection TechniquesijsretNo ratings yet

- Localized Principal Component Analysis Learning For Face Feature Extraction and RecognitionDocument5 pagesLocalized Principal Component Analysis Learning For Face Feature Extraction and Recognitionehab_essaNo ratings yet

- 151 May2019 1Document7 pages151 May2019 1Naveen Sasidharan PillaiNo ratings yet

- A Study On Sparse Representation and Optimal Algorithms in Intelligent Computer VisionDocument3 pagesA Study On Sparse Representation and Optimal Algorithms in Intelligent Computer VisioniirNo ratings yet

- M.Phil Computer Science Image Processing ProjectsDocument27 pagesM.Phil Computer Science Image Processing ProjectskasanproNo ratings yet

- Deep Learning Based Image Processing in Optical MicrosDocument19 pagesDeep Learning Based Image Processing in Optical Microsliukeyin.nccNo ratings yet

- Face Recognition Using Particle Swarm Optimization-Based Selected FeaturesDocument16 pagesFace Recognition Using Particle Swarm Optimization-Based Selected FeaturesSeetha ChinnaNo ratings yet

- Thesis On Face Recognition Using PcaDocument8 pagesThesis On Face Recognition Using Pcabsqbr7px100% (2)

- A Novel Face Recognition Algorithm For Distinguishing Faces With Various AnglesDocument5 pagesA Novel Face Recognition Algorithm For Distinguishing Faces With Various Anglestjvg1991No ratings yet

- A New Approach For Face Image Enhancement and RecognitionDocument10 pagesA New Approach For Face Image Enhancement and RecognitionDinesh PudasainiNo ratings yet

- Correlation Method Based PCA Subspace Using Accelerated Binary Particle Swarm Optimization For Enhanced Face RecognitionDocument4 pagesCorrelation Method Based PCA Subspace Using Accelerated Binary Particle Swarm Optimization For Enhanced Face RecognitionEditor IJRITCCNo ratings yet

- Different Algorithms For Face RecognitionDocument3 pagesDifferent Algorithms For Face RecognitionNishant JadhavNo ratings yet

- Research Article: Applying Artificial Neural Networks For Face RecognitionDocument17 pagesResearch Article: Applying Artificial Neural Networks For Face Recognitionsalman1992No ratings yet

- Abstract For Face DetectionDocument4 pagesAbstract For Face DetectionRitesh TayadeNo ratings yet

- Face Recognition and Micro-Expression Recognition Based On Discriminant Tensor Subspace Analysis Plus Extreme Learning MachineDocument19 pagesFace Recognition and Micro-Expression Recognition Based On Discriminant Tensor Subspace Analysis Plus Extreme Learning MachineRahul YadavNo ratings yet

- Linear Regression PDFDocument7 pagesLinear Regression PDFminkowski1987No ratings yet

- Content Based: Face RecognitionDocument27 pagesContent Based: Face RecognitionArjun ChandranNo ratings yet

- DATA MINING AND MACHINE LEARNING. PREDICTIVE TECHNIQUES: REGRESSION, GENERALIZED LINEAR MODELS, SUPPORT VECTOR MACHINE AND NEURAL NETWORKSFrom EverandDATA MINING AND MACHINE LEARNING. PREDICTIVE TECHNIQUES: REGRESSION, GENERALIZED LINEAR MODELS, SUPPORT VECTOR MACHINE AND NEURAL NETWORKSNo ratings yet

- Ijett V3i2p204Document3 pagesIjett V3i2p204surendiran123No ratings yet

- A Class Based Approach For Medical Classification of Chest PainDocument5 pagesA Class Based Approach For Medical Classification of Chest Painsurendiran123No ratings yet

- Automated Anomaly and Root Cause Detection in Distributed SystemsDocument6 pagesAutomated Anomaly and Root Cause Detection in Distributed Systemssurendiran123No ratings yet

- RFID-Based Mobile Robot Positioning - Sensors and TechniquesDocument5 pagesRFID-Based Mobile Robot Positioning - Sensors and Techniquessurendiran123No ratings yet

- PCA Based Image Enhancement in Wavelet DomainDocument5 pagesPCA Based Image Enhancement in Wavelet Domainsurendiran123No ratings yet

- Dynamic Modeling and Control of A Wind-Fuel Cell Through Hybrid Energy SystemDocument5 pagesDynamic Modeling and Control of A Wind-Fuel Cell Through Hybrid Energy Systemsurendiran123No ratings yet

- Double Encryption Based Secure Biometric Authentication SystemDocument7 pagesDouble Encryption Based Secure Biometric Authentication Systemsurendiran123No ratings yet

- A Study On Auto Theft Prevention Using GSMDocument5 pagesA Study On Auto Theft Prevention Using GSMsurendiran123No ratings yet

- Analysis of Dendrogram Tree For Identifying and Visualizing Trends in Multi-Attribute Transactional DataDocument5 pagesAnalysis of Dendrogram Tree For Identifying and Visualizing Trends in Multi-Attribute Transactional Datasurendiran123No ratings yet

- Transformer Less DC - DC Converter With High Step Up Voltage Gain MethodDocument6 pagesTransformer Less DC - DC Converter With High Step Up Voltage Gain Methodsurendiran123No ratings yet

- Design Architecture For Next Generation Mobile TechnologyDocument6 pagesDesign Architecture For Next Generation Mobile Technologysurendiran123No ratings yet

- Analysis of Leakage Reduction Technique On Different SRAM CellsDocument6 pagesAnalysis of Leakage Reduction Technique On Different SRAM Cellssurendiran123No ratings yet

- Geostatistical Analysis Research: International Journal of Engineering Trends and Technology-Volume2Issue3 - 2011Document8 pagesGeostatistical Analysis Research: International Journal of Engineering Trends and Technology-Volume2Issue3 - 2011surendiran123No ratings yet

- Power Quality Disturbances and Protective Relays On Transmission LinesDocument6 pagesPower Quality Disturbances and Protective Relays On Transmission Linessurendiran123No ratings yet

- Color Feature Extraction of Tomato Leaf DiseasesDocument3 pagesColor Feature Extraction of Tomato Leaf Diseasessurendiran123No ratings yet

- Organizational Practices That Effects Software Quality in Software Engineering ProcessDocument6 pagesOrganizational Practices That Effects Software Quality in Software Engineering Processsurendiran123No ratings yet

- Adaptive Active Constellation Extension Algorithm For Peak-To-Average Ratio Reduction in OfdmDocument11 pagesAdaptive Active Constellation Extension Algorithm For Peak-To-Average Ratio Reduction in Ofdmsurendiran123No ratings yet

- Features Selection and Weight Learning For Punjabi Text SummarizationDocument4 pagesFeatures Selection and Weight Learning For Punjabi Text Summarizationsurendiran123No ratings yet

- Dynamic Search Algorithm Used in Unstructured Peer-to-Peer NetworksDocument5 pagesDynamic Search Algorithm Used in Unstructured Peer-to-Peer Networkssurendiran123No ratings yet

- Model-Driven Performance For The Pattern and Advancement of Software Exhaustive SystemsDocument6 pagesModel-Driven Performance For The Pattern and Advancement of Software Exhaustive Systemssurendiran123No ratings yet

- An Efficient Passive Approach For Quality of Service Routing in ManetsDocument6 pagesAn Efficient Passive Approach For Quality of Service Routing in Manetssurendiran123No ratings yet

- Model-Based Programming of Intelligent Embedded Systems Through Offline CompilationDocument4 pagesModel-Based Programming of Intelligent Embedded Systems Through Offline Compilationsurendiran123No ratings yet

- Extended Linearization Technique: GPS User Position UsingDocument4 pagesExtended Linearization Technique: GPS User Position Usingsurendiran123No ratings yet

- VSC Based DSTATCOM & Pulse-Width Modulation For Power Quality ImprovementDocument4 pagesVSC Based DSTATCOM & Pulse-Width Modulation For Power Quality Improvementsurendiran123No ratings yet

- Experimental Investigation For Welding Aspects of AISI 304 & 316 by Taguchi Technique For The Process of TIG & MIG WeldingDocument6 pagesExperimental Investigation For Welding Aspects of AISI 304 & 316 by Taguchi Technique For The Process of TIG & MIG Weldingsurendiran123No ratings yet

- Lecture 08 Sequence DiagramDocument24 pagesLecture 08 Sequence DiagramMateen HabibNo ratings yet

- Indian Telecom IndustryDocument30 pagesIndian Telecom Industryhahire100% (1)

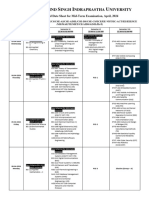

- Mid Term Date Sheet - pq0NsApDocument2 pagesMid Term Date Sheet - pq0NsApSahil HansNo ratings yet

- 93ZJ Secc 8C Overhead ConsoleDocument10 pages93ZJ Secc 8C Overhead Consolehelgith74No ratings yet

- Particles in OpenFOAMDocument17 pagesParticles in OpenFOAMAsmaa Ali El-AwadyNo ratings yet

- Service Parts List: Cordless 12 Volt 3/8" Hammer-DrillDocument2 pagesService Parts List: Cordless 12 Volt 3/8" Hammer-DrillCarlos SalcedoNo ratings yet

- E-Governance: Definitions/Domain Framework and Status Around The WorldDocument20 pagesE-Governance: Definitions/Domain Framework and Status Around The WorldasnescribNo ratings yet

- Number System ChapterDocument8 pagesNumber System ChapterMike SmithNo ratings yet

- Index ConfigDocument8 pagesIndex ConfiginkabebeNo ratings yet

- Whitepaper: Stuff WordsDocument18 pagesWhitepaper: Stuff WordsAntonio VaughnNo ratings yet

- Language Test 9A : Tests Answer KeyDocument4 pagesLanguage Test 9A : Tests Answer KeyАнастасия ВысоцкаяNo ratings yet

- Skematik Robot Penghindar Halangan PDFDocument1 pageSkematik Robot Penghindar Halangan PDFibnu hasanNo ratings yet

- Resume WeeblyDocument1 pageResume Weeblyapi-272647959No ratings yet

- CH 04. Data-Level Parallelism in Vector, SIMD, and GPU ArchitecturesDocument50 pagesCH 04. Data-Level Parallelism in Vector, SIMD, and GPU ArchitecturesFaheem KhanNo ratings yet

- How in STEP 7 (TIA Portal) Do You Save The Value of An "HSC" (High-Speed Counter) For The S7-1200 After STOP Mode or After A Restart?Document3 pagesHow in STEP 7 (TIA Portal) Do You Save The Value of An "HSC" (High-Speed Counter) For The S7-1200 After STOP Mode or After A Restart?KarthickNo ratings yet

- 5-LP Simplex (CJ-ZJ Tableau)Document7 pages5-LP Simplex (CJ-ZJ Tableau)zuluagagaNo ratings yet

- Initial Cell Selection: Srxlev 0 AND Squal 0Document9 pagesInitial Cell Selection: Srxlev 0 AND Squal 0amirfiroozi87No ratings yet

- Iterrows Iteritems ExplainedDocument5 pagesIterrows Iteritems ExplainedAli AshrafNo ratings yet

- Computer Networks Lab: Lab No.9 Configuring IP Routing (Static Routing)Document7 pagesComputer Networks Lab: Lab No.9 Configuring IP Routing (Static Routing)Akira MannahelNo ratings yet

- Curriculum Vitae - Dami EgbeyemiDocument7 pagesCurriculum Vitae - Dami EgbeyemiDami EgbeyemiNo ratings yet

- Systems Proposal On Inventory ManagementDocument16 pagesSystems Proposal On Inventory ManagementSiegfred Laborte0% (2)

- Primary Lithium Handbook PDFDocument36 pagesPrimary Lithium Handbook PDFMedSparkNo ratings yet

- Ansi - Ieee STD 995 - 1987 Efficiency Adjustable Speed Drives PDFDocument32 pagesAnsi - Ieee STD 995 - 1987 Efficiency Adjustable Speed Drives PDFrafaelv123No ratings yet

- Document 2484229.1 Recover Oracle ErrorDocument3 pagesDocument 2484229.1 Recover Oracle Errorandfr2005No ratings yet

- 82C08Document32 pages82C08Teo AntNo ratings yet

- 100606Document232 pages100606jawfirmino@terra.com.brNo ratings yet

- 1.john Lau - ASM-CSIA - Recent Advances in PackagingDocument148 pages1.john Lau - ASM-CSIA - Recent Advances in Packagingyang100% (1)