Relying on Algorithms and Bots Can Be Really, Really Dangerous | Wir...

http://www.wired.com/opinion/2013/03/clive-thompson-2104/

Opinion


Relying on Algorithms and Bots Can Be Really, Really Dangerous

By Clive Thompson 03.25.13 6:30 AM


Machines can make decisions. That doesn't mean they're right. Illustration: Kronk

So you can't wait for a self-driving car to take away the drudgery of driving? Me neither! But consider this scenario, recently posed by neuroscientist Gary Marcus: Your car is on a narrow bridge when a school bus veers into your lane. Should your self-driving car plunge off the bridge, sacrificing your life to save those of the children?

Obviously, you won't make the call. You've ceded that decision to the car's algorithms. You'd better hope that you agree with its choice.

This is a dramatic dilemma, to be sure. But it's not a completely unusual one. The truth is, our tools increasingly guide and shape our behavior or even make decisions on our behalf. A small but growing chorus of writers and scholars thinks we're going too far. By taking human decision-making out of the equation, we're slowly stripping away deliberation: moments where we reflect on the morality of our actions.


23.04.2013 04:33


Not all of these situations are so life-and-death. Some are quite prosaic, like the welter of new gadgets that try to nudge us into better behavior. In his new book To Save Everything, Click Here, Evgeny Morozov casts a skeptical eye on this stuff. He tells me about a recent example he's seen: a smart fork that monitors how much you're eating and warns you if you're overdoing it.

Fun and useful, you might argue. But for Morozov, tools like the fork reduce your incentive to think about how you're eating, and about the deeper political questions of why today's food ecosystem is so enfattening. "Instead of regulating the food industry to make food healthier," Morozov says, "we're giving people smart forks."

Or as Evan Selinger, a philosopher at Rochester Institute of Technology, puts it, tools that make hard things easy can make us less likely to tolerate things that are hard. Outsourcing our self-control to digital willpower has consequences: Use Siri constantly to get instant information and you can erode your ability to be patient in the face of incomplete answers, a crucial civic virtue.

Things get even dicier when society at large outsources its biggest moral decisions to technology. For example, some police departments have begun using PredPol, a system that mines crime data to predict future criminal activity, guiding police to areas they might otherwise overlook. It appears to work, cutting some crimes by up to 27 percent. It lets chronically underfunded departments do more with less. But as Morozov points out, the algorithms could wind up amplifying flaws in existing law enforcement. For example, sexual violence is historically underreported, so it can't as easily be predicted. Remove the deliberation over what police focus on and you can wind up deforming policing. And doing more with less, while a worthy short-term goal, lets politicians dodge the political impact of their budgetary choices.

And this, really, is the core of the question here: Efficiency isn't always a good thing. Tech lets us do things more easily. But this can mean doing them less reflectively too.

We're not going to throw out all technology, nor should we. Efficiency isn't always bad. But Morozov suggests that sometimes tools should do the opposite: They should introduce friction. For example, new parking meters reset when you drive away, so another driver can't draft off any remaining time. The city makes more money, obviously, but that design also compels your behavior. What if a smart meter instead offered you a choice: Gift remaining time to the next driver or to the city? This would foreground the tiny moral trade-offs of daily life: city versus citizen.

Or consider the Caterpillar, a prototype power strip created by German designers that detects when a plugged-in device is in standby mode. Instead of turning off the device (a traditional efficiency move), the Caterpillar leaves it on but starts writhing. The point is to draw your attention to your power usage, to force you to turn it off yourself and meditate on why you're using so much.

These are kind of crazy, of course. They're not tools that solve problems. They're tools to make you think about problems, which is precisely the point.


52 comments


Trout007 (a month ago)

This article is so funny. Take these two sentences: "Instead of regulating the food industry to make food healthier" and "Outsourcing our self-control to digital willpower has consequences:". Isn't the first one just outsourcing your self-control to "political willpower"? That has even worse consequences. At least with the digital kind, you can choose whether you want to use it.

the_rat, replying to Trout007 (a month ago)

Dude, words off my keyboard! Isn't this an article about giving up choice to robots? What kind of a philosopher misses the point this badly? He complains about a device that leaves the choice to eat or not in our hands and offers up government regulation of our eating habits as a better choice?

Dave O'Connor, replying to Trout007 (a month ago)

I agree with the general idea of the article, but the sentences you quote are exactly what I was going to comment on.

Joaquim Ventura, replying to Trout007 (a month ago)

Exactly! I'm always amazed at how people mistrust machines, which have transparent (if open-source) rule sets and logic and give you nothing more and nothing less than you put in (unless you want them to give you something emergent), yet put their faith in politicians and regulators who on a good day are just humans with human flaws and on a bad day are outright crooks. At least I can trust my smart fork not to be lobbied.

simonsmicrophone, replying to Joaquim Ventura (a month ago)

Yes, very true. However, your smart fork might be lobbied, because it's not open and is connected to some corporation's network of dietary AI. Your average plod will buy it and worship it, not realizing they've sold part of their freedom for some coolness. I'm not sure if it's actually cool. It's just what I've been told by the masses.

Amanda Dawson

>

Trout007 a month ago

If you think Richard`s story is amazing,, last month my uncles step-son basically also brought home $7387 sitting there fourty hours a month an their house and they're co-worker's step-mother`s neighbour did this for 7-months and got paid more than $7387 part-time on line.

4 von 5 23.04.2013 04:33

Relying on Algorithms and Bots Can Be Really, Really Dangerous | Wir...

http://www.wired.com/opinion/2013/03/clive-thompson-2104/

Previous Article

Don't Just Hate CISPA Fix It

Next Article

How We're Turning Digital Natives Into Etiquette Sociopaths

5 von 5

23.04.2013 04:33

You might also like

- Crimes Against Logic: Exposing the Bogus Arguments of Politicians, Priests, Journalists, and Other Serial OffendersFrom EverandCrimes Against Logic: Exposing the Bogus Arguments of Politicians, Priests, Journalists, and Other Serial OffendersRating: 4 out of 5 stars4/5 (163)

- Presentation BE - The Social DilemmaDocument10 pagesPresentation BE - The Social DilemmaSanskriti SinghNo ratings yet

- Summary of Jaron Lanier's Ten Arguments for Deleting Your Social Media Accounts Right NowFrom EverandSummary of Jaron Lanier's Ten Arguments for Deleting Your Social Media Accounts Right NowRating: 5 out of 5 stars5/5 (1)

- A Psychologist Explains How AI and Algorithms Are Changing Our LivesDocument8 pagesA Psychologist Explains How AI and Algorithms Are Changing Our LivesDuc Trung Vu CssrNo ratings yet

- Elon Musk's Interview With BBCDocument8 pagesElon Musk's Interview With BBCwitwiz.witchNo ratings yet

- The Naked Future' and Social Physics' - NYTimesDocument4 pagesThe Naked Future' and Social Physics' - NYTimestomtom1010No ratings yet

- The Naked Future' and Social Physics'Document5 pagesThe Naked Future' and Social Physics'k1103634No ratings yet

- Anonymous AntitechnologyDocument19 pagesAnonymous AntitechnologymavrikataraNo ratings yet

- Embracing ComplexityDocument12 pagesEmbracing ComplexityBlessolutionsNo ratings yet

- Unit 4 PortfolioDocument5 pagesUnit 4 Portfolioapi-455735588No ratings yet

- Daug, Reflection PaperDocument3 pagesDaug, Reflection PaperRodel MatuteNo ratings yet

- The Bad Changes of AIDocument5 pagesThe Bad Changes of AIDure H DasuNo ratings yet

- Machine Learning: An Essential Guide to Machine Learning for Beginners Who Want to Understand Applications, Artificial Intelligence, Data Mining, Big Data and MoreFrom EverandMachine Learning: An Essential Guide to Machine Learning for Beginners Who Want to Understand Applications, Artificial Intelligence, Data Mining, Big Data and MoreNo ratings yet

- Rauhauser's Conspiracy Theory Involving Conspiracy TheoristsDocument3 pagesRauhauser's Conspiracy Theory Involving Conspiracy TheoristsAaronWorthingNo ratings yet

- POLS124 Info 5Document4 pagesPOLS124 Info 5eashan guptaNo ratings yet

- 05 - Dretske, FredDocument12 pages05 - Dretske, FredJuancho HerreraNo ratings yet

- DocumentaryReview TheSocialDilemmaDocument3 pagesDocumentaryReview TheSocialDilemmaRonDaniel PadillaNo ratings yet

- Digital Minimalism - Summarized for Busy People: Choosing a Focused Life in a Noisy World: Based on the Book by Cal NewportFrom EverandDigital Minimalism - Summarized for Busy People: Choosing a Focused Life in a Noisy World: Based on the Book by Cal NewportNo ratings yet

- SubtitleDocument2 pagesSubtitleMuhammad IbrahimNo ratings yet

- Algorithms Reflection TedxDocument1 pageAlgorithms Reflection Tedxapi-364405226No ratings yet

- Our Minds Have Been Hijacked by Our Phones Tristan Harris Wants To Rescue Them - WiredDocument10 pagesOur Minds Have Been Hijacked by Our Phones Tristan Harris Wants To Rescue Them - Wiredkigefo9665No ratings yet

- CRITICAL READING-pre Mid Term RevisionDocument4 pagesCRITICAL READING-pre Mid Term Revisionminhngochoang116No ratings yet

- My Scattered Thoughts On The SingularityDocument3 pagesMy Scattered Thoughts On The SingularitySally MoremNo ratings yet

- Position Paper On How Computers Change The Way Human ThinksDocument3 pagesPosition Paper On How Computers Change The Way Human ThinksAngelo Jay PedroNo ratings yet

- Optimization Vs TransparencyDocument13 pagesOptimization Vs TransparencyÁngel EncaladaNo ratings yet

- PH w3539 d3 Does Surveillance Make Us Morally Better p2011 A11s RedfernDocument11 pagesPH w3539 d3 Does Surveillance Make Us Morally Better p2011 A11s RedfernscottredNo ratings yet

- AI Algorithms and Awful Humans - Daniel SoloveDocument19 pagesAI Algorithms and Awful Humans - Daniel SoloveFacundoNo ratings yet

- Ethics and Software DevelopmentDocument8 pagesEthics and Software DevelopmentJohn Steven Anaya InfantesNo ratings yet

- STS Joys ArticleDocument2 pagesSTS Joys ArticleKimberly Milante100% (2)

- 演算法 草稿Document5 pages演算法 草稿李軒No ratings yet

- The Answers A Machine Will Never Have: "I Suddenly Feel Kinda Guilty... This Is Not A Great Way To Wake Up!"Document6 pagesThe Answers A Machine Will Never Have: "I Suddenly Feel Kinda Guilty... This Is Not A Great Way To Wake Up!"Rony GeorgeNo ratings yet

- Floridi's Lecture: AI: A Divorce Between Agency and IntelligenceDocument56 pagesFloridi's Lecture: AI: A Divorce Between Agency and IntelligenceDimitri VinciNo ratings yet

- Drafting A DebateDocument11 pagesDrafting A DebateHACKER CHAUDHARYNo ratings yet

- 4374 MSPEdDocument6 pages4374 MSPEdDarkgoatNo ratings yet

- ReflectionDocument5 pagesReflectionBethsie MadinnoNo ratings yet

- Immune Reactions Against The Network SocietyDocument4 pagesImmune Reactions Against The Network SocietyDavid OrbanNo ratings yet

- The Social DilemmaDocument1 pageThe Social DilemmaZ3RCKNo ratings yet

- SOCI186 Group Assignment 7Document5 pagesSOCI186 Group Assignment 7Lilland AllanNo ratings yet

- Farenhiet 451 - EssayDocument5 pagesFarenhiet 451 - Essayapi-275502795No ratings yet

- Moonshots 10X Is Easier Than 10 PercentDocument4 pagesMoonshots 10X Is Easier Than 10 PercentOmer Siddiqui100% (1)

- Ethics ArticleDocument3 pagesEthics ArticleMaggie TrimpinNo ratings yet

- Exam 2nd Term 7thDocument3 pagesExam 2nd Term 7thMaría Alexandra Torres BarbosaNo ratings yet

- Top 9 Ethics IssuesDocument4 pagesTop 9 Ethics IssuesJuan Pablo SerraNo ratings yet

- Hooked: The Pitfalls of Media, Technology and Social NetworkingFrom EverandHooked: The Pitfalls of Media, Technology and Social NetworkingRating: 1 out of 5 stars1/5 (1)

- Questions Pariser Intro-2,4Document4 pagesQuestions Pariser Intro-2,4EthanNo ratings yet

- 6.1 ALLiance ScreenDocument8 pages6.1 ALLiance Screenetienne_figueroa_1No ratings yet

- Politics Matters Most To Slaves. Luke's WebpageDocument2 pagesPolitics Matters Most To Slaves. Luke's WebpageDiogo Gaspar SequeiraNo ratings yet

- The 10 Rock Solid Elements of Effective Online Marketing: by Brian Clark Founder and PublisherDocument18 pagesThe 10 Rock Solid Elements of Effective Online Marketing: by Brian Clark Founder and Publisherapi-25895447No ratings yet

- What Is The Future of Artificial IntelligenceDocument2 pagesWhat Is The Future of Artificial IntelligenceBechir MathlouthiNo ratings yet

- The Machine Always Wins - What Drives Our Addiction To Social Media - TechnologyDocument15 pagesThe Machine Always Wins - What Drives Our Addiction To Social Media - TechnologyloveshNo ratings yet

- Interesting Times #07Document76 pagesInteresting Times #07unjohNo ratings yet

- Content: True Happy Inspiring N KDocument24 pagesContent: True Happy Inspiring N KSathyanarayanan Srinivasan RajappaNo ratings yet

- Can We Teach Robots EthicsDocument3 pagesCan We Teach Robots EthicsExtraterrestre 09No ratings yet

- DT Think BetterDocument6 pagesDT Think BetterTJSNo ratings yet

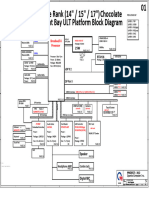

- HP 15 - Ab225ur DAX12AMB6D0 Quanta X12 6L R1aDocument40 pagesHP 15 - Ab225ur DAX12AMB6D0 Quanta X12 6L R1asaffunnutretro-9095No ratings yet

- Apple IncDocument5 pagesApple Incsyg_sahabt26No ratings yet

- CL650FMSPrimerDocument16 pagesCL650FMSPrimerLc CzNo ratings yet

- NTPC TandaDocument97 pagesNTPC Tandamaurya1234No ratings yet

- Network Programming in Python: Mehmet Hadi GunesDocument31 pagesNetwork Programming in Python: Mehmet Hadi GunesSanchita MoreNo ratings yet

- Enterprise Asset Management: Product Management, SAP AGDocument71 pagesEnterprise Asset Management: Product Management, SAP AGDave LlewellynNo ratings yet

- MC6-EX Ordering GuideDocument6 pagesMC6-EX Ordering Guidesunil_dharNo ratings yet

- Unit-5: Database System Concepts, 6 EdDocument67 pagesUnit-5: Database System Concepts, 6 EdSujy CauNo ratings yet

- E22914 Genset Jakarta (Id) Sele Raya Mip1 GBDocument111 pagesE22914 Genset Jakarta (Id) Sele Raya Mip1 GBDavid tangkelangiNo ratings yet

- KNX Introduction Flyer enDocument9 pagesKNX Introduction Flyer enDalpikoNo ratings yet

- BEC23PLDocument2 pagesBEC23PLLucian Coman100% (1)

- C1022 CS153 CPG101 T212 Assignment QPDocument7 pagesC1022 CS153 CPG101 T212 Assignment QPAsiddeen Bn MuhammadNo ratings yet

- ECQ Plots - SCIS Design Guidelines - (v0.5.0)Document22 pagesECQ Plots - SCIS Design Guidelines - (v0.5.0)causeitsoNo ratings yet

- AVR USBasp Users Manual v1.1Document35 pagesAVR USBasp Users Manual v1.1Jarfo100% (1)

- Freebitco - in ScriptDocument3 pagesFreebitco - in Scriptme starkNo ratings yet

- EWM-ECC Organization LevelDocument53 pagesEWM-ECC Organization LevelSwamy KattaNo ratings yet

- Catalogue of Standards of CENELECDocument18 pagesCatalogue of Standards of CENELECrhusmenNo ratings yet

- Acad NFG PDFDocument80 pagesAcad NFG PDFElving MendozaNo ratings yet

- Python InheritanceDocument4 pagesPython InheritanceMarcel Chis100% (1)

- Haltech Elite Series ProgrammerDocument28 pagesHaltech Elite Series ProgrammerJameel KhanNo ratings yet

- Verification of Gates Experimaent: Digital Logic Design Lab ManualDocument5 pagesVerification of Gates Experimaent: Digital Logic Design Lab ManualRana jamshaid Rana jamshaidNo ratings yet

- KSOU Prospectus Semester Mode 2013 14 SemDocument156 pagesKSOU Prospectus Semester Mode 2013 14 SemRanoorNo ratings yet

- 1-Business Analysis Fundamentals BACCM PDFDocument8 pages1-Business Analysis Fundamentals BACCM PDFZYSHANNo ratings yet

- Data Leakage in Machine LearningDocument28 pagesData Leakage in Machine LearningprediatechNo ratings yet

- Keith - Tetra Threat FrameworkDocument12 pagesKeith - Tetra Threat FrameworkkynthaNo ratings yet

- Icwr51868 2021 9443129Document4 pagesIcwr51868 2021 9443129Nagaraj LutimathNo ratings yet

- DP-3520 Service ManualDocument629 pagesDP-3520 Service ManualJohn Bailey50% (2)

- PDF Semiconductor Materials For Solar Photovoltaic Cells 1st Edition M. Parans Paranthaman All ChapterDocument24 pagesPDF Semiconductor Materials For Solar Photovoltaic Cells 1st Edition M. Parans Paranthaman All Chapterpenterawumi100% (3)

- Ott OTT Ecolog 1000 Water Level LoggerDocument3 pagesOtt OTT Ecolog 1000 Water Level LoggerNedimZ1No ratings yet

- Professional Graphic Design CourseDocument38 pagesProfessional Graphic Design CourseabNo ratings yet