Professional Documents

Culture Documents

Lecture 28: Brownian Bridge: t t (δ) a t −2δD −2δD −2δa t t −2δD t∧T

Lecture 28: Brownian Bridge: t t (δ) a t −2δD −2δD −2δa t t −2δD t∧T

Uploaded by

spitzersglareOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Lecture 28: Brownian Bridge: t t (δ) a t −2δD −2δD −2δa t t −2δD t∧T

Lecture 28: Brownian Bridge: t t (δ) a t −2δD −2δD −2δa t t −2δD t∧T

Uploaded by

spitzersglareCopyright:

Available Formats

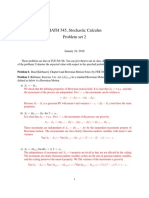

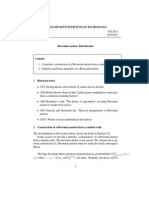

Stat 150 Stochastic Processes

Spring 2009

Lecture 28: Brownian Bridge

Lecturer: Jim Pitman

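From last time:

Problem: Find the hitting probability for BM with drift $\delta$, $D_t := a + \delta t + B_t$, that is, $P_a^{(\delta)}(D_t \text{ hits } b \text{ before } 0)$.

Idea: Find a suitable MG. Found $e^{-2\delta D_t}$ is a MG. Use this: under $P_a$ start at $a$, so $E_a e^{-2\delta D_0} = e^{-2\delta a}$. Let $T$ = first time $D_t$ hits 0 or $b$. Stop the MG $M_t = e^{-2\delta D_t}$ at time $T$: look at $(M_{t \wedge T}, t \ge 0)$. For simplicity, take $\delta > 0$; then $1 > e^{-2\delta a} > e^{-2\delta b} > 0$, and $1 \ge e^{-2\delta D_t} \ge e^{-2\delta b}$ for $0 \le t \le T$, so we get a MG with values in $[0,1]$ when we stop at $T$.

Final value of MG $= 1 \cdot 1(\text{hit 0 before } b) + e^{-2\delta b} \, 1(\text{hit } b \text{ before } 0)$. Taking expectations, and using $P(\text{hit } b) + P(\text{hit } 0) = 1$:

$$e^{-2\delta a} = 1 \cdot P(\text{hit } 0) + e^{-2\delta b} P(\text{hit } b)$$

$$\implies P(\text{hit } b) = \frac{1 - e^{-2\delta a}}{1 - e^{-2\delta b}}$$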
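As a quick numerical sanity check of the formula, the sketch below evaluates $P(\text{hit } b)$ and confirms that the two possible final values of the stopped MG average back to $M_0 = e^{-2\delta a}$. The function names are illustrative, not from the lecture, and the driftless value $a/b$ used for $\delta = 0$ is the standard limit of the formula as $\delta \to 0$, which is not stated in these notes.

```python
import math


def prob_hit_b_before_0(a, b, delta):
    """P_a(D hits b before 0) for D_t = a + delta*t + B_t, assuming 0 < a < b."""
    if not 0.0 < a < b:
        raise ValueError("need 0 < a < b")
    if delta == 0.0:
        # Driftless case: the limit of the formula as delta -> 0 (assumption noted above).
        return a / b
    # expm1(x) = e^x - 1, so this ratio equals (1 - e^{-2 delta a}) / (1 - e^{-2 delta b}).
    return math.expm1(-2.0 * delta * a) / math.expm1(-2.0 * delta * b)


def stopped_martingale_mean(a, b, delta):
    """E[M_T], computed from the two possible final values 1 and e^{-2 delta b}."""
    p = prob_hit_b_before_0(a, b, delta)
    return (1.0 - p) * 1.0 + p * math.exp(-2.0 * delta * b)


if __name__ == "__main__":
    a, b, delta = 1.0, 3.0, 0.5
    print("P(hit b before 0):", prob_hit_b_before_0(a, b, delta))
    print("E[M_T]:", stopped_martingale_mean(a, b, delta))
    print("M_0 = e^{-2 delta a}:", math.exp(-2.0 * delta * a))
```

The last two printed numbers should agree up to rounding, which is the optional stopping identity used in the derivation.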

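Equivalently, start at 0: $P(B_t + \delta t \text{ ever reaches } -a) = e^{-2\delta a}$ for $a > 0$. (Let $b \to \infty$ in $P(\text{hit } 0) = 1 - P(\text{hit } b)$ and shift the starting point from $a$ to 0.) Let $M := -\min_t (B_t + \delta t)$; then $P(M \ge a) = e^{-2\delta a} \implies M \sim \text{Exp}(2\delta)$.

Note the memoryless property: $P(M > a + b) = P(M > a) P(M > b)$. This can be understood in terms of the strong Markov property of the drifting BM at its first hitting time of $-a$: to get below $-(a + b)$ the process must get down to $-a$, and the process thereafter must get down a further amount $b$.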
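The exponential law of $M$ can be checked roughly by simulation. The following is a minimal sketch, assuming a simple Euler time grid (step `dt`) and an escape level above which the path is abandoned; both are illustrative choices, not part of the lecture. A discrete grid misses some crossings between grid points, so the estimate tends to sit slightly below $e^{-2\delta a}$.

```python
import math
import random


def reaches_minus_a(a, delta, rng, dt=0.0025, escape=4.0):
    """Run X_t = B_t + delta*t from 0 on a time grid; True if X gets down to -a.

    The path is abandoned once X >= escape: from there the chance of ever
    returning to -a is e^{-2 delta (escape + a)}, negligible for the
    parameters used below (delta > 0 assumed).
    """
    x = 0.0
    sd = math.sqrt(dt)
    while x < escape:
        x += delta * dt + sd * rng.gauss(0.0, 1.0)
        if x <= -a:
            return True
    return False


def estimate_reach_probability(a, delta, n_paths=2000, seed=0):
    """Monte Carlo estimate of P(M >= a) = P(B_t + delta*t ever reaches -a)."""
    rng = random.Random(seed)
    hits = sum(reaches_minus_a(a, delta, rng) for _ in range(n_paths))
    return hits / n_paths
```

For example, `estimate_reach_probability(0.5, 1.0)` can be compared with `math.exp(-1.0)`; agreement is only expected up to Monte Carlo noise and the grid bias mentioned above.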


Brownian Bridge

This is a process obtained by conditioning on the value of $B$ at a fixed time, say time 1. Look at $(B_t, 0 \le t \le 1 \mid B_1)$, where $B_0 = 0$.

Key observation: $E(B_t \mid B_1) = tB_1$.

To see this, write $B_t = tB_1 + (B_t - tB_1)$, and observe that $tB_1$ and $B_t - tB_1$ are independent. This independence is due to the fact that $B$ is a Gaussian process, so two linear combinations of values of $B$ at fixed times are independent if and only if their covariance is zero, and we can compute:

$$E(B_1(B_t - tB_1)) = E(B_t B_1 - tB_1^2) = t - t \cdot 1 = 0$$

since $E(B_s B_t) = s$ for $s < t$.

Continuing in this vein, let us argue that the process $(B_t - tB_1, 0 \le t \le 1)$ and the r.v. $B_1$ are independent.

Proof: Let $\hat{B}(t_i) := B_{t_i} - t_i B_1$, and take any linear combination with coefficients $\alpha_i$:

$$E\Big(\Big(\sum_i \alpha_i \hat{B}(t_i)\Big) B_1\Big) = \sum_i \alpha_i E(\hat{B}(t_i) B_1) = \sum_i \alpha_i \cdot 0 = 0$$

This shows that every linear combination of values of $\hat{B}$ at fixed times $t_i \in [0, 1]$ is independent of $B_1$. It follows by measure theory (proof beyond scope of this course) that $B_1$ is independent of every finite-dimensional vector $(\hat{B}(t_i), 0 \le t_1 < \cdots < t_n \le 1)$, and hence that $B_1$ is independent of the whole process $(\hat{B}(t), 0 \le t \le 1)$.

This process $(\hat{B}(t), 0 \le t \le 1)$, with $\hat{B}(t) := B_t - tB_1$, is called Brownian bridge.

Notice: $\hat{B}(0) = \hat{B}(1) = 0$. Intuitively, $\hat{B}$ is $B$ conditioned on $B_1 = 0$. Moreover, $B$ conditioned on $B_1 = b$ is $(\hat{B}(t) + tb, 0 \le t \le 1)$. These assertions about conditioning on events of probability 0 can be made rigorous either in terms of limiting statements about conditioning on e.g. $B_1 \in (b, b + \epsilon)$ and letting $\epsilon$ tend to 0, or by integration, similar to the interpretation of conditional distributions and densities.
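The construction above is easy to try on a time grid. The sketch below builds a discretized Brownian path, subtracts $tB_1$ to get a bridge, and estimates two covariances with $B_1$: the bridge value at $t = 1/2$ should be uncorrelated with $B_1$, while $B_{1/2}$ itself has covariance $1/2$ with $B_1$. All function names, the grid size and the seed are illustrative assumptions.

```python
import math
import random


def brownian_path(n_steps, rng):
    """Values B(k/n), k = 0..n, of a standard BM on [0, 1] from IID Gaussian increments."""
    sd = math.sqrt(1.0 / n_steps)
    path = [0.0]
    for _ in range(n_steps):
        path.append(path[-1] + rng.gauss(0.0, sd))
    return path


def bridge_from_path(path):
    """Bridge values B(t) - t*B(1) on the same grid."""
    n = len(path) - 1
    b1 = path[-1]
    return [x - (k / n) * b1 for k, x in enumerate(path)]


def pin_at(bridge, b):
    """Path of B conditioned on B(1) = b, represented as bridge(t) + t*b."""
    n = len(bridge) - 1
    return [x + (k / n) * b for k, x in enumerate(bridge)]


def sample_covariance(xs, ys):
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def covariances_with_endpoint(n_paths=4000, n_steps=20, seed=1):
    """Empirical Cov(bridge(1/2), B_1) and Cov(B_{1/2}, B_1)."""
    rng = random.Random(seed)
    mid = n_steps // 2
    bridge_mid, bm_mid, end = [], [], []
    for _ in range(n_paths):
        path = brownian_path(n_steps, rng)
        bridge_mid.append(bridge_from_path(path)[mid])
        bm_mid.append(path[mid])
        end.append(path[-1])
    return sample_covariance(bridge_mid, end), sample_covariance(bm_mid, end)
```

Calling `covariances_with_endpoint()` returns the two estimates; the first should be near 0 and the second near 0.5, up to sampling noise. `pin_at(bridge, b)` shows the representation of $B$ conditioned on $B_1 = b$: its last value is $b$ by construction.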


Properties of the bridge $\hat{B}$, where $\hat{B}(t) := B_t - tB_1$:

(1) Continuous paths.

(2) $\sum_i \alpha_i \hat{B}(t_i) = \sum_i \alpha_i (B(t_i) - t_i B(1)) = \sum_i \alpha_i B(t_i) - \big(\sum_i \alpha_i t_i\big) B(1)$ = some linear combination of values of $B(\cdot)$ $\implies$ $\hat{B}$ is a Gaussian process. As such it is determined by its mean and covariance functions: $E(\hat{B}(t)) = 0$. For $0 \le s < t \le 1$,

$$E(\hat{B}(s)\hat{B}(t)) = E[(B_s - sB_1)(B_t - tB_1)] = E(B_s B_t) - sE(B_t B_1) - tE(B_s B_1) + stE(B_1^2) = s - st - st + st = s(1 - t)$$

Note the symmetry between $s$ and $1 - t$ $\implies$ $(\hat{B}(1 - t), 0 \le t \le 1) \overset{d}{=} (\hat{B}(t), 0 \le t \le 1)$.

(3) $\hat{B}$ is an inhomogeneous Markov process. (Exercise: describe its transition rules, i.e. the conditional distribution of $\hat{B}_t$ given $\hat{B}_s$ for $0 < s < t$.)
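The covariance formula $s(1 - t)$ and the time-reversal symmetry can be checked by sampling. This is a minimal sketch: the pair of bridge values is drawn exactly from three independent Brownian increments, so no time grid is involved. The function names, sample size and seed are illustrative assumptions.

```python
import math
import random


def bridge_covariance(s, t):
    """Covariance of the bridge at times s and t: min(s,t) * (1 - max(s,t))."""
    lo, hi = min(s, t), max(s, t)
    return lo * (1.0 - hi)


def sample_bridge_pair(s, t, rng):
    """Exact draw of (bridge(s), bridge(t)) for 0 < s < t < 1 via independent BM increments."""
    b_s = rng.gauss(0.0, math.sqrt(s))
    b_t = b_s + rng.gauss(0.0, math.sqrt(t - s))
    b_1 = b_t + rng.gauss(0.0, math.sqrt(1.0 - t))
    return b_s - s * b_1, b_t - t * b_1


def estimate_bridge_covariance(s, t, n=20000, seed=0):
    """Monte Carlo estimate of E[bridge(s) * bridge(t)]."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x, y = sample_bridge_pair(s, t, rng)
        total += x * y  # both coordinates have mean 0, so this averages to the covariance
    return total / n
```

With $s = 0.2$, $t = 0.5$ the formula gives $0.2 \times 0.5 = 0.1$, and the time-reversed pair $(1 - t, 1 - s) = (0.5, 0.8)$ gives $0.5 \times 0.2 = 0.1$ as well; `estimate_bridge_covariance(0.2, 0.5)` and `estimate_bridge_covariance(0.5, 0.8)` should both land near that value.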

Application to statistics

Idea: How to test if variables $X_1, X_2, \ldots$ are like an independent sample from some continuous cumulative distribution function $F$?

Empirical distribution: $F_n(x)$ := fraction of $X_i$, $1 \le i \le n$, with values $\le x$. $F_n(x)$ is a random function of $x$ which increases by $1/n$ at each data point. If the $X_i$'s are IID($F$), then $F_n(x) \to F(x)$ as $n \to \infty$ by the LLN. In fact, $\sup_x |F_n(x) - F(x)| \to 0$ (Glivenko-Cantelli theorem). Now $\mathrm{Var}(F_n(x)) = F(x)(1 - F(x))/n$. So consider $D_n := \sqrt{n} \sup_x |F_n(x) - F(x)|$ as a test statistic for data distributed as $F$.

Key observation: Let $U_i := F(X_i) \sim U[0,1]$. The distribution of $D_n$ does not depend on $F$ at all. To compute it, may as well assume that $F(x) = x$, $0 \le x \le 1$; that is, sampling from $U[0,1]$. Then

$$D_n := \sqrt{n} \sup_{0 \le u \le 1} |F_n(u) - u|, \qquad F_n(u) = \frac{1}{n} \sum_{i=1}^{n} 1(U_i \le u)$$
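To make the statistic concrete, here is a small sketch of how $D_n$ can be computed from data. It relies on the fact that $F_n$ is a step function, so the supremum is attained just before or just after one of the data points. The function name and the exponential example are illustrative assumptions, not part of the lecture.

```python
import math
import random


def scaled_ks_statistic(sample, cdf):
    """D_n = sqrt(n) * sup_x |F_n(x) - F(x)| for a continuous CDF F."""
    n = len(sample)
    # F is non-decreasing, so sorting F(X_i) matches sorting the X_i.
    values = sorted(cdf(x) for x in sample)
    gap = 0.0
    for i, u in enumerate(values, start=1):
        # F_n jumps from (i-1)/n to i/n at the i-th smallest data point.
        gap = max(gap, i / n - u, u - (i - 1) / n)
    return math.sqrt(n) * gap


if __name__ == "__main__":
    rng = random.Random(0)
    us = [rng.random() for _ in range(500)]
    # Exponential(1) sample built from the same uniforms: X = -log(1 - U).
    xs = [-math.log1p(-u) for u in us]
    print(scaled_ks_statistic(us, lambda u: u))
    print(scaled_ks_statistic(xs, lambda x: -math.expm1(-x)))
```

The two printed values should coincide up to rounding, which illustrates the key observation: transforming the data by $F$ reduces everything to the uniform case.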


Idea: Look at the process $X_n(t) := \sqrt{n}(F_n(t) - t)$, $0 \le t \le 1$. You can easily check for $0 \le s \le t \le 1$:

$$E(X_n(t)) = 0, \qquad \mathrm{Var}(X_n(t)) = t(1 - t), \qquad E[X_n(s)X_n(t)] = s(1 - t)$$

These match the mean and covariance of the Brownian bridge $\hat{B}(t) := B_t - tB_1$. We see $(X_n(t), 0 \le t \le 1) \overset{d}{\to} (\hat{B}(t), 0 \le t \le 1)$ in the sense of convergence in distribution of all finite-dimensional distributions. In fact, with care, using linear combinations,

$$(X_n(t_1), \ldots, X_n(t_k)) \overset{d}{\to} (\hat{B}(t_1), \ldots, \hat{B}(t_k))$$

where the left side is centered, scaled and discrete, and the right side is Gaussian.

Easy: For any choice of $\alpha_i$ and $t_i$, $1 \le i \le m$, $\sum_{i=1}^{m} \alpha_i X_n(t_i)$ = centered sum of $n$ IID random variables $\overset{d}{\to}$ Normal by the usual CLT.

And it can be shown that also:

$$D_n := \sup_t |X_n(t)| \overset{d}{\to} \sup_t |\hat{B}(t)|$$

The formula for the distribution function is a theta function series which has been widely tabulated. The convergence in distribution to an explicit limit distribution is due to Kolmogorov and Smirnov in the 1930s. Identification of the limit in terms of Brownian bridge was made by Doob in the 1940s. Rigorous derivation of a family of such limit results for various functionals of random walks converging to Brownian motion is due to Donsker in the 1950s (Donsker's theorem).

Theorem (Dvoretzky-Erdős-Kakutani): With probability 1 the path of a BM is nowhere differentiable.
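Returning to the limit law of $D_n$: the lecture does not write out the theta function series, but the standard form of the Kolmogorov distribution is $P(\sup_t |\hat{B}(t)| \le x) = 1 - 2\sum_{k \ge 1} (-1)^{k-1} e^{-2k^2x^2}$. The sketch below, under that assumption, evaluates the truncated series and compares it with a simulated distribution of $D_n$ for uniform samples. Function names, the truncation at 100 terms, and the sample sizes are illustrative choices.

```python
import math
import random


def kolmogorov_cdf(x, terms=100):
    """Truncated series 1 - 2 * sum_{k>=1} (-1)^(k-1) exp(-2 k^2 x^2).

    The truncation is adequate for x of moderate size (say x >= 0.2);
    very small x would need more terms.
    """
    if x <= 0.0:
        return 0.0
    total = 0.0
    for k in range(1, terms + 1):
        total += (-1) ** (k - 1) * math.exp(-2.0 * k * k * x * x)
    return max(0.0, 1.0 - 2.0 * total)


def simulate_dn(n, rng):
    """One draw of D_n = sqrt(n) * sup_u |F_n(u) - u| for n IID U[0,1] variables."""
    us = sorted(rng.random() for _ in range(n))
    gap = max(max(i / n - u, u - (i - 1) / n) for i, u in enumerate(us, start=1))
    return math.sqrt(n) * gap


def empirical_cdf_of_dn(x, n=200, reps=2000, seed=0):
    """Fraction of simulated D_n values that are <= x."""
    rng = random.Random(seed)
    return sum(simulate_dn(n, rng) <= x for _ in range(reps)) / reps


if __name__ == "__main__":
    for x in (0.5, 1.0, 1.36):
        print(x, kolmogorov_cdf(x), empirical_cdf_of_dn(x))
```

For finite $n$ the simulated values only approximate the limit, so the two columns should be close but not identical. The value near $x = 1.36$ is the familiar 95% point used in the Kolmogorov-Smirnov test.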
