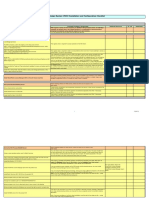

Transitioning Your Centralized EDW to a Hub-and-Spoke Architecture

By Tom Coffing

2008 Coffing Data Warehouse.

Tom Coffing is one of the leading experts on both Teradata and DATAllegro. Tom has written over 20 books on data warehousing, Teradata, and DATAllegro. Tom has taught over 1,000 Teradata classes and is considered one of the best technical speakers and writers in the industry. Tom founded Coffing Data Warehousing 15 years ago and is its Chief Executive Officer (CEO) and President. Coffing Data Warehousing performs training, consulting, and professional services, and has written almost 90 percent of the books on Teradata. Many people know Tom Coffing as Tera-Tom.

Tom Coffing's teams of developers are the producers of the Nexus, which is considered the Rosetta Stone of data warehouse software. Not only is it considered the best query tool, but it has been designed to work with Teradata, IBM, Oracle, SQL Server, DATAllegro, Netezza, and Greenplum systems. The Nexus is also a DBA dream tool because it has point-and-click capabilities for compression, replicating data and DDL between different systems, comparing systems at the database, table, and data level, and synchronizing the results. The Nexus is also used to build load scripts and to schedule queries and batch jobs. The Nexus has been tuned to make Teradata and DATAllegro co-existence a breeze.

Tom became a DATAllegro partner over a year ago. Please feel free to contact Tom at Tom.Coffing@CoffingDW.com. To download a free trial of the Nexus, visit the Coffing Data Warehousing website at www.CoffingDW.com.

All glory comes from daring to begin.

Anonymous

Seeking data warehouse wisdom, I climbed the mountain in search of a Zen Buddhist. After days of climbing I finally came across him. He was deep in meditation, but I could sense he knew I was there. I gently asked him, "If you were to buy a data warehouse, what features would you request?" He spoke softly and said, "Make me one with everything!"

This white paper will talk about Teradata, its data warehouse strengths and weaknesses, and how moving toward a distributed data warehouse model can bring you everything.

How did I get involved? The climb up the data warehouse mountain began 23 years ago, and the view keeps getting clearer. In 1985, I was hired by NCR Corporation as an entry-level programmer. My boss sat me down and explained his philosophy on COBOL programming. He asked me if I remembered singing songs that were on TV, and how everyone could sing along by following the bouncing ball. I said, "Yes, sir." He stated that computers worked by executing one line of code at a time, just like following the bouncing ball. Anyone who knows my writing understands how much I love simple analogies and examples.

I thought about the bouncing ball concept all night. Then I had a vision. What if we could get two bouncing balls to execute code simultaneously and synchronize their actions? We could then get 8, 16, 32, 64, 128, 256 and an unlimited number of bouncing balls executing code simultaneously. This could be the future of all computing. I put my concept into pictures and showed my boss. He laughed and told me I had no idea how computers worked and to go back to my cube.

In 1992, NCR Corporation bought a company called Teradata, which specialized in a newly invented technology called parallel processing. When I saw the first overview I almost fell out of my seat. It took all my restraint not to leap up and yell, "I KNEW IT COULD WORK." The person sitting next to me whispered: "Tom, do you think this Teradata system could ever really work?" I stated, "I know it will work. I've already seen it, and this will be the future of all computing!" Tera-Tom was born that day!

From that day on, I dedicated my career to parallel processing. I dedicated so much time to teaching Teradata technology that people began to call me Tera-Tom. I have written over 20 books on all aspects of Teradata, trained tens of thousands of students, and performed countless hours of consulting and professional services for the largest and most sophisticated data warehouses in the world. My greatest feat is my team's production of our Nexus software, which is considered the Rosetta Stone of data warehousing. The Nexus can simultaneously query every major data warehouse player and allows companies to take a distributed approach to data warehousing.

After working with an average of one data warehousing customer each week for 15 straight years, I hope my experience and knowledge will help you build a better data warehouse. How lucky I have been to work with customers in an industry I love.

Teradata - The Vision of Parallel Processing

Following the light of the sun, we left the Old World.

Christopher Columbus

When Christopher Columbus first discovered America, he was hard pressed to answer even the simplest questions. When he left Spain he didn't know where he was going. When he got there he didn't know where he was; and when he returned to Spain, he didn't know where he'd been. Try explaining that to your boss. It definitely makes hopes of future funding sail down the drain, but a great discovery was made in the process. As the author of The Lord of the Rings wrote:

Not all who wander are lost.

J. R. R. Tolkien

Teradata's founders, following the light of the sun, worked right in their garage and founded a new computer world. In 1976, they set their compass on two goals. Teradata would be the first company to build a database that could support a terabyte, and it would utilize parallel processing. The company's target market was the mainframe. Mainframes provided a centralized repository for running a company's business. Mainframes were transactional systems. Analyzing the data for business intelligence (BI) was unheard of, and Teradata's founders felt they could produce a product that was faster and would cost a great deal less than the mainframe.

The Teradata concept was to take Intel chips (8080 chips) and string them together. Each processor would have total control of and responsibility for a disk. The rows of every table would be spread evenly among the disks, and then data could be retrieved in parallel. In the early days, mainframe vendors laughed at Teradata. How could a bunch of 8080 chips ever compete with a mainframe? Naysayers stated it was like a farmer trying to plow a field with a thousand chickens! Well, Teradata got those chickens to plow the field, and a new technology was born.

When Teradata landed the data on the disks and rowed the data ashore, it discovered BI. We called it decision support systems (DSS) back then.

Sequential Processing

Life is a succession of lessons, which must be lived to be understood.

Ralph Waldo Emerson

Before Teradata's introduction of parallel processing, computers followed the bouncing ball and processed data sequentially. The picture below shows a table with four rows. The ball bounces four times and all four records are read. It takes only the blink of an eye to read four rows, but imagine reading millions, billions or even trillions of rows. Teradata learned from the lessons of sequential processing and built a better design.

Parallel Processing - Teradata's Greatest Contribution

Fall seven times, stand up eight.

Japanese Proverb

Teradata had some initial problems in the 1980s but kept standing and improving its system. The basic design of Teradata in 1980 (and today) takes the rows of a table and spreads them across many disks. An access module processor (AMP) is responsible for reading and writing to its disk. An AMP is a worker bee. The parsing engine (PE) takes SQL from the user and comes up with a plan for the AMPs to retrieve the data. The PE is the boss and commands the AMPs by calling out steps to do the work. Could you have a Teradata system without AMPs? No, who would do the work? Could you have a system without the parsing engine? Of course not. Could you get along without your boss?
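
The division of labor described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Teradata's implementation: the four-AMP system size, the CRC32 hash, the function names and the sample rows are all assumptions made for the example, and Python threads stand in for independent processors.

```python
from concurrent.futures import ThreadPoolExecutor
import zlib

NUM_AMPS = 4  # hypothetical system size


def amp_for(key: str, num_amps: int = NUM_AMPS) -> int:
    """Pick the AMP that owns a row by hashing its key (illustrative hash only)."""
    return zlib.crc32(key.encode("utf-8")) % num_amps


def distribute(rows, key_field, num_amps=NUM_AMPS):
    """Spread the rows of a table across per-AMP 'disks' (plain lists here)."""
    disks = [[] for _ in range(num_amps)]
    for row in rows:
        disks[amp_for(str(row[key_field]), num_amps)].append(row)
    return disks


def amp_scan(disk, predicate):
    """The work of one AMP: read only its own disk."""
    return [row for row in disk if predicate(row)]


def parsing_engine(disks, predicate):
    """The 'boss': give every AMP the same step, then merge the partial answers."""
    with ThreadPoolExecutor(max_workers=len(disks)) as pool:
        partials = list(pool.map(lambda disk: amp_scan(disk, predicate), disks))
    return [row for part in partials for row in part]


# Made-up sample table of 12 employee rows.
rows = [{"emp_id": i, "dept": i % 3} for i in range(12)]
disks = distribute(rows, "emp_id")
hits = parsing_engine(disks, lambda r: r["dept"] == 1)
```

Each AMP touches only the rows on its own disk, so adding AMPs shrinks the share of the table each one has to read.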

Teradata Strengths

He who asks a question may be a fool for five minutes, but he who never asks a question remains a fool forever.

Unknown

Teradata gives customers the ability to ask any question on any data at any time. The strengths of its system include:

Parallel Processing - Teradata designed its system to load data in parallel, query data in parallel and back up data in parallel. This provided a great deal of power and performance.

Linear Scalability - Most Teradata customers made relatively small first purchases, but these customers were able to grow their systems linearly and indefinitely. This applies to data, users, and applications.

Load Utilities - Teradata produced FastLoad, MultiLoad, and FastExport to move data on and off the Teradata system in blocks, thus providing extremely fast load speeds.

Experienced Optimizer - Teradata continually enhanced its Optimizer to come up with the fastest plan for accessing the data.

Mixed Workload of Queries - Teradata has done a great job of building a system that can handle long, intensive queries as well as short, sub-second queries.

Active Data Warehousing - Teradata has done a nice job of building Active Data Warehouses. These are warehouses that can support BI and take a company's transactions and immediately place them in the warehouse for analysis.

Workload Management - Teradata has done a solid job of managing query workloads through software and business rules. Lower-priority queries are delayed, placed in a queue, or saved for batch processing so that they do not overburden the system.

Why Teradata Made Sense Then

Those who cannot remember the past are condemned to repeat it.

George Santayana, poet and philosopher

A man was driving on the highway when he got a call from his wife. She said, "Be careful, Bill. The news just reported some idiot driving the wrong way on the highway." He said, "It is worse than that. It isn't just one car. There are hundreds of them!"

The data warehouse direction five years ago was much different than it is today, but some customers continue to head the wrong way down the data highway, and an expensive traffic jam is occurring. Teradata's creators entered data warehousing because they thought they could build systems that were 10 times faster and cheaper than the mainframe. Now, Teradata systems are known for their enormous expense. The hardware and software are extremely expensive, but the prices charged for education, professional services and consulting have made customers rethink their enterprise data warehouse (EDW) strategy. It is funny how history repeats itself. Those who cannot remember the past are condemned to repeat payments!

Teradata systems made sense 10 years ago. The hardware was expensive to produce, but without serious competition, it was the freeway of choice. Plus, parallel processing was considered a nearly impossible feat. A scalable centralized data warehouse provided a company with one version of the truth, and the price for the competitive edge was worth it.

Here is Teradata's dilemma. All data warehouse vendors today use parallel processing! Each vendor now uses commodity hardware, and each can linearly scale its systems to petabytes of data. Each vendor handles a mixed workload, and each has developed effective and speedy load strategies. It is like parity in the National Football League. Last year's champs might not make the playoffs the following year.

Today's data warehouse is more complicated than ever before. Geographic locations in different time zones need to access the warehouse at their peak times. Ad hoc queries make tuning difficult, and enormous pressure is placed on the warehouse to produce thousands of reports. Couple this with the fact that some departments need to access data that is three months old while others need to access data that is three years old. Also understand that a mixed variety of logical models is now used within a data warehouse. All of these queries are competing for CPU, memory and disk. It just doesn't make logistical sense to try to force it all onto one centralized platform.

Disadvantage No. 1: Inflexible Parallel Processing

Live by the sword, die by the sword.

The above quote is a metaphorical expression meaning that living one's life in a certain way will, in the end, affect one's destiny. Teradata was born to be parallel, and one day it could be dead to be parallel. This is because Teradata has inflexible parallelism. Teradata only works with one design philosophy: spread the rows of each table as evenly as possible across the entire system. Then, the entire system works in parallel to process the queries. Every query is processed in the most expensive manner. Queries that don't necessarily need to be turned around quickly are processed just as expensively as queries that are a rush order.

Every user who runs a query on the system is going to a single source to get the request processed. What if the system is down? How about busy? What if a department wants to implement a new data mart? How easy is change? How easy is it to grow the system? Can some data be accessed at a lower cost, or is every query processed at great expense?

Teradata believes that every bit of processing should be done by one centralized system. Is there only one watering hole for all of the animals in Africa? Why don't we have only one giant airport for every traveler in the United States? So why would a large company want only one EDW where every query is processed by one giant engine? Most companies are changing their philosophy.

Disadvantage No. 2: The Cost

Paying top prices for every query won't kill you. But why take the chance?

Tera-Tom Coffing

All people are created equal, but all data is not! I trained a major Fortune 35 insurance company for years on Teradata. They've been one of Teradata's biggest supporters. I was surprised when they told me they had decided to do a proof-of-concept on the appliance vendors. I have great respect for their data warehouse director. He stated, "To me, Teradata is the corporate jet of data warehousing, but you don't have to take the corporate jet everywhere you go."

Because Teradata was born to be parallel, every request for data has to take the corporate jet. Teradata is the most expensive data warehouse solution in the world. Its architecture requires the entire system to work as a single expensive entity. Every query, data load, batch window process, or touch of a Teradata warehouse has an effect on the entire system.

Think about it. Imagine if everywhere you traveled you took a corporate jet. It would be fantastic for international travel and long trips, but what about when traveling short distances? Do you really want to take the jet for a 10-minute drive downtown or even across the state? Appliances have the speed of a corporate jet but the price of a taxi ride.

A distributed system that separates certain data allows that particular data to be treated in the best and most effective manner. A data warehouse environment that allows for multiple travel modes and budgetary options is the data warehouse of the future. Today's data needs to travel by corporate jet, car, bus, truck, train, bike, motorcycle and tricycle. Newer vendors are able to produce corporate jet speed but have designs that allow for more options and cheaper solutions.

Disadvantage No. 3: Load Utilities - Incredible Feat or Achilles' Heel?

"Time is the coin of your life. It is the only coin you have, and only you can determine how it will be spent. Be careful lest you let other people spend it for you."

Carl Sandburg, poet

The quote above is beautiful and should strike a chord with every human being. Let me convert this beautiful quote into language a data warehouse can understand.

"Night time is the coin of your life. It is the best time to load data. Screw this up and the business will no longer make cents!"

Mia Batchwindows To-Longfellow

Large companies with a centralized EDW are beginning to have problems meeting their batch window. Again, the problem is that Teradata systems are an all-or-nothing proposition. The great news is that Teradata has had 20 years to build its load utilities. The company's three fastest utilities move data at the block level with great speed. It has FastLoad to insert data into empty tables, MultiLoad to perform inserts, updates, and deletes on populated tables, and FastExport to move data off Teradata.

Here is the problem. Teradata only allows up to 15 loads to be run at one time, and since these loads are so resource intensive, many companies set their maximum at five! With this limitation, it is only a matter of time (and growth) until the batch window can't be met. Did someone really decide to dedicate their batch window to one enterprise data warehouse? It comes down to simple math. As a cowboy in the Wild West once said: "Never insult seven men when all you're packing is a six gun!" Do the math.
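
Doing the math can look like the rough sketch below. The caps of 15 and 5 concurrent loads come from the text; the job counts, the 30-minute job length and the 6-hour window are made-up numbers. The model is deliberately crude: it assumes equal-length jobs that run in full waves.

```python
import math


def batch_window_hours(num_jobs, avg_job_hours, max_concurrent):
    """Estimate elapsed hours when equal-length jobs run in waves of max_concurrent."""
    waves = math.ceil(num_jobs / max_concurrent)
    return waves * avg_job_hours


def fits_window(num_jobs, avg_job_hours, max_concurrent, window_hours):
    """True if the estimated elapsed time fits inside the nightly window."""
    return batch_window_hours(num_jobs, avg_job_hours, max_concurrent) <= window_hours


# Hypothetical workload: 40 load jobs of 30 minutes each, 6-hour nightly window.
at_cap_15 = batch_window_hours(40, 0.5, 15)  # 3 waves -> 1.5 hours
at_cap_5 = batch_window_hours(40, 0.5, 5)    # 8 waves -> 4.0 hours

# The same site after growth to 70 jobs, still capped at 5 concurrent loads.
grown = batch_window_hours(70, 0.5, 5)       # 14 waves -> 7.0 hours
still_fits = fits_window(70, 0.5, 5, 6)      # False: the window is missed
```

With the cap held at five, growth in the number of jobs translates directly into more waves, which is why the window is eventually missed.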

Disadvantage No. 4: Teradata - The Modern Mainframe

Once the game is over, the king and the pawn go back in the same box.

Italian Proverb

Once the Teradata data warehouse game is on, the users query the detail data, data marts and older data on the same box. The data pawns get the same treatment as the king and queen. It is the exact same expense to query older data as newer data. Even data that doesn't have to be processed urgently is just as expensive as the most urgent of queries. And as they say in Australia, implementing new applications and data to the warehouse requires a big check, mate.

Teradata is conceptually much like a mainframe because it is expensive to purchase, upgrade and maintain. It also provides slow response to new data marts, and system performance is greatly affected as new data and users are added. It no longer makes financial or logistical sense to make every user query one single centralized system. User needs are too diverse and companies are too large and complicated for one central and expensive solution.

How are you going to satisfy such a wide variety of users? It is a lot of pressure to place on a system to handle all standard reports, batch loading mixed with near-real-time loads, tactical queries next to long detail data queries, CRM, ad hoc queries and data marts driving BI analytics. Add to the pressure that most companies are global and that each region's peak times for queries or loading conflict with the others.

Disadvantage No. 5: Trusting a Monopoly

The best way to predict the future is to create it.

Sophia Bedford-Pierce

Trusting one company for your data warehouse's hardware, software, maintenance, consulting, education and tools is like trusting OPEC to drop oil prices. The best way to predict the future is to create it. So do it. Integrating Teradata with an appliance system is the best strategy because:

(1) True competition will drop prices dramatically, and having companies compete can drop your hardware and software costs dramatically. Also, spending less on education, professional services and consulting can rid companies of enormous hidden costs.

(2) The year 2007 has produced the biggest rivalries ever in data warehousing. Companies are not going after Teradata because it is No. 1, but because it is vulnerable. The data warehouse vendor that improves its performance and drops its price will be the asset you need on your side. Many companies today are purchasing systems from the major appliance vendors and utilizing them where they make the most sense.

Many of the young car drivers of today don't remember the good old days when gas stations charged 50 cents a gallon. There were even times when rival gas stations competed against each other. To earn a customer's business, the rival stations continued to drop their prices, sometimes reaching 20 cents a gallon. These gasoline wars became a consumer's dream come true. Gas prices have continued to rise since OPEC formed an alliance.

As you fill up your tank and the price tag approaches fifty dollars, don't you just wish someone would come up with an alternative fuel? How great would it be to see oil prices suddenly drop? What if the new alternative fuel was less expensive and showed potential for much better mileage? The monopoly would be over, prices would drop, and everyone could take advantage of the solution best suited for them.

There is a reason monopolies are illegal. They produce inflated prices for consumers and eliminate natural progression. Are you really using one vendor for all your data warehouse needs? We currently have little to no choice but to place our faith in OPEC, and most companies have done the same with their EDW vendor. The time to break the OPEC monopoly will come in the future, but the time to break the data warehouse monopoly is now.

Trust me when I tell you that performing a proof-of-concept on vendors in today's marketplace will prove to you that speed, performance and sophistication aren't sacrificed, whereas the cost-saving benefits are mind-boggling. Other companies are breaking the monopoly now because it is a data warehouse buyer's market.

DATAllegro - A Distributed Processing Architecture

If I have seen farther than others, it is because I was standing on the shoulders of giants

Isaac Newton

Stuart Frost, CEO and founder of DATAllegro, drew on his years of experience to build DATAllegro. This architecture provides power through parallel processing, but also provides a hub-and-spoke architecture that is flexible and cost-effective. The load capabilities are outstanding, the ability to handle a mixed workload is excellent, and the cost is extremely low.

Architectural Difference Between Teradata and DATAllegro

Two roads diverged in a wood and I took the one less traveled by, and that has made all the difference.

Robert Frost

A Teradata system processes data in parallel, but the system always spreads the data across all disks and acts as one big entity. Teradata designed its systems back in the 1980s and is constrained by the original design. Moving from a centralized solution to a distributed data warehouse will be the best road you can take. Giving a user the ability to query a road less traveled will make a huge difference in speed and performance.

Architectural Difference Between Teradata and DATAllegro

I saw the angel in the marble and carved until I set him free.

Michelangelo

DATAllegro saw the users in constraints and designed a warehouse that set them free. A DATAllegro system processes data in parallel, but the genius behind the design is the hub-and-spoke architecture. Dell servers can be grouped together to form a grid. Different grids are tied together, but act as separate systems. This has improved data loads, reduced contention and made it possible to implement new data marts quickly.

A distributed environment allows you to separate newer data from older data and to place data where it makes sense for different geographic time zones. You can free up resources on your Teradata system by allowing departments with different service level agreements (SLAs) to process on different logical platforms. The ability to provide different physical locations for sensitive, security-risk data is a huge benefit. Handling mixed workloads such as tactical queries, BI reporting tools, ad hoc queries, geographical time zones, batch windows, continuous data loads and complex queries is not practical on a single platform.

Multi-Temperature Data Warehousing

An invasion of armies can be resisted, but not an idea whose time has come.

Victor Hugo

Not paying an army and a leggy for a data warehouse is an idea whose time has come. Utilizing Dell servers, Cisco routers, EMC disks, and a proven open-source database in Ingres, DATAllegro has brought world-class commodity hardware and software together, and the invasion has begun. DATAllegro utilizes Ingres, one of the first relational database systems, which originated in the 1970s. Built by legend Michael Stonebraker, Ingres has been enhanced by DATAllegro with an added software layer that creates an MPP architecture to parallelize queries across processors and to manage workloads.

DATAllegro is extremely fast because of its parallel processing, but DATAllegro also has the ability to process data at different costs. Most companies keep data for years, but the latest three months of data is usually accessed much more heavily than the older data. It makes sense to process the most recent three months of data on your fastest system, but data that is rarely accessed should be processed at a much lower cost. Multi-temperature data warehousing allows you to process certain data at higher speeds and costs, and less active data at slower speeds and lower costs.
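
The three-month rule of thumb above can be sketched as a simple placement rule. This is an illustration only: the "hot" and "cold" tier names, the 90-day cutoff used to approximate three months, and the sample rows are assumptions for the example, not DATAllegro settings.

```python
from datetime import date

HOT_DAYS = 90  # "latest three months" from the text, approximated as 90 days


def temperature(row_date: date, today: date, hot_days: int = HOT_DAYS) -> str:
    """Classify a row as 'hot' (recent, fast tier) or 'cold' (older, cheaper tier)."""
    age_days = (today - row_date).days
    return "hot" if age_days <= hot_days else "cold"


def assign_tiers(rows, today):
    """Group rows by the tier they would be placed on."""
    tiers = {"hot": [], "cold": []}
    for row in rows:
        tiers[temperature(row["sale_date"], today)].append(row)
    return tiers


# Made-up sample rows.
today = date(2008, 6, 30)
rows = [
    {"id": 1, "sale_date": date(2008, 6, 1)},
    {"id": 2, "sale_date": date(2008, 4, 15)},
    {"id": 3, "sale_date": date(2007, 1, 10)},
    {"id": 4, "sale_date": date(2005, 3, 3)},
]
tiers = assign_tiers(rows, today)
```

In practice the cutoff would come from observed access patterns rather than a fixed constant.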

DATAllegro - The Best of Both Worlds

A man who views the world at 50 the same as he did at 20 has wasted 30 years of his life.

Muhammad Ali

Teradata, a data warehouse pioneer, helped pave the way for how systems are built today. But Teradata was designed 30 years ago, and business intelligence has evolved. Did you know that 80 percent of most data warehouse queries can be satisfied by a data mart? The advantage of an appliance is that it is easy to install and has a rapid time-to-productivity. DATAllegro systems have been designed to handle detail data in their hub and hold other data in a spoke. This is the best of both worlds.

DATAllegro systems are true hub-and-spoke distributed systems. This design is the data warehouse implementation of the future. Instead of having all data on a centralized system, why not divide data marts into different spokes? You can have data for one region, time zone or even continent in spokes that make sense. You might take all product data and place it separately from data that doesn't have a relation to it. This can all be done without duplicating data, and it gives companies a great many more options than "throw it on the EDW."
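
One way to picture the hub-and-spoke split is as a routing decision: a query that needs only the tables held by one spoke goes to that spoke, and anything else goes to the hub. The sketch below is a hypothetical model, not DATAllegro's routing logic. The `Grid` class, the table names and the fall-back-to-hub rule are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Grid:
    """One grid of servers: the hub or a single spoke (hypothetical model)."""
    name: str
    tables: set = field(default_factory=set)


def route(tables_needed, hub, spokes):
    """Return the name of the first spoke holding every needed table, else the hub."""
    needed = set(tables_needed)
    for spoke in spokes:
        if needed <= spoke.tables:
            return spoke.name
    return hub.name


# Made-up layout: detail data on the hub, one regional mart, one product mart.
hub = Grid("hub", {"sales_detail"})
spokes = [
    Grid("emea_mart", {"sales_emea"}),
    Grid("product_mart", {"product", "product_category"}),
]

regional = route(["sales_emea"], hub, spokes)                 # "emea_mart"
product = route(["product", "product_category"], hub, spokes)  # "product_mart"
mixed = route(["sales_emea", "product"], hub, spokes)         # "hub"
```

No table appears in more than one grid in this layout, which mirrors the point that the split need not duplicate data.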

Combining your Teradata System with DATAllegro

First you imitate, and then you innovate.

Miles Davis, Jazz Musician

There is a new trend in Teradata data warehousing. Many companies are combining their current Teradata system with an appliance. This gives them flexibility in adding data marts, meeting their batch window and providing enhanced performance. All of this while dramatically reducing costs. Being able to control the costs and decide at which cost different data should be processed is a big key to competitive advantage. As customers become familiar with a distributed system, they realize serious advantages while opening up financial options through competition. Five years ago you did not see the long-time Teradata customer utilizing other systems. Today, the staunchest, most loyal and most successful Teradata customers have begun implementing appliances to create a distributed environment. The reasons are to drop costs, relieve pressure on their current system and to take advantage of the strengths the new vendors provide.

Three Reasons to Consider DATAllegro Immediately

They can conquer who believe they can.

Virgil, 70 BC

Building an incredible data warehouse environment is something that you can believe in and can do at minimal risk. Take a divide-and-conquer approach.

Look for areas that can provide high visibility to management by having an impact on the business. Is there a department or business unit that has a budget but cannot be serviced by IT? Identify the requirements for a set of work, and in less than 12 weeks users can be ready for business.

Consider migrating historical data. Why pay high prices for data you rarely access?

Also, take a strong look at intensive batch processes, such as aggregations and data marts. Moving them will alleviate the stress on your current system and allow users to retrieve important answers quickly.

Understanding what data should still be processed on Teradata and what data should be processed on DATAllegro will allow an organization to continually improve its processes and time to market. The beauty of a DATAllegro system is that it can act as a small appliance, but it is also designed to compete directly with the largest traditional data warehouse players.

Converting From Teradata to DATAllegro

Every sunrise is a second chance.

Unknown

Converting the table structures (DDL) and migrating data from Teradata to DATAllegro is a second chance to improve your data warehouse. The DATAllegro utilities are designed to allow companies to easily move DDL and data to and from Teradata. Companies can use DATAllegro and its utilities alongside their existing Teradata data warehouses in order to add capacity and/or increase performance at a very low cost. The utilities are included with implementation packages supplied by DATAllegro, as well as by a variety of DATAllegro partners, and are already being used at a number of DATAllegro customer sites. The DATAllegro utilities are moving data from Teradata to DATAllegro at amazing speeds.

Nexus BI Tool - Rosetta Stone of Data Warehousing

Only he who attempts the ridiculous may achieve the impossible.

Don Quixote

Until Nexus, nobody had built a BI tool that can simultaneously query Teradata, Oracle, DB2, DATAllegro, and every other appliance. Nexus is called the Rosetta Stone of data warehousing software because it allows every user to query any and all database vendors and view the results from one brilliant application. DATAllegro bundles Nexus licenses for every customer that buys a DATAllegro system. Please feel free to download a free Nexus trial from our website at www.CoffingDW.com.

Perform a Proof-of-Concept (POC)

I hear and I forget. I see and I remember. I do and I understand.

Confucius

Would you ever buy a car without a test drive? How about attending a college you never visited? Why would you buy a system you haven't tested? Being involved with a proof-of-concept (POC) is an enlightening experience. If you follow the rules below, then your POC will be a piece-of-cake (POC). There are two major approaches to conducting a POC. I have seen them both work well.

1. Onsite POC - This entails having a system sent to your site. This is the preferred method because you can involve the user community, utilize your own data completely, and allow users to participate and gain experience with the new system. The POC can then accurately represent the potential production environment.

2. Offsite POC - This entails shipping 1 TB of cleansed data to a vendor. They can then blow up the data to 2 TB and then 10 TB. You can send a couple of your own trusted employees to run the POC at the vendor's site. You should send anywhere from 25 to 100 tables of varying size to the vendor a few days before the POC starts. The big advantage of an offsite POC is that it doesn't interrupt your current working environment.

Some good advice about running the POC:

- Don't allow every single vendor on the planet to participate. Select potential vendors and then:
  - Have the vendor present to your POC committee.
  - Make the vendor provide a real-world customer with whom you can have a conversation.
  - Have an expert give you the scoop on each potential vendor.
- Use a query tool such as the Nexus to run the SQL against each vendor you evaluate. This tool was designed to work with every vendor and will allow for apples-to-apples comparisons in areas such as timing.
- Sign the appropriate paperwork to secure your data with each participating vendor, and make sure the data is cleansed before the POC is run and destroyed after it.

- Use as much real data from your operational systems as makes sense.
- Don't let the vendors see the queries before the POC, and make sure the queries are first run without any tuning. After the queries are run, let the vendors do some tuning. Tuning can be important, and you can then compare how queries run in both a tuned and an untuned environment. This will help you understand the strengths and weaknesses of the product and where it fits best within your organization.
- Make sure you test almost every type of query, including multi-table joins; left, right, and full outer joins; subqueries; correlated subqueries; OLAP functions; tactical short queries; and long complex queries, to ensure there are no surprises.
- Categorize the testing of queries by running easy, medium and difficult queries. Make sure to include a set of complex queries with a small result set.
- Test the queries with a single user, with multiple users, and with the number of users that you would expect in your production environment. Tests that approach real-world conditions are best, and always test for linear scalability.
- Make sure that data loading is tested as part of the POC. This includes extraction from your current data warehouse and direct loads from operational systems. If continuous loads are expected in your production environment, then test for that. You should also test to see how queries perform while loads are running.
- Don't be afraid to run a few very complex queries that may or may not work with your current vendor.
- Demonstrate integration with your current warehouse, such as Teradata, Oracle or IBM. Then test integration with your tools, such as Informatica, Ab Initio, Business Objects, Cognos, SAS or MicroStrategy. Don't forget to demonstrate integration with your backup and restore strategies.
- Benchmark at least two to five terabytes, depending on your expected requirements.
- Make sure you test the system setup and administration. This will play a role tactically in understanding where the new system fits in your organizational plans. It also helps ensure there are no hidden costs.
- Make sure that no vendor has an unfair advantage. Make apples-to-apples comparisons across vendor platforms and ensure a set of rules is followed.
- If a POC is to be performed offsite, then consider allowing no access to the box unless your own people are present to monitor the POC.
- Test the ability to convert the table structures (data definition language, or DDL) from your current data warehouse provider to the vendor's DDL.
- Test current applications to see what SQL conversions might be needed for the new vendor.

- Hire or assign a project manager to run the POC in its entirety.
- You should be able to perform the actual POC within three to five days.
- Get pricing from each vendor, and remember that everything is negotiable.
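The advice above about running easy, medium and difficult queries with one user and with many users can be sketched as a small timing harness. This is a minimal sketch under stated assumptions, not a vendor tool: SQLite from the Python standard library stands in for a vendor system, the `sales` table, the three queries, and the names `build_demo_db`, `time_query` and `run_benchmark` are all hypothetical, and in a real POC the `connect` argument would be whatever function opens a connection through each vendor's own driver.

```python
import os
import sqlite3
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical query categories; a real POC would use your own SQL.
QUERIES = {
    "easy": "SELECT COUNT(*) FROM sales",
    "medium": "SELECT region, SUM(amount) FROM sales GROUP BY region",
    "difficult": (
        "SELECT s.region, COUNT(*) FROM sales s "
        "JOIN sales t ON s.region = t.region AND s.id < t.id "
        "GROUP BY s.region"
    ),
}


def build_demo_db(path, rows=2000):
    """Create a small stand-in table so the harness has something to query."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany(
        "INSERT INTO sales (region, amount) VALUES (?, ?)",
        [(f"R{i % 5}", float(i)) for i in range(rows)],
    )
    conn.commit()
    conn.close()


def time_query(connect, sql):
    """Open a fresh connection, run one query to completion, return seconds."""
    conn = connect()
    try:
        start = time.perf_counter()
        conn.execute(sql).fetchall()  # fetch all rows so the full result is timed
        return time.perf_counter() - start
    finally:
        conn.close()


def run_benchmark(connect, queries, user_counts):
    """Return {(label, users): slowest elapsed seconds among simulated users}."""
    results = {}
    for label, sql in queries.items():
        for users in user_counts:
            # Each simulated user runs the same query on its own connection.
            with ThreadPoolExecutor(max_workers=users) as pool:
                timings = list(
                    pool.map(lambda _: time_query(connect, sql), range(users))
                )
            results[(label, users)] = max(timings)
    return results


with tempfile.TemporaryDirectory() as tmp:
    db_path = os.path.join(tmp, "poc_demo.db")
    build_demo_db(db_path)
    results = run_benchmark(lambda: sqlite3.connect(db_path), QUERIES, [1, 4])

for (label, users), seconds in sorted(results.items()):
    print(f"{label:<10} users={users}  {seconds:.4f} s")
```

Running the identical harness, queries and user counts against each vendor, first untuned and then tuned, keeps the comparison apples-to-apples. The sketch reports the slowest simulated user for each combination; an average or percentile could be reported instead.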

Summary

Write a wise saying and your name will live forever.

Anonymous

Teradata was designed back in the 1980s and deserves credit for being the market leader. Its greatest contribution has always been parallel processing. For almost three decades it has provided a centralized solution to many of the largest companies in the world.

Today's data warehouse is much more complicated than ever before. Geographic locations in different time zones need to access the warehouse at their peak times. Ad hoc queries make tuning difficult, and enormous pressure is placed on the warehouse to produce thousands of reports. Couple this with the fact that some departments need to access data that is three months old while others need to access data that is three years old. There is also a mixed variety of logical models, such as 3rd Normal Form and dimensional models, residing on the same platform, and a more diverse mix of queries, users and applications than ever before. All are competing for CPU, memory and disk. It just doesn't make logistical sense to try to force it all onto one centralized platform.

DATAllegro is a great solution to utilize in conjunction with your Teradata system. DATAllegro utilizes parallel processing, fast load and backup utilities, and a hub-and-spoke architecture. Utilizing Dell servers, Cisco routers, EMC disks, and a proven open-source database in Ingres, DATAllegro has brought world-class commodity hardware and software together, delivering incredible speed and performance while dropping costs dramatically. DATAllegro has taken cost cutting personally with its multi-temperature data warehouse strategy, which allows companies to process their most active data at higher speed and cost, and less active data by slower and even less expensive means. By comparison, every query run on Teradata is much more expensive.

I hope this whitepaper has given you some things to consider and some options when looking at ways to adapt to your current data warehouse requirements. The only way to find out for sure whether they will work for you is to do a proof-of-concept.
Sincerely,
Tom Coffing
C.E.O. and President
Coffing Data Warehouse

Thank you for reading this complimentary paper provided by DATAllegro. For more information on this topic or DATAllegro products, please contact us at (877) 499-3282, or visit www.datallegro.com

ABOUT DATALLEGRO

DATAllegro v3 is the industry's most advanced data warehouse appliance on an enterprise-class platform. By combining DATAllegro's patent-pending software with the industry's leading hardware, storage and database technologies, DATAllegro has taken data warehouse performance and innovation to the next level. DATAllegro v3 goes beyond the low cost and high performance of first-generation data warehouse appliances and adds the flexibility and scalability that only an open platform can offer. The result is a complete data warehouse appliance that enables companies with large volumes of data to increase their business intelligence. Whether you have a few terabytes of user data or hundreds, DATAllegro's data warehouse appliances deliver a fast, flexible and affordable solution that allows a company's data to grow at the pace of its business.

85 Enterprise, 2nd Floor
Aliso Viejo, CA 92656
Sales: (877) 499-3282
Phone: (949) 680-3000
www.datallegro.com
