Human-Robot Interaction in Unstructured Environments
Progress Report
May 13, 2013
Kevin DeMarco

Pattern Recognition and Machine Learning

As I've been reading through the literature on Hidden Markov Models and pattern recognition, I've found that I need more background in the topic. I have therefore begun working through Christopher Bishop's book, Pattern Recognition and Machine Learning. I'm maintaining a LaTeX document in which I derive the equations that are not fully worked out in the book. I've included my notes for the first chapter in this progress report. I will be working through this book in parallel with my other research throughout the summer.

MORSE Simulation

I've imported the Turtlebot / Turtlebot 2 3D model into Blender and equipped it with a Kinect sensor model for object detection. Over the next week I will go through the ROS Navigation Tutorials to become better acquainted with the ROS autonomy module. Figure 1 shows the Turtlebot 2 in the MORSE simulation.

Figure 1: Turtlebot 2 in MORSE Simulator

The output of the simulated Kinect sensor is sent to ROS, where it can be visualized in RVIZ. Figure 2 shows the output of the Kinect sensor's PointCloud. In the PointCloud, the chair and desk are the most recognizable objects. I did notice a significant decrease in performance when I enabled the simulated Kinect sensor. My main goal in this simulation is to show that I can autonomously predict the plan of a human agent based on the human's trajectory and environmental markers (doors, roads, obstacles, etc.). To improve run-time performance, I believe I will be able to simulate this process without using the full Kinect model. After the basic algorithms are proven in MORSE, I will be able to test the full system on an actual Turtlebot 2.
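To make the plan-prediction goal concrete, here is a minimal sketch of one way to rank candidate destinations from a trajectory. It is an assumption for illustration, not the algorithm used in this work: it applies a simple heading-based Bayesian update rather than a Hidden Markov Model, and the function name `goal_posterior`, the concentration parameter `kappa`, and the marker names are all hypothetical.

```python
import math


def goal_posterior(trajectory, goals, kappa=4.0):
    """Return P(goal | trajectory) for each named goal.

    trajectory: list of (x, y) positions of the human, in order.
    goals: dict mapping a marker name to its (x, y) position.
    kappa: hypothetical concentration; larger values trust headings more.
    """
    # Start from a uniform prior (equal log-weights for every goal).
    log_post = {name: 0.0 for name in goals}
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        step_heading = math.atan2(y1 - y0, x1 - x0)
        for name, (gx, gy) in goals.items():
            bearing = math.atan2(gy - y0, gx - x0)
            # Steps that point toward a goal raise that goal's weight.
            log_post[name] += kappa * math.cos(step_heading - bearing)
    # Normalize in a numerically stable way.
    peak = max(log_post.values())
    weights = {name: math.exp(v - peak) for name, v in log_post.items()}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


if __name__ == "__main__":
    markers = {"door": (10.0, 0.0), "desk": (0.0, 10.0)}
    path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
    print(goal_posterior(path, markers))
```

Because the sketch needs only 2D positions, it is consistent with the idea of running the prediction without the full Kinect model; the positions could come directly from the simulator's ground truth.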

Other

I have also attached a PowerPoint presentation that I delivered to DARPA and SAIC last week to address a major issue with testing autonomy modules. Most autonomy modules are composed of independent, unsynchronized processes that communicate through a publish-and-subscribe architecture, so the simulator needs to throttle the data input to the autonomy module. If the simulator floods the autonomy module with data, the module will not behave deterministically due to CPU load. The solution is to define a standard interface that allows the simulator to control the autonomy module in pseudo-lockstep.

Figure 2: Kinect PointCloud in RVIZ
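The pseudo-lockstep interface described above can be sketched roughly as follows. This is an illustrative assumption, not the interface from the presentation: the class `AutonomyModule`, the function `run_lockstep`, and the running-sum "processing" are hypothetical stand-ins. The simulator publishes one message per tick and waits for an acknowledgement before advancing, so the module is never flooded.

```python
import queue
import threading


class AutonomyModule(threading.Thread):
    """Hypothetical stand-in for an autonomy module in its own thread."""

    def __init__(self):
        super().__init__(daemon=True)
        self.inbox = queue.Queue()   # sensor data published by the simulator
        self.outbox = queue.Queue()  # (tick, command) acknowledgements
        self.total = 0

    def run(self):
        while True:
            msg = self.inbox.get()
            if msg is None:          # shutdown sentinel
                break
            tick, reading = msg
            self.total += reading    # placeholder for real processing
            self.outbox.put((tick, self.total))


def run_lockstep(readings, timeout=1.0):
    """Feed one reading per tick and wait for the matching acknowledgement."""
    module = AutonomyModule()
    module.start()
    commands = []
    for tick, reading in enumerate(readings):
        module.inbox.put((tick, reading))
        # Block until the module reports it has finished this tick.
        # The timeout is what makes this "pseudo" rather than strict lockstep.
        ack_tick, command = module.outbox.get(timeout=timeout)
        if ack_tick != tick:
            raise RuntimeError("autonomy module is out of step")
        commands.append(command)
    module.inbox.put(None)
    module.join(timeout=timeout)
    return commands


if __name__ == "__main__":
    print(run_lockstep([1, 2, 3, 4]))  # prints [1, 3, 6, 10]
```

Since the simulator advances only after each acknowledgement, repeated runs with the same input produce the same command sequence regardless of CPU load, which is the property the throttling is meant to restore.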

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5820)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (898)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (403)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Proposed Changes To Marijuana LawDocument165 pagesProposed Changes To Marijuana LawJessie BalmertNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Bactec BombRiskDocument6 pagesBactec BombRisksandycastle100% (1)

- Income Inequality in Singapore: Causes, Consequences and Policy Options - Ishita DhamaniDocument31 pagesIncome Inequality in Singapore: Causes, Consequences and Policy Options - Ishita DhamaniJunyuan100% (1)

- 1 GTRI Underwater LIDAR TestingDocument1 page1 GTRI Underwater LIDAR TestingSyllogismRXSNo ratings yet

- Simulating Collaborative Robots in A Massive Multi-Agent Game Environment (SCRIMMAGE)Document14 pagesSimulating Collaborative Robots in A Massive Multi-Agent Game Environment (SCRIMMAGE)SyllogismRXSNo ratings yet

- Diver Detection System RequirementsDocument1 pageDiver Detection System RequirementsSyllogismRXSNo ratings yet

- 2013-10-28 Progress ReportDocument2 pages2013-10-28 Progress ReportSyllogismRXSNo ratings yet

- Progress ReportDocument1 pageProgress ReportSyllogismRXSNo ratings yet

- Daily Notes: Human-Robot Interaction in Unstructured Environments Progress Report January 3, 2014 Kevin DemarcoDocument1 pageDaily Notes: Human-Robot Interaction in Unstructured Environments Progress Report January 3, 2014 Kevin DemarcoSyllogismRXSNo ratings yet

- Progress ReportDocument2 pagesProgress ReportSyllogismRXSNo ratings yet

- Ieee Oceans 2013 Kevin Demarco Dr. Ayanna M. Howard Dr. Michael E. WestDocument29 pagesIeee Oceans 2013 Kevin Demarco Dr. Ayanna M. Howard Dr. Michael E. WestSyllogismRXSNo ratings yet

- Social Networking Enabled Wearable Computer ECE Senior Design Project - Fall 2011 1 The ProblemDocument1 pageSocial Networking Enabled Wearable Computer ECE Senior Design Project - Fall 2011 1 The ProblemSyllogismRXSNo ratings yet

- DeMarco Path PlanningDocument3 pagesDeMarco Path PlanningSyllogismRXSNo ratings yet

- Real-Time Computer Vision Processing On A Wearable SystemDocument16 pagesReal-Time Computer Vision Processing On A Wearable SystemSyllogismRXSNo ratings yet

- Eoms Fpga Icd: List of RegistersDocument3 pagesEoms Fpga Icd: List of RegistersSyllogismRXSNo ratings yet

- Price: Graphic CardsDocument3 pagesPrice: Graphic CardsTSGohNo ratings yet

- Threat Hunting Through Email Headers - SQRRLDocument16 pagesThreat Hunting Through Email Headers - SQRRLSyeda Ashifa Ashrafi PapiaNo ratings yet

- Topic 1 - Introduction To C# ProgrammingDocument42 pagesTopic 1 - Introduction To C# ProgrammingKhalid Mehboob100% (1)

- Phase Locked Loops System Perspectives and Circuit Design Aspects 1St Edition Rhee Online Ebook Texxtbook Full Chapter PDFDocument69 pagesPhase Locked Loops System Perspectives and Circuit Design Aspects 1St Edition Rhee Online Ebook Texxtbook Full Chapter PDFmichael.smith524100% (8)

- School BusDocument156 pagesSchool Bussowmeya veeraraghavanNo ratings yet

- EDMDocument20 pagesEDMlogeshboy007No ratings yet

- Barangay Functionaries - Disability, Medical, Death, Burial, Maternal & RetirementDocument1 pageBarangay Functionaries - Disability, Medical, Death, Burial, Maternal & RetirementJemarie TedlosNo ratings yet

- Chapter 2 - 1 - Roots of Equations - Bracketing MethodsDocument24 pagesChapter 2 - 1 - Roots of Equations - Bracketing MethodsRoger FernandezNo ratings yet

- Lesson 4. Statutory Monetary Benefits PDFDocument65 pagesLesson 4. Statutory Monetary Benefits PDFFranz LibreNo ratings yet

- MQ SP P 5011 PDFDocument25 pagesMQ SP P 5011 PDFjaseelNo ratings yet

- Transportation Engineering ThesisDocument75 pagesTransportation Engineering ThesisMichelleDaarolNo ratings yet

- Especificaciones Técnicas Blower 2RB510 7av35z PDFDocument5 pagesEspecificaciones Técnicas Blower 2RB510 7av35z PDFSebas BuitragoNo ratings yet

- The Strategic Highway Research ProgramDocument4 pagesThe Strategic Highway Research ProgramMuhammad YahdimanNo ratings yet

- Final Paper - Ana Maria PumneaDocument2 pagesFinal Paper - Ana Maria PumneaAnna Pumnea0% (1)

- Jackson - Cwa - Lodged Stipulated OrderDocument56 pagesJackson - Cwa - Lodged Stipulated Orderthe kingfishNo ratings yet

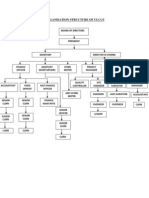

- Organisation Structure of Ulccs: Director in ChargeDocument1 pageOrganisation Structure of Ulccs: Director in ChargeMohamed RiyasNo ratings yet

- GN4-1-SA8T2 Tech Scout - WebRTC2SIP-Gateway - V1.0-FinalDocument19 pagesGN4-1-SA8T2 Tech Scout - WebRTC2SIP-Gateway - V1.0-FinalMakarius YuwonoNo ratings yet

- Occupational Health Hazards Due To Mine Dust: Unit-14Document9 pagesOccupational Health Hazards Due To Mine Dust: Unit-14Dinesh dhakarNo ratings yet

- AssII 466Document2 pagesAssII 466Amr TawfikNo ratings yet

- Applied Pharmacology For The Dental Hygienist 8th Edition Haveles Test BankDocument35 pagesApplied Pharmacology For The Dental Hygienist 8th Edition Haveles Test Bankatop.remiped25zad100% (29)

- Group No Description Remark Mutdate DrawingDocument1 pageGroup No Description Remark Mutdate DrawingCharaf DineNo ratings yet

- Sil ReviewDocument19 pagesSil Reviewtrung2i100% (1)

- Tugas Bahasa InggrisDocument10 pagesTugas Bahasa InggrisHunHan SevenNo ratings yet

- Class 2Document21 pagesClass 2md sakhwat hossainNo ratings yet

- Chapter 5 Accounting For General Assets and Capital ProjectsDocument41 pagesChapter 5 Accounting For General Assets and Capital ProjectsSaja AlbarjesNo ratings yet

- Electrical SystemDocument283 pagesElectrical Systemzed shop73100% (1)

- Well and Testing From Fekete and EngiDocument6 pagesWell and Testing From Fekete and EngiRovshan1988No ratings yet