Speech Coding Systems

CS578- Speech Signal Processing

Lecture 7: Speech Coding

Yannis Stylianou

University of Crete, Computer Science Dept., Multimedia Informatics Lab

yannis@csd.uoc.gr

Outline

1 Introduction

2 Statistical Models

3 Scalar Quantization

Max Quantizer

Companding

Adaptive quantization

Differential and Residual quantization

4 Vector Quantization

The k-means algorithm

The LBG algorithm

5 Model-based Coding

Basic Linear Prediction, LPC

Mixed Excitation LPC (MELP)

6 Acknowledgments

Digital telephone communication system

Categories of speech coders

Waveform coders (16-64 kbps, $f_s = 8000$ Hz)

Hybrid coders (2.4-16 kbps, $f_s = 8000$ Hz)

Vocoders (1.2-4.8 kbps, $f_s = 8000$ Hz)

Speech quality

Closeness of the processed speech waveform to the original speech waveform

Naturalness

Background artifacts

Intelligibility

Speaker identifiability

Measuring Speech Quality

Subjective tests:

Diagnostic Rhyme Test (DRT)

Diagnostic Acceptability Measure (DAM)

Mean Opinion Score (MOS)

Objective tests:

Segmental Signal-to-Noise Ratio (SNR)

Articulation Index

Quantization

Statistical models of speech (preliminary)

Scalar quantization (i.e., waveform coding)

Vector quantization (i.e., subband and sinusoidal coding)

Linear Prediction Coding, LPC

Classic LPC

Mixed Excitation Linear Prediction, MELP

Multipulse LPC

Code Excited Linear Prediction (CELP)

Probability Density of speech

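By setting $x[n] \to x$, the histogram of speech samples can be approximated by a gamma density:

$$p_X(x) = \left( \frac{\sqrt{3}}{8 \pi \sigma_x |x|} \right)^{1/2} e^{-\frac{\sqrt{3}\,|x|}{2 \sigma_x}}$$

or by a simpler Laplacian density:

$$p_X(x) = \frac{1}{\sqrt{2}\,\sigma_x}\, e^{-\frac{\sqrt{2}\,|x|}{\sigma_x}}$$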

Densities comparison
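As a rough numerical check of the two density models, the sketch below evaluates a gamma-type and a Laplacian density with standard deviation $\sigma_x$ on a grid and estimates their area and variance. The function names, the grid spacing, and the choice $\sigma_x = 1$ are assumptions made for illustration; the Laplacian is written in its unit-area form with exponent $\sqrt{2}|x|/\sigma_x$.

```python
import numpy as np

def gamma_pdf(x, sigma):
    """Gamma-type speech density; it has an integrable peak at x = 0."""
    ax = np.abs(x)
    return np.sqrt(np.sqrt(3) / (8 * np.pi * sigma * ax)) * np.exp(-np.sqrt(3) * ax / (2 * sigma))

def laplacian_pdf(x, sigma):
    """Laplacian density with standard deviation sigma."""
    return np.exp(-np.sqrt(2) * np.abs(x) / sigma) / (np.sqrt(2) * sigma)

sigma = 1.0                                    # assumed standard deviation
dx = 1e-4
x = (np.arange(-200000, 200000) + 0.5) * dx    # midpoint grid on [-20, 20], never hits x = 0

stats = {}
for name, pdf in (("gamma", gamma_pdf), ("laplacian", laplacian_pdf)):
    p = pdf(x, sigma)
    area = np.sum(p) * dx                      # should be close to 1
    var = np.sum(x ** 2 * p) * dx              # should be close to sigma**2
    stats[name] = (area, var)
    print(name, round(area, 4), round(var, 4))
```

The gamma area comes out slightly below one because the midpoint rule underestimates the peak at the origin; a finer grid reduces the gap.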

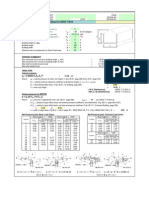

Coding and Decoding

Fundamentals of Scalar Coding

Let's quantize a single speech sample value, $x[n]$, into one of $M$ reconstruction or decision levels:

$$\hat{x}[n] = \hat{x}_i = Q(x[n]), \quad x_{i-1} < x[n] \le x_i$$

with $1 \le i \le M$, where $x_k$ denotes the decision levels, with $0 \le k \le M$.

Assign a codeword to each reconstruction level. The collection of codewords makes a codebook.

Using a $B$-bit binary codebook we can represent $2^B$ different quantization (reconstruction) levels.

Bit rate, $I$, is defined as: $I = B f_s$

Uniform quantization

$$x_i - x_{i-1} = \Delta, \quad 1 \le i \le M$$

$$\hat{x}_i = \frac{x_i + x_{i-1}}{2}, \quad 1 \le i \le M$$

$\Delta$ is referred to as the uniform quantization step size.

Example of a 2-bit uniform quantization:

Uniform quantization: designing decision regions

Signal range: $-4\sigma_x \le x[n] \le 4\sigma_x$

Assuming a $B$-bit binary codebook, we get $2^B$ quantization (reconstruction) levels

Quantization step size, $\Delta$:

$$\Delta = \frac{2 x_{max}}{2^B}$$

and quantization noise.
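A minimal sketch of a uniform quantizer built from the step size $\Delta = 2x_{max}/2^B$ follows. The function name `uniform_quantize`, the mid-rise layout (reconstruction levels at the midpoints of the decision intervals), and the sample values are assumptions for illustration. Samples outside $[-x_{max}, x_{max}]$ are clipped to the outermost level, and a sample falling exactly on a decision boundary is assigned to the upper interval here.

```python
import numpy as np

def uniform_quantize(x, B, x_max):
    """Mid-rise uniform quantizer with 2**B levels covering [-x_max, x_max]."""
    delta = 2.0 * x_max / 2 ** B
    # Index of the decision interval; clipping sends out-of-range samples
    # to the outermost intervals (overload distortion).
    i = np.clip(np.floor(np.asarray(x, dtype=float) / delta), -2 ** (B - 1), 2 ** (B - 1) - 1)
    return (i + 0.5) * delta, delta

x = np.array([-1.2, -0.3, 0.0, 0.26, 0.99, 1.7])
xq, delta = uniform_quantize(x, B=2, x_max=1.0)
print(delta)   # 0.5
print(xq)      # levels are -0.75, -0.25, 0.25, 0.75

in_range = np.abs(x) <= 1.0
max_granular_error = np.max(np.abs(xq[in_range] - x[in_range]))
```

For in-range samples the error stays within $\Delta/2$, while the two clipped samples (-1.2 and 1.7) show larger errors.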

Classes of Quantization noise

There are two classes of quantization noise:

Granular distortion:

$$\hat{x}[n] = x[n] + e[n]$$

where $e[n]$ is the quantization noise, with:

$$-\frac{\Delta}{2} \le e[n] \le \frac{\Delta}{2}$$

Overload distortion: clipped samples

Assumptions

Quantization noise is an ergodic white-noise random process:

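$$r_e[m] = E(e[n]\,e[n+m]) = \begin{cases} \sigma_e^2, & m = 0 \\ 0, & m \ne 0 \end{cases}$$

Quantization noise and input signal are uncorrelated:

$$E(x[n]\,e[n+m]) = 0 \quad \forall m$$

Quantization noise is uniform over the quantization interval:

$$p_e(e) = \begin{cases} \frac{1}{\Delta}, & -\frac{\Delta}{2} \le e \le \frac{\Delta}{2} \\ 0, & \text{otherwise} \end{cases}$$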

Dithering

Definition (Dithering)

We can force e[n] to be white and uncorrelated with x[n] by

adding noise to x[n] before quantization!

Signal-to-Noise Ratio

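To quantify the severity of the quantization noise, we define the Signal-to-Noise Ratio (SNR) as:

$$SNR = \frac{\sigma_x^2}{\sigma_e^2} = \frac{E(x^2[n])}{E(e^2[n])} \approx \frac{\frac{1}{N}\sum_{n=0}^{N-1} x^2[n]}{\frac{1}{N}\sum_{n=0}^{N-1} e^2[n]}$$

For uniform pdf and quantizer range $2x_{max}$:

$$\sigma_e^2 = \frac{\Delta^2}{12} = \frac{x_{max}^2}{3 \cdot 2^{2B}}$$

Or

$$SNR = \frac{3 \cdot 2^{2B}}{\left( \frac{x_{max}}{\sigma_x} \right)^2}$$

and in dB:

$$SNR(dB) \approx 6B + 4.77 - 20 \log_{10}\left( \frac{x_{max}}{\sigma_x} \right)$$

and since $x_{max} = 4\sigma_x$:

$$SNR(dB) \approx 6B - 7.2$$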
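The dB rule of thumb can be checked against the exact expression $3 \cdot 2^{2B} / (x_{max}/\sigma_x)^2$. The short sketch below does this for a few word lengths; the function name `snr_db` and the chosen values of $B$ are illustrative assumptions.

```python
import math

def snr_db(B, loading):
    """Exact SNR in dB for a B-bit uniform quantizer; loading = x_max / sigma_x."""
    return 10 * math.log10(3 * 2 ** (2 * B) / loading ** 2)

for B in (8, 11, 16):
    exact = snr_db(B, 4.0)       # x_max = 4 * sigma_x, as on the slide
    rule = 6 * B - 7.2           # rounded rule of thumb
    print(B, round(exact, 2), round(rule, 2))

gain_per_bit = snr_db(9, 4.0) - snr_db(8, 4.0)   # about 6.02 dB per extra bit
```

The small gap between the two columns comes from rounding 6.02 dB per bit to 6 and 7.27 dB to 7.2.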

Pulse Code Modulation, PCM

B bits of information per sample are transmitted as a codeword

instantaneous coding

not signal-specific

11 bits are required for toll quality

what is the rate for $f_s = 10$ kHz?

For CD, with $f_s = 44100$ Hz and $B = 16$ (16-bit PCM), what is the SNR?
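The two questions above can be worked out with $I = B f_s$ and the rule $SNR(dB) \approx 6B - 7.2$. The sketch below is only that arithmetic; the function names are hypothetical, and the SNR figure assumes the same $x_{max} = 4\sigma_x$ loading used to derive the rule.

```python
def pcm_bit_rate(bits_per_sample, fs_hz):
    """Bit rate I = B * fs in bits per second."""
    return bits_per_sample * fs_hz

def pcm_snr_db(bits_per_sample):
    """Rule-of-thumb SNR for uniform PCM with x_max = 4 * sigma_x."""
    return 6 * bits_per_sample - 7.2

toll_rate = pcm_bit_rate(11, 10_000)   # 110000 bps for 11-bit PCM at 10 kHz
cd_rate = pcm_bit_rate(16, 44_100)     # 705600 bps per channel
cd_snr = pcm_snr_db(16)                # about 88.8 dB

print(toll_rate, cd_rate, cd_snr)
```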

Optimal decision and reconstruction level

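If $x[n] \sim p_x(x)$, we determine the optimal decision levels, $x_i$, and the reconstruction levels, $\hat{x}_i$, by minimizing:

$$D = E[(\hat{x} - x)^2] = \int_{-\infty}^{\infty} p_x(x)\,(\hat{x} - x)^2\,dx$$

and assuming $M$ reconstruction levels $\hat{x} = Q[x]$:

$$D = \sum_{i=1}^{M} \int_{x_{i-1}}^{x_i} p_x(x)\,(\hat{x}_i - x)^2\,dx$$

So:

$$\frac{\partial D}{\partial \hat{x}_k} = 0, \quad 1 \le k \le M$$

$$\frac{\partial D}{\partial x_k} = 0, \quad 1 \le k \le M - 1$$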

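Optimal decision and reconstruction level, cont.

The minimization of $D$ over the decision level, $x_k$, gives:

$$x_k = \frac{\hat{x}_{k+1} + \hat{x}_k}{2}, \quad 1 \le k \le M - 1$$

The minimization of $D$ over the reconstruction level, $\hat{x}_k$, gives:

$$\hat{x}_k = \int_{x_{k-1}}^{x_k} \left[ \frac{p_x(x)}{\int_{x_{k-1}}^{x_k} p_x(s)\,ds} \right] x\,dx = \int_{x_{k-1}}^{x_k} \tilde{p}_x(x)\,x\,dx$$

where $\tilde{p}_x(x)$ denotes $p_x(x)$ normalized to unit area over the interval $[x_{k-1}, x_k]$.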
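The two conditions above (decision levels at midpoints, reconstruction levels at conditional means) suggest an alternating iteration. The sketch below is one way to run it numerically, under stated assumptions: the pdf is sampled on a finite grid over $[lo, hi]$, integrals are replaced by sums, and the function name `lloyd_max`, the grid size, and the iteration count are all illustrative choices.

```python
import numpy as np

def lloyd_max(pdf, lo, hi, M, iters=200, grid=20001):
    """Alternate the midpoint and centroid conditions on a sampled pdf."""
    x = np.linspace(lo, hi, grid)
    w = pdf(x)                                    # pdf samples used as weights
    recon = np.linspace(lo, hi, M + 2)[1:-1]      # initial reconstruction levels
    for _ in range(iters):
        inner = 0.5 * (recon[:-1] + recon[1:])    # decision levels x_1 .. x_{M-1}
        cell = np.searchsorted(inner, x)          # cell index 0 .. M-1 for each grid point
        mass = np.bincount(cell, weights=w, minlength=M)
        moment = np.bincount(cell, weights=w * x, minlength=M)
        # Centroid of each cell; an empty cell keeps its previous level.
        recon = np.where(mass > 0, moment / np.maximum(mass, 1e-300), recon)
    inner = 0.5 * (recon[:-1] + recon[1:])
    return inner, recon

def laplacian(x):
    """Unit-variance Laplacian density."""
    return np.exp(-np.sqrt(2) * np.abs(x)) / np.sqrt(2)

edges2, recon2 = lloyd_max(laplacian, -8.0, 8.0, M=2)
edges4, recon4 = lloyd_max(laplacian, -8.0, 8.0, M=4)
print(recon2)
print(edges4, recon4)
```

For $M = 2$ the levels settle near $\pm 1/\sqrt{2}$, the mean of $|x|$ for a unit-variance Laplacian. For $M = 4$ the outer cells come out wider than the inner ones, unlike in a uniform quantizer.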

Optimal decision and reconstruction level,

cont.

The minimization of D over decision level, x

k

, gives:

x

k

=

x

k+1

+ x

k

2

, 1 k M 1

The minimization of D over reconstruction level, x

k

, gives:

x

k

=

_

x

k

x

k1

_

p

x

(x)

x

k

x

k1

p

x

(s)ds

_

xdx

=

_

x

k

x

k1

p

x

(x)xdx

Example with Laplacian pdf

Principle of Companding

Companding examples

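Companding examples:

Transformation to a uniform density:

$$g[n] = T(x[n]) = \int_{-\infty}^{x[n]} p_x(s)\,ds - \frac{1}{2}, \quad -\frac{1}{2} \le g[n] \le \frac{1}{2}$$

= 0 elsewhere

$\mu$-law:

$$T(x[n]) = x_{max}\, \frac{\log\left(1 + \mu \frac{|x[n]|}{x_{max}}\right)}{\log(1 + \mu)}\, \mathrm{sign}(x[n])$$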
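The $\mu$-law transformation and its inverse can be sketched as follows. The value $\mu = 255$ and the function names are assumptions chosen for illustration; the expander is obtained by solving the compressor formula for $|x[n]|$.

```python
import numpy as np

def mu_law_compress(x, x_max=1.0, mu=255.0):
    """T(x) = x_max * log(1 + mu*|x|/x_max) / log(1 + mu) * sign(x)."""
    x = np.asarray(x, dtype=float)
    return x_max * np.log1p(mu * np.abs(x) / x_max) / np.log1p(mu) * np.sign(x)

def mu_law_expand(y, x_max=1.0, mu=255.0):
    """Inverse of mu_law_compress."""
    y = np.asarray(y, dtype=float)
    return x_max / mu * np.expm1(np.abs(y) / x_max * np.log1p(mu)) * np.sign(y)

x = np.array([-1.0, -0.1, -0.01, 0.0, 0.01, 0.1, 1.0])
y = mu_law_compress(x)
x_back = mu_law_expand(y)
print(np.round(y, 4))
```

Small amplitudes are stretched (0.01 maps to roughly 0.23), so a uniform quantizer applied to the compressed signal spends more levels on low-level samples.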

Adaptive quantization

Differential and Residual quantization

Motivation for VQ

Comparing scalar and vector quantization

Distortion in VQ

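Here we have a multidimensional pdf $p_{\mathbf{x}}(\mathbf{x})$:

$$D = E[(\hat{\mathbf{x}} - \mathbf{x})^T (\hat{\mathbf{x}} - \mathbf{x})]$$

$$= \int_{-\infty}^{\infty} (\hat{\mathbf{x}} - \mathbf{x})^T (\hat{\mathbf{x}} - \mathbf{x})\, p_{\mathbf{x}}(\mathbf{x})\, d\mathbf{x}$$

$$= \sum_{i=1}^{M} \int_{\mathbf{x} \in C_i} (\mathbf{r}_i - \mathbf{x})^T (\mathbf{r}_i - \mathbf{x})\, p_{\mathbf{x}}(\mathbf{x})\, d\mathbf{x}$$

Two constraints:

A vector $\mathbf{x}$ must be quantized to a reconstruction level $\mathbf{r}_i$ that gives the smallest distortion:

$$C_i = \{\mathbf{x} : \|\mathbf{x} - \mathbf{r}_i\|^2 \le \|\mathbf{x} - \mathbf{r}_l\|^2, \; l = 1, 2, \ldots, M\}$$

Each reconstruction level $\mathbf{r}_i$ must be the centroid of the corresponding decision region, i.e., of the cell $C_i$:

$$\mathbf{r}_i = \frac{\sum_{\mathbf{x}_m \in C_i} \mathbf{x}_m}{\sum_{\mathbf{x}_m \in C_i} 1}, \quad i = 1, 2, \ldots, M$$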

The k-means algorithm

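S1: Use the average distortion over the $N$ training vectors:

$$D = \frac{1}{N} \sum_{k=0}^{N-1} (\hat{\mathbf{x}}_k - \mathbf{x}_k)^T (\hat{\mathbf{x}}_k - \mathbf{x}_k)$$

S2: Pick an initial guess at the reconstruction levels $\{\mathbf{r}_i\}$

S3: For each $\mathbf{x}_k$ select the $\mathbf{r}_i$ closest to $\mathbf{x}_k$. Form clusters (clustering step)

S4: Find the mean of $\mathbf{x}_k$ in each cluster, which gives a new $\mathbf{r}_i$. Compute $D$.

S5: Stop when the change in $D$ over two consecutive iterations is insignificant.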
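Steps S1 to S5 can be sketched in a few lines of NumPy. The function name `kmeans`, the tolerance, and the six-point training set are assumptions made for illustration; a cluster that ends up empty simply keeps its previous reconstruction level, which is one of several possible conventions.

```python
import numpy as np

def kmeans(X, init, tol=1e-9, max_iter=100):
    """Iterate clustering (S3) and centroid (S4) steps until D stops improving (S5)."""
    r = np.array(init, dtype=float)              # S2: initial reconstruction levels
    prev = np.inf
    for _ in range(max_iter):
        # S3: squared distance of every training vector to every level
        d2 = ((X[:, None, :] - r[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # S4: each level becomes the mean of its cluster
        for i in range(len(r)):
            if np.any(labels == i):
                r[i] = X[labels == i].mean(axis=0)
        D = ((X - r[labels]) ** 2).sum(axis=1).mean()   # S1: average distortion
        if prev - D < tol:                       # S5: insignificant change
            break
        prev = D
    return r, labels, D

X = np.array([[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]], dtype=float)
levels, labels, D = kmeans(X, init=X[[0, 3]])
print(levels)
print(labels, D)
```

With this toy data the two levels settle at the means of the two obvious groups of three points.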

The LBG algorithm

Set the desired number of cells: $M = 2^B$

Set an initial codebook $C^{(0)}$ with one codevector which is set as the average of the entire training sequence, $\mathbf{x}_k$, $k = 1, 2, \ldots, N$.

Split the codevector into two and get an initial new codebook $C^{(1)}$.

Perform a k-means algorithm to optimize the codebook and get the final $C^{(1)}$.

Split the final codevectors into four and repeat the above process until the desired number of cells is reached.
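The splitting procedure above might be sketched as follows. Several details are assumptions not stated on the slide: each codevector is split by adding and subtracting a small constant `eps`, the embedded `kmeans` helper keeps an empty cell's codevector unchanged, and the training data are synthetic Gaussian clusters generated with a fixed seed.

```python
import numpy as np

def kmeans(X, codebook, tol=1e-9, max_iter=100):
    """Plain k-means refinement of a given codebook; returns codebook and distortion."""
    r = codebook.astype(float).copy()
    prev = np.inf
    for _ in range(max_iter):
        d2 = ((X[:, None, :] - r[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for i in range(len(r)):
            if np.any(labels == i):
                r[i] = X[labels == i].mean(axis=0)
        D = ((X - r[labels]) ** 2).sum(axis=1).mean()
        if prev - D < tol:
            break
        prev = D
    return r, D

def lbg(X, B, eps=1e-3):
    """Grow a codebook from 1 to 2**B codevectors by repeated splitting."""
    codebook = X.mean(axis=0, keepdims=True)                 # C^(0): global average
    history = [((X - codebook[0]) ** 2).sum(axis=1).mean()]  # distortion with one cell
    for _ in range(B):
        codebook = np.vstack([codebook + eps, codebook - eps])  # split every codevector
        codebook, D = kmeans(X, codebook)                       # optimize the new codebook
        history.append(D)
    return codebook, history

rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [0.0, 5.0], [5.0, 0.0], [5.0, 5.0]])
X = np.vstack([c + 0.3 * rng.standard_normal((50, 2)) for c in centers])

codebook, history = lbg(X, B=2)
print(codebook)
print(history)
```

The `history` list records the average distortion after each doubling, which gives a quick way to see how much each extra bit buys.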

Basic coding scheme in LPC

Vocal tract system function:

$$H(z) = \frac{A}{1 - P(z)}$$

where

$$P(z) = \sum_{k=1}^{p} a_k z^{-k}$$

Input is binary: impulse/noise excitation.

If the frame rate is 100 frames/s and we use 13 parameters ($p = 10$, 1 for gain, 1 for pitch, 1 for voicing decision) we need 1300 parameters/s, instead of 8000 samples/s for $f_s = 8000$ Hz.
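To make the decoder side concrete, the sketch below drives the all-pole filter $A / (1 - P(z))$ with either an impulse train (voiced) or white noise (unvoiced). The predictor coefficients, the pitch period of 80 samples, and the function names are hypothetical values chosen for illustration; a real coder would take them from the transmitted frame parameters.

```python
import numpy as np

def lpc_synthesize(a, gain, excitation):
    """s[n] = gain * u[n] + sum_{k=1..p} a[k-1] * s[n-k]."""
    p = len(a)
    s = np.zeros(len(excitation))
    for n in range(len(excitation)):
        acc = gain * excitation[n]
        for k in range(1, min(p, n) + 1):
            acc += a[k - 1] * s[n - k]
        s[n] = acc
    return s

def make_excitation(n_samples, voiced, pitch_period=80, seed=0):
    """Binary excitation: impulse train if voiced, white noise otherwise."""
    if voiced:
        u = np.zeros(n_samples)
        u[::pitch_period] = 1.0
        return u
    return np.random.default_rng(seed).standard_normal(n_samples)

a = [1.3, -0.8]                       # hypothetical stable 2nd-order predictor
frame = lpc_synthesize(a, 1.0, make_excitation(80, voiced=True))   # 10 ms at 8000 Hz
first_order = lpc_synthesize([0.5], 1.0, [1.0, 0.0, 0.0, 0.0])
print(frame[:3], first_order)
```

Only the coefficients, gain, pitch, and voicing flag need to be sent for each frame; the waveform itself is regenerated at the receiver.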

Scalar quantization within LPC

For 7200 bps:

Voiced/unvoiced decision: 1 bit

Pitch (if voiced): 6 bits (uniform)

Gain: 5 bits (nonuniform)

Poles $d_i$: 10 bits (nonuniform) [5 bits for frequency and 5 bits for bandwidth] $\times$ 6 poles = 60 bits

So: $(1 + 6 + 5 + 60) \times 100$ frames/s = 7200 bps
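The bit budget above is simple bookkeeping, which the following sketch reproduces. The dictionary keys and function names are hypothetical labels for the quantities listed on the slide.

```python
def frame_bits(allocation):
    """Total number of bits spent on one frame."""
    return sum(allocation.values())

def bit_rate(allocation, frames_per_second):
    """Bits per frame times frames per second."""
    return frame_bits(allocation) * frames_per_second

basic_lpc = {
    "voicing": 1,            # voiced/unvoiced decision
    "pitch": 6,              # uniform quantizer
    "gain": 5,               # nonuniform quantizer
    "poles": 6 * (5 + 5),    # 6 poles, 5 bits frequency + 5 bits bandwidth each
}

bits = frame_bits(basic_lpc)          # 72 bits per frame
rate = bit_rate(basic_lpc, 100)       # 7200 bps at 100 frames/s
print(bits, rate)
```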

Refinements to the basic LPC coding scheme

Companding in the form of a logarithmic operator on pitch and gain

Instead of poles use the reflection (or PARCOR) coefficients $k_i$ (nonuniform)

Companding of $k_i$:

$$g_i = T[k_i] = \log\left( \frac{1 - k_i}{1 + k_i} \right)$$

Coefficients $g_i$ can be coded at 5-6 bits each! (which results in 4800 bps for an order 6 predictor, and 100 frames/s)

Reducing the frame rate by a factor of two (50 frames/s) gives us a bit rate of 2400 bps
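The companding of the reflection coefficients can be sketched as below. The function names and the sample coefficient values are assumptions; the inverse follows from solving $g_i = \log((1 - k_i)/(1 + k_i))$ for $k_i$, which gives $k_i = -\tanh(g_i / 2)$.

```python
import numpy as np

def lar_compand(k):
    """g = log((1 - k) / (1 + k)) for reflection coefficients with |k| < 1."""
    k = np.asarray(k, dtype=float)
    return np.log((1 - k) / (1 + k))

def lar_expand(g):
    """Inverse mapping: k = -tanh(g / 2)."""
    return -np.tanh(np.asarray(g, dtype=float) / 2)

k = np.array([-0.98, -0.9, 0.0, 0.5, 0.9])   # hypothetical reflection coefficients
g = lar_compand(k)
k_back = lar_expand(g)
print(np.round(g, 3))

# The same step of 0.08 in k moves g much more near |k| = 1 than near k = 0.
step_near_one = abs(g[0] - g[1])
step_near_zero = abs(lar_compand(0.08) - g[2])
```

Because the mapping stretches the region near $|k_i| = 1$, a uniform quantizer on $g_i$ gives finer resolution exactly where the spectrum is most sensitive to coefficient errors.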

VQ in LPC coding

A 10-bit codebook (1024 codewords), 800 bps VQ provides a quality comparable to a 2400 bps scalar quantizer.

A 22-bit codebook (about 4.2 million codewords), 2400 bps VQ provides a higher output speech quality.

Unique components of MELP

Mixed pulse and noise excitation

Periodic or aperiodic pulses

Adaptive spectral enhancements

Pulse dispersion filter

Line Spectral Frequencies (LSFs) in MELP

LSFs for a $p$th order all-pole model are defined as follows:

1 Form two polynomials:

$$P(z) = A(z) + z^{-(p+1)} A(z^{-1})$$

$$Q(z) = A(z) - z^{-(p+1)} A(z^{-1})$$

2 Find the roots of $P(z)$ and $Q(z)$, $\omega_i$, which are on the unit circle.

3 Exclude trivial roots at $\omega_i = 0$ and $\omega_i = \pi$.
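The three steps can be sketched with `numpy.roots`. Here $A(z)$ is taken to be the inverse filter, given by its coefficients in ascending powers of $z^{-1}$ with leading coefficient 1; that convention, the function name `lsf`, the tolerance, and the second-order example are assumptions for illustration. Only the frequencies in $(0, \pi)$ are kept, since the roots come in conjugate pairs.

```python
import numpy as np

def lsf(a_poly, tol=1e-6):
    """Line spectral frequencies (radians) of A(z) = a_poly[0] + a_poly[1] z^-1 + ..."""
    a = np.asarray(a_poly, dtype=float)
    fwd = np.concatenate([a, [0.0]])          # coefficients of A(z)
    rev = np.concatenate([[0.0], a[::-1]])    # coefficients of z^-(p+1) A(z^-1)
    freqs = []
    for poly in (fwd + rev, fwd - rev):       # step 1: P(z) and Q(z)
        w = np.angle(np.roots(poly))          # step 2: root angles
        # step 3: drop the trivial roots at 0 and pi, keep one of each conjugate pair
        freqs.extend(w[(w > tol) & (w < np.pi - tol)])
    return np.sort(np.array(freqs))

a_poly = [1.0, -0.9, 0.2]        # hypothetical A(z) with zeros at z = 0.5 and z = 0.4
w = lsf(a_poly)
print(w)
```

For this example the sum polynomial factors as $(z + 1)(z^2 - 1.7z + 1)$ and the difference polynomial as $(z - 1)(z^2 - 0.1z + 1)$, so the two LSFs are $\arccos(0.85)$ and $\arccos(0.05)$.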

MELP coding

For a 2400 bps coder:

34 bits allocated to scalar quantization of the LSFs

8 bits for gain

7 bits for pitch and overall voicing

5 bits for multi-band voicing

1 bit for the jittery state

which is 54 bits. With a frame length of 22.5 ms, we get a 2400 bps coder: 54 bits / 22.5 ms = 2400 bps.

Acknowledgments

Most, if not all, figures in this lecture come from the book:

T. F. Quatieri, Discrete-Time Speech Signal Processing: Principles and Practice, Prentice Hall, 2002

and have been used with permission from Prentice Hall.
