
PIERS Proceedings, Taipei, March 25-28, 2013

A New Parallel Version of the DDSCAT Code for Electromagnetic Scattering from Big Targets

R. W. Numrich¹, T. L. Clune², and K.-S. Kuo²,³

¹ The Graduate Center, City University of New York, New York, New York, USA

² NASA Goddard Space Flight Center, Greenbelt, Maryland, USA

³ Earth System Science Interdisciplinary Center, University of Maryland College Park, Maryland, USA

Abstract: We have modified the publicly available electromagnetic scattering code called DDSCAT to handle bigger targets with shorter execution times than the original code. A big target is one whose sphere-equivalent radius is large compared to the incident wavelength. DDSCAT uses the discrete-dipole approximation, and an accurate result requires the spacing between dipoles to be small relative to the wavelength. This requirement, along with the big target size, implies that the number of discrete grid cells defining the computational grid box surrounding the target must be large. The memory requirement is thus significant and the execution time long. The original version of the code cannot handle targets as large as those we would like to consider because they require memory exceeding what is available on a single node of our cluster and execution times extending to days. We have removed two limitations of the original version of the code. First, to speed up execution involving multiple target orientations, the original code assigned one, but only one, MPI process to each independent orientation. We surmount this limitation by assigning each orientation a team of processes. Second, the original code allocated all the memory for the full problem for each MPI process. We surmount this limitation by decomposing the data structures across the members of a team. With these changes, the new version can handle much bigger targets. Moreover, it exhibits strong scaling for fixed problem size: the execution time decreases very nearly as 1/p as the processor count p increases. Execution time measured in days with the original code can now be reduced to fractions of an hour.

1. INTRODUCTION

The discrete-dipole approximation (DDA), based on the original work of Purcell and Pennypacker [7], is a standard method for calculating electromagnetic scattering cross sections of irregularly shaped targets [6]. One of the more effective codes for this kind of calculation is the DDSCAT code from Draine and Flatau [1]. A comparable one is the ADDA code from Yurkin and Hoekstra [9]. Kahnert reviews other solution methods in a recent paper [3]. DDA reduces the scattering problem to the solution of a large, dense, complex symmetric system of linear equations,

$$A x = b \tag{1}$$

The design of a code to solve these equations, and the resulting performance of the code, depend on the data structures chosen to represent the matrix and on the solution technique selected to solve the system of equations. Since the matrix is a symmetric Toeplitz matrix, only the elements of a single row need be stored, and these elements can be decomposed and distributed across processors in a straightforward way. To solve the system of equations, the community has, after many years of experimentation and analysis, settled on using one or another variation of Krylov-based solvers [8, Chs. 6-7]. These iterative solvers require several matrix-vector multiplications at each iteration. Since the matrix is Toeplitz, representing a convolution, this operation can be performed using Fast Fourier Transforms. The matrix-vector multiplication then becomes a forward FFT of two vectors, followed by multiplication in Fourier space, followed by an inverse FFT back to physical space.

The parallel strategy adopted in the original version of the code assigns a separate MPI process to each independent target orientation. Each MPI process allocates all the memory for the full problem, which may exceed the memory available on a node for big targets, and the number of processes that can be used is limited by the number of orientations. The new code assigns a team of MPI processes to each independent orientation and partitions the data structures by the size of the team. The problem now fits in memory, and more processes can be used to reduce the execution time.
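The FFT-based matrix-vector product described in this section can be sketched in one dimension. This is a minimal illustration with NumPy, not the DDSCAT implementation: the function names `toeplitz_matvec_fft` and `toeplitz_dense` are hypothetical, and the real problem is three-dimensional with additional structure that this sketch ignores. The Toeplitz matrix is embedded in a circulant matrix of twice the size, which is what makes the zero padding necessary.

```python
import numpy as np

def toeplitz_dense(first_col, first_row):
    """Build the dense n x n Toeplitz matrix with A[i, j] = t[i - j] (for checking only)."""
    n = len(first_col)
    i, j = np.indices((n, n))
    return np.where(i >= j, first_col[i - j], first_row[j - i])

def toeplitz_matvec_fft(first_col, first_row, x):
    """Compute y = A x for a Toeplitz A using forward FFTs, a pointwise product, and an inverse FFT."""
    n = len(x)
    # First column of the 2n x 2n circulant matrix that contains A in its top-left block.
    c = np.concatenate([first_col, [0.0], first_row[:0:-1]])
    # Zero-pad x to length 2n so the circular convolution reproduces the linear one.
    x_padded = np.concatenate([x, np.zeros(n, dtype=x.dtype)])
    y = np.fft.ifft(np.fft.fft(c) * np.fft.fft(x_padded))
    return y[:n]

# Symmetric case, as in the text: the first row and first column coincide.
rng = np.random.default_rng(0)
row = rng.standard_normal(8) + 1j * rng.standard_normal(8)
x = rng.standard_normal(8) + 1j * rng.standard_normal(8)
print(np.allclose(toeplitz_dense(row, row) @ x, toeplitz_matvec_fft(row, row, x)))
```

The dense product costs on the order of n² operations, while the FFT route costs on the order of n log n, which is the saving the text refers to.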

2. THE DISCRETE-DIPOLE APPROXIMATION

DDA surrounds the target with a three-dimensional computational grid box discretized into cubical cells of volume $d^3$, with a dipole located at the center of each of the cells occupied by the target [7]. If there are $n$ dipoles in the target, the volume occupied by these dipoles is $nd^3$, resulting in a sphere-equivalent radius $a$ defined by the relationship $nd^3 = (4/3)\pi a^3$. Scattering cross sections are often measured in units equal to the cross-sectional area, $\pi a^2$ [2, Ch. 16; 4, Ch. 2]. The accuracy of the method requires [1, 10, 11] that the grid spacing between cells, $d$, be small relative to the wavelength,

$$2|m|kd < 1 \tag{2}$$

where $m$ is the complex refractive index of the target and $k = 2\pi/\lambda$ is the wavenumber.

A target is considered big if its size parameter, $ka$, is much bigger than one, i.e., $ka \gg 1$. Coupled with requirement (2), this implies a large number of dipoles, $n > (2|m|ka)^3$, and almost always an even larger number of grid cells. The memory requirement for a large target may thus exceed what is available on a single computer or a node in a cluster. Furthermore, the computational work that must be done to solve the system of equations increases with the size of the grid box, and the execution time for large targets may easily expand to days if only a single node can be used. To address these two problems, we decompose the computational box such that each partition of the box fits into the memory of a single node, and we assign a team of processors to work in parallel on each partition to reduce the execution time.
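To get a feel for how quickly the dipole count grows, the relations above can be evaluated directly. The sketch below is only an illustration: the function names are hypothetical, and the refractive index and size parameter in the usage lines are made-up values, not ones taken from this work.

```python
import math

def max_dipole_spacing(m, k):
    """Upper bound on the spacing d implied by 2|m|kd < 1 (the bound itself is excluded)."""
    return 1.0 / (2.0 * abs(m) * k)

def min_dipole_count(m, ka):
    """Lower bound n > (2|m|ka)^3 on the number of dipoles."""
    return (2.0 * abs(m) * ka) ** 3

def dipole_count_from_volume(a, d):
    """Number of dipoles from the volume relation n d^3 = (4/3) pi a^3."""
    return (4.0 / 3.0) * math.pi * (a / d) ** 3

# Hypothetical example values: refractive index m and size parameter ka.
m = complex(1.33, 0.01)
ka = 50.0
print(f"lower bound on n: {min_dipole_count(m, ka):.3e}")

# With the wavenumber set to 1, the radius a equals ka.
d_max = max_dipole_spacing(m, k=1.0)
print(f"n at the limiting spacing: {dipole_count_from_volume(ka, d_max):.3e}")
```

At the limiting spacing the volume relation gives a count that is larger than the quoted bound by the factor 4π/3, so the bound in the text is a conservative one.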

3. THE PARTITIONED COMPUTATIONAL PROBLEM

The parallel implementation strategy for any application code involves two issues: how to partition data structures and how to assign work. The original code takes into consideration one of these issues, the assignment of work, but does not consider the partition of data structures. The new version addresses both issues.

The details of the partition depend on how the system of Equation (1) is represented on the grid points of the discretized computational grid box. The vector on the right side is proportional to the incoming plane wave, $\exp(i\mathbf{k} \cdot \mathbf{r})$, with wavelength $\lambda$, wavenumber $k = |\mathbf{k}|$, and the direction of the wave vector relative to the target specified by three Euler angles. At each grid point of the computational grid box, the vector on the right side of the system of equations, $b_{ijk} = \exp[i\mathbf{k} \cdot (r_i, r_j, r_k)]$, has values proportional to the value of the plane wave at the grid point $\mathbf{r} = (r_i, r_j, r_k)$. In the same way, the values of the elements of the matrix $A$ are evaluated relative to the grid points, and the solution vector $x$ is found at each grid point. In practice, indices for the points on the three-dimensional grid are serialized so that the quantities $b$ and $x$ can be thought of as vectors of length $n^3$ and the matrix $A$ can be thought of as a matrix of size $n^3 \times n^3$.

The decomposition strategy is straightforward. The vectors involved are partitioned along one of the directions of the computational grid box. If the grid points have been serialized in lexical order, the $x$-direction first, followed by the $y$-direction and then by the $z$-direction, the vectors can be partitioned in the $z$-direction by the team size (i.e., the number of processes per team) assigned to the partition. Correspondingly, the rows of the matrix are partitioned along the $z$-direction. This partitioning introduces overhead into the code because distributed data structures must be moved among team members, but for big targets this overhead is small, as our results show in later sections.
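The partition along the z-direction can be sketched as follows. This is a minimal illustration, assuming lexical ordering with x varying fastest; the helper names and the way remainder planes are distributed are assumptions, not details taken from the code itself. Because z varies slowest, each team member's slab is one contiguous range of the serialized vector.

```python
def serialize(i, j, k, nx, ny):
    """Lexical index of grid point (i, j, k): x varies fastest, then y, then z."""
    return i + nx * (j + ny * k)

def z_slab_bounds(nz, team_size, rank):
    """Half-open range [start, stop) of z-planes owned by a team member.

    Remainder planes go to the lowest ranks (an assumed convention).
    """
    base, extra = divmod(nz, team_size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return start, stop

def serialized_range(nx, ny, nz, team_size, rank):
    """Contiguous range of serialized indices held by a team member."""
    k0, k1 = z_slab_bounds(nz, team_size, rank)
    return k0 * nx * ny, k1 * nx * ny

# Hypothetical small grid split across a team of four processes.
for rank in range(4):
    print(rank, z_slab_bounds(10, 4, rank), serialized_range(4, 3, 10, 4, rank))
```

Partitioning along the slowest-varying direction keeps each member's data contiguous, so no index gather is needed to extract a slab.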

4. PARALLEL CONVOLUTION

Special properties of the matrix $A$ make the problem tractable. The matrix is a function of the magnitude of the plane wave vector, $k = |\mathbf{k}|$, not its direction, i.e., $A = A(k)$. The matrix can thus be calculated once for each wavelength and used for all orientations of the incoming wave. It is also a Toeplitz matrix. The values of its elements depend on the distance between grid points such that, in the serialized representation, the matrix elements have the property $a_{ij} = a_{i-j}$. It can then be represented by one of its rows, a vector of length $n^3$, rather than a matrix of size $n^3 \times n^3$. The vector $b(\mathbf{k})$ and the solution vector $x(\mathbf{k})$, on the other hand, are necessarily functions of both the magnitude and the orientation of the incoming wave. Thus, for each orientation, the matrix remains the same but the right-hand-side vector changes, so the system of equations,

$$A(k)\, x(\mathbf{k}) = b(\mathbf{k}) \tag{3}$$

must be solved for the solution vector for each orientation.
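The storage saving from the Toeplitz property can be seen in a small one-dimensional example. The sketch below is a simplification: it treats a plain symmetric Toeplitz matrix, whereas the three-dimensional problem has further structure, and the function name `expand_symmetric_toeplitz` is hypothetical.

```python
import numpy as np

def expand_symmetric_toeplitz(row):
    """Expand a stored first row into the full symmetric Toeplitz matrix.

    Entry (i, j) depends only on the index difference: A[i, j] = row[|i - j|].
    """
    n = len(row)
    diff = np.abs(np.subtract.outer(np.arange(n), np.arange(n)))
    return row[diff]

# Hypothetical stored row of length 4.
row = np.array([4.0, 3.0, 2.0, 1.0], dtype=complex)
A = expand_symmetric_toeplitz(row)

print(A.real)
print("values stored:", row.size, "values in the full matrix:", A.size)
```

Only the stored row is ever needed in practice; the full matrix is expanded here solely to make the constant diagonals visible.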


When iterative Krylov solvers are used to solve this system, several matrix-vector multiplications of the kind $y = Ax$ must be performed at each iteration. Since the matrix is Toeplitz, its elements depend only on the difference of the indices, and the matrix-vector multiplication becomes a convolution, $y_i = \sum_j a_{i-j} x_j$. The operation count for this multiplication is on the order of the square of the rank of the matrix if done in the usual way. But since the Fourier transform of a convolution is the product of the Fourier transforms of the two factors, $F(y) = F(A)\,F(x)$, the operation count can be reduced to the order of the matrix size times the logarithm of the matrix size. This difference results in a significant saving of computation time. The result vector is retrieved with the inverse transform, $y = F^{-1}[F(A)\,F(x)]$.

The Fourier transform is a three-dimensional transform over the grid points of the computational box. Since the box has been decomposed along the $z$-direction, data communication among processors is required, which is not the case on a single processor. Each processor can perform the transform independently for the grid points it owns in the $xy$-plane, but the data structure must be transposed to do the transform in the $z$-direction. The matrix can be transformed once for each wavelength and left in the transposed data structure in Fourier space. It does not need to be transformed back to the original decomposed data structure. The vectors at each iteration of the Krylov solver are also left in transformed Fourier space and multiplied by the matrix. Only then is the product of the two vectors transformed back to the original partitioned data structure.

An important optimization of the convolution step results from treating the zeros that must be padded onto the vectors before performing the Fourier transform [5]. Total time for the transform, using a Fast Fourier Transform (FFT) library, is the sum of the time in each of the three dimensions, $t_1 = t_x + t_y + t_z$. If the grid extents are about the same in each dimension, the time for the transform is the same in each direction, $t_x = t_y = t_z = t_0$, and $t_1 = 3t_0$. During the FFT in the $x$-direction, the time reduces by half by not computing over the zeros in the $y$-direction and again by half by not computing over the zeros in the $z$-direction. Likewise, in the $y$-direction, the time reduces by half by not computing over the zeros in the $z$-direction. The time in the $z$-direction remains the same, so the reduced time becomes $t_2 = t_0/4 + t_0/2 + t_0 = (7/4)\,t_0$, and the speedup in performance becomes $t_1/t_2 = 3t_0/(7t_0/4) = 12/7 \approx 1.71$. For large targets, where the FFT dominates the execution time, this modification of the code may lead to significant performance speedup. For smaller targets, where the FFT is only a fraction of the total execution time, the benefit will be lower. If the transpose represents 50% of the execution time, the benefit may only be about 20%. We have measured a 12% benefit per grid point on a single processor for a test case.
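The padding optimization described above can be illustrated with NumPy by padding one direction at a time, so that one-dimensional transforms are never taken over lines that are known to be all zero. This is a sketch of the idea under the assumption that each extent is doubled by the padding; it is not the code used in DDSCAT, and the function names are hypothetical.

```python
import numpy as np

def padded_fft3_full(x):
    """Reference: zero-pad to twice the size in every direction, then transform everything."""
    nx, ny, nz = x.shape
    padded = np.zeros((2 * nx, 2 * ny, 2 * nz), dtype=complex)
    padded[:nx, :ny, :nz] = x
    return np.fft.fftn(padded)

def padded_fft3_pruned(x):
    """Same result, but each 1D pass only touches lines that can hold nonzero data."""
    nx, ny, nz = x.shape
    a = np.fft.fft(x, n=2 * nx, axis=0)  # ny * nz lines instead of 4 * ny * nz
    a = np.fft.fft(a, n=2 * ny, axis=1)  # 2 * nx * nz lines instead of 4 * nx * nz
    a = np.fft.fft(a, n=2 * nz, axis=2)  # 4 * nx * ny lines, no saving in z
    return a

def line_counts(nx, ny, nz):
    """Number of 1D transforms per direction for the full and pruned variants."""
    full = (4 * ny * nz, 4 * nx * nz, 4 * nx * ny)
    pruned = (ny * nz, 2 * nx * nz, 4 * nx * ny)
    return full, pruned

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4, 4)) + 1j * rng.standard_normal((4, 4, 4))
print(np.allclose(padded_fft3_full(x), padded_fft3_pruned(x)))

full, pruned = line_counts(4, 4, 4)
print("ratio of 1D transform counts:", sum(full) / sum(pruned))
```

For a cubic grid the count of one-dimensional transforms drops from 12 to 7 units, which reproduces the ratio 12/7 derived in the text.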

5. PARALLEL MATRIX TRANSPOSE

For multiple processors, the analysis of the time saved by the treatment of the padding differs somewhat from that for a single processor because of the nontrivial cost of the matrix transpose. The amount of data transferred during the matrix transpose, from the $xy$-direction to the $z$-direction, is reduced by a factor of 2. For large problem sizes, we have observed that the time required for the transpose was comparable to the total time for the FFT. If the transpose is bandwidth limited, the net speedup for the FFT with transpose can be estimated as $t_1/t_2 = (3t_0 + 3t_0)/(3t_0/2 + 7t_0/4) = 24/13 \approx 1.85$. We have, in fact, measured an overall speedup equal to 1.7 with the new treatment of the padding.
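The role of the transpose in a slab-decomposed three-dimensional FFT can be simulated in a single process. In the sketch below, list entries stand in for the memory of individual team members and the regrouping step stands in for the all-to-all communication; the function name `distributed_fft3` and the choice to regroup into slabs along x are assumptions made for illustration, not a description of the actual code.

```python
import numpy as np

def distributed_fft3(x, team_size):
    """Simulate a 3D FFT on data split into z-slabs across team_size members."""
    # Each member starts with a slab of z-planes.
    z_slabs = np.array_split(x, team_size, axis=2)

    # Step 1: every member transforms its own planes in x and y, with no communication.
    z_slabs = [np.fft.fft2(slab, axes=(0, 1)) for slab in z_slabs]

    # Step 2: transpose. Each member cuts its slab into pieces along x and the
    # pieces are regrouped so that every member holds the full z extent.
    pieces = [np.array_split(slab, team_size, axis=0) for slab in z_slabs]
    x_slabs = [np.concatenate(group, axis=2) for group in zip(*pieces)]

    # Step 3: every member transforms along z, which is now local.
    x_slabs = [np.fft.fft(slab, axis=2) for slab in x_slabs]

    # Gather only to compare with the serial result.
    return np.concatenate(x_slabs, axis=0)

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 5, 8)) + 1j * rng.standard_normal((6, 5, 8))
print(np.allclose(distributed_fft3(x, team_size=3), np.fft.fftn(x)))
```

Step 2 is the only stage that moves data between members, which is why its cost enters the speedup estimate separately from the FFT time.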

6. SCALING ANALYSIS

To compare performance of the old version of the code with the new version, we examine two different targets, referred to here as medium and large. The medium target fits within the memory of a single node on our machine and allows direct performance comparisons between the two code versions. The large target does not fit in the memory of a single node and can only be run with the new version. The large target illustrates that the new version of the code can handle larger targets and that, for these big targets, it exhibits strong scaling.

An important advantage of the new implementation is that it allows the code to utilize far more processors. Whereas the original code limited the number of MPI processes to the number of target orientations, typically $O(10^2)$, the new version uses a team of processes for each orientation. The number of MPI processes in a team is limited only by the size of the computational grid.

The parallel strategy of the new version of the code is well suited to the large case, which does not fit in the memory of a single node. With the new version of the code, we use teams of size 20 and distribute the MPI processes across nodes, with each process requiring one-twentieth of the memory.
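One plausible way to organize processes into teams is to derive a team identifier and a rank within the team from the global rank, which is the kind of grouping that `MPI_Comm_split` provides through its color and key arguments. The sketch below only computes the layout in plain Python; the function names, the contiguous grouping, and the round-robin assignment of orientations to teams are all assumptions, since the text does not describe how the teams are formed.

```python
def team_layout(world_size, team_size):
    """Map each global rank to (team id, rank within team) using contiguous groups."""
    if world_size % team_size != 0:
        raise ValueError("world_size must be a multiple of team_size")
    return [(rank // team_size, rank % team_size) for rank in range(world_size)]

def orientations_for_team(team_id, n_teams, n_orientations):
    """Round-robin assignment of orientation indices to teams (an assumed policy)."""
    return list(range(team_id, n_orientations, n_teams))

# Hypothetical run: 40 processes in teams of 20, with 7 orientations to compute.
layout = team_layout(world_size=40, team_size=20)
n_teams = 40 // 20
print(layout[0], layout[19], layout[20], layout[39])
for team_id in range(n_teams):
    print(team_id, orientations_for_team(team_id, n_teams, n_orientations=7))
```

With this layout the process count is the number of teams times the team size, so it is no longer capped by the number of orientations.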


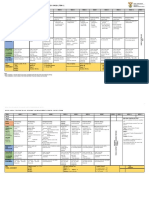

Figure 1: Execution time per grid point, $t(p)/(n_x n_y n_z)$, measured in seconds, as a function of processor count $p$. Large target with $|m|kd = 0.3563$ contained in a box of size $(n_x, n_y, n_z) = (256, 360, 360)$, marked with bullets (•). The dotted line represents perfect scaling of $p^{-1}$. The intercept at $p = 1$, where the logarithm of the time per grid point equals $-1.3$, marked with an asterisk (*), suggests that the problem on a single processor would require at least 19 days to complete, compared with about half an hour on 800 processors.

For this problem, the FFT dominates the execution time, taking almost 99% of the total. This part of the code is fully parallelized, and the execution time, for the fixed problem size, exhibits strong scaling, decreasing inversely with the processor count as illustrated in Figure 1.
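The figures quoted in the caption of Figure 1 can be checked with a few lines of arithmetic. The sketch below assumes that the intercept is a base-10 logarithm equal to -1.3 for the time per grid point in seconds; the sign and the base are assumptions, chosen because they reproduce the 19 days quoted in the caption. It also assumes ideal 1/p scaling all the way to 800 processors.

```python
GRID = (256, 360, 360)       # box size from the Figure 1 caption
LOG10_TIME_PER_POINT = -1.3  # ASSUMPTION: base-10 log of seconds per grid point at p = 1

def single_processor_seconds(grid, log10_time_per_point):
    """Extrapolated wall time on one processor for the whole grid."""
    nx, ny, nz = grid
    return (10.0 ** log10_time_per_point) * nx * ny * nz

def ideal_seconds(t1, p):
    """Wall time on p processors under perfect strong scaling, t(p) = t(1) / p."""
    return t1 / p

t1 = single_processor_seconds(GRID, LOG10_TIME_PER_POINT)
print(f"one processor: {t1 / 86400.0:.1f} days")
print(f"800 processors: {ideal_seconds(t1, 800) / 60.0:.1f} minutes")
```

Under these assumptions the single-processor estimate comes out slightly above 19 days and the 800-processor estimate at roughly half an hour, consistent with the caption.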

7. ACCURACY OF THE METHOD

The accuracy, as well as the performance, of codes that implement the discrete-dipole approximation depends on a number of empirically determined parameters [10, 11]. The distance between cells, for example, must be small relative to the wavelength of the incident wave, as expressed in inequality (2). The rate of convergence of the iterative solver also depends on the value of this parameter, with smaller values generating larger matrices that require more iterations to converge. Now that the execution time has been reduced to a few minutes for very big targets, we can perform parameter studies to test the accuracy of the results. In particular, we can follow the technique suggested by Yurkin and coworkers [11] to extrapolate the results to zero cell size.

8. SUMMARY

The major changes to the code, after decomposing the data structures, required a 3D transpose for the FFT and a global reduction for the scalar products and norms in the iterative solver. Data decomposition was by far the hardest change to make to the original code. Performance of the code improved when we removed unnecessary calls to the FFT routine for padded zeros required for the convolution. For big targets, the new code exhibits almost perfect strong scaling as the processor count increases.
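The global reduction needed for scalar products can be illustrated without MPI by splitting two vectors into per-member pieces, forming local partial sums, and adding them. In the sketch below the final `sum` stands in for a global sum reduction such as `MPI_Allreduce`; the function name is hypothetical, and the conjugated inner product used here is an assumption, since some solvers for complex symmetric systems use the unconjugated product instead.

```python
import numpy as np

def distributed_dot(x_parts, y_parts):
    """Inner product of two vectors stored as matching lists of local pieces."""
    # Local work: each team member reduces only the piece it owns.
    partial = [np.vdot(xp, yp) for xp, yp in zip(x_parts, y_parts)]
    # Global step: one scalar per member is combined across the team.
    return sum(partial)

rng = np.random.default_rng(0)
x = rng.standard_normal(12) + 1j * rng.standard_normal(12)
y = rng.standard_normal(12) + 1j * rng.standard_normal(12)

# Hypothetical team of three members, each holding a contiguous piece.
x_parts = np.array_split(x, 3)
y_parts = np.array_split(y, 3)

print(np.isclose(distributed_dot(x_parts, y_parts), np.vdot(x, y)))
print(np.isclose(np.sqrt(distributed_dot(x_parts, x_parts).real), np.linalg.norm(x)))
```

Only a single scalar per member crosses the network in this step, so its cost is small compared with the transpose.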

ACKNOWLEDGMENT

The authors are grateful to the NASA High-End Computing program for making this improvement possible.

REFERENCES

1. Draine, B. T. and P. J. Flatau, Discrete-dipole approximation for scattering calculations, J. Opt. Soc. Am. A, Vol. 11, No. 4, 1491-1499, 1994.

2. Jackson, J. D., Classical Electrodynamics, 1st Edition, John Wiley & Sons, 1962.

3. Kahnert, F. M., Numerical methods in electromagnetic scattering theory, J. Quant. Spectrosc. Radiat. Transfer, Vols. 79-80, 775-824, 2003.

4. Newton, R. G., Scattering Theory of Waves and Particles, 1st Edition, McGraw-Hill Book Company, New York, 1966.

5. Nukada, A., Y. Hourai, A. Hishida, and Y. Akiyama, High performance 3D convolution for protein docking on IBM Blue Gene, Parallel and Distributed Processing and Applications: 5th International Symposium, ISPA 2007, Vol. 4742 of Lecture Notes in Computer Science, 958-969, Springer, 2007.

6. Penttilä, A., E. Zubko, K. Lumme, K. Muinonen, M. A. Yurkin, B. Draine, J. Rahola, A. G. Hoekstra, and Y. Shkuratov, Comparison between discrete dipole implementations and exact techniques, J. Quant. Spectrosc. Radiat. Transfer, Vol. 106, 417-436, 2007.

7. Purcell, E. M. and C. R. Pennypacker, Scattering and absorption of light by nonspherical dielectric grains, Astrophys. J., Vol. 186, 705-714, 1973.

8. Saad, Y., Iterative Methods for Sparse Linear Systems, 2nd Edition, SIAM, 2003.

9. Yurkin, M. A. and A. G. Hoekstra, The discrete-dipole-approximation code ADDA: Capabilities and known limitations, J. Quant. Spectrosc. Radiat. Transfer, Vol. 112, 2234-2247, 2011.

10. Yurkin, M. A., V. P. Maltsev, and A. G. Hoekstra, Convergence of the discrete dipole approximation. I. Theoretical analysis, J. Opt. Soc. Am. A, Vol. 23, No. 10, 2578-2591, 2006.

11. Yurkin, M. A., V. P. Maltsev, and A. G. Hoekstra, Convergence of the discrete dipole approximation. II. An extrapolation technique to increase the accuracy, J. Opt. Soc. Am. A, Vol. 23, No. 10, 2592-2601, 2006.
