Professional Documents

Culture Documents

Adina Lipai

Adina Lipai

Uploaded by

Adriana CalinCopyright:

Available Formats

You might also like

- Deepthi - Webclustering Report PDFDocument38 pagesDeepthi - Webclustering Report PDFjyotiNo ratings yet

- Building Search Engine Using Machine Learning Technique I-EEEDocument6 pagesBuilding Search Engine Using Machine Learning Technique I-EEECHARAN SAI MUSHAMNo ratings yet

- Semantic Web (CS1145) : Department Elective (Final Year) Department of Computer Science & EngineeringDocument36 pagesSemantic Web (CS1145) : Department Elective (Final Year) Department of Computer Science & Engineeringqwerty uNo ratings yet

- Deepthi Webclustering ReportDocument38 pagesDeepthi Webclustering ReportRijy Lorance100% (1)

- User ProfilingDocument15 pagesUser ProfilingesudharakaNo ratings yet

- A Review On Query Clustering Algorithms For Search Engine OptimizationDocument5 pagesA Review On Query Clustering Algorithms For Search Engine Optimizationeditor_ijarcsseNo ratings yet

- Web Mining: Day-Today: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)Document4 pagesWeb Mining: Day-Today: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Lit SurveyDocument11 pagesLit SurveyShalini MuthukumarNo ratings yet

- Tadpole: A Meta Search Engine Evaluation of Meta Search Ranking StrategiesDocument8 pagesTadpole: A Meta Search Engine Evaluation of Meta Search Ranking StrategiesRula ShakrahNo ratings yet

- Similarity Search WashingtonDocument12 pagesSimilarity Search WashingtonGohar JavedNo ratings yet

- Web Mining Based On Genetic Algorithm: AIML 05 Conference, 19-21 December 2005, CICC, Cairo, EgyptDocument6 pagesWeb Mining Based On Genetic Algorithm: AIML 05 Conference, 19-21 December 2005, CICC, Cairo, EgyptAffable IndiaNo ratings yet

- Web Search Engine Based On DNS: Wang Liang, Guo Yi-Ping, Fang MingDocument9 pagesWeb Search Engine Based On DNS: Wang Liang, Guo Yi-Ping, Fang MingAinil HawaNo ratings yet

- Search Engine SeminarDocument17 pagesSearch Engine SeminarsidgrabNo ratings yet

- Technical Seminar ON Web Clustering Engines.: Department of Computer Science and EngineeringDocument15 pagesTechnical Seminar ON Web Clustering Engines.: Department of Computer Science and Engineering185G1 Pragna KoneruNo ratings yet

- CS8080 Irt Unit 4 23 24Document36 pagesCS8080 Irt Unit 4 23 24swarnaas2124No ratings yet

- Volume 2 Issue 6 2016 2020Document5 pagesVolume 2 Issue 6 2016 2020samanNo ratings yet

- Hybrid Technique For Enhanced Searching of Text Documents: Volume 2, Issue 4, April 2013Document5 pagesHybrid Technique For Enhanced Searching of Text Documents: Volume 2, Issue 4, April 2013International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Web Service CompositionDocument4 pagesWeb Service Compositionbebo_ha2008No ratings yet

- MS Project ReportDocument26 pagesMS Project ReportvirubhauNo ratings yet

- Web Data Extraction and Alignment: International Journal of Science and Research (IJSR), India Online ISSN: 2319 7064Document4 pagesWeb Data Extraction and Alignment: International Journal of Science and Research (IJSR), India Online ISSN: 2319 7064Umamageswari KumaresanNo ratings yet

- An Approach For Search Engine Optimization Using Classification - A Data Mining TechniqueDocument4 pagesAn Approach For Search Engine Optimization Using Classification - A Data Mining TechniqueInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Context Based Web Indexing For Semantic Web: Anchal Jain Nidhi TyagiDocument5 pagesContext Based Web Indexing For Semantic Web: Anchal Jain Nidhi TyagiInternational Organization of Scientific Research (IOSR)No ratings yet

- (IJCST-V3I3P47) : Sarita Yadav, Jaswinder SinghDocument5 pages(IJCST-V3I3P47) : Sarita Yadav, Jaswinder SinghEighthSenseGroupNo ratings yet

- Grouper A Dynamic Cluster Interface To Web Search ResultsDocument15 pagesGrouper A Dynamic Cluster Interface To Web Search ResultsDc LarryNo ratings yet

- Deep Web Content Mining: Shohreh Ajoudanian, and Mohammad Davarpanah JaziDocument5 pagesDeep Web Content Mining: Shohreh Ajoudanian, and Mohammad Davarpanah JaziMuhammad Aslam PopalNo ratings yet

- Seminar Report'04 3D SearchingDocument21 pagesSeminar Report'04 3D SearchingBibinMathewNo ratings yet

- Search Engine Optimization - Using Data Mining ApproachDocument5 pagesSearch Engine Optimization - Using Data Mining ApproachInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Robust Semantic Framework For Web Search EngineDocument6 pagesRobust Semantic Framework For Web Search Enginesurendiran123No ratings yet

- Study of Webcrawler: Implementation of Efficient and Fast CrawlerDocument6 pagesStudy of Webcrawler: Implementation of Efficient and Fast CrawlerIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Search Engine Personalization Tool Using Linear Vector AlgorithmDocument9 pagesSearch Engine Personalization Tool Using Linear Vector Algorithmahmed_trabNo ratings yet

- Web Mining ReportDocument46 pagesWeb Mining ReportNini P Suresh100% (2)

- Dynamic Organization of User Historical Queries: M. A. Arif, Syed Gulam GouseDocument3 pagesDynamic Organization of User Historical Queries: M. A. Arif, Syed Gulam GouseIJMERNo ratings yet

- TorrentsDocument19 pagesTorrentskunalmaniyarNo ratings yet

- Ieee FormatDocument13 pagesIeee FormatSumit SumanNo ratings yet

- IR - Assigment - RKDocument14 pagesIR - Assigment - RKjayesh.gujrathiNo ratings yet

- IRT Unit 1Document27 pagesIRT Unit 1Jiju Abutelin JaNo ratings yet

- Evaluation of Result Merging Strategies PDFDocument14 pagesEvaluation of Result Merging Strategies PDFVijay KarthiNo ratings yet

- A Language For Manipulating Clustered Web Documents ResultsDocument19 pagesA Language For Manipulating Clustered Web Documents ResultsAlessandro Siro CampiNo ratings yet

- Vector Space Model For Deep Web Data Retrieval and ExtractionDocument3 pagesVector Space Model For Deep Web Data Retrieval and ExtractionInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Generating Query Facets Using Knowledge Bases: CSE Dept 1 VMTWDocument63 pagesGenerating Query Facets Using Knowledge Bases: CSE Dept 1 VMTWMahveen ArshiNo ratings yet

- MS CS Manipal University Ashish Kumar Jha Data Structures and Algorithms Used in Search EngineDocument13 pagesMS CS Manipal University Ashish Kumar Jha Data Structures and Algorithms Used in Search EngineAshish Kumar JhaNo ratings yet

- Project FinalDocument59 pagesProject FinalRaghupal reddy GangulaNo ratings yet

- The International Journal of Engineering and Science (The IJES)Document7 pagesThe International Journal of Engineering and Science (The IJES)theijesNo ratings yet

- Advanced Algorithms Project ReportDocument10 pagesAdvanced Algorithms Project ReportVeer Pratap SinghNo ratings yet

- Thesis 1Document4 pagesThesis 1manish21101990No ratings yet

- Search Engines and Web Dynamics: Knut Magne Risvik Rolf MichelsenDocument17 pagesSearch Engines and Web Dynamics: Knut Magne Risvik Rolf MichelsenGokul KannanNo ratings yet

- Design, Analysis and Implementation of A Search Reporter System (SRS) Connected To A Search Engine Such As Google Using Phonetic AlgorithmDocument12 pagesDesign, Analysis and Implementation of A Search Reporter System (SRS) Connected To A Search Engine Such As Google Using Phonetic AlgorithmAJER JOURNALNo ratings yet

- Resource Capability Discovery and Description Management System For Bioinformatics Data and Service Integration - An Experiment With Gene Regulatory NetworksDocument6 pagesResource Capability Discovery and Description Management System For Bioinformatics Data and Service Integration - An Experiment With Gene Regulatory Networksmindvision25No ratings yet

- User Modeling For Personalized Web Search With Self-Organizing MapDocument14 pagesUser Modeling For Personalized Web Search With Self-Organizing MapJaeyong KangNo ratings yet

- Ambiguity Resolution in Information RetrievalDocument7 pagesAmbiguity Resolution in Information RetrievalDarrenNo ratings yet

- This Is The Original WebCrawler PaperDocument13 pagesThis Is The Original WebCrawler PaperAakash BathlaNo ratings yet

- Clustering Algorithm PDFDocument5 pagesClustering Algorithm PDFisssac_AbrahamNo ratings yet

- Working of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitDocument13 pagesWorking of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitaviNo ratings yet

- Towards Automatic Incorporation of Search Engines Into ADocument19 pagesTowards Automatic Incorporation of Search Engines Into ARobert PerezNo ratings yet

- International Journal of Engineering Research and Development (IJERD)Document5 pagesInternational Journal of Engineering Research and Development (IJERD)IJERDNo ratings yet

- Sample 3 - OutputDocument5 pagesSample 3 - OutputHany aliNo ratings yet

- 4.an EfficientDocument10 pages4.an Efficientiaset123No ratings yet

- An Improved Heuristic Approach To Page Recommendation in Web Usage MiningDocument4 pagesAn Improved Heuristic Approach To Page Recommendation in Web Usage MiningInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Working of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitDocument10 pagesWorking of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitaviNo ratings yet

- 17 - Ciovica, CristescuDocument7 pages17 - Ciovica, CristescuAdriana CalinNo ratings yet

- 06 - Cristescu, CiovicaDocument13 pages06 - Cristescu, CiovicaAdriana CalinNo ratings yet

- 05 - PopescuDocument11 pages05 - PopescuAdriana CalinNo ratings yet

- More General Credibility Models: F F P F FDocument6 pagesMore General Credibility Models: F F P F FAdriana CalinNo ratings yet

- Pages 4 5-15 Sandra MARTISIUTE, Gabriele VILUTYTE, Dainora GRUNDEY 16-22Document1 pagePages 4 5-15 Sandra MARTISIUTE, Gabriele VILUTYTE, Dainora GRUNDEY 16-22Adriana CalinNo ratings yet

- Ef 4Document1 pageEf 4Adriana CalinNo ratings yet

- Designing Algorithms Using CAD Technologies: Keywords: Diagrams, Algorithms, Programming, Code GenerationDocument3 pagesDesigning Algorithms Using CAD Technologies: Keywords: Diagrams, Algorithms, Programming, Code GenerationAdriana CalinNo ratings yet

- 15 Ivan, MilodinDocument7 pages15 Ivan, MilodinAdriana CalinNo ratings yet

- Developing Performance-Centered Systems For Higher EducationDocument5 pagesDeveloping Performance-Centered Systems For Higher EducationAdriana CalinNo ratings yet

- 2001 - Iulia Dorobat, Floarea NastaseDocument12 pages2001 - Iulia Dorobat, Floarea NastaseAdriana CalinNo ratings yet

- 11 - MoceanDocument8 pages11 - MoceanAdriana CalinNo ratings yet

- One World - One Accounting: Devon Erickson, Adam Esplin, Laureen A. MainesDocument7 pagesOne World - One Accounting: Devon Erickson, Adam Esplin, Laureen A. MainesAdriana CalinNo ratings yet

- Planning For The Succession of Digital Assets: Laura MckinnonDocument6 pagesPlanning For The Succession of Digital Assets: Laura MckinnonAdriana CalinNo ratings yet

- Common Lesson Plan 3 - Kelly SibrianDocument4 pagesCommon Lesson Plan 3 - Kelly Sibrianapi-510450123No ratings yet

- Grade 5 Chapter 1 The Fish TaleDocument15 pagesGrade 5 Chapter 1 The Fish TaleThulirgalNo ratings yet

- Excavadora 270 CLC John Deere 1719Document524 pagesExcavadora 270 CLC John Deere 1719Angel Rodriguez100% (1)

- lm723 PDFDocument21 pageslm723 PDFjet_media100% (1)

- Classified2019 2 3564799 PDFDocument9 pagesClassified2019 2 3564799 PDFaarianNo ratings yet

- Bakery Report (Interview)Document11 pagesBakery Report (Interview)Abhilasha AgarwalNo ratings yet

- The NEC3 Engineering and Construction Contract - A Comparative Analysis - Part 1 - Energy and Natural Resources - United KingdomDocument7 pagesThe NEC3 Engineering and Construction Contract - A Comparative Analysis - Part 1 - Energy and Natural Resources - United Kingdomiqbal2525No ratings yet

- Binary ClassificationMetrics CheathsheetDocument7 pagesBinary ClassificationMetrics CheathsheetPedro PereiraNo ratings yet

- D493027 en PDFDocument39 pagesD493027 en PDFJose ValenciaNo ratings yet

- Características Dimensiones HofstedeDocument9 pagesCaracterísticas Dimensiones HofstedeJaz WoolridgeNo ratings yet

- Dot Point Physics PreliminaryDocument70 pagesDot Point Physics Preliminaryjdgtree0850% (10)

- 5E LP - Mixture (Year 2) Group 4C2 UpdateDocument8 pages5E LP - Mixture (Year 2) Group 4C2 UpdatecancalokNo ratings yet

- Homo LumoDocument12 pagesHomo LumoShivam KansaraNo ratings yet

- Assignment 3Document7 pagesAssignment 3Midhun MNo ratings yet

- Rubric For Note TakingDocument1 pageRubric For Note TakingJena Mae GonzalesNo ratings yet

- PROGRAM 1: Write A Program To Check Whether A Given Number Is Even or OddDocument45 pagesPROGRAM 1: Write A Program To Check Whether A Given Number Is Even or OddShobhita MahajanNo ratings yet

- Homework: Name DateDocument24 pagesHomework: Name DateboysNo ratings yet

- Raw Material CatalogueDocument6 pagesRaw Material CatalogueKhalil EbrahimNo ratings yet

- Stochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008Document132 pagesStochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008.cadeau01No ratings yet

- Recruitment For The Post of Office AttendantDocument2 pagesRecruitment For The Post of Office AttendantrajnagpNo ratings yet

- 8-2-16 Tina LindaDocument8 pages8-2-16 Tina LindaERICK SIMANJUNTAKNo ratings yet

- Computing Devices (Ii)Document8 pagesComputing Devices (Ii)Adaeze Victoria Pearl OnyekwereNo ratings yet

- Analyzing Your HR LandscapeDocument2 pagesAnalyzing Your HR LandscapeKashis Kumar100% (1)

- Sales Document StructureDocument5 pagesSales Document StructureShashi RayappagariNo ratings yet

- Second Language Lexical Acquisition: The Case of Extrovert and Introvert ChildrenDocument10 pagesSecond Language Lexical Acquisition: The Case of Extrovert and Introvert ChildrenPsychology and Education: A Multidisciplinary JournalNo ratings yet

- Arcelormittal 4q 22 Esg PresentationDocument30 pagesArcelormittal 4q 22 Esg PresentationmukeshindpatiNo ratings yet

- Department of Education: Professional Development PlanDocument2 pagesDepartment of Education: Professional Development PlanJen Apinado80% (5)

- SWRPG Starship VehicleSheetsDocument2 pagesSWRPG Starship VehicleSheetsPatrick J Smith100% (1)

- Mkomagi Et Al 2023. Relationship-Between-Project-Benefits-And-Sustainability-Of-Activities-A-Comparative-Analysis-Of-Selected-Donor-Funded-Agriculture-Related-Projects-In-TanzaniaDocument23 pagesMkomagi Et Al 2023. Relationship-Between-Project-Benefits-And-Sustainability-Of-Activities-A-Comparative-Analysis-Of-Selected-Donor-Funded-Agriculture-Related-Projects-In-TanzaniaJeremiah V. MkomagiNo ratings yet

- Datasheet Pattress Plates 2012Document4 pagesDatasheet Pattress Plates 2012Anil KumarNo ratings yet

Adina Lipai

Adina Lipai

Uploaded by

Adriana CalinOriginal Description:

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Adina Lipai

Adina Lipai

Uploaded by

Adriana CalinCopyright:

Available Formats

Revista Informatica Economic nr.

2(46)/2008

World Wide Web Metasearch Clustering Algorithm

Adina LIPAI Academy of Economic Studies, Bucharest As the storage capacity and the processing speed of search engine is growing to keep up with the constant expansion of the World Wide Web, the user is facing an increasing list of results for a given query. A simple query composed of common words sometimes have hundreds even thousands of results making it practically impossible for the user to verify all of them, in order to identify a particular site. Even when the list of results is presented to the user ordered by a rank, most of the time it is not sufficient support to help him identify the most relevant sites for his query. The concept of search result clustering was introduced as a solution to this situation. The process of clustering search results consists of building up thematically homogenous groups from the initial list results provided by classic search tools, and using up characteristics present within the initial results, without any kind of predefined categories. Keywords: search results, clustering algorithm, World Wide Web search.

ntroduction Trying to keep up with the continuous growth of World Wide Web (WWW) the searching tools are engaged in a permanent race for ever faster development in order to reach better performances. In the initial stages the general trend of development was concentrated on bigger data bases, bigger document bases, which to store the web pages accordingly. When the document storage reached considerable sizes the problem of better indexation was addressed. The bigger the storage capacity it became the more performing the indexing algorithm had to be in order to keep the web pages properly ordered. But the WWW was still growing with increasingly speed, so the crawler module had to be developed to reach higher speed in finding and downloading new pages. For many years it was believed that the bigger the data base of the search engine is, the more performing it will be. The more and more efficient crawler was downloading pages at a ever higher speed and proper indexer algorithm was constructing the permanently increasing document base. As a result, the modules were the focus of search engine development for many years. But when document bases reached billions and tens of billions of documents, and the crawlers were downloading new documents

at a speed of hundreds, or even thousand a day, a new problem appeared. With such big quantity of pages, the indexer was retrieving and presenting to a user longer and longer list as result to a query. The simpler and more common the query is the more results will be returned, rendering the user unable to check all of them in order to identify the web pages that best fit his needs. Thus another efficiency criterion was introduced: easy retrieval of the information needed within the results provided by the search tool. The easy retrieval is evaluated both from the speeds perspective (the fastest the user finds what he is looking for, the better) and from the relevance of the results (did he find exactly what he needs, or just almost). A fist solution implemented to solve this problem was ordering the returned results in a list based on some sort of relevance criteria (the more relevant the result was, the higher in the list it would be displayed). Even so, the required result is sometimes hard to find because it is not in the first 20 50 results displayed. The algorithm for clustering search results presented in this paper address this issue. Clustering web metasearch results Clustering search tools results means grouping them into object classes which are

Revista Informatica Economic nr.2(46)/2008

constructed using the search results characteristics, with the purpose of simplifying the users work to retrieve the information it needs, helping him to find faster better quality results. Metasearch means the use of more search tools simultaneously for the same query. Organising search results in clusters is not meant to replace the classical way of presenting results in ranked lists ([Brin, 98]). Its purpose is to provide supplementary organisation for those results. The clustering method will provide a series of search results clusters, with the property that the pages inside one cluster are similar to each other, and the pages belonging to different clusters differ from one another. Inside each cluster the initial ranking order provided will be preserved. To justify its implementation, a search result clustering module has to meet the following requests: � It has to simplify the users work in finding the results it needs in the search result list, at a faster speed;

Web search results:

Web page list, ordered by a ranking algorithm

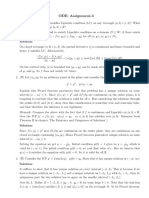

� The module must not over crowd the search tools resources; � It must not over extend the time needed to find the proper web page (e.g. if Google offers results for a query in few milliseconds, an clustering process that will take tens of seconds will be unsatisfactory ([Leouski, 1996]). In order to make the users job more easy the clustering algorithm will divide the list of results in homogenous groups. This way, the user will have to identify the cluster in which his pages are most likely to be and only search through that cluster, ignoring the other clusters. In the image below we have represented schematically the basic principles of how clustering method operates. The initial results R1 , R2 ,..., Rn form a list, which after clustering will become part of one cluster C 1 C n . The cluster can differ in size accordingly to what web pages meet the subject criteria of that cluster.

C1

Rank ordered pages inside a cluster R1 R4 ... Rv

R1:.... R2:.... ... Rv ... Rn:....

Clustering

Cn

R2 ... Rn

Fig.1. Web search result clustering Steps in web search results clustering process 1. Obtaining the web page list 2. Document pre-processing 3. Transforming the documents into vector representations 4. K-means processing, with adequate adaptations for web pages 5. Constructing cluster like representation for final results 1. Obtaining the web page list The initial web page list is obtained by reuniting all results from all search tools used in one centralised list, which will be used to cluster the results. In order to obtain the web page list the next steps have to be done: a) Elimination of multiple links: because the results are obtained from more search tools its more than likely the same site will be returned by more than one tool. b) Calculating the new rank: the formula below will be used Ranknew = IM * NrRez+ IRez , (1) Ranknew : the new rank calculated for each web page

Revista Informatica Economic nr.2(46)/2008

IM : the index of the search engine which provided this page NrRez : total number of results after the duplicates were eliminated IRez : the index of the page in the IM search tool c) Duplicate web pages will receive the rank from the search tool with the lowest index 2. Document pre-processing The clustering algorithm uses information from the pages in order to determine its subject or characteristics. Most document clustering algorithms use the whole document for this process, but such approach would slow our web page clustering algorithm to much. Therefore the algorithm will only use the snippets provided by the initial search tools. Previous work has showed that snippets provide good quality description of web pages, so justifying their use ([Leuski, 2000]). The title of the web page will also be used, if there is one. Title words will become then more important for classification than snippet words. Web pages processing is made up by the following operations:

a) tag cleaning: eliminating portions of the web document which are strictly related to text formatting b) lexical analyse: the purpose of the analyse is to identify distinct words. The process implies eliminating useless characters such as comma, punctuation marks, sometimes numbers, or characters like: #, $... c) word root extraction: the goal is to obtain an homogenous one-word description of similar but not identical words. The word obtain in the end doesnt have to have a meaning, nor be grammatically correct, but it will contain the description and similarity with all the other words that it represents. For example: implementing, implementation, implemented will be described by the root word implement. d) stop word elimination: a stop word is a word that does not have an informational value. In all languages there are a series of words which are considered stop words. For example: on, and, the, in, etc. e) establishing index words: an index word, is a word that is representative in the context of the document. In the pictures below we have represented the document pre-processing steps:

a) Lexical analyse

b) Final list after all steps Fig.2. Web pages pre-processing duplicate eliminations, the final search result list. Supposing we have: � M web pages: d1, d2,... , dM and � N indexed words from 1 to N Than, one web page will be represented in vector space using the following formula: di = [wi1, wi2, , wiM] (2) where wiM represents the weight of word j from document di Example:

3. Transforming the documents into vector representations In order to use the k-means clustering algorithm we need to transform each document into a vector. The vectors will have the same size, turning our result list into an M*N matrix. Each line represents one web page and each column represents one word. The N dimension represents the total number of words that will be processed, from all documents. The dimension M represents the remaining number of web pages after the

Revista Informatica Economic nr.2(46)/2008

Table 1. Vector representation of web page Document (title Word 1: represented) automatic d1 = Data mining course 0.4 d2 = Software development 0.01 .

Word computer 0.1 0.9

2: Word date 0.8 0.3

3: .. .

Word N: analyse 0.7 .5

The words 1..N are usually the index words, and they are obtained after the pre-processing the documents. Assigning each word a weight its crucial in order to distinguish the more relevant words from the less important ones. In the end we will have quantified the importance that each word has for a given web page. Transforming the documents into vector space representations requires the following steps:

I. Index word vector identification: it implies joining together in one vector all the index terms from all the documents. II. Establishing the document vector matrix: for each document and each index term we will count the number of times one word appears in the same page. For example, using as search query the words data mining we will have the next vector space representation for the results:

Table 2. Vector space representation of search results

Document / course Data Mining content Discovery knowledge Words d1 2 3 3 1 1 1 d2 0 2 2 0 0 0 d3 1 3 3 0 1 1 base 1 0 1 automatic computer 0 0 1 1 0 0

III. Weight word determination for each document In order to calculate each words weight we will use the next formula: wij = fTij * log(n / fd j ) (3) [Salton, 89]

fTij : the frequency of word t j in d i document fd i : total number of web pages in which the word t j is present.

wij : the weight of word t j from d i document

Table 3. Word weight of vector documents

Document / Words course d1 0.176 d2 0 d3 0.176 Data 0 0 0 Mining 0 0 0 content 0.477 0 0 Discovery 0.176 0 0.176 knowledge 0.176 0 0.176 base 0.176 0 0.176 Auto matic 0 0.477 0 computer 0 0.477 0

The term log(n / fd j ) is also called inverted document frequency. The words that have a higher frequency in a page will most likely be a better description than the words that have low frequency. But also, the terms that have high frequency in all documents, will not make good description for differences that appear between pages. The term inverted document frequency intends to minimise the importance of the words that appear

frequently in all documents. ([Chi, 2004]). In the following table we have the weights calculated for the example above. The initial query words (data, mining) will receive weight 0 because they are present in all the results initially retrieved by the search tools, therefore they do not bring any informational gain.

Revista Informatica Economic nr.2(46)/2008

4. K-means processing, with adequate adaptations for web pages Classical K-means clustering algorithm K-means algorithm uses numerical input to build up distinct clusters. It splits the total data set into exclusive clusters using a measure called distance. The distance can be calculated using many formulas, but basically having the meaning of metric distance. K-means algorithm is as follows: Step 1: The number of clusters is provided as input: k parameter. Step 2: K points are randomly selected. They will be the first cluster centres: C1, ..., Ck Step 3: The algorithm will place each instance in the cluster to which centre is closest according to the distance measure used. Step 4: After each instance is distributed, for each cluster, the cluster centroid is recalculated using all the instances that are now part of that cluster. Step 5: The new centre of the cluster is the calculated centroid. Step 6: We start the process from step 2, with the new centres. The clustering process is stopped when the same instance is repeatedly distributed to the same cluster. At this point we say the clusters are stable. K-means adapted for web search result clustering has the following demands: � High processing speed � The ability to create high quality clusters � It has to provide as output a description and a label for each cluster. This label and description will enable the user to identify the right cluster for his search. Implementing the algorithm for search result clustering Just as in traditional k-means clustering, a cluster will be represented by its centre or centroid ([Baeza, 99]). For web page clustering this centroid will represent a weighted word document within the vector space documents. This centroid will be called the representative of cluster k noted Rk . In order for Rk to be equivalent with the centre of clusters from the traditional k-means

clustering, it must meet the following conditions: � Each document d i from C k has joined words with R k � The words in R k also appear in the most documents in C k � Not all the words from R k have to also appear in all the documents from C k � The weight of words t j from R k it is calculated as an average weight of all the words present in all documents C k ([Chi, 2004]). After determine the representatives of the cluster, the web metasearch results clustering algorithm is as follows: Input data: � D N web pages (or documents) (provided by the initial search) � K number of clusters (accordingly to kmeans traditional algorithm) Output data: � C k clusters with documents (the clusters can overlap, meaning the same page can be assigned to more than just one cluster, for each distribution there will be an weight assigned to the document) Step 1: K documents from the web page list are randomly selected � This k documents will form the starting representatives of the clusters � We will have: o C1, C 2 ,..., C k clusters

o R1 , R2 ,..., Rk clusters representative Step 2: for each web page from D: d i D and each cluster C k , k = 1, K � We will calculate the similarity between the web page and the representative of the cluster: S (d i , Rk (C k )) Step 3: if the similarity is bigger than a given threshold S (d i , Rk (C k )) > , than: � The document d i will be assigned to cluster C k with a weight attached, the weight being

10

Revista Informatica Economic nr.2(46)/2008

calculated based upon the similarity value:

m( d i , C k ) = S ( d i , Rk (C k ))

Step 4: for each cluster the representative is re-calculated taking into account the new documents that were assigned to that particular cluster Step 5: the process is re-started from step 2 until the new modifications are under a given threshold Step 6: for each web page d n that was not assigned to any cluster: � The closest neighbourhood will be calculated V (d u ) , the neighbourhood must not contain documents that have similarity measure zero � The d n web page will be assigned to the cluster to which the neighbourhood V (d u ) belongs to � The weight for d n assignation to C k is calculated

m(dn , Ck ) = m(V(du ),Ck ) * S(dn , (V(du )))

Step 7: for each cluster C k the representative of the cluster Rk is recalculated Similarity calculation is done using the next formula:

S ( x, y ) =

x y

i =1 i t i =1 2 i

i t

, (4) also named

2 i

x + y

i =1

Saltons cosine coefficient ([Steinbach, 2000]) 5. Constructing cluster like representation for final results a. Label extraction: for each clusters the sequence of words with the highest frequency will be assigned as label for that class. If a sequence of words is not found than a single word can be used b. Creating the cluster structure: determining which cluster will follow which will be done using both the size of the clusters and the weight of the pages inside the cluster. The bigger the cluster and the higher the weight of the documents inside it, the higher the cluster will be in the cluster

structure hierarchy. Also a measure of cluster quality can sometimes be calculated. c. Cluster result presentation: in the preprocessing phase we extracted the root for the similar words and we eliminated the stop words, and some other modifications took place in order to make the document suitable for clustering. In order for the user to understand what a page is about it must be presented with a short relevant description of that web page. In almost all cases the initial search result list provides a short description (the snippet). That description can be kept, and re-presented to the user after clusters are made, but it can also be enhanced with the particular characteristics of the cluster assignment. Search result clustering integration in user search process The clustering module will present to the user a simple interface which is used to provide to the tool the search query and all the search preferences. The clustering module will take this words and options and automatically feed it to all the search tools that are used in the web search process (e.g. Google, Yahoo, MSN search, etc.). For our example we have used local clustering. All the processing needed for clustering will be made on the local computer after all the search tools used have provided their own list of results. After the search and clustering is finished, the user is presented with the final cluster structure, each cluster having attached to it a label and a description, and inside the cluster each web page having its own description and link to the page. In picture 3 we have represented the place of the web metasearch clustering module. The interface provided by the clustering module must provide the user tools necessary to refine its search, like: � Language support � Ability to add / delete the search engines used for the metasearch � Flexible presentation of results � Possibility to ask a certain number of clusters � Special filters: for blog sites, adult sites, etc.

Distinct clusters of subject different web pages

Revista Informatica Economic nr.2(46)/2008

11

Query User Clusters

I N T E R F A C E

Query Clusters Search results Web search clustering module

Search tools - Yahoo - Google - MSN - AltaVista - Lycos ...

Options, settings, user options, user preferences...

Fig.3. The place of the web search clustering module in online user search process Conclusion The paper presented a web page clustering algorithm and the solution to integrate it in user query web search process. The paper started by presenting the circumstances in which the need for web search result clustering algorithm appeared and it presented the characteristics that a clustering module needs to meet in order to be practically efficient. After that we presented a classical approach of k-means clustering algorithm, and the modifications it undertook in order to be adapted to a web page clustering process. The web page clustering process was than described in detail starting with the initial document processing steps, and moving to the clustering process itself. In the end it was presented the representation requirements that the clustering module must meet in order to be efficient in a web search. Even if clustering web search results is only a soft solution for enhancing a users work to find the web pages it needs, it can greatly improve the results of a web search, in both time and quality. The idea to cluster web search results appeared for fist time in 2002, but the solution did not became widely popular even to this day due to the lack of understanding of its mechanisms and improvements that brings to a web search. This paper presented the user benefits given by such a tool compared to the efforts needed to implement it. Bibliography [Brin, 98] Brin, S., i Page, L., The anatomy of a large-scale hypertextual Web search

engine, In Proceedings of the Seventh International World WideWeb Conference, April 1998. [Chi, 2004] Chi, N., L., A tolerance rough set approach to clustering web search results, Master thesis in computer science, index 1891191, Faculty of Mathematics, Informatics and Mechanics, Warsaw University, 2003. [Salton, 89] Salton, G. Automatic text processing: the transformation, analysis, and retrieval of information by computer. Addison-Wesley Longman Publishing Co., Inc., 1989. [Steinbach, 2000] Steinbach, M., Karypis, G., and Kumar, V. A comparison of document clustering techniques. In KDD Workshop on TextMining (2000). [Leouski, 1996] Leouski A. V. and Croft W. B. An Evaluation of Techniques for Clustering Search Results. Technical Report IR-76, Department of Computer Science, University of Massachusetts, Amherst, 1996. (document available at: http://citeseer.ist.psu.edu/leouski96evaluation .html, ) [Leuski, 2000] Leuski A. and Allan J. Improving Interactive Retrieval by Combining Ranked List and Clustering. Proceedings of RIAO, College de France, pp. 665-681, 2000. (document available at: http://citeseer.ist.psu.edu/298378.html) [Baeza, 99] Baeza-Yates, R., Ribeiro-Neto, B., Modern Information Retrieval, Publisher: Addison Wesley; ACM Press, New York. (May 15, 1999)

You might also like

- Deepthi - Webclustering Report PDFDocument38 pagesDeepthi - Webclustering Report PDFjyotiNo ratings yet

- Building Search Engine Using Machine Learning Technique I-EEEDocument6 pagesBuilding Search Engine Using Machine Learning Technique I-EEECHARAN SAI MUSHAMNo ratings yet

- Semantic Web (CS1145) : Department Elective (Final Year) Department of Computer Science & EngineeringDocument36 pagesSemantic Web (CS1145) : Department Elective (Final Year) Department of Computer Science & Engineeringqwerty uNo ratings yet

- Deepthi Webclustering ReportDocument38 pagesDeepthi Webclustering ReportRijy Lorance100% (1)

- User ProfilingDocument15 pagesUser ProfilingesudharakaNo ratings yet

- A Review On Query Clustering Algorithms For Search Engine OptimizationDocument5 pagesA Review On Query Clustering Algorithms For Search Engine Optimizationeditor_ijarcsseNo ratings yet

- Web Mining: Day-Today: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)Document4 pagesWeb Mining: Day-Today: International Journal of Emerging Trends & Technology in Computer Science (IJETTCS)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Lit SurveyDocument11 pagesLit SurveyShalini MuthukumarNo ratings yet

- Tadpole: A Meta Search Engine Evaluation of Meta Search Ranking StrategiesDocument8 pagesTadpole: A Meta Search Engine Evaluation of Meta Search Ranking StrategiesRula ShakrahNo ratings yet

- Similarity Search WashingtonDocument12 pagesSimilarity Search WashingtonGohar JavedNo ratings yet

- Web Mining Based On Genetic Algorithm: AIML 05 Conference, 19-21 December 2005, CICC, Cairo, EgyptDocument6 pagesWeb Mining Based On Genetic Algorithm: AIML 05 Conference, 19-21 December 2005, CICC, Cairo, EgyptAffable IndiaNo ratings yet

- Web Search Engine Based On DNS: Wang Liang, Guo Yi-Ping, Fang MingDocument9 pagesWeb Search Engine Based On DNS: Wang Liang, Guo Yi-Ping, Fang MingAinil HawaNo ratings yet

- Search Engine SeminarDocument17 pagesSearch Engine SeminarsidgrabNo ratings yet

- Technical Seminar ON Web Clustering Engines.: Department of Computer Science and EngineeringDocument15 pagesTechnical Seminar ON Web Clustering Engines.: Department of Computer Science and Engineering185G1 Pragna KoneruNo ratings yet

- CS8080 Irt Unit 4 23 24Document36 pagesCS8080 Irt Unit 4 23 24swarnaas2124No ratings yet

- Volume 2 Issue 6 2016 2020Document5 pagesVolume 2 Issue 6 2016 2020samanNo ratings yet

- Hybrid Technique For Enhanced Searching of Text Documents: Volume 2, Issue 4, April 2013Document5 pagesHybrid Technique For Enhanced Searching of Text Documents: Volume 2, Issue 4, April 2013International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Web Service CompositionDocument4 pagesWeb Service Compositionbebo_ha2008No ratings yet

- MS Project ReportDocument26 pagesMS Project ReportvirubhauNo ratings yet

- Web Data Extraction and Alignment: International Journal of Science and Research (IJSR), India Online ISSN: 2319 7064Document4 pagesWeb Data Extraction and Alignment: International Journal of Science and Research (IJSR), India Online ISSN: 2319 7064Umamageswari KumaresanNo ratings yet

- An Approach For Search Engine Optimization Using Classification - A Data Mining TechniqueDocument4 pagesAn Approach For Search Engine Optimization Using Classification - A Data Mining TechniqueInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Context Based Web Indexing For Semantic Web: Anchal Jain Nidhi TyagiDocument5 pagesContext Based Web Indexing For Semantic Web: Anchal Jain Nidhi TyagiInternational Organization of Scientific Research (IOSR)No ratings yet

- (IJCST-V3I3P47) : Sarita Yadav, Jaswinder SinghDocument5 pages(IJCST-V3I3P47) : Sarita Yadav, Jaswinder SinghEighthSenseGroupNo ratings yet

- Grouper A Dynamic Cluster Interface To Web Search ResultsDocument15 pagesGrouper A Dynamic Cluster Interface To Web Search ResultsDc LarryNo ratings yet

- Deep Web Content Mining: Shohreh Ajoudanian, and Mohammad Davarpanah JaziDocument5 pagesDeep Web Content Mining: Shohreh Ajoudanian, and Mohammad Davarpanah JaziMuhammad Aslam PopalNo ratings yet

- Seminar Report'04 3D SearchingDocument21 pagesSeminar Report'04 3D SearchingBibinMathewNo ratings yet

- Search Engine Optimization - Using Data Mining ApproachDocument5 pagesSearch Engine Optimization - Using Data Mining ApproachInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Robust Semantic Framework For Web Search EngineDocument6 pagesRobust Semantic Framework For Web Search Enginesurendiran123No ratings yet

- Study of Webcrawler: Implementation of Efficient and Fast CrawlerDocument6 pagesStudy of Webcrawler: Implementation of Efficient and Fast CrawlerIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Search Engine Personalization Tool Using Linear Vector AlgorithmDocument9 pagesSearch Engine Personalization Tool Using Linear Vector Algorithmahmed_trabNo ratings yet

- Web Mining ReportDocument46 pagesWeb Mining ReportNini P Suresh100% (2)

- Dynamic Organization of User Historical Queries: M. A. Arif, Syed Gulam GouseDocument3 pagesDynamic Organization of User Historical Queries: M. A. Arif, Syed Gulam GouseIJMERNo ratings yet

- TorrentsDocument19 pagesTorrentskunalmaniyarNo ratings yet

- Ieee FormatDocument13 pagesIeee FormatSumit SumanNo ratings yet

- IR - Assigment - RKDocument14 pagesIR - Assigment - RKjayesh.gujrathiNo ratings yet

- IRT Unit 1Document27 pagesIRT Unit 1Jiju Abutelin JaNo ratings yet

- Evaluation of Result Merging Strategies PDFDocument14 pagesEvaluation of Result Merging Strategies PDFVijay KarthiNo ratings yet

- A Language For Manipulating Clustered Web Documents ResultsDocument19 pagesA Language For Manipulating Clustered Web Documents ResultsAlessandro Siro CampiNo ratings yet

- Vector Space Model For Deep Web Data Retrieval and ExtractionDocument3 pagesVector Space Model For Deep Web Data Retrieval and ExtractionInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Generating Query Facets Using Knowledge Bases: CSE Dept 1 VMTWDocument63 pagesGenerating Query Facets Using Knowledge Bases: CSE Dept 1 VMTWMahveen ArshiNo ratings yet

- MS CS Manipal University Ashish Kumar Jha Data Structures and Algorithms Used in Search EngineDocument13 pagesMS CS Manipal University Ashish Kumar Jha Data Structures and Algorithms Used in Search EngineAshish Kumar JhaNo ratings yet

- Project FinalDocument59 pagesProject FinalRaghupal reddy GangulaNo ratings yet

- The International Journal of Engineering and Science (The IJES)Document7 pagesThe International Journal of Engineering and Science (The IJES)theijesNo ratings yet

- Advanced Algorithms Project ReportDocument10 pagesAdvanced Algorithms Project ReportVeer Pratap SinghNo ratings yet

- Thesis 1Document4 pagesThesis 1manish21101990No ratings yet

- Search Engines and Web Dynamics: Knut Magne Risvik Rolf MichelsenDocument17 pagesSearch Engines and Web Dynamics: Knut Magne Risvik Rolf MichelsenGokul KannanNo ratings yet

- Design, Analysis and Implementation of A Search Reporter System (SRS) Connected To A Search Engine Such As Google Using Phonetic AlgorithmDocument12 pagesDesign, Analysis and Implementation of A Search Reporter System (SRS) Connected To A Search Engine Such As Google Using Phonetic AlgorithmAJER JOURNALNo ratings yet

- Resource Capability Discovery and Description Management System For Bioinformatics Data and Service Integration - An Experiment With Gene Regulatory NetworksDocument6 pagesResource Capability Discovery and Description Management System For Bioinformatics Data and Service Integration - An Experiment With Gene Regulatory Networksmindvision25No ratings yet

- User Modeling For Personalized Web Search With Self-Organizing MapDocument14 pagesUser Modeling For Personalized Web Search With Self-Organizing MapJaeyong KangNo ratings yet

- Ambiguity Resolution in Information RetrievalDocument7 pagesAmbiguity Resolution in Information RetrievalDarrenNo ratings yet

- This Is The Original WebCrawler PaperDocument13 pagesThis Is The Original WebCrawler PaperAakash BathlaNo ratings yet

- Clustering Algorithm PDFDocument5 pagesClustering Algorithm PDFisssac_AbrahamNo ratings yet

- Working of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitDocument13 pagesWorking of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitaviNo ratings yet

- Towards Automatic Incorporation of Search Engines Into ADocument19 pagesTowards Automatic Incorporation of Search Engines Into ARobert PerezNo ratings yet

- International Journal of Engineering Research and Development (IJERD)Document5 pagesInternational Journal of Engineering Research and Development (IJERD)IJERDNo ratings yet

- Sample 3 - OutputDocument5 pagesSample 3 - OutputHany aliNo ratings yet

- 4.an EfficientDocument10 pages4.an Efficientiaset123No ratings yet

- An Improved Heuristic Approach To Page Recommendation in Web Usage MiningDocument4 pagesAn Improved Heuristic Approach To Page Recommendation in Web Usage MiningInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Working of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitDocument10 pagesWorking of Search Engines: Avinash Kumar Widhani, Ankit Tripathi and Rohit Sharma LnmiitaviNo ratings yet

- 17 - Ciovica, CristescuDocument7 pages17 - Ciovica, CristescuAdriana CalinNo ratings yet

- 06 - Cristescu, CiovicaDocument13 pages06 - Cristescu, CiovicaAdriana CalinNo ratings yet

- 05 - PopescuDocument11 pages05 - PopescuAdriana CalinNo ratings yet

- More General Credibility Models: F F P F FDocument6 pagesMore General Credibility Models: F F P F FAdriana CalinNo ratings yet

- Pages 4 5-15 Sandra MARTISIUTE, Gabriele VILUTYTE, Dainora GRUNDEY 16-22Document1 pagePages 4 5-15 Sandra MARTISIUTE, Gabriele VILUTYTE, Dainora GRUNDEY 16-22Adriana CalinNo ratings yet

- Ef 4Document1 pageEf 4Adriana CalinNo ratings yet

- Designing Algorithms Using CAD Technologies: Keywords: Diagrams, Algorithms, Programming, Code GenerationDocument3 pagesDesigning Algorithms Using CAD Technologies: Keywords: Diagrams, Algorithms, Programming, Code GenerationAdriana CalinNo ratings yet

- 15 Ivan, MilodinDocument7 pages15 Ivan, MilodinAdriana CalinNo ratings yet

- Developing Performance-Centered Systems For Higher EducationDocument5 pagesDeveloping Performance-Centered Systems For Higher EducationAdriana CalinNo ratings yet

- 2001 - Iulia Dorobat, Floarea NastaseDocument12 pages2001 - Iulia Dorobat, Floarea NastaseAdriana CalinNo ratings yet

- 11 - MoceanDocument8 pages11 - MoceanAdriana CalinNo ratings yet

- One World - One Accounting: Devon Erickson, Adam Esplin, Laureen A. MainesDocument7 pagesOne World - One Accounting: Devon Erickson, Adam Esplin, Laureen A. MainesAdriana CalinNo ratings yet

- Planning For The Succession of Digital Assets: Laura MckinnonDocument6 pagesPlanning For The Succession of Digital Assets: Laura MckinnonAdriana CalinNo ratings yet

- Common Lesson Plan 3 - Kelly SibrianDocument4 pagesCommon Lesson Plan 3 - Kelly Sibrianapi-510450123No ratings yet

- Grade 5 Chapter 1 The Fish TaleDocument15 pagesGrade 5 Chapter 1 The Fish TaleThulirgalNo ratings yet

- Excavadora 270 CLC John Deere 1719Document524 pagesExcavadora 270 CLC John Deere 1719Angel Rodriguez100% (1)

- lm723 PDFDocument21 pageslm723 PDFjet_media100% (1)

- Classified2019 2 3564799 PDFDocument9 pagesClassified2019 2 3564799 PDFaarianNo ratings yet

- Bakery Report (Interview)Document11 pagesBakery Report (Interview)Abhilasha AgarwalNo ratings yet

- The NEC3 Engineering and Construction Contract - A Comparative Analysis - Part 1 - Energy and Natural Resources - United KingdomDocument7 pagesThe NEC3 Engineering and Construction Contract - A Comparative Analysis - Part 1 - Energy and Natural Resources - United Kingdomiqbal2525No ratings yet

- Binary ClassificationMetrics CheathsheetDocument7 pagesBinary ClassificationMetrics CheathsheetPedro PereiraNo ratings yet

- D493027 en PDFDocument39 pagesD493027 en PDFJose ValenciaNo ratings yet

- Características Dimensiones HofstedeDocument9 pagesCaracterísticas Dimensiones HofstedeJaz WoolridgeNo ratings yet

- Dot Point Physics PreliminaryDocument70 pagesDot Point Physics Preliminaryjdgtree0850% (10)

- 5E LP - Mixture (Year 2) Group 4C2 UpdateDocument8 pages5E LP - Mixture (Year 2) Group 4C2 UpdatecancalokNo ratings yet

- Homo LumoDocument12 pagesHomo LumoShivam KansaraNo ratings yet

- Assignment 3Document7 pagesAssignment 3Midhun MNo ratings yet

- Rubric For Note TakingDocument1 pageRubric For Note TakingJena Mae GonzalesNo ratings yet

- PROGRAM 1: Write A Program To Check Whether A Given Number Is Even or OddDocument45 pagesPROGRAM 1: Write A Program To Check Whether A Given Number Is Even or OddShobhita MahajanNo ratings yet

- Homework: Name DateDocument24 pagesHomework: Name DateboysNo ratings yet

- Raw Material CatalogueDocument6 pagesRaw Material CatalogueKhalil EbrahimNo ratings yet

- Stochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008Document132 pagesStochastic Processes and The Mathematics of Finance: Jonathan Block April 1, 2008.cadeau01No ratings yet

- Recruitment For The Post of Office AttendantDocument2 pagesRecruitment For The Post of Office AttendantrajnagpNo ratings yet

- 8-2-16 Tina LindaDocument8 pages8-2-16 Tina LindaERICK SIMANJUNTAKNo ratings yet

- Computing Devices (Ii)Document8 pagesComputing Devices (Ii)Adaeze Victoria Pearl OnyekwereNo ratings yet

- Analyzing Your HR LandscapeDocument2 pagesAnalyzing Your HR LandscapeKashis Kumar100% (1)

- Sales Document StructureDocument5 pagesSales Document StructureShashi RayappagariNo ratings yet

- Second Language Lexical Acquisition: The Case of Extrovert and Introvert ChildrenDocument10 pagesSecond Language Lexical Acquisition: The Case of Extrovert and Introvert ChildrenPsychology and Education: A Multidisciplinary JournalNo ratings yet

- Arcelormittal 4q 22 Esg PresentationDocument30 pagesArcelormittal 4q 22 Esg PresentationmukeshindpatiNo ratings yet

- Department of Education: Professional Development PlanDocument2 pagesDepartment of Education: Professional Development PlanJen Apinado80% (5)

- SWRPG Starship VehicleSheetsDocument2 pagesSWRPG Starship VehicleSheetsPatrick J Smith100% (1)

- Mkomagi Et Al 2023. Relationship-Between-Project-Benefits-And-Sustainability-Of-Activities-A-Comparative-Analysis-Of-Selected-Donor-Funded-Agriculture-Related-Projects-In-TanzaniaDocument23 pagesMkomagi Et Al 2023. Relationship-Between-Project-Benefits-And-Sustainability-Of-Activities-A-Comparative-Analysis-Of-Selected-Donor-Funded-Agriculture-Related-Projects-In-TanzaniaJeremiah V. MkomagiNo ratings yet

- Datasheet Pattress Plates 2012Document4 pagesDatasheet Pattress Plates 2012Anil KumarNo ratings yet