1998 IEEE Multimedia

We propose a general, flexible, and powerful architecture to build software agents that embed artificial emotions. These artificial emotions achieve functionalities for conveying emotions to humans, thus allowing more effective, stimulating, and natural interactions between humans and agents. An emotional agent also possesses rational knowledge and reactive capabilities, and interacts with the external world, including other agents.

Imagine a space (for example, a museum exhibit or theater stage) in which you can stand surrounded by music and control its ebb and flow (the rhythms, orchestration, and so on) by your own full-body movement (such as dancing) without touching any controls.[1] In addition, imagine that this space also includes mobile robots that can interact with, entertain, sing, and speak to you, as though they were actors on a stage.[2] Figure 1 shows an example of this scenario.

This kind of multimedia-multimodal system requires, among other things, intelligent interfaces and adaptive behavior. We believe that including artificial emotions (in the sense we explain below) as one of the main components of these kinds of complex systems will achieve a more effective, stimulating, and natural interaction with humans. For example, consider a robot navigating on stage that, after repeatedly finding you in its way, announces (by playing a digitized voice sample) "Stop blocking my way, I'm angry!" while flashing its eyes or announces "He hates me, I'm so sad" while slowly moving to a corner. Clearly, the quality and effectiveness of the interaction between the robot and you is greatly enhanced by this kind of behavior because it relates (at least externally) to human behaviors associated with emotions. In fact, the robot proves more believable.[3] To implement these behaviors in the software controlling the robot, we can codify an emotional state that evolves over time, is influenced by external events, and influences the robot's actions.

Consider, as another example, the widely accepted belief that music and dance communicate mostly emotional content, embedded in the interpretation and expression of the performer's intentions.[4] Therefore, an active space producing music from full-body movement can try to capture movement features focusing on the discovery of interpretation and emotionally related content in the performance[5] and embed emotional content in the music produced. This can be implemented by codifying some emotional state that movement influences, which in turn influences the music.

In this article we propose a general architecture[6] to build emotional agents, that is, software agents that possess an emotional state (in the sense above). An emotional agent interacts with the external world by receiving inputs and sending outputs, possibly in real time. The emotional state evolves over time; it influences outputs and its evolution responds to inputs. The agent also possesses rational knowledge (for example, about the external world and the agent's goals) that evolves over time, whose evolution depends on inputs and produces outputs. In addition, some outputs are produced reactively from inputs in a relatively direct and fast way (possibly in real time). These emotional, rational, and reactive computations don't operate in isolation, but can influence each other in various ways (for example, the emotional state can modulate the agent's reactions).

Figure 1. A sample scenario of an active space observing the dancer's movements and gestures. The dancer can generate and control music as well as communicate to a robot navigating and performing on stage.

Feature Article: An Architecture for Emotional Agents

Antonio Camurri, University of Genoa

Alessandro Coglio, Kestrel Institute

1070-986X/98/$10.00 © 1998 IEEE

Unlike Sloman's approach,[7] our architecture doesn't attempt to model human or animal agents. Instead, we propose it as a convenient and sound way to structure software agents capable of functionalities such as those sketched previously and those described in the remainder of this article. The motivations for the architecture, therefore, come from the practical behaviors that we intend our emotional agents to implement. They don't come from theories of human or animal behavior, though you can certainly see some naive analogies.[7] The main criterion for evaluating our architecture arises from its convenience in structuring agents whose behaviors convey emotions to the people exposed to the systems.

We're currently employing our architecture mainly in systems involving music, dance, robots, and so on. The software for these applications can be (partially) realized as a population of communicating emotional agents. For instance, there might be an agent for each navigating robot and one or more agents for the sensorized space where humans move. Inputs for these agents include data from human-movement and robot sensors, as well as messages from other agents. Outputs include commands to audio devices and robot actuators, as well as messages to other agents. The emotional states of these agents can be modified, among other factors, by human movements (wide, upward, and fast movements may give rise to a happy and lively mood) and robot interaction with humans (a human blocking a robot's way may generate an angry mood). These states can influence music composition and robot navigation. For example, a happy mood may lead to lively timbres and melodies, while a nervous mood may prompt quick and fast-turning movements.

A number of promising applications have emerged for emotional agents, such as

- Interactive entertainment, such as an interactive discotheque where dancers can influence and change the music, and games like dance-karaoke where the better you dance the better music you get

- Interactive home theater

- Interactive tools for aerobics and gymnastics

- Rehabilitation tools such as agents supporting therapies for mental disabilities where it's important to have nonintrusive environments with which patients can establish creative interaction

- Tools for teaching by playing and experiencing in simulated environments

- Tools for enhancing the communication about new products or ideas in conventions and "information ateliers" such as fashion shows

- Cultural and museum applications that involve autonomous robots that act as guides to attract, entertain, and instruct visitors

- Augmented reality applications

Description of the architecture

Figure 2 shows the structure of an emotional

agent. The five rectangles represent active com-

ponents. A thick white arrow from one compo-

nent to another component represents a buffer

upon which the rst component acts as a produc-

er and the second as a consumer. (Table 1 lists the

eight buffers.) A thin black arrow from one com-

25

O

c

t

o

b

e

r

D

e

c

e

m

b

e

r

1

9

9

8

Rational

Emotional

Reactive

I

n

p

u

t

O

u

t

p

u

t

Figure 2. Overall structure of an emotional agent.

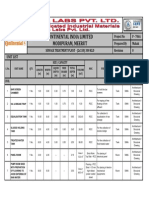

Table 1. Buffers of the agent (thick white arrows).

From To Contains

Input Reactive Reactive inputs

Reactive Output Reactive outputs

Input Rational Rational inputs

Rational Output Rational outputs

Input Emotional Emotional stimuli

Rational Emotional Emotional stimuli

Reactive Emotional Emotional stimuli

Output Input Internal feedbacks

ponent to another component represents a data

container upon which the first component has

read-write access and the second read-only access.

(Table 2 lists the eight containers.) Finally, the two

dashed arrows represent flows of information,

from the external world to Input and from Output

to the external world.
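The distinction between buffers (producer and consumer) and data containers (one writer, one read-only reader) can be illustrated with a minimal Python sketch. The class names `Buffer` and `Container`, their methods, and the `"neutral"` initial value are assumptions made for illustration only; the architecture itself does not prescribe any concrete realization.

```python
from collections import deque

# (producer, consumer, contents), following the eight buffers of Table 1.
BUFFERS = [
    ("Input", "Reactive", "reactive inputs"),
    ("Reactive", "Output", "reactive outputs"),
    ("Input", "Rational", "rational inputs"),
    ("Rational", "Output", "rational outputs"),
    ("Input", "Emotional", "emotional stimuli"),
    ("Rational", "Emotional", "emotional stimuli"),
    ("Reactive", "Emotional", "emotional stimuli"),
    ("Output", "Input", "internal feedbacks"),
]


class Buffer:
    """FIFO queue: one component produces items, another consumes them."""

    def __init__(self):
        self._items = deque()

    def put(self, item):
        self._items.append(item)

    def get(self):
        """Return the oldest item, or None if the buffer is empty."""
        return self._items.popleft() if self._items else None


class Container:
    """Data container: the owner may write, everyone else may only read."""

    def __init__(self, owner, value=None):
        self._owner = owner
        self._value = value

    def read(self):
        return self._value

    def write(self, who, value):
        if who != self._owner:
            raise PermissionError(f"{who} has read-only access")
        self._value = value


# One buffer per (producer, consumer) pair, plus one example container.
buffers = {(src, dst): Buffer() for src, dst, _ in BUFFERS}
emotional_output = Container(owner="Emotional", value="neutral")
```

In this sketch a component that tries to write a container it does not own gets a `PermissionError`, which mirrors the read-write versus read-only access described above.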

In the remainder of this section, we'll describe the five components in more detail and the data through which they interact with each other and with the external world. Note that the architecture provides only conceptual requirements for such components and data without constraining their concrete realizations, which can vary widely across different agents.

The Input component

The Input component obtains inputs from the external world (such as data from movement sensors and messages from other agents) by requesting them (such as periodically polling a device driver) and receiving them (for example, being sent new data when available). The Input component processes these inputs to produce reactive inputs for Reactive, rational inputs for Rational, and emotional stimuli for Emotional. The processing can be more or less complex and depend on some internal state of Input. For example, information produced by analyzing data from full-body human-movement sensors may include kinematic and dynamic quantities (positions, speeds, or energies), recognized symbolic gestures, the degree to which dancers (or parts of their bodies) remain in tempo, how dancers occupy the stage space, and the smoothness of the dancers' movement. Typically, we obtain this information by integrating different sensor data. Reactive and rational inputs may then include descriptions of kinematic and dynamic quantities, gestures, and movement features. Different gestures may produce different emotional stimuli. For example, very smooth movements may produce stimuli opposite to those produced by sharp and nervous movements.

Since Input can read the emotional-input parameter, its processing of information may depend on the agent's current emotional state. In fact, as we'll see below, Emotional holds the emotional state of the agent and keeps information about it in the emotional-input parameter. In this way, Input can perform slightly different actions according to the parameter's current value. For example, consider a robot navigating on stage that encounters a human along its path. When Input recognizes this situation (from the robot sensor data), it generates a certain emotional stimulus if the robot is sad and depressed (because the human can be seen as a helping hand) or it produces an opposite stimulus if the robot is elated and complacent (because the human is just seen as a blocking obstacle).
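The robot example can be sketched in a few lines of Python. The function name, the representation of the emotional-input parameter as a mood name, and the numeric signs of the stimuli are all assumptions chosen for illustration; the text only requires that the two stimuli be opposite.

```python
def stimulus_for_human_on_path(emotional_input):
    """Return a signed emotional stimulus for the 'human on path' event.

    emotional_input stands for the current value of the emotional-input
    parameter, represented here simply as a mood name (an assumption).
    """
    if emotional_input in ("sad", "depressed"):
        return 1.0   # the human is seen as a helping hand
    if emotional_input in ("elated", "complacent"):
        return -1.0  # the human is seen as a blocking obstacle
    return 0.0       # other moods: no stimulus in this sketch
```

The same sensor event thus yields opposite stimuli depending only on the value Input reads from the parameter.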

Analogously, the processing of Input may also depend on the robot's current rational state through the rational-input parameter. This proves useful, for example, to dynamically change the focus of attention of the movement analyzers inside Input. In fact, in different situations (the current situation being encoded in the rational state), different aspects and features of human movement may be relevant instead of others (for example, arm movements instead of leg movements or speed instead of energy).

The Input component also receives and processes internal feedbacks coming from Output. As we'll see, they provide information about Output's state changes to Input and hence indirectly to Reactive, Rational, and Emotional (through reactive inputs, rational inputs, and emotional stimuli generated by Input as a result of processing internal feedback).

Since Input can receive various kinds of inputs from the external world and produce various kinds of reactive inputs, emotional stimuli, and rational inputs, Input usually contains various modules, each in charge of performing a certain kind of processing. Of course, the modules must be properly orchestrated inside Input so that they operate as an integrated whole (conflicting information must not be produced from different sensor data).

The Output component

Output sends outputs (such as audio data and messages to other agents) to the external world, for example, by issuing commands to a device driver. This component produces these outputs by processing reactive outputs, rational outputs, and the current emotional-output and rational-output parameters. Examples of reactive and rational outputs from which outputs for sound device drivers derive include descriptions of actions to play single notes, musical excerpts, and digitized speech. As we'll see, the emotional-output and rational-output parameters contain information about the current emotional and rational states. So the processing of Output may depend on these two states. For instance, the same musical excerpt can be played with different timbres in different emotional states (a bright timbre for happiness, a gloomy one for sadness) and different volumes in different rational states (a higher volume for a robot loudspeaker in situations where humans are far from the robot).

Table 2. Data containers of the agent (thin black arrows).

| From | To | Contains |
|---|---|---|
| Emotional | Input | Emotional-input parameter |
| Emotional | Output | Emotional-output parameter |
| Emotional | Reactive | Emotional-reactive parameter |
| Emotional | Rational | Emotional-rational parameter |
| Rational | Input | Rational-input parameter |
| Rational | Output | Rational-output parameter |
| Rational | Reactive | Rational-reactive parameter |
| Rational | Emotional | Rational-emotional parameter |
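As an illustration of how Output might combine an output request with the two parameters, here is a small Python sketch. The `PlayCommand` type, the `humans_far` key, and the numeric volume levels are hypothetical; only the timbre and volume examples themselves come from the text.

```python
from dataclasses import dataclass

# Timbre examples taken from the text; any other mood falls back to "neutral".
TIMBRE_BY_MOOD = {"happiness": "bright", "sadness": "gloomy"}


@dataclass(frozen=True)
class PlayCommand:
    excerpt: str
    timbre: str
    volume: float  # 0.0 (silent) to 1.0 (maximum), an assumed scale


def render_excerpt(excerpt, emotional_output, rational_output):
    """Turn a request to play an excerpt into a concrete device command.

    emotional_output: mood name read from the emotional-output parameter.
    rational_output: dict read from the rational-output parameter; the
    'humans_far' key is a hypothetical piece of rational knowledge.
    """
    timbre = TIMBRE_BY_MOOD.get(emotional_output, "neutral")
    volume = 0.9 if rational_output.get("humans_far", False) else 0.5
    return PlayCommand(excerpt, timbre, volume)
```

Calling `render_excerpt` twice with the same excerpt but different parameter values produces different commands, which is the dependence the paragraph describes.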

Output also sends internal feedbacks to Input, as we mentioned above. Internal feedbacks contain information about state changes of Output that must be sent to the agent's other components. Consider a rational output instructing Output to play a musical excerpt and a subsequent reactive output that, as a result of a sudden event (for example, a robot being stopped by an obstacle), instructs Output to abort the excerpt and utter an exclamation. Output can thus generate an internal feedback signaling the abort, which Input forwards to Rational as a rational input. This might cause Rational to reissue the rational output to play the excerpt, for example. Without internal feedback, Rational would have no way of knowing that the excerpt was aborted and thus no way of replaying it. In general, internal feedbacks assure some communication path between any two components of the agent.
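The abort-and-replay scenario can be traced with a minimal Python sketch. The class and function names, the tuple encodings of feedbacks and rational inputs, and the `log` attribute are assumptions for illustration; the stand-ins for Input and Rational are reduced to single functions.

```python
from collections import deque


class Output:
    """Toy Output component that plays excerpts and utters exclamations."""

    def __init__(self):
        self.playing = None      # excerpt currently being played, if any
        self.feedback = deque()  # internal feedbacks buffer (Output to Input)
        self.log = []            # commands issued to the (imaginary) device

    def rational_output(self, excerpt):
        self.playing = excerpt
        self.log.append(("play", excerpt))

    def reactive_output(self, exclamation):
        # An exclamation aborts whatever is playing and reports the abort.
        if self.playing is not None:
            self.feedback.append(("aborted", self.playing))
            self.playing = None
        self.log.append(("say", exclamation))


def forward_feedback(output):
    """Stand-in for Input: turn pending feedbacks into rational inputs."""
    rational_inputs = []
    while output.feedback:
        kind, excerpt = output.feedback.popleft()
        if kind == "aborted":
            rational_inputs.append(("excerpt_aborted", excerpt))
    return rational_inputs


def rational_step(output, rational_inputs):
    """Stand-in for Rational: reissue every aborted excerpt."""
    for kind, excerpt in rational_inputs:
        if kind == "excerpt_aborted":
            output.rational_output(excerpt)
```

A possible run: call `rational_output("theme")`, then `reactive_output("Ouch!")`, then `rational_step(out, forward_feedback(out))`; the excerpt is played again only because the abort travelled back through the feedback buffer.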

Output can produce various kinds of outputs for the external world and process various kinds of reactive and rational outputs. Therefore, Output usually contains various modules, each in charge of performing a certain kind of processing, analogously to Input. Of course, the modules must be orchestrated to operate as an integrated whole (conflicting commands must not be sent to the same device driver).

The Reactive component

Reactive processes reactive inputs from Input and produces reactive outputs for Output and emotional stimuli for Emotional. Reactive processes information relatively fast, and it has little or no state. In fact, if the agent exchanges data with the external world in real time (as is customary in music and dance applications), Reactive realizes (together with some modules of Input and Output) the agent's real-time behavior.

Reactive can perform a rich variety of computations because they depend on the emotional and rational states through the emotional-reactive and rational-reactive parameters (for instance, such parameters can change the "hardwiring" of reactive inputs and outputs). For example, a robot encountering an obstacle might utter slightly different exclamations in different emotional states. Another interesting example is that of virtual musical instruments, where, for instance, a human's repeated nervous and rhythmic gestures (evoking the gestures of a percussionist) in certain locations of the space continuously transform neutral sounds into drum-like sounds. That is, virtual percussion instruments emerge in those locations, and subsequent movements in each location produce percussion sounds. While Rational creates virtual instruments, Reactive produces the sounds from movements. To do that, Reactive must know which virtual instruments reside in which locations. Rational provides this information to Reactive via the rational-reactive parameter.
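The virtual-instrument example amounts to a lookup that Reactive performs on data owned by Rational. The following Python sketch assumes, purely for illustration, that the rational-reactive parameter is a dictionary from location names to instrument names; the function name and the sound descriptions are hypothetical.

```python
def reactive_sound(location, rational_reactive):
    """Map a movement at a location to a sound description.

    rational_reactive: dict from location name to virtual instrument name,
    maintained by Rational and only read here (an assumed representation
    of the rational-reactive parameter).
    """
    instrument = rational_reactive.get(location)
    if instrument is None:
        return "neutral sound"   # no virtual instrument at this location yet
    return f"{instrument} hit"   # a movement plays the instrument found there
```

Because the function is a stateless lookup, it stays fast enough for the real-time role the text assigns to Reactive, while the slower decision of where instruments exist remains with Rational.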

The Rational component

Rational holds the agent's rational state, which consists of some knowledge about the external world (how humans are moving, which virtual instruments have been created, and so on) and the agent itself (the goals the agent must achieve). This knowledge evolves by some inference engine, and its evolution depends on the processing of rational inputs from Input and produces rational outputs for Output. Rational's knowledge and inference engine can be more or less complex, ranging from state variables and transitions to symbolic assertions and theorem proving, and sometimes a mix of different things such as hybrid models.[1] In contrast to Reactive, Rational generally has no strict timing constraints (except that rational outputs must be produced in time to be useful), and therefore its computations can be quite complex.

Rational's evolving knowledge can also depend on the current emotional state through the emotional-rational parameter. This dependence usually takes place in the following ways:

1. The parameter encodes knowledge about the emotional state (usually in the same form as Rational's other knowledge) so that the inference engine of Rational also operates on this "emotional knowledge" (without modifying it, since Rational has read-only access to it).

2. The parameter affects the inference engine (as a kind of "emotional perturbation") so that the same knowledge evolves differently in different emotional states.

Of course, these two mechanisms are not mutually exclusive.

On the other hand, the evolution of the emotional state can also depend on the current rational state through the rational-emotional parameter, as well as on its temporal evolution through explicit emotional stimuli for Emotional produced by Rational (analogous to rational outputs). For instance, becoming aware that some of the agent's goals have been fulfilled might cause Rational to give a positive emotional stimulus for Emotional (or a negative one in case the agent failed to achieve its goals).

Of course, part of Rational's processing consists in updating the rational-input, rational-output, rational-reactive, and rational-emotional parameters to reflect changes in its knowledge.

The Emotional component

Emotional holds the agent's emotional state, whose temporal evolution is governed by emotional stimuli from Input, Reactive, and Rational. An interesting computational realization of an emotional state, among the many possible, consists in coordinates of a point in some "emotional space." The coordinates can change step by step according to emotional stimuli or even according to some physical metaphor where emotional stimuli constitute forces acting on a point mass. The emotional space is usually partitioned into zones characterized by synoptic, symbolic names such as happiness, sadness, and excitement. So, different emotional stimuli tend to move the mass toward different zones. Borders between zones, as well as emotional coordinates and forces, can be fuzzy. Figures 3a and 3b show two emotional 2D spaces of these kinds of computational realizations.[2,8] While we have found these examples of Emotional very useful and interesting, we stress that our architecture makes no commitment to them and in fact allows very different realizations of Emotional.
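To make the physical metaphor concrete, here is a minimal Python sketch of a point mass pushed by stimulus forces in a 2D emotional space. The damping term, the time step, the Euler integration scheme, and the two-zone partition by the sign of the horizontal coordinate are all assumptions; the article does not specify its dynamics at this level.

```python
from dataclasses import dataclass


@dataclass
class PointMass:
    x: float = 0.0
    y: float = 0.0
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 1.0
    damping: float = 0.5  # assumed friction so the point settles without stimuli

    def step(self, fx, fy, dt=0.1):
        """Advance the state by dt under the stimulus force (fx, fy)."""
        # Update velocity from force and damping, then position from velocity.
        self.vx += (fx / self.mass - self.damping * self.vx) * dt
        self.vy += (fy / self.mass - self.damping * self.vy) * dt
        self.x += self.vx * dt
        self.y += self.vy * dt


def zone(p):
    """Name the zone containing the point (a made-up two-zone partition)."""
    return "happiness" if p.x >= 0.0 else "sadness"
```

A rational state that calls for faster emotional dynamics could be modeled here by scaling the forces or lowering `mass`, which is one way to read the influence of the rational-emotional parameter.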

Unlike Reactive and Rational, Emotional does not produce any "emotional output" for Output. However, the state of Emotional influences the processing of Output through the emotional-output parameter. This parameter, as well as the emotional-input, emotional-reactive, and emotional-rational parameters, contains information about the emotional state (such as the name of the zone where the mass lies or a fuzzy vector of the point's membership in the zones), which Emotional updates as its state changes.

In addition to receiving emotional stimuli from Rational, Emotional can be influenced by the rational state through the rational-emotional parameter. For example, having faster dynamics of a robot's emotional state changes (such as emotional stimuli resulting in greater forces upon the mass) proves useful in situations of close interaction with humans.

An application of the architecture

We're successfully employing our architecture in Città dei Bambini (Children's City), a permanent science exhibition at Porto Antico in Genoa, Italy, where children, by playing interactively with various devices and games, learn about physics, music, biology, and many other subjects.[9] Our lab conceived and realized the Music Atelier of this exhibition, described in the sidebar "The Music Atelier of Città dei Bambini." We modeled the software for our Atelier as a population of five communicating emotional agents, one for each game. Messages exchanged by agents, besides informing the robot how visitors are playing the other games, help the agents make adjustments to the system dynamically (such as adjusting sound volumes of different games to avoid interference). In addition, the agents help the Atelier work as an integrated game by making the current game's operation depend on how previous games were played. This ensures coherence and continuity in a visitor's tour of the Atelier.

Figure 3. Two examples of emotional spaces, panels (a) and (b). Labels appearing in the figure: Vanity, Maladjusted, Pride, Depression, Apathy, Hope, Melancholy, Peacefulness, Happiness, Clown, Rapture, Hermit/Withdrawn, Revolt; Quiet, Introverted, Depressed, Irritable, Angry, Lively, Happy, Patient.

Sidebar: The Music Atelier of Città dei Bambini

Città dei Bambini (Children's City), a 3,000-square-meter interactive permanent science museum exhibit for children, recently opened at Porto Antico in Genoa, Italy. The exhibit consists of two main modules, one developed by La Villette (Paris) and the other by Imparagiocando (formed by the University of Genoa, National Institute for the Physics of Matter, National Institute for Cancer Research and Advanced Biotechnology Center, Arciragazzi, and the International Movement of Loisirs Science and Technology). The Music Atelier, part of the latter module, was conceived and realized by the Laboratory of Musical Informatics of the Department of Informatics, Systems, and Telecommunications (DIST) at the University of Genoa. The Atelier (see Figure A) consists of five games characterized by multimedia-multimodal interaction involving music, movement, dance, and computer animation.

In the first game, called Let Your Body Make the Music, visitors can create and modify music through their full-body movement in an active sensorized space. This biofeedback loop helps visitors learn basic music concepts such as rhythm and orchestration. This game also serves as a buffer for visitors. In fact, it can be played by a group of visitors while they wait their turn for the rest of the Atelier, which no more than five people can visit simultaneously.

The second game, Cicerone Robot, features a navigating and speaking (as well as singing) robot (see Figure B) that guides groups of up to five visitors through the remaining three games, explaining how to play, while simultaneously interacting with the visitors. The robot can change mood and character in response to whatever is happening in the Atelier. For instance, it gets angry if visitors do not play the other games in the way it explained the games to them. Its emotional state is reflected in the way it moves (swaggering, nervous, or calm), its voice (inflection; different ways of explaining things, from short and tense to happy and lively), the music it produces (happy, serene, or noisy), and environmental lights in the Atelier (when sad, the light becomes blue and dim; the angrier it gets, the redder the light becomes). This game aims to introduce children to artificial intelligence concepts and dispel the myth of science-fiction robots by introducing them to a real robot.

Figure A. The map of the Music Atelier at Città dei Bambini. (Areas labeled on the map: Robot's home, Harmony of Movement, Environmental lights, What does the robot see?, Welcome screen, In, Out, Real and Virtual Tambourines, Musical String, Cicerone Robot, Let Your Body Make the Music.)

Figure B. The Cicerone Robot, the guide (or games partner) for visitors, at work in the Music Atelier at Città dei Bambini.

(The sidebar continues later in the article.)

We implemented the emotional agent for the robot and found that children are generally impressed by its performance. For the other games, we initially realized nonemotional applications, which we're now extending toward full emotional agents as part of the continuing growth of the Music Atelier. Our architecture has proven useful in designing and implementing the other four agents in a clear and reusable way. These agents and applications are written in C++ and run on the Microsoft Windows NT operating system. Next we'll describe the main features of the robot agent's current implementation.

The emotional agent for the robot

Physically, the robot is an ActiveMedia Pioneer 1, which we dressed and equipped with on-board remote-controlled loudspeakers and additional infrared localization sensors. The Pioneer 1 comes with Saphira navigation software (see http://www.ai.sri.com/~konolige/saphira), which provides a high-level interface to control robot movements. Inputs and outputs for the agent include data from and to this navigation software. Inputs also include messages from the Atelier's other software applications. Outputs also include speech and music data for the on-board loudspeakers and control data for environmental lights.

The robot's emotional state is a pair of coordinates in a circular emotional space shown in Figure 3a.[8] The horizontal coordinate measures the robot's self-esteem, the vertical one the esteem of other people (that is, visitors of the Atelier) for the robot. These coordinates divide the space into eight sectors of moods. The circle also can be divided into three concentric zones corresponding to the intensity of the moods (weak, average, and strong). So, the coordinate space has 24 distinct zones.
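The count of 24 zones (eight sectors times three rings) can be reproduced with a short Python sketch that classifies a point. The sector alignment (equal 45-degree sectors counted counterclockwise from the positive horizontal axis), the equal-width rings, and the unit radius are assumptions; the article does not give the actual boundaries.

```python
import math

INTENSITIES = ("weak", "average", "strong")


def zone_index(x, y, radius=1.0):
    """Return (sector, ring) for a point in a circular emotional space.

    sector is 0..7, counted counterclockwise from the positive x axis
    (self-esteem); ring is 0..2 from the center outward. Points outside
    the circle are treated as lying on its border.
    """
    r = min(math.hypot(x, y), radius)
    angle = math.atan2(y, x) % (2 * math.pi)
    sector = int(angle // (math.pi / 4)) % 8
    ring = min(int(3 * r / radius), 2)
    return sector, ring
```

For example, `INTENSITIES[zone_index(-0.2, 0.9)[1]]` names the intensity of the mood for a robot with slightly low self-esteem that is highly esteemed by visitors.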

Emotional stimuli are carrots (rewards) and sticks (punishments) either from the robot or from people. Generally, a carrot or stick from the robot moves the point in the coordinate space right or left (because self-esteem increases or decreases), while a carrot or stick from people moves the point up or down (for analogous reasons). The dynamics of this model are nonlinear. When the point lies in the happiness sector and in the external (strong) ring, a carrot has little or no effect, while even a single stick can catastrophically move the point into an opposite zone. Carrots and sticks from people are generated by Input for Emotional from messages coming from the other games: carrots if visitors are playing them as the robot explained, sticks otherwise. Carrots and sticks from the robot originate from Rational for Emotional when, respectively, the robot achieves a goal (such as reaching a location) and when it cannot achieve it (for instance, because visitors block its way).
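One way to obtain this kind of nonlinear behavior is sketched below in Python. The specific update rule (a saturating reward, and a punishment that flips a very high coordinate to the opposite side) is an invented stand-in, not the model used in the Atelier; the `gain` and `high` values and the coordinate range of -1 to 1 are likewise assumptions.

```python
def apply_stimulus(point, kind, source, gain=0.5, high=2 / 3):
    """Return the new (x, y) after one carrot or stick.

    source "robot" acts on x (self-esteem); source "people" acts on y
    (esteem of other people). Coordinates stay within [-1, 1].
    """
    if kind not in ("carrot", "stick"):
        raise ValueError(f"unknown stimulus kind: {kind}")
    if source not in ("robot", "people"):
        raise ValueError(f"unknown stimulus source: {source}")
    x, y = point
    value = x if source == "robot" else y
    if kind == "carrot":
        # Saturating reward: the closer to +1, the smaller the effect.
        value += gain * (1.0 - value)
    elif value > high:
        # Catastrophic punishment: very high esteem collapses to the opposite side.
        value = -value
    else:
        # Ordinary punishment: move part of the way toward -1.
        value -= gain * (value + 1.0)
    return (value, y) if source == "robot" else (x, value)
```

With these rules, a carrot applied at (0.9, 0.9) barely moves the point, while a single stick from people sends it to (0.9, -0.9), which is the qualitative asymmetry the paragraph describes.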

Sidebar (continued): The Music Atelier of Città dei Bambini

The game Real and Virtual Tambourines consists of a set of three percussive musical instruments. Only one has a real membrane, while the other two have a computer-simulated sound source (vibration of the membrane) controlled by sensors. This game introduces visitors to virtual instruments.

Musical String explores the nature of musical timbre (see Figure B3 for the physical structure on the ceiling for this game). Visitors' movements excite a (simulated) string, then dynamically change parameters of its physical model. The results can be heard and seen on a computer screen showing an animation of the vibrating string.

Harmony of Movement explores some principles of musical language without using traditional notation. It employs a visual metaphor with animated characters (little colored fishes) on a computer screen instead of notes on a stave. Visitors experiment and reconstruct a simple five-voice musical piece by means of their movements, receiving musical and visual feedback. Each voice corresponds to a fish. As soon as a visitor stands on one of the five colored tiles (teletransport stations) arranged in a semicircle, a fish of the same color appears on screen and the melody associated with that character begins to take form. The fish's alter ego moves around and plays the melody following the visitor's movements on the teletransport station. After some time, the fishes form a shoal, and the visitors can move all over the semicircular area, working together to guide the shoal. This game aims to obtain the best musical result possible, which also corresponds to the most harmonic and coordinated movement of the shoal, halfway between total chaos and perfect order.

In the absence of carrots and sticks, the point moves toward a spot in the happiness sector (that is, the robot tends to be happy naturally). A module of Output reflects mood changes as color changes in analogically controlled environmental lights (through the emotional-output parameter). Particular colors are associated with some points in the emotional space (for example, a deep red with a point in the anger sector), and interpolation determines colors for the other points. For more information see the sidebar "How the Robot Conveys Emotions and How Visitors React."
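The article does not say which interpolation scheme drives the lights. As one plausible illustration, the Python sketch below uses inverse-distance weighting between a few anchor points. The anchor positions and the exact RGB values are hypothetical; only the idea of anchoring particular colors to particular points comes from the text.

```python
import math

# Hypothetical anchors: point in the emotional space -> (red, green, blue), 0..255.
ANCHORS = {
    (-1.0, 0.0): (255, 0, 0),    # a deep red, placed where anger is assumed to lie
    (1.0, 0.0): (255, 255, 0),   # yellow
    (0.0, -1.0): (0, 0, 255),    # blue
}


def light_color(x, y, anchors=ANCHORS):
    """Interpolate a light color at (x, y) by inverse-distance weighting."""
    weighted = []
    for (ax, ay), rgb in anchors.items():
        d = math.hypot(x - ax, y - ay)
        if d == 0.0:
            return rgb  # exactly on an anchor: use its color unchanged
        weighted.append((1.0 / d ** 2, rgb))
    total = sum(w for w, _ in weighted)
    return tuple(
        round(sum(w * rgb[i] for w, rgb in weighted) / total) for i in range(3)
    )
```

A point equidistant from all three anchors, such as the center (0, 0), receives the plain average of their colors; points near an anchor take on almost exactly that anchor's color.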

We realized Rational as a state machine. Rational inputs, generated by Input from data coming from the robot navigation software, cause state transitions. For example, after the robot reaches the string game, its state changes from "moving to string" to "explaining how to play string." Some state transitions generate rational outputs for Output and carrots and sticks for Emotional (see above). Rational outputs, besides data to the navigation software, include commands to say explanatory sentences about games. The current zone in the emotional space influences the exact choice of the sentence (through the emotional-rational parameter). Different zones result in the same sentences expressed in different moods, such as a sharp tone for the angry sector, a lively tone for the happiness sector, and so on.
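A state machine of this kind can be sketched in Python as a transition table. The two state names come from the text; the input names (`"reached string"`, `"path blocked"`), the sentence label, and the `"neutral"` fallback tone are hypothetical, and the real agent (written in C++) certainly has many more states.

```python
# (state, rational input) -> (next state, sentences to say, emotional stimuli)
TRANSITIONS = {
    ("moving to string", "reached string"):
        ("explaining how to play string", ["how to play string"], ["carrot"]),
    ("moving to string", "path blocked"):
        ("moving to string", [], ["stick"]),
}

# Tone examples taken from the text; other sectors fall back to "neutral".
TONE_BY_SECTOR = {"angry": "sharp", "happiness": "lively"}


class Rational:
    def __init__(self, state="moving to string"):
        self.state = state

    def handle(self, rational_input, emotional_rational):
        """Process one rational input.

        Returns (rational outputs, emotional stimuli); each rational output
        is a (sentence, tone) pair, with the tone chosen from the value of
        the emotional-rational parameter.
        """
        key = (self.state, rational_input)
        if key not in TRANSITIONS:
            return [], []  # input not relevant in the current state
        self.state, sentences, stimuli = TRANSITIONS[key]
        tone = TONE_BY_SECTOR.get(emotional_rational, "neutral")
        return [(s, tone) for s in sentences], list(stimuli)
```

Reaching the string game while angry yields the explanation in a sharp tone plus a carrot for Emotional; a blocked path leaves the state unchanged and yields a stick.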

Reactive inputs are generated by Input for Reactive when the robot encounters an obstacle, which forces it to stop. When this happens, Reactive generates a reactive output to utter an exclamation. The current Emotional zone determines (through the emotional-reactive parameter) a set of slightly different exclamations, one of which the system randomly chooses to avoid tedious repetitiveness. If Reactive instructs Output to utter an exclamation while the robot utters an explanatory sentence, the latter is aborted (quite a human-like behavior) and an internal feedback is sent to Input so that Rational can instruct Output to say the sentence again.
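The random choice among zone-dependent exclamations can be sketched in Python as follows. Apart from the first phrase, which echoes the example given at the start of the article, the exclamation texts, the zone names used as keys, and the default set are made up for illustration.

```python
import random

# Hypothetical exclamation sets, keyed by the zone name read from the
# emotional-reactive parameter.
EXCLAMATIONS = {
    "angry": ["Stop blocking my way, I'm angry!", "Move, please!"],
    "happiness": ["Oops, excuse me!", "Hello there!"],
}
DEFAULT_EXCLAMATIONS = ["Oh!"]


def exclamation(emotional_reactive, rng=random):
    """Pick one exclamation for the current zone.

    rng may be the random module or a random.Random instance, so that
    callers can supply a seeded generator for reproducible behavior.
    """
    candidates = EXCLAMATIONS.get(emotional_reactive, DEFAULT_EXCLAMATIONS)
    return rng.choice(candidates)
```

Passing a seeded `random.Random` makes a run repeatable when debugging, while the default module-level generator gives the varied behavior described above.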


The robot communicates to children through lights, style of movement, speech, sound, and music. Such outputs depend on the emotional state and how the visit is going so far. Groups of colored lights (yellow, red, and blue) are arranged in the Atelier (see Figure B3). A happy, lively robot will produce full lights of all colors; red means angry, blue depressed, yellow serene, and so on. Further, the robot embeds a small on-board light that pulses at a frequency related to its emotional state and tension. The light is hidden inside the robot so that only gleams of pulsing light remain visible. This helps children remember the robot is active even when it's standing still (for example, it may feel apathetic or be in a difficult navigational situation).

The style of movement is another important communication channel. For example, the robot can go from moving in a straightforward, courageous manner (getting close to obstacles before avoiding them) to a slow, shy pace (it doesn't want to reach its goal) to a tail-wagging, gregarious gait (it might deviate from its navigational path or evoke some kind of dance). Tail-wagging usually means an open, friendly, lively, and happy mood. Slow versus fast and sharp versus smooth trajectories are important for expressing the robot's inner tension.

Different spoken sentences can describe the same content. Furthermore, the robot's speech is slightly modified by modulating it with intensity dynamics or using different sound sources with digital audio filtering (such as a harmonizer). For example, for an important message, the on-board loudspeaker system is reinforced by the other games' environmental loudspeakers. This makes the voice louder and dynamically spatialized to command children's attention in critical or joking situations.

Music and environmental sounds are also very important and closely related to the robot's emotional state. A computer dynamically re-creates and re-orchestrates music in real time, using ad hoc compositional rules conceived for the musical context. Further, the music the robot generates takes into account the sound and music of the other games (agents) with which the robot interacts. This means that all games possess rules concerning their musical interaction, that is, their merging and orchestration when the robot enters the audio range of another game. The overall model is cooperative-competitive: a music output can be temporarily turned off to emphasize another performing a high-priority audio task, or both can be modified to play as two voices of the

same music. Therefore, music is not a mere back-

How the Robot Conveys Emotions and How Visitors React

continued on p. 32

Concluding remarks

The architecture we propose offers a very powerful, flexible, and useful way to structure software agents that embed artificial emotions. The state of Emotional (the agent's emotional state) is affected both by the external world and the agent (through emotional stimuli from Input, Rational, and Reactive) and influences how the other four components work via the four parameters. The state of Rational represents the agent's rational knowledge, which is affected by and affects the external world (through inputs from Input and outputs to Output, respectively) and influences how the other four components work through the four parameters. Reactive reactively interacts with the external world, while Input and Output translate between raw data of the external world and higher level information inside the agent.

We note that the idea of producing outputs from inputs in parallel through fast and simple computations (Reactive) and complex and intensive ones (Rational) is not new (see, for example, Ferguson¹⁰). Our main contribution consists in introducing emotional computations (Emotional) and integrating them with Rational and Reactive. Besides working in multimedia-multimodal systems, our emotional agents seem suited to other fields as well (for example, the kind of agents Bates³ described).

While providing a clear separation of concerns among the agent components and the data exchanged by them, our architecture grants many degrees of freedom in realizing specific agents. As we mentioned, our architecture provides only a conceptual blueprint, since it doesn't mandate how the individual components work or the data they exchange, thus allowing a wide variety of concrete realizations. For example, our architecture puts no restrictions on how the five components execute relative to each other (that is, no restrictions exist on the flow of control inside the agent). Generally, they can run concurrently, and even modules inside Input and Output can run concurrently. When the agent must exchange data with the external world in real time, it's often necessary to execute Reactive and (part of) Input and Output in a single thread of control, which cyclically activates Input, Reactive, and Output with a cycle time sufficiently small to meet the application's real-time constraints.
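The single-thread arrangement can be sketched as a fixed-period loop. The function below is a hypothetical illustration: the three callables are placeholders supplied by the caller, and counting overruns is one possible way to notice when the cycle time is too small for the work being done.

```python
import time

def run_cycle(read_input, reactive, write_output, cycle_time, cycles):
    """Activate Input, Reactive, and Output in turn, once per cycle.

    Returns the number of cycles whose work took longer than `cycle_time`.
    """
    overruns = 0
    for _ in range(cycles):
        start = time.monotonic()
        data = read_input()          # Input: raw data -> reactive input
        command = reactive(data)     # Reactive: fast, simple computation
        write_output(command)        # Output: command -> raw data for the world
        elapsed = time.monotonic() - start
        if elapsed > cycle_time:
            overruns += 1            # the cycle missed its time budget
        else:
            time.sleep(cycle_time - elapsed)  # wait out the rest of the period
    return overruns
```

In this arrangement Rational and Emotional would run in other threads or processes and only exchange data with the loop; that part is left out of the sketch.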

Our architecture finds its roots in Camurri's

past work in several research projects, including

the three-year Esprit long-term research project

8579 Miami (Multimodal Interaction in Advanced


ground effect, but an active component to com-

municate the overall emotional state.
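The cooperative-competitive model described in this sidebar can be reduced to a small decision rule. The sketch below is an assumption-laden illustration: the integer priorities, the role names, and the tie-breaking rule are invented, and the real system applies compositional rules rather than a three-way switch.

```python
def arbitrate(robot_priority, game_priority):
    """Decide how two overlapping music outputs share the audio space.

    Returns a pair of roles (robot_role, game_role). Priorities are
    hypothetical integers; a higher value means a more urgent audio task.
    """
    if robot_priority > game_priority:
        return ("lead", "muted")      # competitive: the game yields
    if game_priority > robot_priority:
        return ("muted", "lead")      # competitive: the robot yields
    return ("voice 1", "voice 2")     # cooperative: two voices of one piece
```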

Finally, to further enhance emotional communication, we're also experimenting (in a recent public art installation) with visual output from the robot. A video camera on top of the robot acquires the face of a child standing in front of it. Our software, Virtual Mirrors, projects a deformation of the face on a large screen reflecting the robot's emotional state: the angrier the robot, the more distorted the mirrored face.

Does our robot communicate emotions to the general audience? Our results from experimental work encourage us to think so. Our experience confirmed that children make a good test audience. They adapt very quickly to new things, but they're also very good at discriminating the faults in your work and explaining them to you more freely than adults. We collected feedback from a large number of children through written questionnaires and direct verbal communication. Overall, we found that children are impressed by the robot's performance. We observed that small groups of children (up to three) are prone to follow and collaborate with the robot. Also, small groups are often more impressed by the performance. When a full classroom of children enters our installation, the children don't generally understand the robot because it requires more attention. In fact, in our musical installation the robot is left alone with children, and no intermediate person is active in the space.

What about the robot's reactions to children? The robot is scared by a large number of obstacles, so it performs poorly if more than three or four children are around. The performance degenerates because the robot can't reach the goal (reaching the next game and explaining it to the children or completing an evolution while navigating in the space). This negative stimulus will eventually make the robot angry and then depressed. With a large number of children, the robot changes its goal. Instead of accompanying the children to the other interactive games, it becomes a game partner, a visitor itself touring around with children until it reaches a calmer situation.



Multimedia Interfaces),¹ which resulted in concrete real-world applications such as interactive multimedia-multimodal systems used in public concerts, in Luciano Berio's first performance of his opera Outis (at the Opera House Teatro alla Scala, Milan), and in an interactive art installation at Biennale Architettura, Venice. This past work inspired us to imagine how the systems built for those projects would be realized as emotional agents and to ensure that such re-realizations would be natural and convenient.

Acknowledgments

We thank Claudio Massucco for useful discussions. We also thank Paolo Coletta, Claudia Liconte, and Daniela Murta for implementing the emotional agent of the robot for Città dei Bambini.

References

1. A. Camurri, "Network Models for Motor Control and Music," Self-Organization, Computational Maps, and Motor Control, P. Morasso and V. Sanguineti, eds., North-Holland Elsevier Science, Amsterdam, 1997, pp. 311-355.

2. A. Camurri and P. Ferrentino, "Interactive Environments for Music and Multimedia," to be published in Multimedia Systems (special issue on audio and multimedia), ACM and Springer-Verlag, Berlin, 1999.

3. J. Bates, "The Role of Emotions in Believable Agents," Comm. ACM, Vol. 37, No. 3, 1994, pp. 122-125.

4. J.A. Sloboda, The Musical Mind: The Cognitive Psychology of Music, Oxford University Press, Oxford, 1985.

5. H. Sawada, S. Ohkura, and S. Hashimoto, "Gesture Analysis Using 3D Acceleration Sensor for Music Control," Proc. Int'l Computer Music Conf. (ICMC 95), Int'l Computer Music Assoc., San Francisco, 1995.

6. A. Camurri et al., "An Architecture for Multimodal Environment Agents," Proc. Italian Assoc. for Musical Informatics (AIMI) Int'l Workshop Kansei: The Technology of Emotion, AIMI and DIST (University of Genoa), Genoa, Italy, 1997, pp. 48-53.

7. A. Sloman, "Damasio, Descartes, Alarms, and Meta-Management," Proc. IEEE Int'l Conf. Systems, Man, and Cybernetics (SMC 98), IEEE Computer Society Press, Los Alamitos, Calif., 1998, pp. 2652-2657.

8. A. Camurri et al., "Toward Kansei Information Processing in Music/Dance Interactive Multimodal Environments," Proc. Italian Assoc. for Musical Informatics (AIMI) Int'l Workshop Kansei: The Technology of Emotion, AIMI and DIST (University of Genoa), Genoa, Italy, 1997, pp. 74-78.

9. A. Camurri, G. Dondi, and G. Gambardella, "Interactive Science Exhibition: A Playground for True and Simulated Emotions," Proc. Italian Assoc. for Musical Informatics (AIMI) Int'l Workshop Kansei: The Technology of Emotion, AIMI and DIST (University of Genoa), Genoa, Italy, 1997, pp. 193-195.

10. I.A. Ferguson, "Touring Machines: Autonomous Agents with Attitudes," Computer, Vol. 25, No. 5, May 1992.

Antonio Camurri is an associate

professor in the Department of

Informatics, Systems, and

Telecommunications (DIST) at the

University of Genoa, Italy. His

research interests include comput-

er music, multimodal intelligent interfaces, interactive

systems, kansei information processing and artificial

emotions, and interactive multimodal-multimedia sys-

tems for museum, theatre, and music applications. He

earned an MS in electrical engineering in 1984 and a

PhD in computer engineering in 1991 from the

University of Genoa. He is the founder and scientific

director of the Laboratory of Musical Informatics at

DIST. He is president of the Italian Association for

Musical Informatics (AIMI), member of the executive

committee of the IEEE Computer Society technical com-

mittee on computer-generated music, and associate edi-

tor of the Journal of New Music Research.

Alessandro Coglio is a research

scientist at the Kestrel Institute in

Palo Alto, California, where he is

working in the field of formal

methods for software engineering.

He was previously involved in var-

ious research projects at the University of Genoa in the

fields of theorem proving (in collaboration with

Stanford University, Palo Alto, California), Petri nets and

discrete event systems, and emotional agents. He

received a degree in computer science engineering from

the University of Genoa in 1996.

Readers may contact Camurri at DIST, University of

Genoa, Viale Causa 13, 16145 Genova, Italy, e-mail

music@dist.unige.it, http://musart.dist.unige.it.
