Memory Models for HPC

David Chisnall

February 14, 2011

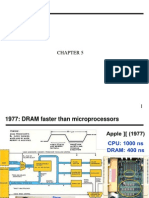

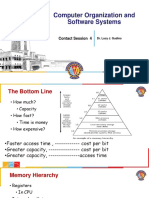

The Memory Hierarchy: Motivation

Fast memory is expensive

Slow memory is cheap

Example Memory Hierarchy

| Location | Size | Access Time (cycles) |
|---|---|---|
| Registers | < 1KB | 1 |
| L1 Data Cache | 32KB | 4 |
| L2 Cache | 256KB | 12 |
| L3 Cache | 2MB+ | 26-32 |
| Main Memory | 1GB+ | 150+ |

Cache and Working Sets

Most programs have a small working set

Each part of the program works on a small amount of data

Storing the working set in fast memory gives the same speed as storing everything in fast memory

(But is much cheaper)

What goes in Cache?

Memory that was accessed recently

Memory near memory that was accessed recently

Memory that will be accessed soon (hopefully!)

Cache Design

Organised in cache lines, typically 64 bytes (8-16 pointer-sized values)

Simple hash table indexed by some bits in the address
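
To make the "hash table indexed by some bits in the address" idea concrete, here is a minimal sketch of how an address could be split into offset, index and tag. The geometry is an assumption for illustration (a direct-mapped 32KB cache with 64-byte lines); real caches are usually set-associative, and the helper names are hypothetical.

```c
#include <stdint.h>

/* Assumed geometry (not from the slides): direct-mapped, 32KB,
   64-byte lines, so 512 lines in total. */
#define LINE_BITS  6   /* 2^6 = 64 bytes per line */
#define INDEX_BITS 9   /* 2^9 = 512 lines */

/* Byte position inside the cache line. */
static inline uint64_t line_offset(uint64_t addr)
{
    return addr & ((UINT64_C(1) << LINE_BITS) - 1);
}

/* Slot of the "hash table" that this address maps to. */
static inline uint64_t line_index(uint64_t addr)
{
    return (addr >> LINE_BITS) & ((UINT64_C(1) << INDEX_BITS) - 1);
}

/* Remaining high bits, kept to tell apart addresses that share a slot. */
static inline uint64_t line_tag(uint64_t addr)
{
    return addr >> (LINE_BITS + INDEX_BITS);
}
```

Under these assumptions, two addresses exactly 32KB apart have the same index but different tags, so they compete for the same slot.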

Why do we care?

Cache is from the French, meaning hidden: programmers don't normally see it

We do, because we care about performance!

Line size means that it's usually very fast to access something within 32 bytes of the last thing we accessed

Hash conflicts mean that we can evict stuff from cache too soon

Explicit Prefetching

Working out what data will be used next is hard!

The CPU tries to guess based on linear access (sometimes)

The compiler can usually make better guesses

The programmer can make even better ones

Prefetching Example

    for (int i = 0; i < pixelCount; i++)
    {
        /* Hint that the next pixel will be needed soon. */
        __builtin_prefetch(&pixels[i + 1]);
        pixels[i].r *= 1.1;
        pixels[i].g *= 1.1;
        pixels[i].b *= 1.1;
    }

__builtin_prefetch() is a GCC extension

Expands to prefetch instruction

This prefetches the pixel needed for the next loop iteration

Is This Sensible?

__builtin_prefetch (& pixels[i+1]);

Pixel is 1/4 of a cache line (16 bytes)

Next pixel is in the current cache line 3/4 of the time

Prefetch will take 12+ cycles

We aren't giving it that long

Prefetching pixel i+4 is better
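
A minimal sketch of the loop with the larger prefetch distance might look like the following. The `struct pixel` layout (four floats, 16 bytes), the function name `brighten` and the choice to prefetch only on every fourth pixel are assumptions for illustration; the right distance depends on the hardware and should be measured.

```c
#include <stddef.h>

/* Hypothetical pixel layout: four floats = 16 bytes,
   i.e. 1/4 of a 64-byte cache line. */
struct pixel { float r, g, b, a; };

/* Assumed distance: 4 pixels, i.e. one cache line ahead. */
#define PREFETCH_DISTANCE 4

void brighten(struct pixel *pixels, size_t pixelCount)
{
    for (size_t i = 0; i < pixelCount; i++) {
        /* Prefetch once every four pixels (roughly once per line)
           and never form an address past the end of the array. */
        if (i % PREFETCH_DISTANCE == 0 && i + PREFETCH_DISTANCE < pixelCount)
            __builtin_prefetch(&pixels[i + PREFETCH_DISTANCE]);
        pixels[i].r *= 1.1f;
        pixels[i].g *= 1.1f;
        pixels[i].b *= 1.1f;
    }
}
```

With so little work per pixel, even one line ahead may be too short a lead; a larger distance is worth trying.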

Cache Optimizing: Hopscotch Hash Algorithm

1. Try to insert value at hash

2. If it's full, linear scan up to 32 elements along for spare slot, insert if possible

3. If not, see if any of the 32 values can be moved to a secondary location, insert in their freed slot

4. If not, resize the table and retry
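
As a rough illustration, steps 1 and 2 can be sketched as below. This is a deliberately reduced version, not a full hopscotch implementation: displacement (step 3), resizing (step 4) and deletion are left out, and the table size, hash function, sentinel value and function names are all assumptions.

```c
#include <stdbool.h>
#include <stdint.h>

#define TABLE_SIZE    1024u  /* assumed; must be a power of two */
#define NEIGHBOURHOOD 32u    /* the 32-slot window from the steps above */
#define EMPTY         0u     /* assumed sentinel: key 0 cannot be stored */

struct table { uint32_t slots[TABLE_SIZE]; };

/* Placeholder multiplicative hash; any reasonable hash would do. */
static uint32_t home_slot(uint32_t key)
{
    return (key * 2654435761u) & (TABLE_SIZE - 1);
}

/* Steps 1 and 2: try the home slot, then scan the rest of the window.
   Returns false where steps 3 and 4 would have to take over. */
bool table_insert(struct table *t, uint32_t key)
{
    if (key == EMPTY)
        return false;
    uint32_t home = home_slot(key);
    for (uint32_t i = 0; i < NEIGHBOURHOOD; i++) {
        uint32_t *slot = &t->slots[(home + i) & (TABLE_SIZE - 1)];
        if (*slot == key)
            return true;     /* already present */
        if (*slot == EMPTY) {
            *slot = key;
            return true;
        }
    }
    return false;
}

/* Lookup never inspects more than NEIGHBOURHOOD consecutive slots. */
bool table_contains(const struct table *t, uint32_t key)
{
    if (key == EMPTY)
        return false;
    uint32_t home = home_slot(key);
    for (uint32_t i = 0; i < NEIGHBOURHOOD; i++)
        if (t->slots[(home + i) & (TABLE_SIZE - 1)] == key)
            return true;
    return false;
}
```

Because a key is only ever stored inside the window that starts at its home slot, a lookup touches a short contiguous run of memory.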

Hopscotch Hash Performance

Values always within 32 spots of first lookup

Usually in same cache line

Always within 2 cache lines

Very fast!

Also well-suited to concurrency

The Multicore Problem

Each core has its own cache

Two threads try to access the same data

What happens?

Cache Coherency

Two cores reading the same data, both have copy in cache

One tries to modify, must invalidate other cache first

Getting data from another core's cache is typically 40-60 cycles

For best performance, threads should operate on different data
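
"Different data" in practice also means different cache lines: two variables that sit in the same line still bounce between cores. The sketch below shows one way to keep per-thread counters apart, assuming a 64-byte line and C11 `alignas`; the struct and helper names are hypothetical.

```c
#include <stdalign.h>
#include <stdbool.h>
#include <stddef.h>

#define CACHE_LINE 64   /* assumed line size */

/* Adjacent counters: they will normally land in the same cache line,
   so a write by one core invalidates the other core's copy. */
struct shared_counters {
    long a;
    long b;
};

/* Each counter is aligned to the start of its own cache line. */
struct padded_counters {
    alignas(CACHE_LINE) long a;
    alignas(CACHE_LINE) long b;
};

/* True if two byte offsets within a line-aligned object
   fall in the same cache line. */
static inline bool same_line(size_t off_a, size_t off_b)
{
    return off_a / CACHE_LINE == off_b / CACHE_LINE;
}
```

The padded version costs 128 bytes instead of 16, which is the usual trade: memory for fewer coherency round trips.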

Going Further: NUMA

Other parts of the memory hierarchy see the same problem

CPU has local memory, accesses other processors' memory indirectly

Common example: Modern x86 chips

Extreme example: SGI Altix

Altix Pointers

36-bit node offset (64GB per node)

11-bit node ID

Main memory used to cache remote nodes' memory

Cache coherency problem across nodes, not just cores

Similar problem on all single system image clusters
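
The two field widths above can be illustrated with a small packing and unpacking sketch. The slides give only the widths, so the bit positions here (offset in the low 36 bits, node ID in the 11 bits directly above) are an assumption, and the function names are hypothetical.

```c
#include <stdint.h>

#define OFFSET_BITS 36   /* 2^36 bytes = 64GB per node */
#define NODE_BITS   11   /* up to 2^11 = 2048 nodes */

/* Assumed layout: [ node ID : 11 bits ][ node offset : 36 bits ] */
static inline uint64_t make_address(uint32_t node, uint64_t offset)
{
    uint64_t id = node & ((UINT64_C(1) << NODE_BITS) - 1);
    return (id << OFFSET_BITS) | (offset & ((UINT64_C(1) << OFFSET_BITS) - 1));
}

/* Which node owns the memory. */
static inline uint32_t node_id(uint64_t addr)
{
    return (uint32_t)((addr >> OFFSET_BITS) & ((UINT64_C(1) << NODE_BITS) - 1));
}

/* Position within that node's memory. */
static inline uint64_t node_offset(uint64_t addr)
{
    return addr & ((UINT64_C(1) << OFFSET_BITS) - 1);
}
```

Comparing `node_id()` of an address with the local node's ID is enough to tell a local access from a remote one.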

Look, No Cache: Cell

Cell SPU cores have 256KB of local memory

Same idea as cache, but explicitly managed

Programmer copies between it and main memory

Tries to do work entirely in local memory

Very fast, not so easy to use
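
The copy-in, compute, copy-out pattern can be sketched in portable C as follows. This is not Cell SDK code: `memcpy` stands in for the DMA transfers, a static array stands in for the local store, and the tile size and function name are assumptions.

```c
#include <stddef.h>
#include <string.h>

#define TILE_FLOATS 4096   /* assumed 16KB tile, well under 256KB */

/* Stand-in for the explicitly managed local memory. */
static float local_store[TILE_FLOATS];

void scale_all(float *main_memory, size_t count, float factor)
{
    for (size_t base = 0; base < count; base += TILE_FLOATS) {
        size_t left = count - base;
        size_t n = left < TILE_FLOATS ? left : TILE_FLOATS;

        /* Copy one tile in (a DMA transfer on real hardware). */
        memcpy(local_store, main_memory + base, n * sizeof(float));

        /* Do all the work on the local copy. */
        for (size_t i = 0; i < n; i++)
            local_store[i] *= factor;

        /* Copy the results back out. */
        memcpy(main_memory + base, local_store, n * sizeof(float));
    }
}
```

On real hardware the transfers are asynchronous, so a common refinement is to use two buffers and fetch the next tile while working on the current one.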

No Cache: Stream Processors

Caches work well with certain categories of problem

Very badly with others

Some algorithms run on entire data set...

...but in a predictable sequence

Stream processors are optimised to deliver the entire contents of memory to the program quickly in order

Typically also have some local memory

Examples: Most DSPs, GPUs

Questions / Feedback?

Is the speed okay? Too fast? Too slow?

Is the material too abstract? Too specific?
