Blur Cvpr12

Seeing through the Blur

Hossein Mobahi¹, C. Lawrence Zitnick², and Yi Ma³,⁴

¹ CS Dept., University of Illinois at Urbana-Champaign, Urbana, IL

² Interactive Visual Media Group, Microsoft Research, Redmond, WA

³ ECE Dept., University of Illinois at Urbana-Champaign, Urbana, IL

⁴ Visual Computing Group, Microsoft Research Asia, Beijing, China

{hmobahi2,yima}@illinois.edu, {larryz,mayi}@microsoft.com

Abstract

This paper addresses the problem of image alignment using direct intensity-based methods for affine and homography transformations. Direct methods often employ scale-space smoothing (Gaussian blur) of the images to avoid local minima. Although it is known that the isotropic blur used is not optimal for some motion models, the correct blur kernels have not been rigorously derived for motion models beyond translations. In this work, we derive blur kernels that result from smoothing the alignment objective function for some common motion models such as affine and homography. We show the derived kernels remove poor local minima and reach lower energy solutions in practice.

1. Introduction

Establishing alignment between images is one of the most fundamental problems in computer vision. This task is crucial for many important problems such as structure from motion, recognizing an object from different viewpoints, and tracking objects in videos. Roughly speaking, mainstream image alignment techniques can be categorized into "intensity-based" and "feature-based" methods. Intensity-based methods use dense pixel information (such as brightness pattern or correlation) integrated from image regions to estimate the geometric transformation [17]. In contrast, feature-based methods first extract a sparse set of local features from individual images, and then establish correspondence among them to infer the underlying transformation (for larger regions) [28].

In many applications intensity-based methods are appealing due to their direct access to richer information (i.e. to every single pixel) [17]. This can be useful, for example, when working with semi-regular patterns that are difficult to match by local features [31]. However, the practical performance of direct intensity methods can be undermined by the associated optimization challenge [39]. Specifically, it is well known that optimizing a cost function that directly compares intensities of an image pair is highly susceptible to finding local minima [14]. Thus, unless a very good initialization is provided, plain direct alignment of image intensities may lead to poor results.

Lucas and Kanade addressed the problem of reducing local minima in direct methods by adopting a coarse-to-fine Gauss-Newton scheme [29]. The Gauss-Newton scheme uses a first-order Taylor approximation to estimate displacements, an approximation that typically holds only when the displacements are small. Lucas and Kanade overcome this limitation by initially using a coarse-resolution image to reduce the relative magnitude of the displacements. The displacements are iteratively refined, using the displacements computed at coarser scales to initialize the finer scales. This results in the first-order Taylor expansion providing a better approximation and a reduction in local minima at each iteration. An alternative to reducing the resolution of the image is to utilize an isotropic Gaussian kernel to blur the image, so that the higher-order terms in the Taylor expansion are negligible.

It was later shown that such a coarse-to-fine scheme is guaranteed to recover the optimal displacement under some mild conditions [24]. Although a guarantee of correctness is only established for translational motion, the notion of coarse-to-fine smoothing followed by local approximation has been adopted in computer vision for matching with almost all parametric transformation models [3, 18, 29, 37, 41, 42]. Despite its popularity, there are serious theoretical and practical issues with the Lucas-Kanade scheme when applied to non-translational motions. For example, if the transformation is scaling, it is easy to show that the Hessian of the image function may grow proportionally to the distance from the origin. To compensate for this effect, stronger smoothing is required for points farther from the origin.


In this paper, we propose Gaussian smoothing of the objective function of the alignment task, instead of the images, which was in fact the original goal of coarse-to-fine image smoothing techniques. In particular, we derive the theoretically correct image blur kernels that arise from (Gaussian) smoothing an alignment objective function. We show that, for common motion models, such as affine and homography, there exists a corresponding integral operator on the image space. We refer to the kernels of such integral operators as transformation kernels. As we show, all of these kernels are spatially varying as long as the transformation is not a pure translation, and they differ from those heuristically suggested by [7] or [40].

Our goal is to improve understanding of the optimization

problems associated with intensity-based image alignment,

so as to improve its practical performance and effectiveness.

We do not advocate that direct intensity-based alignment is

better or worse than feature-based methods.

2. Related Works

This paper focuses on optimization by Gaussian smoothing of the objective function combined with a path-following scheme. In fact, this optimization approach belongs to a large family of methods. All these methods hope to escape from brittle local minima by starting from a "simplified" problem and gradually deforming it to the desired problem. This concept has been widely used across different disciplines under different names and variations. Examples include graduated optimization [9] in computer vision, homotopy continuation [1] in numerical methods, deterministic annealing [35] in machine learning, the diffusion equation method [33] in chemistry, reward shaping [20] in robotics, etc. A similar concept for discrete spaces has also been used [4, 5, 21, 38].

The popularity of isotropic Gaussian convolution for image blurring is, in part, a legacy of scale-space theory. This influential theory emerged from a series of seminal articles in the 1980s [19, 46, 47]. It shows that isotropic Gaussian convolution is the unique linear operator obeying some least-commitment axioms [25]. In particular, this operator is unbiased with respect to location and orientation, due to its convolutional and isotropic nature. Later, Lindeberg extended scale-space theory to cover affine blur by anisotropic spatially invariant kernels [27, 26].

Nevertheless, it is known that the human eye has progressively less resolution from the center (fovea) toward the periphery [32]. In computer vision, spatially varying blur is believed to benefit matching and alignment tasks. In that direction, Berg and Malik [7] introduced the notion of geometric blur and suggested some spatially varying kernels inspired by it. However, their kernels are derived heuristically, without a rigorous connection to the underlying geometric transformations. Some limitations of traditional image smoothing are discussed in [36] and addressed there using stacks of binary images. That work, however, still uses an isotropic Gaussian kernel for smoothing the images of the stack.

Algorithm 1 Alignment by Gaussian Smoothing.

1: Input: $f_1 : \mathcal{X} \to \mathbb{R}$, $f_2 : \mathcal{X} \to \mathbb{R}$, $\theta_0$, the set $\{\sigma_k\}$ for $k = 1, \dots, K$ s.t. $0 < \sigma_{k+1} < \sigma_k$

2: for $k = 1 \to K$ do

3: $\quad \theta_k$ = local maximizer of $z(\theta; \sigma_k)$ initialized at $\theta_{k-1}$

4: end for

5: Output: $\theta_K$

3. Notation and Definitions

The symbol $\triangleq$ is used for equality by definition. We use $x$ for scalars, $\mathbf{x}$ for vectors, $\mathbf{X}$ for matrices, and $\mathcal{X}$ for sets. Scalar-valued and vector-valued functions are respectively denoted by $f(\cdot)$ and $\mathbf{f}(\cdot)$. We use $\|x\|$ for $\|x\|_2$ and $\nabla$ for $\nabla_x$. Finally, $\star$ and $\circledast$ denote convolution operators in spaces $\Theta$ and $\mathcal{X}$ respectively.

Given a signal $f : \mathcal{X} \to \mathbb{R}$, e.g. a 2D image, we define a signal warping or domain transformation parameterized by $\theta$ as $\tau : \mathcal{X} \times \Theta \to \mathcal{X}$. Here $\theta$ is the concatenation of all the parameters of a transformation. For example, in the case of affine $Ax + b$ with $x \in \mathbb{R}^2$, $\theta$ is a 6-dimensional vector containing the elements of $A$ and $b$.

The isotropic normalized Gaussian with covariance $\sigma^2 I$ is denoted by $k(x; \sigma^2)$, where $I$ is the identity matrix. The anisotropic normalized Gaussian with covariance matrix $\Sigma$ is denoted by $K(x; \Sigma)$. The Fourier transform of a real-valued function $f : \mathbb{R}^n \to \mathbb{R}$ is $\hat{f}(\omega) \triangleq \int_{\mathbb{R}^n} f(x)\, e^{-i \omega^T x}\, dx$ and the inverse Fourier transform is $f(x) = (2\pi)^{-n} \int_{\mathbb{R}^n} \hat{f}(\omega)\, e^{i \omega^T x}\, d\omega$.

4. Smoothing the Objective

We use the inner product between the transformed $f_1$ and the reference signal $f_2$ as the alignment objective function. Note that $f_1$ and $f_2$ are the inputs to the alignment algorithm, and in many scenarios may be different from the original signals. For example, they may be mean-subtracted or normalized by their $\ell_2$ norm. The alignment objective function is denoted by $h(\theta)$ and defined as follows,

$$h(\theta) \triangleq \int_{\mathcal{X}} f_1(\tau(x, \theta))\, f_2(x)\, dx, \qquad (1)$$

where f

1

((x, )) is signal f

1

warped by (x, ). Our

goal is to nd the parameters

that optimize the objec-

tive function (1). In practice, h may have multiple local

optima. Thus, instead of directly optimizing h, we itera-

tively optimize a smoothed version of h in a coarse-to-ne

approach. We denote the objective function h() obtained

after smoothing as z(, ), where determines the amount

2

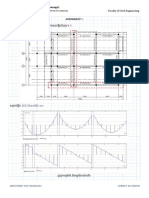

(a) (b) (c) (d)

Figure 1. Basin of attraction for scale alignment. Egg shape input images are shown in (a) and (b), where black and white pixels are

respectively by -1 and 1 intensity values. Obviously, the correct alignment is attained at = 1, due to reection symmetry. The objective

function for zLK is shown in (c) and for z in (d). Blue, green and red respectively indicate local maxima, global maximum and basin of

attraction originating from local maxima of highest blur.

of smoothing. Given z(, ), we adopt the standard opti-

mization approach described by Algorithm 1. That is, we

use the parameters

k1

found at a coarser scale to initial-

ize the solution

k

found at each progressively ner scale.
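The coarse-to-fine loop of Algorithm 1 can be illustrated with a minimal Python sketch. Everything specific here is an assumption made for illustration: the objective is a toy 1-D sum of Gaussian bumps (not an image alignment objective), chosen because its Gaussian-smoothed version has a closed form, and `local_maximizer` is a naive hill climber standing in for whatever local optimizer is actually used.

```python
import math

# Toy 1-D objective (an assumption for illustration, not an image objective):
# a sum of Gaussian bumps, each given as (amplitude, center, width).
BUMPS = [(0.5, 0.0, 0.3),   # narrow local maximum near theta = 0
         (1.0, 3.0, 1.0)]   # wider global maximum near theta = 3

def z(theta, sigma):
    """Toy objective convolved with a normalized Gaussian of std sigma (closed form)."""
    total = 0.0
    for a, c, w in BUMPS:
        var = w * w + sigma * sigma
        total += a * w / math.sqrt(var) * math.exp(-(theta - c) ** 2 / (2.0 * var))
    return total

def local_maximizer(f, theta, step=0.5, tol=1e-6):
    """Naive hill climbing: move while the value improves, otherwise halve the step."""
    while step > tol:
        if f(theta + step) > f(theta):
            theta += step
        elif f(theta - step) > f(theta):
            theta -= step
        else:
            step /= 2.0
    return theta

def align_by_smoothing(theta0, sigmas):
    """Algorithm 1: track a local maximizer of z(., sigma_k) as sigma_k decreases."""
    theta = theta0
    for sigma in sigmas:
        theta = local_maximizer(lambda t: z(t, sigma), theta)
    return theta

# Direct local search on the unsmoothed objective versus the smoothed path.
theta_direct = local_maximizer(lambda t: z(t, 0.0), 0.2)
theta_smooth = align_by_smoothing(0.2, [2.0, 1.0, 0.5, 0.25, 0.0])
```

In this toy setting the direct search started at 0.2 stays in the narrow bump near 0, while the smoothed path ends in the wider bump near 3. The example only shows the mechanics of the path-following scheme and makes no claim about image data.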

In the Lucas-Kanade algorithm [29], the images are blurred directly instead of smoothing the objective function. This results in the following form for the objective function,

$$z_{LK}(\theta, \sigma) \triangleq \int_{\mathcal{X}} [f_1(\tau(\cdot, \theta)) \circledast k(\cdot; \sigma^2)](x)\, [f_2 \circledast k(\cdot; \sigma^2)](x)\, dx.$$

Image smoothing is done in the hope of eliminating the brittle local optima in the objective function. However, if the latter is our goal, we propose that the correct approach is to blur the objective function directly¹,

$$z(\theta, \sigma) \triangleq [h \star k(\cdot; \sigma^2)](\theta).$$

The optimization landscape of these two cases may differ significantly. To illustrate, consider the egg-shaped images in Figures 1(a) and 1(b). If we assume the only parameter subject to optimization is the scale factor, i.e. $\tau(x, \theta) = \theta x$, the associated optimization landscape is visualized in Figure 1(c) for $z_{LK}$ and 1(d) for $z$. Clearly, $z$ has a single basin of attraction that leads to the global optimum, unlike $z_{LK}$ whose basins do not necessarily land at the global optimum.

5. Transformation Kernels

Our goal is to perform optimization on the smoothed objective function. Smoothing the objective function refers to a convolution in the space of transformation parameters with a Gaussian kernel. Unfortunately, performing this convolution may be computationally expensive when the dimensionality of the transformation space is large, e.g. eight for homography of 2D images. This section introduces the notion of transformation kernels, which enables us to equivalently write the smoothed objective function using some integral transform of the signal. This integration is performed in the image space (e.g. 2D for images), reducing the computational complexity.

¹ $[h \star k(\cdot; \sigma^2)](\theta)$ is bounded when the signals either decay rapidly enough or have bounded support. In the image scenario, the latter always holds.

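Definition. Given a domain transformation $\tau : \mathcal{X} \times \Theta \to \mathcal{X}$, where $\mathcal{X} = \mathbb{R}^n$ and $\Theta = \mathbb{R}^m$, we define the transformation kernel associated with $\tau$ as the function $u_{\tau,\sigma} : \Theta \times \mathcal{X} \times \mathcal{X} \to \mathbb{R}$ satisfying the following integral equation for all Schwartz² functions $f$,

$$[f(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta) = \int_{\mathcal{X}} f(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy. \qquad (2)$$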

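Using this definition, the smoothed alignment objective $z$ can be equivalently written as follows,

$$
\begin{aligned}
& z(\theta, \sigma) && (3)\\
&\triangleq [h \star k(\cdot; \sigma^2)](\theta) && (4)\\
&= \int_{\mathcal{X}} \Big( f_2(x)\, [f_1(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta) \Big)\, dx && (5)\\
&= \int_{\mathcal{X}} \Big( f_2(x) \Big( \int_{\mathcal{X}} f_1(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy \Big) \Big)\, dx, && (6)
\end{aligned}
$$

where the integral transform in (6) uses the definition of the kernel provided in (2). A procedure for computing the integral transform (6) will be provided in Section 6.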

5.1. Derivation of Kernels

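Proposition 1 The following choice of $u$ is a solution to the definition of a kernel provided in (2). Here $\mathcal{X} = \Omega = \mathbb{R}^n$.

$$u_{\tau,\sigma}(\theta, x, y) = \frac{1}{(2\pi)^n} \int_{\Omega} \Big( \int_{\Theta} e^{i \omega^T (\tau(x, t) - y)}\, k(t - \theta; \sigma^2)\, dt \Big)\, d\omega \qquad (7)$$

² A Schwartz function is one whose derivatives are rapidly decreasing.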


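| Name | $\theta$ | $\tau(x, \theta)$ | $u_{\tau,\sigma}(\theta, x, y)$ |
|---|---|---|---|
| Translation | $d_{n \times 1}$ | $x + d$ | $k(\tau(x, \theta) - y;\ \sigma^2)$ |
| Translation+Scale | $[\, a_{n \times 1},\ d_{n \times 1} \,]$ | $a^T x + d$ | $K(\tau(x, \theta) - y;\ \sigma^2\, \mathrm{diag}([1 + x_i^2]))$ |
| Affine | $[\, \mathrm{vec}(A_{n \times n}),\ b_{n \times 1} \,]$ | $Ax + b$ | $k(\tau(x, \theta) - y;\ \sigma^2 (1 + \lVert x \rVert^2))$ |
| Homography | $[\, \mathrm{vec}(A_{n \times n}),\ b_{n \times 1},\ c_{n \times 1} \,]$ | $\frac{1}{1 + c^T x}(Ax + b)$ | $q(\theta, x, y, \sigma)\, e^{-p(\theta, x, y, \sigma)}$ |

Table 1. Kernels for some of the common transformations arising in vision (for all kernels $n \geq 1$ except homography where $n = 2$).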

(a) (b) (c) (d)

Figure 2. Visualization of affine and homography kernels specified by $A_0 = [2\ \ 0.2\ ;\ 0.3\ \ 4]$, $b_0 = [0.15\ \ 0.25]$ (also $c_0 = [1\ \ 5]$ for homography). Here $x \in [-1, 1] \times [-1, 1]$, $\sigma = 0.5$ and $y = (1, 1)$ or $y = (0, 0)$. More precisely, affine kernels in (a) $u(\theta = \theta_0, x, y = (0, 0))$ (b) $u(\theta = \theta_0, x, y = (1, 1))$ and homography kernels in (c) $u(\theta = \theta_0, x, y = (0, 0))$ (d) $u(\theta = \theta_0, x, y = (1, 1))$.

The proof uses the Fourier representation $f(x) = (2\pi)^{-n} \int_{\Omega} \hat{f}(\omega)\, e^{i \omega^T x}\, d\omega$, and then an application of Parseval's theorem. See the appendix for details.

Now by applying the result of Proposition 1 to the desired transformation $\tau$, we can compute the integrals³ and derive the corresponding kernel function as shown in Table 1 (see also Figure 2 for some visualization). The functions $q$ and $p$, associated with the homography kernel, are each a ratio of polynomials⁴.

The complete derivation of these kernels is provided in the appendix. Nevertheless, below we present a relatively easy way to check the correctness of the kernels. Specifically, we check two necessary conditions, the heat equation and the limit behavior, which must hold for the kernels.

³ Although the integral in (7) does not necessarily have a closed form for an arbitrary transformation $\tau$, it does so for most of the transformations we care about in practice, as listed in Table 1.

4

Complete expression for the homography kernel qe

p

is as below:

0

1

1 +x

2

1

1 +c

T

x

v Ax +b

q

0

(

0

x

2

y

T

v +

1

)

2

+

2

x

2

(1 +

0

x

2

y

2

)

2

2

(1 +

0

x

2

y

2

)

5

2

p

1

y v

2

+

0

x

2

(v

2

y

1

v

1

y

2

)

2

2

2

(1 +x

2

(1 +y

2

))

.

5.1.1 Heat Equation

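Consider the convolution $[f(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta)$. Such a Gaussian convolution obeys the heat equation [45], where $\Delta_\theta$ denotes the Laplacian with respect to $\theta$:

$$\sigma\, \Delta_\theta [f(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta) = \frac{\partial}{\partial \sigma} [f(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta). \qquad (8)$$

Since we argue that $[f(\tau(x, \cdot)) \star k(\cdot; \sigma^2)](\theta) = \int_{\mathcal{X}} f(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy$, the following must hold:

$$
\begin{aligned}
& \sigma\, \Delta_\theta \int_{\mathcal{X}} f(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy = \frac{\partial}{\partial \sigma} \int_{\mathcal{X}} f(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy && (9)\\
\equiv\ & \int_{\mathcal{X}} f(y)\, \sigma\, \Delta_\theta u_{\tau,\sigma}(\theta, x, y)\, dy = \int_{\mathcal{X}} f(y)\, \frac{\partial}{\partial \sigma} u_{\tau,\sigma}(\theta, x, y)\, dy && (10)\\
\Leftarrow\ & \sigma\, \Delta_\theta u_{\tau,\sigma}(\theta, x, y) = \frac{\partial}{\partial \sigma} u_{\tau,\sigma}(\theta, x, y), && (11)
\end{aligned}
$$

where $\Leftarrow$ in (11) means sufficient condition. Now it is much easier to check the identity (11) for the provided kernels. For example, in the case of an affine kernel $k(\tau(x, \theta) - y;\ \sigma^2 (1 + \|x\|^2))$, both sides of the identity are equal to

$$\left( \frac{\|\tau(x, \theta) - y\|^2}{\sigma^3 (1 + \|x\|^2)} - \frac{n}{\sigma} \right) k(\tau(x, \theta) - y;\ \sigma^2 (1 + \|x\|^2)).$$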
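The heat-equation check for the affine kernel can also be done numerically. The following is a minimal sketch for the 1-D case ($n = 1$, so $\theta = (a, b)$ and $\tau(x, \theta) = ax + b$), using central finite differences. The test point and the step sizes are arbitrary choices made for this illustration, not values from the paper.

```python
import math

def gauss(z, var):
    """Normalized 1-D Gaussian density with variance var, evaluated at z."""
    return math.exp(-z * z / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def u_affine(a, b, x, y, sigma):
    """1-D affine kernel from Table 1: k(a*x + b - y; sigma^2 * (1 + x^2))."""
    return gauss(a * x + b - y, sigma ** 2 * (1.0 + x * x))

def second_diff(f, t, h=1e-3):
    """Central finite-difference approximation of the second derivative of f at t."""
    return (f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)

def first_diff(f, t, h=1e-5):
    """Central finite-difference approximation of the first derivative of f at t."""
    return (f(t + h) - f(t - h)) / (2.0 * h)

a, b, x, y, sigma = 1.2, 0.3, 0.7, 0.5, 0.8  # arbitrary test point

# Left side of (11): sigma times the Laplacian over theta = (a, b).
laplacian = (second_diff(lambda t: u_affine(t, b, x, y, sigma), a)
             + second_diff(lambda t: u_affine(a, t, x, y, sigma), b))
lhs = sigma * laplacian

# Right side of (11): derivative with respect to sigma.
rhs = first_diff(lambda s: u_affine(a, b, x, y, s), sigma)

# Closed-form value quoted in the text, specialized to n = 1.
r2 = (a * x + b - y) ** 2
closed = (r2 / (sigma ** 3 * (1.0 + x * x)) - 1.0 / sigma) * u_affine(a, b, x, y, sigma)
```

Up to finite-difference error, `lhs`, `rhs` and `closed` agree, which is what identity (11) requires at this test point.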


5.1.2 Limit Behavior

When the amount of smoothing approaches zero, the integral transform must recover the original function. Formally, we want the following identity to hold,

$$\lim_{\sigma \to 0^+} \int_{\mathcal{X}} f(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy = f(\tau(x, \theta)). \qquad (12)$$

A sufficient condition for the above identity is that $\lim_{\sigma \to 0^+} u_{\tau,\sigma}(\theta, x, y) = \delta(\tau(x, \theta) - y)$, where $\delta$ is Dirac's delta function. This is trivial for the kernels of affine and its special cases: since the kernel itself is a Gaussian, $\lim_{\sigma \to 0^+}$ is equivalent to the kernel's variance approaching zero (for any bounded choice of $x$). It is known that when the variance of the normal density function tends to zero, it approaches Dirac's delta function.

5.2. Remarks

Two interesting observations can be made about Table 1. First, from a purely objective standpoint, the derived kernels exhibit "foveation", similar to that in the eye. Except for translation, all the kernels are spatially varying with density decreasing in $\|x\|$. This is very easy to check for the translation+scale and affine kernels, which are spatially varying Gaussian kernels whose variance depends on and increases in $\|x\|$.

Second, the derived kernels are not necessarily rotation invariant. Therefore, the blur kernels proposed by Berg and Malik [7] are unable to represent the geometric transformations listed in Table 1. In fact, to the best of our knowledge, this work is the first that rigorously derives kernels for such transformations.

5.3. Image Blurring vs. Objective Blurring

It is now easy to check that for the translation transformation, Gaussian convolution of the alignment objective with respect to the optimization variables is equivalent to applying a Gaussian convolution to the image $f_1$. This follows by plugging the translation kernel from Table 1 into the smoothed objective function (6) as below:

$$
\begin{aligned}
& z(\theta, \sigma) && (13)\\
&= \int_{\mathcal{X}} \Big( f_2(x) \int_{\mathcal{X}} f_1(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy \Big)\, dx && (14)\\
&= \int_{\mathcal{X}} \Big( f_2(x) \int_{\mathcal{X}} f_1(y)\, k(\theta + x - y; \sigma^2)\, dy \Big)\, dx && (15)\\
&= \int_{\mathcal{X}} \Big( f_2(x)\, [f_1(\cdot) \circledast k(\cdot; \sigma^2)](\theta + x) \Big)\, dx. && (16)
\end{aligned}
$$

However, such equivalence does not hold for other transformations, e.g. affine. There, Gaussian convolution of the alignment objective with respect to the optimization variables is equivalent to an integral transform of $f_1$, which cannot be expressed by the convolution of $f_1$ with some spatially invariant convolution kernel in image space, as shown below for the affine case:

$$
\begin{aligned}
& z(\theta, \sigma) && (17)\\
&= \int_{\mathcal{X}} \Big( f_2(x) \int_{\mathcal{X}} f_1(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy \Big)\, dx && (18)\\
&= \int_{\mathcal{X}} \int_{\mathcal{X}} f_2(x)\, f_1(y)\, \frac{\exp\!\Big( -\frac{\|Ax + b - y\|^2}{2 \sigma^2 (1 + \|x\|^2)} \Big)}{\big( 2\pi \sigma^2 (1 + \|x\|^2) \big)^{n/2}}\, dy\, dx. && (19)
\end{aligned}
$$
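To make the spatially varying nature of the affine blur concrete, here is a minimal 1-D sketch of the inner integral over $y$ in (18), evaluated by a Riemann sum. The integration limits, grid size and parameter values are assumptions chosen for this illustration. For the test signal $f_1(y) = y^2$ the blurred value at $x$ equals the squared kernel center plus the kernel variance, so the dependence of the blur on $x$ is directly visible.

```python
import math

def gauss(z, var):
    """Normalized 1-D Gaussian density with variance var, evaluated at z."""
    return math.exp(-z * z / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def affine_blur(f1, x, a, b, sigma, lo=-20.0, hi=20.0, n=4000):
    """Riemann-sum approximation of int f1(y) k(a*x + b - y; sigma^2 (1 + x^2)) dy."""
    dy = (hi - lo) / n
    var = sigma ** 2 * (1.0 + x * x)   # kernel variance grows with |x|
    center = a * x + b                 # kernel is centered at the warped point
    total = 0.0
    for j in range(n + 1):
        yj = lo + j * dy
        total += f1(yj) * gauss(center - yj, var) * dy
    return total

a, b, sigma = 1.5, 0.2, 0.5  # hypothetical affine parameters and smoothing level

# Blur the test signal f1(y) = y^2 at two positions x.
blurred = {x: affine_blur(lambda y: y * y, x, a, b, sigma) for x in (0.0, 1.0)}
```

At $x = 0$ the kernel variance is $\sigma^2$, while at $x = 1$ it is $2\sigma^2$, so no single spatially invariant Gaussian reproduces both values.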

6. Computation of the Integral Transform

Kernels can offer computational efficiency when computing the smoothed objective (3).

If the kernel $u$ is affine or one of its special cases, then it is a Gaussian form⁵ in the variable $y$ according to Table 1. In such cases, expressing $f_1$ by Gaussian Basis Functions⁶, or by piecewise constant or piecewise polynomial forms, leads to a closed form of the integral transform. Details are provided in Sections 6.1 and 6.2.

If the kernel $u$ is not Gaussian in $y$ (such as in homography), the derivation of a closed form for the integral transform may not be possible. However, numerical integration is done much more efficiently using the kernelized form (6) compared to the original form (3). For example, when $n = 2$, integration in the original form is over $\theta$, and for homography $\dim(\theta) = 8$. However, the equivalent integral transform is over $y$, where $\dim(y) = 2$.

6.1. Gaussian RBF Representation of $f_1$

The following result addresses the representation of $f_1$ by Gaussian Radial Basis Functions (GRBFs) $\phi(x; x_0, \delta_0) = e^{-\frac{\|x - x_0\|^2}{2 \delta_0^2}}$; the more general case of GBFs can be obtained in a similar fashion.

Proposition 2 Suppose $f_1 = \sum_{k=1}^{p} a_k\, \phi(y; x_k, \delta_k)$, where $\phi(x; x_k, \delta_k) = e^{-\frac{\|x - x_k\|^2}{2 \delta_k^2}}$. Assume that $u_{\tau,\sigma}(\theta, x, y)$ is Gaussian in the variable $y$. Then the following identity holds.

$$\int_{\mathcal{X}} f_1(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy = \sum_{i=1}^{p} a_i \left( \frac{\delta_i}{\sqrt{\delta_i^2 + s^2}} \right)^{n} e^{-\frac{\|x_i - \tau\|^2}{2 (\delta_i^2 + s^2)}}.$$

See the appendix for a proof.
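Proposition 2 can be sanity-checked numerically. The sketch below does so for $n = 1$, comparing the closed form against a Riemann sum over $y$. The symbol names for the GRBF widths, the coefficients, and the kernel center `tau` and standard deviation `s` are hypothetical values chosen for this illustration.

```python
import math

def gauss(z, var):
    """Normalized 1-D Gaussian density with variance var, evaluated at z."""
    return math.exp(-z * z / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def grbf(y, center, delta):
    """Gaussian radial basis function exp(-(y - center)^2 / (2 delta^2)) for n = 1."""
    return math.exp(-(y - center) ** 2 / (2.0 * delta ** 2))

def closed_form(terms, tau, s):
    """Right-hand side of Proposition 2 for n = 1; terms = [(a_i, x_i, delta_i), ...]."""
    out = 0.0
    for a_i, x_i, d_i in terms:
        var = d_i ** 2 + s ** 2
        out += a_i * d_i / math.sqrt(var) * math.exp(-(x_i - tau) ** 2 / (2.0 * var))
    return out

def quadrature(terms, tau, s, lo=-20.0, hi=20.0, n=4000):
    """Left-hand side of Proposition 2 approximated by a Riemann sum over y."""
    dy = (hi - lo) / n
    out = 0.0
    for j in range(n + 1):
        y = lo + j * dy
        f1 = sum(a_i * grbf(y, x_i, d_i) for a_i, x_i, d_i in terms)
        out += f1 * gauss(tau - y, s * s) * dy
    return out

terms = [(1.0, -0.5, 0.4), (-0.7, 1.2, 0.9)]  # hypothetical (a_i, x_i, delta_i)
tau, s = 0.3, 0.6                              # hypothetical kernel center and std

exact = closed_form(terms, tau, s)
approx = quadrature(terms, tau, s)
```

The closed form replaces the integral over $y$ by one exponential per basis function, which is the source of the computational saving discussed in this section.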

⁵ We say a kernel is Gaussian in $y$ when it can be written as $u_{\tau,\sigma}(\theta, x, y) = k(\tau(\theta, x) - y;\ s^2(\theta, x))$, where $s : \Theta \times \mathcal{X} \to \mathbb{R}_+$ is an arbitrary map and the maps $\tau$ and $s$ are independent of $y$.

⁶ A GBF is a function of the form $\phi(x; x_0, \Delta_0) = \exp\!\big( -\frac{(x - x_0)^T \Delta_0^{-1} (x - x_0)}{2} \big)$, where the matrix $\Delta_0$ is positive definite. It is known that Gaussian RBFs $\phi(x; x_0, \delta_0) = \exp\!\big( -\frac{\|x - x_0\|^2}{2 \delta_0^2} \big)$, which are a special case of GBFs, are general function approximators.


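6.2. Piecewise Constant Representation of $f_1$

The following result addresses the representation of $f_1$ as piecewise constant; the extension to piecewise polynomial is straightforward.

Proposition 3 Suppose $f_1(x) = c$ on a rectangular piece $x \in \mathcal{X} \triangleq \prod_{k=1}^{n} [\underline{x}_k, \overline{x}_k]$. Assume that $u_{\tau,\sigma}(\theta, x, y)$ has the form $K(q(\theta) - y;\ \mathrm{diag}(s_1^2, \dots, s_n^2))$, where $q : \Theta \to \mathbb{R}^n$ is some map. Then the following identity holds:

$$\int_{\mathcal{X}} f_1(y)\, u_{\tau,\sigma}(\theta, x, y)\, dy = c \prod_{k=1}^{n} \frac{1}{2} \left( \mathrm{erf}\!\left( \frac{q_k - \underline{x}_k}{\sqrt{2}\, s_k} \right) - \mathrm{erf}\!\left( \frac{q_k - \overline{x}_k}{\sqrt{2}\, s_k} \right) \right).$$

The proof uses separability of integrals for diagonal $K$.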
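The closed form for a constant piece can be checked against direct numerical integration. The sketch below assumes the integral is taken over the rectangular piece itself and includes the constant $c$ as a factor. The box, the kernel center `q`, the standard deviations `s` and the midpoint-rule resolution are hypothetical choices for this illustration.

```python
import math

def box_integral_closed_form(c, q, s, lower, upper):
    """Constant c times the product of per-axis Gaussian masses on [lower_k, upper_k]."""
    value = c
    for qk, sk, lo, hi in zip(q, s, lower, upper):
        scale = math.sqrt(2.0) * sk
        value *= 0.5 * (math.erf((qk - lo) / scale) - math.erf((qk - hi) / scale))
    return value

def gauss(z, var):
    """Normalized 1-D Gaussian density with variance var, evaluated at z."""
    return math.exp(-z * z / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def box_integral_midpoint(c, q, s, lower, upper, n=200):
    """Midpoint-rule approximation of the same integral, written for the 2-D case only."""
    h0 = (upper[0] - lower[0]) / n
    h1 = (upper[1] - lower[1]) / n
    total = 0.0
    for i in range(n):
        y0 = lower[0] + (i + 0.5) * h0
        g0 = gauss(q[0] - y0, s[0] ** 2)
        for j in range(n):
            y1 = lower[1] + (j + 0.5) * h1
            total += c * g0 * gauss(q[1] - y1, s[1] ** 2) * h0 * h1
    return total

c = 2.0                                   # hypothetical constant intensity
q, s = (0.1, 0.6), (0.4, 0.5)             # hypothetical kernel center and stds
lower, upper = (-0.5, 0.0), (0.5, 1.0)    # hypothetical rectangular piece

exact = box_integral_closed_form(c, q, s, lower, upper)
approx = box_integral_midpoint(c, q, s, lower, upper)
```

The closed form needs two `erf` evaluations per axis, whereas the midpoint rule needs a full grid of kernel evaluations per piece.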

7. Regularization

Regularization may compensate for the numerical instability caused by excessive smoothing of the objective function and improve the well-posedness of the task. The latter means that if there are multiple transformations that lead to equally good alignments (e.g. when image content has symmetries), the regularization prefers the transformation closest to some given $\theta_0$. This makes the existence of a unique global optimum more plausible. We achieve these goals by replacing $f_1$ with the following regularized version:

$$\tilde{f}_1(\tau(\cdot, \cdot), x, \theta, \theta_0, r) \triangleq k(\theta - \theta_0; r^2)\, f_1(\tau(x; \theta)). \qquad (20)$$

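Regularization shrinks the signal $f_1$ for peculiar transformations with very large $\|\theta - \theta_0\|$. Typically $\theta_0$ is set to the identity transformation $\tau(x; \theta_0) = x$. Using (20), the regularized objective function can be written as below:

$$
\begin{aligned}
\tilde{h}(\theta; \theta_0, r) &\triangleq \int_{\mathcal{X}} \Big( \tilde{f}_1(\tau, x, \theta, \theta_0, r)\, f_2(x) \Big)\, dx\\
&= \int_{\mathcal{X}} k(\theta - \theta_0; r^2)\, f_1(\tau(x; \theta))\, f_2(x)\, dx.
\end{aligned}
$$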

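Consequently, the smoothed regularized objective is as follows:

$$\tilde{z}(\theta, \theta_0, r, \sigma) \triangleq [\tilde{h}(\cdot, \theta_0, r) \star k(\cdot; \sigma^2)](\theta). \qquad (21)$$

This form is still amenable to kernel computation using the following proposition.

Proposition 4 The regularized objective function $\tilde{z}(\theta, \theta_0, r, \sigma)$ can be written using transformation kernels as follows.

$$
\begin{aligned}
& \tilde{z}(\theta, \theta_0, r, \sigma) && (22)\\
&= [\tilde{h}(\cdot, \theta_0, r) \star k(\cdot; \sigma^2)](\theta) && (23)\\
&= \int_{\mathcal{X}} \Big( k(\theta - \theta_0; r^2 + \sigma^2)\, f_2(x) \int_{\mathcal{X}} \Big( f_1(y)\, u_{\tau, \frac{r \sigma}{\sqrt{r^2 + \sigma^2}}}\Big( \frac{r^2 \theta + \sigma^2 \theta_0}{r^2 + \sigma^2}, x, y \Big) \Big)\, dy \Big)\, dx. && (24)
\end{aligned}
$$

See the appendix for the proof.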
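The merged scale $\frac{r\sigma}{\sqrt{r^2 + \sigma^2}}$ and the shifted center $\frac{r^2\theta + \sigma^2\theta_0}{r^2 + \sigma^2}$ in Proposition 4 match the standard identity for the product of two Gaussians. It is an assumption on our part that the appendix proof relies on this step, since the proof is not shown here. A minimal sketch for scalar $\theta$, with arbitrary test values, checks the identity pointwise:

```python
import math

def gauss(z, var):
    """Normalized 1-D Gaussian density with variance var, evaluated at z."""
    return math.exp(-z * z / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def product_lhs(t, theta, theta0, r, sigma):
    """Regularizer k(t - theta0; r^2) times smoothing kernel k(theta - t; sigma^2)."""
    return gauss(t - theta0, r * r) * gauss(theta - t, sigma * sigma)

def product_rhs(t, theta, theta0, r, sigma):
    """The same product rewritten with the merged scale and shifted center."""
    total = r * r + sigma * sigma
    merged_std = r * sigma / math.sqrt(total)
    merged_center = (r * r * theta + sigma * sigma * theta0) / total
    return gauss(theta - theta0, total) * gauss(t - merged_center, merged_std ** 2)

theta, theta0, r, sigma = 0.8, -0.3, 1.5, 0.6  # arbitrary scalar test values
samples = [-2.0, -0.5, 0.0, 0.4, 1.0, 2.5]     # values of the integration variable t
max_gap = max(abs(product_lhs(t, theta, theta0, r, sigma)
                  - product_rhs(t, theta, theta0, r, sigma)) for t in samples)
```

Because the first factor on the right does not depend on the integration variable, it can be pulled out of the convolution, leaving an ordinary Gaussian smoothing at the merged scale.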

8. Experiments

We evaluate the performance of the proposed scheme for smoothing the alignment objective function against traditional Gaussian blurring and no blurring at all. We use the images provided by [13] (see Figure 3, top). This dataset consists of five planar scenes, each having six different views of increasingly dramatic transformations.

For the proposed method, we use the homography kernel. The goal is to maximize the correlation between a pair of views by transforming one to the other. The local maximization in Algorithm 1 and in the other methods used for comparison is achieved by a coordinate ascent method⁷ with a naive line search. The images are represented by a piecewise constant model. The integral transform in Algorithm 1 is approximated by the Laplace method.

Pixel coordinates were normalized to range in $[-1, 1]$. Images $f_1$ and $f_2$ were converted to grayscale, and their joint mean (i.e. $(\bar{f}_1 + \bar{f}_2)/2$, where $\bar{f}_1$ is the average intensity of $f_1$) was subtracted as a preprocessing step. The sequence of $\sigma$ (for both the proposed kernel and the Gaussian kernel) starts from $\sigma = 0.1$, and is multiplied by 2/3 in each iteration of the algorithm until it falls below 0.0001. The initial transformation $\theta_0$ was set to the identity, i.e. $A_0 = I$ and $b_0 = c_0 = 0$. Since the initial $\sigma$ is large and the images lack significant areas of symmetry, no regularization was used.
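The smoothing schedule and the joint-mean preprocessing described above can be sketched in a few lines of Python. The function names are hypothetical, images are represented as flat lists of intensities for simplicity, and it is an assumption of this sketch that the first value falling below the threshold is not itself used.

```python
def sigma_schedule(start=0.1, factor=2.0 / 3.0, floor=1e-4):
    """Geometric sequence of smoothing levels, stopping once sigma falls below floor."""
    sigmas = []
    sigma = start
    while sigma >= floor:
        sigmas.append(sigma)
        sigma *= factor
    return sigmas

def subtract_joint_mean(f1, f2):
    """Subtract (mean(f1) + mean(f2)) / 2 from both grayscale images (flat lists)."""
    joint = (sum(f1) / len(f1) + sum(f2) / len(f2)) / 2.0
    return [v - joint for v in f1], [v - joint for v in f2]

sigmas = sigma_schedule()
g1, g2 = subtract_joint_mean([1.0, 3.0], [5.0, 7.0])
```

Under these assumptions the schedule contains 18 levels, from 0.1 down to roughly 1.01e-4.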

The performance of these methods is summarized in Figure 3 (bottom). Each plot corresponds to one of the scenes in the dataset. For each scene, there is one rectified view that is used as $f_1$. The remaining five views, indexed from 1 to 5 in increasing order of complexity⁸, are used as $f_2$. The vertical axis in the plots indicates the normalized correlation coefficient (NCC) between $f_2$ and the transformed $f_1$. It can clearly be observed that while Gaussian blur sometimes does a little better than no blur, the proposed smoothing scheme leads to a much higher NCC value⁹.

⁷ A block coordinate ascent is performed by partitioning the 8 parameters of the homography into three classes: those that comprise $A$, $b$ and $c$. This improves numerical stability because the sensitivities of the parameters within each partition are similar.

⁸ Here the complexity of a view refers to how drastic the homography transformation must be in order to bring it to the rectified view.

⁹ The code for reproducing our results is available at http://perception.csl.illinois.edu/smoothing.


Colors Grace Posters There Underground

Figure 3. Top: Representative rectified views from the dataset provided in [13]. Bottom: NCC value after alignment. The horizontal axis is the view index (increasing in complexity) of the scene. Four views are used for each scene, each one serving as $f_2$ and compared against $f_1$, which is a rectified view in the dataset.

9. Conclusion & Future Directions

This paper studied the problem of image smoothing for the purpose of alignment by direct intensity-based methods. We argued that the use of traditional Gaussian image blurring, mainly inspired by the work of Lucas and Kanade [29], may not be suitable for non-displacement motions. Instead, we suggested directly smoothing the alignment objective function. This led to a rigorous derivation of spatially varying kernels required for smoothing the objective function of common model-based alignment tasks including affine and homography models.

The derivation process of the kernels in this paper may provide some insights for blur kernels in other tasks such as image deblurring, motion from blur, matching, optical flow, etc. For example, in image deblurring, the blur caused by the motion of the camera or by scene motion typically leads to spatially varying blur. The estimation of such kernels is very challenging [10, 44, 16]. Yet if the motion is close to the models discussed in this paper, our results may provide new insights for estimation of the blur kernel. Similarly, our kernels could be relevant to tasks involving motion blur [12], due to the physical relationship between motion estimation and blur estimation [11]. The coarse-to-fine scheme is a classic and very effective way to escape from poor local minima in optical flow estimation [2, 43]. Using the proposed kernels may boost the quality of the computed solution.

Another possible application that may benefit from our proposed kernels is visual detection and recognition. Heuristic spatially varying kernels [7, 40] have been successfully utilized in face detection [8] and object recognition [6, 15]. Thus, our results may provide a new perspective on using blur kernels for such tasks in a more principled way. Another related machinery for visual recognition tasks is convolutional deep architectures [22, 23, 30, 34, 48]. These methods apply learnable convolution filters to the scale-space representation of the images, and hence gain translation and scale invariance. Utilizing the proposed kernels instead of traditional convolutional filters and scale-space representation between layers might extend the invariance of these methods to a broader range of transformations.

Finally, there is a lot of room to improve the computational efficiency of using the proposed kernels. In this work, the integral transforms are evaluated on a dense grid. However, since the kernels are smooth and localized in space, one might be able to get a good approximation of the integral transform by merely evaluating it at a small subset of image points.

Acknowledgement

We thank John Wright (Columbia University) and Vadim Zharnitsky (UIUC) for fruitful discussions and the anonymous reviewers for their thoughtful comments. Hossein Mobahi was supported by the CSE PhD fellowship of UIUC. This research was partially supported by ONR N00014-09-1-0230, NSF CCF 09-64215, and NSF IIS 11-16012.

References

[1] E. L. Allgower and K. Georg. Numerical Continuation Methods: An Introduction. Springer-Verlag, 1990.

[2] L. Alvarez, J. Weickert, and J. Sánchez. A scale-space approach to nonlocal optical flow calculations. Scale-Space'99, pages 235–246, 1999.

[3] S. Baker and I. Matthews. Lucas-Kanade 20 years on: A unifying framework. IJCV, 56:221–255, 2004.

[4] Y. Bengio. Learning Deep Architectures for AI. Now Publishers Inc., 2009.

[5] Y. Bengio, J. Louradour, R. Collobert, and J. Weston. Curriculum learning. In International Conference on Machine Learning, ICML, 2009.

[6] A. C. Berg, T. L. Berg, and J. Malik. Shape matching and object recognition using low distortion correspondences. Volume 1 of CVPR'05, pages 26–33, 2005.

[7] A. C. Berg and J. Malik. Geometric blur for template matching. CVPR'01, pages 607–614, 2001.


[8] T. L. Berg, A. C. Berg, J. Edwards, M. Maire, R. White, Y.-W. Teh, E. Learned-Miller, and D. A. Forsyth. Names and faces in the news. CVPR, 2004.

[9] A. Blake and A. Zisserman. Visual Reconstruction. MIT Press, 1987.

[10] A. Chakrabarti, T. Zickler, and W. T. Freeman. Analyzing spatially-varying blur. CVPR'10, 2010.

[11] W.-G. Chen, N. Nandhakumar, and W. N. Martin. Image motion estimation from motion smear - a new computational model. IEEE PAMI, 18(4):412–425, 1996.

[12] T. S. Cho, A. Levin, F. Durand, and W. T. Freeman. Motion blur removal with orthogonal parabolic exposures. In Int. Conf. in Comp. Photography (ICCP), 2010.

[13] K. Cordes, B. Rosenhahn, and J. Ostermann. Increasing the accuracy of feature evaluation benchmarks using differential evolution. In IEEE Symp. on Differential Evolution (SDE), 2011.

[14] M. Cox, S. Lucey, and S. Sridharan. Unsupervised alignment of image ensembles. Technical Report CI2CV-MC-20100609, Auto. Sys. Lab, CSIRO ICT Centre, 2010.

[15] A. Frome, F. Sha, Y. Singer, and J. Malik. Learning globally-consistent local distance functions for shape-based image retrieval and classification. ICCV'07, 2007.

[16] A. Gupta, N. Joshi, C. L. Zitnick, M. Cohen, and B. Curless. Single image deblurring using motion density functions. In ECCV, 2010.

[17] M. Irani and P. Anandan. All about direct methods. In Workshop on Vision Algorithms, ICCV'99, pages 267–277.

[18] J. Jiang, S. Zheng, A. W. Toga, and Z. Tu. Learning based coarse-to-fine image registration. Pages 1–7, 2008.

[19] J. J. Koenderink. The structure of images. Biological Cybernetics, 50(5):363–370, 1984.

[20] G. Konidaris and A. Barto. Autonomous shaping: knowledge transfer in reinforcement learning. ICML '06, pages 489–496, 2006.

[21] M. P. Kumar, B. Packer, and D. Koller. Self-paced learning for latent variable models. In NIPS, pages 1189–1197. Curran Associates, Inc., 2010.

[22] Y. Lecun, K. Kavukcuoglu, and C. Farabet. Convolutional networks and applications in vision. In Int. Symp. on Circuits and Systems (ISCAS'10), 2010.

[23] H. Lee, R. Grosse, R. Ranganath, and A. Y. Ng. Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations. In ICML'09, pages 609–616, 2009.

[24] M. Lefebure and L. D. Cohen. Image registration, optical flow and local rigidity. J. Math. Imaging Vis., 14:131–147, 2001.

[25] T. Lindeberg. On the Axiomatic Foundations of Linear Scale-Space: Combining Semi-Group Structure with Causality vs. Scale Invariance. In Gaussian Scale-Space Theory: Proc. PhD School on Scale-Space Theory, 1994.

[26] T. Lindeberg. Generalized Gaussian scale-space axiomatics comprising linear scale-space, affine scale-space and spatio-temporal scale-space. J. Math. Imaging Vis., 40:36–81, 2011.

[27] T. Lindeberg and J. Garding. Shape-adapted smoothing in estimation of 3-d depth cues from affine distortions of local 2-d brightness structure. In Image and Vision Computing, pages 389–400, 1994.

[28] D. G. Lowe. Object recognition from local scale-invariant features. ICCV'99, pages 1150–1157, 1999.

[29] B. D. Lucas and T. Kanade. An iterative image registration technique with an application to stereo vision. IJCAI'81, pages 674–679, 1981.

[30] H. Mobahi, R. Collobert, and J. Weston. Deep learning from temporal coherence in video. ICML'09, pages 737–744, 2009.

[31] H. Mobahi, Z. Zhou, A. Y. Yang, and Y. Ma. Holistic 3d reconstruction of urban structures from low-rank textures. In 3DRR Workshop, ICCV'11, pages 593–600, 2011.

[32] G. Osterberg. Topography of the layer of rods and cones in the human retina. Acta ophthalmologica: Supplementum. Levin & Munksgaard, 1935.

[33] L. Piela, J. Kostrowicki, and H. A. Scheraga. On the

multiple-minima problem in the conformational analysis of

molecules. Journal of Physical Chemistry, 93(8):3339

3346, 1989. 2

[34] M. Ranzato, J. Susskind, V. Mnih, and G. Hinton. On

deep generative models with applications to recognition. In

CVPR11, 2011. 7

[35] K. Rose. Deterministic annealing for clustering, com-

pression, classication, regression, and related optimization

problems. pages 22102239, 1998. 2

[36] L. Sevilla-Lara and E. Learned-Miller. Distribution elds for

tracking. CVPR12, 2012. 2

[37] P. Simard, Y. LeCun, and J. S. Denker. Efcient pattern

recognition using a new transformation distance. NIPS92,

pages 5058, 1993. 1

[38] V. I. Spitkovsky, H. Alshawi, and D. Jurafsky. From Baby

Steps to Leapfrog: How Less is More in unsupervised de-

pendency parsing. In Proc. of NAACL-HLT, 2010. 2

[39] R. Szeliski. Image alignment and stitching: a tutorial. Found.

Trends. Comput. Graph. Vis., 2:1104, 2006. 1

[40] E. Tola, V. Lepetit, and P. Fua. A fast local descriptor for

dense matching. CVPR08, 2008. 2, 7

[41] F. d. l. Torre and M. J. Black. Robust parameterized compo-

nent analysis. ECCV02, pages 653669, 2002. 1

[42] N. Vasconcelos and A. Lippman. Multiresolution tangent

distance for afne-invariant classication. pages 843849,

1998. 1

[43] A. Wedel, T. Pock, C. Zach, H. Bischof, and D. Cremers.

Statistical and geometrical approaches to visual motion anal-

ysis. chapter An Improved Algorithm for TV-L1 Optical

Flow, pages 2345. 2009. 7

[44] O. Whyte, J. Sivic, A. Zisserman, and J. Ponce. Non-uniform

deblurring for shaken images. In Proceedings of the IEEE

Conference on Computer Vision and Pattern Recognition,

2010. 7

[45] D. V. Widder. The Heat Equation. Academic Press, 1975. 4

[46] A. P. Witkin. Scale-Space Filtering. volume 2 of IJCAI83,

pages 10191022, Karlsruhe, 1983. 2

[47] A. L. Yuille and T. A. Poggio. Scaling theorems for zero

crossings. IEEE PAMI, 8(1):1525, 1986. 2

[48] M. D. Zeiler, D. Kirshnan, G. W. Taylor, and R. Fergus. De-

convolutional networks. In CVPR10, 2010. 7


Seeing through the Blur

The Supplementary Appendix

Hossein Mobahi

hmobahi2@illinois.edu

Department of Computer Science

University of Illinois at Urbana Champaign (UIUC)

Urbana, IL

Charles Lawrence Zitnick

larryz@microsoft.com

Interactive Visual Media Group

Microsoft Research

Redmond, WA

Yi Ma

yima@illinois.edu , mayi@microsoft.com

Department of Electrical and Computer Engineering

University of Illinois at Urbana Champaign (UIUC)

Urbana, IL

&

Visual Computing Group

Microsoft Research Asia

Beijing, China

April 2012

This Appendix does not Appear in the Official CVPR2012 8-Page Manuscript

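1 Notation

The symbol $\triangleq$ is used for equality by definition. Also, we use $x$ for scalars, $\mathbf{x}$ for vectors, $\mathbf{X}$ for matrices, and $\mathcal{X}$ for sets. In addition, $f(\cdot)$ denotes a scalar valued function and $\mathbf{f}(\cdot)$ a vector valued function. Unless stated otherwise, $\|\mathbf{x}\|$ means $\|\mathbf{x}\|_2$ and $\nabla$ means $\nabla_{\mathbf{x}}$. Finally, $\star$ and $\circledast$ denote convolution operators in spaces $\Theta$ and $\mathcal{X}$ respectively.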

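2 Definitions

Definition [Domain Transformation] Given a function $f : \mathcal{X} \to \mathbb{R}$ and a vector field $\boldsymbol{\tau} : \mathcal{X} \times \Theta \to \mathcal{X}$, where $\mathcal{X} = \mathbb{R}^n$ and $\Theta = \mathbb{R}^m$, we refer to $\boldsymbol{\tau}(\mathbf{x}, \boldsymbol{\theta})$ as the domain transformation parameterized by $\boldsymbol{\theta}$. Note that the parameter vector $\boldsymbol{\theta}$ is constructed by concatenating all the parameters of a transformation. For example, in the affine case $\mathbf{A}\mathbf{x} + \mathbf{b}$ with $\mathbf{x} \in \mathbb{R}^2$, $\boldsymbol{\theta}$ is a 6-dimensional vector containing the elements of $\mathbf{A}$ and $\mathbf{b}$.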

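Definition [Isotropic Gaussian]

$$k(\mathbf{x}; \sigma^2) \triangleq \frac{1}{(\sqrt{2\pi}\,\sigma)^{\dim(\mathbf{x})}}\; e^{-\frac{\|\mathbf{x}\|^2}{2\sigma^2}}. \tag{1}$$

Definition [Anisotropic Gaussian]

$$K(\mathbf{x}; \boldsymbol{\Sigma}) \triangleq \frac{1}{(\sqrt{2\pi})^{\dim(\mathbf{x})} \sqrt{\det(\boldsymbol{\Sigma})}}\; e^{-\frac{\mathbf{x}^T \boldsymbol{\Sigma}^{-1} \mathbf{x}}{2}}.$$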
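As a small sanity check of the two definitions above, the following Python sketch evaluates both Gaussians in two dimensions and compares them when the covariance is $\sigma^2 \mathbf{I}$, in which case the anisotropic form should reduce to the isotropic one. The function names `iso_gauss` and `aniso_gauss_2d` and the test point are illustrative choices, not part of the paper.

```python
import math

def iso_gauss(x, var):
    """Isotropic Gaussian k(x; sigma^2) of definition (1); var is sigma^2."""
    dim = len(x)
    sq_norm = sum(v * v for v in x)
    return math.exp(-sq_norm / (2.0 * var)) / (math.sqrt(2.0 * math.pi * var) ** dim)

def aniso_gauss_2d(x, cov):
    """Anisotropic Gaussian K(x; Sigma) for a 2x2 covariance given as nested lists."""
    (a, b), (c, d) = cov
    det = a * d - b * c
    # Inverse of a 2x2 matrix, written out explicitly.
    inv = [[d / det, -b / det], [-c / det, a / det]]
    quad = sum(x[i] * inv[i][j] * x[j] for i in range(2) for j in range(2))
    # (sqrt(2 pi))^2 = 2 pi in two dimensions.
    return math.exp(-0.5 * quad) / ((2.0 * math.pi) * math.sqrt(det))

point = [0.4, -1.1]          # arbitrary test point (assumption)
var = 0.6                    # sigma^2 (assumption)
iso_value = iso_gauss(point, var)
aniso_value = aniso_gauss_2d(point, [[var, 0.0], [0.0, var]])
```

The two values `iso_value` and `aniso_value` should agree up to floating-point rounding.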

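Definition [Fourier Transform]

We use the following convention for the Fourier transform. The Fourier transform of a real valued function $f : \mathbb{R}^n \to \mathbb{R}$ is $\hat{f}(\boldsymbol{\omega}) = \int_{\mathbb{R}^n} f(\mathbf{x})\, e^{-i \boldsymbol{\omega}^T \mathbf{x}}\, d\mathbf{x}$ and the inverse Fourier transform is $f(\mathbf{x}) = (2\pi)^{-n} \int_{\mathbb{R}^n} \hat{f}(\boldsymbol{\omega})\, e^{i \boldsymbol{\omega}^T \mathbf{x}}\, d\boldsymbol{\omega}$.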

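Definition [Transformation Kernel]

Given a domain transformation $\boldsymbol{\tau} : \mathcal{X} \times \Theta \to \mathcal{X}$, where $\mathcal{X} = \mathbb{R}^n$ and $\Theta = \mathbb{R}^m$, we define a transformation kernel associated with $\boldsymbol{\tau}$ as $u_{\boldsymbol{\tau},\sigma} : \Theta \times \mathcal{X} \times \mathcal{X} \to \mathbb{R}$ such that it satisfies the following integral equation,

$$\forall f : \quad [f(\boldsymbol{\tau}(\mathbf{x}, \cdot)) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}) = \int_{\mathcal{X}} f(\mathbf{y})\, u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})\, d\mathbf{y}, \tag{2}$$

where $f$ is assumed to be a Schwartz function. Therefore, any transformation kernel that satisfies this equation allows the convolution of the transformed signal with the Gaussian kernel to be equivalently written as the integral transform of the non-transformed signal with the kernel $u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})$.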

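Definition [Smoothed Regularized Objective]

We define the smoothed regularized objective as follows.

$$z(\boldsymbol{\theta}, \boldsymbol{\theta}_0, r, \sigma) \triangleq [h(\cdot\,, \boldsymbol{\theta}_0, r) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}). \tag{3}$$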


3 Proof of Results in the Paper

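Proposition 0 The following identity holds for the product of two Gaussians, for $\boldsymbol{\tau}, \boldsymbol{\mu}_1, \boldsymbol{\mu}_2 \in \mathbb{R}^m$.

$$k(\boldsymbol{\tau} - \boldsymbol{\mu}_1; \sigma_1^2)\; k(\boldsymbol{\tau} - \boldsymbol{\mu}_2; \sigma_2^2) = \frac{e^{-\frac{\|\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2\|^2}{2(\sigma_1^2 + \sigma_2^2)}}}{\left(\sqrt{2\pi(\sigma_1^2 + \sigma_2^2)}\right)^m}\; k\!\left(\boldsymbol{\tau} - \frac{\sigma_2^2 \boldsymbol{\mu}_1 + \sigma_1^2 \boldsymbol{\mu}_2}{\sigma_1^2 + \sigma_2^2};\; \frac{\sigma_1^2 \sigma_2^2}{\sigma_1^2 + \sigma_2^2}\right).$$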

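Proof

$$\begin{aligned}
& k(\boldsymbol{\tau} - \boldsymbol{\mu}_1; \sigma_1^2)\; k(\boldsymbol{\tau} - \boldsymbol{\mu}_2; \sigma_2^2) \\
&= \frac{1}{(\sigma_1\sqrt{2\pi})^m}\, e^{-\frac{\|\boldsymbol{\tau} - \boldsymbol{\mu}_1\|^2}{2\sigma_1^2}}\; \frac{1}{(\sigma_2\sqrt{2\pi})^m}\, e^{-\frac{\|\boldsymbol{\tau} - \boldsymbol{\mu}_2\|^2}{2\sigma_2^2}} \\
&= \frac{1}{(2\pi\sigma_1\sigma_2)^m}\, e^{-\frac{\|\boldsymbol{\tau} - \boldsymbol{\mu}_1\|^2}{2\sigma_1^2} - \frac{\|\boldsymbol{\tau} - \boldsymbol{\mu}_2\|^2}{2\sigma_2^2}} \\
&= \frac{1}{(2\pi\sigma_1\sigma_2)^m}\, e^{-\frac{\left\|\boldsymbol{\tau} - \frac{\sigma_2^2 \boldsymbol{\mu}_1 + \sigma_1^2 \boldsymbol{\mu}_2}{\sigma_1^2 + \sigma_2^2}\right\|^2}{2\frac{\sigma_1^2\sigma_2^2}{\sigma_1^2 + \sigma_2^2}} - \frac{\|\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2\|^2}{2(\sigma_1^2 + \sigma_2^2)}} \qquad (4) \\
&= \frac{e^{-\frac{\|\boldsymbol{\mu}_1 - \boldsymbol{\mu}_2\|^2}{2(\sigma_1^2 + \sigma_2^2)}}}{\left(\sqrt{2\pi(\sigma_1^2 + \sigma_2^2)}\right)^m}\; k\!\left(\boldsymbol{\tau} - \frac{\sigma_2^2 \boldsymbol{\mu}_1 + \sigma_1^2 \boldsymbol{\mu}_2}{\sigma_1^2 + \sigma_2^2};\; \frac{\sigma_1^2 \sigma_2^2}{\sigma_1^2 + \sigma_2^2}\right).
\end{aligned}$$

Note that (4) is derived by completing the square.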
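The Gaussian product identity of Proposition 0 can be spot-checked numerically. The sketch below is only an illustration under assumed values: the helper names, the choice $m = 2$, and the test points are not from the paper. It uses the observation that the scalar prefactor on the right-hand side is itself the isotropic Gaussian of definition (1) evaluated at the difference of the two means with variance $\sigma_1^2 + \sigma_2^2$.

```python
import math

def k(x, var):
    """Isotropic Gaussian k(x; sigma^2) of definition (1); x is a list of floats."""
    m = len(x)
    sq_norm = sum(v * v for v in x)
    return math.exp(-sq_norm / (2.0 * var)) / (math.sqrt(2.0 * math.pi * var) ** m)

def product_lhs(t, mu1, var1, mu2, var2):
    """Left-hand side: product of two Gaussians in the same variable t."""
    d1 = [a - b for a, b in zip(t, mu1)]
    d2 = [a - b for a, b in zip(t, mu2)]
    return k(d1, var1) * k(d2, var2)

def product_rhs(t, mu1, var1, mu2, var2):
    """Right-hand side: scalar factor times a single Gaussian in t."""
    total = var1 + var2
    gap = [a - b for a, b in zip(mu1, mu2)]
    scale = k(gap, total)  # exp(-|mu1 - mu2|^2 / (2 total)) / sqrt(2 pi total)^m
    mean = [(var2 * a + var1 * b) / total for a, b in zip(mu1, mu2)]
    d = [a - b for a, b in zip(t, mean)]
    return scale * k(d, var1 * var2 / total)

# Arbitrary test values (assumptions chosen for illustration).
t = [0.3, -1.2]
mu1, var1 = [1.0, 0.5], 0.7
mu2, var2 = [-0.4, 2.0], 1.9
lhs = product_lhs(t, mu1, var1, mu2, var2)
rhs = product_rhs(t, mu1, var1, mu2, var2)
```

Both sides should match to within rounding error for any choice of points and positive variances.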

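Proposition 1 The following choice of $u$,

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y}) = \frac{1}{(2\pi)^n} \int_{\Omega} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \mathbf{t}) - \mathbf{y})}\; k(\mathbf{t} - \boldsymbol{\theta}; \sigma^2)\, d\mathbf{t} \right) d\boldsymbol{\omega} \tag{5}$$

is a solution to the definition of the kernel provided in (2). Here $\mathcal{X} = \Omega = \mathbb{R}^n$, and $k(\mathbf{t}; \sigma^2)$ is some function $k(\cdot\,; \sigma) : \Theta \to \mathbb{R}$ with some parameter $\sigma$, which in our case is simply an isotropic Gaussian with bandwidth $\sigma$.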

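Proof The key to the proof is writing $f(\mathbf{x})$ in its Fourier form $f(\mathbf{x}) = (2\pi)^{-n} \int_{\Omega} \hat{f}(\boldsymbol{\omega})\, e^{i \boldsymbol{\omega}^T \mathbf{x}}\, d\boldsymbol{\omega}$, where $\Omega = \mathbb{R}^n$ (similar to $\mathcal{X} = \mathbb{R}^n$).

$$\begin{aligned}
& [f(\boldsymbol{\tau}(\mathbf{x}, \cdot)) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}) \\
&= \left[ \left( \frac{1}{(2\pi)^n} \int_{\Omega} \hat{f}(\boldsymbol{\omega})\, e^{i \boldsymbol{\omega}^T \boldsymbol{\tau}(\mathbf{x}, \cdot)}\, d\boldsymbol{\omega} \right) \star k(\cdot\,; \sigma^2) \right](\boldsymbol{\theta}) \\
&= \frac{1}{(2\pi)^n} \int_{\Theta} \left( \int_{\Omega} \hat{f}(\boldsymbol{\omega})\, e^{i \boldsymbol{\omega}^T \boldsymbol{\tau}(\mathbf{x}, \mathbf{t})}\, d\boldsymbol{\omega} \right) k(\mathbf{t} - \boldsymbol{\theta}; \sigma^2)\, d\mathbf{t} \\
&= \frac{1}{(2\pi)^n} \int_{\Omega} \hat{f}(\boldsymbol{\omega}) \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T \boldsymbol{\tau}(\mathbf{x}, \mathbf{t})}\; k(\mathbf{t} - \boldsymbol{\theta}; \sigma^2)\, d\mathbf{t} \right) d\boldsymbol{\omega} \\
&= \frac{1}{(2\pi)^n} \int_{\mathcal{X}} f(\mathbf{y}) \left( \int_{\Omega} e^{-i \boldsymbol{\omega}^T \mathbf{y}} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T \boldsymbol{\tau}(\mathbf{x}, \mathbf{t})}\; k(\mathbf{t} - \boldsymbol{\theta}; \sigma^2)\, d\mathbf{t} \right) d\boldsymbol{\omega} \right) d\mathbf{y} \qquad (6) \\
&= \frac{1}{(2\pi)^n} \int_{\mathcal{X}} f(\mathbf{y}) \left( \int_{\Omega} \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \mathbf{t}) - \mathbf{y})}\; k(\mathbf{t} - \boldsymbol{\theta}; \sigma^2)\, d\mathbf{t}\, d\boldsymbol{\omega} \right) d\mathbf{y} \\
&= \int_{\mathcal{X}} f(\mathbf{y})\, u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})\, d\mathbf{y}, \qquad (7)
\end{aligned}$$

where (6) uses the Parseval theorem, and (7) uses the proposition's assumption (5).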

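Proposition 2 Suppose $f_1 = \sum_{k=1}^{p} a_k\, \phi(\mathbf{y}; \mathbf{x}_k, \delta_k)$, where $\phi(\mathbf{x}; \mathbf{x}_k, \delta_k) = e^{-\frac{\|\mathbf{x} - \mathbf{x}_k\|^2}{2\delta_k^2}}$. Assume that $u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})$ is Gaussian in the variable $\mathbf{y}$, i.e. $u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y}) = k(\boldsymbol{\tau} - \mathbf{y}; s^2)$ for some mean $\boldsymbol{\tau}$ and variance $s^2$ that do not depend on $\mathbf{y}$. Then the following identity holds.

$$\int_{\mathcal{X}} f_1(\mathbf{y})\, u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})\, d\mathbf{y} = \sum_{i=1}^{p} a_i \left( \frac{\delta_i}{\sqrt{\delta_i^2 + s^2}} \right)^{n} e^{-\frac{\|\boldsymbol{\tau} - \mathbf{x}_i\|^2}{2(\delta_i^2 + s^2)}}.$$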


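Proof

$$\begin{aligned}
& \int_{\mathcal{X}} f_1(\mathbf{y})\, u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}, \mathbf{x}, \mathbf{y})\, d\mathbf{y} \\
&= \int_{\mathcal{X}} f_1(\mathbf{y})\, k(\boldsymbol{\tau} - \mathbf{y}; s^2)\, d\mathbf{y} \\
&= \int_{\mathbb{R}^n} \left( \sum_{k=1}^{p} a_k\, \phi(\mathbf{y}; \mathbf{x}_k, \delta_k) \right) k(\boldsymbol{\tau} - \mathbf{y}; s^2)\, d\mathbf{y} \\
&= \sum_{k=1}^{p} a_k \left( \int_{\mathbb{R}^n} \phi(\mathbf{y}; \mathbf{x}_k, \delta_k)\, k(\boldsymbol{\tau} - \mathbf{y}; s^2)\, d\mathbf{y} \right) \\
&= \sum_{k=1}^{p} a_k (\delta_k \sqrt{2\pi})^n \left( \int_{\mathbb{R}^n} k(\mathbf{y} - \mathbf{x}_k; \delta_k^2)\, k(\boldsymbol{\tau} - \mathbf{y}; s^2)\, d\mathbf{y} \right) \\
&= \sum_{k=1}^{p} a_k (\delta_k \sqrt{2\pi})^n \left( \int_{\mathbb{R}^n} \frac{e^{-\frac{\|\boldsymbol{\tau} - \mathbf{x}_k\|^2}{2(\delta_k^2 + s^2)}}}{\left(\sqrt{2\pi(\delta_k^2 + s^2)}\right)^n}\; k\!\left(\mathbf{y} - \frac{s^2 \mathbf{x}_k + \delta_k^2 \boldsymbol{\tau}}{\delta_k^2 + s^2};\; \frac{\delta_k^2 s^2}{\delta_k^2 + s^2}\right) d\mathbf{y} \right) \qquad (8) \\
&= \sum_{k=1}^{p} a_k \left( \frac{\delta_k}{\sqrt{\delta_k^2 + s^2}} \right)^{n} e^{-\frac{\|\boldsymbol{\tau} - \mathbf{x}_k\|^2}{2(\delta_k^2 + s^2)}} \left( \int_{\mathbb{R}^n} k\!\left(\mathbf{y} - \frac{s^2 \mathbf{x}_k + \delta_k^2 \boldsymbol{\tau}}{\delta_k^2 + s^2};\; \frac{\delta_k^2 s^2}{\delta_k^2 + s^2}\right) d\mathbf{y} \right) \\
&= \sum_{k=1}^{p} a_k \left( \frac{\delta_k}{\sqrt{\delta_k^2 + s^2}} \right)^{n} e^{-\frac{\|\boldsymbol{\tau} - \mathbf{x}_k\|^2}{2(\delta_k^2 + s^2)}},
\end{aligned}$$

where in (8) we use the Gaussian product result from Proposition 0.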
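To make the closed form of Proposition 2 concrete, here is a hedged one-dimensional ($n = 1$) sketch that compares a brute-force trapezoidal integral of $f_1(y)\,k(\tau - y; s^2)$ with the closed-form sum. The radial basis weights, centers, widths, the Gaussian mean `tau` and variance `s2`, and the integration range are all arbitrary assumptions chosen for illustration.

```python
import math

def k1(x, var):
    """1-D isotropic Gaussian k(x; sigma^2)."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def f1(y, weights, centers, widths):
    """Sum of (unnormalized) Gaussian radial basis functions, as in Proposition 2."""
    return sum(a * math.exp(-(y - c) ** 2 / (2.0 * d * d))
               for a, c, d in zip(weights, centers, widths))

def smoothed_numeric(tau, s2, weights, centers, widths, lo=-40.0, hi=40.0, n=80000):
    """Trapezoidal approximation of the integral of f1(y) * k(tau - y; s^2) over y."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        y = lo + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * f1(y, weights, centers, widths) * k1(tau - y, s2)
    return total * h

def smoothed_closed_form(tau, s2, weights, centers, widths):
    """Closed-form sum of Proposition 2 specialised to n = 1."""
    return sum(a * (d / math.sqrt(d * d + s2))
               * math.exp(-(tau - c) ** 2 / (2.0 * (d * d + s2)))
               for a, c, d in zip(weights, centers, widths))

weights, centers, widths = [1.0, -0.5, 2.0], [-1.0, 0.5, 2.0], [0.3, 0.8, 1.5]
numeric = smoothed_numeric(0.7, 0.49, weights, centers, widths)
closed = smoothed_closed_form(0.7, 0.49, weights, centers, widths)
```

The practical point is that once the image is expanded in Gaussian radial basis functions, the kernel integral costs one exponential per basis function instead of a quadrature.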

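Proposition 3 The regularized objective function $z(\boldsymbol{\theta}, \boldsymbol{\theta}_0, r, \sigma)$ can be written using transformation kernels as follows.

$$\begin{aligned}
z(\boldsymbol{\theta}, \boldsymbol{\theta}_0, r, \sigma) &= [h(\cdot\,, \boldsymbol{\theta}_0, r) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}) \\
&= \int_{\mathcal{X}} \left( k(\boldsymbol{\theta} - \boldsymbol{\theta}_0; r^2 + \sigma^2)\, f_2(\mathbf{x}) \int_{\mathcal{X}} \left( f_1(\mathbf{y})\; u_{\boldsymbol{\tau}, \frac{r\sigma}{\sqrt{r^2 + \sigma^2}}}\!\left( \frac{r^2 \boldsymbol{\theta} + \sigma^2 \boldsymbol{\theta}_0}{r^2 + \sigma^2}, \mathbf{x}, \mathbf{y} \right) \right) d\mathbf{y} \right) d\mathbf{x}.
\end{aligned}$$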

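Proof For computing $z$, we proceed as below.

$$\begin{aligned}
& z(\boldsymbol{\theta}, \boldsymbol{\theta}_0, r, \sigma) \\
&= [h(\cdot\,, \boldsymbol{\theta}_0, r) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}) \\
&= \left[ \left( \int_{\mathcal{X}} \Big( k(\cdot - \boldsymbol{\theta}_0; r^2)\, f_1(\boldsymbol{\tau}(\mathbf{x}; \cdot))\, f_2(\mathbf{x}) \Big)\, d\mathbf{x} \right) \star k(\cdot\,; \sigma^2) \right](\boldsymbol{\theta}) \\
&= \int_{\mathcal{X}} \left( f_2(\mathbf{x}) \left[ \Big( k(\cdot - \boldsymbol{\theta}_0; r^2)\, f_1(\boldsymbol{\tau}(\mathbf{x}; \cdot)) \Big) \star k(\cdot\,; \sigma^2) \right](\boldsymbol{\theta}) \right) d\mathbf{x} \\
&= \int_{\mathcal{X}} \left( f_2(\mathbf{x}) \int_{\Theta} \Big( k(\boldsymbol{\theta}_0 - \mathbf{t}; r^2)\, f_1(\boldsymbol{\tau}(\mathbf{x}; \mathbf{t}))\, k(\boldsymbol{\theta} - \mathbf{t}; \sigma^2) \Big)\, d\mathbf{t} \right) d\mathbf{x} \\
&= \int_{\mathcal{X}} \left( f_2(\mathbf{x}) \int_{\Theta} \left( f_1(\boldsymbol{\tau}(\mathbf{x}; \mathbf{t}))\, \frac{e^{-\frac{\|\boldsymbol{\theta} - \boldsymbol{\theta}_0\|^2}{2(r^2 + \sigma^2)}}}{\left(\sqrt{2\pi(r^2 + \sigma^2)}\right)^m}\; k\!\left( \mathbf{t} - \frac{\sigma^2 \boldsymbol{\theta}_0 + r^2 \boldsymbol{\theta}}{r^2 + \sigma^2};\; \frac{r^2 \sigma^2}{r^2 + \sigma^2} \right) \right) d\mathbf{t} \right) d\mathbf{x} \\
&= \int_{\mathcal{X}} \left( \frac{e^{-\frac{\|\boldsymbol{\theta} - \boldsymbol{\theta}_0\|^2}{2(r^2 + \sigma^2)}}}{\left(\sqrt{2\pi(r^2 + \sigma^2)}\right)^m}\, f_2(\mathbf{x}) \int_{\mathcal{X}} \left( f_1(\mathbf{y})\; u_{\boldsymbol{\tau}, \frac{r\sigma}{\sqrt{r^2 + \sigma^2}}}\!\left( \frac{r^2 \boldsymbol{\theta} + \sigma^2 \boldsymbol{\theta}_0}{r^2 + \sigma^2}, \mathbf{x}, \mathbf{y} \right) \right) d\mathbf{y} \right) d\mathbf{x}.
\end{aligned}$$

Here the fifth line uses the Gaussian product result from Proposition 0, and the last line uses the definition of the transformation kernel in (2). Thus, the regularized objective function from (3) leads to the following result.

$$\begin{aligned}
z(\boldsymbol{\theta}, \boldsymbol{\theta}_0, r, \sigma) &= [h(\cdot\,, \boldsymbol{\theta}_0, r) \star k(\cdot\,; \sigma^2)](\boldsymbol{\theta}) \\
&= \int_{\mathcal{X}} \left( k(\boldsymbol{\theta} - \boldsymbol{\theta}_0; r^2 + \sigma^2)\, f_2(\mathbf{x}) \int_{\mathcal{X}} \left( f_1(\mathbf{y})\; u_{\boldsymbol{\tau}, \frac{r\sigma}{\sqrt{r^2 + \sigma^2}}}\!\left( \frac{r^2 \boldsymbol{\theta} + \sigma^2 \boldsymbol{\theta}_0}{r^2 + \sigma^2}, \mathbf{x}, \mathbf{y} \right) \right) d\mathbf{y} \right) d\mathbf{x}.
\end{aligned}$$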

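4 Derivation of Affine and Homography Kernels

Proposition 4 Suppose $n \ge 1$ is some integer and let $t : \mathbb{R}^n \to (\mathbb{R} - \{0\})$. Then for any real $n \times n$ matrix $\mathbf{A}^*$ and any real $n \times 1$ vectors $\mathbf{b}^*$, $\mathbf{x}$, and $\mathbf{y}$, the following identity holds:

$$(2\pi)^{-n} \int_{\Omega} \int_{\mathcal{A}} \int_{\mathcal{B}} e^{\, i \frac{\boldsymbol{\omega}^T \mathbf{A}\mathbf{x} + \boldsymbol{\omega}^T \mathbf{b}}{t(\mathbf{x})} - i \boldsymbol{\omega}^T \mathbf{y}}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b}\, d\boldsymbol{\omega} = k\!\left( \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{t(\mathbf{x})} - \mathbf{y};\; \frac{\sigma^2 (1 + \|\mathbf{x}\|^2)}{t^2(\mathbf{x})} \right),$$

where $\Omega = \mathcal{B} = \mathbb{R}^n$, $\mathcal{A} = \mathbb{R}^n \times \mathbb{R}^n$, and $k_\sigma(\cdot) \triangleq k(\cdot\,; \sigma^2)$.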

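Proof We first evaluate the integrals over $\mathbf{A}$ and $\mathbf{b}$ for a fixed $\boldsymbol{\omega}$. We proceed as below,

$$\begin{aligned}
& \int_{\mathcal{A}} \int_{\mathcal{B}} e^{\, i \frac{\boldsymbol{\omega}^T \mathbf{A}\mathbf{x} + \boldsymbol{\omega}^T \mathbf{b}}{t(\mathbf{x})} - i \boldsymbol{\omega}^T \mathbf{y}}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b} \\
&= \int_{\mathcal{A}} \int_{\mathcal{B}} e^{\, i \frac{\sum_{j=1}^{n} \sum_{k=1}^{n} \omega_j a_{jk} x_k + \sum_{k=1}^{n} \omega_k b_k}{t(\mathbf{x})} - i \sum_{k=1}^{n} \omega_k y_k}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b} \\
&= e^{-i \sum_{j=1}^{n} \omega_j y_j} \prod_{j=1}^{n} \left( \int_{\mathcal{B}_j} e^{\, i \frac{\omega_j}{t(\mathbf{x})} b_j}\, k_\sigma(b_j - b_j^*)\, db_j \right) \prod_{j=1}^{n} \prod_{k=1}^{n} \left( \int_{\mathcal{A}_{jk}} e^{\, i \frac{\omega_j x_k}{t(\mathbf{x})} a_{jk}}\, k_\sigma(a_{jk} - a_{jk}^*)\, da_{jk} \right) \\
&= e^{-i \sum_{j=1}^{n} \omega_j y_j} \prod_{j=1}^{n} \left( e^{\, i \frac{\omega_j}{t(\mathbf{x})} b_j^* + \frac{1}{2} \sigma^2 \left( \frac{i \omega_j}{t(\mathbf{x})} \right)^2} \right) \prod_{j=1}^{n} \prod_{k=1}^{n} \left( e^{\, i \frac{\omega_j x_k}{t(\mathbf{x})} a_{jk}^* + \frac{1}{2} \sigma^2 \left( \frac{i \omega_j x_k}{t(\mathbf{x})} \right)^2} \right), \qquad (9)
\end{aligned}$$

where (9) uses the identity $\int_{\mathbb{R}} e^{a x}\, k_\sigma(x - x^*)\, dx = e^{a x^* + \frac{1}{2} \sigma^2 a^2}$. We proceed by factorizing $\omega_j$ and $\omega_j^2$ in the exponent as follows.

$$\begin{aligned}
& \int_{\mathcal{A}} \int_{\mathcal{B}} e^{\, i \frac{\boldsymbol{\omega}^T \mathbf{A}\mathbf{x} + \boldsymbol{\omega}^T \mathbf{b}}{t(\mathbf{x})} - i \boldsymbol{\omega}^T \mathbf{y}}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b} \\
&= \prod_{j=1}^{n} e^{-i \omega_j y_j + i \frac{\omega_j}{t(\mathbf{x})} b_j^* + \frac{1}{2} \sigma^2 \left( \frac{i \omega_j}{t(\mathbf{x})} \right)^2 + i \frac{\left( \sum_{k=1}^{n} a_{jk}^* x_k \right)}{t(\mathbf{x})} \omega_j + \frac{1}{2} \sigma^2 \frac{\sum_{k=1}^{n} x_k^2}{t^2(\mathbf{x})} (i \omega_j)^2} \\
&= \prod_{j=1}^{n} e^{\, i \omega_j \frac{b_j^* - y_j t(\mathbf{x}) + \sum_{k=1}^{n} a_{jk}^* x_k}{t(\mathbf{x})} - \frac{1}{2} \omega_j^2 \frac{\sigma^2 \left( 1 + \sum_{k=1}^{n} x_k^2 \right)}{t^2(\mathbf{x})}}. \qquad (10)
\end{aligned}$$

Now dividing both sides by $(2\pi)^n$ and integrating w.r.t. $\boldsymbol{\omega}$, we obtain the following.

$$\begin{aligned}
& (2\pi)^{-n} \int_{\Omega} \int_{\mathcal{A}} \int_{\mathcal{B}} e^{\, i \frac{\boldsymbol{\omega}^T \mathbf{A}\mathbf{x} + \boldsymbol{\omega}^T \mathbf{b}}{t(\mathbf{x})} - i \boldsymbol{\omega}^T \mathbf{y}}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b}\, d\boldsymbol{\omega} \qquad (11) \\
&= (2\pi)^{-n} \int_{\Omega} \prod_{j=1}^{n} e^{\, i \omega_j \frac{b_j^* - y_j t(\mathbf{x}) + \sum_{k=1}^{n} a_{jk}^* x_k}{t(\mathbf{x})} - \frac{1}{2} \omega_j^2 \frac{\sigma^2 \left( 1 + \sum_{k=1}^{n} x_k^2 \right)}{t^2(\mathbf{x})}}\, d\boldsymbol{\omega} \\
&= \prod_{j=1}^{n} \left( \int_{\Omega_j} (2\pi)^{-1}\, e^{\, i \omega_j \frac{b_j^* - y_j t(\mathbf{x}) + \sum_{k=1}^{n} a_{jk}^* x_k}{t(\mathbf{x})} - \frac{1}{2} \omega_j^2 \frac{\sigma^2 \left( 1 + \sum_{k=1}^{n} x_k^2 \right)}{t^2(\mathbf{x})}}\, d\omega_j \right) \\
&= \prod_{j=1}^{n} \left( k\!\left( \frac{b_j^* - y_j t(\mathbf{x}) + \sum_{k=1}^{n} a_{jk}^* x_k}{t(\mathbf{x})};\; \frac{\sigma^2 \left( 1 + \sum_{k=1}^{n} x_k^2 \right)}{t^2(\mathbf{x})} \right) \right) \qquad (12) \\
&= k\!\left( \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{t(\mathbf{x})} - \mathbf{y};\; \frac{\sigma^2 (1 + \|\mathbf{x}\|^2)}{t^2(\mathbf{x})} \right),
\end{aligned}$$

where (12) uses the identity $(2\pi)^{-1} \int_{\mathbb{R}} e^{\, i \omega x - \frac{\omega^2 y}{2}}\, d\omega = k(x; y)$ for $y > 0$.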
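The two one-dimensional identities that the proof relies on, in steps (9) and (12), can be checked by simple quadrature. The following sketch is an illustration only: the trapezoidal helper, the integration ranges (twelve standard deviations), and all numeric values are assumptions, and `a` is taken purely imaginary to mimic the exponents that appear in (9).

```python
import cmath
import math

def k1(x, var):
    """1-D isotropic Gaussian k(x; sigma^2)."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def trapezoid(func, lo, hi, n):
    """Composite trapezoidal rule; func may return complex values."""
    h = (hi - lo) / n
    total = 0.5 * (func(lo) + func(hi))
    for i in range(1, n):
        total += func(lo + i * h)
    return total * h

sigma, x_star, a = 0.8, 0.3, 1.7j   # a = i * (some frequency), as in the exponent of (9)
var = sigma * sigma

# Identity used in (9): integral of e^{a x} k_sigma(x - x*) dx = e^{a x* + sigma^2 a^2 / 2}.
lhs_9 = trapezoid(lambda x: cmath.exp(a * x) * k1(x - x_star, var),
                  x_star - 12.0 * sigma, x_star + 12.0 * sigma, 40000)
rhs_9 = cmath.exp(a * x_star + 0.5 * var * a * a)

# Identity used in (12): (2 pi)^{-1} * integral of e^{i w x - w^2 y / 2} dw = k(x; y), y > 0.
x_val, y_val = 0.9, 0.5
width = 12.0 / math.sqrt(y_val)
lhs_12 = trapezoid(lambda w: cmath.exp(1j * w * x_val - 0.5 * w * w * y_val),
                   -width, width, 40000) / (2.0 * math.pi)
rhs_12 = k1(x_val, y_val)
```

The first identity is the moment generating function of a Gaussian evaluated at a complex argument; the second is the inverse Fourier transform of a Gaussian under the convention defined earlier.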

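Lemma 5 (Derivation of Affine Kernel) Suppose $\mathbf{x} \in \mathbb{R}^n$, where $n \ge 1$ is some integer. The kernel $u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y})$ for the affine transformation $\boldsymbol{\tau}(\mathbf{x}) = \mathbf{A}^* \mathbf{x} + \mathbf{b}^*$ is equal to the following expression:

$$k\!\left( \mathbf{A}^* \mathbf{x} + \mathbf{b}^* - \mathbf{y};\; \sigma^2 (1 + \|\mathbf{x}\|^2) \right),$$

where $\mathbf{A}^*$ is any $n \times n$ real matrix and $\mathbf{b}^*$ and $\mathbf{y}$ are any $n \times 1$ real vectors.

Proof By (5) from Proposition 1, any $u$ that satisfies the following equation is a kernel for $\boldsymbol{\tau}$.

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) = \frac{1}{(2\pi)^n} \int_{\Omega} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \boldsymbol{\theta}) - \mathbf{y})}\; k_\sigma(\boldsymbol{\theta} - \boldsymbol{\theta}^*)\, d\boldsymbol{\theta} \right) d\boldsymbol{\omega}.$$

We proceed with computing $u$ as follows:

$$\begin{aligned}
u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) &= \frac{1}{(2\pi)^n} \int_{\Omega} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \boldsymbol{\theta}) - \mathbf{y})}\; k_\sigma(\boldsymbol{\theta} - \boldsymbol{\theta}^*)\, d\boldsymbol{\theta} \right) d\boldsymbol{\omega} \\
&= \frac{1}{(2\pi)^n} \int_{\Omega} \int_{\mathcal{A}} \int_{\mathcal{B}} e^{i \boldsymbol{\omega}^T (\mathbf{A}\mathbf{x} + \mathbf{b} - \mathbf{y})}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b}\, d\boldsymbol{\omega} \qquad (13) \\
&= k\!\left( \mathbf{A}^* \mathbf{x} + \mathbf{b}^* - \mathbf{y};\; \sigma^2 (1 + \|\mathbf{x}\|^2) \right),
\end{aligned}$$

where (13) applies Proposition 4 with the particular choice of $t(\mathbf{x}) = 1$.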
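One way to read the affine kernel (an interpretation offered here, not a statement from the paper) is as the density at $\mathbf{y}$ of $\mathbf{A}\mathbf{x} + \mathbf{b}$ when every entry of $\mathbf{A}$ and $\mathbf{b}$ is perturbed by independent Gaussian noise of standard deviation $\sigma$ around $\mathbf{A}^*$ and $\mathbf{b}^*$. Under that reading, each coordinate of $\mathbf{A}\mathbf{x} + \mathbf{b}$ should have mean $(\mathbf{A}^*\mathbf{x} + \mathbf{b}^*)_j$ and variance $\sigma^2(1 + \|\mathbf{x}\|^2)$. The Monte Carlo sketch below checks this for $n = 2$; the sample size, seed, and numeric values are arbitrary assumptions.

```python
import random

def sample_affine_image(A_star, b_star, x, sigma, rng):
    """Draw A and b with i.i.d. Gaussian perturbations of std sigma and return A x + b."""
    n = len(x)
    out = []
    for j in range(n):
        value = rng.gauss(b_star[j], sigma)
        for kk in range(n):
            value += rng.gauss(A_star[j][kk], sigma) * x[kk]
        out.append(value)
    return out

rng = random.Random(0)                      # fixed seed for reproducibility
A_star = [[1.2, -0.3], [0.4, 0.9]]          # illustrative values (assumptions)
b_star = [0.5, -1.0]
x = [1.0, 2.0]
sigma = 0.5
num_samples = 100000

samples = [sample_affine_image(A_star, b_star, x, sigma, rng) for _ in range(num_samples)]

# Mean and variance predicted by the affine kernel of Lemma 5.
predicted_mean = [sum(A_star[j][kk] * x[kk] for kk in range(2)) + b_star[j] for j in range(2)]
predicted_var = sigma ** 2 * (1.0 + sum(v * v for v in x))   # sigma^2 (1 + ||x||^2)

empirical_mean = [sum(s[j] for s in samples) / num_samples for j in range(2)]
empirical_var = [sum((s[j] - empirical_mean[j]) ** 2 for s in samples) / num_samples
                 for j in range(2)]
```

The empirical moments should approach the predicted ones as the number of samples grows; note how the blur variance increases with $\|\mathbf{x}\|^2$, so points far from the origin are smoothed more strongly than points near it.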

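Proposition 6 The following indefinite integral identities hold.

$$\forall t \in \mathbb{R},\ \forall c \in \mathbb{R},\ \forall p_1 \in \mathbb{R},\ \forall p_2 \in \mathbb{R}_{++} :$$

$$\begin{aligned}
\int e^{-p_2 t^2 + p_1 t}\, dt &= \frac{1}{2} \sqrt{\frac{\pi}{p_2}}\; e^{\frac{p_1^2}{4 p_2}}\, \operatorname{erf}\!\left( \frac{2 p_2 t - p_1}{2 \sqrt{p_2}} \right) + c \\
\int t\, e^{-p_2 t^2 + p_1 t}\, dt &= \frac{p_1 \sqrt{\pi}}{4 p_2 \sqrt{p_2}}\; e^{\frac{p_1^2}{4 p_2}}\, \operatorname{erf}\!\left( \frac{2 p_2 t - p_1}{2 \sqrt{p_2}} \right) - \frac{1}{2 p_2}\, e^{-p_2 t^2 + t p_1} + c \\
\int t^2\, e^{-p_2 t^2 + p_1 t}\, dt &= \frac{\sqrt{\pi}}{8 p_2^2 \sqrt{p_2}}\, (2 p_2 + p_1^2)\; e^{\frac{p_1^2}{4 p_2}}\, \operatorname{erf}\!\left( \frac{2 p_2 t - p_1}{2 \sqrt{p_2}} \right) - \frac{p_1 + 2 p_2 t}{4 p_2^2}\, e^{-p_2 t^2 + t p_1} + c.
\end{aligned}$$

Proof The correctness of these identities can be easily checked by differentiating the RHS w.r.t. $t$ and observing that it becomes equal to the integrand of the LHS. Recall that $\frac{d}{dt} \operatorname{erf}(t) = \frac{2}{\sqrt{\pi}}\, e^{-t^2}$.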


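Corollary 7 The following definite integral identities hold.

$$\forall p_1 \in \mathbb{R},\ \forall p_2 \in \mathbb{R}_{++} :$$

$$\begin{aligned}
\int_{\mathbb{R}} e^{-p_2 t^2 + p_1 t}\, dt &= \sqrt{\frac{\pi}{p_2}}\; e^{\frac{p_1^2}{4 p_2}} \\
\int_{\mathbb{R}} t\, e^{-p_2 t^2 + p_1 t}\, dt &= \frac{p_1}{2 p_2} \sqrt{\frac{\pi}{p_2}}\; e^{\frac{p_1^2}{4 p_2}} \\
\int_{\mathbb{R}} t^2\, e^{-p_2 t^2 + p_1 t}\, dt &= \frac{\sqrt{\pi}}{4 p_2^2 \sqrt{p_2}}\, (2 p_2 + p_1^2)\; e^{\frac{p_1^2}{4 p_2}}.
\end{aligned}$$

Proof Using the identities for their indefinite counterparts provided in Proposition 6, these definite integrals are easily computed by subtracting their values at the limits $t \to \pm\infty$. Note that $\lim_{t \to \pm\infty} \operatorname{erf}(t) = \pm 1$ and that $\lim_{t \to \pm\infty} f(t) \exp(-p_2 t^2 + p_1 t) = 0$, where $p_2 > 0$ and $f : \mathbb{R} \to \mathbb{R}$ is such that $f(t)$ is a polynomial in $t$.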
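The three definite integrals of Corollary 7 are easy to confirm numerically. The sketch below, with arbitrarily chosen `p1` and `p2` and an assumed finite integration range that is wide enough for the integrand to have decayed, compares a trapezoidal approximation with the closed forms.

```python
import math

def moment_numeric(power, p1, p2, lo=-20.0, hi=20.0, n=80000):
    """Trapezoidal approximation of the integral of t^power * exp(-p2 t^2 + p1 t)."""
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        t = lo + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * (t ** power) * math.exp(-p2 * t * t + p1 * t)
    return total * h

def moment_closed_form(power, p1, p2):
    """Closed forms of Corollary 7 for power = 0, 1, 2 (requires p2 > 0)."""
    base = math.sqrt(math.pi / p2) * math.exp(p1 * p1 / (4.0 * p2))
    if power == 0:
        return base
    if power == 1:
        return base * p1 / (2.0 * p2)
    if power == 2:
        return base * (2.0 * p2 + p1 * p1) / (4.0 * p2 * p2)
    raise ValueError("only powers 0, 1, 2 are covered by Corollary 7")

p1, p2 = 0.7, 1.3   # illustrative values (assumptions)
pairs = [(moment_numeric(j, p1, p2), moment_closed_form(j, p1, p2)) for j in range(3)]
```

Each pair in `pairs` holds the numeric and closed-form value for one power of $t$; the two entries should agree closely.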

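Lemma 8 (Derivation of Homography Kernel) Suppose $\mathbf{x} \in \mathbb{R}^2$. The kernel $u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y})$ for the homography transformation $\boldsymbol{\tau}(\mathbf{x}) = (\mathbf{A}^* \mathbf{x} + \mathbf{b}^*)(1 + \mathbf{c}^{*T} \mathbf{x})^{-1}$ is equal to the following expression:

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) = q\, e^{-p},$$

where the auxiliary variables are as below:

$$\begin{aligned}
z_0 &\triangleq \frac{1}{1 + \|\mathbf{x}\|^2} \\
z_1 &\triangleq 1 + \mathbf{x}^T \mathbf{c}^* \\
\mathbf{v} &\triangleq \mathbf{A}^* \mathbf{x} + \mathbf{b}^* \\
q &\triangleq z_0\, \frac{(z_0 \|\mathbf{x}\|^2\, \mathbf{y}^T \mathbf{v} + z_1)^2 + \sigma^2 \|\mathbf{x}\|^2 (1 + z_0 \|\mathbf{x}\|^2 \|\mathbf{y}\|^2)}{2 \pi \sigma^2 (1 + z_0 \|\mathbf{x}\|^2 \|\mathbf{y}\|^2)^{\frac{5}{2}}} \\
p &\triangleq \frac{\|z_1 \mathbf{y} - \mathbf{v}\|^2 + z_0 \|\mathbf{x}\|^2 (v_2 y_1 - v_1 y_2)^2}{2 \sigma^2 \left( 1 + \|\mathbf{x}\|^2 (1 + \|\mathbf{y}\|^2) \right)}.
\end{aligned}$$

Here $\mathbf{A}^*$ is any $2 \times 2$ real matrix and $\mathbf{b}^*$, $\mathbf{c}^*$, and $\mathbf{y}$ are any $2 \times 1$ real vectors.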

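Proof By (5) from Proposition 1, any $u$ that satisfies the following equation is a kernel for $\boldsymbol{\tau}$.

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) = \frac{1}{(2\pi)^n} \int_{\Omega} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \boldsymbol{\theta}) - \mathbf{y})}\; k_\sigma(\boldsymbol{\theta} - \boldsymbol{\theta}^*)\, d\boldsymbol{\theta} \right) d\boldsymbol{\omega}.$$

We proceed with computing $u$ as follows:

$$\begin{aligned}
& u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) \\
&= \frac{1}{(2\pi)^n} \int_{\Omega} \left( \int_{\Theta} e^{i \boldsymbol{\omega}^T (\boldsymbol{\tau}(\mathbf{x}, \boldsymbol{\theta}) - \mathbf{y})}\; k_\sigma(\boldsymbol{\theta} - \boldsymbol{\theta}^*)\, d\boldsymbol{\theta} \right) d\boldsymbol{\omega} \\
&= \int_{\mathcal{C}} \left( \frac{1}{(2\pi)^n} \int_{\Omega} \int_{\mathcal{A}} \int_{\mathcal{B}} e^{i \boldsymbol{\omega}^T \left( \frac{\mathbf{A}\mathbf{x} + \mathbf{b}}{1 + \mathbf{c}^T \mathbf{x}} - \mathbf{y} \right)}\; k_\sigma(\mathbf{A} - \mathbf{A}^*)\, k_\sigma(\mathbf{b} - \mathbf{b}^*)\, d\mathbf{A}\, d\mathbf{b}\, d\boldsymbol{\omega} \right) k_\sigma(\mathbf{c} - \mathbf{c}^*)\, d\mathbf{c} \\
&= \int_{\mathcal{C}} \left( k\!\left( \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{1 + \mathbf{c}^T \mathbf{x}} - \mathbf{y};\; \frac{\sigma^2 (1 + \|\mathbf{x}\|^2)}{(1 + \mathbf{c}^T \mathbf{x})^2} \right) k_\sigma(\mathbf{c} - \mathbf{c}^*) \right) d\mathbf{c} \qquad (14) \\
&= \int_{\mathcal{C}_2} \left( \int_{\mathcal{C}_1} \left( k\!\left( \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{1 + \mathbf{c}^T \mathbf{x}} - \mathbf{y};\; \frac{\sigma^2 (1 + \|\mathbf{x}\|^2)}{(1 + \mathbf{c}^T \mathbf{x})^2} \right) k_\sigma(c_1 - c_1^*) \right) dc_1 \right) k_\sigma(c_2 - c_2^*)\, dc_2,
\end{aligned}$$

where (14) applies Proposition 4 with the particular choice of $t(\mathbf{x}) = 1 + \mathbf{c}^T \mathbf{x}$, and $\mathcal{C} = \mathcal{C}_1 \times \mathcal{C}_2$ with $\mathcal{C}_1 = \mathcal{C}_2 = \mathbb{R}$.

We continue by first computing the inner integral, i.e. the one w.r.t. $c_1$. To reduce clutter, we introduce the following auxiliary variables, which are independent of $c_1$.

$$\begin{aligned}
\mathbf{v} &\triangleq \mathbf{A}^* \mathbf{x} + \mathbf{b}^* \\
s &\triangleq 1 + c_2 x_2 \\
z_0 &\triangleq \frac{1}{\sigma^2 (1 + \|\mathbf{x}\|^2)} \\
z_1 &\triangleq \frac{1}{2 \pi \sigma \sqrt{2\pi}}.
\end{aligned}$$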

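Now we proceed with integration w.r.t. $c_1$ as below.

$$\begin{aligned}
& \int_{\mathcal{C}_1} k_\sigma(c_1 - c_1^*)\; k\!\left( \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{1 + \mathbf{c}^T \mathbf{x}} - \mathbf{y};\; \frac{\sigma^2 (1 + \|\mathbf{x}\|^2)}{(1 + \mathbf{c}^T \mathbf{x})^2} \right) dc_1 \\
&= \int_{\mathcal{C}_1} \frac{1}{\sqrt{2\pi}\,\sigma}\; \frac{(1 + \mathbf{c}^T \mathbf{x})^2}{2 \pi \sigma^2 (1 + \|\mathbf{x}\|^2)}\; e^{-\frac{(c_1 - c_1^*)^2}{2 \sigma^2} - \frac{(1 + \mathbf{c}^T \mathbf{x})^2}{2 \sigma^2 (1 + \|\mathbf{x}\|^2)} \left\| \frac{\mathbf{A}^* \mathbf{x} + \mathbf{b}^*}{1 + \mathbf{c}^T \mathbf{x}} - \mathbf{y} \right\|^2}\, dc_1 \\
&= z_0 z_1 \int_{\mathcal{C}_1} (q_0 + c_1 q_1 + c_1^2 q_2)\; e^{-p_2 c_1^2 + p_1 c_1 + p_0}\, dc_1 \\
&= z_0 z_1 \sqrt{\frac{\pi}{p_2}}\; e^{p_0 + \frac{p_1^2}{4 p_2}} \left( q_0 + q_1 \frac{p_1}{2 p_2} + q_2 \frac{1}{4 p_2^2} (2 p_2 + p_1^2) \right), \qquad (15)
\end{aligned}$$

where (15) uses Corollary 7 with the particular choice of $p_i$ and $q_i$ for $i = 0, 1, 2$ given below. Obviously the following $p_2$ satisfies $p_2 > 0$. Also note that $p_i$ and $q_i$ are independent of the integration variable $c_1$.

$$\begin{aligned}
q_0 &\triangleq s^2 \\
q_1 &\triangleq 2 x_1 s \\
q_2 &\triangleq x_1^2 \\
p_0 &\triangleq -\frac{c_1^{*2}}{2 \sigma^2} - \frac{z_0}{2} \|\mathbf{v} - s \mathbf{y}\|^2 \\
p_1 &\triangleq \frac{c_1^*}{\sigma^2} + z_0 \left( x_1\, \mathbf{y}^T (\mathbf{v} - s \mathbf{y}) \right) \\
p_2 &\triangleq \frac{1}{2 \sigma^2} + \frac{z_0 x_1^2 \|\mathbf{y}\|^2}{2}.
\end{aligned}$$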

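Combining the iterated integral that follows (14) with (15) gives the following.

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) = \int_{\mathcal{C}_2} k_\sigma(c_2 - c_2^*)\; z_0 z_1 \sqrt{\frac{\pi}{p_2}}\; e^{p_0 + \frac{p_1^2}{4 p_2}} \left( q_0 + q_1 \frac{p_1}{2 p_2} + q_2 \frac{1}{4 p_2^2} (2 p_2 + p_1^2) \right) dc_2.$$

We can compute the above integral in a similar fashion as shown below.

$$\begin{aligned}
& u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) \\
&= z_0 z_1 \sqrt{\frac{\pi}{p_2}} \int_{\mathcal{C}_2} k_\sigma(c_2 - c_2^*)\; e^{p_0 + \frac{p_1^2}{4 p_2}} \left( q_0 + q_1 \frac{p_1}{2 p_2} + q_2 \frac{1}{4 p_2^2} (2 p_2 + p_1^2) \right) dc_2 \\
&= z_0 z_1 \sqrt{\frac{\pi}{p_2}}\; \frac{1}{\sqrt{2\pi}\,\sigma} \int_{\mathcal{C}_2} e^{p_0 + \frac{p_1^2}{4 p_2} - \frac{(c_2 - c_2^*)^2}{2 \sigma^2}} \left( q_0 + q_1 \frac{p_1}{2 p_2} + q_2 \frac{1}{4 p_2^2} (2 p_2 + p_1^2) \right) dc_2 \\
&= \frac{z_0 z_1 z_2^2}{|x_2|} \sqrt{\frac{\pi}{p_2}} \int_{\mathbb{R}} \frac{1}{\sqrt{2\pi}\,\sigma}\, (Q_0 + s Q_1 + s^2 Q_2)\; e^{-P_2 s^2 + P_1 s + P_0}\, ds \qquad (16) \\
&= \frac{z_0 z_1 z_2^2}{\sigma |x_2|} \sqrt{\frac{\pi}{2 p_2 P_2}}\; e^{P_0 + \frac{P_1^2}{4 P_2}} \left( Q_0 + Q_1 \frac{P_1}{2 P_2} + Q_2 \frac{1}{4 P_2^2} (2 P_2 + P_1^2) \right), \qquad (17)
\end{aligned}$$

where (16) applies the change of variable $s = 1 + x_2 c_2$ to the integral (for $x_2 \neq 0$). Note that $\int_{\mathbb{R}} f(c_2)\, dc_2 = \operatorname{sign}(x_2) \int_{\mathbb{R}} f((s - 1)/x_2)\, ds / x_2 = \frac{1}{|x_2|} \int_{\mathbb{R}} f((s - 1)/x_2)\, ds$. Also, (17) uses Corollary 7 with the particular choice of $z_2$, $P_i$ and $Q_i$ for $i = 0, 1, 2$ given below. Obviously the following $P_2$ satisfies $P_2 > 0$. Also note that $P_i$ and $Q_i$ are independent of the integration variable $s$.

$$\begin{aligned}
z_2 &\triangleq \frac{1}{1 + \sigma^2 x_1^2 z_0 \|\mathbf{y}\|^2} \\
Q_0 &\triangleq \frac{1}{z_2} \sigma^2 x_1^2 + x_1^2 \left( c_1^* + \sigma^2 z_0 x_1\, \mathbf{y}^T \mathbf{v} \right)^2 \\
Q_1 &\triangleq 2 x_1 \left( c_1^* + \sigma^2 z_0 x_1\, \mathbf{y}^T \mathbf{v} \right) \\
Q_2 &\triangleq 1 \\
P_0 &\triangleq -\frac{1}{2 \sigma^2 x_2^2} (1 + c_2^* x_2)^2 - \frac{z_0 z_2}{2} \left( \|\mathbf{v} - c_1^* x_1 \mathbf{y}\|^2 + \sigma^2 z_0 x_1^2 (v_2 y_1 - v_1 y_2)^2 \right) \\
P_1 &\triangleq z_0 z_2\, \mathbf{y}^T (\mathbf{v} - c_1^* x_1 \mathbf{y}) + \frac{1}{\sigma^2 x_2^2} (1 + c_2^* x_2) \\
P_2 &\triangleq \frac{1}{2 \sigma^2 x_2^2} + \frac{z_0 z_2}{2} \|\mathbf{y}\|^2.
\end{aligned}$$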

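In fact, by plugging in the definitions for $z_i$, $P_i$, and $Q_i$ and performing elementary algebraic manipulations, one can write (17) more compactly as the following,

$$u_{\boldsymbol{\tau},\sigma}(\boldsymbol{\theta}^*, \mathbf{x}, \mathbf{y}) = q\, e^{-p},$$

where the auxiliary variables are as below (note that $z_0$ and $z_1$ are redefined here and no longer denote the quantities used in the intermediate steps of the proof):

$$\begin{aligned}
z_0 &\triangleq \frac{1}{1 + \|\mathbf{x}\|^2} \\
z_1 &\triangleq 1 + \mathbf{x}^T \mathbf{c}^* \\
\mathbf{v} &\triangleq \mathbf{A}^* \mathbf{x} + \mathbf{b}^* \\
q &\triangleq z_0\, \frac{(z_0 \|\mathbf{x}\|^2\, \mathbf{y}^T \mathbf{v} + z_1)^2 + \sigma^2 \|\mathbf{x}\|^2 (1 + z_0 \|\mathbf{x}\|^2 \|\mathbf{y}\|^2)}{2 \pi \sigma^2 (1 + z_0 \|\mathbf{x}\|^2 \|\mathbf{y}\|^2)^{\frac{5}{2}}} \\
p &\triangleq \frac{\|z_1 \mathbf{y} - \mathbf{v}\|^2 + z_0 \|\mathbf{x}\|^2 (v_2 y_1 - v_1 y_2)^2}{2 \sigma^2 \left( 1 + \|\mathbf{x}\|^2 (1 + \|\mathbf{y}\|^2) \right)}.
\end{aligned}$$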
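The compact form $q\,e^{-p}$ can be cross-checked against a direct quadrature. The sketch below rests on one assumption that is not spelled out in the text: since $\mathbf{c}$ is Gaussian with mean $\mathbf{c}^*$ and variance $\sigma^2$ per entry, the scalar $s = 1 + \mathbf{c}^T\mathbf{x}$ is Gaussian with mean $1 + \mathbf{c}^{*T}\mathbf{x}$ and variance $\sigma^2\|\mathbf{x}\|^2$, so the two-dimensional integral over $\mathbf{c}$ in (14) collapses to a one-dimensional integral over $s$. Function names and numeric values are illustrative, and the numeric routine requires $\mathbf{x} \neq \mathbf{0}$.

```python
import math

def affine_part(A, b, x):
    """v = A x + b for 2x2 A and 2-vectors b, x."""
    return [A[0][0] * x[0] + A[0][1] * x[1] + b[0],
            A[1][0] * x[0] + A[1][1] * x[1] + b[1]]

def homography_kernel(A, b, c, x, y, sigma):
    """Closed form q * exp(-p) of Lemma 8 (A, b, c play the role of A*, b*, c*)."""
    nx2 = x[0] ** 2 + x[1] ** 2
    ny2 = y[0] ** 2 + y[1] ** 2
    z0 = 1.0 / (1.0 + nx2)
    z1 = 1.0 + x[0] * c[0] + x[1] * c[1]
    v = affine_part(A, b, x)
    yv = y[0] * v[0] + y[1] * v[1]
    d = 1.0 + z0 * nx2 * ny2
    q = z0 * ((z0 * nx2 * yv + z1) ** 2 + sigma ** 2 * nx2 * d) \
        / (2.0 * math.pi * sigma ** 2 * d ** 2.5)
    cross = v[1] * y[0] - v[0] * y[1]
    p = ((z1 * y[0] - v[0]) ** 2 + (z1 * y[1] - v[1]) ** 2 + z0 * nx2 * cross ** 2) \
        / (2.0 * sigma ** 2 * (1.0 + nx2 * (1.0 + ny2)))
    return q * math.exp(-p)

def homography_kernel_numeric(A, b, c, x, y, sigma, n=40000):
    """Trapezoidal quadrature over s = 1 + c^T x (assumes x != 0)."""
    nx2 = x[0] ** 2 + x[1] ** 2
    v = affine_part(A, b, x)
    mean_s = 1.0 + x[0] * c[0] + x[1] * c[1]
    var_s = sigma ** 2 * nx2                # variance of s
    var_y = sigma ** 2 * (1.0 + nx2)        # numerator of the variance in (14)
    std_s = math.sqrt(var_s)
    lo, hi = mean_s - 12.0 * std_s, mean_s + 12.0 * std_s
    h = (hi - lo) / n
    total = 0.0
    for i in range(n + 1):
        s = lo + i * h
        w = 0.5 if i in (0, n) else 1.0
        density_s = math.exp(-(s - mean_s) ** 2 / (2.0 * var_s)) / math.sqrt(2.0 * math.pi * var_s)
        r2 = (v[0] - s * y[0]) ** 2 + (v[1] - s * y[1]) ** 2
        # 2-D Gaussian k(v/s - y; var_y / s^2), rewritten to avoid dividing by s.
        gauss_y = s * s / (2.0 * math.pi * var_y) * math.exp(-r2 / (2.0 * var_y))
        total += w * density_s * gauss_y
    return total * h

A = [[1.1, 0.2], [-0.1, 0.9]]
b = [0.3, -0.2]
c = [0.05, -0.1]
x = [0.7, -0.4]
y = [1.0, -0.5]
sigma = 0.3
closed_value = homography_kernel(A, b, c, x, y, sigma)
numeric_value = homography_kernel_numeric(A, b, c, x, y, sigma)
```

Unlike the affine kernel, the homography kernel is not Gaussian in $\mathbf{y}$: the polynomial factor $q$ and the cross term $(v_2 y_1 - v_1 y_2)^2$ in $p$ both depend on $\mathbf{y}$ in a non-quadratic way.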
