Facebook Beacon Case Study


You might also like

- The Memory Healer ProgramDocument100 pagesThe Memory Healer Programdancingelk2189% (18)

- Methods in Behavioral Research 12th Edition Cozby Solutions Manual DownloadDocument8 pagesMethods in Behavioral Research 12th Edition Cozby Solutions Manual DownloadJeffrey Gliem100% (16)

- Fraley v. Facebook, Inc, Original ComplaintDocument22 pagesFraley v. Facebook, Inc, Original ComplaintjonathanjaffeNo ratings yet

- Perform An Ethical Analysis of FacebookDocument1 pagePerform An Ethical Analysis of FacebookArif Sudibp' Rahmanda83% (6)

- Characteristics of Successful Entrepreneurs: David, C. McclellandDocument15 pagesCharacteristics of Successful Entrepreneurs: David, C. McclellandJavier HernandezNo ratings yet

- Final Examination SEMESTER 20-2A SCHOOLYEAR 2020-2021 Exam Code - (1)Document5 pagesFinal Examination SEMESTER 20-2A SCHOOLYEAR 2020-2021 Exam Code - (1)Andy Gian100% (1)

- Facebook Vs MyspaceDocument13 pagesFacebook Vs MyspaceKiran P. Sankhi100% (1)

- 166 Case Studies Prove Social Media Marketing ROIDocument66 pages166 Case Studies Prove Social Media Marketing ROIInnocomNo ratings yet

- Lazada Philippines Affiliate ProgramDocument10 pagesLazada Philippines Affiliate ProgramCharmaine BlancaflorNo ratings yet

- Twitter and The Russian Street (CNMS WP 2012/1)Document23 pagesTwitter and The Russian Street (CNMS WP 2012/1)Center for the Study of New Media & SocietyNo ratings yet

- Case Analysis: Legal ComplianceDocument5 pagesCase Analysis: Legal ComplianceSanket JamuarNo ratings yet

- Medical Statistics and Demography Made Easy®Document353 pagesMedical Statistics and Demography Made Easy®Dareen Seleem100% (1)

- Facebook BeaconDocument18 pagesFacebook BeaconManpreetKaurNo ratings yet

- MIS Assignment 3Document3 pagesMIS Assignment 3Duaa FatimaNo ratings yet

- Santos, Klaudine Kyle K. (CASE STUDY MIS)Document27 pagesSantos, Klaudine Kyle K. (CASE STUDY MIS)Klaudine SantosNo ratings yet

- Case Study 1Document3 pagesCase Study 1Nashid alamNo ratings yet

- Facebook Values, E-Commerce, and Endless PossibilitiesDocument8 pagesFacebook Values, E-Commerce, and Endless PossibilitiesJay CampbellNo ratings yet

- Facebook Privacy: What Privacy?: Slide - 7 QuestionsDocument6 pagesFacebook Privacy: What Privacy?: Slide - 7 QuestionsabdulbabulNo ratings yet

- Facebook LetterDocument6 pagesFacebook Letterjonathan_skillingsNo ratings yet

- AG Facebook DismissalDocument53 pagesAG Facebook Dismissalthe kingfishNo ratings yet

- Fixing Facebook CaseDocument30 pagesFixing Facebook Casemamo20aoNo ratings yet

- Facebook Market AnalysisDocument10 pagesFacebook Market AnalysisYourMessageMedia100% (35)

- Facebook f8 Platform FAQ FINALDocument3 pagesFacebook f8 Platform FAQ FINALMashable100% (1)

- FacebookDocument25 pagesFacebookRamsheed PulappadiNo ratings yet

- Social Media Research Paper FinalDocument7 pagesSocial Media Research Paper Finalapi-302625537No ratings yet

- Crowd Siren V FacebookDocument28 pagesCrowd Siren V FacebookFindLawNo ratings yet

- WhatsApp in Developing CountriesDocument12 pagesWhatsApp in Developing CountriesHope ChidziwisanoNo ratings yet

- What Is FacebookDocument1 pageWhat Is FacebookCybernet NetwebNo ratings yet

- Management Seminar - Facebook.Document32 pagesManagement Seminar - Facebook.Carolina OcampoNo ratings yet

- Fortune 100 and LinkedInDocument4 pagesFortune 100 and LinkedInkishore234No ratings yet

- Facebook Vs MobiBurnDocument15 pagesFacebook Vs MobiBurnTechCrunchNo ratings yet

- Facebook: Corporate Governance and Compliance ReviewDocument10 pagesFacebook: Corporate Governance and Compliance ReviewChristian RindtoftNo ratings yet

- Facebook Response Naag Letter PDFDocument172 pagesFacebook Response Naag Letter PDFPat PowersNo ratings yet

- Consumer Perception and Attributes of Social Media Marketing in Consumer's Buying Decisions Focused On Body Lotion Users in MyanmarDocument56 pagesConsumer Perception and Attributes of Social Media Marketing in Consumer's Buying Decisions Focused On Body Lotion Users in MyanmarAnggia Dian MayanaNo ratings yet

- FB DocumentationDocument21 pagesFB DocumentationKoushik MuthakanaNo ratings yet

- Com - Pubg.imobiles LogcatDocument31 pagesCom - Pubg.imobiles LogcatFacebookNo ratings yet

- Research Paper On The Study of The Effects of Facebook On StudentsDocument5 pagesResearch Paper On The Study of The Effects of Facebook On StudentsPeach Garcia NuarinNo ratings yet

- Karen Hepp-FacebookDocument21 pagesKaren Hepp-FacebookLaw&CrimeNo ratings yet

- Social Media Marketing-A Study On FacebookDocument13 pagesSocial Media Marketing-A Study On FacebookMarcoNo ratings yet

- Instagram LetterDocument10 pagesInstagram LetterTechCrunchNo ratings yet

- Maximizing The Social Media Potential FoDocument5 pagesMaximizing The Social Media Potential Foruksi lNo ratings yet

- Facebook's PlatformDocument6 pagesFacebook's Platformtech2click100% (2)

- FacebookDocument2 pagesFacebookSatyamNo ratings yet

- Media Matters For America Public Comment - 2024-002-FB-UADocument5 pagesMedia Matters For America Public Comment - 2024-002-FB-UAMedia Matters for AmericaNo ratings yet

- SocialmediaportfolioDocument7 pagesSocialmediaportfolioapi-302558641100% (1)

- Twitter in EducationDocument8 pagesTwitter in EducationBert KokNo ratings yet

- Why Is Facebook So PopularDocument2 pagesWhy Is Facebook So Popularredouane lakbibaNo ratings yet

- MICA Digital Marketing BrochureDocument27 pagesMICA Digital Marketing BrochureMortalNo ratings yet

- How To Set Up The Facebook Business ManagerDocument38 pagesHow To Set Up The Facebook Business ManagerLoukia SamataNo ratings yet

- Case Study MsssDocument27 pagesCase Study MsssyashNo ratings yet

- Studi Kasus FacebookDocument8 pagesStudi Kasus FacebookngellezNo ratings yet

- MISS - Case Study - 20.08Document2 pagesMISS - Case Study - 20.08annesan1007No ratings yet

- Case 5Document4 pagesCase 5Vina100% (1)

- Case Study 4Document4 pagesCase Study 4Nam Phuong NguyenNo ratings yet

- Edmodo Module 03 Activity 02Document3 pagesEdmodo Module 03 Activity 02carlos aldayNo ratings yet

- Case Study Facebook Privacy:What Privacy?Document4 pagesCase Study Facebook Privacy:What Privacy?bui ngoc hoangNo ratings yet

- Describe The Weaknesses of Facebook's Privacy Policies and FeaturesDocument3 pagesDescribe The Weaknesses of Facebook's Privacy Policies and Featurestrnavin1233No ratings yet

- Sma Lab ManualDocument34 pagesSma Lab ManualShreeyash GuravNo ratings yet

- Popescu Catalina Ethics FacebookDocument2 pagesPopescu Catalina Ethics FacebookMihaelaNo ratings yet

- Case Study On FacebookDocument6 pagesCase Study On FacebookAditi WaliaNo ratings yet

- CH 4Document5 pagesCH 4Rahul GNo ratings yet

- Facebook Mediation ProblemDocument2 pagesFacebook Mediation ProblemGopal GaurNo ratings yet

- FacebookDocument3 pagesFacebookAshish RamtekeNo ratings yet

- MIS PresentationDocument9 pagesMIS PresentationLê NgọcNo ratings yet

- BEC1034 Course Plan 2nd 09-10Document6 pagesBEC1034 Course Plan 2nd 09-10miindsurferNo ratings yet

- Review Question On Chapter5 Filter StructureDocument5 pagesReview Question On Chapter5 Filter StructuremiindsurferNo ratings yet

- Tutorial 3bDocument2 pagesTutorial 3bmiindsurferNo ratings yet

- Tutorial 1a: o S 1 2 3 4 1 2 3 4 o S 1 oDocument2 pagesTutorial 1a: o S 1 2 3 4 1 2 3 4 o S 1 omiindsurferNo ratings yet

- Tutorial 3Document3 pagesTutorial 3miindsurferNo ratings yet

- Tutorial 1bDocument2 pagesTutorial 1bmiindsurferNo ratings yet

- 2 Tan 2 4 - 0 Tan 0.72654 2 6 - 0 Tan 1.37638: P P S SDocument2 pages2 Tan 2 4 - 0 Tan 0.72654 2 6 - 0 Tan 1.37638: P P S SmiindsurferNo ratings yet

- Tutorial - 4B: Ect 1016 Circuit Theory T1: 2005-06Document1 pageTutorial - 4B: Ect 1016 Circuit Theory T1: 2005-06miindsurferNo ratings yet

- Tutorial 4ADocument1 pageTutorial 4AmiindsurferNo ratings yet

- Circuit Theory ECT1016 Assignment (05-06)Document3 pagesCircuit Theory ECT1016 Assignment (05-06)miindsurferNo ratings yet

- HRM Tutorial 1 Essay Type QuestionsDocument2 pagesHRM Tutorial 1 Essay Type QuestionsmiindsurferNo ratings yet

- RemezDocument3 pagesRemezmiindsurferNo ratings yet

- The Magnitude Response Using The Bilinear TransformDocument2 pagesThe Magnitude Response Using The Bilinear TransformmiindsurferNo ratings yet

- FB 140330135009 Phpapp01Document7 pagesFB 140330135009 Phpapp01miindsurferNo ratings yet

- Dorais 2Document16 pagesDorais 2miindsurferNo ratings yet

- Serv Businessplanwkbk310Document10 pagesServ Businessplanwkbk310miindsurferNo ratings yet

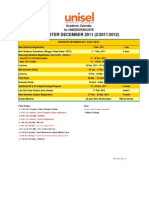

- Academic Calendar-Undergraduate Dec 2011Document1 pageAcademic Calendar-Undergraduate Dec 2011miindsurferNo ratings yet

- Emotions and Moods: EightDocument22 pagesEmotions and Moods: EightmiindsurferNo ratings yet

- EccbcAnalysis of Marketing Plan of Nike and Michael JordanDocument5 pagesEccbcAnalysis of Marketing Plan of Nike and Michael JordanmiindsurferNo ratings yet

- By: Samantha BaboDocument7 pagesBy: Samantha BabomiindsurferNo ratings yet

- L U C. I Fer: P. Blavatsky' and Coj - JlinsDocument4 pagesL U C. I Fer: P. Blavatsky' and Coj - JlinsChockalingam MeenakshisundaramNo ratings yet

- The New Strategic Thinking Robert en 5531Document5 pagesThe New Strategic Thinking Robert en 5531teena6506763No ratings yet

- Criminal Procedure Lesson 3Document34 pagesCriminal Procedure Lesson 3Melencio Silverio Faustino100% (1)

- Socialist Fight No 8Document36 pagesSocialist Fight No 8Gerald J DowningNo ratings yet

- Power System Generation, Transmission and Distribution Prof. D. P. Kothari Centre For Energy Studies Indian Institute of Technology, DelhiDocument19 pagesPower System Generation, Transmission and Distribution Prof. D. P. Kothari Centre For Energy Studies Indian Institute of Technology, DelhiVISHAL TELANGNo ratings yet

- Prevalence and Factors Associated With Self Medication With Antibiotics Among University Students in Moshi Kilimanjaro TanzaniaDocument7 pagesPrevalence and Factors Associated With Self Medication With Antibiotics Among University Students in Moshi Kilimanjaro TanzaniaSamia RoshdyNo ratings yet

- Sts. James The Less and MatthiasDocument3 pagesSts. James The Less and MatthiasCary TolenadaNo ratings yet

- Colonial Stoneware Industry in Charlestown, Mass. Page 1Document1 pageColonial Stoneware Industry in Charlestown, Mass. Page 1Justin W. ThomasNo ratings yet

- Mli102 - Management of Library and Information CentresDocument49 pagesMli102 - Management of Library and Information Centressoda pdfNo ratings yet

- Fsec CR 1537 05Document613 pagesFsec CR 1537 05Amber StrongNo ratings yet

- Caviles V BautistaDocument5 pagesCaviles V BautistaJade Palace TribezNo ratings yet

- Exposed: Jeffrey Gettleman & The New York TimesDocument3 pagesExposed: Jeffrey Gettleman & The New York TimesRick HeizmanNo ratings yet

- Tao Te ChingDocument26 pagesTao Te ChingdrevenackNo ratings yet

- Journalism ModulesDocument24 pagesJournalism ModulesClark BalandanNo ratings yet

- Lesson 3 Moral ActionsDocument33 pagesLesson 3 Moral Actionsjeruel luminariasNo ratings yet

- PertDocument34 pagesPertVikrant ChaudharyNo ratings yet

- Nursing BulletinDocument83 pagesNursing BulletinsrinivasanaNo ratings yet

- Chem Project On Ascorbic AcidDocument16 pagesChem Project On Ascorbic AcidBhupéndér Sharma73% (33)

- Kirishitan Yashiki 切支丹屋敷 - Myōgadani 茗荷谷Document3 pagesKirishitan Yashiki 切支丹屋敷 - Myōgadani 茗荷谷trevorskingleNo ratings yet

- Difference Between Ancient, Medieval and Modern History With Their Detailed ComparisonsDocument1 pageDifference Between Ancient, Medieval and Modern History With Their Detailed ComparisonsKesharkala PckgingNo ratings yet

- Method For Prediction of Micropile Resistance For Slope Stabilization PDFDocument30 pagesMethod For Prediction of Micropile Resistance For Slope Stabilization PDFAnonymous Re62LKaACNo ratings yet

- Paley CommentaryDocument746 pagesPaley CommentaryTrivia Tatia Moonson100% (2)

- Effectiveness of Structured Teaching Programme On Knowledge Regarding Acid Peptic Disease and Its Prevention Among The Industrial WorkersDocument6 pagesEffectiveness of Structured Teaching Programme On Knowledge Regarding Acid Peptic Disease and Its Prevention Among The Industrial WorkersIJAR JOURNALNo ratings yet

- An Apply A Day Keeps The Doctor Away PDFDocument4 pagesAn Apply A Day Keeps The Doctor Away PDFjamesNo ratings yet

- Prodhive: B.A.S.H 5.0Document9 pagesProdhive: B.A.S.H 5.0Goverdhan Gupta 4-Yr B.Tech.: Mechanical Engg., IIT(BHU)No ratings yet

- Stanford Circus Severance MotionDocument14 pagesStanford Circus Severance MotionMark W. BennettNo ratings yet


Facebook Case Study

Nikki Batchelor

Daniel Houston

Suzanne Larson

Sunil Nanjundaram

Wayan Vota

George Washington University School of Business

Business Ethics

Facebook Case Study

In November 2007, social media giant Facebook pushed the limits of online marketing by introducing a controversial marketing tool, Beacon. Facebook used Beacon to track users' browsing data and purchases on partner sites and then broadcast this information to each user's network. The tool immediately drew criticism from the online community, which claimed that the company had violated user privacy and breached its user agreement.

The moral issue of the case centers on privacy concerns about the new online advertising tool, Beacon, which is embedded in a Facebook partner's website (e.g., Blockbuster, Overstock, and The New York Times). The tool recorded a Facebook user's Internet activity and transmitted this activity back to Facebook, which then publicized it across the user's Facebook network. Beacon was applied to all Facebook users by default, and users were then prompted with a discreet opt-out notice to block Beacon's third-party data communication. Unless the user opted out quickly, sensitive user activity was broadcast to the user's News Feed. Users also had to visit each of the 44 Beacon affiliate Web sites, and opt out of the program on each site individually (Gohring, 2008) to stop those partner sites from transmitting user data back to Facebook. Users were not permitted to disable all data-sharing activity, and Beacon tracked activity every time Facebook users accessed a partner website. For a company seeking new revenue to justify its recent exorbitant valuation and to bring additional money to shareholders, the tool promised financial innovation.

What is the choice or decision to be made?

The moral dilemma Facebook faces is how to resolve the conflict between the company's new Beacon product and its own mission statement, which is to ensure that users have jurisdiction over their own private information. In addition, to ensure that Facebook can continue to exist as a free service, its management must determine how to generate revenue from the millions of Facebook users, as the market demands.

Relevant Facts

User privacy - According to the case, Facebook differentiated its service from that of its main competitor, MySpace, by offering various privacy settings and requiring users to join using a real name. The company also offered control over which personal information was available to others. In addition, the company stated its organizational principles as: 1) an individual should have control over his or her personal information, and 2) an individual should have access to the information others want to share.

Financial background - Although Facebook reported more than 80 million active users, the company earned a relatively anemic $125 million in ad revenue in 2007, roughly one-fourth that of its main competitor, MySpace. The shortfall was magnified by Facebook's massive valuation of $15 billion, or 500 times earnings, which placed extraordinary emphasis on short-term profits to justify that valuation and support the company's bottom line.

What additional information is needed?

To better understand the impact of the company's decisions, answers to the following questions would be useful in determining Facebook's best path forward:

Did Facebook intentionally make Beacon hard to opt out of?

Did Facebook violate any legal rights of users (e.g., sharing medical information, breach of contract, etc.)?

What was the opt-out rate, ratio, or aggregate number of opt-outs?

What was the percentage of Beacon prompts not disabled?

What was the number of users with Beacon broadcastings?

What was the revenue generated from the Beacon tool?

What other revenue options did Facebook have in lieu of Beacon?

Key Decision Stakeholders

Primary

Facebook

Interests - Generating new income, gaining greater social media market share, increasing the user base, aligning organizational mission with delivery

Power - Delivering a free service, leading social media product, personal information of over 80 million users, significant disposable income

Facebook users

Interests - Protection of profile privacy, a functional social media product

Power - Switch to other social media sites, influence over followers

Secondary

A Facebook user's network

Interests - Access to the user's network, protection of privacy

Power - Influence over other Facebook users, consumer influence

Advertisers

Interests - Reaching Facebook consumers, access to personal user data, generating revenue, brand awareness

Power - Funding for advertisements, using other social media/advertising outlets, influence over Facebook's business decisions

Enforcing organizations

Interests - Protecting the rights of consumers, prosecuting illegal business

Power - Intervention and litigation

Social media competitors (MySpace)

Interests - Gaining social media market share, profit generation

Power - Greater advertising revenue, greater transparency with site advertising, attracting dissatisfied Facebook users

List of Available Alternatives

Facebook is faced with three alternatives: Option 1, keep Beacon's involvement with Facebook the same; Option 2, modify it; or Option 3, cancel it altogether.

Options 1 and 3 are straightforward; Option 2, to modify Beacon's involvement with Facebook, has several sub-alternatives. Facebook can make opt-out preferences clearer and more noticeable, provide incentives for users to allow their data to be shared through Beacon, permit users to opt out of Beacon if they are willing to pay, or restructure Beacon to function as an opt-in service.

If Facebook chooses to modify the Beacon plan by making opt-out alternatives transparent and easy to access, it will be shifting its emphasis to giving consumers all of the information up front. Users would be able to make an informed decision about the degree, if any, to which Beacon is allowed to track them, and about whether this information is shared with any of their friends.

Incentives such as access to additional application features, financial rewards, or viewing preferences on their Facebook page may be enough to motivate users to allow Beacon to track their website viewing history and publish their recent purchases. This alternative depends on making the incentives valuable enough to convince many users to let Beacon continue tracking them.

Facebook could require users to start paying for the service if they opt out of Beacon. Similarly, it could follow the likes of Pandora and Amazon's Kindle line of tablets, which allow users to opt out of advertising (or, in this case, Beacon) for a fee.

Lastly, Beacon could be implemented the way users already broadcast all of their other information on Facebook: on a voluntary basis only. By opting in to Beacon, users could have their information shared of their own free will, just as they willingly show that they have checked in at a location or liked a group.

All of the Option 2 sub-alternatives focus on giving the user more control. Ultimately, Facebook is a business that needs to generate revenue, so the best form of Option 2 is a combination of updates to the Beacon program that gives users clear and concise instructions for opting out while also giving them incentives to allow Beacon to track their Internet usage.

Describe the Personal Impact

All three alternatives (keeping Beacon, modifying its implementation, or canceling it) will affect Facebook directly. Facebook has set itself apart from its competition by building a website where consumers can share many different types of information with their friends in one central location. Option 1 would likely expose Facebook to a backlash from consumers who feel their privacy rights are being violated because they are not in control of their data. Because users' data was being transmitted without their knowledge, and users were not given a viable, user-friendly way to opt out, groups like MoveOn.org would gain strength with their petitions against Facebook, and Facebook would risk losing significant membership in the highly competitive social networking field. Facebook might also face lawsuits from those who feel their privacy rights have been violated. Keeping Beacon, however, would allow Facebook to continue the revenue stream it gained by capitalizing on users' information sharing.

By choosing Option 2, to modify Beacon, Facebook would attempt to balance its own interests with those of its users. The public would view Facebook more favorably because the company would be seen as acknowledging the missteps of its original implementation, and a smooth and speedy resolution would mend relations with disgruntled consumers and advocates. Admitting its mistake could give Facebook a positive image as a company that has a sincere moral compass and is responsive to its users' needs. A company that is able to adapt quickly is a company poised to continue its dominance in the future. Investors may even be more willing to invest in Facebook because it has shown that it can respond effectively to a negative situation.

If Facebook cancels Beacon, it would be placing a higher value on keeping privacy groups quiet and everyday users content than on the lost revenue stream. No longer would someone have to risk spoiling the surprise of a birthday gift because the purchase was posted on his or her profile without his or her knowledge. However, canceling Beacon would create urgency for Facebook to find an alternative way to generate revenue. One option is to keep profiles free for consumers but require advertisers to pay to maintain a Facebook profile or a list of followers. For example, every time someone "Liked" the page for the TV show Modern Family, Facebook would receive financial compensation. Another option would be for Facebook to expand its paid advertisers' visibility within Facebook's mobile interface and applications. Ultimately, Facebook would be looking for a quick way to replace Beacon's lost revenue stream.

Utilitarian Screen

Jeremy Bentham's (1988) fundamental axiom, that "it is the greatest happiness of the greatest number that is the measure of right and wrong," provides a basis for reviewing Facebook's Beacon through a utilitarian lens. In that context, we can group the primary Beacon stakeholders by relative size to determine their importance under the utilitarian screen.

| Stakeholder Type | Stakeholder | Size | Importance |
|---|---|---|---|
| Primary | Facebook | 700 employees | Low |
| Primary | Facebook Users | 80 million active users | High |

Using this ranking as a basis, Facebook's first alternative, to continue to run Beacon in its current state, does not pass the Utilitarian screen at first glance. With 80 million active users, it was surprising that only 80,000 users, or 0.1% of users, signed MoveOn.org's online petition decrying Beacon's breach of their privacy. The count was probably low for several reasons: apathy, users being unaware of Beacon, or many users simply feeling that the benefit of Facebook outweighed their privacy. Regardless, even that vocal minority is a very large group of people, larger in number than the total number of Facebook employees. On further analysis, then, Option 1 provides the greatest benefit for the greatest number of people only if Beacon's revenue generation is critical to the survival of Facebook.
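The proportions behind this argument are easy to check. The following is a minimal sketch in Python that uses only the figures cited in this paper (80 million active users, 80,000 petition signers, 700 employees); the variable names are ours and purely illustrative.

```python
# Figures as cited in the case study; nothing here is new data.
active_users = 80_000_000    # active Facebook users reported in the case
petition_signers = 80_000    # signatures on the MoveOn.org petition
facebook_employees = 700     # headcount from the stakeholder ranking

# Share of the user base that signed the petition.
signer_share = petition_signers / active_users

# How many petition signers there were for each Facebook employee.
signers_per_employee = petition_signers / facebook_employees

print(f"Share of users who signed: {signer_share:.1%}")
print(f"Petition signers per Facebook employee: {signers_per_employee:.0f}")
```

The sketch only restates the comparison made in the text: the signers are a small fraction of all users, yet they far outnumber the company's employees.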

The second alternative, to modify Beacon to include clear and multiple ways to opt out, could resolve the issues for the greatest number of people. First, Facebook would be able to continue the Beacon program and continue to share data with advertisers, so Facebook, its users, and advertisers could all gain from the relationship. Second, Facebook users who did not want their data tracked could opt out, freeing themselves from the data tracking and active sharing that are core to the Beacon service. Finally, those users who do not care or who enjoy the Beacon service could continue to be involved and to derive happiness from participating in Beacon and using Facebook.

The third alternative, to cancel Beacon, would directly benefit Facebook users and privacy advocates who were displeased by Beacon. However, Facebook itself would be negatively affected, as would the advertisers who participated in the program. Facebook would lose needed revenue, and advertisers would lose access to potential customer data and advertising opportunities. Therefore, Option 3 would resolve the issues of the largest number of people as long as Facebook itself was able to continue operating without Beacon.

Justice Screen

John Rawls (2005) argues that justice as fairness is central to the human condition and that justice is the first virtue of social institutions. In this context, we can examine Facebook's options with Beacon to find the alternative that is fairest to all parties and would enhance the social contract between Facebook and its users. Another aspect of the Justice screen is the legality of Beacon: did it violate any laws?

Under Option 1, Facebook violated a central tenet of fairness. It allowed advertisers to view data that users felt was their own, not to be shared without their permission. Facebook users had an expectation of privacy based on Facebook's original platform, which allowed fine-grained control over what data could be shared with others. In the class-action lawsuit Lane et al. v. Facebook, Inc. (2008), the plaintiffs accused Facebook of violating three laws with Beacon:

1. Electronic Communications Privacy Act: violated by Facebook when users' browsing data was intercepted and used for an unlawful purpose, namely advertising.

2. Video Privacy Protection Act: violated by Beacon advertisers when they disclosed personally identifiable information to Facebook without the user's consent.

3. California Consumer Legal Remedies Act: violated because Beacon's terms did not state that personally identifiable information was being transmitted to Facebook.

However, Facebook's guilt was questionable (Vijayan 2007), and the case was settled without an admission of guilt by Facebook, even though Facebook agreed to pay $9 million in damages and attorneys' fees and to fund an online privacy foundation. So while there was an accusation of illegality, and the settlement could be read as Facebook's acceptance that it might have been found guilty, actual guilt in breaking a law was not proven in a court of law. Still, continuing Beacon in its present form would not pass the Justice screen, as it is not fair to Facebook users and potentially breaks the law.

If Facebook followed Option 2, users would have a full, clear, and prominent disclosure of Beacon's implementation and tactics. Users would therefore have a fair understanding of how to opt out and what it means if they choose not to. In addition, the lawsuit mentioned above was specific to a time frame before Facebook altered Beacon's opt-out procedures, suggesting that changes to Beacon would reduce its potential for breaking the law. Therefore, Option 2 passes the Justice screen because it makes clear that Facebook is focused on providing fair and legal alternatives for its users.

Option 3 also passes the Justice screen because it removes any opportunity for Facebook to do further wrong or be unfair with its implementation of Beacon.

Rights Screen

The fundamental right at stake in this case is the end user's right to privacy. The eighteenth-century philosopher Immanuel Kant argued that each individual has a dignity that must be respected and a moral right to choose for oneself (Velasquez 1990). Related to the right to choose are other positive and negative rights, one being the right to privacy (Velasquez 1990). The right to privacy, for example, imposes on us the duty not to intrude into the private activities of a person (Velasquez 1990). Facebook's implementation of Beacon showed a blatant disregard for the right to privacy by tracking user activity in a deceitful way. Facebook made it difficult for users to become aware of the Beacon tracking and then used the collected information to allow companies to use Facebook members to endorse their products without explicit approval. Therefore, Option 1, continuing to run Beacon in its current state, does not pass the rights screen, as it violates the end user's right to privacy.

The second alternative available to Facebook is to continue the Beacon program but implement a series of modifications. One of the primary modifications recommended is to offer a clear explanation of Beacon to all users and give them the choice either to opt out of the program or to allow Facebook to share personal information with participating companies. This alternative addresses the rights violation by reinstating the individual's right to choose. Because users who can choose to opt out of Beacon are able to maintain their right to privacy, Option 2 passes the rights screen.

The third alternative available to Facebook is the complete discontinuance of the Beacon program. This alternative removes even the possibility of violating an individual's right to privacy, so it also passes the rights screen.

Virtue Screen

When looking at a case through the virtue screen, it is important to assess the culture of the organization as well as its reputation and consistency of behavior. One of Facebook's early distinguishing characteristics relative to its closest competitor, MySpace, was its privacy settings. Facebook required members to join using a real name and allowed them to limit the type of information available to others by offering a variety of designations for each piece of information. This differed from competitors, which offered only a single choice between making all information private or public. This feature was a significant part of Facebook's reputation and showed an organizational culture that valued personalization and protection.

The implementation of the Beacon program was not in line with Facebook's organizational principles, and therefore Option 1, continuing the program unchanged, would not pass the virtue screen. Facebook's organizational principles centered on privacy and held that individuals 1) should have control over their personal information and 2) should have access to information that others want to share. When Facebook implemented Beacon, there was a lack of transparency with users. Its efforts to conceal the opt-out notice, by making it appear in a very small window and allowing it to disappear without users needing to take action, were in direct violation of the company's first principle. Any continuation of the program in its current state would not pass the virtue screen.

The second alternative of modifying the implementation of Beacon by enabling a clear

process of opting into the program would be in line with both of the company's principles.

Beacon does allow for additional sharing of information at a level that did not previously exist,

which supports Option 2. If Facebook can modify the program effectively to provide members

with all the necessary information about Beacon to make an educated decision about whether or

not to opt-in, this alternative passes the virtue screen.

Similarly, Option 3, in which Facebook cancels the Beacon program altogether, would also

pass the virtue screen by reinstating the organization's commitment to privacy and would help

restore its reputation among members who felt betrayed by the implementation of the Beacon

program.

Conclusion

In each of the screens, there are stakeholders that benefit and those that will lose based on

the judgments that are required. The table below assigns values to each option based on the results of

each screen, identifying the best option for Facebook.

Options                    Max Points   Utilitarian   Justice   Rights   Virtue   TOTAL
                           per Screen   Screen        Screen    Screen   Screen

Option 1: Keep Beacon      10           5             2         2        2        11

Option 2: Modify Beacon    10           7             10        10       7        34

Option 3: Cancel Beacon    10           5             8         8        8        29
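The totals in the table are the sum of the four screen scores for each option. As a minimal sketch of that arithmetic, the short Python snippet below recomputes the totals and picks the highest-scoring option. The scores are copied from the table; the names `scores`, `total_scores`, and `best_option` are illustrative only and are not part of the original analysis, and the maximum of 10 points per screen is assumed from the "Max Points" column.

```python
# Screen scores copied from the table above, in the order
# Utilitarian, Justice, Rights, Virtue. Each screen is assumed
# to be scored out of 10 points.
SCREENS = ("Utilitarian", "Justice", "Rights", "Virtue")
MAX_POINTS_PER_SCREEN = 10

scores = {
    "Option 1: Keep Beacon": (5, 2, 2, 2),
    "Option 2: Modify Beacon": (7, 10, 10, 7),
    "Option 3: Cancel Beacon": (5, 8, 8, 8),
}


def total_scores(table):
    """Return the sum of the screen scores for each option."""
    return {option: sum(points) for option, points in table.items()}


totals = total_scores(scores)

# The recommended option is the one with the highest total.
best_option = max(totals, key=totals.get)

max_total = MAX_POINTS_PER_SCREEN * len(SCREENS)
for option, total in totals.items():
    print(f"{option}: {total} out of {max_total}")
print("Highest total:", best_option)
```

An unweighted sum treats the four screens as equally important, which matches how the totals in the table are computed; weighting one screen more heavily would be a different judgment from the one made here.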

In each screen there are two core groups with vested interests in each option. The first

group, composed of a large number of Facebook users and privacy rights advocates, is focused on

ensuring the highest level of privacy for Facebook users. The second group, made up of

Facebook management, investors, and advertisers, is intent on finding new avenues to market and

sell their product, specifically to other Facebook users. Given these two divergent goals, it was clear

that the score for each screen could not be easily calculated; it was instead a value that we assigned after

evaluating the effect each option would have on all the stakeholders. After review, Option 2

scored the highest in the screens and was our choice for Facebook for its balanced benefits for

the greatest number of stakeholders.

Even though the core groups of stakeholders have divergent goals, they also have one

common goal: the survival of Facebook. As a private enterprise, it is clear that Facebook must

generate revenue to keep up with the demands of its users for more functionality. Option 2

allows Facebook to continue to use Beacon, but only for those users who make a careful decision

to allow Facebook to monitor their internet usage and purchases, thus meeting all the moral

requirements and allowing Facebook to achieve financial success.

Facebook must act immediately to inform all stakeholders of the upcoming changes in

Beacon. Beacon must be redesigned to give users clear paths to opt out of the service, and

Facebook must also gather its product development and marketing teams and devise a new revenue

generation portfolio to incentivize users to allow Beacon to continue operating. This will require

that Facebook restructure all contracts with advertisers and find ways for advertisers to reach

consumers without violating their right to privacy.

References

Bentham, J. (1988). Bentham: A Fragment on Government. (R. Harrison, Ed.). Cambridge

University Press.

Duell, M. (2012). Thanks for making us filthy rich! Mail Online. Retrieved September 20,

2012, from http://www.dailymail.co.uk/news/article-2095011/Facebook-IPO-Staff-plan-lavish-spending-IPO-set-create-1-000-millionaires.html

Lane et al v. Facebook, Inc. et al. (2008). Justia Dockets & Filings. Retrieved September 20,

2012, from http://dockets.justia.com/docket/california/candce/5:2008cv03845/206085/.

Rawls, J. (2005). A Theory of Justice: Original Edition. Belknap Press of Harvard University

Press.

Velasquez, M., Andre, C., Shanks, S.J., & Meyer, M.J. (Winter 1990). Rights. Issues in Ethics,

V3 (N1). Retrieved from http://www.scu.edu/ethics/practicing/decision/rights.html.

Vijayan, J. (2007, December 13). Did Blockbuster, Facebook break video privacy law with

Beacon? Computerworld. Retrieved September 21, 2012, from

http://www.computerworld.com/s/article/9053002/Did_Blockbuster_Facebook_break_video_privacy_law_with_Beacon_
