Professional Documents

Culture Documents

A Revisit To Block and Recursive Least Squares For Parameter Estimation

A Revisit To Block and Recursive Least Squares For Parameter Estimation

Uploaded by

Anil Kumar DavuOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

A Revisit To Block and Recursive Least Squares For Parameter Estimation

A Revisit To Block and Recursive Least Squares For Parameter Estimation

Uploaded by

Anil Kumar DavuCopyright:

Available Formats

A revisit to block and recursive least squares

for parameter estimation

Jin Jiang

a,

*

, Youmin Zhang

b,1

a

Department of Electrical and Computer Engineering, The University of Western Ontario, London,

Ontario, Canada N6A 5B9

b

Department of Computer Science and Engineering, Aalborg University Esbjerg, Niels Bohrs Vej 8,

6700 Esbjerg, Denmark

Received 17 January 2003; received in revised form 25 January 2004; accepted 20 May 2004

Abstract

In this paper, the classical least squares (LS) and recursive least squares (RLS) for parameter estima-

tion have been re-examined in the light of the present day computing capabilities. It has been demon-

strated that for linear time-invariant systems, the performance of blockwise least squares (BLS) is

always superior to that of RLS. In the context of parameter estimation for dynamic systems, the current

computational capability of personal computers are more than adequate for BLS. However, for time-

varying systems with abrupt parameter changes, standard blockwise LS may no longer be suitable

due to its ineciency in discarding old data. To deal with this limitation, a novel sliding window

blockwise least squares approach with automatically adjustable window length triggered by a change

detection scheme is proposed. Two types of sliding windows, rectangular and exponential, have been

investigated. The performance of the proposed algorithm has been illustrated by comparing with the

standard RLS and an exponentially weighted RLS (EWRLS) using two examples. The simulation results

have conclusively shown that: (1) BLS has better performance than RLS; (2) the proposed variable-

length sliding window blockwise least squares (VLSWBLS) algorithm can outperform RLS with forget-

ting factors; (3) the scheme has both good tracking ability for abrupt parameter changes and can ensure

the high accuracy of parameter estimate at the steady-state; and (4) the computational burden of

0045-7906/$ - see front matter 2004 Elsevier Ltd. All rights reserved.

doi:10.1016/j.compeleceng.2004.05.002

*

Corresponding author. Tel.: +1 519 661 2111x88320; fax: +1 519 850 2436.

E-mail addresses: jjiang@uwo.ca (J. Jiang), ymzhang@cs.aue.auc.dk (Y. Zhang).

1

Tel.: +45 7912 7741; fax: +45 7912 7710.

www.elsevier.com/locate/compeleceng

Computers and Electrical Engineering 30 (2004) 403416

VLSWBLS is completely manageable with the current computer technology. Even though the idea pre-

sented here is straightforward, it has signicant implications to virtually all areas of application where

RLS schemes are used.

2004 Elsevier Ltd. All rights reserved.

Keywords: Blockwise least squares (BLS); Recursive least squares (RLS); Sliding window blockwise least squares

(SWBLS); Variable-length window; Change detection

1. Introduction

Since Karl Gauss proposed the technique of least squares around 1795 to predict the motion of

planets and comets using telescopic measurements, least squares (LS) and its many variants have

been extensively applied to solving estimation problems in many elds of application [6,7,9,1113].

The classical formula of least squares is in batch or block form, meaning that all measurements are

collected rst and then processed simultaneously. Such a formula poses major computational

problems since the computational complexity is in the order of O(N

3

) which grows continuously

with the number of data collected, where N is the number of parameters to be estimated. Because

of its non-iterative nature, block/batch LS algorithms are often regarded to be less desirable for

on-line applications.

To increase the eciency of LS algorithms, a recursive variant, known as recursive least squares

(RLS), has been derived. The computational complexity of RLS is in the order of O(N

2

).

Although RLS algorithm is theoretically equivalent to a block LS, it suers from the following

shortcomings: (1) numerical instability due to round-o errors caused by its recursive operations

in a nite-word length environment; (2) slow tracking capability for time-varying parameters; and

(3) sensitive to the initial conditions of the algorithm.

To improve the numerical properties, several numerically more robust variants based on QR

decomposition, U-D factorization and singular value decomposition (SVD) implementations of

RLS algorithms have been developed [2,9,11]. To improve the tracking capability of RLS, tech-

niques based on variable/time-varying forgetting factors [5,11,15], covariance matrix re-setting/

modication [6,8,14], and sliding window [3,4,10,13] have been developed.

It is true that the computational complexity of an estimation algorithm was the main concern

ten to twenty years ago, but it is no more! To date, beneting from rapid advance in computer

technology, the computational complexity of LS becomes much less an issue. One may choose

to view the advancement in computer technology being relative to the complexity of the problem

that we are able to solve with RLS. In the context of dynamic system identication, the fact of

matter is that for any practical system with more than two dozen of unknown parameters, the

requirement on the system input to be persistently exciting is so high that most of the LS-based

schemes will no longer provide accurate parameter estimate anyway. For systems with fewer

parameters, the computational power of existing personal computers will be far more adequate

for block LS. It is under this circumstance that the research reported in this paper has been carried

out.

By simply extending the block least squares to blockwise least squares (BLS), the classical LS

algorithm can be easily extended to on-line applications. Leaving the computational issues aside,

404 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

this paper has shown that BLS leads to superior performance to standard RLS in time-invariant

parameter estimation problems.

To improve the tracking capability of BLS for estimating parameters in time-varying systems,

certain techniques to eectively discard old data is necessary. In view of this, a new sliding-win-

dow blockwise least squares (SWBLS) algorithm is developed in this paper. Furthermore, to ob-

tain parameter estimates with both good parameter tracking and high accuracy at the steady-state

for systems with abruptly changed parameters, an automatically adjustable window length sliding

window BLS technique (abbreviated as VLSWBLS) is also proposed. The window length adjust-

ment is triggered by a parameter change detection scheme. In addition, the added advantages of

the developed algorithm are: (1) simpler to implement and (2) no need to specify the initial condi-

tions. The use of forgetting factor within the sliding window has also been investigated to further

increase the tracking capability. The paper has shown that the VLSWBLS can signicantly out-

perform the existing RLS algorithms with forgetting factors, even for time-varying systems.

The performance of the newly developed algorithms has been evaluated in comparison with

that of the existing ones for estimating parameters of time-invariant and time-varying systems,

and the superior performance has been obtained in all cases.

It is important to emphasize also that the results presented in this paper have tremendous impli-

cations. The paper may prompt those who are relying on RLS now to re-think about other op-

tions in their own eld of applications.

2. Review of block and recursive least squares

Let us consider a discrete dynamic system described by

yk /

T

k 1h

zk yk vk

/

T

k 1 zk 1; . . . ; zk n; uk 1; . . . ; uk m

h

T

a

1

; . . . ; a

n

; b

1

; . . . ; b

m

1

where u(k) and y(k) are the system input and output, respectively. z(k) is measured system output

and v(k) is a zero-mean white Gaussian noise term which counts for measurement noise and mod-

elling uncertainties. /

T

(k 1) and h are the information vector and the unknown parameter vec-

tor, respectively. The parameters in h can either be constant or subject to infrequent jumps. The

number of parameters to be estimated is denoted as N = n + m.

2.1. Block and blockwise least squares

To estimate the parameter vector, h, using all available measurements up to the current instant,

j = 1, 2, . . ., k, one can rst dene the following cost function:

Jh; k

X

k

j1

zj /

T

j 1h

2

2

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 405

Dierentiating J(h, k) with respect to h and setting the derivative to zero will lead to the well-

known LS solution [6,11]:

^

h

X

k

j1

/j 1/

T

j 1

" #

1

X

k

j1

/

T

j 1zj

" #

3

where

^

h is known as the least squares estimate of h.

Since

^

h is obtained using the sample data up to the time instant k, it is often denoted as

^

hk. An

alternative compact matrix-vector form can be written as follows:

^

hk U

T

k

U

k

1

U

T

k

z

k

4

where

z

k

z1 z2 . . . zk

T

k1

U

k

/

T

0

/

T

1

.

.

.

/

T

k 1

2

6

6

6

6

6

6

4

3

7

7

7

7

7

7

5

z0 z1 n u0 u1 m

z1 z2 n u1 u2 m

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

.

zk 1 zk n uk 1 uk m

2

6

6

6

6

6

6

4

3

7

7

7

7

7

7

5

kN

If this formulation of LS is used for on-line parameter estimation, one has to re-calculate the

Eq. (4) each time when a new measurement becomes available. This style of implementation is

referred to as BLS in this paper. To circumvent the problem of re-calculating Eq. (4), a recursive

version of the above scheme has been derived.

2.2. Recursive least squares

The basic idea behind a RLS algorithm is to compute the parameter update at time instant k by

adding a correction term to the previous parameter estimate

^

hk 1 once the new information

becomes available, rather than re-calculating Eq. (4) with the entire measurements. Such reformu-

lation has reduced the computational requirement signicantly, making the RLS extremely attrac-

tive in the last three decades for on-line parameter estimation applications.

Derivation of RLS algorithms can be found in many textbooks and papers (see e.g. [6,11]). For

the sake of easy reference, a typical RLS algorithm is presented here:

^

hk

^

hk 1 Pk/k 1ek 5

ek zk /

T

k 1

^

hk 1 6

Pk Pk 1

Pk 1/k 1/

T

k 1Pk 1

1 /

T

k 1Pk 1/k 1

7

406 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

where e(k) is the one-step ahead prediction error. Thus, the amount of correction is proportional

to the prediction error.

It can be seen that due to its recursive nature, the complexity of the RLS has been reduced con-

siderably from O(N

3

) in BLS to O(N

2

) in each estimate update. For more details about the block

and recursive least squares algorithms, readers are referred to, for example, [6,1113].

3. A variable-length sliding window blockwise least squares algorithm

3.1. Sliding window blockwise least squares formulation

Both of the above-discussed BLS and RLS algorithms can lead to the convergence of the

parameter estimate to their true value for time-invariant systems. However, when LS is applied

to non-stationary environments, e.g. system identication and parameter estimation in the pre-

sence of unknown parameter changes, forgetting factor or nite length sliding window techniques

have been widely used in association with the RLS. Such techniques can also be incorporated in

BLS.

In addition to enhancing the tracking capability of the BLS or RLS algorithm, the sliding win-

dow technique also has an inherent advantage to keep the computational complexity to a xed

level by replacing the growing dimension in U

k

with a xed and lower dimension matrix. To fur-

ther improve the tracking capability of the algorithm and to exploit the advantages of both sliding

window and forgetting factor techniques, an exponential weighting technique can be used within

the sliding window. Hence, a modied cost function of Eq. (2) can be described as follows:

J

L

h; k

X

k

jkL1

k

kj

zj /

T

j 1h

2

8

where L denotes the length of the sliding window. k(0 < k 6 1) is the forgetting factor to exponen-

tially discard the old data within the length of the window. The LS problem based on parameter

estimation of h is then to minimize the above cost function with the most recent data of size L.

Following the same derivation as in the BLS, a sliding exponentially weighted window block-

wise least squares (SEWBLS) algorithm can be obtained as follows:

^

h

L

k

X

k

jkL1

k

kj

/j 1/

T

j 1

" #

1

X

k

jkL1

k

kj

/

T

j 1zj

" #

9

or, in a compact matrix-vector form as

^

h

L

k U

k

kL1

T

K

k

kL1

U

k

kL1

1

U

k

kL1

T

K

k

kL1

z

k

kL1

10

where U

k

kL1

is similarly dened as in Eq. (4), and K

k

kL1

is a L L diagonal matrix with diagonal

elements being the forgetting factors, k

L1

, k

L2

, . . ., k

0

.

The performance of the above algorithm will depend on the length of the sliding window.

For time-invariant systems, the longer the window length, the higher the estimation accuracy.

However, for a system with abrupt parameter changes, to achieve fast tracking of the changed

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 407

parameters, the window length should be adjusted accordingly so that the out-of-date information

from the past measurements can be discarded eectively to assign a relatively heavier weight on

the latest measurement.

3.2. Automatic window length adjustment

To automatically initiate the window length adjustment, a change detection mechanism and a

window length adjustment strategy must be developed.

3.2.1. Change detection mechanism

There are many ways that one can detect parameter changes, an easy way to implement change

detector can be just based on the prediction error of the system within the sliding-window:

ek z

k

kL1

^z

k

kL1

z

k

kL1

U

k

kL1

^

h

L

k 11

where z

k

kL1

zk L 1 zk L zk

T

and ^z

k

kL1

is its estimated counterpart.

A decision to decrease the window length at time k = k

D

can be made if the following averaged

sliding-window detection index:

dk

1

M

X

k

ikM1

e

T

iei 12

exceeds a pre-set threshold q

dk

H

1

>

6

H

0

q 13

where H

0

= {No change in the system parameters}, H

1

= {Parameter changes have occurred in

the system}. M is the window length for calculating the detection index. If the change is detected

at time k

D

, k

D

will be referred to as the detection time.

It should be noted that Eq. (12) is only a very simple scheme for change detection. There are

many other more sophisticated change detection techniques available. Detailed discussion will

be beyond the scope of this paper. Interested readers are referred to [1,7] for more information.

3.2.2. Length adjustable sliding window

To improve the tracking ability of BLS, a new window length adjustment strategy has been

developed. The essence of the proposed approach lies in its ability to quickly shrink the win-

dow length, should a change in the system parameters be detected. By using a shorter win-

dow length, it allows the algorithm to track the parameter changes quickly. From the onset

of this window shrinking, the window length will be progressively expanding and returning to

the original width to maintain the steady-state performance of the algorithm. Such a fast

shrinking and gradual expanding window makes this approach suitable for a wide range of

applications.

408 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

To illustrate this concept clearly, consider ve snap-shots shown in Fig. 1. If there are no de-

tected changes, a sliding window of length L is used. This situation is shown in Fig. 1(a) and (b).

Suppose some parameters of the system have undergone changes, and they are detected at k

D

. At

this point, the window length is reduced drastically to discount the measurements in the subse-

quent LS calculations. This case is illustrated in Fig. 1(c). As new data becomes available, the win-

dow size will increase step-by-step until it again reaches the full length L. Thereafter, the original

sliding window scheme resumes as shown in Fig. 1(d) and (e).

As we know, the window size for a LS calculation has to be greater than the number of param-

eters to be estimated. In this case, the minimum window length will be N + 1. Furthermore, at the

instant of the window shrinking, U

k

kL1

may still contain some data prior to the parameter

change. One way to further improving the performance of this scheme is to use an exponential

weighting within this shrunk window. However, our experience indicates that, because of the rela-

tively short window length at the initial stage of shrinking, the eect of such weighting is not

signicant.

4. Illustrative examples

To illustrate the concepts presented in this paper, two systems with respective time-invariant

and abrupt change parameters have been used in this section.

Fig. 1. Demonstration for automatic adjustment of the window length.

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 409

The purpose of the rst example is to compare the performance of a RLS algorithm and a sim-

ple BLS. The second example is to demonstrate the capability of the proposed VLSWBLS algo-

rithms in comparison with RLS-type algorithms in the presence of parameter changes.

4.1. Example 1: parameter estimation of a time-invariant system

A second order system from [11] is adopted here. The system is described by the following dif-

ference equation:

yk a

1

yk 1 a

2

yk 2 b

1

uk 1 b

2

uk 2

zk yk vk

14

where

a

1

1:5; a

2

0:7; b

1

1:0; b

2

0:5

and y(k) is the output of the system, z(k) the measured output, and u(k) the input. v(k) is zero-

mean white Gaussian noise sequence with variance r

2

v

0:01. The external input u(k) is also a

zero-mean white Gaussian sequence with unit variance r

2

u

1:0, independent of v(k).

In an ideal situation, if there were no noise and a perfect initial parameter vector were available

for RLS, both RLS and BLS algorithms would have produced a perfect parameter estimation.

However, in reality, there are always some measurement noises presented in the system. Further-

more, the initial settings for the estimated parameter vector and covariance matrix cannot be speci-

ed accurately. All these facts have contributed negatively on the performance of RLS. It can be

seen in Table 1 and Fig. 2 that, compared with the results of RLS, much higher estimation accu-

racy has been obtained by using the BLS. Note that all curves in Figs. 2, 3 and 5 represent the

norm of the parameter estimation error dened by

e

h

k

1

N

run

X

N

run

j1

h

^

h

j

L

k

2

15

These results are drawn based on 100 independent runs, i.e. N

run

= 100 for 1000 measurement

data points.

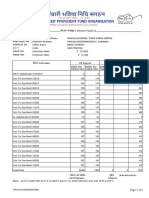

Table 1

Comparison of estimation accuracy between RLS and BLS

Algorithms Cases a

1

a

2

b

1

b

2

AE STD

RLS Noise free 1.4980 0.6974 0.9990 0.5009 1.5706 10

2

1.9375 10

1

With noise 1.4978 0.6972 0.9980 0.5021 1.6655 10

2

1.9369 10

1

^

h

0

0 1.4986 0.6984 0.9985 0.5018 9.1101 10

3

9.7834 10

2

Larger P

0

1.4998 0.6998 0.9990 0.5012 1.3638 10

2

1.8703 10

1

BLS Noise free 1.5000 0.7000 1.0000 0.5000 8.9727 10

15

7.2497 10

15

With noise 1.4999 0.6999 1.0001 0.5000 1.9741 10

3

4.0577 10

3

True parameters 1.5 0.7 1.0 0.5

AE, average error of e

h

(k), k = 1000; STD, standard deviation.

410 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

For the RLS algorithm in Table 1, the rst row represents the case with no measurement noise

and randomly chosen initial parameter vector

^

h

0

and covariance matrix P

0

= 10I, while the second

row shows the result with the addition of measurement noise of r

2

v

0:01 to the 1st case (this cor-

responds to a signal-noise-ratio (SNR) of 20 dB for the simulated example). To demonstrate the

performance of the RLS with dierent initial parameter vectors and initial covariance matrices,

additional cases are analyzed. The 3rd row presents a case which uses the same initial covariance

matrix as in the second case but with

^

h

0

0, while the 4th row uses the same

^

h

0

as in 2nd case but

with a larger P

0

= 10

3

I. For the BLS algorithm, the two cases shown in the table are under the

same conditions as in the rst two cases in RLS, respectively. It can be seen that RLS is very sen-

sitive to the initial conditions. However, there is no initial condition issue for BLS. The results in

Table 1 clearly indicate that BLS outperforms RLS in every case in terms of estimation accuracy.

To evaluate the sensitivity of the RLS and BLS algorithms to dierent SNR levels, the system in

Eq. (14) has been simulated at dierent noise levels. The results are shown in Table 2. The input to

the system is still a zero-mean white Gaussian sequence with a xed unit variance of r

2

u

1:0,

while the variance of the noise term v(k) has been adjusted to count for the dierent levels of

Sample number

0 100 200 300 400 500

10

-3

10

-2

10

-1

10

0

10

1

RLS

BLS

N

o

r

m

o

f

e

s

t

i

m

a

t

i

o

n

e

r

r

o

r

Fig. 2. Trajectories of averaged estimation error.

Table 2

Comparison of estimation accuracy at dierent SNRs

SNR (dB) RLS BLS

AE STD AE STD

30.0 1.5711 10

2

1.9375 10

1

1.9710 10

4

4.0528 10

4

20.0 1.6655 10

2

1.9369 10

1

1.9741 10

3

4.0577 10

3

10.0 3.8487 10

2

1.9383 10

1

2.7867 10

2

9.6821 10

2

5.0 1.7060 10

1

1.9387 10

1

1.6165 10

1

1.3146 10

1

1.0 6.7118 10

1

1.8474 10

1

6.6664 10

1

2.1505 10

1

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 411

the measurement noise. It can be observed that for dierent levels of noise intensity, the BLS can

still achieve much better estimation accuracy.

It is also interesting to compare the required CPU time per run (CTPR) for BLS and RLS algo-

rithms running on a relatively older and a relatively newer computer. This gure of merit is mea-

sured in terms of the MATLAB function cputime. Again, the results are obtained by an average

of 100 independent runs using 1000 data points. The CTPRs using the older computer with CPU

200 MHz and RAM 64 KB and the newer computer with CPU 1.7 GHz and RAM 256 KB are

listed in Table 3.

It can be seen that using the newer computer, the required CTPR of the BLS is even signi-

cantly lower than that of the RLS in the older computer. This observation re-arms our view

on replacing RLS by BLS for achieving better performance.

4.2. Example 2: systems with abrupt parameter changes

In this example, the performance of BLS and RLS for systems with abruptly changed param-

eters is examined. The system used in Example 1 has been modied by introducing parameter

changes at k = 500. These changes are described by

a

1

1:5; a

2

0:7; b

1

1:0; b

2

0:5; 0 < k < 500

a

1

1:2; a

2

0:5; b

1

0:7; b

2

0:3; 500 6 k < 1000

16

4.2.1. Comparison between the proposed and the RLS-type algorithms

To compare the estimation accuracy and the tracking performance, the norm of the estimation

error using the variable-length sliding rectangular window blockwise least squares (VLSRWBLS),

the exponentially weighted recursive least squares (EWRLS) and the RLS are shown in Fig. 3. In

the EWRLS, a constant forgetting factor of k = 0.95 is used for improving the tracking perform-

ance. It can be seen that signicantly better tracking performance has been achieved by the

VLSRWBLS algorithm. The estimate converges quickly to the new parameter values and the pro-

posed scheme also results in a much smaller steady-state estimation error in comparison to the

other two RLS algorithms. Compared with the estimation error prior to the change occurrence,

almost the same level of the estimation accuracy has been obtained for post-changed parameters.

However, RLS without forgetting factor simply could not track the changed parameters.

Although the EWRLS can still track the new parameters, the rate of convergence is signicantly

slower and the steady-state estimation error is relatively larger.

To demonstrate the time history of the parameter estimate, Fig. 4 shows the trajectories of the

estimated parameters using these three dierent algorithms. It can be seen clearly that the pro-

posed approach leads to excellent estimation both in pre- and post-change periods. Excellent

Table 3

CPU time per run required by RLS and BLS

Algorithms Older computer Newer computer

RLS 1.3222 0.1483

BLS 3.6901 0.3356

412 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

tracking performance has been achieved for all four parameters. Unfortunately, the RLS scheme

cannot track the changed parameters. The EWRLS can provide somewhat acceptable results. The

Sample number

0 100 200 300 400 500 600 700 800 900 1000

P

a

r

a

m

e

t

e

r

,

a

1

-1.55

-1.50

-1.45

-1.40

-1.35

-1.30

-1.25

-1.20

-1.15

VLSRWBLS

True value

RLS

EWRLS

Sample number

0 100 200 300 400 500 600 700 800 900 1000

P

a

r

a

m

e

t

e

r

,

a

2

0.45

0.50

0.55

0.60

0.65

0.70

0.75

0.80

VLSRWBLS

True value

RLS

EWRLS

Sample number

0 100 200 300 400 500 600 700 800 900 1000

P

a

r

a

m

e

t

e

r

,

b

1

0.65

0.70

0.75

0.80

0.85

0.90

0.95

1.00

1.05

VLSRWBLS

True value

RLS

EWRLS

Sample number

0 100 200 300 400 500 600 700 800 900 1000

,

r

e

t

e

m

a

r

a

P

b

2

0.20

0.25

0.30

0.35

0.40

0.45

0.50

0.55

VLSRWBLS

True value

RLS

EWRLS

Fig. 4. Parameter estimates via dierent algorithms.

Sample number

0 100 200 300 400 500 600 700 800 900 1000

N

o

r

m

o

f

e

s

t

i

m

a

t

i

o

n

e

r

r

o

r

10

-3

10

-2

10

-1

10

0

10

1

EWRLS

VLSRWBLS

RLS

Fig. 3. Comparison between the proposed and RLS-based algorithms.

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 413

superiority of the proposed algorithm lies mainly in the eective adjustment of the sliding window

length which leads to inherently faster convergence rate.

4.2.2. Performance for xed- and variable-length windows

To evaluate the eect of window length on the performance of the xed-length sliding window

algorithm and to demonstrate the superiority of the variable-length window, the estimation errors

with dierent xed window lengths of L = 50, 100, 200 and the one using proposed variable-length

sliding window algorithm have been shown in Fig. 5. For comparison purposes, results using rec-

tangular and exponential weighting are shown in Fig. 5(a) and (b), respectively.

For sliding window with dierent xed-lengths, it can be seen that the longer the window, the

higher the estimation accuracy. However, a longer window leads to larger delay to obtain good

post-change parameter estimation. It is clearly observable that, by using a xed window length,

one could not achieve good performance in both the steady-state and the transient periods. How-

ever, by using the proposed variable-length sliding window approach, signicant improvement in

both the transient and the steady-state periods has been obtained using a rectangular window

(VLSRWBLS, Fig. 5(a)) and an exponential window (VLSEWBLS, Fig. 5(b)).

In VLSRWBLS, since there is no data prior to the change is included in the sliding window

after the change has been detected, equal weights are given to all measurements. Therefore, the

same accuracy as the one using a longer xed-length window of L = 200 has been achieved. Fur-

thermore, due to the automatic adjustment of the window length, much faster convergence and

smaller estimation error has been obtained as shown in Fig. 5(a). By comparing the performance

between the rectangular and the exponential windows, it can be seen that excellent performance

during the transient period has also been obtained by the exponential window. However, since the

use of forgetting factor, k = 0.95, within the sliding window, relatively larger estimation errors at

both steady-state periods, before and after the parameter change, are also observed.

The proposed algorithms can also be extended to systems with gradual/incipient parameter

changes. However, results for such cases are not shown here due to space reason. Extension of

the developed algorithms to numerically more stable implementation, such as QR decomposition,

Sample number

0 100 200 300 400 500 600 700 800 900 1000

r

o

r

r

e

n

o

i

t

a

m

i

t

s

e

f

o

m

r

o

N

10

-3

10

-2

10

-1

10

0

L=50

VLSRWBLS

L=200

L=100

Sample number

0 100 200 300 400 500 600 700 800 900 1000

r

o

r

r

e

n

o

i

t

a

m

i

t

s

e

f

o

m

r

o

N

10

-3

10

-2

10

-1

10

0

L=50

VLSEWBLS

L=200

L=100

(a) (b)

Fig. 5. Comparison between dierent window shapes and lengths: (a) rectangular window and (b) exponential window.

414 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

U-D factorization or SVD, is relatively straightforward, which will not be elaborated further in

this paper.

5. Conclusions

With todays readily available computing power, one could not help but to think and re-think

some of the existing computationally more ecient, numerically less perfect algorithms. In this

paper, the standard least squares (LS) parameter estimation approach has been revisited in view

of its better accuracy and simpler implementation as compared to the standard recursive least

squares (RLS) algorithm. To address the weak tracking ability of BLS, an eective sliding window

blockwise least squares approach with an adjustable window length has been proposed for exten-

ding the LS approach to parameter estimation of systems with abrupt parameter changes. The

proposed approach outperforms the RLS-type algorithms signicantly and possesses both excel-

lent tracking and steady-state performance for systems with abrupt parameter changes. Simula-

tion results have also shown that the variable length sliding rectangular window algorithm

provides the best performance among tracking ability, steady-state estimation accuracy and com-

putational complexity.

The results presented in this paper is signicant in the sense that one may need to re-think about

the following issue: Should we use blockwise or recursive least squares? In view of its excellent per-

formance, it is our view that the blockwise LS approaches will play even more important role in

signal processing, communication, control and other engineering applications in a very near fu-

ture in the situations where RLS algorithms have been predominant up to now.

Acknowledgment

The research was partially supported by the Natural Sciences and Engineering Research Coun-

cil of Canada.

References

[1] Basseville M, Nikiforov I. Detection of abrupt changes: theory and application. Englewood Clis, NJ: Prentice-

Hall; 1993.

[2] Baykal B, Constantinides AG. Sliding window adaptive fast QR and QR-lattice algorithms. IEEE Trans Signal

Process 1998;46(11):287787.

[3] Belge M, Miller EL. A sliding window RLS-like adaptive algorithm for ltering alpha-stable noise. IEEE Signal

Process Lett 2000;7(4):869.

[4] Choi BY, Bien Z. Sliding-windowed weighted recursive least-squares method for parameter estimation. Electron

Lett 1989;25(20):13812.

[5] Fortescue TR, Kershenbaum LS, Ydstie BE. Implementation of self-tuning regulators with variable forgetting

factors. Automatica 1981;17(6):8315.

[6] Goodwin GC, Sin KS. Adaptive ltering, prediction and control. Englewood Clis, NJ: Prentice-Hall; 1984.

[7] Gustafsson F. Adaptive ltering and change detection. West Sussex: Wiley; 2000.

J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416 415

[8] Jiang J, Cook R. Fast parameter tracking RLS algorithm with high noise immunity. Electron Lett

1992;28(22):20425.

[9] Lawson CL, Hanson RJ. Solving least squares problems. Englewood Clis, NJ: Prentice-Hall; 1974.

[10] Liu H, He Z. A sliding-exponential window RLS adaptive algorithm: properties and applications. Signal Process

1995;45:35768.

[11] Ljung L, Soderstrom T. Theory and practice of recursive identication. Cambridge, MA: MIT Press; 1983.

[12] Mendel JM. Lessons in estimation theory for signal processing, communications, and control. Englewood Clis,

NJ: Prentice-Hall; 1995.

[13] Niedzwiecki M. Identication of time-varying processes. Chichester: Wiley; 2000.

[14] Park DJ, Jun BE. Self-perturbing recursive least squares algorithm with fast tracking capability. Electron Lett

1992;28(6):5589.

[15] Toplis B, Pasupathy S. Tracking improvements in fast RLS algorithms using a variable forgetting factor. IEEE

Trans Acoust Speech Signal Process 1988;ASSP-36(2):20627.

Jin Jiang obtained his Ph.D. degree in 1989 from the Department of Electrical Engineering,

University of New Brunswick, Fredericton, New Brunswick, Canada. Currently, he is a Professor

in the Department of Electrical and Computer Engineering at The University of Western Ontario,

London, Ontario, Canada. His research interests are in the areas of fault-tolerant control of

safety-critical systems, power system dynamics and controls, and advanced signal processing.

Youmin Zhang received his Ph.D. degree in 1995 from the Department of Automatic Control,

Northwestern Polytechnical University, Xian, PR China. He is currently an Assistant Professor in

the Department of Computer Science and Engineering at Aalborg University Esbjerg, Esbjerg,

Denmark. His main research interests are in the areas of fault diagnosis and fault-tolerant

(control) systems, dynamic systems modelling, identication and control, and signal processing.

416 J. Jiang, Y. Zhang / Computers and Electrical Engineering 30 (2004) 403416

You might also like

- DR6000 MK2 Diversity Receiver Manual, Installation & OperationDocument39 pagesDR6000 MK2 Diversity Receiver Manual, Installation & Operationoscar horacio floresNo ratings yet

- Kattan CDDocument6 pagesKattan CDSebastiao SilvaNo ratings yet

- M A P U PT PX A U P: Easuring Ctive Ower Sing SER ErspectiveDocument13 pagesM A P U PT PX A U P: Easuring Ctive Ower Sing SER ErspectiveStudent100% (1)

- A Chaos-Based Complex Micro-Instruction Set For Mitigating Instruction Reverse EngineeringDocument14 pagesA Chaos-Based Complex Micro-Instruction Set For Mitigating Instruction Reverse EngineeringMesbahNo ratings yet

- Journal of Statistical Software: Warping Functional Data in R and C Via A Bayesian Multiresolution ApproachDocument22 pagesJournal of Statistical Software: Warping Functional Data in R and C Via A Bayesian Multiresolution Approacha a aNo ratings yet

- Delay Extraction-Based Passive Macromodeling Techniques For Transmission Line Type Interconnects Characterized by Tabulated Multiport DataDocument13 pagesDelay Extraction-Based Passive Macromodeling Techniques For Transmission Line Type Interconnects Characterized by Tabulated Multiport DataPramod SrinivasanNo ratings yet

- Cover Page: Paper Title: On Obtaining Maximum Length Sequences For Accumulator-BasedDocument22 pagesCover Page: Paper Title: On Obtaining Maximum Length Sequences For Accumulator-BasedΚάποιοςΧρόνηςNo ratings yet

- On The Performance of Linear Least-Squares Estimation in Wireless Positioning SystemsDocument8 pagesOn The Performance of Linear Least-Squares Estimation in Wireless Positioning SystemskhanziaNo ratings yet

- Multimachine Power System Stabiliser Design Based On New ApproachDocument9 pagesMultimachine Power System Stabiliser Design Based On New ApproachUsman AliNo ratings yet

- Active Power PrimetimeDocument13 pagesActive Power PrimetimeRamakrishnaRao SoogooriNo ratings yet

- Approximation of Large-Scale Dynamical Systems: An Overview: A.C. Antoulas and D.C. Sorensen August 31, 2001Document22 pagesApproximation of Large-Scale Dynamical Systems: An Overview: A.C. Antoulas and D.C. Sorensen August 31, 2001Anonymous lEBdswQXmxNo ratings yet

- Chapter 3Document22 pagesChapter 3epsilon8600No ratings yet

- 2021 - Solving Multistage Stochastic Linear Programming Via Regularized Linear Decision Rules. An Application To Hydrothermal Dispatch PlanningDocument35 pages2021 - Solving Multistage Stochastic Linear Programming Via Regularized Linear Decision Rules. An Application To Hydrothermal Dispatch PlanningAlejandro AnguloNo ratings yet

- RK4 MethodDocument6 pagesRK4 MethodfurqanhamidNo ratings yet

- Articulo SampleDocument16 pagesArticulo SamplePaulina MarquezNo ratings yet

- ObbDocument27 pagesObbSachin GNo ratings yet

- QPSK Mod&Demodwith NoiseDocument32 pagesQPSK Mod&Demodwith Noisemanaswini thogaruNo ratings yet

- NL-HomeWork - 1 by DANISHDocument10 pagesNL-HomeWork - 1 by DANISHDANI DJNo ratings yet

- Artigo Publicado TerezaDocument6 pagesArtigo Publicado TerezaConstantin DorinelNo ratings yet

- ECG QRS Complex DetectorDocument6 pagesECG QRS Complex DetectorIjsrnet EditorialNo ratings yet

- Storace (2004) - Piecewise-Linear Approximation of Nonlinear Dynamical SystemsDocument13 pagesStorace (2004) - Piecewise-Linear Approximation of Nonlinear Dynamical SystemsSohibul HajahNo ratings yet

- Lowering Power Consumption in Clock by Using Globally Asynchronous Locally Synchronous Design StyleDocument6 pagesLowering Power Consumption in Clock by Using Globally Asynchronous Locally Synchronous Design StyleSambhav VermanNo ratings yet

- Localization in Wireless Sensor Networks ThesisDocument6 pagesLocalization in Wireless Sensor Networks Thesistifqbfgig100% (2)

- 1.artificial Neural Network For Discrete Model Order Reduction With Substructure PreservationDocument10 pages1.artificial Neural Network For Discrete Model Order Reduction With Substructure PreservationRamesh KomarasamiNo ratings yet

- Applied Sciences: A Novel Cross-Latch Shift Register Scheme For Low Power ApplicationsDocument11 pagesApplied Sciences: A Novel Cross-Latch Shift Register Scheme For Low Power ApplicationsVaishnavi B VNo ratings yet

- Stability Assessment of Power-Converter-Based AC Systems by LTP Theory: Eigenvalue Analysis and Harmonic Impedance EstimationDocument13 pagesStability Assessment of Power-Converter-Based AC Systems by LTP Theory: Eigenvalue Analysis and Harmonic Impedance EstimationLiz CastilloNo ratings yet

- NLPQLPDocument36 pagesNLPQLPLuis Alfredo González CalderónNo ratings yet

- Distributed State Space MinimizationDocument12 pagesDistributed State Space MinimizationNacer NacerNo ratings yet

- Kf-Power IEEE 2003Document11 pagesKf-Power IEEE 2003Srikantan KcNo ratings yet

- Automatic Heterogeneous Quantization of Deep Neural Networks For Low-Latency Inference On The Edge For Particle DetectorsDocument16 pagesAutomatic Heterogeneous Quantization of Deep Neural Networks For Low-Latency Inference On The Edge For Particle DetectorsEdgar Alejandro GuerreroNo ratings yet

- On Projection-Based Algorithms For Model-Order Reduction of InterconnectsDocument23 pagesOn Projection-Based Algorithms For Model-Order Reduction of InterconnectsKb NguyenNo ratings yet

- Research Paper On Clustering in Wireless Sensor NetworksDocument5 pagesResearch Paper On Clustering in Wireless Sensor NetworkszrdhvcaodNo ratings yet

- PR Oof: Memristor Models For Machine LearningDocument23 pagesPR Oof: Memristor Models For Machine LearningtestNo ratings yet

- 2.KRLE Data CompressionDocument6 pages2.KRLE Data CompressionKishore CheralaNo ratings yet

- Andrew J. Coyle Boudewijn R. Haverkort William Henderson Charles E. M. Pearce July 29, 1996Document22 pagesAndrew J. Coyle Boudewijn R. Haverkort William Henderson Charles E. M. Pearce July 29, 1996Lily GohNo ratings yet

- Photonics: Lcos SLM Study and Its Application in Wavelength Selective SwitchDocument16 pagesPhotonics: Lcos SLM Study and Its Application in Wavelength Selective SwitchMD. SHEFAUL KARIMNo ratings yet

- A N G D (Angd) A S A: Daptive Onlinear Radient Ecent Lgorithm For Mart NtennasDocument9 pagesA N G D (Angd) A S A: Daptive Onlinear Radient Ecent Lgorithm For Mart NtennasLawrence AveryNo ratings yet

- Vlsi95 Power SurveyDocument6 pagesVlsi95 Power Surveyjubincb2No ratings yet

- Optoelectronic Reservoir Computing: Scientific ReportsDocument6 pagesOptoelectronic Reservoir Computing: Scientific ReportsGeraud Russel Goune ChenguiNo ratings yet

- Optimal Sorting Algorithms For A Simplified 2D Array With Reconfigurable Pipelined Bus SystemDocument10 pagesOptimal Sorting Algorithms For A Simplified 2D Array With Reconfigurable Pipelined Bus Systemangki_angNo ratings yet

- VlsiDocument32 pagesVlsibalasaravanan0408No ratings yet

- Streaming Algorithms For Data in MotionDocument11 pagesStreaming Algorithms For Data in MotionLiel BudilovskyNo ratings yet

- Prediction of Critical Clearing Time Using Artificial Neural NetworkDocument5 pagesPrediction of Critical Clearing Time Using Artificial Neural NetworkSaddam HussainNo ratings yet

- Novel MIMO Detection Algorithm For High-Order Constellations in The Complex DomainDocument14 pagesNovel MIMO Detection Algorithm For High-Order Constellations in The Complex DomainssivabeNo ratings yet

- New Technique For Computation of Closest Hopf Bifurcation Point Using Real-Coded Genetic AlgorithmDocument8 pagesNew Technique For Computation of Closest Hopf Bifurcation Point Using Real-Coded Genetic AlgorithmJosaphat GouveiaNo ratings yet

- Information Systems: Hyeonseung Im, Jonghyun Park, Sungwoo ParkDocument16 pagesInformation Systems: Hyeonseung Im, Jonghyun Park, Sungwoo ParkAhmed GaweeshNo ratings yet

- Timing Analysiswithcompactvariation AwarestandardcellmodelsDocument9 pagesTiming Analysiswithcompactvariation Awarestandardcellmodelsan_nomeNo ratings yet

- A Wavelet-Based Anytime Algorithm For K-Means Clustering of Time SeriesDocument12 pagesA Wavelet-Based Anytime Algorithm For K-Means Clustering of Time SeriesYaseen HussainNo ratings yet

- 09-03-03 PDCCH Power BoostingDocument5 pages09-03-03 PDCCH Power BoostingANDRETERROMNo ratings yet

- Kfvsexp Final LaviolaDocument8 pagesKfvsexp Final LaviolaMila ApriliyantiNo ratings yet

- Transformer Top-Oil Temperature Modeling and Simulation: T. C. B. N. Assunção, J. L. Silvino, and P. ResendeDocument6 pagesTransformer Top-Oil Temperature Modeling and Simulation: T. C. B. N. Assunção, J. L. Silvino, and P. ResendeConstantin Dorinel0% (1)

- Power System State EstimationDocument13 pagesPower System State Estimationchoban1984100% (1)

- FPGA Implementation of NLMS Algorithm For Receiver in Wireless Communication SystemDocument15 pagesFPGA Implementation of NLMS Algorithm For Receiver in Wireless Communication SystemvijaykannamallaNo ratings yet

- 2 Survey Sensors 12 07350Document60 pages2 Survey Sensors 12 07350Dung Manh NgoNo ratings yet

- Daubchies Filter PDFDocument10 pagesDaubchies Filter PDFThrisul KumarNo ratings yet

- Low Complexity Design of Ripple Carry and Brent-Kung Adders in QCADocument15 pagesLow Complexity Design of Ripple Carry and Brent-Kung Adders in QCAmurlak37No ratings yet

- 1 s2.0 S0263224113001966 MainDocument7 pages1 s2.0 S0263224113001966 MainMadhusmita PandaNo ratings yet

- Sieve Algorithms For The Shortest Vector Problem Are PracticalDocument28 pagesSieve Algorithms For The Shortest Vector Problem Are PracticalSidou SissahNo ratings yet

- Transactions Letters: Efficient and Configurable Full-Search Block-Matching ProcessorsDocument8 pagesTransactions Letters: Efficient and Configurable Full-Search Block-Matching ProcessorsrovillareNo ratings yet

- Alternative SPICE Implementation of Circuit Uncertainties Based On Orthogonal PolynomialsDocument4 pagesAlternative SPICE Implementation of Circuit Uncertainties Based On Orthogonal Polynomialsxenaman17No ratings yet

- Spline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsFrom EverandSpline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsNo ratings yet

- Autocad Commands: 3D Commands and System VariablesDocument14 pagesAutocad Commands: 3D Commands and System Variablesgv Sathishkumar KumarNo ratings yet

- PHP NotesDocument28 pagesPHP NotesIndu GuptaNo ratings yet

- RGPV Syllabus PDFDocument44 pagesRGPV Syllabus PDFAshutosh TripathiNo ratings yet

- CHE3703-Exam OctNov 2023Document15 pagesCHE3703-Exam OctNov 2023leratobaloyi386No ratings yet

- FR6800 Mix Box Frame: Edition B 175-000365-00Document46 pagesFR6800 Mix Box Frame: Edition B 175-000365-00Techne PhobosNo ratings yet

- Medical Mirror ReportDocument19 pagesMedical Mirror Reportpuru0% (1)

- Using Technology To Address Barriers To Learning: Technical Assistance SamplerDocument66 pagesUsing Technology To Address Barriers To Learning: Technical Assistance SamplerKAINo ratings yet

- Cis82 7 RoutingDynamicallyDocument166 pagesCis82 7 RoutingDynamicallyAhmad AbidNo ratings yet

- Math RubricDocument2 pagesMath Rubricapi-479813313No ratings yet

- Basic Fire Alram TraningDocument46 pagesBasic Fire Alram Traningfatmawaticity.safetyNo ratings yet

- Coinpayments Apis: Process OverviewDocument4 pagesCoinpayments Apis: Process Overviewmas gantengNo ratings yet

- The Optical Transport Network (OTN) - G.709Document23 pagesThe Optical Transport Network (OTN) - G.709nokiaa7610No ratings yet

- Assignment No. 3: 1. Plot of Loss Function J Vs Number of IterationsDocument6 pagesAssignment No. 3: 1. Plot of Loss Function J Vs Number of Iterationssun_917443954No ratings yet

- Int Is - Gis - Kms NotesDocument16 pagesInt Is - Gis - Kms NotesMulumudi PrabhakarNo ratings yet

- Placement Management System For Campus RecruitmentDocument6 pagesPlacement Management System For Campus RecruitmentInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Diwali Greeting CodeDocument4 pagesDiwali Greeting CodeVaneet Kumar NagpalNo ratings yet

- Pan Card Apply Process ListDocument4 pagesPan Card Apply Process ListKRS ACCOUNTINGNo ratings yet

- Manual WM Security Routers V2.04 enDocument127 pagesManual WM Security Routers V2.04 enOscar TamayoNo ratings yet

- Sem II C++ ProgramsDocument40 pagesSem II C++ ProgramsTeju TejaswiNo ratings yet

- RS-All Digital PET 2022 FlyerDocument25 pagesRS-All Digital PET 2022 FlyerromanNo ratings yet

- Business Information System: Department of Management Admas UniversityDocument28 pagesBusiness Information System: Department of Management Admas UniversitytesfaNo ratings yet

- Design of A Hamming Neural Network BasedDocument9 pagesDesign of A Hamming Neural Network BasedPhạm Minh NhậtNo ratings yet

- 4.4.8 Packet Tracer - Configure Secure Passwords and SSHDocument3 pages4.4.8 Packet Tracer - Configure Secure Passwords and SSHElvis Jamil DamianNo ratings yet

- Series DCT1000 Dust Collector Timer Controller: Dwyer Instruments, IncDocument8 pagesSeries DCT1000 Dust Collector Timer Controller: Dwyer Instruments, IncValZann SpANo ratings yet

- Power Electronics Question Model 1Document5 pagesPower Electronics Question Model 1sagarNo ratings yet

- Applicant Form PDFDocument5 pagesApplicant Form PDFDaniela ManNo ratings yet

- Albrt931002 SpecDocument148 pagesAlbrt931002 SpecArturo Treviño MedinaNo ratings yet

- LNL Iklcqd /: Grand Total 10,496 3,208 0 0 7,212Document2 pagesLNL Iklcqd /: Grand Total 10,496 3,208 0 0 7,212Naveen SinghNo ratings yet