IJCSI International Journal of Computer Science Issues, Vol. 1, 2009

ISSN (Online): 1694-0784

ISSN (Print): 1694-0814

Improvement of Text Dependent Speaker Identification System Using Neuro-Genetic Hybrid Algorithm in Office Environmental Conditions

Md. Rabiul Islam¹ and Md. Fayzur Rahman²

¹ Department of Computer Science & Engineering, Rajshahi University of Engineering & Technology (RUET), Rajshahi-6204, Bangladesh

² Department of Electrical & Electronic Engineering, Rajshahi University of Engineering & Technology (RUET), Rajshahi-6204, Bangladesh

Abstract

In this paper, an improved strategy for an automated text-dependent speaker identification system in noisy environments is proposed. The identification process combines a Neuro-Genetic hybrid algorithm with cepstral-based features. A Wiener filter is used to remove background noise from the source utterance. Several speech pre-processing techniques, namely start and end point detection, pre-emphasis filtering, frame blocking and windowing, are used to process the speech utterances. RCC, MFCC, ΔMFCC, ΔΔMFCC, LPC and LPCC are used to extract the features. After feature extraction, the Neuro-Genetic hybrid algorithm is used for learning and identification. Features are extracted with different techniques to optimize identification performance. On the VALID speech database, the highest speaker identification rates of 100.00 % for the studio environment and 82.33 % for office environmental conditions were achieved in the closed-set text-dependent speaker identification system.

Key words: Bio-informatics, Robust Speaker Identification, Speech Signal Pre-processing, Neuro-Genetic Hybrid Algorithm.

1. Introduction

Biometrics are seen by many researchers as a solution to many of today's user identification and security problems [1]. Speaker identification is one of the most important areas where biometric techniques can be used. There are various techniques to resolve the automatic speaker identification problem [2, 3, 4, 5, 6, 7, 8].

Most published work in speech recognition and speaker recognition focuses on speech in noiseless environments, and only a few publications address speech under noisy conditions [9, 10, 11, 12]. In some research work, different talking styles were used to simulate speech produced under real stressful talking conditions [13, 14, 15]. Learning systems for speaker identification that employ hybrid strategies can potentially offer significant advantages over single-strategy systems.

In the proposed system, a Neuro-Genetic hybrid algorithm with cepstral-based features is used to improve the performance of the text-dependent speaker identification system in noisy environments. To extract features from the speech, different feature extraction techniques, namely RCC, MFCC, ΔMFCC, ΔΔMFCC, LPC and LPCC, are used to achieve good results. Some of the tasks in this work were simulated using Matlab-based toolboxes such as the Signal Processing Toolbox, Voicebox and the HMM Toolbox.

2. Paradigm of the Proposed Speaker Identification System

The basic building blocks of the speaker identification system are shown in Fig. 1. The first step is the acquisition of speech utterances from the speakers. A Wiener filter is used to remove background noise from the original speech. A start and end point detection algorithm then locates the start and end points of each utterance, after which the unnecessary parts are removed. Pre-emphasis filtering is used as a noise reduction technique to increase the amplitude of the input signal at frequencies where the signal-to-noise ratio (SNR) is low. The speech signal is segmented into overlapping frames. The purpose of the overlapping analysis is that each speech sound of the input sequence is approximately centered in some frame. After segmentation, windowing is applied. Features are extracted from the segmented speech and then fed to the Neuro-Genetic hybrid technique for learning and classification.

Fig. 1 Block diagram of the proposed automated speaker identification system.

3. Speech Signal Pre-processing for Speaker Identification

The speech signal is captured at a sampling frequency of 11025 Hz with 16-bit sampling resolution on a mono recording channel and stored in *.wav format. Speech pre-processing plays a vital role in the efficiency of learning. After acquisition of the speech utterances, a Wiener filter is used to remove the background noise from the original utterances [16, 17, 18]. A speech end point detection and silence removal algorithm is used to detect the presence of speech and to remove pulses and silences in background noise [19, 20, 21, 22, 23]. To detect word boundaries, the frame energy is computed using the short-term log energy equation [24],

$$E_i = 10 \log \sum_{t = n_i}^{n_i + N - 1} S^2(t) \qquad (1)$$
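As a rough illustration of the short-term log energy in Eq. (1), the sketch below computes the energy of one frame and uses a fixed threshold to locate word boundaries. The base-10 logarithm, the `floor` guard, the non-overlapping frames and the `threshold_db` parameter are assumptions made for this example. The paper does not specify them, and the endpoint detector of [24] is more elaborate.

```python
import math


def frame_log_energy(signal, start, frame_len, floor=1e-12):
    """Short-term log energy of `frame_len` samples beginning at index `start`."""
    frame = signal[start:start + frame_len]
    energy = sum(s * s for s in frame)
    # `floor` (an assumption) avoids log(0) on all-zero frames.
    return 10.0 * math.log10(max(energy, floor))


def detect_endpoints(signal, frame_len, threshold_db):
    """Return (first_sample, end_sample_exclusive) of the speech region, or None.

    A frame counts as speech when its log energy exceeds `threshold_db`.
    Frames do not overlap here, which is a simplification.
    """
    active = [
        start
        for start in range(0, len(signal) - frame_len + 1, frame_len)
        if frame_log_energy(signal, start, frame_len) > threshold_db
    ]
    if not active:
        return None
    return active[0], active[-1] + frame_len
```

Samples outside the returned range would be treated as silence and discarded before further processing.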

Pre-emphasis is used to balance the spectrum of voiced sounds, which have a steep roll-off in the high-frequency region [25, 26, 27]. The transfer function of the FIR filter in the z-domain is [26]

$$H(z) = 1 - \alpha z^{-1}, \quad 0 \le \alpha \le 1 \qquad (2)$$

where $\alpha$ is the pre-emphasis parameter.
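In the time domain, a first-order FIR pre-emphasis filter of the common form H(z) = 1 - α z⁻¹ corresponds to the difference equation y[n] = x[n] - α x[n-1]. A minimal sketch follows. The default `alpha=0.95` and the choice to treat the sample before the first one as zero are assumptions, since the paper does not state the value it used.

```python
def pre_emphasis(signal, alpha=0.95):
    """Apply y[n] = x[n] - alpha * x[n-1] to a list of samples."""
    if not signal:
        return []
    out = [signal[0]]  # x[-1] is assumed to be 0
    for n in range(1, len(signal)):
        out.append(signal[n] - alpha * signal[n - 1])
    return out
```

A constant (low-frequency) input is strongly attenuated after the first sample, while rapid sample-to-sample changes pass through, which is the high-frequency boost described in the text.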

Frame blocking is performed with an overlap of 25 % to 75 % of the frame size. Typically, a frame length of 10-30 milliseconds is used. The purpose of the overlapping analysis is that each speech sound of the input sequence is approximately centered in some frame [28, 29].
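The frame blocking step can be sketched as follows. At the 11025 Hz sampling rate used here, a 10-30 ms frame spans roughly 110 to 330 samples. The function name, the default frame length of 20 ms, the default 50 % overlap and the decision to drop a trailing partial frame are assumptions for illustration only.

```python
def frame_signal(signal, sample_rate, frame_ms=20.0, overlap=0.5):
    """Split `signal` into overlapping frames.

    `overlap` is the fraction of the frame shared with the next frame
    (0.25 to 0.75 in the range quoted in the text).
    """
    frame_len = int(round(sample_rate * frame_ms / 1000.0))
    hop = max(1, int(round(frame_len * (1.0 - overlap))))
    frames = []
    # Trailing samples that do not fill a whole frame are dropped.
    for start in range(0, len(signal) - frame_len + 1, hop):
        frames.append(signal[start:start + frame_len])
    return frames
```

With 50 % overlap, every sample away from the signal edges falls inside two consecutive frames, so a short speech sound near a frame boundary is close to the center of the neighbouring frame.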

Among the different windowing techniques, the Hamming window is used in this system. The purpose of windowing is to reduce the effect of the spectral artifacts that result from the framing process [30, 31, 32]. The Hamming window can be defined as follows [33]:

$$w(n) = \begin{cases} 0.54 + 0.46 \cos\left(\dfrac{2\pi n}{N}\right), & -\dfrac{N-1}{2} \le n \le \dfrac{N-1}{2} \\ 0, & \text{otherwise} \end{cases} \qquad (3)$$
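A small sketch of applying a Hamming window to one frame is given below. It assumes the centered form of the window, w(m) = 0.54 + 0.46 cos(2πm/N) for m between -(N-1)/2 and (N-1)/2, so that the taper peaks in the middle of the frame. Many libraries use the equivalent 0-based form with a minus sign and a denominator of N-1, so the exact values may differ slightly from those produced by the toolboxes used in the paper.

```python
import math


def hamming_window(N):
    """Centered Hamming window of length N (peak value 1.0 at the center)."""
    half = (N - 1) / 2.0
    # (n - half) maps the list index n = 0..N-1 to m = -(N-1)/2..(N-1)/2.
    return [0.54 + 0.46 * math.cos(2.0 * math.pi * (n - half) / N) for n in range(N)]


def apply_window(frame):
    """Multiply a frame sample-by-sample with a Hamming window of the same length."""
    window = hamming_window(len(frame))
    return [x * w for x, w in zip(frame, window)]
```

Tapering the frame edges toward small values reduces the discontinuities introduced by cutting the signal into frames.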

4. Speech Parameterization Techniques for Speaker Identification

This stage is very important in an automatic speaker identification system (ASIS) because the quality of speaker modeling and pattern matching strongly depends on the quality of the feature extraction methods. For the proposed ASIS, different speech feature extraction methods [34, 35, 36, 37, 38, 39], namely RCC, MFCC, ΔMFCC, ΔΔMFCC, LPC and LPCC, are applied.
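To make two of the listed feature types concrete, the sketch below computes LPC coefficients for one windowed frame with the autocorrelation method and the Levinson-Durbin recursion, then converts them to LPCC with the standard cepstral recursion. This is only one common way to obtain these features. The paper relied on Matlab toolboxes and does not give its exact procedure, so the function names, the predictor sign convention (x̂[n] = Σ a_k x[n-k]) and the choice of as many cepstral coefficients as LPC coefficients are assumptions.

```python
def autocorrelation(frame, max_lag):
    """Biased autocorrelation r[0..max_lag] of one frame."""
    n = len(frame)
    return [sum(frame[t] * frame[t + lag] for t in range(n - lag))
            for lag in range(max_lag + 1)]


def lpc(frame, order):
    """Return (predictor coefficients a_1..a_p, residual energy) via Levinson-Durbin."""
    r = autocorrelation(frame, order)
    a = [0.0] * (order + 1)  # a[0] is unused
    err = r[0]
    for i in range(1, order + 1):
        if err <= 0.0:  # silent or perfectly predictable frame: stop early
            break
        acc = r[i] - sum(a[k] * r[i - k] for k in range(1, i))
        k_i = acc / err  # reflection coefficient
        new_a = a[:]
        new_a[i] = k_i
        for k in range(1, i):
            new_a[k] = a[k] - k_i * a[i - k]
        a = new_a
        err *= 1.0 - k_i * k_i
    return a[1:], err


def lpcc(a):
    """LPC cepstral coefficients c_1..c_p from predictor coefficients a_1..a_p."""
    c = []
    for n in range(1, len(a) + 1):
        acc = a[n - 1]
        for k in range(1, n):
            acc += (k / n) * c[k - 1] * a[n - k - 1]
        c.append(acc)
    return c
```

Each frame then yields one fixed-length feature vector, for example `lpcc(lpc(frame, 12)[0])`, where the order 12 is purely illustrative.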

5. Training and Testing Model for Speaker Identification

Fig. 2 shows the working process of the Neuro-Genetic hybrid system [40, 41, 42]. The structure of the multilayer neural network does not matter to the genetic algorithm (GA) as long as the parameters of the back-propagation network (BPN) are mapped correctly to the genes of the chromosome that the GA is optimizing. Basically, each gene represents the value of a certain weight in the BPN, and the chromosome is a vector that contains these values such that each weight corresponds to a fixed position in the vector, as shown in Fig. 2.
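The mapping between network weights and chromosome positions can be sketched as below for a network with l inputs, m hidden nodes and n outputs. The row-by-row ordering and the omission of bias terms are assumptions chosen for brevity. Any ordering works as long as encoding and decoding agree on it.

```python
def encode_weights(w_hidden, w_output):
    """Flatten the two weight matrices (lists of rows) into one chromosome."""
    return ([w for row in w_hidden for w in row]
            + [w for row in w_output for w in row])


def decode_weights(chromosome, l, m, n):
    """Rebuild the m-by-l hidden matrix and n-by-m output matrix from a chromosome."""
    expected = m * l + n * m
    if len(chromosome) != expected:
        raise ValueError("chromosome length %d, expected %d" % (len(chromosome), expected))
    w_hidden = [chromosome[j * l:(j + 1) * l] for j in range(m)]
    offset = m * l
    w_output = [chromosome[offset + k * m:offset + (k + 1) * m] for k in range(n)]
    return w_hidden, w_output
```

Because every weight keeps a fixed index, crossover and mutation can operate on the flat vector without any knowledge of the network layout.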

The fitness function can be derived from the identification error of the BPN on the set of utterances used for training. The GA searches for parameter values that minimize the fitness function; thus the identification error of the BPN is reduced and the identification rate is maximized [43].


Fig. 2 Learning and recognition model for the Neuro-Genetic hybrid system.

The algorithms for Neuro-Genetic weight determination and for the fitness function [44] are as follows:

Algorithm for Neuro-Genetic Weight determination:

{

  $i \leftarrow 0$;

  Generate the initial population $P_i$ of real-coded chromosomes $C_i^j$, each representing a weight set for the BPN;

  Generate fitness values $F_i^j$ for each $C_i^j \in P_i$ using the algorithm FITGEN();

  While the current population $P_i$ has not converged

  {

    Using the crossover mechanism, reproduce offspring from the parent chromosomes and perform mutation on the offspring;

    $i \leftarrow i + 1$;

    Call the current population $P_i$;

    Calculate fitness values $F_i^j$ for each $C_i^j \in P_i$ using the algorithm FITGEN();

  }

  Extract weights from $P_i$ to be used by the BPN;

}
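The weight determination loop can be sketched in Python as a small real-coded GA that minimizes a fitness function supplied by the caller. Several details are assumptions not stated in the paper: tournament selection, single-point crossover, Gaussian mutation, keeping the best chromosome unchanged in each generation, a fixed generation count standing in for the convergence test, and all default parameter values except `generations=15`, which mirrors the best value reported in Section 6.2.

```python
import random


def run_ga(fitness, n_genes, pop_size=20, generations=15,
           mutation_rate=0.1, sigma=0.3, seed=0):
    """Return the chromosome with the lowest fitness found."""
    rng = random.Random(seed)
    # Initial population of real-coded chromosomes.
    population = [[rng.uniform(-1.0, 1.0) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    best = min(population, key=fitness)
    for _ in range(generations):
        offspring = [best[:]]  # keep the current best unchanged (assumption)
        while len(offspring) < pop_size:
            # Tournament selection of two parents (assumption).
            p1 = min(rng.sample(population, 2), key=fitness)
            p2 = min(rng.sample(population, 2), key=fitness)
            # Single-point crossover.
            cut = rng.randint(1, n_genes - 1) if n_genes > 1 else 0
            child = p1[:cut] + p2[cut:]
            # Gaussian mutation applied gene by gene.
            child = [g + rng.gauss(0.0, sigma) if rng.random() < mutation_rate else g
                     for g in child]
            offspring.append(child)
        population = offspring
        best = min(population, key=fitness)
    return best
```

In the hybrid system, `fitness` would be the FITGEN() error of the BPN for a given weight vector, and the returned chromosome would be decoded back into network weights.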

Algorithm for FITGEN():

{

  Let $(I_i, T_i)$, $i = 1, 2, \ldots, N$, where $I_i = (I_{1i}, I_{2i}, \ldots, I_{li})$ and $T_i = (T_{1i}, T_{2i}, \ldots, T_{ni})$, represent the input-output pairs of the problem to be solved by the BPN with a configuration l-m-n.

  For each chromosome $C_i$, $i = 1, 2, \ldots, P$:

  {

    Extract weights $W_i$ from $C_i$;

    Keeping $W_i$ as a fixed weight setting, train the BPN for the N input instances (patterns);

    Calculate the error $E_i$ for each of the input instances using the formula:

    $$E_i = \sum_j (T_{ji} - O_{ji})^2 \qquad (4)$$

    where $O_i$ is the output vector calculated by the BPN;

    Find the root mean square $E$ of the errors $E_i$, $i = 1, 2, \ldots, N$, i.e.

    $$E = \sqrt{\frac{\sum_i E_i}{N}} \qquad (5)$$

    Now set the fitness value $F_i$ of the individual string of the population as $F_i = E$;

  }

  Output $F_i$ for each $C_i$, $i = 1, 2, \ldots, P$;

}
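A minimal sketch of the FITGEN() error computation for a single chromosome is shown below. It interprets "keeping the weights fixed" as a forward pass through an l-m-n network, sums the squared output errors per pattern, and returns the root mean square over the N patterns as the fitness. The sigmoid activation, the absence of bias terms and the flat weight layout (hidden weights first, then output weights) are assumptions made for the example.

```python
import math


def sigmoid(x):
    if x < -700.0:  # guard against overflow in math.exp
        return 0.0
    return 1.0 / (1.0 + math.exp(-x))


def forward(chromosome, l, m, n, inputs):
    """Forward pass of an l-m-n network whose weights are stored in `chromosome`."""
    hidden = [sigmoid(sum(chromosome[j * l + i] * inputs[i] for i in range(l)))
              for j in range(m)]
    offset = m * l
    return [sigmoid(sum(chromosome[offset + k * m + j] * hidden[j] for j in range(m)))
            for k in range(n)]


def fitgen(chromosome, l, m, n, patterns):
    """Fitness of one chromosome: RMS of the per-pattern squared errors.

    `patterns` is a list of (input_vector, target_vector) pairs.
    """
    total = 0.0
    for inputs, targets in patterns:
        outputs = forward(chromosome, l, m, n, inputs)
        total += sum((t - o) ** 2 for t, o in zip(targets, outputs))
    return math.sqrt(total / len(patterns))
```

A lower return value means the weight set reproduces the training targets more closely, which is why the GA minimizes this quantity.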

6. Optimum Parameter Selection for the BPN and GA

6.1 Parameter Selection on the BPN

Some critical parameters of the Neuro-Genetic hybrid system affect the performance of the proposed system: in the BPN, the gain term, the speed factor and the number of hidden layer nodes; in the GA, the crossover rate and the number of generations. A trade-off is made to explore the optimal values of these parameters, and experiments are performed using those values. The optimal values are chosen carefully and the identification rate is then determined.
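The experiments in this section vary one parameter at a time while the others stay fixed. That procedure can be sketched as follows. The `evaluate` callback is hypothetical: it stands for training and testing the system with a given parameter set and returning the identification rate in percent, and the parameter names used as dictionary keys are likewise illustrative.

```python
def sweep_parameter(evaluate, base_params, name, candidates):
    """Vary one parameter, hold the rest fixed, and report the best setting.

    Returns (best_value, best_rate, results), where `results` is a list of
    (value, rate) pairs in the order tried.
    """
    results = []
    for value in candidates:
        params = dict(base_params, **{name: value})  # copy with one entry replaced
        results.append((value, evaluate(params)))
    best_value, best_rate = max(results, key=lambda item: item[1])
    return best_value, best_rate, results
```

The best value found for one parameter can then be written into `base_params` before the next parameter is swept. A one-at-a-time search like this does not explore interactions between parameters.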

6.1.1 Experiment on the Gain Term, η

In the BPN, during the training session, when the gain term was set to $\eta_1 = \eta_2 = 0.4$, the speed factor to $k_1 = k_2 = 0.20$ and the tolerable error rate was fixed at 0.001 %, the highest identification rate of 91 % was achieved, as shown in Fig. 3.

Fig. 3 Performance measurement according to gain term.

6.1.2 Experiment on the Speed Factor, k

The performance of the BPN was measured as a function of the speed factor, k. We set $\eta_1 = \eta_2 = 0.4$ and fixed the tolerable error rate at 0.001 %. We studied values of the parameter ranging from 0.1 to 0.5 and found that the highest recognition rate was 93 % at $k_1 = k_2 = 0.15$, as shown in Fig. 4.


Fig. 4 Performance measurement according to the speed factor.

6.1.3 Experiment on the Number of Nodes in Hidden Layer, $N_H$

In the learning phase of the BPN, the number of hidden layer nodes was varied in the range from 5 to 40. We set $\eta_1 = \eta_2 = 0.4$ and $k_1 = k_2 = 0.15$, and fixed the tolerable error rate at 0.001 %. The highest recognition rate of 94 % was achieved at $N_H = 30$, as shown in Fig. 5.

Fig. 5 Results after setting up the number of internal nodes in BPN.

6.2 Parameter Selection on the GA

To find the optimum values, different parameters of the genetic algorithm were also varied. The results of the experiments are shown below.

6.2.1 Experiment on the Crossover Rate

In this experiment, the crossover rate was varied over the values 1, 2, 5, 7, 8 and 10. The highest speaker identification rate of 93 % was found at crossover point 5, as shown in Fig. 6.
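The text does not say exactly what the values 1 to 10 denote. One possible reading, given the phrase "crossover point 5", is the number of cut points used in a multi-point crossover. Under that assumption only, a k-point crossover on two equal-length chromosomes can be sketched as follows. The function name and the `rng` parameter are illustrative.

```python
import random


def k_point_crossover(parent_a, parent_b, k, rng=random):
    """Exchange alternating segments of two parents at k distinct random cut points."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same length")
    n = len(parent_a)
    if not 1 <= k <= n - 1:
        raise ValueError("k must be between 1 and len(parent) - 1")
    cuts = sorted(rng.sample(range(1, n), k))
    child_a, child_b = [], []
    src_a, src_b = parent_a, parent_b
    prev = 0
    for cut in cuts + [n]:
        child_a.extend(src_a[prev:cut])
        child_b.extend(src_b[prev:cut])
        src_a, src_b = src_b, src_a  # switch source parent after every cut
        prev = cut
    return child_a, child_b
```

With k = 5, each child is assembled from six alternating segments of the two parents.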

Fig. 6 Performance measurement according to the crossover rate.

6.2.2 Experiment on the Number of Generations

Different numbers of generations were tested to find the optimum. The test results are shown in Fig. 7. The maximum identification rate of 95 % was found at 15 generations.

Fig.7 Accuracy measurement according to the no. of generations.

7. Performance Measurement of the Text-Dependent Speaker Identification System

The VALID speech database [45] was used to measure the performance of the proposed hybrid system. In the learning phase, studio-recorded speech utterances were used to build the reference models; in the testing phase, speech utterances recorded in four different office conditions were used to measure the performance of the proposed Neuro-Genetic hybrid system. The performance of the proposed system was measured for various cepstral-based features, namely LPC, LPCC, RCC, MFCC, ΔMFCC and ΔΔMFCC, as shown in the following table.

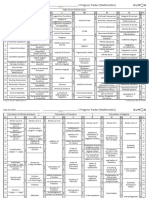

Table 1: Speaker identification rate (%) for VALID speech corpus

| Type of environments | MFCC | ΔMFCC | ΔΔMFCC | RCC | LPCC |
|---|---|---|---|---|---|
| Clean speech utterances | 100.00 | 100.00 | 98.23 | 90.43 | 100.00 |
| Office environments speech utterances | 80.17 | 82.33 | 68.89 | 70.33 | 76.00 |

Table 1 shows the overall average speaker identification rate for the VALID speech corpus. From the table, the performance of the MFCC, ΔMFCC, ΔΔMFCC, RCC and LPCC methods can be compared for the Neuro-Genetic hybrid algorithm based text-dependent speaker identification system. In the clean speech environment, the performance is 100.00 % for MFCC, ΔMFCC and LPCC, and the highest identification rate for the four different office environments (82.33 %) is achieved with ΔMFCC.
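For reference, a closed-set identification rate of the kind reported in Table 1 is the percentage of test utterances assigned to the correct enrolled speaker. A minimal sketch follows. It assumes each test utterance yields one score per enrolled speaker (for example, the network outputs) and that the highest score decides the identity. The function names and the score representation are illustrative, not taken from the paper.

```python
def identify(scores):
    """Closed-set decision: index of the enrolled speaker with the highest score."""
    return max(range(len(scores)), key=lambda i: scores[i])


def identification_rate(score_rows, true_speakers):
    """Percentage of utterances whose top-scoring speaker is the true speaker."""
    correct = sum(1 for scores, truth in zip(score_rows, true_speakers)
                  if identify(scores) == truth)
    return 100.0 * correct / len(true_speakers)
```

Because the decision is closed-set, every utterance is attributed to some enrolled speaker. There is no "unknown speaker" outcome.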

8. Conclusion and Observations

The experimental results show the versatility of the Neuro-Genetic hybrid algorithm based text-dependent speaker identification system. Critical parameters such as the gain term, the speed factor, the number of hidden layer nodes, the crossover rate and the number of generations have a great impact on the recognition performance of the proposed system. The optimum values of these parameters were selected to obtain the best performance. The highest recognition rates in the BPN and GA parameter experiments were 94 % and 95 %, respectively. On the VALID speech database, the Neuro-Genetic hybrid system achieved an identification rate of 100 % in the clean environment and 82.33 % in office environment conditions. Therefore, the proposed system can be used for various security and access control purposes. Finally, the performance of the proposed system could be evaluated further on larger speech databases.

References

[1] A. Jain, R. Bolle, S. Pankanti, Biometrics: Personal Identification in Networked Society, Kluwer Academic Press, Boston, 1999.

[2] Rabiner, L., and Juang, B.-H., Fundamentals of Speech Recognition, Prentice Hall, Englewood Cliffs, New Jersey, 1993.

[3] Jacobsen, J. D., Probabilistic Speech Detection, Informatics and Mathematical Modeling, DTU, 2003.

[4] Jain, A., Duin, R.P.W., and Mao, J., Statistical pattern recognition: a review, IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol. 22, 2000, pp. 4-37.

[5] Davis, S., and Mermelstein, P., Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences, IEEE Transactions on Acoustics, Speech, and Signal Processing, Vol. 28, No. 4, 1980, pp. 357-366.

[6] Sadaoki Furui, 50 Years of Progress in Speech and Speaker Recognition Research, ECTI Transactions on Computer and Information Technology, Vol. 1, No. 2, 2005.

[7] Lockwood, P., Boudy, J., and Blanchet, M., Non-linear spectral subtraction (NSS) and hidden Markov models for robust speech recognition in car noise environments, IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 1992, Vol. 1, pp. 265-268.

[8] Matsui, T., and Furui, S., Comparison of text-independent speaker recognition methods using VQ-distortion and discrete/continuous HMMs, IEEE Transactions on Speech and Audio Processing, No. 2, 1994, pp. 456-459.

[9] Reynolds, D.A., Experimental evaluation of features for robust speaker identification, IEEE Transactions on Speech and Audio Processing, Vol. 2, 1994, pp. 639-643.

[10] Sharma, S., Ellis, D., Kajarekar, S., Jain, P. and Hermansky, H., Feature extraction using non-linear transformation for robust speech recognition on the Aurora database, in Proc. ICASSP2000, 2000.

[11] Wu, D., Morris, A.C. and Koreman, J., MLP Internal Representation as Discriminant Features for Improved Speaker Recognition, in Proc. NOLISP2005, Barcelona, Spain, 2005, pp. 25-33.

[12] Konig, Y., Heck, L., Weintraub, M. and Sonmez, K., Nonlinear discriminant feature extraction for robust text-independent speaker recognition, in Proc. RLA2C, ESCA workshop on Speaker Recognition and its Commercial and Forensic Applications, 1998, pp. 72-75.

[13] Ismail Shahin, Improving Speaker Identification Performance Under the Shouted Talking Condition Using the Second-Order Hidden Markov Models, EURASIP Journal on Applied Signal Processing 2005:4, pp. 482-486.

[14] S. E. Bou-Ghazale and J. H. L. Hansen, A comparative study of traditional and newly proposed features for recognition of speech under stress, IEEE Trans. Speech and Audio Processing, Vol. 8, No. 4, 2000, pp. 429-442.

[15] G. Zhou, J. H. L. Hansen, and J. F. Kaiser, Nonlinear feature based classification of speech under stress, IEEE Trans. Speech and Audio Processing, Vol. 9, No. 3, 2001, pp. 201-216.

[16] Simon Doclo and Marc Moonen, On the Output SNR of the Speech-Distortion Weighted Multichannel Wiener Filter, IEEE Signal Processing Letters, Vol. 12, No. 12, 2005.

[17] Wiener, N., Extrapolation, Interpolation and Smoothing of Stationary Time Series with Engineering Applications, Wiley, New York, 1949.

[18] Wiener, N., Paley, R. E. A. C., Fourier Transforms in the Complex Domain, American Mathematical Society, Providence, RI, 1934.

[19] Koji Kitayama, Masataka Goto, Katunobu Itou and Tetsunori Kobayashi, Speech Starter: Noise-Robust Endpoint Detection by Using Filled Pauses, Eurospeech 2003, Geneva, pp. 1237-1240.

[20] S. E. Bou-Ghazale and K. Assaleh, A robust endpoint detection of speech for noisy environments with application to automatic speech recognition, in Proc. ICASSP2002, 2002, Vol. 4, pp. 3808-3811.

[21] A. Martin, D. Charlet, and L. Mauuary, Robust speech / non-speech detection using LDA applied to MFCC, in Proc. ICASSP2001, 2001, Vol. 1, pp. 237-240.


[22] Richard O. Duda, Peter E. Hart, David G. Stork, Pattern Classification, Second Edition, Wiley-Interscience, John Wiley & Sons, Inc., 2001.

[23] Sarma, V., Venugopal, D., Studies on pattern recognition approach to voiced-unvoiced-silence classification, IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP '78), 1978, Vol. 3, pp. 1-4.

[24] Qi Li, Jinsong Zheng, Augustine Tsai, Qiru Zhou, Robust Endpoint Detection and Energy Normalization for Real-Time Speech and Speaker Recognition, IEEE Transactions on Speech and Audio Processing, Vol. 10, No. 3, 2002.

[25] Harrington, J., and Cassidy, S., Techniques in Speech Acoustics, Kluwer Academic Publishers, Dordrecht, 1999.

[26] Makhoul, J., Linear prediction: a tutorial review, in Proceedings of the IEEE, Vol. 64, No. 4, 1975, pp. 561-580.

[27] Picone, J., Signal modeling techniques in speech recognition, in Proceedings of the IEEE, Vol. 81, No. 9, 1993, pp. 1215-1247.

[28] Claudio Becchetti and Lucio Prina Ricotti, Speech Recognition: Theory and C++ Implementation, John Wiley & Sons Ltd., 1999, pp. 124-136.

[29] L.P. Cordella, P. Foggia, C. Sansone, M. Vento, A Real-Time Text-Independent Speaker Identification System, in Proceedings of the 12th International Conference on Image Analysis and Processing, 2003, IEEE Computer Society Press, Mantova, Italy, pp. 632-637.

[30] J. R. Deller, J. G. Proakis, and J. H. L. Hansen, Discrete-Time Processing of Speech Signals, Macmillan, 1993.

[31] F. Owens, Signal Processing of Speech, Macmillan New Electronics, Macmillan, 1993.

[32] F. Harris, On the use of windows for harmonic analysis with the discrete Fourier transform, in Proceedings of the IEEE, Vol. 66, No. 1, 1978, pp. 51-84.

[33] J. Proakis and D. Manolakis, Digital Signal Processing: Principles, Algorithms and Applications, Second Edition, Macmillan Publishing Company, New York, 1992.

[34] D. Kewley-Port and Y. Zheng, Auditory models of formant frequency discrimination for isolated vowels, Journal of the Acoustical Society of America, 103(3), 1998, pp. 1654-1666.

[35] D. O'Shaughnessy, Speech Communication - Human and Machine, Addison Wesley, 1987.

[36] E. Zwicker, Subdivision of the audible frequency band into critical bands (Frequenzgruppen), Journal of the Acoustical Society of America, 33, 1961, pp. 248-260.

[37] S. Davis and P. Mermelstein, Comparison of parametric representations for monosyllabic word recognition in continuously spoken sentences, IEEE Transactions on Acoustics, Speech and Signal Processing, 28, 1980, pp. 357-366.

[38] S. Furui, Speaker independent isolated word recognition using dynamic features of the speech spectrum, IEEE Transactions on Acoustics, Speech and Signal Processing, 34, 1986, pp. 52-59.

[39] S. Furui, Speaker-Dependent-Feature Extraction, Recognition and Processing Techniques, Speech Communication, Vol. 10, 1991, pp. 505-520.

[40] Siddique, M. and Tokhi, M., Training Neural Networks: Back Propagation vs. Genetic Algorithms, in Proceedings of the International Joint Conference on Neural Networks, Washington D.C., USA, 2001, pp. 2673-2678.

[41] Whitley, D., Applying Genetic Algorithms to Neural Networks Learning, in Proceedings of the Conference of the Society of Artificial Intelligence and Simulation of Behavior, England, Pitman Publishing, Sussex, 1989, pp. 137-144.

[42] Whitley, D., Starkweather, T. and Bogart, C., Genetic Algorithms and Neural Networks: Optimizing Connection and Connectivity, Parallel Computing, Vol. 14, 1990, pp. 347-361.

[43] Kresimir Delac, Mislav Grgic and Marian Stewart Bartlett, Recent Advances in Face Recognition, I-Tech Education and Publishing KG, Vienna, Austria, 2008, pp. 223-246.

[44] Rajasekaran, S. and Vijayalakshmi Pai, G.A., Neural Networks, Fuzzy Logic, and Genetic Algorithms - Synthesis and Applications, Prentice-Hall of India Private Limited, New Delhi, India, 2003.

[45] N. A. Fox, B. A. O'Mullane and R. B. Reilly, The Realistic Multi-modal VALID database and Visual Speaker Identification Comparison Experiments, in Proc. of the 5th International Conference on Audio- and Video-Based Biometric Person Authentication (AVBPA-2005), New York, 2005.

Md. Rabiul Islam was born in Rajshahi, Bangladesh, on December 26, 1981. He received his B.Sc. degree in Computer Science & Engineering and his M.Sc. degree in Electrical & Electronic Engineering in 2004 and 2008, respectively, from Rajshahi University of Engineering & Technology, Bangladesh. From 2005 to 2008, he was a Lecturer in the Department of Computer Science & Engineering at Rajshahi University of Engineering & Technology. Since 2008, he has been an Assistant Professor in the Computer Science & Engineering Department, Rajshahi University of Engineering & Technology, Bangladesh. His research interests include bio-informatics, human-computer interaction, and speaker identification and authentication under neutral and noisy environments.

Md. Fayzur Rahman was born in 1960 in Thakurgaon, Bangladesh. He received the B.Sc. Engineering degree in Electrical & Electronic Engineering from Rajshahi Engineering College, Bangladesh, in 1984 and the M.Tech. degree in Industrial Electronics from S. J. College of Engineering, Mysore, India, in 1992. He received the Ph.D. degree in energy and environment electromagnetic from Yeungnam University, South Korea, in 2000. Following his graduation, he rejoined his previous post at BIT Rajshahi. He is a Professor of Electrical & Electronic Engineering at Rajshahi University of Engineering & Technology (RUET). He is currently engaged in education in the areas of Electronics & Machine Control and Digital Signal Processing. He is a member of the Institution of Engineers (IEB), Bangladesh, the Korean Institute of Illuminating and Installation Engineers (KIIEE), and the Korean Institute of Electrical Engineers (KIEE), Korea.


- Voice Activation Using Speaker Recognition For Controlling Humanoid RobotDocument6 pagesVoice Activation Using Speaker Recognition For Controlling Humanoid RobotDyah Ayu AnggreiniNo ratings yet

- Transformer Embedded With Learnable Filters For Heart Murmur DetectionDocument4 pagesTransformer Embedded With Learnable Filters For Heart Murmur DetectionDalana PasinduNo ratings yet

- Confusion Matrix Validation of An Urdu Language Speech Number SystemDocument7 pagesConfusion Matrix Validation of An Urdu Language Speech Number SystemhekhtiyarNo ratings yet

- Some Case Studies on Signal, Audio and Image Processing Using MatlabFrom EverandSome Case Studies on Signal, Audio and Image Processing Using MatlabNo ratings yet

- Automatic Target Recognition: Advances in Computer Vision Techniques for Target RecognitionFrom EverandAutomatic Target Recognition: Advances in Computer Vision Techniques for Target RecognitionNo ratings yet
