Chapter 8

Memory Management

Abstract.

Until this chapter, memory has always been considered as a one-dimensional homogeneous array, possibly subdivided into segments, which are also one-dimensional homogeneous arrays. Memory technology, however, results in very large differences in cost as a function of access time. This is one of the primary reasons why actual memories have a much more complex organization, allowing frequently needed data to reside in very fast memories while seldom used data is stored in very cheap memories. In this chapter, various techniques to organize heterogeneous memories will be discussed. In addition to being economically attractive, these techniques can also significantly increase the versatility of computers.

8.1. Memory hierarchies.

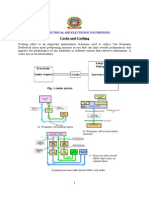

As shown in fig.8.1, the cost of memories is strongly influenced by their access time. One can distinguish two different categories of memories: fast and expensive on the one hand, slow and cheap on the other. Access times as well as costs differ between these categories by several orders of magnitude. This results from entirely different technologies: the fast memories store their information in transistors, while the slow memories use moving magnetic or optical storage devices such as magnetic or optical disks. Within both categories, access times also significantly influence the cost: memory chips with 10 ns access time are much more expensive than those requiring 100 ns or more, and magnetic disks, with access times in the order of tens of ms, are more expensive than optical disks, which have access times in the range of hundreds of ms. As a consequence, in order to optimize the overall price/performance ratio, the memory of modern computers has evolved towards a complex hierarchy of memories with different access times. Fig.8.2 shows such a hierarchy.

Which of the levels of the hierarchy should be visible to the programmer and which should be managed transparently by the hardware or the operating system remains a question of personal preference. Some designers have opted for hiding all of the hierarchy, allowing application programmers to view the whole memory as a one-dimensional array, while the vast majority tend to make three main levels visible: the registers, the central memory and part of the peripheral storage devices. In this last approach, the cache memories between the registers and the central memory and between the central memory and the disks are transparent to the programmer; part of the peripheral memories is transparently used as an extension of central memory (this is often called "virtual memory"), but another part can be accessed explicitly by the programmers. In large professionally operated computer centers, the hierarchy of explicitly accessible peripheral memories is managed in a transparent way in order to relieve the users of making back-up copies and keeping archives.

J.Tiberghien - Computer Systems - Printed: 29/09/2014

Fig. 8.1. Memory Access-Time vs. Cost. (Figure: relative cost per bit, from 1 to 1000, plotted against access time, from $10^{-8}$ s to 1 s, for central memories and peripheral memories.)

Fig. 8.2. Memory Hierarchy. (Figure: registers, CPU cache, central memory, disk cache, disks, CD-ROM and archival stores, arranged by speed and by size (log scale); the lower levels are labelled peripheral memories.)

8.2. Central Memory Caches.

8.2.1. Principle.

In chapter 3, it was shown that access to central memory is a major performance bottleneck for sequential computers. This bottleneck has even been given a name: the Von Neumann Bottleneck. To minimize its effects, it is essential to reduce the access time of the central memory as much as possible. Ideally, the access time of central memory should be in the order of one or two processor clock ticks, but this would make central memory prohibitively expensive. Fortunately, successive memory accesses tend to be strongly clustered, so that it suffices to regularly load the most active clusters into a faster memory to reduce the apparent access time of central memory. The principle of a cache memory, located between the processor and the central memory, is shown in fig.8.3. Whenever, in a read cycle, the processor issues an address, it is presented both to the cache and to central memory. If a copy of the requested word is present in the cache, it is sent to the processor and the memory access is aborted; otherwise, the memory access is completed and, in addition, some words located next to the requested address are copied into the cache.

The performance of a cache memory is best described by its hit rate, which is the percentage of memory accesses that can be handled by the cache alone. In well-designed memory systems, hit rates as high as 90% are common.
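Since the address is presented to the cache and to central memory at the same time, a hit costs roughly one cache access and a miss roughly one central memory access. The sketch below computes the resulting average access time under that simplified model (it ignores the time needed to copy neighbouring words into the cache); the 10 ns and 100 ns figures are illustrative assumptions, not measurements.

```python
def effective_access_time(hit_rate: float, t_cache: float, t_memory: float) -> float:
    """Average access time when hits are served by the cache and misses by memory.

    Simplified model (an assumption): cache and memory are probed in parallel,
    so a miss costs one full memory access and nothing more.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * t_cache + (1.0 - hit_rate) * t_memory


# Illustrative values: 10 ns cache, 100 ns central memory, 90% hit rate.
print(effective_access_time(0.9, 10.0, 100.0))
```

With a 90% hit rate this gives about 19 ns, much closer to the cache access time than to the memory access time.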

To implement the described principle, several issues need to be solved. They will be discussed in the next sections.

Fig.8.3. Principle of a central memory cache. (Figure: the CPU connected both to the cache and to the memory.)

8.2.2. Cache organization.

The most critical issue for an efficient and cost-effective cache is the organization of the data to allow fast retrieval of stored items. Two extreme approaches are possible: one is very efficient but prohibitively expensive, while the other is much cheaper but potentially inefficient. Both approaches will be discussed and, thereafter, a compromise that is implemented in most modern computers will be presented.

Whatever the cache organization, it is based upon a subdivision of central memory into small pages of 4, 8 or 16 consecutive words. If a central memory address is $k$ bits long, and if a cache page contains $2^l$ words, each page is identified by the $k-l$ most significant bits of the addresses of its words. Whenever one of the words of a page is accessed, the whole page is copied into the cache, because the other words have a high probability of being accessed in the near future.
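A minimal sketch of this decomposition, assuming word addresses and illustrative sizes ($k = 16$, $l = 3$, i.e. pages of 8 words); `split_address` is a hypothetical helper name:

```python
def split_address(address: int, k: int, l: int) -> tuple[int, int]:
    """Split a k-bit word address into (page number, word number within the page).

    The page number is formed by the k - l most significant bits and the
    word number by the l least significant bits (pages of 2**l words).
    """
    if not 0 <= address < (1 << k):
        raise ValueError("address does not fit in k bits")
    page_number = address >> l
    word_in_page = address & ((1 << l) - 1)
    return page_number, word_in_page


# 16-bit addresses and pages of 2**3 = 8 words.
print(split_address(0x1234, k=16, l=3))
```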

8.2.2.1. Fully associative cache.

A fully associative cache has a large number of storage units, each of which can store any page, together with its number. When the processor issues an address, its page number part is compared simultaneously with the page numbers of all pages present in the cache (fig.8.4).

If one of the comparators recognizes the desired page, the requested word is fetched from the cache and sent to the processor; otherwise, the entire page is fetched from central memory and loaded into one of the storage units of the cache. The optimal strategy would be to load the new page into a unit containing a page that will never be accessed again. Unfortunately, this strategy cannot be implemented because the future behavior of programs is unknown. The next best strategy would be to replace the page that has not been accessed for the longest time, but this would require keeping a history of all cache accesses which, considering the speed of cache memories, would require a prohibitive amount of costly hardware. The best compromise from a price/performance point of view consists in systematically replacing the oldest page in the cache.
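The oldest-page policy can be illustrated with a small software model. `FullyAssociativeCache` is a hypothetical name, only page numbers are tracked, and a Python set stands in for the parallel comparators of the hardware:

```python
from collections import deque


class FullyAssociativeCache:
    """Toy fully associative cache replacing the oldest page (FIFO order).

    Only page numbers are tracked; the page contents are not modelled.
    A set stands in for the parallel comparators of the hardware.
    """

    def __init__(self, units: int):
        self.units = units      # number of storage units
        self.pages = set()      # page numbers currently in the cache
        self.order = deque()    # load order, oldest page on the left
        self.hits = 0
        self.misses = 0

    def access(self, page: int) -> bool:
        """Return True on a hit; on a miss, load the page, evicting the oldest one."""
        if page in self.pages:
            self.hits += 1
            return True
        self.misses += 1
        if len(self.pages) == self.units:
            self.pages.remove(self.order.popleft())
        self.pages.add(page)
        self.order.append(page)
        return False


cache = FullyAssociativeCache(units=2)
print([cache.access(p) for p in [1, 2, 1, 3, 1]])
```

The last access misses because page 1, although just used, was the oldest page in the cache; a least-recently-used policy would have replaced page 2 instead. This is the performance price paid for the cheaper replacement rule.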

Fig.8.4. Fully associative cache. (Figure: the page number of the address is compared ("=?") simultaneously with the page numbers of all stored pages; the word number within the page selects the data.)

Fully associative caches offer the best performance, but they require one comparator per stored page which, again considering the speed of cache memories, would be prohibitively expensive.

8.2.2.2. Fully mapped cache.

A fully mapped cache has $2^m$ storage units, each of which can store an entire page and the $k-m-l$ most significant bits of the page number. The $m$ least significant bits of the page number are called the set number and are used to select the storage unit where a specific page can be stored. As each page can be stored in just one storage unit, only one comparator is needed to check whether a page is present in the cache (fig.8.5).
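The field decomposition for a fully mapped cache (often called a direct-mapped cache) can be sketched as follows; the helper names and the sizes $k = 16$, $m = 4$, $l = 3$ are illustrative assumptions. A single comparison of the stored bits against the address decides between hit and miss:

```python
def fully_mapped_fields(address: int, k: int, m: int, l: int) -> tuple[int, int, int]:
    """Split a k-bit address for a cache of 2**m units holding pages of 2**l words.

    Returns (tag, set_number, word): the tag is the k - m - l most significant
    bits stored next to the page, the set number selects the only storage unit
    that may hold the page, and the word number selects a word in the page.
    """
    if not 0 <= address < (1 << k):
        raise ValueError("address does not fit in k bits")
    word = address & ((1 << l) - 1)
    page_number = address >> l
    set_number = page_number & ((1 << m) - 1)
    tag = page_number >> m
    return tag, set_number, word


def is_hit(stored_tags: list, address: int, k: int, m: int, l: int) -> bool:
    """One comparator: does the unit selected by the set number hold this tag?"""
    tag, set_number, _ = fully_mapped_fields(address, k, m, l)
    return stored_tags[set_number] == tag


tags = [None] * (1 << 4)    # 16 storage units, initially empty
tags[6] = 36                # unit 6 holds the page whose tag is 36
print(fully_mapped_fields(0x1234, k=16, m=4, l=3), is_hit(tags, 0x1234, 16, 4, 3))
```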

Fig.8.5. Fully mapped cache. (Figure: the set number selects a single storage unit, one comparator ("=?") checks the stored page number bits, and the word number within the page selects the data.)

Fully mapped caches are very cost-effective but are subject to severe performance problems: by chance, pages containing instructions and pages containing the data used by these instructions can belong to the same set, forcing the cache controller to continuously replace these pages by each other in a single storage unit (cache thrashing).

8.2.2.3. Set associative cache.

Even if some computers use fully mapped caches, most designers prefer a compromise between the performance of fully associative caches and the low cost of fully mapped caches: instead of one storage unit per set, they use two or four storage units per set, which requires two or four comparators (fig.8.6) but considerably reduces the risk of cache thrashing.
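The effect of a second storage unit per set can be shown with a toy simulation in which pages 5 and 21 (for instance an instruction page and the page holding its data) fall into the same set. `SetAssociativeCache` and `count_misses` are hypothetical names; `ways=1` models a fully mapped cache, and replacing the oldest page within a set is an assumption borrowed from the fully associative case:

```python
from collections import deque


class SetAssociativeCache:
    """Toy cache with 2**m sets of `ways` storage units (page numbers only).

    ways=1 models a fully mapped cache. Full page numbers are stored instead
    of the k-m-l tag bits, for simplicity. Replacing the oldest page of the
    set is an assumption.
    """

    def __init__(self, m: int, ways: int):
        self.m = m
        self.ways = ways
        self.sets = [deque() for _ in range(1 << m)]  # oldest page on the left
        self.misses = 0

    def access(self, page_number: int) -> bool:
        units = self.sets[page_number & ((1 << self.m) - 1)]  # set number: m low bits
        if page_number in units:        # at most `ways` comparisons
            return True
        self.misses += 1
        if len(units) == self.ways:
            units.popleft()             # evict the oldest page of this set
        units.append(page_number)
        return False


def count_misses(ways: int, pattern) -> int:
    cache = SetAssociativeCache(m=4, ways=ways)
    for page in pattern:
        cache.access(page)
    return cache.misses


# Pages 5 and 21 share set 5 when m = 4 (21 = 16 + 5); they are used alternately.
pattern = [5, 21] * 4
print(count_misses(1, pattern), count_misses(2, pattern))
```

With one unit per set every access misses (8 misses), which is the thrashing situation described above; with two units per set only the first two accesses miss.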

Fig.8.6. Set associative cache. (Figure: the set number selects a set of storage units, one comparator ("=?") per unit checks the stored page number bits, and the word number within the page selects the data.)

8.2.3. Cache consistency.

Memory writes could result in inconsistencies between the contents of central memory and the contents of the cache. Two approaches are used to prevent such inconsistencies: they are called write through and dirty bit, respectively.

In the write through approach, every write is performed simultaneously in central memory and in the cache. This does not cause a significant degradation of cache performance, as read operations are typically much more frequent than write operations.

In the dirty bit approach, write operations are restricted to the cache. A dirty bit is associated with each storage unit and is set whenever a write operation has modified the contents of the unit. Before a storage unit in the cache is overwritten by a new page, the dirty bit is checked and, if it is set, the contents of the storage unit are written back to central memory. This is more efficient than the write through approach, but it is more complex to manage in case of direct memory access and when processes terminate before all their pages have been removed from the cache.
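The difference in central memory traffic between the two approaches can be estimated with a small model. `memory_writes` is a hypothetical helper; each store under write through and each write-back of a page is counted as one memory write, and pages still dirty at the end of the trace are not counted:

```python
def memory_writes(trace, units: int, policy: str) -> int:
    """Count writes reaching central memory for a toy cache of `units` pages.

    trace: sequence of (op, page) pairs, op being "r" or "w".
    policy: "write_through" or "dirty_bit". The oldest page is replaced.
    Assumptions: one store or one page write-back counts as one memory write;
    pages still dirty at the end of the trace are not counted.
    """
    if policy not in ("write_through", "dirty_bit"):
        raise ValueError("unknown policy")
    cache = []      # entries [page, dirty], oldest first
    writes = 0
    for op, page in trace:
        entry = next((e for e in cache if e[0] == page), None)
        if entry is None:                       # miss: load the page
            if len(cache) == units:
                _, victim_dirty = cache.pop(0)
                if victim_dirty:
                    writes += 1                 # write the modified page back
            entry = [page, False]
            cache.append(entry)
        if op == "w":
            if policy == "write_through":
                writes += 1                     # memory updated on every store
            else:
                entry[1] = True                 # only set the dirty bit
    return writes


trace = [("w", 1)] * 5 + [("r", 2), ("r", 3)]
print(memory_writes(trace, 2, "write_through"), memory_writes(trace, 2, "dirty_bit"))
```

For five successive writes to the same page, write through produces 5 memory writes, while the dirty bit approach produces a single write-back when the page is finally replaced.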


8.2.4. Cache technology.

In older computers, cache memories were built with very fast RAM and associative memory chips. Progress in VLSI technology and in computer architecture has, in the last few years, resulted in caches being integrated with the processor on a single chip. This results in major performance benefits, as, in modern low-power digital electronics, speed inside a chip can be several times higher than what is attainable when the signals have to leave the chip. This difference is due to the parasitic capacitances that have to be charged or discharged at each state transition. Inside a chip these capacitances are very small, while outside a chip, due to the sockets and the printed interconnections, they can be orders of magnitude larger. Older processors tended to have very complex instruction sets, requiring very large ROMs for storing the microcode and leaving no room on the chip for caches. Computer architects in the eighties found out that eliminating seldom used instructions does not significantly affect overall performance, but frees silicon space that can be used for on-chip cache memories. This design philosophy underlies most modern Reduced Instruction Set Computers, such as the Hewlett Packard Precision Architecture, the Digital Equipment Alpha processor, the IBM RS6000 processors and the SUN Sparc.

8.3. Disk caches.

Exactly the same considerations can be developed about the data traffic between disk and central memory as those developed about the data traffic between central memory and the processor. The only differences reside in the time scale and the page size: central memory caches must be capable of improving upon the typical 100 ns central memory access times, while disk caches only have to improve upon the mechanically imposed access times of peripheral memories, which are at least in the 10 ms range. Central memory caches have to be implemented in hardware, preferably even on the processor chip, while disk caches can be implemented in software and can use a fully associative organization with a Least Recently Used replacement policy, without running into prohibitive cost issues. Pages used in central memory caches typically contain a few tens of bytes, while disk caches normally handle entire disk sectors or tracks, often containing more than 1000 bytes.
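Because a disk cache is managed in software, a true Least Recently Used policy is cheap to implement. A minimal sketch using Python's `collections.OrderedDict`; the `read_sector` callback and the 512-byte sectors are hypothetical stand-ins for a real disk driver:

```python
from collections import OrderedDict


class LRUDiskCache:
    """Software disk cache: fully associative, Least Recently Used replacement.

    `read_sector` is a hypothetical callback standing in for the disk driver:
    it receives a sector number and returns the sector's bytes.
    """

    def __init__(self, capacity: int, read_sector):
        self.capacity = capacity
        self.read_sector = read_sector
        self.sectors = OrderedDict()    # sector number -> data, least recent first
        self.disk_reads = 0

    def read(self, sector: int) -> bytes:
        if sector in self.sectors:
            self.sectors.move_to_end(sector)    # now the most recently used
            return self.sectors[sector]
        data = self.read_sector(sector)         # slow, mechanical access
        self.disk_reads += 1
        self.sectors[sector] = data
        if len(self.sectors) > self.capacity:
            self.sectors.popitem(last=False)    # evict the least recently used
        return data


def fake_disk(sector: int) -> bytes:
    """Hypothetical 512-byte sectors filled with the sector number."""
    return bytes([sector % 256]) * 512


cache = LRUDiskCache(capacity=2, read_sector=fake_disk)
for s in [1, 2, 1, 3, 1]:
    cache.read(s)
print(cache.disk_reads)
```

The reference string 1, 2, 1, 3, 1 causes only 3 disk reads here, because sector 1 is kept as the most recently used sector when sector 3 is loaded.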

The program SMARTDRV, which is standard in MS/DOS, is a good example of a disk cache implemented in software: it uses part of central memory to temporarily store data in transit between other parts of central memory and the disk. To be convinced of the benefits of a disk cache, the reader can (temporarily) remove the SMARTDRV command from the AUTOEXEC.BAT or CONFIG.SYS files and evaluate the performance of programs exchanging lots of data with the disk.

Some disk controllers have a built-in cache memory. Such disk caches generally use very large but very slow RAM memories and a simple 8-bit microprocessor for the cache management. As slow RAM chips are much cheaper than the high-performance RAM chips normally used for central memory, disk controllers with built-in caches can be more cost-effective than disk caches implemented entirely in software.

8.4. Memory management.

Memory management is a collective name for a whole set of hardware and software techniques that translate the addresses used by the application programs, called logical addresses, into physical addresses meaningful to the computer hardware (fig.8.7). The mapping of segmented addresses onto the physical data memory described in chapter 3 is one example of memory management but, as will be shown further in this chapter, other techniques exist and are used, both to make optimal use of memory hierarchies and to increase the versatility of computers.

Fig.8.7. Memory management. (Figure: the memory management system translates logical addresses into physical addresses.)

8.4.1. Memory segmentation.

In chapter 3, memory segmentation was introduced in the context of block-structured high-level languages, and it was shown that it considerably simplified the issues of dynamic allocation of memory space for variables and the enforcement of the scope rules. The concept has a much broader field of application: in any large system, the software should be subdivided into logically coherent modules, and using an independent addressing space for each such module can only contribute to better modularity.

When segmentation is available, a logical address has two parts: the $s$ most significant bits identify the segment (segid) and the $l$ least significant bits identify a specific address within the segment (offset). The maximum number of different segments is $2^s$ and the length of each segment cannot exceed $2^l$ words. The memory management system assigns to each segment a range of physical addresses, trying to make the best possible use of the available physical memory (fig.8.8).

Fig.8.8. Mapping of segments in physical memory. (Figure: segments a, b, c and a further segment placed at different locations in physical memory.)

The translation of segmented addresses into physical addresses uses a segment table. This table is an array, indexed by the segment identifier, which contains, for each segment, the physical address of the first word of the segment and, possibly, the actual length of the segment and some attributes, such as read only. In fact, the hardware display described in chapter 3 was a specialized, simplified version of a segment table.

A segmented address is translated into the corresponding physical address by looking up the start address in the segment table and adding the offset to it. If the segment table also contains the segment length and attributes, an interrupt can be generated if the offset exceeds the segment length or if the intended memory access conflicts with the segment attributes (fig.8.9).
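The translation and checks just described can be sketched as follows. The exception `SegmentFault` stands in for the interrupt, and the table layout (`SegmentEntry` with a base address, a length and a read-only flag) is an illustrative assumption. A Python dict, itself a hash table, is used for the segment table:

```python
from dataclasses import dataclass


class SegmentFault(Exception):
    """Stands in for the interrupt raised on a length or attribute violation."""


@dataclass
class SegmentEntry:
    base: int               # physical address of the first word of the segment
    length: int             # actual length of the segment, in words
    read_only: bool = False


def translate_segmented(table: dict, segid: int, offset: int, write: bool = False) -> int:
    """Physical address = segment start address + offset, after the checks."""
    entry = table.get(segid)
    if entry is None:
        raise SegmentFault(f"segment {segid} is not defined")
    if offset >= entry.length:
        raise SegmentFault(f"offset {offset} exceeds segment length {entry.length}")
    if write and entry.read_only:
        raise SegmentFault(f"write to read-only segment {segid}")
    return entry.base + offset


segment_table = {0: SegmentEntry(base=4096, length=1000),
                 7: SegmentEntry(base=20000, length=256, read_only=True)}
print(translate_segmented(segment_table, 7, 10))
```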

Fig.8.9. Segmented address translation. (Figure: the segment number of the logical address indexes the segment table, whose entries hold the segment address, the segment length and r/w attributes; the offset is added to the segment address, and a violation generates an interrupt.)

To make sure that programmers would never be restricted in their use of segments, many designers of modern processors have adopted segment identifiers with a fairly large number of bits (for instance, the Intel 386 family of processors can handle up to 16384 segments). When the programmer needs only a few segments, this would result in a very large, mostly unused segment table. To avoid such a waste of table space, the memory management system sometimes uses classical techniques for storing and retrieving sparse sets of data, such as AVL trees or hashing.

8.4.2. Memory pagination.

Pages are fixed-length blocks of contiguous memory words. In sections 8.2 and 8.3 it was shown that the page concept is central to the operation of cache memories. In this section, some other applications of the same concept will be introduced. It is, however, essential to realize that the small pages used in central memory caches are completely unrelated to the pages considered in this section. The pages of central memory caches typically contain 16 to 64 bytes, while the pages discussed here often contain 4 Kbytes (the latter, however, could be used in disk caches).

In a system with pagination, both the logical and the physical address spaces are subdivided into a certain number of equally sized pages. The $l$ least significant bits of an address define the offset within a page, while the most significant bits define the page number. The primary goal of pagination is to dissociate logical addresses from physical addresses by allowing an arbitrary mapping of logical pages onto physical pages (fig.8.10).

Fig.8.10. Memory mapping by means of pagination. (Figure: pages of logical memory mapped arbitrarily onto pages of physical memory.)

This mapping is done by means of a table similar to the segment table, the page table, which is an array indexed by the logical page number and containing the physical page number plus, possibly, some page attributes such as a read-only flag (fig.8.11).
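A minimal sketch of paginated translation with a flat page table, assuming the 4 Kbyte pages mentioned above (12 offset bits); the `(physical page, read_only)` entry format and the names used are hypothetical:

```python
PAGE_BITS = 12      # 4 Kbyte pages


class ProtectionFault(Exception):
    """Stands in for the interrupt raised on a forbidden access."""


def translate_paged(page_table: list, address: int, write: bool = False) -> int:
    """Map a logical address to a physical one through a flat page table.

    page_table[logical page] = (physical page, read_only); the offset within
    the page is copied unchanged.
    """
    logical_page = address >> PAGE_BITS
    offset = address & ((1 << PAGE_BITS) - 1)
    physical_page, read_only = page_table[logical_page]
    if write and read_only:
        raise ProtectionFault(f"write to read-only page {logical_page}")
    return (physical_page << PAGE_BITS) | offset


# Logical pages 0, 1 and 2 mapped onto arbitrary physical pages 9, 3 and 5.
table = [(9, False), (3, False), (5, True)]
print(hex(translate_paged(table, 0x1ABC)))
```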

Fig.8.11. Paginated address translation. (Figure: the page number of the logical address indexes the page table, whose entries hold r/w attributes and the physical page number; the physical page number is combined with the offset, and a violation generates an interrupt.)

In computers having very wide address fields, the size of the page table could become prohibitively large: for instance, 64-bit addresses with pages of 4 Kbytes would result in a page table with $2^{64-12} = 2^{52}$ entries. Such a table is obviously impossible to store but, one should also notice, it would be an almost empty table, because no program needs up to $2^{52}$ pages. Just as for large segment tables, the solution is to be found in the classical techniques to store and retrieve sparse sets of data, such as hashing or trees. Techniques based upon two or three successive layers of page tables, organized as a multiway tree, seem to be the most popular approach. The principle of a two-level page table is described in fig.8.12.
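The two-level principle can be sketched as follows. To keep the top-level table small, a 32-bit address split into 10 + 10 + 12 bits is assumed (not the 64-bit case of the text), and the class and method names are hypothetical; low-level tables are only created when a page in their range is mapped:

```python
OFFSET_BITS = 12    # 4 Kbyte pages
LOW_BITS = 10       # assumed: 10 bits index a low-level table
HIGH_BITS = 10      # assumed: 10 bits index the high-level table (32-bit addresses)


class PageFault(Exception):
    """Raised when no mapping exists for the requested logical page."""


class TwoLevelPageTable:
    """Two-level page table: low-level tables are only created when needed."""

    def __init__(self):
        self.high = [None] * (1 << HIGH_BITS)   # entries point to low-level tables

    @staticmethod
    def _split(logical_page: int) -> tuple[int, int]:
        return logical_page >> LOW_BITS, logical_page & ((1 << LOW_BITS) - 1)

    def map(self, logical_page: int, physical_page: int) -> None:
        high, low = self._split(logical_page)
        if self.high[high] is None:
            self.high[high] = [None] * (1 << LOW_BITS)
        self.high[high][low] = physical_page

    def translate(self, address: int) -> int:
        offset = address & ((1 << OFFSET_BITS) - 1)
        high, low = self._split(address >> OFFSET_BITS)
        table = self.high[high]
        if table is None or table[low] is None:
            raise PageFault(f"no mapping for address {address:#x}")
        return (table[low] << OFFSET_BITS) | offset

    def low_tables(self) -> int:
        return sum(t is not None for t in self.high)


pt = TwoLevelPageTable()
pt.map(0x00000, 7)      # first page of the address space
pt.map(0xFFFFF, 8)      # last page: a second low-level table is created
print(hex(pt.translate(0xFFFFF123)), pt.low_tables())
```

Mapping the first and the last page of the address space allocates only three tables of 1024 entries, instead of the $2^{20}$ entries of a flat table.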

Fig.8.12. Two-level page table. (Figure: the high part of the logical page number indexes the high-level page table, which selects one of the low-level page tables; the low part of the page number indexes that table, and the resulting physical page is combined with the offset.)

Pagination has several applications; two will be described in the following paragraphs, and a third will be the subject of the next section.

In many older computer families, the number of bits in the address fields is inadequate for the size of modern memories. Memory mapping is often used to allow larger central memories to be used despite the short addresses. In such situations, the physical page number has more bits than the logical page number. This means that, at a given moment, only a subset of the physical memory can be accessed from a program, but it suffices that, when requested by the program or by the operating system, the memory manager changes the contents of the page table to make another part of physical memory accessible.

This technique was used in early PCs to expand the memory size beyond the 1 Mbyte limit imposed by the 8086 architecture: expanded memory cards could contain several thousand 16 Kbyte pages of RAM memory but, at any moment, only four of these pages were accessible by the processor, through four reserved blocks of 16 K addresses. The page table, mapping the normal PC addresses onto pages of the expanded memory, was part of the expanded memory board, and its contents could be modified by the processor by means of IO commands. Figure 8.13 gives a schematic representation of the expanded memory concept, limited to one block instead of four.
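The bank-switching mechanism can be modelled in a few lines. The 16 Kbyte page size and the four windows come from the text; the window base address 0xD0000, the 64-page board and the class and method names are hypothetical, and `select` stands in for the IO commands that rewrite the board's page table:

```python
PAGE_SIZE = 16 * 1024   # 16 Kbyte expanded memory pages
WINDOWS = 4             # four reserved blocks of 16 K addresses


class ExpandedMemory:
    """Toy expanded memory board: many physical pages, four visible windows."""

    def __init__(self, pages: int, window_base: int):
        self.ram = bytearray(pages * PAGE_SIZE)
        self.window_base = window_base      # hypothetical first window address
        self.mapping = [0] * WINDOWS        # window -> expanded memory page

    def select(self, window: int, page: int) -> None:
        """Stand-in for the IO command that updates the board's page table."""
        self.mapping[window] = page

    def _physical(self, address: int) -> int:
        rel = address - self.window_base
        if not 0 <= rel < WINDOWS * PAGE_SIZE:
            raise ValueError("address outside the expanded memory windows")
        window, offset = divmod(rel, PAGE_SIZE)
        return self.mapping[window] * PAGE_SIZE + offset

    def write(self, address: int, value: int) -> None:
        self.ram[self._physical(address)] = value

    def read(self, address: int) -> int:
        return self.ram[self._physical(address)]


ems = ExpandedMemory(pages=64, window_base=0xD0000)
ems.select(0, 40)
ems.write(0xD0000, 0x55)    # lands in expanded memory page 40
ems.select(0, 41)           # the same address now shows page 41
print(ems.read(0xD0000))    # page 41 is still zero
ems.select(0, 40)
print(ems.read(0xD0000))
```

The same processor address returns different data depending on which expanded memory page is currently mapped into the window.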

Fig.8.13. Expanded memory principle in PCs. (Figure: a block of central memory addresses mapped onto one page of the expanded memory, which is organized in pages of 16 Kbytes.)

Memory mapping can also be used to free application programs from addressing constraints imposed by the hardware. In a multitasking system, for instance, all programs can be written as if they were loaded in memory starting at address 0 while, in reality, they are all loaded at different locations in physical memory. In such systems, the process scheduler must instruct the memory manager to load the appropriate page numbers into the page table whenever a program is activated.

A typical example of this application is found in the PC Windows environment. The screen of a PC is memory mapped, so that, to write something on the screen, it suffices to write it at predefined addresses in memory. Programs written for MS/DOS, which initially was a single-task operating system, do all their screen output in that way and, of course, have access to the entire screen. The Windows environment, however, wants to restrict the output of each program to a single window, which can be arbitrarily sized and moved over the screen. In the Windows 386 enhanced mode, pagination is used to map the logical pages corresponding to the screen onto other physical pages. A special Windows task regularly scans these pages and transfers their contents, after scaling, to the appropriate window on the screen.


8.4.3. Virtual memory.

Until here, it was implicitly assumed that the physical addresses stored in segment or page tables were central memory addresses. Allowing disk addresses as well has considerable advantages: seldom used segments or pages can be kept on disk until they are needed and, by transparently swapping segments or pages between disk and central memory, programs requiring more central memory than is physically available can be executed without adaptation. Transparently expanding central memory by managing part or all of the peripheral memories by means of the segmentation or pagination mechanism is called virtual memory. Due to the variable size of segments and the difficulty of efficiently managing a changing set of blocks with different lengths, virtual memory is almost always implemented through pagination rather than through segmentation (fig.8.14).

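Fig.8.14. Virtual memory principle, with pagination. (Figure: the page number of the logical address indexes the page table, whose entries hold r/w and p/c flags and a page number; the page is located either in central memory or in peripheral memory, and the translation can generate a memory protection interrupt or a page fault interrupt.)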

The principle of operation of virtual memory is fairly simple: whenever a reference is made to a page that is not present in central memory, a page fault interrupt is generated and the memory management software is activated to load the requested page into central memory. Once the page is loaded, the program that requested it can be reactivated by the process scheduler. Normally, when an interrupt occurs, the processor first completes the current instruction and thereafter responds to the interrupt, but this mechanism is inadequate for page fault interrupts, as the instruction that caused the interrupt cannot be completed until the page it requested has been loaded into central memory. Page fault interrupts therefore undo whatever the responsible instruction already did, so that, once the requested page is present, this instruction can be re-executed (fig.8.15).

Fig.8.15. Page Fault interrupt. (Figure: progress of the program over time through instructions n-2, n-1, n and n+1; instruction n causes a page fault, the missing page is loaded into central memory by the OS, and the interrupted program resumes.)

Some issues in virtual memories are quite similar to those encountered in the design of cache memories: consistency between central and peripheral memories must be enforced after write operations, and whenever a page must be loaded, a page that can be overwritten must be chosen. The most commonly adopted solutions are the least recently used replacement policy and the dirty bit technique to enforce consistency.
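Demand paging with these two techniques can be sketched as a small simulation. `DemandPager` is a hypothetical name, disk transfers are only counted, and the restart of the faulting instruction is not modelled:

```python
from collections import OrderedDict


class DemandPager:
    """Toy virtual memory in which `frames` pages fit in central memory.

    A reference to an absent page counts as a page fault and loads the page,
    evicting the least recently used one; a modified (dirty) victim is
    first written back to the disk. Disk transfers are only counted.
    """

    def __init__(self, frames: int):
        self.frames = frames
        self.resident = OrderedDict()   # page -> dirty bit, least recent first
        self.page_faults = 0
        self.write_backs = 0

    def reference(self, page: int, write: bool = False) -> None:
        if page in self.resident:
            self.resident.move_to_end(page)     # now the most recently used
        else:
            self.page_faults += 1               # the OS would load the page here
            if len(self.resident) == self.frames:
                _, dirty = self.resident.popitem(last=False)
                if dirty:
                    self.write_backs += 1       # keep the disk copy consistent
            self.resident[page] = False
        if write:
            self.resident[page] = True          # set the dirty bit


vm = DemandPager(frames=2)
for page, write in [(0, True), (1, False), (0, False), (2, False), (1, False)]:
    vm.reference(page, write)
print(vm.page_faults, vm.write_backs)
```

Of the four page faults in this example, only one requires a write-back, because page 0 was modified while page 1 was not.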

8.4.5. Combination of segmentation and pagination.

Segments, as they reflect the organization of the software, are ideally suited to support data protection. Pages, on the other hand, due to their fixed length, are efficient for mapping logical addresses onto physical addresses and for supporting virtual memory. There is no objection to combining segmentation and pagination in a single memory management scheme, by defining each segment as a variable number of pages.

In such systems, addresses are composed of three fields: the segment identifier, the page identifier and the offset within the page. The translation mechanism uses a segment table, which contains, for each segment, a pointer to a page table (fig.8.16). Of course, the page mechanism can also be used to support virtual memory.
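A sketch of the three-field translation, assuming hypothetical field widths of 4, 8 and 12 bits; representing each segment's page table as a list also bounds the segment length, since a page number beyond the end of the list is rejected:

```python
SEG_BITS, PAGE_BITS, OFFSET_BITS = 4, 8, 12   # hypothetical field widths


class AddressFault(Exception):
    """Stands in for the interrupt on an undefined segment or page."""


def translate_seg_paged(segment_table: dict, address: int) -> int:
    """Translate a (segment, page, offset) address with two table lookups.

    segment_table maps a segment identifier to that segment's page table,
    a list of physical page numbers (None marks an unmapped page).
    """
    if address >> (SEG_BITS + PAGE_BITS + OFFSET_BITS):
        raise AddressFault("address wider than the three fields")
    offset = address & ((1 << OFFSET_BITS) - 1)
    page = (address >> OFFSET_BITS) & ((1 << PAGE_BITS) - 1)
    segment = address >> (OFFSET_BITS + PAGE_BITS)
    page_table = segment_table.get(segment)              # first lookup
    if page_table is None or page >= len(page_table):    # beyond the segment end
        raise AddressFault("segment or page out of range")
    physical_page = page_table[page]                     # second lookup
    if physical_page is None:
        raise AddressFault("page not mapped")
    return (physical_page << OFFSET_BITS) | offset


# Segment 2 consists of three pages, mapped onto physical pages 40, 17 and 23.
tables = {2: [40, 17, 23]}
address = (2 << (PAGE_BITS + OFFSET_BITS)) | (1 << OFFSET_BITS) | 0x0AB
print(hex(translate_seg_paged(tables, address)))
```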

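Fig.8.16. Address translation in a segmented and paginated system. (Figure: the segment number of the logical address selects, through the segment table, one of the page tables; the page number indexes that table, and the resulting physical page is combined with the offset.)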

8.4.6. Translate Look Aside buffers.

In a system combining segmentation and pagination, every memory access requires at least two table lookups. As the segment and page tables can be very large, it would be prohibitively expensive to use very fast memories for these tables, so that segmentation and pagination would in fact increase the access time of central memory by at least a factor of three. Fortunately, memory accesses are not made at random: successive accesses have a high probability of reaching the same segment and even the same page. Therefore, each time a logical address is translated into a physical address, both the logical segment and page identifiers and the corresponding physical page number are stored in a Translate Look Aside buffer (TLB). This buffer can keep the results of the 8 or 16 most recent address translations and, each time a logical address is issued by the processor, it is compared with all the logical addresses stored in the TLB. If the logical address is present in the TLB, the physical address is directly available. Otherwise, the translation process has to take place and its result is saved in the TLB for future use. With the help of a TLB, the frequency of translations can be kept so low that address translations can be done by software, with segment and page tables stored in central memory, without unacceptable performance penalties. These translations are initiated by an interrupt generated by the TLB, similar to the page fault interrupt described in the section on virtual memory (fig.8.17). In fact, the interrupt generated by the TLB is also the one used for page faults, as the absence of a page from central memory will be discovered during the address translation process initiated by the TLB.
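The behaviour of a TLB can be illustrated in software. In this sketch, `slow_translate` stands in for the table walk started by the TLB interrupt, the table contents are hypothetical, and replacing the least recently used entry when the buffer is full is an assumption; the text only states that the most recent translations are kept:

```python
from collections import OrderedDict


class TLB:
    """Toy Translate Look Aside buffer holding the most recent translations.

    Keys are (segment, page) pairs, values physical page numbers. On a miss,
    `slow_translate` is called and its result saved for future use.
    """

    def __init__(self, slow_translate, entries: int = 8):
        self.slow_translate = slow_translate
        self.entries = entries
        self.buffer = OrderedDict()
        self.misses = 0

    def lookup(self, segment: int, page: int) -> int:
        key = (segment, page)
        if key in self.buffer:                  # compared with all stored entries
            self.buffer.move_to_end(key)
            return self.buffer[key]
        self.misses += 1                        # "interrupt": translate in software
        physical_page = self.slow_translate(segment, page)
        self.buffer[key] = physical_page
        if len(self.buffer) > self.entries:
            self.buffer.popitem(last=False)     # drop the least recently used entry
        return physical_page


tables = {0: [5, 6, 7], 1: [12, 13]}    # hypothetical segment -> page table


def walk_tables(segment: int, page: int) -> int:
    return tables[segment][page]


tlb = TLB(walk_tables, entries=8)
refs = [(0, 0), (0, 0), (0, 1), (0, 0), (1, 1), (0, 1)] * 10
for seg, page in refs:
    tlb.lookup(seg, page)
print(tlb.misses, "translations for", len(refs), "references")
```

Sixty references touching three distinct pages cause only three full translations.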

Fig.8.17. Translate Look-aside buffer. (Figure: the logical segment and page numbers are compared ("=?") with the TLB entries, each holding a logical segment/page number and a physical page number; on a miss, a page fault interrupt starts the software translation through the segment table and page tables, which also detects pages held in peripheral memory.)



- Assignment4-Rennie RamlochanDocument7 pagesAssignment4-Rennie RamlochanRennie RamlochanNo ratings yet

- CA Classes-181-185Document5 pagesCA Classes-181-185SrinivasaRaoNo ratings yet

- Os Chap3Document13 pagesOs Chap3Mohammad AhmadNo ratings yet

- Dagatan Nino PRDocument12 pagesDagatan Nino PRdagatan.ninoNo ratings yet

- CHAPTER FIVcoaEDocument18 pagesCHAPTER FIVcoaEMohammedNo ratings yet

- Supporting Ordered Multiprefix Operations in Emulated Shared Memory CmpsDocument7 pagesSupporting Ordered Multiprefix Operations in Emulated Shared Memory CmpstlidiaNo ratings yet

- Cache and Caching: Electrical and Electronic EngineeringDocument15 pagesCache and Caching: Electrical and Electronic EngineeringEnock OmariNo ratings yet

- OriginalDocument5 pagesOriginalT VinassaurNo ratings yet

- CH 6 CoaDocument14 pagesCH 6 Coabinaboss24No ratings yet

- Part 1B, DR S.W. MooreDocument16 pagesPart 1B, DR S.W. MooreElliottRosenthal100% (3)

- Literature Review of Cache MemoryDocument7 pagesLiterature Review of Cache Memoryafmzhuwwumwjgf100% (1)

- Memory Organization: Registers, Cache, Main Memory, Magnetic Discs, and Magnetic TapesDocument15 pagesMemory Organization: Registers, Cache, Main Memory, Magnetic Discs, and Magnetic TapesShivam KumarNo ratings yet

- CH 08Document8 pagesCH 08kapil_arpitaNo ratings yet

- Nandha Engineering College (Autonomous) E-AssignmentDocument5 pagesNandha Engineering College (Autonomous) E-Assignmentmonishabe23No ratings yet

- Unix and C/C++ Runtime Memory Management For Programmers: Excellent Site That Contains Notes About Memory ManagementDocument41 pagesUnix and C/C++ Runtime Memory Management For Programmers: Excellent Site That Contains Notes About Memory ManagementLe Vo Xuan TruongNo ratings yet

- Aca Assignment 1 NewDocument5 pagesAca Assignment 1 NewMalick AfeefNo ratings yet

- Assignment1-Rennie Ramlochan (31.10.13)Document7 pagesAssignment1-Rennie Ramlochan (31.10.13)Rennie RamlochanNo ratings yet

- Data Memory Organization and Optimizations in Application-Specific SystemsDocument13 pagesData Memory Organization and Optimizations in Application-Specific SystemsGowtham SpNo ratings yet

- Hamacher 5th ChapterDocument45 pagesHamacher 5th Chapterapi-3760847100% (1)

- 10 1 1 92 377 PDFDocument22 pages10 1 1 92 377 PDFAzizul BaharNo ratings yet

- Parallel Processing Unit - 6Document11 pagesParallel Processing Unit - 6Balu vakaNo ratings yet

- Computer Organization and Architecture Module 3Document34 pagesComputer Organization and Architecture Module 3Assini Hussain100% (1)

- Module 5Document39 pagesModule 5adoshadosh0No ratings yet

- Asplos 2000Document12 pagesAsplos 2000Alexandru BOSS RONo ratings yet

- TAREKEGN OSA - Computer Organization and ArchitectureDocument38 pagesTAREKEGN OSA - Computer Organization and Architectureyayatarekegn123No ratings yet

- Where Is The Memory Going? Memory Waste Under Linux: Andi Kleen, SUSE Labs August 15, 2006Document11 pagesWhere Is The Memory Going? Memory Waste Under Linux: Andi Kleen, SUSE Labs August 15, 2006Dacong YanNo ratings yet

- Multicore Software Development Techniques: Applications, Tips, and TricksFrom EverandMulticore Software Development Techniques: Applications, Tips, and TricksRating: 2.5 out of 5 stars2.5/5 (2)

- Mac ProtocolDocument38 pagesMac ProtocolThota Indra MohanNo ratings yet

- Input-Output Operations.: J.Tiberghien - Computer Systems - Printed: 29/09/2014Document10 pagesInput-Output Operations.: J.Tiberghien - Computer Systems - Printed: 29/09/2014Thota Indra MohanNo ratings yet

- Amplitude Shift Keying: Experiment - 7Document2 pagesAmplitude Shift Keying: Experiment - 7Thota Indra MohanNo ratings yet

- Carparking Verilog CodeDocument4 pagesCarparking Verilog CodeThota Indra MohanNo ratings yet

- ADC and DACDocument16 pagesADC and DACThota Indra MohanNo ratings yet

- Magnetic Single/Multishot: Mining - Geotechnical - Civil Sale - RentalDocument2 pagesMagnetic Single/Multishot: Mining - Geotechnical - Civil Sale - RentalEmrah MertyürekNo ratings yet

- Caracteristicas DB2Document4 pagesCaracteristicas DB2Sylvia J. VelSanNo ratings yet

- SAP Program For Dumping Already Posted InvoicesDocument3 pagesSAP Program For Dumping Already Posted InvoicesJedeetjeNo ratings yet

- CSC Job Portal: Mgo Gutalac, Zamboanga Del Norte - Region IxDocument1 pageCSC Job Portal: Mgo Gutalac, Zamboanga Del Norte - Region IxPrincess Jhejaidie M. SalipyasinNo ratings yet

- University of Sydney Postgraduate Coursework Application FormDocument5 pagesUniversity of Sydney Postgraduate Coursework Application Formafiwfbuoy100% (1)

- Dahua DH - XVR5x08-X - MultiLang - V4.001.0000000.15.R.211013 - Release-NotesDocument6 pagesDahua DH - XVR5x08-X - MultiLang - V4.001.0000000.15.R.211013 - Release-Notesiceman2k7777No ratings yet

- Proposal Bag ChuchuDocument5 pagesProposal Bag ChuchuAntonio DoloresNo ratings yet

- IBM ECM EMEA Partner Solution Handbook v3Document102 pagesIBM ECM EMEA Partner Solution Handbook v3Ihab HarbNo ratings yet

- Pulser Guide: Supported Pulsers & WiringDocument20 pagesPulser Guide: Supported Pulsers & WiringBac Pham DinhNo ratings yet

- Products Interface MxAutomationDocument55 pagesProducts Interface MxAutomationPedro Tapia JimenezNo ratings yet

- S200 ManualDocument22 pagesS200 ManualNikola PaulićNo ratings yet

- MidexamDocument4 pagesMidexamJack PopNo ratings yet

- Dseries Manual EngDocument198 pagesDseries Manual EngJorge Lacruz GoldingNo ratings yet

- Gawad KALASAG Seal For LDRRMCO Toolkit Guide MR Mario Peralta JRDocument29 pagesGawad KALASAG Seal For LDRRMCO Toolkit Guide MR Mario Peralta JRJosephine Templa-Jamolod100% (1)

- Sap Note PDFDocument3 pagesSap Note PDFSalam YafaiNo ratings yet

- Agility QuestionnaireDocument101 pagesAgility QuestionnaireDeshdeepGupta100% (1)

- Question Paper Computer NetworksDocument3 pagesQuestion Paper Computer NetworksSudhanshu KumarNo ratings yet

- Computer Software: Computer Applications in CommerceDocument15 pagesComputer Software: Computer Applications in CommerceParul PachporNo ratings yet

- DBMS Lesson PlanDocument4 pagesDBMS Lesson PlanShivam PanjtaniNo ratings yet

- Kyocera Km-1620 Parts ListDocument45 pagesKyocera Km-1620 Parts ListcolonsitoNo ratings yet

- Linksys - Spa8000Document233 pagesLinksys - Spa8000DrugacijiBoracNo ratings yet

- Linux Device DriversDocument39 pagesLinux Device Driverscoolsam.sab100% (1)

- 这个就在ADS安装文件里面Document4 pages这个就在ADS安装文件里面loverdayNo ratings yet

- PCS-978 Transformer and Reactor Relay PDFDocument412 pagesPCS-978 Transformer and Reactor Relay PDFKishore KumarNo ratings yet

- CPK 2 Grafika Komputer - Kelompok 3Document11 pagesCPK 2 Grafika Komputer - Kelompok 3Ikfani DifanggaNo ratings yet

- CELF ELC Europe 2009: On Threads, Processes and Co-ProcessesDocument35 pagesCELF ELC Europe 2009: On Threads, Processes and Co-ProcessesrajNo ratings yet

- 3 Exercises OOPDocument4 pages3 Exercises OOPNguyễn Quang Khôi100% (1)