SIMULATING PERFORMANCE OF PARALLEL DATABASE SYSTEMS

Julie A. McCann*

Department of Computer Science, City University,

Northampton Square, LONDON, EC1V 0HB, ENGLAND

jam@cs.city.ac.uk

ABSTRACT

With the introduction of parallelism, measuring and predicting database system performance as the many design parameters are varied has become a more complex issue. In this paper we illustrate how simulation techniques can assist the evaluation process from a design point of view. A model which simulates DBMS (Database Management System) sub-query processing performance is presented. Simulation complexity and turnaround time are reduced by using an analytical model. To illustrate this methodology, the design of a transputer-based parallel DBMS is modelled. A number of potential Join algorithms are simulated and compared. Using simulation techniques, we found that we could quickly and simply make important design decisions regarding the choice of Join algorithm.

INTRODUCTION

The conventional single-processor architecture is, in performance terms, inadequate to handle today's vast information processing requirements (Kiessling 88; Hanson 90; Parallelogram 91; Page 92). Applications such as Decision Support, which involve extensive dataset manipulation, are not being fully utilised. The answer is the database machine and parallelism. Highly parallel database systems, based on standard general-purpose architectures, are displacing mainframe computers for large database applications (DeWitt 92), refuting the demise predicted in 1983 (Boral 83).

Parallelism has introduced new and diverse approaches to architecture and algorithm design. Consequently the measurement, and more specifically the prediction, of the effect on performance of varying the design parameters has become a more complex issue. The major problem with current DBMS performance methods is that they fail to address the issue of relating poor performance to specific modules of code, much less reflecting the nature of parallel execution. Specifically, analytical modelling alone cannot reflect the non-deterministic behaviour of situations such as contention and bottlenecks. Therefore we present a tool which makes use of simulation techniques to model the dynamic nature of parallel DBMSs. The problem of measuring low-level DBMS and hardware component performance is reduced by employing a simple analytical model.

* This work was part of a DPhil project supervised by Prof. David A. Bell at the University of Ulster, Jordanstown, BT37 0QB, N. Ireland.

MODELLING TECHNIQUE

The proposed simulation tool models CPU consumption at the level of the elementary serial DBMS modules, e.g. page fetch and tuple merge. To decrease modelling complexity and shorten experimentation turnaround time, module times are derived analytically (McCann 92). This hybrid simulation/analytical approach has been suggested before for evaluating general parallel system operation, e.g. (Hanson 90). Hanson's model is geared towards scientific applications and is relatively difficult to implement, requiring a great deal of code-level knowledge to measure the analytical part alone. Our model is unique in that fine-grain DBMS measurements are obtained simply and yet are extensive enough to model the system accurately. The tool can be visualised as measuring the system in two major layers: the Serial Layer and the Parallel Layer.

The Serial Layer

In the Serial Layer a DBMS query is assumed to consist of a number of elementary operations, the CPU consumption of which depends on the DBMS and hardware configuration and not on the query or its data set. This is termed the operation's 'CPU coefficient'. Initial work on the definition of DBMS elementary units for very simple queries was carried out by (Hwang 87). This set was expanded to cover more complex query processing (McCann 92) and the complete set is presented in Figure 1. The total CPU consumption of a complete query is the sum of its operation counts, each multiplied by its corresponding CPU coefficient.
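As a minimal sketch of this weighted sum, the Python below computes a query's predicted CPU time from per-operation counts and CPU coefficients. The function name `predicted_cpu_seconds`, the dictionary layout and all numeric values are illustrative assumptions; they are not the paper's calibrated figures.

```python
from typing import Mapping


def predicted_cpu_seconds(counts: Mapping[str, float],
                          coefficients: Mapping[str, float]) -> float:
    """Return the sum of operation counts, each multiplied by its CPU coefficient."""
    missing = set(counts) - set(coefficients)
    if missing:
        raise KeyError(f"no coefficient for: {sorted(missing)}")
    return sum(n * coefficients[op] for op, n in counts.items())


# Hypothetical coefficients (seconds per operation), for illustration only.
example_coefficients = {"GET PAGE": 0.01, "GET TUPLE": 0.001, "OUTPUT TUPLE": 0.002}
# Hypothetical counts for one query: 100 pages, 2000 tuples, 50 result tuples.
example_counts = {"GET PAGE": 100, "GET TUPLE": 2000, "OUTPUT TUPLE": 50}

total = predicted_cpu_seconds(example_counts, example_coefficients)
print(f"predicted CPU time: {total:.3f} s")
```

Keeping counts and coefficients in separate mappings mirrors the model's assumption that coefficients depend only on the DBMS and hardware, while counts depend on the query and its data.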

The CPU coefficients are calibrated mostly using linear regression techniques. Here a query is designed to perform a relational operation against a number of tables. The elementary operation parameter being examined is amplified and varied while all other parameters are held static. The CPU time is measured for each run of the query, and this is regressed against the extent to which the parameter is varied. The slope of the line is then taken to be the CPU coefficient. These coefficients can easily be validated by measuring actual query CPU time and comparing it with the total calculated CPU consumption.

| Elementary Operation | Description | Operation Count |
| --- | --- | --- |
| GET PAGE | fetch a page from the disk to memory | number of pages in the queried relation |
| GET TUPLE | fetch a tuple from a page | total number of tuples in the relation, i.e. C |
| COMPARE ATTRIBUTE | compare single integer or single character | number of bytes compared * C |
| COMPARE CHARACTER | compare a character within a string | number of characters actually compared in the string * C (excluding the first character, which is counted under COMPARE ATTRIBUTE) |
| OUTPUT TUPLE | output a tuple satisfying the query condition | number of tuples that meet the condition |
| STABLE OVERHEAD | start-up the DBMS | static for all DBMS applications running on a particular hardware configuration |
| VARIABLE OVERHEAD | initialise its data tables (Preci_C only) | number of bytes in the index file which holds relational information |
| MERGE | concatenate the tuples into one for output | number of tuples merged & no. of words in the resulting tuple |

Figure 1. Elementary Operations For Relational Query Processing.

The Parallel Layer

The non-deterministic parallel behaviour is transcribed from the schematic view of the proposed system design to a network of processes and queues. In the case study presented in this paper, processes represent the modules and queues the intermediate buffers. The model is solved using a simulation package with the capability of producing statistics identifying bottlenecks and hardware utilisation. We demonstrate this technique more fully, presenting the input parameters, using a case study.

CASE STUDY

One of the most important and time-consuming DBMS modules is the Join, which is used extensively in Decision Support systems. Consequently we present the simulation of a number of parallel Join algorithms on a particular hardware platform, showing relative performance but, more importantly, demonstrating the use and assets of the simulation model. Incidentally, the same technique was successfully used to measure Select queries in (McCann and Bell 93).

Hardware and DBMS Configuration

In this section we present the hardware configuration and the proposed parallel DBMS to demonstrate the benefits of using simulation modelling.

The parallel database system under consideration is being developed as part of a research project at the University of Ulster. Its aim is to produce a flexible parallel database management system using transputer technology. A 386/ix PC with a root transputer on board, connected to a back-end set of transputers, is the proposed hardware testbed. The DBMS itself will not be written from scratch. Consequently an academic DBMS named Preci_C will provide the basic relational query processing modules to be parallelised.

A communicating process model is used, both in the implementation of the database operations and the provision of system services. More detailed information on this can be found in (Bell 92). When a query enters the system it is optimised and then transformed into task schedules. Each schedule is sent out to the appropriate relational processor's own local scheduler. Each local scheduler then assigns template processes to carry out the work. On a given processor the template processes are concurrent elementary operations which co-operate to perform a given database operation. They can communicate with other template processes, either on different processors or within their own processor. Double buffers are used to transfer data between processes which have been pipelined together.
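The pipelining of template processes through buffers can be illustrated with a small sketch. It assumes Python threads in place of transputer processes and a two-slot bounded queue standing in for a double buffer; the names `get_process`, `compare_process` and `run_pipeline` are hypothetical and do not come from Preci_C or the system described here.

```python
import queue
import threading

DONE = object()  # sentinel marking the end of the tuple stream


def get_process(tuples, out_buf):
    """Upstream process: pushes tuples into the buffer."""
    for t in tuples:
        out_buf.put(t)       # blocks while both buffer slots are full
    out_buf.put(DONE)


def compare_process(in_buf, results, wanted):
    """Downstream process: keeps tuples whose second field equals `wanted`."""
    while True:
        t = in_buf.get()     # blocks until the upstream process supplies data
        if t is DONE:
            break
        if t[1] == wanted:
            results.append(t)


def run_pipeline(tuples, wanted):
    buf = queue.Queue(maxsize=2)  # two slots stand in for a double buffer
    results = []
    workers = [
        threading.Thread(target=get_process, args=(tuples, buf)),
        threading.Thread(target=compare_process, args=(buf, results, wanted)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results


matches = run_pipeline([(k, k % 3) for k in range(9)], wanted=1)
print(matches)
```

With two slots, the upstream process can fill one slot while the downstream process drains the other, which is the overlap a double buffer is meant to provide.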

Serial Layer Measurement

The template processes previously described relate directly to the elementary operations provided in the Serial Layer. Using the analytical model, each was measured on a single transputer running serial Preci_C. Table 1 shows the CPU consumption observed for each elementary unit.

The observed coefficients were then validated by executing a set of queries and measuring the total CPU time for each query. The predicted CPU time for the whole query was calculated using the coefficients obtained from the model, and compared to the actual CPU time. The relative error between the actual and predicted CPU times was also calculated, averaging 3.7% (McCann and Bell 93). This level of accuracy validates the serial layer of the model.
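The validation step can be sketched as below. The paper does not spell out its error formula, so the definition used here, |actual - predicted| / actual averaged over the query set, is an assumption, as are the function names and the sample timings.

```python
from typing import Iterable, Tuple


def relative_error(actual: float, predicted: float) -> float:
    """Relative error of a prediction with respect to the measured value."""
    if actual == 0:
        raise ValueError("actual CPU time must be non-zero")
    return abs(actual - predicted) / abs(actual)


def mean_relative_error(pairs: Iterable[Tuple[float, float]]) -> float:
    """Average relative error over (actual, predicted) CPU-time pairs."""
    errors = [relative_error(a, p) for a, p in pairs]
    if not errors:
        raise ValueError("at least one query is required")
    return sum(errors) / len(errors)


# Hypothetical (actual, predicted) CPU times in seconds for three queries.
sample = [(10.0, 9.5), (20.0, 21.0), (4.0, 4.0)]
print(f"mean relative error: {100 * mean_relative_error(sample):.1f}%")
```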

| Elementary Unit | CPU Coefficient (sec) |
| --- | --- |
| OVERHEAD (variable) | 0.000940 |
| OVERHEAD (static) | 2.740800 |
| GET PAGE | 0.008460 |
| GET TUPLE | 0.000004 |
| COMPARE ATTRIBUTE: Character | 0.000090 |
| COMPARE ATTRIBUTE: Character in a string | 0.000005 |
| COMPARE ATTRIBUTE: Integer | 0.000050 |
| OUTPUT TUPLE | 0.000140 |
| SORT TUPLE | 0.036550 |
| MERGE CARDINALITY | 0.001200 |
| MERGE WIDTH | 0.184870 |

Table 1: Preci_C CPU Coefficient Table

Parallel Layer Measurement

The hardware configuration and dynamic DBMS processing are modelled using a simulation package.

The hardware is modelled in terms of processing, transfer and storage devices. Each Transputer is defined as a processing device. In our model we have assumed that messages can be received by the processor while it is processing modules, i.e. it has an input controller.

Network II.5 can provide an animated simulation of the system, and can output measures of hardware utilisation, software execution and conflicts (Network 90).

The parallel parameters are obtained empirically from the machine. These can be seen in Table 2. The disk input/output time is defined as the time to transfer a unit of data over the communications link between each disk and transputer. This was measured by varying the amount of data communicated over the line and performing a regression.

The intra-process and inter-processor communications were measured using the Transputer's own internal clock. The intra-process time is the time to transfer data over the communications links internal to each Transputer. This involves communications between processes, such as the Get process and the Tuple Buffer in memory. Again these are presented in Table 2.

| Parameter | Quantity |
| --- | --- |
| Number of processors | 4 |
| Processor cycle time | 1 usec. |
| Storage devices: disk | 4 |
| Storage devices: memory | 4 |
| DISK I/O TIME | 0.16667 bits/usec. |
| INTRA-PROCESS TIME | 100.00 bits/usec. |
| INTER-PROCESSOR TIME | 3.75 bits/usec. |

Table 2. Parallel Layer Parameters
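The regression-based calibration used for the disk I/O time, and for the CPU coefficients in the Serial Layer, can be sketched with an ordinary least-squares fit in which the slope is taken as the per-unit cost. The function name `fit_line` and the measurement values are assumptions: the data points are invented and constructed so that the slope is 6 usec per bit, whose reciprocal corresponds to the 0.16667 bits/usec disk figure quoted in Table 2.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must vary")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Invented measurements: amount of data transferred (bits) against elapsed
# time (usec), generated as 6 usec per bit plus a 50 usec fixed cost.
bits = [1000, 2000, 4000, 8000]
usec = [6050, 12050, 24050, 48050]

slope, intercept = fit_line(bits, usec)
print(f"cost per bit: {slope:.3f} usec, fixed cost: {intercept:.1f} usec")
print(f"equivalent rate: {1 / slope:.5f} bits/usec")
```

Taking the slope rather than a single time/size ratio separates the per-unit cost from any fixed start-up cost, which ends up in the intercept.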

MODELLING JOIN ALGORITHMS

The Join operation can require a considerable amount of resources, and is often a source of poor performance. Several Join algorithms are available to the DBMS designer, each with varying degrees of performance and ease of programming. To demonstrate the model's usefulness, three algorithms were chosen for investigation: Nested Loop, Hash and Sort Merge Join. These may not follow popularly recognised definitions of each algorithm, so they are described in more detail below, highlighting any simplifying assumptions made.

With the following algorithms, we assume that the Join relations are horizontally partitioned evenly over a 4-node file system by some key value. On a number of occasions the table's key attribute will not be the Join attribute. This is the worst-case scenario and it is the situation we have assumed here. With this the designer is faced with a number of Join algorithms.
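A short sketch shows why the non-key case is the worst case. It assumes a partitioning function of key MOD 4 (the text only says "by some key value") and an invented relation of (key, join attribute) pairs; the function names are hypothetical.

```python
from typing import List, Tuple

Row = Tuple[int, int]  # (key attribute, join attribute)
NUM_NODES = 4


def partition_by_key(relation: List[Row], num_nodes: int = NUM_NODES) -> List[List[Row]]:
    """Horizontally partition a relation: tuple goes to node (key MOD num_nodes)."""
    nodes: List[List[Row]] = [[] for _ in range(num_nodes)]
    for row in relation:
        nodes[row[0] % num_nodes].append(row)
    return nodes


def nodes_holding(nodes: List[List[Row]], join_value: int) -> List[int]:
    """Identifiers of the nodes that store at least one tuple with this join value."""
    return [i for i, frag in enumerate(nodes) if any(r[1] == join_value for r in frag)]


# Invented relation: keys 0..15, join attribute unrelated to the partitioning.
relation = [(k, k // 4) for k in range(16)]
fragments = partition_by_key(relation)

print([len(f) for f in fragments])   # fragment sizes per node
print(nodes_holding(fragments, 0))   # nodes storing tuples with join value 0
```

The fragments are evenly sized, yet tuples sharing one join value are spread over every node, so matching tuples cannot be found without moving data between nodes.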

Hash Join

The most popular Join algorithm in the database machine arena is the parallel Hash Join, which has demonstrated considerable performance improvements. In our simulation data from both Join relations is read in and each tuple is hashed on the Join attribute, which provides the identifier of the processor to which the tuple will be redistributed. The Hash process chosen for demonstration purposes is the well-known:

Key MOD Number of Processors.

The execution time for the Hash process was measured by implementing it in C on a transputer, and was found to be negligible.

Once redistributed, the tuple is placed into the destination processor's hash bucket. The relation's pages may not all fit into memory, so page swapping may occur (this is catered for in the simulation). The tuples for each relation are then merged.

Sort Merge Join

Again the tables' key attributes are not the Join attribute. In this simulation the larger relation is held local to a processor and sorted by the Join attribute. Meanwhile the smaller relation is redistributed to all other nodes and then sorted when received, using Preci_C's own insertion-type sort. When the Sort process is finished the sorted relations are fed directly into the Merge phase.

Nested Loop Join

This is popular in serial DBMSs mainly due to its ease of programming. In this algorithm the larger relation is again held locally, and the smaller redistributed to all nodes. When a tuple is received the Merge module compares it to each tuple in the local relation.

RESULTS

Scale-up measurements were obtained by executing the Network II.5 simulations for all three Join algorithms. For each Join, the amount of data input into the system was increased in steps from 100 pages to 3200 pages, and the statistics were recorded by Network II.5. The amount of information produced by the simulation package is vast, so we have decided not to present it in its entirety here. Instead, summaries of these results are presented graphically and discussed. The major areas of interest to the DBMS designer are:

- varying response time,
- the identification of bottlenecks,
- hardware utilisation, and
- module wait time.

The next few sections present the measurements obtained from the simulation experiments, bearing in mind the DBMS designer's requirements.

[Table 3. Join Response Time. Chart of response time (sec), axis 0 to 500, against number of pages (100, 200, 400, 800, 1600, 3200) for the Hash, Sort-Merge and Nested Loop joins.]
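The three Join variants whose response times are compared can be sketched on toy data as follows. This is a single-process illustration under several assumptions: nodes are plain Python lists, Python's built-in sort replaces Preci_C's insertion-type sort, the smaller relation is sorted once rather than on each receiving node, and the function names and relations are invented. It shows the data movement of each variant, not its timing.

```python
from collections import defaultdict
from typing import List, Tuple

Row = Tuple[int, int]  # (key attribute, join attribute)
NUM_NODES = 4


def partition(rel: List[Row], attr: int) -> List[List[Row]]:
    """Place each tuple on node (tuple[attr] MOD NUM_NODES)."""
    nodes: List[List[Row]] = [[] for _ in range(NUM_NODES)]
    for row in rel:
        nodes[row[attr] % NUM_NODES].append(row)
    return nodes


def hash_join(big: List[Row], small: List[Row]) -> List[Tuple[Row, Row]]:
    """Redistribute both relations on the join attribute, then join per node."""
    out = []
    for big_frag, small_frag in zip(partition(big, 1), partition(small, 1)):
        bucket = defaultdict(list)
        for s in small_frag:
            bucket[s[1]].append(s)
        for b in big_frag:
            out.extend((b, s) for s in bucket.get(b[1], []))
    return out


def nested_loop_join(big: List[Row], small: List[Row]) -> List[Tuple[Row, Row]]:
    """Keep `big` partitioned by key; every node receives all of `small`."""
    out = []
    for big_frag in partition(big, 0):
        for s in small:          # each received tuple ...
            for b in big_frag:   # ... is compared with every local tuple
                if b[1] == s[1]:
                    out.append((b, s))
    return out


def sort_merge_join(big: List[Row], small: List[Row]) -> List[Tuple[Row, Row]]:
    """Keep `big` partitioned by key; sort both sides per node, then merge."""
    out = []
    small_sorted = sorted(small, key=lambda t: t[1])
    for big_frag in partition(big, 0):
        big_sorted = sorted(big_frag, key=lambda t: t[1])
        i = j = 0
        while i < len(big_sorted) and j < len(small_sorted):
            bv, sv = big_sorted[i][1], small_sorted[j][1]
            if bv < sv:
                i += 1
            elif bv > sv:
                j += 1
            else:
                # Find the run of equal join values in `small`, then pair
                # every matching `big` tuple with every tuple in that run.
                j_end = j
                while j_end < len(small_sorted) and small_sorted[j_end][1] == bv:
                    j_end += 1
                while i < len(big_sorted) and big_sorted[i][1] == bv:
                    out.extend((big_sorted[i], s) for s in small_sorted[j:j_end])
                    i += 1
                j = j_end
    return out


# Invented relations whose join attribute differs from the key.
big = [(k, k % 7) for k in range(40)]
small = [(k, k % 5) for k in range(10)]

results = [sorted(f(big, small)) for f in (hash_join, nested_loop_join, sort_merge_join)]
print(len(results[0]), "joined pairs; all variants agree:",
      results[0] == results[1] == results[2])
```

The nested loop variant performs one comparison per (received tuple, local tuple) pair, which is the repeated scan of the local relation that the simulation reports as a Merge bottleneck.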

Response Time

The response time gives the database designer the most comprehensive overview of the whole system's performance. Table 3 shows the response times for all three Joins. The results confirm why the Hash Join is popular in terms of performance: it outperforms the Nested Loop simulation by a factor of four and the Sort Merge by a factor of two. The poor performance of the latter two may be due to the communications cost incurred by redistributing the smaller relation in full to all the nodes, plus some other issues. Therefore it is useful to examine our results in further detail.

Module Response Times

Examining individual module response times can identify the modules causing the inferior performance. Table 4 clearly illustrates where the bottlenecks occur within the Join processing. Firstly, the Merge module in the Nested Loop algorithm is seen to consume a much greater amount of processing time. This is because, for each tuple received, the complete local relation is scanned. Secondly, we can also detect a bottleneck with the Sort module in the Sort Merge algorithm. We can observe further the extent to which the Sort module is a bottleneck in Table 5. That is, on average the Sort module takes up 70% of the total response time consumed by the Sort Merge Join. The Sort process measured here is based on a rather simplistic sorting routine used by Preci_C. It has consequently been suggested that this routine be replaced by a more sophisticated parallel sorting routine.

[Table 4. Module times for 3200 pages. Chart of response time (sec), axis 0 to 400, per module for the Sort Merge, Nested Loop and Hash joins.]

[Table 5. Scaled up Sort Module time. Chart of response time (sec), axis 0 to 200, against number of pages (100 to 3200), showing sort time and total response time.]

Hardware Utilisation

Network II.5 produces utilisation results for each hardware device. For the simulation time it was reported that the processors were utilised at almost 100% for all joins. This indicates that a good load balance was achieved. At a given instant a given processor executes a number of template modules in an interleaved fashion, as in traditional multi-tasking machines. Consequently one process can be performing I/O whilst another is executing. This would account for the high processor utilisation observed by the simulator. However, it does not indicate the extent to which a module waits between execution cycles. This area is expanded in the next section.

Module Wait-time

Research has found that, using the sort of data flow parallel architecture we describe here, utilisation figures for processors can be deceiving. This is because they do not usually show the time spent in the wait state. This is defined as the processor time spent by a module which is busy waiting on some synchronisation primitive (Gupta 91). In the architecture modelled here, the modules are pre-empted, waiting to run when the data is available for processing. Anderson also emphasises the importance of measuring wait-time to identify critical sections and bottlenecks (Anderson 90).

The model presented here does, however, give an indication of how long a process has spent waiting. Network II.5 identifies this as the precondition time, and it is shown in Table 6.

[Table 6. Module Wait times. Chart of response time (sec), axis 0 to 160, per module for the Hash, Sort Merge and Nested Loop joins.]

We can observe that the Merge module has the largest wait time, especially in the Sort Merge join. In the Sort Merge algorithm the Merge must wait for the relations to be sorted once received. Likewise, the Hash Join's merge must wait for all the redistributed tuples to arrive before proceeding with the merge phase. On the other hand, in the Nested Loop join we observe a smaller wait time because the merge processing can commence as soon as the first tuple is received.

Hardware precondition times are also measured. The maximum time the processors spent idle waiting on storage or transfer devices is quite low: at most approximately 60 microseconds were wasted by a processor waiting on a file request or the use of a transfer device. This is mainly due to the shared-nothing architecture, i.e. distributed memory and disks, which reduces contention.

CONCLUSION

We describe a general technique which supports the prediction of database query performance using simulation techniques. The model consists of two stages. First, a sequential model of the DBMS is used to obtain various experimental parameters measured at the elementary operation level of input, comparison and output. Parallel database operations are then modelled as networks of communicating sequential processes, allowing particular weaknesses and strengths in a given system to be analysed.

We illustrated how simulation modelling provides substantial insights into proposed database system architectures using Join processing as an example. The Joins simulated were variations on the Nested Loop, Sort Merge and Hash algorithms. Each was simulated and their respective results examined with regard to response time, utilisation and wait time. The knowledge gained from these measures enabled us to confirm that the Hash algorithm will indeed provide the best performance for non-key Joins on our particular architecture. We identified that the bottlenecks associated with the other two algorithms lay in the amount of data being redistributed over the network, the inferior Sorting process in the Sort Merge Join and the great processing requirement of the looping in the Nested Loop Join. In conclusion, we have demonstrated the methodology's ability to aid the DBMS designer in tuning a developing parallel database system.

FUTURE

Use of simulation techniques to measure performance has continued as part of the PRIMA (Parallel Relational Information Management Architecture) project at City University. It has been successfully employed to evaluate migration strategies in File System management (McCann et al. 93). It is currently being used to aid the implementation of a large commercial DBMS onto a multicomputer shared-nothing architecture.

REFERENCES

Anderson T.E., Lazowska E.D., "Quartz: A Tool for Tuning Parallel Program Performance", ACM, 1990

Bell D.A., Hull M.E., Cai F.F., Guthrie D., Shapcott C.M., "A Flexible Parallel Database System Using Transputers", IEEE International Conference on Computer Systems and Software Engineering, The Hague, May 1992

Boral H., DeWitt D., "Database Machines: An idea whose time has passed? A critique of the future of database machines", In Proceedings of the 1983 Workshop on Database Machines, Springer-Verlag, 1983

DeWitt D., Gray J., "Parallel Database Systems: The Future of High Performance Database Systems", In Communications of the ACM, Vol. 35, No. 6, June 1992

Gupta A., et al., "The Impact of Operating System Scheduling Policies and Synchronisation Methods on the Performance of Parallel Applications", ACM SIGMETRICS Performance Evaluation Review, Vol. 16, No. 1, May 1991

Hanson J.G., Orooji A., "Predictive Performance Analysis of a Multi-Computer Database System", In Information Systems, Vol. 15, Pt. 4, 1990

Hwang H-Y., Yu Y-T., "An Analytical Method for Estimating and Interpreting Query Time", Proceedings of 13th VLDB Conference, Brighton, 1987

Kiessling K., "Access Path Selection in Databases With Intelligent Disk Subsystem", In The Computer Journal, Vol. 31, No. 1, 1988

McCann J.A., Bell D.A., "A Hybrid Benchmarking Model for Database Machine Performance Studies", In Computer Benchmarks, eds. Dongarra J., Gentzsch W., North-Holland, 1993

McCann J.A., et al., "Multi-processor File-System Performance Modelling", To be published in the proceedings of UK Performance Engineering Workshop for Computer and Telecommunication Systems, Pentech Press, 1993

Network II.5 Users Manual, Version 6, CACI Products Company, July 1990

Page J., "An Introduction to the Teradata DBC/1012", In Proceedings of the Conference on Parallel Processors Benchmarking and Assessment, National Physical Laboratory and British Computer Society, March 1992

Parallelogram 91, In Parallelogram, August 1991, p 4

You might also like

- 1Document11 pages1aiadaiadNo ratings yet

- Ijait 020602Document12 pagesIjait 020602ijaitjournalNo ratings yet

- Plugin Science 1Document18 pagesPlugin Science 1Ask Bulls BearNo ratings yet

- Motor Drive SystemDocument6 pagesMotor Drive SystemJay MjNo ratings yet

- The Data Locality of Work Stealing: Theory of Computing SystemsDocument27 pagesThe Data Locality of Work Stealing: Theory of Computing SystemsAnonymous RrGVQjNo ratings yet

- Stanley AssignmentDocument6 pagesStanley AssignmentTimsonNo ratings yet

- The Simulation and Evaluation of Dynamic Voltage Scaling AlgorithmsDocument6 pagesThe Simulation and Evaluation of Dynamic Voltage Scaling AlgorithmsArmin AhmadzadehNo ratings yet

- Performance Comparison of Three Modern DBMS Architectures: Alexios Delis Nick RoussopoulosDocument31 pagesPerformance Comparison of Three Modern DBMS Architectures: Alexios Delis Nick Roussopoulossammy21791No ratings yet

- Performance PDFDocument109 pagesPerformance PDFRafa SoriaNo ratings yet

- A Data Throughput Prediction Using Scheduling and Assignment TechniqueDocument5 pagesA Data Throughput Prediction Using Scheduling and Assignment TechniqueInternational Journal of computational Engineering research (IJCER)No ratings yet

- TT156 EditedDocument13 pagesTT156 EditedFred MillerNo ratings yet

- A New Parallel Architecture For Sparse Matrix Computation Based On Finite Projective Geometries - N KarmarkarDocument12 pagesA New Parallel Architecture For Sparse Matrix Computation Based On Finite Projective Geometries - N KarmarkarvikrantNo ratings yet

- Weighting Techniques in Data Compression Theory and AlgoritmsDocument187 pagesWeighting Techniques in Data Compression Theory and AlgoritmsPhuong Minh NguyenNo ratings yet

- Improving Analysis of Data Mining by Creating Dataset Using SQL AggregationsDocument6 pagesImproving Analysis of Data Mining by Creating Dataset Using SQL Aggregationswww.irjes.comNo ratings yet

- Computation-Aware Scheme For Software-Based Block Motion EstimationDocument13 pagesComputation-Aware Scheme For Software-Based Block Motion EstimationmanikandaprabumeNo ratings yet

- Application of Queueing Network Models in The Performance Evaluation of Database DesignsDocument24 pagesApplication of Queueing Network Models in The Performance Evaluation of Database DesignsGabriel OvandoNo ratings yet

- Formal Verification of Distributed Dynamic Thermal ManagementDocument8 pagesFormal Verification of Distributed Dynamic Thermal ManagementFaiq Khalid LodhiNo ratings yet

- Turbomachinery CFD On Parallel ComputersDocument20 pagesTurbomachinery CFD On Parallel ComputersYoseth Jose Vasquez ParraNo ratings yet

- Final Project InstructionsDocument2 pagesFinal Project InstructionsjuliusNo ratings yet

- Simulation-Based Automatic Generation Signomial and Posynomial Performance Models Analog Integrated Circuit SizingDocument5 pagesSimulation-Based Automatic Generation Signomial and Posynomial Performance Models Analog Integrated Circuit Sizingsuchi87No ratings yet

- SPE 141402 Accelerating Reservoir Simulators Using GPU TechnologyDocument14 pagesSPE 141402 Accelerating Reservoir Simulators Using GPU Technologyxin shiNo ratings yet

- JaJa Parallel - Algorithms IntroDocument45 pagesJaJa Parallel - Algorithms IntroHernán Misæl50% (2)

- Exploiting Dynamic Resource Allocation For Efficient Parallel Data Processing in Cloud-By Using Nephel's AlgorithmDocument3 pagesExploiting Dynamic Resource Allocation For Efficient Parallel Data Processing in Cloud-By Using Nephel's Algorithmanon_977232852No ratings yet

- A Gradient-Based Approach For Power System Design Using Electromagnetic Transient SimulationDocument5 pagesA Gradient-Based Approach For Power System Design Using Electromagnetic Transient SimulationBarron JhaNo ratings yet

- Gridmpc: A Service-Oriented Grid Architecture For Coupling Simulation and Control of Industrial SystemsDocument8 pagesGridmpc: A Service-Oriented Grid Architecture For Coupling Simulation and Control of Industrial SystemsIrfan Akbar BarbarossaNo ratings yet

- Process FlowsheetingDocument2 pagesProcess FlowsheetingEse FrankNo ratings yet

- A Comparison of Performance and AccuracyDocument14 pagesA Comparison of Performance and AccuracyUmer SaeedNo ratings yet

- A Low-Power Reconfigurable Data-Flow Driven DSP System: Motivation and BackgroundDocument10 pagesA Low-Power Reconfigurable Data-Flow Driven DSP System: Motivation and BackgroundChethan JayasimhaNo ratings yet

- Data Mining Project 11Document18 pagesData Mining Project 11Abraham ZelekeNo ratings yet

- PR Oof: Memristor Models For Machine LearningDocument23 pagesPR Oof: Memristor Models For Machine LearningtestNo ratings yet

- Assignment-2 Ami Pandat Parallel Processing: Time ComplexityDocument12 pagesAssignment-2 Ami Pandat Parallel Processing: Time ComplexityVICTBTECH SPUNo ratings yet

- A Multiway Partitioning Algorithm For Parallel Gate Level Verilog SimulationDocument8 pagesA Multiway Partitioning Algorithm For Parallel Gate Level Verilog SimulationVivek GowdaNo ratings yet

- Tutorial On High-Level Synthesis: and WeDocument7 pagesTutorial On High-Level Synthesis: and WeislamsamirNo ratings yet

- Mi 150105Document8 pagesMi 150105古鹏飞No ratings yet

- A New Vlsi Architecture For Modi EdDocument6 pagesA New Vlsi Architecture For Modi Edsatishcoimbato12No ratings yet

- Composing Modeling and Simulation With Machine Learning in JuliaDocument11 pagesComposing Modeling and Simulation With Machine Learning in Juliaachraf NagihiNo ratings yet

- Achieving High Speed CFD Simulations: Optimization, Parallelization, and FPGA Acceleration For The Unstructured DLR TAU CodeDocument20 pagesAchieving High Speed CFD Simulations: Optimization, Parallelization, and FPGA Acceleration For The Unstructured DLR TAU Codesmith1011No ratings yet

- Ijesrt: Performance Analysis of An Noc For Multiprocessor SocDocument5 pagesIjesrt: Performance Analysis of An Noc For Multiprocessor SocRajesh UpadhyayNo ratings yet

- Critical Path ArchitectDocument11 pagesCritical Path ArchitectBaluvu JagadishNo ratings yet

- Ambimorphic, Highly-Available Algorithms For 802.11B: Mous and AnonDocument7 pagesAmbimorphic, Highly-Available Algorithms For 802.11B: Mous and Anonmdp anonNo ratings yet

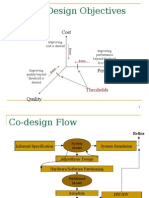

- Review: Design Objectives: ThresholdsDocument19 pagesReview: Design Objectives: ThresholdsSahadev RoyNo ratings yet

- Scimakelatex 14592 XXXDocument8 pagesScimakelatex 14592 XXXborlandspamNo ratings yet

- Opengl-Performance and Bottlenecks: S A, P K SDocument15 pagesOpengl-Performance and Bottlenecks: S A, P K SmartinsergNo ratings yet

- 0026 2714 (86) 90542 1Document1 page0026 2714 (86) 90542 1Jitendra PanthiNo ratings yet

- A Methodology For The Understanding of Gigabit Switches: BstractDocument4 pagesA Methodology For The Understanding of Gigabit Switches: BstractLarchNo ratings yet

- Electromagnetic Transients Simulation As An Objective Function Evaluator For Optimization of Power System PerformanceDocument6 pagesElectromagnetic Transients Simulation As An Objective Function Evaluator For Optimization of Power System PerformanceAhmed HussainNo ratings yet

- Introducing A Unified PCA Algorithm For Model Size ReductionDocument9 pagesIntroducing A Unified PCA Algorithm For Model Size ReductionjigarmoradiyaNo ratings yet

- Computer Model TarvoDocument13 pagesComputer Model TarvoNarayan ManeNo ratings yet

- Dynamic Data Management AmongDocument11 pagesDynamic Data Management AmongCS & ITNo ratings yet

- A Case For Courseware: Trixie Mendeer and Debra RohrDocument4 pagesA Case For Courseware: Trixie Mendeer and Debra RohrfridaNo ratings yet

- Probabilistic Digital Twin For Additive Manufacturing Process Design and ControlDocument3 pagesProbabilistic Digital Twin For Additive Manufacturing Process Design and ControlMadhavarao KulkarniNo ratings yet

- Outer Space An Outer Product Based Sparse Matrix Multiplication AcceleratorDocument13 pagesOuter Space An Outer Product Based Sparse Matrix Multiplication Accelerator陳威宇No ratings yet

- Reconfigurable Architectures For Bio-Sequence Database Scanning On FpgasDocument5 pagesReconfigurable Architectures For Bio-Sequence Database Scanning On Fpgasfer6669993No ratings yet

- Final Report: Delft University of Technology, EWI IN4342 Embedded Systems LaboratoryDocument24 pagesFinal Report: Delft University of Technology, EWI IN4342 Embedded Systems LaboratoryBurlyaev DmitryNo ratings yet

- An Inside Look at Version 9 and Release 9.1 Threaded Base SAS ProceduresDocument6 pagesAn Inside Look at Version 9 and Release 9.1 Threaded Base SAS ProceduresNagesh KhandareNo ratings yet

- A Systematic Approach To Composing and Optimizing Application WorkflowsDocument9 pagesA Systematic Approach To Composing and Optimizing Application WorkflowsLeo Kwee WahNo ratings yet

- An Overview of Cell-Level ATM Network SimulationDocument13 pagesAn Overview of Cell-Level ATM Network SimulationSandeep Singh TomarNo ratings yet

- An Analytical Model of The Working-Set Sizes in Decision-Support SystemsDocument11 pagesAn Analytical Model of The Working-Set Sizes in Decision-Support SystemsNser ELyazgiNo ratings yet

- Design and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesFrom EverandDesign and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesNo ratings yet

- Sic Mos Trench SJ Micromachines-13-01770-V2Document12 pagesSic Mos Trench SJ Micromachines-13-01770-V2terry chenNo ratings yet

- Numerical Methods For Partial Differential Equations: CAAM 452 Spring 2005 Instructor: Tim WarburtonDocument26 pagesNumerical Methods For Partial Differential Equations: CAAM 452 Spring 2005 Instructor: Tim WarburtonAqib SiddiqueNo ratings yet

- State-Of-Charge Estimation of Lithium-Ion Batteries Using Extended Kalman Filter and Unscented Kalman FilterDocument4 pagesState-Of-Charge Estimation of Lithium-Ion Batteries Using Extended Kalman Filter and Unscented Kalman FilterRick MaityNo ratings yet

- Dmedi or Dmaic That Is The Question 1137Document4 pagesDmedi or Dmaic That Is The Question 1137David PatrickNo ratings yet

- Parts of The House and FurnitureDocument1 pageParts of The House and FurnitureJOSE MARIA ARIGUZNAGA ORDUÑANo ratings yet

- Attributes and Distinctive Features of Adult Learning and (Autosaved) (Autosaved)Document32 pagesAttributes and Distinctive Features of Adult Learning and (Autosaved) (Autosaved)Mericar EsmedioNo ratings yet

- Module For Questioned Document ExaminationDocument13 pagesModule For Questioned Document ExaminationMark Jayson Pampag Muyco100% (1)

- Marketing Mix MK Plan Infographics 1Document35 pagesMarketing Mix MK Plan Infographics 1putrikasbi410No ratings yet

- 0743 Windows 10 Backup Restore PDFDocument11 pages0743 Windows 10 Backup Restore PDFsumyNo ratings yet

- Initial Investment Invested: Basic Steps: A L + C Expanded Form: A L + C - (D) + R / I - (E)Document9 pagesInitial Investment Invested: Basic Steps: A L + C Expanded Form: A L + C - (D) + R / I - (E)Charlize Adriele C. Comprado3210183No ratings yet

- Bca 2sem SyDocument10 pagesBca 2sem SyRounakNo ratings yet

- Cloud Security - Security Best Practice Guide (BPG) PDFDocument21 pagesCloud Security - Security Best Practice Guide (BPG) PDFHakim ShakurNo ratings yet

- Module 2 - Lesson Plan ComponentsDocument5 pagesModule 2 - Lesson Plan ComponentsMay Myoe KhinNo ratings yet

- HashingDocument1,668 pagesHashingDinesh Reddy KommeraNo ratings yet

- Mobile Computing Multiple Choice Questions With Answers PDFDocument10 pagesMobile Computing Multiple Choice Questions With Answers PDFvikes singhNo ratings yet

- CMC Vellore Admission InstructionsDocument57 pagesCMC Vellore Admission InstructionsNaveen JaiNo ratings yet

- L01 - Review of Z TransformDocument17 pagesL01 - Review of Z TransformRanjith KumarNo ratings yet

- اول 4 وحدات من مذكرة كونكت 4 مستر محمد جاد ترم اول 2022Document112 pagesاول 4 وحدات من مذكرة كونكت 4 مستر محمد جاد ترم اول 2022Sameh IbrahimNo ratings yet

- WHO Food Additives Series 59 2008Document479 pagesWHO Food Additives Series 59 2008jgallegosNo ratings yet

- Analgesics in ObstetricsDocument33 pagesAnalgesics in ObstetricsVeena KaNo ratings yet

- Steam TurbineDocument16 pagesSteam TurbineVinayakNo ratings yet

- OligohydramniosDocument20 pagesOligohydramniosjudssalangsangNo ratings yet

- Training Day-1 V5Document85 pagesTraining Day-1 V5Wazabi MooNo ratings yet

- 1st Sem Final Exam SummaryDocument16 pages1st Sem Final Exam SummaryAbu YazanNo ratings yet

- Lingerie Insight February 2011Document52 pagesLingerie Insight February 2011gab20100% (2)

- Vocabulary Grammar: A Cake A Decision Best A Mess Mistakes Time HomeworkDocument3 pagesVocabulary Grammar: A Cake A Decision Best A Mess Mistakes Time HomeworkMianonimoNo ratings yet

- Machine Spindle Noses: 6 Bison - Bial S. ADocument2 pagesMachine Spindle Noses: 6 Bison - Bial S. AshanehatfieldNo ratings yet

- QuadEquations PPT Alg2Document16 pagesQuadEquations PPT Alg2Kenny Ann Grace BatiancilaNo ratings yet

- 11 RECT TANK 4.0M X 3.0M X 3.3M H - Flocculator PDFDocument3 pages11 RECT TANK 4.0M X 3.0M X 3.3M H - Flocculator PDFaaditya chopadeNo ratings yet

- Beyond Science (Alternity)Document98 pagesBeyond Science (Alternity)Quintus Domitius VinskusNo ratings yet