CIS 501
Computer Architecture
Unit 7: Superscalar

Remainder of CIS501: Parallelism
• Last unit: pipeline-level parallelism
  • Work on execute of one instruction in parallel with decode of next
• Next: instruction-level parallelism (ILP)
  • Execute multiple independent instructions fully in parallel
  • Today: multiple issue
  • Next week: dynamic scheduling
    • Extract much more ILP via out-of-order processing
• Data-level parallelism (DLP)
  • Single-instruction, multiple data
  • Example: one instruction, four 16-bit adds (using 64-bit registers)
• Thread-level parallelism (TLP)
  • Multiple software threads running on multiple cores

CIS 501 (Martin/Roth): Superscalar

This Unit: Superscalar Execution
[Figure: system stack (applications, system software, CPU / memory / I/O)]
• Superscalar scaling issues
  • Multiple fetch and branch prediction
  • Dependence-checks & stall logic
  • Wide bypassing
  • Register file & cache bandwidth
• Multiple-issue designs
  • Superscalar
  • VLIW and EPIC (Itanium)
  • Research: Grid Processor

Readings
• H+P
  • TBD
• Paper
  • Edmondson et al., "Superscalar Instruction Execution in the 21164 Alpha Microprocessor"

Scalar Pipeline and the Flynn Bottleneck
[Figure: scalar pipeline (BP, I$, regfile, D$)]
• So far we have looked at scalar pipelines
  • One instruction per stage
  • With control speculation, bypassing, etc.
  • Performance limit (aka "Flynn Bottleneck") is CPI = IPC = 1
  • Limit is never even achieved (hazards)
  • Diminishing returns from "super-pipelining" (hazards + overhead)

Multiple-Issue Pipeline
[Figure: multiple-issue pipeline (BP, I$, regfile, D$)]
• Overcome this limit using multiple issue
  • Also called superscalar
  • Two instructions per stage at once, or three, or four, or eight
  • "Instruction-Level Parallelism (ILP)" [Fisher, IEEE TC'81]
• Today, typically "4-wide" (Intel Core 2, AMD Opteron)
  • Some more (Power5 is 5-issue; Itanium is 6-issue)
  • Some less (dual-issue is common for simple cores)

Superscalar Pipeline Diagrams - Ideal

scalar              1  2  3  4  5  6  7  8  9  10 11 12
lw 0(r1)→r2         F  D  X  M  W
lw 4(r1)→r3            F  D  X  M  W
lw 8(r1)→r4               F  D  X  M  W
add r14,r15→r6               F  D  X  M  W
add r12,r13→r7                  F  D  X  M  W
add r17,r16→r8                     F  D  X  M  W
lw 0(r18)→r9                          F  D  X  M  W

2-way superscalar   1  2  3  4  5  6  7  8
lw 0(r1)→r2         F  D  X  M  W
lw 4(r1)→r3         F  D  X  M  W
lw 8(r1)→r4            F  D  X  M  W
add r14,r15→r6         F  D  X  M  W
add r12,r13→r7            F  D  X  M  W
add r17,r16→r8            F  D  X  M  W
lw 0(r18)→r9                 F  D  X  M  W
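The finish times in the ideal diagrams (cycle 11 for the scalar pipeline, cycle 8 for the 2-way superscalar) follow from a simple count. The sketch below assumes a 5-stage pipeline with no hazards and one issue group entering per cycle; the function name `ideal_cycles` is illustrative and not part of the course material.

```python
import math


def ideal_cycles(num_insns: int, width: int, depth: int = 5) -> int:
    """Cycles for num_insns to drain through an ideal width-wide pipeline.

    Assumes no stalls: one group of `width` instructions enters fetch
    each cycle, and the last group needs depth - 1 further cycles.
    """
    groups = math.ceil(num_insns / width)
    return groups + depth - 1


# The seven-instruction sequence from the ideal diagrams.
print(ideal_cycles(7, width=1))  # scalar
print(ideal_cycles(7, width=2))  # 2-way superscalar
```

Under these assumptions the two calls reproduce the last writeback cycles shown in the ideal diagrams (11 and 8).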

Superscalar Pipeline Diagrams - Realistic

scalar              1  2  3  4  5  6  7  8  9  10 11 12
lw 0(r1)→r2         F  D  X  M  W
lw 4(r1)→r3            F  D  X  M  W
lw 8(r1)→r4               F  D  X  M  W
add r4,r5→r6                 F  d* D  X  M  W
add r2,r3→r7                       F  D  X  M  W
add r7,r6→r8                          F  D  X  M  W
lw 0(r8)→r9                              F  D  X  M  W

2-way superscalar   1  2  3  4  5  6  7  8  9  10 11 12
lw 0(r1)→r2         F  D  X  M  W
lw 4(r1)→r3         F  D  X  M  W
lw 8(r1)→r4            F  D  X  M  W
add r4,r5→r6           F  d* d* D  X  M  W
add r2,r3→r7              F  d* D  X  M  W
add r7,r6→r8                    F  D  X  M  W
lw 0(r8)→r9                     F  d* D  X  M  W

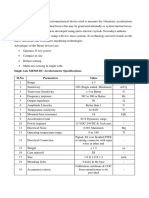

Superscalar CPI Calculations
• Base CPI for scalar pipeline is 1
• Base CPI for N-way superscalar pipeline is 1/N
  • Amplifies stall penalties
  • Assumes no data stalls (an overly optimistic assumption)
• Example: Branch penalty calculation
  • 20% branches, 75% taken, no explicit branch prediction
• Scalar pipeline
  • 1 + 0.2*0.75*2 = 1.3 → 1.3/1 = 1.3 → 30% slowdown
• 2-way superscalar pipeline
  • 0.5 + 0.2*0.75*2 = 0.8 → 0.8/0.5 = 1.6 → 60% slowdown
• 4-way superscalar
  • 0.25 + 0.2*0.75*2 = 0.55 → 0.55/0.25 = 2.2 → 120% slowdown

Simplest Superscalar: Split Floating Point
[Figure: pipeline with I$, BP, I-regfile, D$, F-regfile and FP units E*, E+, E/]
• Split integer and floating point
• 1 integer + 1 FP
+ Limited modifications
- Limited speedup
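The branch-penalty arithmetic on the CPI calculations slide can be checked with a few lines of code. This is a minimal sketch using the slide's numbers (20% branches, 75% taken, 2-cycle penalty); the function name `cpi` and its parameter names are illustrative.

```python
def cpi(width: int, branch_frac: float = 0.20,
        taken_frac: float = 0.75, penalty: int = 2) -> float:
    """Base CPI of 1/width plus the taken-branch stall cycles per instruction."""
    base = 1.0 / width
    return base + branch_frac * taken_frac * penalty


for n in (1, 2, 4):
    total = cpi(n)
    base = 1.0 / n
    # Slowdown is measured relative to the stall-free base CPI of 1/N.
    print(f"{n}-way: CPI = {total:.2f}, slowdown = {total / base - 1:.0%}")
```

The stall term is the same 0.3 cycles per instruction at every width, which is why the relative slowdown grows as the base CPI shrinks.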

A Four-issue Pipeline (2 integer, 2 FP)
[Figure: four-issue pipeline with I$, BP, regfile, two D$ ports, F-regfile and FP units E*, E+, E/]
• 2 integer + 2 FP
• Similar to Alpha 21164
• Floating point loads in integer pipe

A Typical Dual-Issue Pipeline
[Figure: dual-issue pipeline (BP, I$, regfile, D$)]
• Fetch an entire 16B or 32B cache block
  • 4 to 8 instructions (assuming 4-byte fixed length instructions)
  • Predict a single branch per cycle
• Parallel decode
  • Need to check for conflicting instructions
  • Output of I1 is an input to I2
  • Other stalls, too (for example, load-use delay)

A Typical Dual-Issue Pipeline
[Figure: dual-issue pipeline (BP, I$, regfile, D$)]
• Multi-ported register file
  • Larger area, latency, power, cost, complexity
• Multiple execution units
  • Simple adders are easy, but bypass paths are expensive
• Memory unit
  • Single load per cycle (stall at decode) probably okay for dual issue
  • Alternative: add a read port to data cache
    • Larger area, latency, power, cost, complexity

Superscalar Challenges - Front End
• Wide instruction fetch
  • Modest: need multiple instructions per cycle
  • Aggressive: predict multiple branches, trace cache
• Wide instruction decode
  • Replicate decoders
• Wide instruction issue
  • Determine when instructions can proceed in parallel
  • Not all combinations possible
  • More complex stall logic - order N² for N-wide machine
• Wide register read
  • One port for each register read
  • Each port needs its own set of address and data wires
  • Example, 4-wide superscalar → 8 read ports

Superscalar Challenges - Back End
• Wide instruction execution
  • Replicate arithmetic units
  • Multiple cache ports
• Wide instruction register writeback
  • One write port per instruction that writes a register
  • Example, 4-wide superscalar → 4 write ports
• Wide bypass paths
  • More possible sources for data values
  • Order (N² * P) for N-wide machine with execute pipeline depth P
• Fundamental challenge:
  • Amount of ILP (instruction-level parallelism) in the program
  • Compiler must schedule code and extract parallelism

How Much ILP is There?
• The compiler tries to "schedule" code to avoid stalls
  • Even for scalar machines (to fill load-use delay slot)
  • Even harder to schedule multiple-issue (superscalar)
• How much ILP is common?
  • Greatly depends on the application
    • Consider memory copy
    • Unroll loop, lots of independent operations
  • Other programs, less so
• Even given unbounded ILP, superscalar has limits
  • IPC (or CPI) vs clock frequency trade-off
  • Given these challenges, what is reasonable N? 3 or 4 today

Wide Decode
[Figure: decode stage with regfile]
• What is involved in decoding multiple (N) insns per cycle?
• Actually doing the decoding?
  • Easy if fixed length (multiple decoders), doable if variable length
• Reading input registers?
  - 2N register read ports (latency ∝ #ports)
  + Actually less than 2N, most values come from bypasses
  • More about this in a bit
• What about the stall logic?

N² Dependence Cross-Check
• Stall logic for 1-wide pipeline with full bypassing
  • Full bypassing → load/use stalls only
    X/M.op==LOAD && (D/X.rs1==X/M.rd || D/X.rs2==X/M.rd)
  • Two "terms": ∝ 2N
• Now: same logic for a 2-wide pipeline
    X/M1.op==LOAD && (D/X1.rs1==X/M1.rd || D/X1.rs2==X/M1.rd) ||
    X/M1.op==LOAD && (D/X2.rs1==X/M1.rd || D/X2.rs2==X/M1.rd) ||
    X/M2.op==LOAD && (D/X1.rs1==X/M2.rd || D/X1.rs2==X/M2.rd) ||
    X/M2.op==LOAD && (D/X2.rs1==X/M2.rd || D/X2.rs2==X/M2.rd)
  • Eight "terms": ∝ 2N²
    • N² dependence cross-check
  • Not quite done, also need
    • D/X2.rs1==D/X1.rd || D/X2.rs2==D/X1.rd

Wide Execute
• What is involved in executing N insns per cycle?
• Multiple execution units: N of every kind?
  • N ALUs? OK, ALUs are small
  • N FP dividers? No, FP dividers are huge and fdiv is uncommon
  • How many branches per cycle? How many loads/stores per cycle?
  • Typically some mix of functional units proportional to insn mix
    • Intel Pentium: 1 any + 1 ALU
    • Alpha 21164: 2 integer (including 2 loads) + 2 FP

Wide Memory Access
[Figure: D$]
• What about multiple loads/stores per cycle?
  • Probably only necessary on processors 4-wide or wider
  • More important to support multiple loads than multiple stores
    • Insn mix: loads (~20-25%), stores (~10-15%)
  • Alpha 21164: two loads or one store per cycle
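The load-use stall condition on the dependence cross-check slide generalizes from 1-wide and 2-wide to any width N. The sketch below is a software model of that logic, not a hardware description; the `Insn` record and the function names are hypothetical, and the latch names D/X and X/M are represented as plain Python lists.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Insn:
    op: str
    rd: Optional[int] = None   # destination register, if any
    rs1: Optional[int] = None  # first source register, if any
    rs2: Optional[int] = None  # second source register, if any


def load_use_stall(dx: List[Insn], xm: List[Insn]) -> bool:
    """True if any insn in D/X reads the destination of a load in X/M."""
    for producer in xm:
        if producer.op != "LOAD" or producer.rd is None:
            continue
        for consumer in dx:
            if producer.rd in (consumer.rs1, consumer.rs2):
                return True
    return False


def intra_group_dependence(group: List[Insn]) -> bool:
    """True if a later insn in the group reads an earlier insn's destination."""
    for i, producer in enumerate(group):
        if producer.rd is None:
            continue
        for consumer in group[i + 1:]:
            if producer.rd in (consumer.rs1, consumer.rs2):
                return True
    return False


def load_use_terms(width: int) -> int:
    """Register comparisons: 2 sources x N consumers x N producers."""
    return 2 * width * width


xm = [Insn("LOAD", rd=4, rs1=1), Insn("LOAD", rd=3, rs1=1)]
dx = [Insn("ADD", rd=6, rs1=4, rs2=5), Insn("ADD", rd=7, rs1=2, rs2=3)]
print(load_use_stall(dx, xm))
print(load_use_terms(1), load_use_terms(2))
```

The nested loops make the quadratic growth visible: every consumer source is compared against every producer destination, which is the 2N² term count quoted on the slide.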

D$ Bandwidth: Multi-Porting, Replication
• How to provide additional D$ bandwidth?
  • Have already seen split I$/D$, but that gives you just one D$ port
  • How to provide a second (maybe even a third) D$ port?
• Option #1: multi-porting
  + Most general solution, any two accesses per cycle
  - Expensive in terms of latency, area (cost), and power
• Option #2: replication
  • Additional read bandwidth only, but writes must go to all replicas
  + General solution for loads, no latency penalty
  - Not a solution for stores (that's OK), area (cost), power penalty
  • Is this what Alpha 21164 does?

D$ Bandwidth: Banking
• Option #3: banking (or interleaving)
  • Divide D$ into "banks" (by address), 1 access/bank-cycle
  • Bank conflict: two accesses to same bank → one stalls
  + No latency, area, power overheads (latency may even be lower)
  + One access per bank per cycle, assuming no conflicts
  - Complex stall logic → address not known until execute stage
  - To support N accesses, need 2N+ banks to avoid frequent conflicts
• Which address bit(s) determine bank?
  • Offset bits? Individual cache lines spread among different banks
    + Fewer conflicts
    - Must replicate tags across banks, complex miss handling
  • Index bits? Banks contain complete cache lines
    - More conflicts
    + Tags not replicated, simpler miss handling

Wide Register Read/Write
• How many register file ports to execute N insns per cycle?
  • Nominally, 2N read + N write (2 read + 1 write per insn)
  - Latency, area ∝ #ports²
  • In reality, fewer than that
    • Read ports: many values come from bypass network
    • Write ports: stores, branches (35% insns) don't write registers
• Replication works great for regfiles (used in Alpha 21164)
• Banking? Not so much

Wide Bypass
[Figure: bypass network around the regfile]
• N² bypass network
  - N+1 input muxes at each ALU input
  - N² point-to-point connections
  - Routing lengthens wires
  - Expensive metal layer crossings
  - Heavy capacitive load
• And this is just one bypass stage (MX)!
  • There is also WX bypassing
  • Even more for deeper pipelines
• One of the big problems of superscalar
• Implemented as bit-slicing
  • 64 1-bit bypass networks
  • Mitigates routing problem somewhat
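The choice of bank-select bits on the banking slide can be illustrated with a small address calculation. The sketch below assumes a 4-bank cache with 64-byte lines and 8-byte accesses; these sizes, and the names `bank_by_offset`, `bank_by_index` and `conflicts`, are assumptions made for the example, not values from the slides.

```python
LINE_BYTES = 64  # assumed cache line size
WORD_BYTES = 8   # assumed access granularity
NUM_BANKS = 4    # assumed bank count


def bank_by_offset(addr: int) -> int:
    """Bank chosen by block-offset bits: a line's words spread across banks."""
    return (addr // WORD_BYTES) % NUM_BANKS


def bank_by_index(addr: int) -> int:
    """Bank chosen by index bits: each bank holds complete cache lines."""
    return (addr // LINE_BYTES) % NUM_BANKS


def conflicts(addrs, bank_fn) -> bool:
    """True if two of the same-cycle accesses map to the same bank."""
    banks = [bank_fn(a) for a in addrs]
    return len(set(banks)) < len(banks)


# Two loads to adjacent words of the same cache line.
pair = [0x1000, 0x1008]
print(conflicts(pair, bank_by_offset))
print(conflicts(pair, bank_by_index))
```

With these assumed sizes, the adjacent-word pair lands in different banks under offset-bit selection but collides under index-bit selection, which matches the "fewer conflicts" versus "more conflicts" trade-off listed on the slide.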

Aside: Not All N² Created Equal
• N² bypass vs. N² dependence cross-check
  • Which is the bigger problem?
• N² bypass, by far
  • 32- or 64-bit quantities (vs. 5-bit)
  • Multiple levels (MX, WX) of bypass (vs. 1 level of stall logic)
  • Must fit in one clock period with ALU (vs. not)
• Dependence cross-check not even 2nd biggest N² problem
  • Regfile is also an N² problem (think latency where N is #ports)
  • And also more serious than cross-check

Clustering
• Clustering: mitigates N² bypass
  • Group ALUs into K clusters
  • Full bypassing within a cluster
  • Limited bypassing between clusters
    • With 1 cycle delay
  • (N/K) + 1 inputs at each mux
  • (N/K)² bypass paths in each cluster
• Steering: key to performance
  • Steer dependent insns to same cluster
  • Statically (compiler) or dynamically
• E.g., Alpha 21264
  • Bypass wouldn't fit into clock cycle
  • 4-wide, 2 clusters, static steering
    • Each cluster has register file replica
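The mux and path counts on the clustering slide are easy to tabulate. The sketch below counts only the full-bypass paths inside clusters for a single bypass stage, ignoring the limited inter-cluster paths, and assumes N is divisible by K; the function name `bypass_cost` is illustrative.

```python
def bypass_cost(n: int, k: int = 1) -> dict:
    """Mux inputs and intra-cluster bypass paths for N ALUs in K clusters."""
    if n % k != 0:
        raise ValueError("n must be divisible by k")
    per_cluster = n // k
    return {
        "mux_inputs": per_cluster + 1,               # (N/K) + 1
        "paths_per_cluster": per_cluster ** 2,       # (N/K)^2
        "total_paths": k * per_cluster ** 2,         # summed over clusters
    }


print(bypass_cost(4))       # unclustered 4-wide machine
print(bypass_cost(4, k=2))  # 4-wide machine split into 2 clusters
```

For the 4-wide, 2-cluster configuration mentioned for the Alpha 21264, this counting gives 3-input muxes instead of 5-input ones and 4 paths per cluster instead of 16 overall.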

Wide Fetch - Sequential Instructions
[Figure: I$ and BP fetching sequential insns 1020, 1021, 1022, 1023]
• What is involved in fetching multiple instructions per cycle?
• In same cache block? → no problem
  • Favors larger block size (independent of hit rate)
  • Compilers align basic blocks to I$ lines (.align assembly directive)
    - Reduces I$ capacity
    + Increases fetch bandwidth utilization (more important)
• In multiple blocks? → Fetch block A and A+1 in parallel
  • Banked I$ + combining network
  • May add latency (add pipeline stages to avoid slowing down clock)

Wide Non-Sequential Fetch
• Two related questions
  • How many branches predicted per cycle?
  • Can we fetch across the branch if it is predicted "taken"?
• Simplest, most common organization: "1" and "No"
  • One prediction, discard post-branch insns if prediction is "taken"
  • Lowers effective fetch width and IPC
  • Average number of instructions per taken branch?
    • Assume: 20% branches, 50% taken → ~10 instructions
  • Consider a 10-instruction loop body with an 8-issue processor
    • Without smarter fetch, ILP is limited to 5 (not 8)
• Compiler can help
  • Reduce taken branch frequency (e.g., unroll loops)
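The two numbers on the non-sequential fetch slide (about 10 instructions per taken branch, and an effective ILP of 5 for a 10-instruction loop on an 8-issue machine) can be reproduced as follows. This is a sketch under the slide's "one prediction, no fetch across a taken branch" organization, assuming the loop body starts at a fetch-block boundary; the function names are illustrative.

```python
import math


def insns_per_taken_branch(branch_frac: float, taken_frac: float) -> float:
    """Average run length between taken branches."""
    return 1.0 / (branch_frac * taken_frac)


def loop_fetch_ipc(body_insns: int, width: int) -> float:
    """Fetch-limited IPC when fetch cannot continue past the loop's taken branch."""
    cycles_per_iteration = math.ceil(body_insns / width)
    return body_insns / cycles_per_iteration


print(insns_per_taken_branch(0.20, 0.50))
print(loop_fetch_ipc(10, 8))
```

The loop needs two fetch cycles per iteration (8 instructions, then the remaining 2), so 10 instructions in 2 cycles gives the limit of 5 quoted on the slide.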

Parallel Non-Sequential Fetch
[Figure: two I$ banks (b0, b1) and BP]
• Allowing "embedded" taken branches is possible
  • Requires smart branch predictor, multiple I$ accesses in one cycle
• Can try pipelining branch prediction and fetch
  • Branch prediction stage only needs PC
  • Transmits two PCs to fetch stage, next PC and next-next PC
  • Elongates pipeline, increases branch penalty
  • Pentium II & III do something like this

Trace Cache
[Figure: trace cache (T$) and trace predictor (TP)]
• Trace cache (T$) [Peleg+Weiser, Rotenberg+]
  • Overcomes serialization of prediction and fetch by combining them
  • New kind of I$ that stores dynamic, not static, insn sequences
    • Blocks can contain statically non-contiguous insns
    • Tag: PC of first insn + N/T of embedded branches
  • Used in Pentium 4 (actually stores decoded µops)
• Coupled with trace predictor (TP)
  • Predicts next trace, not next branch

Trace Cache Example
• Traditional instruction cache

  Tag   Data (insns)
  0     addi,beq #4,ld,sub
  4     st,call #32,ld,add

                       1   2
  0: addi r1,4,r1      F   D
  1: beq r1,#4         F   D
  4: st r1,4(sp)       f*  F
  5: call #32          f*  F

• Trace cache

  Tag   Data (insns)
  0:T   addi,beq #4,st,call #32

                       1   2
  0: addi r1,4,r1      F   D
  1: beq r1,#4         F   D
  4: st r1,4(sp)       F   D
  5: call #32          F   D

• Traces can pre-decode dependence information
  • Helps fix the N² dependence check problem

Pentium4 Trace Cache
• Pentium4 has a trace cache
  • But doesn't use it to solve the branch prediction/fetch problem
  • Uses it to solve decode problem instead
  • Traces contain decoded RISC µops (not CISC x86 insns)
  • Traces are short (3 µops each)
• What is the "decoding" problem?
  • Breaking x86 insns into µops is slow and area-/energy-consuming
  • Especially problematic is converting x86 insns into multiple µops
    • Average µop/x86 insn ratio is 1.6-1.7
  • Pentium II (and III) only had 1 multiple-µop decoder
    • Big performance hit vis-à-vis AMD's Athlon (which had 3)
  • Pentium4 uses T$ to "simulate" multiple multiple-µop decoders
    • And to shorten pipeline (which is still 22 stages)
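The trace cache tag described on the trace cache slides (PC of the first instruction plus the taken/not-taken outcomes of embedded branches) maps naturally onto a dictionary key. The sketch below is a toy software model with unbounded capacity and no replacement policy; the class `TraceCache` and its methods are hypothetical names for illustration.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# A tag is (start PC, outcomes of embedded branches), e.g. (0, (True,)) for "0:T".
TraceTag = Tuple[int, Tuple[bool, ...]]


class TraceCache:
    def __init__(self) -> None:
        self._lines: Dict[TraceTag, List[str]] = {}

    def fill(self, start_pc: int, outcomes: Sequence[bool],
             insns: Sequence[str]) -> None:
        """Store a dynamic instruction sequence under its tag."""
        self._lines[(start_pc, tuple(outcomes))] = list(insns)

    def lookup(self, start_pc: int,
               predicted: Sequence[bool]) -> Optional[List[str]]:
        """Return the trace only if PC and predicted branch outcomes both match."""
        return self._lines.get((start_pc, tuple(predicted)))


tc = TraceCache()
# The "0:T" line from the trace cache example: the beq at PC 1 is taken.
tc.fill(0, [True], ["addi r1,4,r1", "beq r1,#4", "st r1,4(sp)", "call #32"])

print(tc.lookup(0, [True]))   # hit: all four insns available in one fetch
print(tc.lookup(0, [False]))  # miss: same PC, different predicted direction
```

Keying on the branch outcomes is what lets one fetch deliver instructions from both sides of a taken branch, at the cost of storing the same static instructions in more than one trace.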

Aside: Multiple-issue CISC
• How do we apply superscalar techniques to CISC
  • Such as x86
  • Or CISCy ugly instructions in some RISC ISAs
• Break "macro-ops" into "micro-ops"
  • Also called µops or RISC-ops
  • A typical CISCy instruction "add [r1], [r2] → [r3]" becomes:
    • Load [r1] → t1 (t1 is a temp. register, not visible to software)
    • Load [r2] → t2
    • Add t1, t2 → t3
    • Store t3 → [r3]
  • However, conversion is expensive (latency, area, power)
  • Solution: cache converted instructions in trace cache
    • Used by Pentium 4
  • Internal pipeline manipulates only these RISC-like instructions

Multiple-Issue Implementations
• Statically-scheduled (in-order) superscalar
  + Executes unmodified sequential programs
  - Hardware must figure out what can be done in parallel
  • E.g., Pentium (2-wide), UltraSPARC (4-wide), Alpha 21164 (4-wide)
• Very Long Instruction Word (VLIW)
  + Hardware can be dumb and low power
  - Compiler must group parallel insns, requires new binaries
  • E.g., TransMeta Crusoe (4-wide)
• Explicitly Parallel Instruction Computing (EPIC)
  • A compromise: compiler does some, hardware does the rest
  • E.g., Intel Itanium (6-wide)
• Dynamically-scheduled superscalar
  • Pentium Pro/II/III (3-wide), Alpha 21264 (4-wide)
• We've already talked about statically-scheduled superscalar
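The macro-op to micro-op expansion from the multiple-issue CISC aside can be sketched as a small function. This is a toy model for a three-operand instruction where bracketed operands denote memory; the function `crack` and the textual µop format are assumptions for illustration and do not reflect any real decoder.

```python
from typing import List


def crack(op: str, src1: str, src2: str, dst: str) -> List[str]:
    """Expand one CISC-style insn into RISC-like µops using temp registers."""
    uops: List[str] = []
    temp_count = 0

    def new_temp() -> str:
        nonlocal temp_count
        temp_count += 1
        return f"t{temp_count}"  # temps are not visible to software

    def read(operand: str) -> str:
        # A memory source operand becomes a load into a fresh temp.
        if operand.startswith("["):
            t = new_temp()
            uops.append(f"load {operand} -> {t}")
            return t
        return operand

    a = read(src1)
    b = read(src2)
    if dst.startswith("["):
        # A memory destination becomes compute-into-temp plus a store.
        t = new_temp()
        uops.append(f"{op} {a}, {b} -> {t}")
        uops.append(f"store {t} -> {dst}")
    else:
        uops.append(f"{op} {a}, {b} -> {dst}")
    return uops


for uop in crack("add", "[r1]", "[r2]", "[r3]"):
    print(uop)
```

For the slide's example instruction this yields the same four-µop sequence (two loads, an add, a store), while a register-only instruction passes through as a single µop.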

VLIW
• Hardware-centric multiple issue problems
  • Wide fetch+branch prediction, N² bypass, N² dependence checks
  • Hardware solutions have been proposed: clustering, trace cache
• Software-centric: very long insn word (VLIW)
  • Effectively, a 1-wide pipeline, but unit is an N-insn group
  • Compiler guarantees insns within a VLIW group are independent
    • If no independent insns, slots filled with nops
  • Group travels down pipeline as a unit
  + Simplifies pipeline control (no rigid vs. fluid business)
  + Cross-checks within a group unnecessary
  • Downstream cross-checks still necessary
  • Typically "slotted": 1st insn must be ALU, 2nd mem, etc.
  + Further simplification

History of VLIW
• Started with "horizontal microcode"
• Academic projects
  • Yale ELI-512 [Fisher, '85]
  • Illinois IMPACT [Hwu, '91]
• Commercial attempts
  • Multiflow [Colwell+Fisher, '85] → failed
  • Cydrome [Rau, '85] → failed
  • Motorola/TI embedded processors → successful
  • Intel Itanium [Colwell,Fisher+Rau, '97] → ??
  • Transmeta Crusoe [Ditzel, '99] → mostly failed
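The VLIW slide's rule that instructions within a group must be independent, with unused slots filled by nops, can be illustrated with a simple packer. The sketch below packs instructions in program order without reordering and ignores slot restrictions; a real VLIW compiler would also reorder code to fill slots. The function `pack_vliw` and the `(text, dest, sources)` tuple format are assumptions for the example.

```python
from typing import List, Optional, Sequence, Tuple

# (assembly text, destination register or None, source registers)
Insn = Tuple[str, Optional[str], Sequence[str]]


def pack_vliw(insns: Sequence[Insn], width: int) -> List[List[str]]:
    """Greedy in-order packing into groups of `width`, padding with nops."""
    groups: List[List[str]] = []
    current: List[str] = []
    written = set()  # registers written by insns already in the current group

    def flush() -> None:
        groups.append(current + ["nop"] * (width - len(current)))

    for text, dest, sources in insns:
        depends = any(s in written for s in sources) or dest in written
        if current and (depends or len(current) == width):
            flush()
            current, written = [], set()
        current.append(text)
        if dest is not None:
            written.add(dest)
    if current:
        flush()
    return groups


# The dependent sequence from the "realistic" pipeline diagrams.
program = [
    ("lw 0(r1)->r2", "r2", ["r1"]),
    ("lw 4(r1)->r3", "r3", ["r1"]),
    ("lw 8(r1)->r4", "r4", ["r1"]),
    ("add r4,r5->r6", "r6", ["r4", "r5"]),
    ("add r2,r3->r7", "r7", ["r2", "r3"]),
    ("add r7,r6->r8", "r8", ["r7", "r6"]),
    ("lw 0(r8)->r9", "r9", ["r8"]),
]
for group in pack_vliw(program, width=2):
    print(group)
```

Without reordering, the seven instructions occupy five 2-wide groups with three nop slots, which hints at why VLIW performance and code size depend so heavily on the compiler's scheduling.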

Pure and Tainted VLIW
• Pure VLIW: no hardware dependence checks at all
  • Not even between VLIW groups
  + Very simple and low power hardware
  • Compiler responsible for scheduling stall cycles
  • Requires precise knowledge of pipeline depth and structure
    - These must be fixed for compatibility
    - Doesn't support caches well
  • Used in some cache-less micro-controllers, but not generally useful
• Tainted (more realistic) VLIW: inter-group checks
  • Compiler doesn't schedule stall cycles
  + Precise pipeline depth and latencies not needed, can be changed
  + Supports caches
  • TransMeta Crusoe

What Does VLIW Actually Buy You?
+ Simpler I$/branch prediction
+ Slightly simpler dependence check logic
• Doesn't help bypasses or regfile
  • Which are the much bigger problems
  • Although clustering and replication can help VLIW, too
• Not compatible across machines of different widths
  • Is non-compatibility worth all of this?
• PS: how did TransMeta deal with the compatibility problem?
  • Dynamically translates x86 to internal VLIW

EPIC
• Tainted VLIW
  • Compatible across pipeline depths
  • But not across pipeline widths and slot structures
  • Must re-compile if going from 4-wide to 8-wide
  • TransMeta sidesteps this problem by re-compiling transparently
• EPIC (Explicitly Parallel Insn Computing)
  • New VLIW (Variable Length Insn Words)
  • Implemented as "bundles" with explicit dependence bits
  • Code is compatible with different "bundle" width machines
  • Compiler discovers as much parallelism as it can, hardware does rest
  • E.g., Intel Itanium (IA-64)
    • 128-bit bundles (3 41-bit insns + 5 template bits that encode dependence information)
• Still does not address bypassing or register file issues

Trends in Single-Processor Multiple Issue

        486   Pentium  PentiumII  Pentium4  Itanium  ItaniumII  Core2
Year    1989  1993     1998       2001      2002     2004       2006
Width   [values missing from this copy]

• Issue width has saturated at 4-6 for high-performance cores
  • Canceled Alpha 21464 was 8-way issue
  • No justification for going wider
  • Out-of-order execution (or EPIC) needed to exploit 4-6 effectively
• For high-performance/power cores, issue width is 1-2
  • Out-of-order execution not needed
  • Multi-threading (a little later) helps cope with cache misses

Multiple Issue Redux
• Multiple issue
  • Needed to expose insn level parallelism (ILP) beyond pipelining
  • Improves performance, but reduces utilization
  • 4-6 way issue is about the peak issue width currently justifiable
• Problem spots
  • Fetch + branch prediction → trace cache?
  • N² bypass → clustering?
  • Register file → replication?
• Implementations
  • (Statically-scheduled) superscalar, VLIW/EPIC
• Are there more radical ways to address these challenges?

Research: Grid Processor
• Grid processor (TRIPS) [Nagarajan+, MICRO'01]
  • EDGE (Explicit Dataflow Graph Execution) execution model
  • Holistic attack on many fundamental superscalar problems
    • Specifically, the nastiest one: N² bypassing
    • But also N² dependence check
    • And wide-fetch + branch prediction
  • Two-dimensional VLIW
    • Horizontal dimension is insns in one parallel group
    • Vertical dimension is several vertical groups
  • Executes atomic code blocks
    • Uses predication and special scheduling to avoid taken branches
  • UT-Austin research project
    • Fabricated an actual chip with help from IBM

Grid Processor
[Figure: NH and I$ feeding a regfile with four read ports, a 4x4 grid of ALUs, and D$]
• Components
  • next block logic/predictor (NH), I$, D$, regfile
  • NxN ALU grid: here 4x4
• Pipeline stages
  • Fetch block to grid
  • Read registers
  • Execute/memory
    • Cascade
  • Write registers
• Block atomic
  • No intermediate regs
  • Grid limits size/shape

Aside: SAXPY
• SAXPY (Single-precision A X Plus Y)
  • Linear algebra routine (used in solving systems of equations)
  • Part of early "Livermore Loops" benchmark suite

for (i=0;i<N;i++)
   Z[i]=A*X[i]+Y[i];

0: ldf X(r1),f1      // loop
1: mulf f0,f1,f2     // A in f0
2: ldf Y(r1),f3      // X,Y,Z are constant addresses
3: addf f2,f3,f4
4: stf f4,Z(r1)
5: addi r1,4,r1      // i in r1
6: blt r1,r2,0       // N*4 in r2

Grid Processor SAXPY

read r2,0    read f1,0    read r1,0,1    nop
pass 0       pass 1       pass -1,1      ldf X,-1
pass 0       pass 0,1     mulf 1         ldf Y,0
pass 0       addi         pass 1         addf 0
blt          nop          pass 0,r1      stf Z

• A code block for this Grid processor has 5 4-insn words
  • Atomic unit of execution
• Some notes about Grid ISA
  • read: read register from register file
  • pass: null operation
  • -1,0,1: routing directives send result to next word
    • one insn left (-1), insn straight down (0), one insn right (1)
  • Directives specify value flow, no need for interior registers

Grid Processor SAXPY Cycle 1
• Map code block to grid
[Figure, repeated on each cycle slide: NH, I$, regfile reading r2, f1, r1, and the 4x4 grid holding the pass, ldf, mulf, addi, addf, stf and branch operations, with D$ alongside]

Grid Processor SAXPY Cycle 2
• Read registers

Grid Processor SAXPY Cycle 3
• Execute first grid row
• Execution proceeds in "data flow" fashion
  • Not lock step

Grid Processor SAXPY Cycle 4
• Execute second grid row

Grid Processor SAXPY Cycle 5
• Execute third grid row
  • Recall, mulf takes 5 cycles

Grid Processor SAXPY Cycle 6
• Execute third grid row

Grid Processor SAXPY
• When all instructions are done
  • Write registers and next code block PC

Grid Processor SAXPY Performance
• Performance
  • 1 cycle fetch
  • 1 cycle read regs
  • 8 cycles execute
  • 1 cycle write regs
  • 11 cycles total
• Utilization
  • 7 / (11 * 16) = 4%
• What's the point?
  + Simpler components
  + Faster clock?

Grid Processor Redux
+ No hardware dependence checks, period
  • Insn placement encodes dependences, still get dynamic issue
+ Simple, forward only, short-wire bypassing
  • No wraparound routing, no metal layer crossings, low input muxes
- Code size
  • Lots of nop and pass operations
- Non-compatibility
  • Code assumes horizontal and vertical grid layout
- No scheduling between hyperblocks
  • Can be overcome, but is pretty nasty
- Poor utilization
  • Overcome by multiple concurrent executing hyperblocks
• Interesting: TRIPS has morphed into something else
  • Grid is gone, replaced by simpler "window scheduler"
  • Forward nature of ISA exploited to create tiled implementations

Next Up
• Extracting more ILP via
  • Static scheduling by compiler
  • Dynamic scheduling in hardware


- Rabbit - Overview of The Rabbit 4000 Product Line & Dynamic C SoftwareDocument62 pagesRabbit - Overview of The Rabbit 4000 Product Line & Dynamic C SoftwareJose Luis Castro AguilarNo ratings yet

- Lec 01Document34 pagesLec 01Lekha PylaNo ratings yet

- Unit4 Session1 Intro To Parallel ComputingDocument24 pagesUnit4 Session1 Intro To Parallel ComputingbhavanabadayNo ratings yet

- This Unit: - ! Multicycle Datapath - ! Clock Vs CPI - ! CPU Performance Equation - ! Performance Metrics - ! BenchmarkingDocument5 pagesThis Unit: - ! Multicycle Datapath - ! Clock Vs CPI - ! CPU Performance Equation - ! Performance Metrics - ! BenchmarkingavssrinivasavNo ratings yet
