International Journal on Recent and Innovation Trends in Computing and Communication

Volume: 2 Issue: 8

ISSN: 2321-8169

2256 - 2260

_______________________________________________________________________________________________

I/O Workload in Virtualized Data Center Using Hypervisor

Mr. M. Rajesh
Research Scholar, Bharath University, Chennai.
goldmraja@gmail.com

Dr. G. Singaravel
Professor and Head of Information Technology, K.S.R. College of Engineering (Autonomous), Tiruchengode.
singaravelg@gmail.com

Abstract: Cloud computing [10] is gaining popularity as a way to virtualize the datacenter and increase flexibility in the use of computation resources. The virtual machine approach can dramatically improve the efficiency, power utilization and availability of costly hardware resources, such as CPU and memory. Previously, virtualization in the datacenter was done in the back end of the Eucalyptus software, with the front end installed on a separate machine. Performance was measured for network I/O applications in the virtualized cloud, and the measurements were analyzed for the performance impact of co-locating applications in terms of throughput and resource sharing effectiveness, including the impact of idle instances on applications running concurrently on the same physical host. This project proposes a virtualization approach that uses the hypervisor to install the Eucalyptus software on a single physical machine to set up a cloud computing environment. With the hypervisor, the front end and back end of Eucalyptus are installed on the same machine. Performance is measured from the interference between CPU-intensive and network-intensive workloads processed in parallel, using the Xen Virtual Machine Monitor. The main motivation of this project is to provide a scalable virtualized datacenter.

Keywords: Cloud computing, virtualization, Hypervisor, Eucalyptus.

__________________________________________________*****_________________________________________________

INTRODUCTION

Cloud Computing [1] is more than a collection of computer resources, as it provides a mechanism to manage those resources. It is the delivery of computing as a service rather than a product, whereby shared resources, software, and information are provided to computers and other devices as a utility over a network. A Cloud Computing platform supports redundant, self-recovering, highly scalable programming models that allow workloads to recover from many inevitable hardware/software failures. The concept of cloud computing and virtualization offers so many innovative opportunities that it is not surprising that there are new announcements every day.

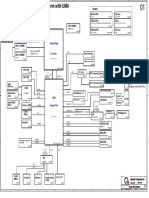

Fig no. 1 Cloud Computing Architecture

Cloud computing is defined as a pool of virtualized computer resources. Based on this virtualization, the Cloud Computing paradigm allows workloads to be deployed and scaled out quickly through the rapid provisioning of virtual machines or physical machines. In a Cloud Computing platform, software is migrating from the desktop into the "clouds" of the Internet, promising users anytime, anywhere access to their programs and data. The innovation will continue and there will be massive value created for customers over the coming years. Most technologists are sold on the fundamentals of cloud computing, which is a realization of the last 20 years of architecture development.

Cloud computing describes a new supplement, consumption, and delivery model for IT services based on Internet protocols, and it typically involves provisioning of dynamically scalable and often virtualized resources. It provides computation, software, data access, and storage services that do not require end-user knowledge of the physical location and configuration of the system that delivers the services.

Cloud computing providers deliver applications via the Internet, which are accessed from a web browser, while the business software and data are stored on servers at a remote location. In some cases, legacy applications (line of business applications that until now have been prevalent in thin client Windows computing) are delivered via a screen-sharing technology, while the computing resources are consolidated at a remote data center location; in other cases, entire business applications have been coded using web-based technologies such as AJAX.

In this paper, we study performance interference among different VMs running on the same hardware platform, with a focus on network I/O processing. The main motivation for targeting our measurement study on the performance interference of concurrent network I/O workloads in a virtualized environment is that network I/O applications are becoming the dominant workloads in current cloud computing systems. Through careful design of our measurement study and of the set of performance metrics we use to characterize the network I/O workloads, we derive some important factors of I/O performance conflicts based on application throughput interference and net I/O interference. Our performance measurement and workload analysis also provide some insights on performance optimizations for the CPU scheduler and I/O channel, and on efficient management of workload and VM configurations.

IJRITCC | August 2014, Available @ http://www.ijritcc.org

VIRTUALIZATION

Virtualization [9],[6],[5] in computing is the process of creating a virtual (rather than actual) version of something, such as a hardware platform, an operating system, a storage device or network resources. It is software that acts like hardware. The software used for virtualization is known as a hypervisor. Several hypervisors are used for virtualization, such as Xen, VMware, and KVM.

XEN HYPERVISOR

Xen is a Virtual-Machine Monitor (VMM) providing services that allow multiple computer operating systems to execute on the same computer hardware concurrently. The Xen community develops and maintains Xen as free software, licensed under the GNU General Public License (GPLv2). It is available for the IA-32, x86-64, Itanium and ARM computer architectures.

VMWARE

VMware is proprietary virtualization software from a company founded in 1998 and based in Palo Alto, California, USA. It is majority owned by Corporation. VMware's desktop software runs on Microsoft Windows, Linux, and Mac OS X, while VMware's enterprise hypervisors for servers, VMware ESX and VMware ESXi, are bare-metal embedded hypervisors that run directly on server hardware without requiring an additional underlying operating system.

Kernel-based Virtual Machine (KVM)

KVM is a virtualization infrastructure for the Linux kernel. It supports native virtualization on processors with hardware virtualization extensions. It also supports a paravirtual Ethernet card, a paravirtual disk I/O controller, a balloon device for adjusting guest memory usage, and a VGA graphics interface using SPICE or VMware drivers.

PARAVIRTUALIZATION

Paravirtualization is a virtualization technique that provides a software interface to virtual machines that is similar but not identical to that of the underlying hardware. The intent of the modified interface is to reduce the portion of the guest's execution time spent performing operations that are substantially more difficult to run in a virtual environment than in a non-virtualized environment.

Paravirtualization provides specially defined 'hooks' that allow the guest(s) and host to request and acknowledge these tasks, which would otherwise be executed in the virtual domain. A successful paravirtualized platform may allow the Virtual Machine Monitor (VMM) to be simpler and may reduce the overall performance degradation of machine execution inside the virtual guest.

Credit-Based CPU Scheduler

Introduction

The credit scheduler [4] is a proportional fair share CPU scheduler built from the ground up to be work conserving on SMP hosts. It is now the default scheduler in the Xen-unstable trunk. The SEDF and BVT schedulers are still optionally available, but the plan of record is for them to be phased out and eventually removed.

Description

Each domain (including the host OS) is assigned a weight and a cap.

Weight: A domain with a weight of 512 will get twice as much CPU as a domain with a weight of 256 on a contended host. Legal weights range from 1 to 65535 and the default is 256.

Cap: The cap optionally fixes the maximum amount of CPU a domain will be able to consume, even if the host system has idle CPU cycles. The cap is expressed as a percentage of one physical CPU: 100 is 1 physical CPU, 50 is half a CPU, 400 is 4 CPUs, and so on. The default, 0, means there is no upper cap.
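To make the interaction of weight and cap concrete, the following Python sketch estimates the CPU share each domain would receive on a fully contended host. It is only an illustration of the proportional-share rule described above, not the Xen implementation: the function name `contended_shares`, the input format, and the way capacity left over by a capped domain is handed to the remaining domains are all assumptions, and the sketch ignores how many VCPUs each domain has.

```python
def contended_shares(domains, num_pcpus):
    """Estimate CPU shares on a contended host (hypothetical helper).

    domains: dict mapping domain name -> (weight, cap), where cap is a
             percentage of one physical CPU and 0 means "no cap".
    Returns a dict mapping domain name -> share, in percent of one CPU.
    """
    remaining = 100.0 * num_pcpus      # total capacity, percent of one CPU
    active = dict(domains)             # domains whose share is still open
    shares = {}
    while active:
        weight_sum = sum(weight for weight, _ in active.values())
        # Find domains whose weight-proportional share would exceed their cap.
        capped = {
            name: cap
            for name, (weight, cap) in active.items()
            if cap > 0 and remaining * weight / weight_sum > cap
        }
        if not capped:
            # Nobody hits a cap: split what is left in proportion to weight.
            for name, (weight, _) in active.items():
                shares[name] = remaining * weight / weight_sum
            break
        # Pin capped domains at their cap and redistribute the rest
        # (assumed here because the scheduler is work conserving).
        for name, cap in capped.items():
            shares[name] = float(cap)
            remaining -= cap
            del active[name]
    return shares


if __name__ == "__main__":
    # Weight 512 versus weight 256 on one contended physical CPU.
    print(contended_shares({"dom0": (256, 0), "vm1": (512, 0)}, num_pcpus=1))
    # Same weights, but vm1 is capped at half a CPU.
    print(contended_shares({"dom0": (256, 0), "vm1": (512, 50)}, num_pcpus=1))
```

In the first call the domain with weight 512 receives twice the share of the domain with weight 256; in the second call the cap limits it to half a CPU regardless of its weight.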

SMP Load Balancing

The credit scheduler automatically load balances guest VCPUs across all available physical CPUs on an SMP host. The administrator does not need to manually pin VCPUs to load balance the system.

Algorithms

Each CPU manages a local run queue of runnable VCPUs. This queue is sorted by VCPU priority. A VCPU's priority can take one of two values, over or under, representing whether or not the VCPU has exceeded its fair share of CPU resource in the ongoing accounting period. When a VCPU is inserted onto a run queue, it is put after all other VCPUs of equal priority.

As a VCPU runs, it consumes credits. Every so often, a system-wide accounting thread recomputes how many credits each active VM has earned and bumps the credits. Negative credits imply a priority of over. Until a VCPU consumes its allotted credits, its priority is under.

On each CPU, at every scheduling decision, the next VCPU to run is picked off the head of the run queue. The scheduling decision is the common path of the scheduler and is therefore designed to be lightweight and efficient. No accounting takes place in this code path.

When a CPU doesn't find a VCPU of priority under on its local run queue, it will look on other CPUs for one. This load balancing guarantees that each VM receives its fair share of CPU resources system-wide. Before a CPU goes idle, it will look on other CPUs to find any runnable VCPU. This guarantees that no CPU remains idle when there is runnable work in the system.
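The run-queue behaviour described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not Xen's scheduler code: the class names `VCPU` and `RunQueue`, the function `pick_next`, and the credit values are all hypothetical, and credit accounting is reduced to a stored number.

```python
UNDER, OVER = 0, 1  # UNDER sorts ahead of OVER on the run queue


class VCPU:
    def __init__(self, name, credits):
        self.name = name
        self.credits = credits

    @property
    def priority(self):
        # Negative credits imply OVER; otherwise the VCPU is still UNDER.
        return OVER if self.credits < 0 else UNDER


class RunQueue:
    """Per-CPU queue of runnable VCPUs, kept sorted by priority."""

    def __init__(self):
        self.vcpus = []

    def insert(self, vcpu):
        # Put the VCPU after all queued VCPUs of equal priority.
        index = len(self.vcpus)
        for i, other in enumerate(self.vcpus):
            if other.priority > vcpu.priority:
                index = i
                break
        self.vcpus.insert(index, vcpu)

    def head(self):
        return self.vcpus[0] if self.vcpus else None

    def pop_head(self):
        return self.vcpus.pop(0)


def pick_next(local, peers):
    """Choose the next VCPU for one CPU (no accounting on this path)."""
    head = local.head()
    if head is not None and head.priority == UNDER:
        return local.pop_head()
    # No local UNDER work: look on the other CPUs for an UNDER VCPU.
    for peer in peers:
        candidate = peer.head()
        if candidate is not None and candidate.priority == UNDER:
            return peer.pop_head()
    # Otherwise run local OVER work if there is any.
    if head is not None:
        return local.pop_head()
    # Before going idle, take any runnable VCPU from another CPU.
    for peer in peers:
        if peer.head() is not None:
            return peer.pop_head()
    return None  # nothing runnable anywhere: the CPU may idle
```

Because the queue is sorted at insertion time, the scheduling decision itself only inspects queue heads, which mirrors the point that the common path is kept lightweight.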

Related work

Most of the efforts to date can be classified into three main categories: (1) performance monitoring and enhancement of VMs hosted on a single physical machine; (2) performance evaluation, enhancement, and migration of VMs running on multiple physical hosts; and (3) performance comparison across different platforms or different implementations of VMMs, such as Xen and KVM, as well as efforts on developing benchmarks. Given that the focus of this paper is on performance measurement and analysis of network I/O applications in a virtualized single host, in this section we provide a brief discussion of the state of the art in the literature on this topic. Most of the research on virtualization in a single host has focused either on developing performance monitoring or profiling tools for the VMM and VMs, or on conducting performance evaluation by varying VM configurations on host capacity utilization or by varying CPU scheduler configurations, especially for I/O related performance measurements. For example, some work has focused on I/O performance improvement by tuning I/O related parameters such as TCP Segmentation Offload and network bridging.

PROPOSED SYSTEM ARCHITECTURE

Proposed Architecture

The proposed architecture uses the hypervisor to install the Eucalyptus software on a single physical machine to set up a cloud computing environment. With the hypervisor, the front end and back end of the Eucalyptus software are installed on the same machine. Performance is measured from the interference between CPU-intensive and network-intensive workloads processed in parallel, using the Xen Virtual Machine Monitor.

EXPERIMENTAL SETUP

A new open-source framework called Eucalyptus has been released that allows users to create private cloud computing grids that are API-compatible with the existing Amazon standards. Eucalyptus leverages existing virtualization technology (the KVM or Xen hypervisors) and popular Linux distributions.

To test Eucalyptus, a simplified two-node cluster was used. A front-end node with two network interfaces was connected to both the campus network and a private test network, and a back-end node was connected only to the private network. Both networks ran at gigabit speeds. The private network was configured to support jumbo frames with a 9000 byte MTU to accelerate EBS performance.

The ATA over Ethernet protocol used as the transport mechanism behind EBS limits the disk request size to a single Ethernet frame for simplicity, and fragments larger client requests. Thus, using the largest Ethernet frame size possible both uses the disk more efficiently and reduces the protocol overhead in relation to the payload.

The front-end node was equipped with two AMD Opteron processors running at 2.4GHz with 4GB of RAM and a 500GB hard drive. It was configured to run the CLC, CC, EBS, and WS3 services. The back-end node was equipped with two Quad-Core AMD Opteron processors running at 3.1GHz with 16GB of RAM and a 500GB hard drive. These processors support the AMD-V virtualization extensions as required for KVM support in Linux. The back-end node was configured to run the NC service and all virtual machine images.
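Returning to the jumbo-frame choice made for the private network, a short Python sketch shows why a larger frame helps when each disk request must fit in a single Ethernet frame. The header sizes used here (22 bytes of ATA over Ethernet headers inside the frame payload and 18 bytes of Ethernet header and trailer) and the whole-sector payload rule are assumptions for illustration, and the function names are hypothetical.

```python
import math

SECTOR_BYTES = 512


def sectors_per_frame(mtu, header_bytes=22):
    """Whole 512-byte sectors that fit in one frame payload.

    header_bytes is an assumed size for the protocol headers carried
    inside the Ethernet payload.
    """
    return (mtu - header_bytes) // SECTOR_BYTES


def frames_for_request(request_bytes, mtu, header_bytes=22):
    """Number of frames a client request is fragmented into."""
    per_frame = sectors_per_frame(mtu, header_bytes)
    if per_frame < 1:
        raise ValueError("MTU too small to carry a single sector")
    sectors = math.ceil(request_bytes / SECTOR_BYTES)
    return math.ceil(sectors / per_frame)


def overhead_ratio(mtu, header_bytes=22, ethernet_bytes=18):
    """Header bytes per payload byte (ethernet_bytes is also assumed)."""
    payload = sectors_per_frame(mtu, header_bytes) * SECTOR_BYTES
    return (header_bytes + ethernet_bytes) / payload


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(mtu, sectors_per_frame(mtu),
              frames_for_request(64 * 1024, mtu),
              round(overhead_ratio(mtu), 4))
```

Under these assumptions a 64 KB client request is split into far fewer frames with a 9000 byte MTU than with a 1500 byte MTU, so each disk request is larger and the header cost per payload byte is smaller.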

To provide a performance baseline, the storage and network components were profiled outside of the virtual machine. For storage, the Seagate Barracuda 7200.11 500GB hard drive has a peak read and write bandwidth of approximately 110MB/s, assuming large block sizes (64kB+) and streaming sequential access patterns. For networking, a virtual switch was provided for bridging. In an ideal cloud computing system, this performance would be available to applications running inside the virtual environment.

Eucalyptus with KVM

In the first configuration, Eucalyptus with the KVM hypervisor was used. This is a default installation of Ubuntu Enterprise Cloud (UEC), which couples Eucalyptus 1.60 with the latest release of Ubuntu 9.10. The key benefit of UEC is ease of installation: it took less than 30 minutes to install and configure the simple two-node system.

Eucalyptus with Xen

In the second configuration, Eucalyptus was used with the Xen hypervisor. Unfortunately, Ubuntu 9.10 is not compatible with Xen when used as the host domain (only as a guest domain). Thus, the CentOS 5.4 distribution was used instead because of its native compatibility with Xen.


PERFORMANCE METRICS

The following metrics are used in our measurement study. They are collected using XenMon [8] and xentop [15].

Server throughput (#req/sec). It quantitatively measures the maximum number of successful requests served per second when retrieving web documents.

Normalized throughput. We typically choose one measured throughput as our baseline reference throughput and normalize the throughputs of different configuration settings against it in order to make adequate comparisons.

Aggregated throughput (#req/sec). We use aggregated throughput as a metric to measure the impact of using a varying number of VMs on the aggregated throughput performance of a physical host.

CPU utilization (%). To understand the CPU resource sharing across VMs running on a single physical machine, we measure the average CPU utilization of each VM, including Domain0 CPU usage and guest domain CPU usage respectively.

Network I/O per second (Kbytes/sec). We measure the amount of network I/O traffic in KB per second, transferred from a remote web server for the corresponding workload.
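The derived metrics above are simple to compute once per-VM measurements are available. The Python sketch below shows one possible way to do so; the function names are hypothetical, the input format is an assumption, and the numbers in the example are made up for illustration and are not results from this study.

```python
def normalized_throughput(measured, baseline):
    """Divide each measured throughput (req/sec) by a chosen baseline."""
    if baseline <= 0:
        raise ValueError("baseline throughput must be positive")
    return {name: value / baseline for name, value in measured.items()}


def aggregated_throughput(per_vm):
    """Sum the throughput (req/sec) of all VMs on one physical host."""
    return sum(per_vm.values())


def network_io_kb_per_sec(bytes_transferred, seconds):
    """Network I/O rate in KB per second over a measurement interval."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return bytes_transferred / 1024 / seconds


if __name__ == "__main__":
    # Illustrative values only, not measurements from the paper.
    per_vm = {"VM1": 400.0, "VM2": 350.0}
    print(aggregated_throughput(per_vm))
    print(normalized_throughput(per_vm, baseline=500.0))
    print(network_io_kb_per_sec(bytes_transferred=2_048_000, seconds=10))
```

Normalizing against a single baseline makes configurations with very different absolute request rates comparable on one scale.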

IMPACT ANALYSIS

In this section we provide a detailed performance

analysis of maintaining idle VM instances, focusing on the

cost and benefit of maintaining idle guest domains in the

presence of network I/O workloads on a separate VM sharing

the same physical host. Concretely, we focus our measurement

study on addressing the following two questions: First, we

want to understand the advantages and drawbacks of keeping

idle instances from the perspectives of both cloud providers

and cloud consumers. Second, we want to measure and

understand the start-up time of creating one or more new guest

domains on a physical host, and its impact on existing

applications. Consider a set of n (n>0) VMs hosted on a physical machine. At any given point in time, a guest domain (VM) can be in one of the following three states: (1) the execution state, in which the guest domain is currently using the CPU; (2) the runnable state, in which the guest domain is on the run queue, waiting to be scheduled for execution on the CPU; and (3) the blocked state, in which the guest domain is blocked and is not on the run queue. A guest domain is called idle when the guest OS is executing its idle loop.
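The three domain states can be modeled directly. The sketch below assumes that a domain's state is sampled at fixed intervals and reports the percentage of samples spent in each state. The class and function names are hypothetical, and this is not how XenMon itself is implemented.

```python
from collections import Counter
from enum import Enum
from typing import Dict, Iterable


class DomainState(Enum):
    EXECUTION = "execution"  # currently using the CPU
    RUNNABLE = "runnable"    # on the run queue, waiting for the CPU
    BLOCKED = "blocked"      # blocked and not on the run queue


def state_shares(samples: Iterable[DomainState]) -> Dict[DomainState, float]:
    """Return the percentage of samples observed in each of the three states."""
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one sample is required")
    # Counter returns 0 for states that never appeared in the samples.
    return {state: 100.0 * counts[state] / total for state in DomainState}


# Hypothetical trace of four equally spaced samples for one guest domain.
trace = [DomainState.EXECUTION, DomainState.BLOCKED,
         DomainState.BLOCKED, DomainState.RUNNABLE]
for state, share in state_shares(trace).items():
    print(f"{state.value}: {share:.1f}%")
```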

MEASUREMENT

We set up our environment with one VM (VM1) running one of the six selected network I/O workloads of 1 KB, 4 KB, 30 KB, 50 KB, 70 KB and 100 KB. The value of each I/O workload characteristic is measured at a 100% workload rate for the given workload type. Compared with the network-intensive workloads of 30 KB, 50 KB, 70 KB and 100 KB files, the CPU-intensive workloads of 1 KB and 4 KB files have at least 30% and 60% lower event and switch costs respectively, because the network I/O processing is more efficient in these cases. We then compare normalized throughput, CPU utilization and network I/O between Domain1 and Domain2, both running an identical 1 KB application at a 50% workload rate.

Concretely, for the 1 KB and 4 KB workloads, the driver domain has to wait about 2.5 times longer on the CPU run queue before being scheduled into the execution state, and the guest domain (VM1) has a 30 times longer waiting time. However, both domains are infrequently blocked while acquiring more CPU resources; in the guest domain in particular, the block time is less than 6%.

We notice that the borderline network-intensive 10 KB workload has the most efficient I/O processing ability, with 7.28 pages per execution, while its event and switch numbers are 15% larger than those of the CPU-intensive workloads. It is interesting to note that initially the I/O execution becomes more and more efficient as file size increases. However, as the file size of the workload grows larger, more and more packets need to be delivered for each request, and the event and switch numbers increase gradually, as observed. Note that the VMM events per second are also related to the request rate of the workload. Though the event number drops slightly for workloads of file size 30-100 KB, the overall event number of network-intensive workloads is still higher than that of CPU-intensive ones. With increasing file size among the shorter workloads (1 KB, 4 KB and 10 KB), VMs are blocked more and more frequently. Finally, I/O execution efficiency starts to decline when the file size is greater than 10 KB. The network I/O workloads that were CPU bound become network bound once the file size exceeds 10 KB, and contention for the network resource grows as the file size of the workload increases. In our experiments, the 100 KB workload shows the highest demand for the network resource. These basic system-level characteristics in our base-case scenario help us to compare and better understand the combination of different workloads and the multiple factors that may cause different levels of performance interference with respect to both throughput and network I/O.
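The grouping of workloads used in this discussion can be summarized in a few lines. The sketch below treats the 10 KB borderline as the cut-off between CPU-intensive and network-intensive workloads. The function name is hypothetical, and applying the cut-off to file sizes other than those measured is an assumption.

```python
BORDERLINE_KB = 10  # the 10 KB workload is described as borderline network-intensive


def classify_workload(file_size_kb: float) -> str:
    """Label a workload by file size, using the 10 KB borderline as the cut-off."""
    if file_size_kb <= 0:
        raise ValueError("file size must be positive")
    if file_size_kb < BORDERLINE_KB:
        return "CPU-intensive"
    return "network-intensive"


# File sizes mentioned in the measurement discussion.
for size in (1, 4, 10, 30, 50, 70, 100):
    print(f"{size} KB: {classify_workload(size)}")
```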

CONCLUSION


Cloud computing offers scalable infrastructure and software off site, saving labor, hardware, and power costs. Financially, the cloud's virtual resources are typically cheaper than dedicated physical resources connected to a personal computer or network. This project proposes using a hypervisor to install the Eucalyptus software on a single physical machine. By using the hypervisor, the front end and back end are installed on the same machine. Performance is then measured based on the interference in parallel processing of CPU-intensive and network-intensive workloads, using the Xen Virtual Machine Monitor. The main motivation of this project is to provide a scalable virtualized data center.

REFERENCES:

[1] V. Chen, D. Kaeli, and D. Murphy, Performance Evaluation of Virtual Appliances, Proc. First International Workshop on Virtualization Performance: Analysis, Characterization, and Tools (VPACT 08), April 2008.

[2] S. Chinni and R. Hiremane, Virtual Machine Device Queues: An Integral Part of Intel Virtualization Technology for Connectivity that Delivers Enhanced Network Performance, white paper, Intel, 2007.

[3] D. Gupta, R. Gardner, L. Cherkasova, XenMon: QoS Monitoring and Performance Profiling Tool, http://www.hpl.hp.com/techreports/2005/HPL-2005-187.html

[4] www.xen.org/files/summit_3/sched.pdf

[5] P. Padala, X. Zhu, Z. Wang, S. Singhal and K. Shin, Performance evaluation of virtualization technologies for server consolidation, HP Laboratories Report, No. HPL-2007-59R1, September 2008.

[6] R. Rose, Survey of system virtualization techniques, Technical report, March 2004.

[7] S. Wardley, E. Goyer, and N. Barcet, Ubuntu enterprise cloud architecture, Technical report, Canonical, August 2009.

[8] http://linux.die.net/man/1/xentop

[9] P. Padala, X. Zhu, Z. Wang, S. Singhal and K. Shin, Performance evaluation of virtualization technologies for server consolidation, HP Laboratories Report, No. HPL-2007-59R1, September 2008.

[10] http://en.wikipedia.org/wiki/Cloud_computing

[11] KVM, http://www.linux-kvm.org/page/Main_Page

[12] Yiduo Mei, Ling Liu, Xing Pu, Sankaran Sivathanu, and Xiaoshe Dong, Performance Analysis of Network I/O Workloads in Virtualized Data Centers, IEEE Transactions on Services Computing, 2010.

[14] Single Root I/O Virtualization and Sharing Specification, revision 1.0, Peripheral Component Interconnect Special Interest Group, Sept. 2007.

[15] D. Gupta, R. Gardner, L. Cherkasova, XenMon: QoS Monitoring and Performance Profiling Tool, http://www.hpl.hp.com/techreports/2005/HPL-2005-187.html

