White Paper: Automating Your Data Collection: Reaching Beyond The Firewall

Uploaded by Rajendiran Sundaravel

Available Formats

You might also like

- Electronic Commerce 10th Edition Gary P Schneider PDFDocument4 pagesElectronic Commerce 10th Edition Gary P Schneider PDFJulius E. Catipon0% (3)

- 100 - Successful Bank TransfersDocument4 pages100 - Successful Bank Transfersdddvf100% (7)

- C5050-062 Urbancode DeployDocument30 pagesC5050-062 Urbancode DeployRAMESHBABU100% (1)

- Digital Health Care Mini Project ReportDocument34 pagesDigital Health Care Mini Project ReportJeevika KsNo ratings yet

- Friend Mapper On MobileDocument6 pagesFriend Mapper On MobileMohammed Fadhl AlbadwiNo ratings yet

- One Sim Card ManualDocument16 pagesOne Sim Card ManualUkah FranklinNo ratings yet

- Real Estate Management System Literature ReviewDocument7 pagesReal Estate Management System Literature ReviewfgwuwoukgNo ratings yet

- Task 1: Design The Database TogetherDocument16 pagesTask 1: Design The Database TogetherSanchithya AriyawanshaNo ratings yet

- Trabalho Pi Web ScrapperDocument3 pagesTrabalho Pi Web ScrapperJoão NevesNo ratings yet

- Thesis On Web Log MiningDocument8 pagesThesis On Web Log Mininghannahcarpenterspringfield100% (2)

- 4.1. Functional Requirements: 1. AchievementDocument2 pages4.1. Functional Requirements: 1. AchievementKushal BajracharyaNo ratings yet

- DSE 3 Unit 3Document4 pagesDSE 3 Unit 3Priyaranjan SorenNo ratings yet

- Service Mining Based On The Knowledge and Customer DatabaseDocument40 pagesService Mining Based On The Knowledge and Customer DatabaseHarikrishnan ShunmugamNo ratings yet

- Introduction To Web ScrapingDocument3 pagesIntroduction To Web ScrapingRahul Kumar100% (1)

- MIS Chapter 6 Review QuestionsDocument4 pagesMIS Chapter 6 Review QuestionsvhsinsNo ratings yet

- 1analysis of The ServerDocument8 pages1analysis of The ServerRyan AidenNo ratings yet

- WP WebDataExtractionPlaybookDocument13 pagesWP WebDataExtractionPlaybookjmpelNo ratings yet

- DMDWDocument4 pagesDMDWRKB100% (1)

- Part Ii: Applications of Gas: Ga and The Internet Genetic Search Based On Multiple Mutation ApproachesDocument31 pagesPart Ii: Applications of Gas: Ga and The Internet Genetic Search Based On Multiple Mutation ApproachesSrikar ChintalaNo ratings yet

- Software and Information Technology Glossary of TermsDocument19 pagesSoftware and Information Technology Glossary of TermsBusiness Software Education CenterNo ratings yet

- Cloud ComputingDocument17 pagesCloud ComputingUdit KathpaliaNo ratings yet

- FK&A Hub and Spoke Architecture vs. Point To Point: Page - 1Document4 pagesFK&A Hub and Spoke Architecture vs. Point To Point: Page - 1api-284580693No ratings yet

- New ReportDocument63 pagesNew ReportParkash engineering worksNo ratings yet

- Web Usage Mining Master ThesisDocument7 pagesWeb Usage Mining Master Thesishmnxivief100% (2)

- Assignment1 LeeDocument3 pagesAssignment1 LeeJENNEFER LEENo ratings yet

- Accounting Information Systems 8th Edition Hall Solutions Manual 1Document27 pagesAccounting Information Systems 8th Edition Hall Solutions Manual 1fannie100% (38)

- Accounting Information Systems 8Th Edition Hall Solutions Manual Full Chapter PDFDocument36 pagesAccounting Information Systems 8Th Edition Hall Solutions Manual Full Chapter PDFmary.wiles168100% (21)

- Case StudyDocument13 pagesCase StudyMohammad Naushad ShahriarNo ratings yet

- Information System Used by Fedex: NAME: - Abhimanyu Choudhary. ROLL NO: - SMBA10001Document10 pagesInformation System Used by Fedex: NAME: - Abhimanyu Choudhary. ROLL NO: - SMBA10001Abhimanyu ChoudharyNo ratings yet

- Web Enabled Data WarehouseDocument7 pagesWeb Enabled Data WarehouseZubin MistryNo ratings yet

- SeminDocument8 pagesSeminMomin Mohd AdnanNo ratings yet

- Type of Data BaseDocument7 pagesType of Data BaseMuhammad KamilNo ratings yet

- CMS PrimerDocument6 pagesCMS PrimerAntonio P. AnselmoNo ratings yet

- Enterprise Resource PlanningDocument37 pagesEnterprise Resource Planningsharadp007No ratings yet

- MIS TermsDocument8 pagesMIS TermssamiNo ratings yet

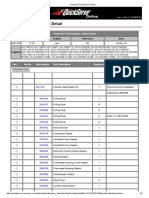

- Leading Autoloader Customer Purchase RequirementsDocument6 pagesLeading Autoloader Customer Purchase RequirementsSathish KumarNo ratings yet

- Eds Unit 1Document28 pagesEds Unit 1Adhiban RNo ratings yet

- Emerging Client Server Threats: (Rising Era of New Network Technology)Document11 pagesEmerging Client Server Threats: (Rising Era of New Network Technology)nexus_2110No ratings yet

- Office Management SystemDocument134 pagesOffice Management SystemPratiyushJuyal100% (1)

- Efficient Techniques For Online Record LinkageDocument4 pagesEfficient Techniques For Online Record Linkagesurendiran123No ratings yet

- 9 - 13 - 22 - (2) Data IntegrationDocument15 pages9 - 13 - 22 - (2) Data IntegrationArun SharmaNo ratings yet

- E-Commerce App. TestingDocument8 pagesE-Commerce App. TestingRakesh100% (1)

- SAP Integration With Windows Server 2000 Active DirectoryDocument22 pagesSAP Integration With Windows Server 2000 Active DirectoryRodrigo Andres Arias ZambonNo ratings yet

- Front Room ArchitectureDocument3 pagesFront Room ArchitectureNiharikaPendem100% (1)

- Chapter 2 Strategies For An Effective Information TechnologyDocument58 pagesChapter 2 Strategies For An Effective Information TechnologyMark Lawrence YusiNo ratings yet

- Full Ebook of Federated Ai For Real World Business Scenarios 1St Edition Dinesh C Verma Online PDF All ChapterDocument69 pagesFull Ebook of Federated Ai For Real World Business Scenarios 1St Edition Dinesh C Verma Online PDF All Chapterrocannehindi100% (5)

- MIS Assignment 2Document5 pagesMIS Assignment 2akash NegiNo ratings yet

- Data ProcessingDocument5 pagesData ProcessingJhean ManlegroNo ratings yet

- Manual TestingDocument56 pagesManual TestingcamjoleNo ratings yet

- Data Processing in Data MiningDocument11 pagesData Processing in Data MiningKartik TiwariNo ratings yet

- Literature Review On Network Monitoring SystemDocument4 pagesLiterature Review On Network Monitoring Systemetmqwvqif100% (1)

- A2 Ict Database CourseworkDocument6 pagesA2 Ict Database Courseworkafjwdryfaveezn100% (2)

- Goldengate For Real-Time Data WarehousingDocument16 pagesGoldengate For Real-Time Data Warehousingivan_o114722No ratings yet

- Main ReportDocument194 pagesMain ReportMANISH KUMARNo ratings yet

- Ebook Low Code Automation Guide 2211Document13 pagesEbook Low Code Automation Guide 2211Freddy ParraNo ratings yet

- Frequent Shopper Program ArchitectureDocument6 pagesFrequent Shopper Program ArchitectureChikato 710No ratings yet

- MedicalDocument3 pagesMedicalddNo ratings yet

- Contact Management System & CRMDocument7 pagesContact Management System & CRMNguyễn Phương AnhNo ratings yet

- Literature Review On Data Warehouse PDFDocument8 pagesLiterature Review On Data Warehouse PDFafmzkbysdbblih100% (1)

- Junk Van 1Document8 pagesJunk Van 1Priya PriyaNo ratings yet

- Sourav Main ReportDocument193 pagesSourav Main Reportwilp.bits.csNo ratings yet

- Main ReportDocument197 pagesMain Reportwilp.bits.csNo ratings yet

- Thesis Asset Management Client LoginDocument4 pagesThesis Asset Management Client Loginafloblnpeewxby100% (1)

- Hspa/Hsdpa: (Beyond 3G)Document16 pagesHspa/Hsdpa: (Beyond 3G)Romain BelemsobgoNo ratings yet

- "M-Banking": Software Requirements Specification ONDocument44 pages"M-Banking": Software Requirements Specification ONKhushboo GautamNo ratings yet

- Kilowatt HourDocument6 pagesKilowatt HourAnonymous E4Rbo2sNo ratings yet

- Dr.N.G.P. Institute of Technology: Department of Mechanical EngineeringDocument22 pagesDr.N.G.P. Institute of Technology: Department of Mechanical Engineeringvasanthmech092664No ratings yet

- FA Tech TestDocument3 pagesFA Tech Testkhaleel.epomcNo ratings yet

- Planning Checklist For New IGSS ProjectsDocument18 pagesPlanning Checklist For New IGSS ProjectsBabar SaleemNo ratings yet

- Users Guide PMAT 2000®Document45 pagesUsers Guide PMAT 2000®Khaled Elmabrouk50% (6)

- Ricoh Error Code ListDocument14 pagesRicoh Error Code ListMacarioNo ratings yet

- Colt Jet FanDocument2 pagesColt Jet FanOliver HermosaNo ratings yet

- Choosing The Right GPT Mode: Asynchronous or Synchronous RenderingDocument4 pagesChoosing The Right GPT Mode: Asynchronous or Synchronous RenderingAmit KumarNo ratings yet

- Application Modernization - Delivery Enablement ResourcesDocument2 pagesApplication Modernization - Delivery Enablement ResourcesAishu NaiduNo ratings yet

- Service Manual EPSONDocument115 pagesService Manual EPSONJoao Jose Santos NetoNo ratings yet

- 1-Calculation of The Peak Power Output of The PV SystemDocument2 pages1-Calculation of The Peak Power Output of The PV SystemMohammad MansourNo ratings yet

- COMPLAINT and ADJUSTMENT by SaadDocument2 pagesCOMPLAINT and ADJUSTMENT by Saadaonabbasabro786No ratings yet

- Part I: The Essentials of Network Perimeter Security Chapter 1. Perimeter Security FundamentalsDocument4 pagesPart I: The Essentials of Network Perimeter Security Chapter 1. Perimeter Security Fundamentalsnhanlt187No ratings yet

- Ip ProjectDocument40 pagesIp Projectramji mNo ratings yet

- SCANPRO20IDocument3 pagesSCANPRO20IKamal FernandoNo ratings yet

- LD Systems Catalogue PDFDocument151 pagesLD Systems Catalogue PDFCami DragoiNo ratings yet

- Java Programming Chapter IIIDocument50 pagesJava Programming Chapter IIIMintesnot Abebe100% (1)

- DDJ-RB: DJ ControllerDocument48 pagesDDJ-RB: DJ ControllerSilvinho JúniorNo ratings yet

- GSM&PSTN Dual-Network Burglar Alarm System User GuideDocument38 pagesGSM&PSTN Dual-Network Burglar Alarm System User Guidesalamanc59No ratings yet

- Postern User Guide EngDocument17 pagesPostern User Guide Englynx17No ratings yet

- Daewoo AMI-917 AudioDocument26 pagesDaewoo AMI-917 AudioHugo Flores0% (1)

- Electronic Parts Catalog - Option DetailDocument2 pagesElectronic Parts Catalog - Option DetailBac NguyenNo ratings yet

- Web Desigining Syllabus SSQUAREITDocument12 pagesWeb Desigining Syllabus SSQUAREITazadtanwar104No ratings yet

- Call For Papers 16th Ibcast PDFDocument11 pagesCall For Papers 16th Ibcast PDFNuman KhanNo ratings yet

White Paper: Automating Your Data Collection: Reaching Beyond The Firewall

White Paper: Automating Your Data Collection: Reaching Beyond The Firewall

Uploaded by

Rajendiran SundaravelOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

White Paper: Automating Your Data Collection: Reaching Beyond The Firewall

White Paper: Automating Your Data Collection: Reaching Beyond The Firewall

Uploaded by

Rajendiran SundaravelCopyright:

Available Formats

"Automating repetitive steps and eliminating those that waste time will increase information worker productivity and save an organization millions of dollars." (IDC White Paper)

Much has been written about the complexity of programmatically extracting data from unstructured sources such as the web, but little about the cost of failing to do so. A recent study by IDC revealed that information workers typically spend 17.8 hours per week searching for and gathering information, at an annual cost of $26,733.60 per worker.[1]

Large companies employing a thousand information workers are hemorrhaging money, as much as $5.7 million annually, in time wasted reformatting information between applications.

IDC's conclusion? Automating repetitive steps and eliminating those that waste time will increase information worker productivity and save an organization millions of dollars.

Given the shift toward an information economy and the intensity of globalization and hyper-competition, automating your external data collection may yield significant cost savings as well as a competitive advantage.

Fortunately, the technology to automate your data collection already exists. Intelligent agents are software programs capable of navigating complex data structures, extracting targeted data with a high degree of precision, then repurposing the data into an actionable format: exactly the sort of tedious, repetitive tasks that are costing American industry so much money. Solutions range from simple agents with rudimentary intelligence to "smart software": highly autonomous agents capable of reading numerous file formats, negotiating security protocols, duplicating session variables, submitting forms, and extracting gigabytes of data from the deepest crevices of the web.

Three Approaches to Data Collection

THREE APPROACHES: Manual Cut & Paste, Custom Programming, or Integrated Development Environments

There are three basic approaches to collecting data beyond the corporate firewall: cut-and-paste, custom programming, and Integrated Development Environments (IDEs). Each approach has its applications, costs, and benefits.

Data collection suitable for automation is typically repetitive extraction from a known source. An energy trading company provides a good example.

High-volume trading of crude oil and refined products, natural gas, and power requires monitoring hundreds of information sources: subscription services, internal data repositories, and websites posting production data. Some of these sources may need to be queried only weekly, others almost in real time. The extracted data must then be structured and imported into a database or data warehouse where it can power other programs and be distributed throughout the enterprise.

In the business of energy trading, a few seconds is a lifetime. Numerous factors affect the price of energy: weather, water flows, and transmission capacities, for example. Data must be continually collected and integrated in order to make split-second decisions.

In this example, the volume of the data collected and the need for near real-time collection and repurposing (translating unstructured or semi-structured data into a structured format) precludes a cut-and-paste approach.
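To make "repurposing" concrete, here is a minimal sketch in Python, assuming the semi-structured source is an HTML table and the structured target is CSV. The sample page, the `TableRows` class, and the `html_table_to_csv` function are hypothetical names invented for this illustration, and the values are made up.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical sample page; the hub names and prices are invented.
SAMPLE_HTML = """
<table>
  <tr><th>Hub</th><th>Price</th></tr>
  <tr><td>Hub A</td><td>2.71</td></tr>
  <tr><td>Hub B</td><td>1.95</td></tr>
</table>
"""


class TableRows(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Text outside a cell (whitespace between tags) is ignored.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def html_table_to_csv(html):
    """Translate the table markup into CSV text ready for import."""
    parser = TableRows()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


print(html_table_to_csv(SAMPLE_HTML))
```

The CSV text could then be loaded into a database or spreadsheet; a production agent would also need to cope with malformed markup, which this sketch does not attempt.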

What Makes Software Smart?

Intelligent agents may need to cooperate with other agents to complete their task.

What makes one piece of software smarter than another? There's still some debate, but intelligent agents are generally defined as autonomous, proactive, responsive, and sociable.

Autonomous: Once released, an intelligent agent carries out its mission without further instructions. Most software requires constant prodding from its user: a keystroke or a mouse click. Intelligent agents, also known as autonomous agents, exercise greater independence.

[1] Based upon an average annual salary of $60,000, including benefits.

Proactive: Agents are goal-oriented. They are driven by a single imperative: to accomplish their task. They never tire, never sleep, never get surly. They can be thwarted in their mission but never distracted or dissuaded.

Responsive: The more intelligent an agent, the more capably it can recognize and respond to changes in its environment and resolve challenges to the successful completion of its mission. Very bright agents can even learn from their environment.

Sociable: Agents may need to communicate with other agents to complete their task. They're expected to report to their employer what they've learned in the wild. And should a mission fail, an agent needs to debrief on the cause of its failure in order to craft a more perfect mission.

Features of an intelligent agent include:

- Identifying targeted data

- Programmatically navigating the data source

- Reading file formats and contents

- Conservation of external resources

- Self-defense

Identifying Data

Physical pinpointing is quick and dirty. Logical pinpointing, while more demanding, is also more robust.

There are two methods of identifying data in unstructured sources such as the web: physical and logical pinpointing.

Physical pinpointing requires that an agent's programmer first visit the page and physically highlight the desired data, providing the agent something akin to a geographical reference. It's quick and dirty.

Physical pinpointing has the advantage of being cheap. It's easily programmed using inexpensive tools. Unfortunately, a slight change to the underlying structure of the page (even the reformatting of text) can invalidate physical pinpointing. Ultimately, high maintenance costs may erode the initial savings.

A more resilient method of identifying data is logical pinpointing, a combination of Boolean expressions and pattern matching. Logical pinpointing attempts to orient the agent by identifying data values. It's a technique that accommodates greater changes in the page structure without breaking the agent's programming.
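The difference between the two methods can be sketched in a few lines of Python. This is an illustration only, not any vendor's implementation: the two page versions, the "Spot price" label, and the function names are hypothetical. Physical pinpointing is modeled as a fixed character range recorded on a first visit, and logical pinpointing as a regular expression anchored on a label and on the shape of the value.

```python
import re

# Two hypothetical versions of the same page; the second has been reformatted.
PAGE_V1 = "<table><tr><td>Spot price</td><td>$71.40</td></tr></table>"
PAGE_V2 = "<div class='quote'><span>Spot price:</span> <b>$71.40</b></div>"

# Physical pinpointing: remember where the value sat on the first visit.
START = PAGE_V1.index("$71.40")
END = START + len("$71.40")


def physical_pinpoint(page):
    """Read whatever now occupies the recorded character range."""
    return page[START:END]


# Logical pinpointing: find the label, skip any tags and punctuation after it,
# then match something shaped like a dollar amount.
PRICE_AFTER_LABEL = re.compile(
    r"Spot price[\s:]*(?:<[^>]+>[\s:]*)*(\$\d+\.\d{2})"
)


def logical_pinpoint(page):
    """Return the dollar amount that follows the label, or None."""
    match = PRICE_AFTER_LABEL.search(page)
    return match.group(1) if match else None


for name, page in (("v1", PAGE_V1), ("v2", PAGE_V2)):
    print(name, repr(physical_pinpoint(page)), repr(logical_pinpoint(page)))
```

On the reformatted page the recorded range no longer lines up with the price, while the label-anchored pattern still finds it. The pattern would itself break if the label text changed, which is why logical pinpointing is more robust rather than maintenance-free.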

Programmatic Navigation

The navigational ability of an agent distinguishes its intelligence.

The ability to navigate the dynamic, sessionless state of the web most clearly distinguishes the intelligence of one agent from another.

The fact that each agent request for a web page is a discrete and discontinuous event with no relationship to the previous request has resulted in some clever workarounds. One of these is cookies: small pieces of data, saved by the browser at the server's request, that uniquely identify the user. Cookies provide an elegant, economical way for a server to recognize a browser over time and are sometimes required as part of the server's security protocol.
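As a rough sketch of what "duplicating cookies" involves, the Python below parses the `Set-Cookie` values a server might send and builds the `Cookie` header an agent would send back on its next request. It uses the standard library's `http.cookies.SimpleCookie`; the cookie names and values are invented, and `cookie_header_from` is a hypothetical helper.

```python
from http.cookies import SimpleCookie


def cookie_header_from(set_cookie_values):
    """Build the Cookie request header from received Set-Cookie values."""
    jar = SimpleCookie()
    for value in set_cookie_values:
        # load() parses "name=value; Path=/; ..." and stores attributes
        # such as Path separately from the cookie's own value.
        jar.load(value)
    return "; ".join(f"{name}={morsel.value}" for name, morsel in jar.items())


# Hypothetical Set-Cookie values from a first response.
received = ["visitor=abc123; Path=/", "lang=en; Path=/; Max-Age=3600"]
print(cookie_header_from(received))
```

In practice an agent would more likely let `http.cookiejar.CookieJar` together with `urllib.request.HTTPCookieProcessor` handle storage, expiry, and domain matching automatically; the manual version is shown only to make the mechanism visible.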

Another means of maintaining state is a unique identifier assigned by a web server to each browser that visits. The identifier is appended to the URL of any page requested by that browser (or hidden in an invisible form field passed from page to page), providing continuity between page requests. The unique identifier persists only as long as the browser is active on that site, however, so returning precisely to the same data over time may require that the agent duplicate the session variable.
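Duplicating a URL-borne session variable might look like the following Python sketch. The parameter name `sid`, the example URLs, and both helper functions are assumptions made for illustration; real sites choose their own parameter names.

```python
from urllib.parse import parse_qs, urlencode, urlparse, urlunparse


def extract_session_id(url, param="sid"):
    """Return the session identifier carried in the URL's query string."""
    values = parse_qs(urlparse(url).query).get(param)
    return values[0] if values else None


def with_session_id(url, session_id, param="sid"):
    """Return the URL with the session identifier added or replaced."""
    parts = urlparse(url)
    query = parse_qs(parts.query)
    query[param] = [session_id]
    return urlunparse(parts._replace(query=urlencode(query, doseq=True)))


# Hypothetical URL returned by the server on the agent's first request.
first = "https://example.com/catalog?page=1&sid=9f2c"
sid = extract_session_id(first)

# Carry the same identifier over to the next page request.
next_url = with_session_id("https://example.com/catalog?page=2", sid)
print(next_url)
```

When the identifier travels in a hidden form field instead, the same idea applies: read the value from the returned page and include it in the next submission.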

And, of course, a user may be required to identify themselves before being admitted to a site. The challenge/response protocol of username/password is commonly used to guard proprietary or paid content on the web.

The programmatic navigation of the web may require that the agent duplicate cookies, server variables, and username/password credentials. And sometimes it requires the agent to intentionally remove all traces of its visit.

The Invisible Web

The invisible web or deep web is completely opaque to search engines.

From a business perspective, much of the really valuable information on the web (product pricing, inventory, descriptions, schedules, tabular data, management profiles, patent registrations, government-mandated disclosures) is stored in databases and accessible only after filling out and submitting a form.

An example: a number of sites on the web offer unique information about particular genes, each identified by its gene sequence. Sequences are similar to reference numbers and are required as form data submitted to a database in order to retrieve each site's unique information about that gene. Many of these sites also have calculators to compute things like thermodynamic properties. Calculators also use the gene sequence as input.

Because this data is inaccessible to search engines, it's sometimes called the "invisible web." An intelligent agent, however, can submit each gene sequence of interest, follow dynamic links generated in response, re-submit the form based on the data returned, extract the relevant data, and export it to a database or spreadsheet for further analysis or redistribution.

An agent may need to replicate all of these variables (cookies, session variables, username/password) as well as iteratively populate and submit forms in order to extract the desired data.
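Iteratively populating a form can be sketched with Python's standard library. The endpoint `FORM_URL`, the field name `sequence`, and the sample sequences are all hypothetical; the sketch only builds the POST requests and does not send them.

```python
from urllib.parse import urlencode
from urllib.request import Request

FORM_URL = "https://example.com/lookup"  # hypothetical form endpoint


def build_form_requests(sequences, field="sequence"):
    """Build one form-encoded POST request per gene sequence."""
    requests = []
    for seq in sequences:
        body = urlencode({field: seq}).encode("ascii")
        requests.append(
            Request(
                FORM_URL,
                data=body,
                method="POST",
                headers={"Content-Type": "application/x-www-form-urlencoded"},
            )
        )
    return requests


reqs = build_form_requests(["ATGCGT", "GGCTAA"])
for req in reqs:
    print(req.get_method(), req.full_url, req.data)
```

Each request could then be passed to `urllib.request.urlopen`, with the response parsed for the data of interest and for any dynamic links or follow-up form values the next submission needs.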

Popular File Formats

An agent's fluency with different file formats increases its utility.

The web is a vast network housing numerous popular file formats other than HTML/XML. Adobe Acrobat (PDF) files have become as ubiquitous as Microsoft Office files (Word, Excel, and PowerPoint). Increasingly, publishers are incorporating text in graphic images, requiring Optical Character Recognition (OCR) on the part of the agents.

Extending an agent's reach inside the corporate firewall requires the ability to read email archives (via POP3 and IMAP) and RSS feeds.
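Of the formats mentioned, RSS is the simplest to illustrate. The Python sketch below parses a small, invented RSS 2.0 document with the standard library's `xml.etree.ElementTree`; the feed contents and the `rss_items` helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 feed used only for illustration.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0"><channel>
<title>Example feed</title>
<item><title>First item</title><link>https://example.com/1</link></item>
<item><title>Second item</title><link>https://example.com/2</link></item>
</channel></rss>"""


def rss_items(xml_text):
    """Return a (title, link) pair for every <item> in the feed."""
    root = ET.fromstring(xml_text)
    return [
        (item.findtext("title"), item.findtext("link"))
        for item in root.iter("item")
    ]


for title, link in rss_items(SAMPLE_RSS):
    print(title, link)
```

Mailboxes could be read in a similar spirit with the standard `poplib` and `imaplib` modules, though those require a live server and credentials and are not shown here.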

The ability to query ODBC-compliant databases enables an agent to integrate external and internal data without the use of web services or COM objects.

Treading Lightly

Poorly programmed agents can consume web server resources.

External sources such as public websites should be used discreetly and with care to tread lightly on server resources and bandwidth. Used maliciously or even carelessly, the power of intelligent agents can overwhelm a web server. Any agent that queries external repositories should include the ability to throttle its own page requests, controlling the load imposed on remote servers.
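One simple way to throttle page requests is to enforce a minimum interval between them. The Python sketch below is an assumption about how such a throttle could be written, not a description of any particular product; the `Throttle` class and the 0.05-second interval are illustrative. The clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between successive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time at which the previous request was released

    def wait(self):
        """Pause if the previous request was too recent; return the delay used."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
            if delay:
                self._sleep(delay)
        self._last = now + delay
        return delay


throttle = Throttle(min_interval=0.05)  # arbitrary demo value, in seconds
for page in ["page-1", "page-2", "page-3"]:
    throttle.wait()
    print("requesting", page)  # a real agent would issue the HTTP request here
```

A fixed interval is the bluntest instrument available. An agent could also adapt the interval to server response times or honor a site's published crawling preferences.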

Gentle Art of Self-Defense

An agent programmed to gather competitive intelligence may need to defend itself from counter-intelligence.

There are perfectly legitimate situations where you may not want to leave any footprints, such as an IP address, in a competitor's web log. This has long been true in the competitive intelligence arena, where counter-intelligence techniques have evolved to prevent the robotic harvesting of publicly available data. Of course, the data can still be retrieved manually (it is, after all, publicly available), but the idea is to make it more difficult to incorporate the intelligence into competitors' automated processes and consequently less useful. The ability to cloak an agent's identity or conceal its point of origin is a useful option.

We've addressed the qualities that make intelligent agents so useful, but how do you employ an agent? How do you begin taking advantage of the technology?

Building Intelligent Agents

Basically, there are two paths to building intelligent agents that automate your data collection: custom programming or an Integrated Development Environment (IDE). Either may be appropriate, depending upon your application.

Custom Programming

Programming of intelligent agents must scale effortlessly to meet enterprise demand.

There are custom software shops that program ad hoc agents. Custom programming, however, can quickly become an expensive solution. Given the challenges that agents face in the field, hiring programming talent with the experience to build adept agents isn't cheap.

Scalability can also be an issue. When an enterprise discovers the utility of employing intelligent agents for external data collection, its appetite for information can become voracious. The sheer horsepower of the solution must keep pace. TCP/IP processing, error handling, and data file formatting all need to occur transparently. Massively parallel deployment engines, multi-threading, and load balancing across multiple servers may be required.
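As a small-scale illustration of multi-threading with error handling, the Python sketch below queries several sources concurrently using the standard library's `ThreadPoolExecutor`. The `fetch` function is a stand-in for a real page request, and the source names are invented; one source is made to fail on purpose so the error path is visible.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch(source):
    """Stand-in for a real page request (hypothetical); one source always fails."""
    if source == "unreachable.example":
        raise ConnectionError(f"cannot reach {source}")
    return f"payload from {source}"


def fetch_safely(source):
    """Return (source, payload, error) so one failure does not abort the batch."""
    try:
        return source, fetch(source), None
    except ConnectionError as exc:
        return source, None, str(exc)


def collect(sources, max_workers=4):
    """Query many sources concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch_safely, sources))


results = collect(["a.example", "unreachable.example", "b.example"])
for source, payload, error in results:
    print(source, payload or f"FAILED: {error}")
```

A single thread pool is only the first rung of the ladder. Spreading work across multiple servers, as the text describes, calls for a job queue or scheduler on top of this pattern.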

Some questions to address when considering whether custom programming should be done in-house or outsourced:

1. If the object is to gather sensitive competitive intelligence, how secure is your data? How protected is your identity?

2. What is your time-to-market? How much time will it take to maintain?

3. If the data proves useful, can you quickly scale production? At what cost?

4. Are you re-inventing the wheel? Is the desired functionality available off-the-shelf?

IDE

An Integrated Development Environment includes everything you need to build, deploy, and debug an intelligent agent. IDEs can be either programmatic or point-and-click.

Point-and-Click

Point-and-click development can quickly create rudimentary agents for simple missions.

Point-and-click IDEs typically consist of wizards that guide the user through a linear progression of choices, with successive options dependent upon previous choices. The idea is that non-programming staff can create effective agents at less expense than IT staff.

The user's guide for one point-and-click IDE package recommends a basic understanding of programming constructs, debugging, HTML/XML, scripting languages such as JavaScript and VBScript, and regular expressions, hardly the skill set of most non-technical staff.

"Basic" may be disingenuous given the complexity of navigating and identifying data on the web, de-constructing conditional logic in client-side script, or understanding changes in a web page that have invalidated an agent's programming. In reality, point-and-click software often disguises the complexity of the problem rather than simplifying the solution.

While it's true that staff with no programming experience may be able to build rudimentary agents with simple missions, some web programming skills are required for anything more complex.

Point-and-click IDEs actually tend to penalize expertise, segmenting the task into very small chunks and preventing a holistic view of the solution. This fragmented approach requires even an experienced developer to deal with each small segment of the solution sequentially through the IDE interface rather than confronting the code directly.

Programmatic

"Use of SQL reduces the learning curve for most analysts..."

An alternative to point-and-click development leverages SQL (Structured Query Language) syntax to query unstructured data sources. SQL is a powerful declarative programming language with a concise syntax. It has the added advantage of a broad user base, reducing staffing cost and availability issues.

Employing a SQL-like syntax allows the developer to use nested queries and views from various data sources, restrict data sets using WHERE and HAVING clauses, test against CASE and IF statements, group and order data sets, iterate using arrays, manipulate data with string and numeric functions, and match patterns with regular expressions.
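The paper does not show the SQL-like syntax itself, so the sketch below does not attempt to reproduce it. Instead, it illustrates the general idea with ordinary SQL: rows already extracted from several sources are loaded into an in-memory SQLite database from Python and then filtered, grouped, and ordered. The table layout, source names, and prices are invented for the example.

```python
import sqlite3

# Hypothetical rows an agent might have extracted: (source, product, price).
EXTRACTED = [
    ("siteA", "widget", 9.99),
    ("siteB", "widget", 10.49),
    ("siteC", "widget", 11.25),
    ("siteA", "gadget", 24.00),
    ("siteB", "gadget", 22.50),
]


def cheapest_by_product(rows, min_sources=2):
    """Return (product, lowest price, source count) for well-covered products."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE prices (source TEXT, product TEXT, price REAL)")
    con.executemany("INSERT INTO prices VALUES (?, ?, ?)", rows)
    result = con.execute(
        """
        SELECT product, MIN(price) AS best, COUNT(*) AS sources
        FROM prices
        WHERE price > 0              -- restrict the data set
        GROUP BY product             -- group per product
        HAVING COUNT(*) >= ?         -- keep products seen on enough sources
        ORDER BY product
        """,
        (min_sources,),
    ).fetchall()
    con.close()
    return result


for product, best, sources in cheapest_by_product(EXTRACTED):
    print(product, best, sources)
```

The appeal of the declarative style is visible even at this scale: the query states which rows are wanted and leaves the iteration to the engine.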

In a report entitled "Commercial Information Technology Possibilities," written for the Center for Technology and National Security Policy, the author states, "Use of SQL reduces the learning curve for most analysts by not requiring mastery of computer languages that support artificial intelligence-based approaches to symbol manipulation." The strategy is to pull from a variety of source documents that are hosted on the Internet or maintained by subscription services or internal network servers. This data, once located, pinpointed, and extracted, is then repurposed by converting and storing it in a format of use to the client, such as a spreadsheet or graphic.

Point-and-click development makes sense in situations that don't require the power and flexibility of a declarative language similar to SQL, or when programming staff are simply unavailable. In those situations, however, a hosted service should also be considered.

Installed Software or Hosted Service?

Installing software or using a hosted service may ultimately depend upon the sensitivity of the data.

In some applications, especially competitive intelligence applications, security of the data is a primary consideration. Even the nature of the query and the targeted source may be a security issue. In such cases the building and maintenance of intelligent agents, and the data extracted, are typically housed entirely behind a corporate firewall. This requires software to be installed on corporate servers, programming staff, and the IT infrastructure necessary to support the application.

In other situations security is less of an issue than the availability of programming staff and IT support. A hosted service that requires only a browser and Internet connection to access the data may be an attractive alternative.

Many of the manufacturers of intelligent agent software offer only one option or the other. The ability to migrate between options provides greater flexibility as business requirements, IT staff, or the competitive environment change. It may also make sense to incubate agents on a vendor's hosted service before bringing them in-house.

And don't forget to ask if the company offers a pre-packaged solution that will meet your requirements off-the-shelf or with some custom tweaking.

Agents for Hire

Set high expectations for your data collection agent.

QL2 is in the business of building intelligent agents for data collection. WebQL, our flagship product, represents a feature set you would expect of a robust IDE for intelligent agents:

- Ability to navigate, extract, and reformat information from both external and internal sources

- Support for commonly used file formats including HTML/XML, Adobe Acrobat (PDF), POP3 and IMAP email archives, RSS feeds, Microsoft Office files (Word, Excel, PowerPoint), dBase, and ODBC-compliant databases

- Optical Character Recognition (OCR) capability

- Concise notation based on the ANSI SQL standard

- Massively parallel deployment engine

- Load distribution across multiple servers

- Support for cookies, agents, frames, tables, and authentication

- Form discovery and completion

- Identity protection (anonymization)

- Robust APIs for Java, SOAP, VBA ActiveX/COM, .NET, & C++

- Error handling

- Powerful network monitoring tools

- Page change detection and reporting

- Page request throttling

- Query execution scheduling

- Available as installed software or hosted service

- Integrated Development Environment (IDE)

More Info?

The simple fact is that automating the extraction of data from unstructured sources such as web pages, PDF files, RSS feeds, and Microsoft Office files can not only provide your company with a competitive advantage, it can also save you a ton of money.

Interested in more information about QL2's agent technology? Visit www.QL2.com and request a free demonstration.

sales@QL2.com


- MIS TermsDocument8 pagesMIS TermssamiNo ratings yet

- Leading Autoloader Customer Purchase RequirementsDocument6 pagesLeading Autoloader Customer Purchase RequirementsSathish KumarNo ratings yet

- Eds Unit 1Document28 pagesEds Unit 1Adhiban RNo ratings yet

- Emerging Client Server Threats: (Rising Era of New Network Technology)Document11 pagesEmerging Client Server Threats: (Rising Era of New Network Technology)nexus_2110No ratings yet

- Office Management SystemDocument134 pagesOffice Management SystemPratiyushJuyal100% (1)

- Efficient Techniques For Online Record LinkageDocument4 pagesEfficient Techniques For Online Record Linkagesurendiran123No ratings yet

- 9 - 13 - 22 - (2) Data IntegrationDocument15 pages9 - 13 - 22 - (2) Data IntegrationArun SharmaNo ratings yet

- E-Commerce App. TestingDocument8 pagesE-Commerce App. TestingRakesh100% (1)

- SAP Integration With Windows Server 2000 Active DirectoryDocument22 pagesSAP Integration With Windows Server 2000 Active DirectoryRodrigo Andres Arias ZambonNo ratings yet

- Front Room ArchitectureDocument3 pagesFront Room ArchitectureNiharikaPendem100% (1)

- Chapter 2 Strategies For An Effective Information TechnologyDocument58 pagesChapter 2 Strategies For An Effective Information TechnologyMark Lawrence YusiNo ratings yet

- Full Ebook of Federated Ai For Real World Business Scenarios 1St Edition Dinesh C Verma Online PDF All ChapterDocument69 pagesFull Ebook of Federated Ai For Real World Business Scenarios 1St Edition Dinesh C Verma Online PDF All Chapterrocannehindi100% (5)

- MIS Assignment 2Document5 pagesMIS Assignment 2akash NegiNo ratings yet

- Data ProcessingDocument5 pagesData ProcessingJhean ManlegroNo ratings yet

- Manual TestingDocument56 pagesManual TestingcamjoleNo ratings yet

- Data Processing in Data MiningDocument11 pagesData Processing in Data MiningKartik TiwariNo ratings yet

- Literature Review On Network Monitoring SystemDocument4 pagesLiterature Review On Network Monitoring Systemetmqwvqif100% (1)

- A2 Ict Database CourseworkDocument6 pagesA2 Ict Database Courseworkafjwdryfaveezn100% (2)

- Goldengate For Real-Time Data WarehousingDocument16 pagesGoldengate For Real-Time Data Warehousingivan_o114722No ratings yet

- Main ReportDocument194 pagesMain ReportMANISH KUMARNo ratings yet

- Ebook Low Code Automation Guide 2211Document13 pagesEbook Low Code Automation Guide 2211Freddy ParraNo ratings yet

- Frequent Shopper Program ArchitectureDocument6 pagesFrequent Shopper Program ArchitectureChikato 710No ratings yet

- MedicalDocument3 pagesMedicalddNo ratings yet

- Contact Management System & CRMDocument7 pagesContact Management System & CRMNguyễn Phương AnhNo ratings yet

- Literature Review On Data Warehouse PDFDocument8 pagesLiterature Review On Data Warehouse PDFafmzkbysdbblih100% (1)

- Junk Van 1Document8 pagesJunk Van 1Priya PriyaNo ratings yet

- Sourav Main ReportDocument193 pagesSourav Main Reportwilp.bits.csNo ratings yet

- Main ReportDocument197 pagesMain Reportwilp.bits.csNo ratings yet

- Thesis Asset Management Client LoginDocument4 pagesThesis Asset Management Client Loginafloblnpeewxby100% (1)

- Hspa/Hsdpa: (Beyond 3G)Document16 pagesHspa/Hsdpa: (Beyond 3G)Romain BelemsobgoNo ratings yet

- "M-Banking": Software Requirements Specification ONDocument44 pages"M-Banking": Software Requirements Specification ONKhushboo GautamNo ratings yet

- Kilowatt HourDocument6 pagesKilowatt HourAnonymous E4Rbo2sNo ratings yet

- Dr.N.G.P. Institute of Technology: Department of Mechanical EngineeringDocument22 pagesDr.N.G.P. Institute of Technology: Department of Mechanical Engineeringvasanthmech092664No ratings yet

- FA Tech TestDocument3 pagesFA Tech Testkhaleel.epomcNo ratings yet

- Planning Checklist For New IGSS ProjectsDocument18 pagesPlanning Checklist For New IGSS ProjectsBabar SaleemNo ratings yet

- Users Guide PMAT 2000®Document45 pagesUsers Guide PMAT 2000®Khaled Elmabrouk50% (6)

- Ricoh Error Code ListDocument14 pagesRicoh Error Code ListMacarioNo ratings yet

- Colt Jet FanDocument2 pagesColt Jet FanOliver HermosaNo ratings yet

- Choosing The Right GPT Mode: Asynchronous or Synchronous RenderingDocument4 pagesChoosing The Right GPT Mode: Asynchronous or Synchronous RenderingAmit KumarNo ratings yet

- Application Modernization - Delivery Enablement ResourcesDocument2 pagesApplication Modernization - Delivery Enablement ResourcesAishu NaiduNo ratings yet

- Service Manual EPSONDocument115 pagesService Manual EPSONJoao Jose Santos NetoNo ratings yet

- 1-Calculation of The Peak Power Output of The PV SystemDocument2 pages1-Calculation of The Peak Power Output of The PV SystemMohammad MansourNo ratings yet

- COMPLAINT and ADJUSTMENT by SaadDocument2 pagesCOMPLAINT and ADJUSTMENT by Saadaonabbasabro786No ratings yet

- Part I: The Essentials of Network Perimeter Security Chapter 1. Perimeter Security FundamentalsDocument4 pagesPart I: The Essentials of Network Perimeter Security Chapter 1. Perimeter Security Fundamentalsnhanlt187No ratings yet

- Ip ProjectDocument40 pagesIp Projectramji mNo ratings yet

- SCANPRO20IDocument3 pagesSCANPRO20IKamal FernandoNo ratings yet

- LD Systems Catalogue PDFDocument151 pagesLD Systems Catalogue PDFCami DragoiNo ratings yet

- Java Programming Chapter IIIDocument50 pagesJava Programming Chapter IIIMintesnot Abebe100% (1)

- DDJ-RB: DJ ControllerDocument48 pagesDDJ-RB: DJ ControllerSilvinho JúniorNo ratings yet

- GSM&PSTN Dual-Network Burglar Alarm System User GuideDocument38 pagesGSM&PSTN Dual-Network Burglar Alarm System User Guidesalamanc59No ratings yet

- Postern User Guide EngDocument17 pagesPostern User Guide Englynx17No ratings yet

- Daewoo AMI-917 AudioDocument26 pagesDaewoo AMI-917 AudioHugo Flores0% (1)

- Electronic Parts Catalog - Option DetailDocument2 pagesElectronic Parts Catalog - Option DetailBac NguyenNo ratings yet

- Web Desigining Syllabus SSQUAREITDocument12 pagesWeb Desigining Syllabus SSQUAREITazadtanwar104No ratings yet

- Call For Papers 16th Ibcast PDFDocument11 pagesCall For Papers 16th Ibcast PDFNuman KhanNo ratings yet