International Journal of Advance Foundation and Research in Computer (IJAFRC)

Volume 2, Issue 10, October - 2015. ISSN 2348-4853, Impact Factor 1.317

A Review on Storage and Task Scheduling in Heterogeneous Hadoop Clusters

Anand Kadu, Vishal Patil, Swapnil Bhavsar

JSPM's Imperial College of Engineering and Research, Wagholi, Pune, Maharashtra, India

anandkadu13@gmail.com, vishalpatilissac@gmail.com, swapnilbhavsar66@gmail.com

ABSTRACT

Task scheduling algorithms designed for homogeneous Hadoop clusters cannot properly utilize resources in heterogeneous clusters. To overcome this issue, an adaptive task scheduling algorithm has been proposed. With adaptive task scheduling, we aim for better resource utilization by dynamically adjusting the workload at runtime. We also make data storage resource aware, so that the load is balanced according to the processing capability of each node. With the adaptive strategy, TaskTrackers can self-regulate their tasks while maintaining performance and providing stability and scalability. The adaptive approach promises higher efficiency and better performance.

Index Terms: MapReduce, heterogeneous, clusters, performance, scheduling, allocation, dynamic.

I. INTRODUCTION

The data explosion caused by the growth of the Internet over the last and current decade has created the need for Big Data storage and management tools. This explosion, i.e., the generation of huge amounts of data, has made it difficult to store, manage, and retrieve information using traditional data processing techniques. As a solution to this problem, Hadoop, a distributed data management platform, was developed. Hadoop is an open-source tool, developed largely at Yahoo! and now maintained by the Apache Software Foundation. Due to its efficiency in handling Big Data, Internet service providers such as Yahoo!, Facebook, Amazon, Twitter, and Alibaba rely on Hadoop for the storage, management, and analysis of data.

Hadoop has two main components:

1. Hadoop Distributed File System (HDFS)

2. MapReduce

75 | 2015, IJAFRC All Rights Reserved

www.ijafrc.org


Figure 1 Hadoop Architecture

Hadoop is a distributed data management platform usually deployed on homogeneous clusters. The high cost of homogeneous clusters has encouraged researchers to move to heterogeneous clusters [1] [9]. Hadoop uses a specialized scheduling mechanism to allocate tasks to nodes. Scheduling is an important aspect of Hadoop because it ensures fair task allocation and load balancing. In heterogeneous clusters, the performance of nodes differs from one node to another, so to maximize the performance of such clusters and improve resource utilization, task scheduling should be adaptive. With dynamic storage and adaptive task scheduling, data storage becomes resource aware, and TaskTrackers can adapt to resource availability. This adaptive behavior allows TaskTrackers to adjust to the workload at runtime, resulting in better load balancing and higher resource utilization.

In this paper, we review several widely used and recently proposed scheduling mechanisms for Hadoop. The paper is organized as follows. Section II analyzes the highlights of popular scheduling techniques. Section III presents a comparative study of the analyzed techniques. Section IV proposes the probable scope for improvement. Finally, Section V concludes the paper with a summary of our study and the contribution of this paper.

II. SCHEDULING MECHANISMS IN HADOOP

In Hadoop, data is not stored in one place: for a given task, many nodes in a cluster work together on a MapReduce function. These nodes communicate with one another over a high-speed network by message passing, which results in high energy consumption. Reference [2] proposed Energy-Aware Task Clustering Scheduling (EATS) to optimize energy consumption in clusters. EATS addresses this problem by shortening the schedule length: it uses clustering scheduling to minimize the schedule length of parallel tasks. The first task is assigned to a queue, and all of its successor tasks are assigned to that same queue. Clusters are then mapped to processors on the basis of their tasks so as to reduce communication between clusters, which in turn reduces energy consumption. However, EATS is not suitable for dynamic data storage, as the same type of data cannot be localized to a single cluster.
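The queue-based clustering step described above can be sketched in Python. This is a minimal sketch under our own assumptions, not the algorithm from [2]: the task graph is a hypothetical dictionary of successor lists, each root task opens a new queue, every not-yet-assigned task reachable from that root joins the same queue, and the names `cluster_tasks`, `round_robin`, and `inter_cluster_edges` are illustrative. Cross-queue dependency edges serve as a rough proxy for inter-cluster messages; energy itself is not modeled.

```python
def cluster_tasks(successors, roots):
    """Open one queue per root task and place every not-yet-assigned task
    reachable from that root into the same queue (depth-first order)."""
    queue_of = {}   # task -> queue index
    queues = []     # queue index -> ordered list of tasks
    for root in roots:
        if root in queue_of:
            continue
        qid = len(queues)
        queues.append([])
        stack = [root]
        while stack:
            task = stack.pop()
            if task in queue_of:
                continue  # already placed via another predecessor
            queue_of[task] = qid
            queues[qid].append(task)
            # reversed() so successors are visited in their listed order
            stack.extend(reversed(successors.get(task, [])))
    return queues, queue_of


def round_robin(tasks, n_queues):
    """Naive baseline: spread tasks over queues without looking at dependencies."""
    return {task: i % n_queues for i, task in enumerate(tasks)}


def inter_cluster_edges(successors, queue_of):
    """Count dependency edges whose endpoints sit in different queues,
    used here as a stand-in for inter-cluster communication."""
    return sum(
        1
        for task, succs in successors.items()
        for succ in succs
        if queue_of[task] != queue_of[succ]
    )


# Hypothetical task graph: a -> {b, c} -> d, and an independent chain x -> y.
graph = {"a": ["b", "c"], "b": ["d"], "c": ["d"], "x": ["y"]}
queues, clustered = cluster_tasks(graph, roots=["a", "x"])
naive = round_robin(["a", "b", "c", "d", "x", "y"], n_queues=2)
print(queues)                                  # [['a', 'b', 'd', 'c'], ['x', 'y']]
print(inter_cluster_edges(graph, clustered))   # 0
print(inter_cluster_edges(graph, naive))       # 3
```

In this toy graph the dependency-aware queues keep every edge inside a single queue, whereas the naive round-robin split creates three cross-queue dependencies.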

MapReduce splits a job into multiple smaller tasks and runs them in parallel; the outputs of these tasks are later merged to produce the final output. Reference [6] proposes a technique to dynamically manage multi-job MapReduce workloads and to build dynamic performance models based on the performance of every node. An adaptive strategy monitors task completion at runtime to analyze the performance of nodes. The principle behind this technique is to analyze the execution of smaller tasks to estimate the total runtime of other similar tasks, and to dynamically adjust the allocation of available slots so that the deadline is met. The concepts of data affinity and hardware affinity are used to improve task allocation, with tasks assigned based on the nature of previous tasks. Although this technique improves overall performance, it is useful only when the current task is similar to the previous task. It also leads to over-utilization of active nodes, because new tasks get allocated to previously used nodes.
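The runtime-estimation idea above can be illustrated with a small Python sketch. It is a simplification under stated assumptions, not the performance model from [6]: the function name `slots_needed` is hypothetical, pending tasks are assumed to take as long on average as the tasks already completed, and the remaining work is assumed to divide perfectly across slots (waves and stragglers are ignored).

```python
import math
from statistics import mean


def slots_needed(completed_durations, pending_tasks, time_left):
    """Estimate how many concurrent slots a job needs to finish its pending
    tasks before the deadline, from the durations of tasks already completed.

    Assumes pending tasks take the mean observed duration and that the
    remaining work splits perfectly across slots.
    """
    if pending_tasks == 0:
        return 0
    if time_left <= 0:
        raise ValueError("the deadline has already passed")
    avg_duration = mean(completed_durations)       # seconds per task so far
    remaining_work = pending_tasks * avg_duration  # slot-seconds still needed
    return math.ceil(remaining_work / time_left)


# Three tasks finished in 10 s, 12 s, and 14 s; 20 tasks remain.
print(slots_needed([10, 12, 14], pending_tasks=20, time_left=60))  # 4
print(slots_needed([10, 12, 14], pending_tasks=20, time_left=50))  # 5
```

Re-running the estimate as more tasks complete is what makes the allocation adaptive: as the deadline approaches, `time_left` shrinks and the required slot count grows.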

Processing speed is a key factor affecting performance, and heterogeneous clusters contain nodes with different processing speeds. Traditional scheduling mechanisms assign an equal share of tasks to every node without considering each node's ability to handle the given task. As a solution to this problem, a novel data-distribution technique [4] [8] has been proposed. The Novel Data-Distribution Technique for Hadoop in Heterogeneous Cloud Environments [4] considers the processing speed of nodes when allocating tasks. A speed analyzer [4] measures the processing speed of all the DataNodes in terms of a computing ratio. Larger tasks are then assigned to nodes with higher processing speed, and smaller tasks to nodes with lower processing speed. However, this technique does not consider the replication factor, which is likely to create storage and processing overhead; data availability must be considered before a task is allocated. Moreover, a node with more processing power will not necessarily handle a given task well; it may still struggle to complete the assigned task.
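A minimal Python sketch of speed-proportional data distribution follows. It is our own illustration, not the implementation from [4]: we assume a node's computing ratio is the slowest node's benchmark time divided by that node's benchmark time, and the node names, benchmark times, and function names (`computing_ratio`, `distribute_blocks`) are hypothetical. Like the technique described above, it ignores replication.

```python
def computing_ratio(benchmark_seconds):
    """Assumed definition: how many times faster each node is than the slowest
    node, based on the time each node took to run the same benchmark job."""
    slowest = max(benchmark_seconds.values())
    return {node: slowest / t for node, t in benchmark_seconds.items()}


def distribute_blocks(total_blocks, ratios):
    """Split total_blocks across nodes in proportion to their computing ratios,
    using largest-remainder rounding so the shares sum to total_blocks."""
    total_ratio = sum(ratios.values())
    exact = {node: total_blocks * r / total_ratio for node, r in ratios.items()}
    shares = {node: int(x) for node, x in exact.items()}
    leftover = total_blocks - sum(shares.values())
    # Hand the remaining blocks to the nodes with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda n: exact[n] - shares[n], reverse=True)
    for node in by_remainder[:leftover]:
        shares[node] += 1
    return shares


ratios = computing_ratio({"fast": 10.0, "medium": 15.0, "slow": 30.0})
print(ratios)                         # {'fast': 3.0, 'medium': 2.0, 'slow': 1.0}
print(distribute_blocks(10, ratios))  # {'fast': 5, 'medium': 3, 'slow': 2}
```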

In traditional Hadoop scheduling techniques, the master usually tries to assign equal work to all nodes. This approach fails to utilize resources in heterogeneous environments, where the performance of each node varies considerably. Resource Aware Scheduling [5] provides a better approach to resource utilization. This strategy distributes the workload on a cluster according to computational CPU time, I/O requirements, bandwidth, and processing time. TRWS [5] collects resource information from each node and reports it to the master; this information includes CPU utilization, I/O utilization, disk or network utilization, and so on. The prime focus is on CPU utilization. For example, if the first of two nodes has higher CPU utilization and the second has higher disk utilization, the node with more CPU power receives higher priority when a task is allocated. The major drawback of this technique is that task slots remain unused: nodes with less CPU utilization remain idle while others are burdened with more tasks, and residual resources are left unused until a new task is assigned. The technique is also static and cannot adapt flexibly to changes in performance and real-time load.
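The CPU-first priority rule can be sketched in Python as follows. This is an illustration under our own assumptions, not the scheduler from [5]: the `NodeReport` fields (free CPU, disk, and network fractions reported by each node) and the `pick_node` function are hypothetical, and ranking by free CPU with disk and network used only as tie-breakers is our reading of "the prime focus is on CPU".

```python
from dataclasses import dataclass


@dataclass
class NodeReport:
    """Hypothetical per-node report sent to the master (fractions in 0.0-1.0)."""
    name: str
    cpu_free: float   # share of CPU capacity currently unused
    disk_free: float  # share of disk I/O bandwidth currently unused
    net_free: float   # share of network bandwidth currently unused


def pick_node(reports):
    """Return the node that should receive the next task: rank primarily by
    free CPU; disk and network headroom only break ties."""
    best = max(reports, key=lambda r: (r.cpu_free, r.disk_free, r.net_free))
    return best.name


reports = [
    NodeReport("node1", cpu_free=0.7, disk_free=0.2, net_free=0.5),
    NodeReport("node2", cpu_free=0.4, disk_free=0.9, net_free=0.9),
]
print(pick_node(reports))  # node1
```

Here node2 has far more disk and network headroom, but it is passed over as long as node1 has more free CPU, which mirrors the CPU-centric, static behavior criticized above.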

Deadline-constraint scheduling [3] is another technique for speeding up the execution of jobs. In this technique, each scheduled job has to be completed within a given time: deadlines are allocated to all jobs, and the nodes must finish the assigned job within its deadline. The deadlines are assigned according to the Job Execution Cost Model [3], which is based on the size of the input data, the MapReduce running time, and similar factors. The scheduler conducts schedulability tests to estimate whether the job will be completed within the specified time and gives feedback to the user. The job is executed if it can meet the deadline; otherwise, it is rejected and must be resubmitted with a modified deadline.

This strategy maximizes the number of jobs assigned to a cluster. Its major drawback is that it focuses only on satisfying deadlines and does not try to achieve maximum performance. It also does not meet the requirements of real-time jobs.
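A schedulability test of this kind can be sketched in Python. The linear cost model below (a fixed startup time plus a map phase and a reduce phase, each limited by per-slot throughput) and all of its default parameters are hypothetical placeholders, not the Job Execution Cost Model from [3]; the sketch only shows the accept-or-reject decision.

```python
from dataclasses import dataclass


@dataclass
class Job:
    input_mb: float    # size of the job's input data
    deadline_s: float  # deadline requested by the user


def estimated_runtime(job, map_slots, reduce_slots,
                      map_mb_per_s=20.0, reduce_mb_per_s=10.0,
                      shuffle_ratio=0.3, startup_s=15.0):
    """Hypothetical cost model: startup overhead + map phase + reduce phase.
    All rates and ratios are illustrative placeholders."""
    map_time = job.input_mb / (map_mb_per_s * map_slots)
    shuffled_mb = job.input_mb * shuffle_ratio  # data reaching the reducers
    reduce_time = shuffled_mb / (reduce_mb_per_s * reduce_slots)
    return startup_s + map_time + reduce_time


def admit(job, map_slots, reduce_slots):
    """Schedulability test: run the job only if the estimate meets its deadline;
    otherwise reject it so the user can resubmit with a new deadline."""
    return estimated_runtime(job, map_slots, reduce_slots) <= job.deadline_s


# 6000 MB input on 10 map slots and 5 reduce slots: 15 + 30 + 36 = 81 s.
print(admit(Job(input_mb=6000, deadline_s=90), map_slots=10, reduce_slots=5))  # True
print(admit(Job(input_mb=6000, deadline_s=60), map_slots=10, reduce_slots=5))  # False
```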

Scheduling can be classified as adaptive or non-adaptive [10]. The Adaptive Task Scheduling Strategy based on Dynamic Workload Adjustment (ATSDWA) [14] dynamically allocates tasks based on resource availability and the workload on each node. The TaskTracker on a node asks for a new task only if it has the capacity to execute it, so the workload on every node is kept within its capacity. This helps prevent node failures caused by overloading, thereby increasing fault tolerance. The technique has been shown to increase performance on a small-scale heterogeneous cluster, but it has not been tested on large clusters with more distributed workloads, and the issues caused by data locality and replication still persist.
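The self-regulating pull behavior described above can be sketched as follows. This is our own simplified illustration, not the algorithm from [14]: the `AdaptiveTracker` class, the `heartbeat` function, the CPU-load thresholds (`high`, `low`), and the one-slot-at-a-time adjustment are all hypothetical choices.

```python
from collections import deque


class AdaptiveTracker:
    """Hypothetical TaskTracker that tunes its own slot count from observed load."""

    def __init__(self, name, slots=2, min_slots=1, max_slots=8, high=0.85, low=0.5):
        self.name = name
        self.slots = slots          # tasks this tracker is currently willing to run
        self.min_slots = min_slots
        self.max_slots = max_slots
        self.high = high            # CPU load above which the tracker sheds a slot
        self.low = low              # CPU load below which the tracker adds a slot
        self.running = 0

    def adjust(self, cpu_load):
        """Shrink capacity when overloaded, grow it when underused."""
        if cpu_load > self.high and self.slots > self.min_slots:
            self.slots -= 1
        elif cpu_load < self.low and self.slots < self.max_slots:
            self.slots += 1

    def has_capacity(self):
        return self.running < self.slots


def heartbeat(tracker, cpu_load, pending):
    """One heartbeat: the tracker re-evaluates its capacity and pulls tasks
    from the shared queue only while it has a free slot."""
    tracker.adjust(cpu_load)
    pulled = []
    while tracker.has_capacity() and pending:
        pulled.append(pending.popleft())
        tracker.running += 1
    return pulled


pending = deque(["t1", "t2", "t3", "t4", "t5"])
tracker = AdaptiveTracker("node1", slots=2)
print(heartbeat(tracker, 0.30, pending))  # ['t1', 't2', 't3']
print(heartbeat(tracker, 0.95, pending))  # []
tracker.running -= 2                      # two tasks finish
print(heartbeat(tracker, 0.70, pending))  # ['t4']
```

On the first heartbeat the lightly loaded tracker grows to three slots; on the second it is overloaded, drops back to two slots, and pulls nothing while three tasks are still running.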

III. COMPARATIVE STUDY


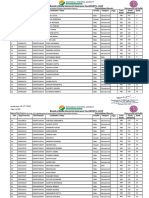

Having analyzed these scheduling techniques, we now compare them in several respects. Not all schedulers are suitable for heterogeneous environments. The schedulers and their comparison are given in Table 1.

Table 1 Comparison of Various Hadoop Schedulers

| Scheduling algorithm | Technique | Size of cluster | Advantages | Limitations | Environment |
|---|---|---|---|---|---|
| FIFO/FCFS | JobTracker pulls the job that came first from the oldest queue; does not depend on the priority or size of the job | Small | Efficient and simple implementation | Poor response time for the shortest job, no preemption, low performance | Homogeneous |
| Fair scheduler | Organizes jobs into equal resource pools; each pool has guaranteed capacity to run its scheduled tasks | Small | Fast response time for the smallest job, less complex than FIFO, preemptive | Poor support for concurrency | Homogeneous |
| Capacity scheduler | Allocates multiple job queues; each queue has guaranteed capacity for executing jobs | Large | Support for multiple queues, improved response time | Higher complexity, non-preemptive | Homogeneous |
| Resource Aware Scheduling [5] | Manages job allocation based on resource utilization | Large | Higher resource utilization and overall performance, decreased workload | Overburdening of high-capacity nodes | Both |
| Deadline constraint [3] | Uses a job execution cost model to determine whether job execution can be completed within the deadline | Large | Decreased execution time, reduced delay, maximum number of tasks run on a cluster | Data locality problem, cannot be used for real-time jobs, no preemption | Both |
| LATE | Speculative execution | Large | Scalable, robust nodes, improved response time | Cannot find slow tasks at the end, not reliable | Both |
| SAMR [7] | Uses historical information read from each node to estimate executing tasks | Small | Scalable, decreased execution time, saves system resources | Data locality conflicts | Heterogeneous |
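The speculative execution listed for LATE in Table 1 can be illustrated with a simplified Python sketch of its commonly described heuristic: estimate each running task's time to completion as (1 - progress) / progress rate, and back up the slow task expected to finish last. The `RunningTask` type, the `pick_speculative` function, and the 25% slow-task cutoff (computed as a crude percentile) are our assumptions, and the sketch omits LATE's other limits, such as a cap on concurrent speculative tasks and launching backups only on fast nodes.

```python
from dataclasses import dataclass


@dataclass
class RunningTask:
    task_id: str
    progress: float   # progress score in [0, 1]
    elapsed_s: float  # seconds since the task started (assumed > 0)

    @property
    def rate(self):
        return self.progress / self.elapsed_s

    def time_left(self):
        """Estimated seconds to completion, assuming the current rate holds."""
        return float("inf") if self.rate == 0 else (1.0 - self.progress) / self.rate


def pick_speculative(tasks, slow_fraction=0.25):
    """Among tasks whose progress rate falls in the slowest `slow_fraction`,
    return the id of the one with the longest estimated time left."""
    if not tasks:
        return None
    rates = sorted(t.rate for t in tasks)
    cutoff = rates[int(slow_fraction * (len(rates) - 1))]  # crude percentile
    slow = [t for t in tasks if t.rate <= cutoff]
    return max(slow, key=lambda t: t.time_left()).task_id


tasks = [
    RunningTask("a", progress=0.9, elapsed_s=90),   # about 10 s left
    RunningTask("b", progress=0.5, elapsed_s=100),  # about 100 s left
    RunningTask("c", progress=0.2, elapsed_s=100),  # about 400 s left
    RunningTask("d", progress=0.8, elapsed_s=40),   # about 10 s left
]
print(pick_speculative(tasks))  # c
```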

IV. FUTURE SCOPE


Scheduling remains a key factor affecting the performance of Hadoop. Traditional scheduling techniques focus on homogeneous clusters and are not suitable for heterogeneous clusters, so more work is needed on scheduling in heterogeneous environments. The ATSDWA [14] algorithm is a novel approach to obtaining maximum performance in heterogeneous Hadoop clusters, but it needs testing on large clusters, and its fault tolerance is questionable and needs improvement. Resource-aware scheduling promises to increase resource utilization, and resource-aware adaptive scheduling is expected to provide higher utilization and better performance. Further work on this concept is needed for a more sophisticated Hadoop.

V. CONCLUSION

Our review of popular scheduling strategies [11] for Hadoop and our comparative analysis of these strategies lead us to conclude that the performance of Hadoop depends heavily on scheduling, so scheduling must be highly efficient. In heterogeneous environments especially, more sophisticated scheduling is required to overcome the problems of uneven resources and unbalanced load. Our study shows that researchers have worked to improve scheduling techniques, but certain drawbacks still need to be overcome.

Different strategies focus on different aspects of scheduling [12] [13], and each has its benefits. Scheduling must utilize resources judiciously while ensuring that all tasks are completed as quickly as possible with accurate results. It should handle faults efficiently and dynamically balance the workload so that performance is not hampered. The adaptive strategy is a very good approach to improving performance, since it automatically adjusts task execution according to the workload and available resources. Although this technique is meant to handle the problems of heterogeneous environments and improve throughput there, it also improves overall performance in homogeneous environments. It improves fault tolerance and is efficient in maintaining the workflow. With some improvements and by overcoming its remaining limitations, the adaptive strategy promises to improve the performance of Hadoop to a greater extent.

VI. REFERENCES

[1] L. C., Feng Yan, "Heterogeneous Cores For MapReduce Processing: Opportunity or Challenges?," IEEE, pp. 1-7, 2014.

[2] H. L., W. D., F. S., Wei Liu, "Energy-Aware Task Clustering Scheduling," IEEE, pp. 1-4, 2011.

[3] J. Z., K. L., R. L., Zhuo Tang, "MTSD: A task scheduling algorithm for MapReduce base on deadline constraints," IEEE, pp. 1-7, 2012.

[4] A. M., P. H., G., Vrushali Ubarhande, "Novel Data-Distribution Technique for Hadoop in Heterogeneous Cloud Environments," IEEE, pp. 1-8, 2015.

[5] N. G., S. M., Mark Yong, "Towards A Resource Aware Scheduler in Hadoop," 2009.

[6] Y. B., D. C., M. I., M. S., M. I., I. W., Jordà Polo, "Deadline-Based MapReduce Workload Management," IEEE Transactions, pp. 1-14, June 2013.

[7] S. G., Quan Chen, Daqiang Zhang, Minyi Guo, Qianni Deng, "SAMR: A Self-adaptive MapReduce Scheduling Algorithm," IEEE, pp. 1-8, 2010.

[8] X. Zhang, Y. Feng, S. Feng, J. Fan, and Z. Ming, "An effective data locality aware task scheduling method for MapReduce framework in heterogeneous environments," in CSC '11, Hong Kong: IEEE, Dec. 2011, pp. 235-242.

[9] Tom White, Hadoop: The Definitive Guide, Third Edition, 2012.

[10] Hadoop Tutorial: http://developer.yahoo.com/hadoop/tutorial/module1.html/

[11] Hadoop job scheduling: http://blog.cloudera.com/blog/2008/11/job-scheduling-in-hadoop/

[12] Hadoop Scheduling: http://www.ibm.com/developerworks//library/os-hadoop-scheduling/

[13] Apache Hadoop: http://Hadoop.apache.org

[14] L. C., X. W., Xiaolong Xu, "Adaptive Task Scheduling Strategy Based on Dynamic Workload Adjustment for Heterogeneous Hadoop Clusters," IEEE Systems Journal, pp. 1-12, 2014.
