Linearly Independent, Orthogonal, and Uncorrelated Variables


JOSEPH LEE RODGERS, W. ALAN NICEWANDER, and LARRY TOOTHAKER*

Linearly independent, orthogonal, and uncorrelated are

three terms used to indicate lack of relationship between variables. This short didactic article compares

these three terms in both an algebraic and a geometric

framework. An example is used to illustrate the

differences.

KEY WORDS: Linear independence; Orthogonality;

Uncorrelated variables; Centered variables; n-dimensional space.

INTRODUCTION

In the early 1970's, a series of articles and letters

discussed the relationships among statistical independence, zero correlation, and mutual exclusivity (see

Gibbons 1968; Hunter 1972; Pollak 1971; and Shih

1971). A related set of concepts that are equally confusing to students is firmly rooted in linear algebra:

linear independence, orthogonality, and zero correlation. The series of articles cited above deal with statistical concepts in which the link between the sample and

the population is critical. On the other hand, the concepts with which we are dealing apply most naturally to

variables that have been fixed, either by design or in the

sample. Thus, our framework is primarily algebraic and

geometric rather than statistical. Unfortunately, the

mathematical distinctions between our three terms are

subtle enough to confuse both students and teachers of

statistics. The purpose of this short didactic article is to

present explicitly the differences among these three

concepts and to portray the relationships among them.

0, where

and

are the means of X and Y,

respectively, and 1 is a vector of ones.

The first important distinction here is that linear independence and orthogonality are properties of the raw

variables, while zero correlation is a property of the

centered variables. Secondly, orthogonality is a special

case of linear independence. Both of these distinctions

can be elucidated by reference to a geometric

framework.

A GEOMETRIC PORTRAYAL

Given two variables, the traditional geometric model

that is used to portray their relationship is the scatterplot, in which the rows are plotted in the column space,

each variable defines an axis, and each observation is

plotted as a point in the space. Another useful, although less common, geometric model involves turning

the space "inside-out", where the columns of the data

matrix lie in the row space. Variables are vectors from

the origin to the column points, and the n axes correspond to observations. While this (potentially) hugedimensional space is difficult to visualize, the two variable vectors define a two-dimensional subspace that is

easily portrayed. This huge-dimensional space was often used by Fisher in his statistical conceptualizations

(Box 1978), and it is commonly used in geometric portrayals of multiple regression (see Draper and Smith

n

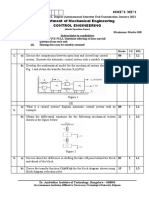

lanearly andependent

1!

( 1 ) lanearly andepend.

(2) not uncorrelated

AN ALGEBRAIC PORTRAYAL

(3) not orthogonal

Algebraically, the concepts of linearly independent,

orthogonal, and uncorrelated variables can be stated as

follows.

Let X and Y be vector observations of the variables X

and Y. Then

-X -Y

(1) linearly andepend.

1. X and Y are linearly independent iff there exists no

constant a such that a x - Y = 0 (X and Y nonnull

vectors).

2. X and Y are orthogonal iff X'Y = 0.

3. X and Y are uncorrelated iff (X - Z ~ ) ' ( Y- P1) =

*Joseph Lee Rodgers is Assistant Professor and W. Alan Nicewander and Larry Toothaker are Professors in the Department of

Psychology at the University of Oklahoma, Norman, O K 73019. The

authors express their appreciation to two reviewers whose suggestions

improved this article.

(1

L1

OJ

(2) uncorrelated

(3) not orthogonal

5I

1: 3

I ?.,, linearly andepend.

I not uncarelated

(3) orthogonal

Figure 1 . The relationship between linearly independent, ofthogonal, and uncorrelated variables.

O The American Statistician, May 1984, Vol. 38, No. 2

133

1981, pp. 201-203). This n-dimensional space and its

two-dimensional subspace are the ones to which we

direct attention.

Each variable is a vector lying in the observation

space of n dimensions. Linearly independent variables

are those with vectors that do not fall along the same

line; that is, there is no multiplicative constant that will

expand, contract, or reflect one vector onto the other.

Orthogonal variables are a special case of linearly independent variables. Not only do their vectors not fall

along the same line, but they also fall perfectly at right

angles to one another (or, equivalently, the cosine of

the angle between them is zero). The relationship between "linear independence" and "orthogonality" is

thus straightforward and simple.
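The geometric statements above can be checked numerically. The following is a minimal sketch, not part of the original article: the helper names (`dot`, `cosine`, `linearly_independent`, `orthogonal`), the tolerance, and the three example pairs are all assumptions chosen for illustration. It treats two nonnull vectors as linearly independent when the absolute cosine of the angle between them is below 1, and as orthogonal when their inner product is zero.

```python
import math

def dot(x, y):
    """Inner product X'Y of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))

def cosine(x, y):
    """Cosine of the angle between two nonnull vectors."""
    return dot(x, y) / math.sqrt(dot(x, x) * dot(y, y))

def linearly_independent(x, y, tol=1e-12):
    """True when the vectors do not fall along the same line (|cos| < 1)."""
    return abs(cosine(x, y)) < 1 - tol

def orthogonal(x, y, tol=1e-12):
    """True when the raw vectors are at right angles (X'Y = 0)."""
    return abs(dot(x, y)) <= tol

# Hypothetical variables, each observed three times.
collinear = ([1, 2, 3], [-2, -4, -6])    # Y reflects and expands X
oblique = ([1, 2, 3], [1, 0, 1])         # neither collinear nor perpendicular
perpendicular = ([1, 2, 3], [3, 0, -1])  # X'Y = 3 + 0 - 3 = 0

for name, (x, y) in [("collinear", collinear),
                     ("oblique", oblique),
                     ("perpendicular", perpendicular)]:
    print(name, linearly_independent(x, y), orthogonal(x, y))
```

Only the perpendicular pair passes both checks, which matches the statement that orthogonality is a special case of linear independence.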

Uncorrelated variables are a bit more complex. To

say variables are uncorrelated indicates nothing about

the raw variables themselves. Rather, "uncorrelated"

implies that once each variable is centered (i.e., the

mean of each vector is subtracted from the elements of

that vector), then the vectors are perpendicular. The

key to appreciating this distinction is recognizing that

centering each variable can and often will change the

angle between the two vectors. Thus, orthogonal denotes that the raw variables are perpendicular. Uncorrelated denotes that the centered variables are

perpendicular.
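One way to see why centering can change the angle is to expand the centered inner product. The short derivation below is an illustrative addition, assuming n observations and the notation of the algebraic definitions ($\bar{X}$ and $\bar{Y}$ are the means, $\mathbf{1}$ is a vector of ones, so that $X'\mathbf{1} = n\bar{X}$, $\mathbf{1}'Y = n\bar{Y}$, and $\mathbf{1}'\mathbf{1} = n$).

```latex
\begin{align*}
(X - \bar{X}\mathbf{1})'(Y - \bar{Y}\mathbf{1})
  &= X'Y - \bar{Y}\,X'\mathbf{1} - \bar{X}\,\mathbf{1}'Y + \bar{X}\bar{Y}\,\mathbf{1}'\mathbf{1} \\
  &= X'Y - n\bar{X}\bar{Y} - n\bar{X}\bar{Y} + n\bar{X}\bar{Y} \\
  &= X'Y - n\bar{X}\bar{Y}.
\end{align*}
```

The raw and centered inner products therefore differ by $n\bar{X}\bar{Y}$. They coincide only when at least one of the two means is zero, which is the situation in which a pair of variables can be both orthogonal and uncorrelated.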

Each of the following situations can occur: Two variables that are perpendicular can become oblique once

they are centered; these are orthogonal but not uncorrelated. Two variables not perpendicular (oblique)

can become perpendicular once they are centered;

these are uncorrelated but not orthogonal. And finally,

two variables may be both orthogonal and uncorrelated

if centering does not change the angle between their

vectors. In each case, of course, the variables are linearly independent. Figure 1 gives a pictorial portrayal of

the relationships among these three terms. Examples of

sets of variables that correspond to each possible situation are shown.
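The three situations can be illustrated with small numeric examples. The sketch below is an assumption-laden illustration: the vectors are hypothetical and are not the examples printed in Figure 1, and the helper names (`centered`, `dot`, `describe`) are invented here. Each pair is linearly independent; the code reports whether the raw vectors and the centered vectors are perpendicular.

```python
def dot(x, y):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(x, y))

def centered(v):
    """Subtract the mean of the vector from each of its elements."""
    mean = sum(v) / len(v)
    return [a - mean for a in v]

def describe(x, y, tol=1e-9):
    """Report perpendicularity of the raw and of the centered vectors."""
    return {
        "orthogonal": abs(dot(x, y)) <= tol,
        "uncorrelated": abs(dot(centered(x), centered(y))) <= tol,
    }

# Hypothetical four-observation variables, one pair per situation.
examples = {
    "orthogonal, not uncorrelated": ([1, 1, 0, 0], [0, 0, 1, 1]),
    "uncorrelated, not orthogonal": ([0, 0, 1, 1], [1, 0, 1, 0]),
    "orthogonal and uncorrelated": ([1, -1, 1, -1], [1, 1, -1, -1]),
}

for label, (x, y) in examples.items():
    print(label, describe(x, y))
```

In the third pair both means are zero, so centering leaves the vectors unchanged and the angle between them stays at 90 degrees.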

[Received April 1983. Revised October 1983.]

REFERENCES

BOX, J.F. (1978), R. A. Fisher: The Life of a Scientist, New York: John Wiley.

DRAPER, N., and SMITH, H. (1981), Applied Regression Analysis, New York: John Wiley.

GIBBONS, J.D. (1968), "Mutually Exclusive Events, Independence, and Zero Correlation," The American Statistician, 22, 31-32.

HUNTER, J.J. (1972), "Independence, Conditional Expectation, and Zero Covariance," The American Statistician, 26, 22-24.

POLLAK, E. (1971), "A Comment on Zero Correlation and Independence," The American Statistician, 25, 53.

SHIH, W. (1971), "More on Zero Correlation and Independence," The American Statistician, 25, 62.

Kruskal's Proof of the Joint Distribution of $\bar{X}$ and $s^2$

STEPHEN M. STIGLER*

In introductory courses in mathematical statistics, the

proof that the sample mean $\bar{X}$ and sample variance $s^2$

are independent when one is sampling from normal

populations is commonly deferred until substantial

mathematical machinery has been developed. The

proof may be based on Helmert's transformation

(Brownlee 1965, p. 271; Rao 1973, p. 182), or it may use

properties of moment-generating functions (Hogg and

Craig 1970, p. 163; Shuster 1973). The purpose of this

note is to observe that a simple proof, essentially due

to Kruskal (1946), can be given early in a statistics

course; the proof requires no matrix algebra, moment-generating functions, or characteristic functions. All

that is needed are two minimal facts about the bivariate

normal distribution: Two linear combinations of a pair

of independent normally distributed random variables

are themselves bivariate normal, and hence if they are

uncorrelated, they are independent.

*Stephen M. Stigler is Professor, Department of Statistics, University of Chicago, Chicago, IL 60637.


Let $X_1, \ldots, X_n$ be independent, identically distributed $N(\mu, \sigma^2)$. Let

$$\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i, \qquad s_n^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X}_n)^2.$$

We suppose that the chi-squared distribution $\chi^2(k)$ has been defined as the distribution of $U_1^2 + \cdots + U_k^2$, where the $U_i$ are independent $N(0, 1)$.

Theorem. (a) $\bar{X}_n$ has a $N(\mu, \sigma^2/n)$ distribution. (b) $(n - 1)s_n^2/\sigma^2$ has a $\chi^2(n - 1)$ distribution. (c) $\bar{X}_n$ and $s_n^2$ are independent.
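A quick simulation can make the three parts of the theorem concrete before the proof. The sketch below is only an illustration under stated assumptions: the function names (`simulate`, `pearson`), the sample size n = 5, the parameter values, the seed, and the number of replications are all arbitrary choices, and the sample variance uses the divisor n - 1. A near-zero sample correlation between the mean and the variance is consistent with part (c) but does not by itself establish independence.

```python
import random
import statistics

def simulate(n=5, mu=10.0, sigma=2.0, reps=20000, seed=0):
    """Draw `reps` normal samples of size n; return their means and variances."""
    rng = random.Random(seed)
    means, variances = [], []
    for _ in range(reps):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        xbar = sum(sample) / n
        s2 = sum((x - xbar) ** 2 for x in sample) / (n - 1)
        means.append(xbar)
        variances.append(s2)
    return means, variances

def pearson(u, v):
    """Sample correlation coefficient of two equal-length sequences."""
    mu_u, mu_v = statistics.fmean(u), statistics.fmean(v)
    num = sum((a - mu_u) * (b - mu_v) for a, b in zip(u, v))
    den = (sum((a - mu_u) ** 2 for a in u)
           * sum((b - mu_v) ** 2 for b in v)) ** 0.5
    return num / den

n, mu, sigma = 5, 10.0, 2.0
means, variances = simulate(n=n, mu=mu, sigma=sigma)

# (a) the sample mean should center on mu with variance near sigma^2 / n
print(statistics.fmean(means), statistics.pvariance(means), sigma ** 2 / n)
# (b) (n - 1) s^2 / sigma^2 should average near n - 1, the chi-squared mean
scaled = [(n - 1) * s2 / sigma ** 2 for s2 in variances]
print(statistics.fmean(scaled), n - 1)
# (c) the correlation between mean and variance should be near zero
print(pearson(means, variances))
```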

Proof. The proof is by induction. First consider the case n = 2. Here $\bar{X}_2 = (X_1 + X_2)/2$ and, after a little algebra, $s_2^2 = (X_1 - X_2)^2/2$. Part (a) is an immediate consequence of the assumed knowledge of normal distributions, and since $(X_1 - X_2)/(\sqrt{2}\,\sigma)$ is $N(0, 1)$, (b) follows too, from the definition of $\chi^2(1)$. Finally, since $\operatorname{cov}(X_1 - X_2, X_1 + X_2) = 0$, $X_1 - X_2$ and $X_1 + X_2$ are independent and (c) follows.
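The "little algebra" in the n = 2 case can be written out as follows. This worked step is an illustrative addition; it assumes the sample variance is defined with divisor n - 1 (consistent with part (b) of the theorem) and uses only the independence and common variance $\sigma^2$ of $X_1$ and $X_2$.

```latex
\begin{align*}
X_1 - \bar{X}_2 &= \tfrac{1}{2}(X_1 - X_2), \qquad
X_2 - \bar{X}_2 = -\tfrac{1}{2}(X_1 - X_2), \\
s_2^2 &= \frac{1}{2 - 1}\left[ \tfrac{1}{4}(X_1 - X_2)^2 + \tfrac{1}{4}(X_1 - X_2)^2 \right]
       = \tfrac{1}{2}(X_1 - X_2)^2, \\
\operatorname{cov}(X_1 - X_2,\; X_1 + X_2)
  &= \operatorname{var}(X_1) + \operatorname{cov}(X_1, X_2) - \operatorname{cov}(X_2, X_1) - \operatorname{var}(X_2)
   = \sigma^2 - \sigma^2 = 0.
\end{align*}
```

Since $\bar{X}_2$ is a function of $X_1 + X_2$ alone and $s_2^2$ is a function of $X_1 - X_2$ alone, independence of the sum and the difference carries over to the mean and the variance.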

Now assume the conclusion holds for a sample of size

n. We prove it holds for a sample of size n + 1. First

establish the two relationships

