Hans Marko The Bidirectional Communication Theory - A Generalization of Information Theory 1973

Hans Marko The Bidirectional Communication Theroy - A Generalization of Information Theory 1973

Uploaded by

jas_i_ti9Copyright:

Available Formats

You might also like

- Ebook Strategic Management Competitiveness and Globalization Concepts and Cases 14E PDF Full Chapter PDFDocument67 pagesEbook Strategic Management Competitiveness and Globalization Concepts and Cases 14E PDF Full Chapter PDFsamuel.chapman41697% (38)

- Press Release TemplateDocument2 pagesPress Release TemplateJocelyn MartensonNo ratings yet

- Computational Models For The Human Body PDFDocument679 pagesComputational Models For The Human Body PDFGuilherme AyresNo ratings yet

- Inductive Learning Algorithms For Coplex Systems Modeling PDFDocument373 pagesInductive Learning Algorithms For Coplex Systems Modeling PDFggropNo ratings yet

- Cybernetics, Semiotics and Meaning in The Cinema. Keyan G TomaselliDocument18 pagesCybernetics, Semiotics and Meaning in The Cinema. Keyan G TomaselliIsaac KnarsNo ratings yet

- A Mathematical Model of DNA ReplicationDocument8 pagesA Mathematical Model of DNA ReplicationRasoul GmdriNo ratings yet

- Report Cover For Postgraduate Students: /subject: Empirically Reducing Complex System Into One Representative AgentDocument26 pagesReport Cover For Postgraduate Students: /subject: Empirically Reducing Complex System Into One Representative AgentLaamaarii AmineeNo ratings yet

- Category Theory For Autonomous and Networked Dynamical SystemsDocument11 pagesCategory Theory For Autonomous and Networked Dynamical SystemsBranimir BobanacNo ratings yet

- Numerical Continuation Methods For Dynamical SystemsDocument411 pagesNumerical Continuation Methods For Dynamical SystemsDionisis Pettas0% (1)

- ChaptersDocument20 pagesChaptersAlexanderNo ratings yet

- Barber Et Al 2005 Learning As A Social ProcessDocument37 pagesBarber Et Al 2005 Learning As A Social ProcessGaston Becerra100% (1)

- 156 CarteDocument15 pages156 CarteLiv TariganNo ratings yet

- Chapter 1 (Chip-Scale Quantum Imaging and Spectroscopy)Document81 pagesChapter 1 (Chip-Scale Quantum Imaging and Spectroscopy)Muhammad AhsanNo ratings yet

- 2 - 1973-Outline of A New Approach To The Analysis of Complex Systems and Decision ProcessesDocument18 pages2 - 1973-Outline of A New Approach To The Analysis of Complex Systems and Decision ProcessesDr. Ir. R. Didin Kusdian, MT.No ratings yet

- Models of Communication 2Document2 pagesModels of Communication 2Jurie Leann DaanNo ratings yet

- Computational Social ScineceDocument21 pagesComputational Social ScineceAndrej KranjecNo ratings yet

- Information Theory Applications in Signal ProcessingDocument4 pagesInformation Theory Applications in Signal Processingalt5No ratings yet

- Computational IntelligenceDocument54 pagesComputational Intelligencebogdan.oancea3651No ratings yet

- Representations of Space and Time in The Maximization of Information Flow in The Perception-Action LoopDocument47 pagesRepresentations of Space and Time in The Maximization of Information Flow in The Perception-Action LoopSherjil OzairNo ratings yet

- Khrennikov, A. Et Al. (2018) - On Interpretational Questions For Quantum-Like Modeling of Social Lasing. EntropyDocument24 pagesKhrennikov, A. Et Al. (2018) - On Interpretational Questions For Quantum-Like Modeling of Social Lasing. EntropyMauricio Mandujano MNo ratings yet

- Stat Phys Info TheoDocument214 pagesStat Phys Info TheomaxhkNo ratings yet

- A New Study in Maintenance For Transmission LinesDocument10 pagesA New Study in Maintenance For Transmission LinesbladdeeNo ratings yet

- Abdallah, Gold, Marsden - 2016 - Analysing Symbolic Music With Probabilistic GrammarsDocument33 pagesAbdallah, Gold, Marsden - 2016 - Analysing Symbolic Music With Probabilistic GrammarsGilmario BispoNo ratings yet

- Systems: Visual Analysis of Nonlinear Dynamical Systems: Chaos, Fractals, Self-Similarity and The Limits of PredictionDocument18 pagesSystems: Visual Analysis of Nonlinear Dynamical Systems: Chaos, Fractals, Self-Similarity and The Limits of PredictionbwcastilloNo ratings yet

- Theoretical Computer Science: Flavio Chierichetti, Silvio Lattanzi, Alessandro PanconesiDocument9 pagesTheoretical Computer Science: Flavio Chierichetti, Silvio Lattanzi, Alessandro PanconesiIván AveigaNo ratings yet

- Information Theory and Security Quantitative Information FlowDocument48 pagesInformation Theory and Security Quantitative Information FlowFanfan16No ratings yet

- tmpD1FF TMPDocument7 pagestmpD1FF TMPFrontiersNo ratings yet

- A Mathematical Theory of Communication 1957Document79 pagesA Mathematical Theory of Communication 1957scribblybarkNo ratings yet

- Blanchard P., Volchenkov D. - Random Walks and Diffusions On Graphs and Databases. An Introduction - (Springer Series in Synergetics) - 2011Document277 pagesBlanchard P., Volchenkov D. - Random Walks and Diffusions On Graphs and Databases. An Introduction - (Springer Series in Synergetics) - 2011fictitious30No ratings yet

- Entropy 21 01124 v2Document13 pagesEntropy 21 01124 v2peckingappleNo ratings yet

- Entropy: Quantum Entropy and Its Applications To Quantum Communication and Statistical PhysicsDocument52 pagesEntropy: Quantum Entropy and Its Applications To Quantum Communication and Statistical PhysicsRaktim HalderNo ratings yet

- BPT InitiaDocument5 pagesBPT InitiakihtrakaNo ratings yet

- UntitledDocument116 pagesUntitledTsega TeklewoldNo ratings yet

- La Utilidad de Los Constructos MatematicoseScholarship - UC - Item - 8g19k58xDocument33 pagesLa Utilidad de Los Constructos MatematicoseScholarship - UC - Item - 8g19k58xMario VFNo ratings yet

- Entropy: Information Theory For Data Communications and ProcessingDocument4 pagesEntropy: Information Theory For Data Communications and ProcessingDaniel LeeNo ratings yet

- SCHOLKOPF (2019) - Robustifying Independent Component Analysis by Adjusting For Group-Wise Stationary NoiseDocument50 pagesSCHOLKOPF (2019) - Robustifying Independent Component Analysis by Adjusting For Group-Wise Stationary NoisemvbadiaNo ratings yet

- Vdocuments - MX - Classical Mechanics With Mathematica PDFDocument523 pagesVdocuments - MX - Classical Mechanics With Mathematica PDFOrlando Baca100% (2)

- Entropy: Information and Self-OrganizationDocument6 pagesEntropy: Information and Self-OrganizationMiaou157No ratings yet

- Paper 74Document10 pagesPaper 74Vikas KhannaNo ratings yet

- Outline of New Approach To The Analysis of Complex Systems and Decision ProcessesDocument17 pagesOutline of New Approach To The Analysis of Complex Systems and Decision ProcessesEKS VIDEOSNo ratings yet

- Theory Culture Society 2005 Gane 25 41Document18 pagesTheory Culture Society 2005 Gane 25 41Bruno ReinhardtNo ratings yet

- Information TheoryDocument1 pageInformation TheoryTrần DươngNo ratings yet

- 549 1496 2 PBDocument10 pages549 1496 2 PBgokhancantasNo ratings yet

- A Mathematical Theory of CommunicationDocument3 pagesA Mathematical Theory of CommunicationMorfolomeoNo ratings yet

- Paper 24 RelStat-2019 LSTM Jackson Grakovski EN FINAL1Document10 pagesPaper 24 RelStat-2019 LSTM Jackson Grakovski EN FINAL1Alexandre EquoyNo ratings yet

- Fqxi Essay 2017 RGDocument9 pagesFqxi Essay 2017 RGFrancisco Vega GarciaNo ratings yet

- Computer Graphics Meets Chaos and Hyperchaos. Some Key ProblemsDocument9 pagesComputer Graphics Meets Chaos and Hyperchaos. Some Key ProblemsAlessandro SoranzoNo ratings yet

- A Fogalmi Terek Általános Megfogalmazása Mezo Szintű ReprezentációkéntDocument44 pagesA Fogalmi Terek Általános Megfogalmazása Mezo Szintű ReprezentációkéntFarkas GyörgyNo ratings yet

- A Theory of ResonanceDocument40 pagesA Theory of ResonanceckreftNo ratings yet

- Berliner1992a PDFDocument23 pagesBerliner1992a PDFGiovanna S. S.No ratings yet

- Mathematical Image Processing 9783030014575 9783030014582Document481 pagesMathematical Image Processing 9783030014575 9783030014582SasikalaNo ratings yet

- Data Mining1Document3 pagesData Mining1Nurul NadirahNo ratings yet

- Chaotic SystemsDocument322 pagesChaotic SystemsOsman Ervan100% (1)

- Connectionism and Cognitive Architecture - A Critical Analysis - 1Document8 pagesConnectionism and Cognitive Architecture - A Critical Analysis - 1Marcos Josue Costa DiasNo ratings yet

- A Mathematical Theory of CommunicationDocument55 pagesA Mathematical Theory of CommunicationJoão Henrique Gião BorgesNo ratings yet

- DatascienceDocument14 pagesDatasciencejoseluisapNo ratings yet

- Statistical Mechanics of NetworksDocument13 pagesStatistical Mechanics of NetworksSilviaNiñoNo ratings yet

- Johannes ColloquiunDocument31 pagesJohannes ColloquiunTanmay HansNo ratings yet

- Blind Identification of Multichannel Systems Driven by Impulsive SignalsDocument7 pagesBlind Identification of Multichannel Systems Driven by Impulsive SignalsRafael TorresNo ratings yet

- A Mathematical Theory of Communication by C. E. SHANNONDocument79 pagesA Mathematical Theory of Communication by C. E. SHANNONandreslorcaNo ratings yet

- A Mathematical Theory of CommunicationDocument79 pagesA Mathematical Theory of CommunicationCosmin B.No ratings yet

- Emergent Nested Systems: A Theory of Understanding and Influencing Complex Systems as well as Case Studies in Urban SystemsFrom EverandEmergent Nested Systems: A Theory of Understanding and Influencing Complex Systems as well as Case Studies in Urban SystemsNo ratings yet

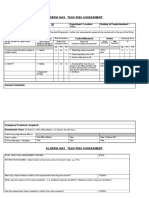

- TRA 02 Lifting of Skid Units During Rig MovesDocument3 pagesTRA 02 Lifting of Skid Units During Rig MovesPirlo PoloNo ratings yet

- Le Wagon FullstackDocument23 pagesLe Wagon FullstackClara VaninaNo ratings yet

- Lab Assignment 11313Document2 pagesLab Assignment 11313abhaybaranwalNo ratings yet

- Mandate Hub User GuideDocument53 pagesMandate Hub User GuideMuruganand RamalingamNo ratings yet

- Ship Maintenance Planning With NavCadDocument4 pagesShip Maintenance Planning With NavCadtheleepiper8830No ratings yet

- Weight-Volume Relationships: Principles of Geotechnical Engineering Eighth EditionDocument22 pagesWeight-Volume Relationships: Principles of Geotechnical Engineering Eighth EditionThony CayNo ratings yet

- ENGLISH 9 DLP For DEMODocument9 pagesENGLISH 9 DLP For DEMOMary Joy Corpuz100% (2)

- Relationship Between Nurse Case Manager's Communication Skills and Patient Satisfaction at Hospital in JakartaDocument6 pagesRelationship Between Nurse Case Manager's Communication Skills and Patient Satisfaction at Hospital in Jakartakritis ardiansyahNo ratings yet

- Devops MCQ PDFDocument6 pagesDevops MCQ PDFSaad Mohamed SaadNo ratings yet

- (Adi Kuntsman (Eds.) ) Selfie CitizenshipDocument169 pages(Adi Kuntsman (Eds.) ) Selfie CitizenshipUğur GündüzNo ratings yet

- CBME 102 REviewerDocument17 pagesCBME 102 REviewerEilen Joyce BisnarNo ratings yet

- Group Work 1957 and On: Professional Knowledge and Practice Theory Extended at Adelphi School of Social WorkDocument8 pagesGroup Work 1957 and On: Professional Knowledge and Practice Theory Extended at Adelphi School of Social WorkgheljoshNo ratings yet

- CVE 3rd PTDocument4 pagesCVE 3rd PTAlexa AustriaNo ratings yet

- DK Selling StrategiesDocument7 pagesDK Selling StrategiesMilan DzigurskiNo ratings yet

- Crown WAV60 Operator Manual - CompressedDocument39 pagesCrown WAV60 Operator Manual - CompressedHector PeñaNo ratings yet

- Flight1 DC-9 Power SettingsDocument2 pagesFlight1 DC-9 Power SettingsAlfonso MayaNo ratings yet

- Ecology and Distribution of Sea Buck Thorn in Mustang and Manang District, NepalDocument58 pagesEcology and Distribution of Sea Buck Thorn in Mustang and Manang District, NepalSantoshi ShresthaNo ratings yet

- 5th Ed Ch02 QCDocument6 pages5th Ed Ch02 QCmohanNo ratings yet

- On Annemarie Mols The Body MultipleDocument4 pagesOn Annemarie Mols The Body MultipleMAGALY GARCIA RINCONNo ratings yet

- Ch-2 - Production PlanningDocument14 pagesCh-2 - Production PlanningRidwanNo ratings yet

- 300 Compact/1233: ManualDocument22 pages300 Compact/1233: ManualbogdanNo ratings yet

- Emg ManualDocument20 pagesEmg ManualSalih AnwarNo ratings yet

- XSGlobe On Double Fold Seam En-Product DataDocument3 pagesXSGlobe On Double Fold Seam En-Product DataAdefisayo HaastrupNo ratings yet

- Pca. Inglés 6toDocument15 pagesPca. Inglés 6toJuan Carlos Amores GuevaraNo ratings yet

- Datasheet Af11Document11 pagesDatasheet Af11kian pecdasenNo ratings yet

- Video Encoding: Basic Principles: Felipe Portavales GoldsteinDocument33 pagesVideo Encoding: Basic Principles: Felipe Portavales GoldsteinPrasad GvbsNo ratings yet

- Reflection #1Document4 pagesReflection #1Jaypee Morgan100% (3)

- Lohia (Democracy)Document11 pagesLohia (Democracy)Madhu SharmaNo ratings yet

Hans Marko The Bidirectional Communication Theroy - A Generalization of Information Theory 1973

Hans Marko The Bidirectional Communication Theroy - A Generalization of Information Theory 1973

Uploaded by

jas_i_ti9Original Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Hans Marko The Bidirectional Communication Theroy - A Generalization of Information Theory 1973

Hans Marko The Bidirectional Communication Theroy - A Generalization of Information Theory 1973

Uploaded by

jas_i_ti9Copyright:

Available Formats

IEEE TRANSACTIONS ON COMMUNICATIONS, VOL. COM-21, NO. 12, DECEMBER 1973


The Bidirectional Communication Theory: A Generalization of Information Theory

Abstract: A generalization of information theory is presented with the aim of distinguishing the direction of information flow for mutually coupled statistical systems. The bidirectional communication theory refers to two systems. Two directed transinformations are defined which are a measure of the statistical coupling between the systems. Their sum equals Shannon's transinformation. An information flow diagram explains the relation between the directed transinformations and the entropies of the sources. An extension to a group of such systems has also been proposed. The theory is able to describe the informational relationships between living beings and other multivariate complex systems as encountered in economy. An application example referring to group behavior with monkeys is given.

Paper approved by the Associate Editor for Communication Theory of the IEEE Communications Society for publication after presentation at the 1968 Cybernetics Congress, Munich, Germany. Manuscript received August 28, 1972; revised June 11, 1973.

The author is with the Institut für Nachrichtentechnik, Technische Universität, Munich, Germany.

I. INTRODUCTION

In this paper the theory of bidirectional communication is discussed. This theory is an extension of Shannon's information theory: its definitions are used as far as possible. While Shannon's theory describes a unidirectional transmission linkage (transmission channel), the theory presented here is concerned with a bidirectional linkage (communication). The limiting case of a unidirectional transinformation yields Shannon's channel. The receiver for the proposed communication model is, in contrast with Shannon's channel, an active statistical generator and therefore it is not possible to distinguish it from the transmitter.

This theory resulted from the desire to describe generation and processing of information by living beings, especially humans, as well as information transmission from man to man. For this purpose the following essential characteristics are considered.

1) Information transmitter and receiver of a human being are identical; a person has a characteristic information source which is different from the information source of another person. Therefore a man supplies a stochastic process which is typical for him with an entropy which characterizes him.

2) Information transmission between two persons is considered as a stochastic synchronization of this stochastic process. The entropy of each process has therefore a free and a dependent part. The latter represents the received transinformation.

To describe this idea mathematically, the theory of Markov processes is used, and stationarity has to be assumed.

Even the philosophers of antiquity tried to describe the process of thinking by means of association and memory. Aristotle described with remarkable clarity the sequence of imagination as a statistical process with inner linkage, as we would call it nowadays, in his short essay "Memory and Recollection." According to him, recollection is characterized by the fact that movements (i.e., imagination) follow each other habitually (i.e., mostly). The present sequence is essentially determined by the sequence of the earlier process. Influenced by the mechanical systems of Galilei and Newton, the psychology of association came to a concept of a mechanistic-deterministic behavior in its search for a physics of soul. Locke and Hartley in England and Herbart in Germany attributed the process of thinking to a causally determined mechanism of association. This view has been corrected by the modern psychology of thinking, which recognized the importance of intuition. Naturally, the value of all of these theories is limited because the statements cannot be quantified. At the end of the 19th century, Galton, Ebbinghaus, and Wundt began with the experimental investigation of association processes, including association sequences. Finally, Shannon's fundamental work [1] rendered a quantitative description and therefore made a measuring of information possible.

The idea of the bidirectional communication theory was first presented by the author at the cybernetics congress at Kiel, Germany, in September 1965 [2] and in May 1966 with a lecture at the congress of the Popov Society in Moscow. An explicit representation of this theory is given in the journal Kybernetik [3], and short representations are given in [4] and [5]. Related mathematical proofs are presented in [6] and [7]. An extension to the communication of a group has been given by Neuburger [8], [9]. The bidirectional communication theory has been applied so far in the behavioral sciences [10], [11]. Other applications for statistically coupled systems, e.g., economic systems, are possible.

Two-way communication channels have been investigated earlier by Shannon and others, especially the feedback channel [12], [13] and the crosstalk channel [14]. In these investigations, however, the conventional definition of transinformation is used, and the information source is considered independent and not statistically dependent, as in the present work. The same applies for previous work with the aim to investigate multivariate correlation using informational quantities [15]-[17]. The conventional information theory is capable of giving a generalized measure of correlation, but not of distinguishing the direction of information flow. This exactly is the aim of the bidirectional communication theory.

In the following part, a short description of Shannon's channel with inner statistical linkages between the symbols is presented. Then the model of a communication is established. The last part is a mathematical representation of communication, and the variables of the directed information are defined. Information flow diagrams are presented for illustration. There it can be seen that Shannon's information channel is a special case of the more general communication theory given in this paper.

II. SHANNON'S TRANSMISSION CHANNEL

Fig. 1 shows the block diagram of unidirectional transmission according to Shannon. It consists of a message source (Q), a coding device (C) which codes the message in an appropriate way for the transmission, the transmission channel, a decoding device (D), and the receiver (R). The channel (Ch) contains a noise source (N) which represents the disturbance. It is essential for a quantitative description of information transmission. Thus the block diagram contains two statistical generators: the message source Q and the noise source N. According to the usual symbolism, the sources are represented by circles; all the other parts are passive and drawn as boxes. The receiver is passive in contrast to the bidirectional communication model. The receiver usually is considered as ideal, supposing that it can evaluate the received message in its statistical properties optimally (i.e., it is supposed to have storage of infinite size). Because of these assumptions, the transmission becomes independent of the receiver and is determined solely by the properties of the message source and the channel. To apply Shannon's formulas for the general case of a channel with memory considered here, stationarity must be assumed. A sequence of symbols $x_n = x_1 x_2 \cdots x_n$ at the transmitter and a sequence $y_n = y_1 y_2 \cdots y_n$ at the receiver is observed. Both transmitted and received sequences are supposed to have n symbols. The following probabilities are defined.

$p(x_n)$: Probability for the occurrence of the sequence $x_n$ at the transmitter.

$p(y_n)$: Probability for the occurrence of the sequence $y_n$ at the receiver.

$p(x_n y_n)$: Joint probability for the occurrence of $x_n$ and $y_n$.

$p(x_n \mid y_n)$: Conditional probability for the occurrence of $x_n$ when $y_n$ is known.

$p(y_n \mid x_n)$: Conditional probability for the occurrence of $y_n$ when $x_n$ is known.

The braces { } designate the expectation value (mean value). The entropies related to one symbol can be calculated from the probabilities as follows.

Entropy at the transmitter:

$$H(x) = \lim_{n\to\infty} \frac{1}{n} \{ -\log p(x_n) \}. \tag{1}$$

Entropy at the receiver:

$$H(y) = \lim_{n\to\infty} \frac{1}{n} \{ -\log p(y_n) \}. \tag{2}$$

Joint entropy:

$$H(x, y) = \lim_{n\to\infty} \frac{1}{n} \{ -\log p(x_n y_n) \}. \tag{3}$$

Equivocation:

$$H(x \mid y) = \lim_{n\to\infty} \frac{1}{n} \{ -\log p(x_n \mid y_n) \}. \tag{4}$$

Irrelevancy:

$$H(y \mid x) = \lim_{n\to\infty} \frac{1}{n} \{ -\log p(y_n \mid x_n) \}. \tag{5}$$

The mean transinformation between the sequence x and the sequence y, referred to one symbol pair, is found to be

$$T = H(x) + H(y) - H(x, y) = H(x) - H(x \mid y) = H(y) - H(y \mid x). \tag{6}$$

This is the fundamental law of the information theory. It can be written in those three versions because of the relation

$$p(x_n y_n) = p(x_n \mid y_n)\, p(y_n) = p(y_n \mid x_n)\, p(x_n).$$

The coding theorems of information and transinformation, not being discussed in this paper, show that a channel defined in the manner described above is actually able to transmit messages of T binary digits (bits) with an arbitrarily small error probability if the message considered is infinitely long.

Shannon's conception describes a unidirectional linkage with an active transmitter and a passive receiver. To apply this conception to a bidirectional linkage, it could be repeated for the opposite direction. This, however, would yield two independent systems where both directions are repeated entirely.

Fig. 1. Shannon's block schematic of a unidirectional communication channel.

III. THE MODEL OF A COMMUNICATION AND THE GENERATION OF INFORMATION

Fig. 2 shows the block diagram of the new conception. It is supposed to describe the communication between two persons (from here on denoted $M_1$ and $M_2$) information-theoretically. Both have as an essential part the information source $Q_1$ and $Q_2$, respectively, two statistical generators with a symbol alphabet which may be generally different (the information which they produce may be interpreted psychologically as conscious processes). Furthermore, they have, like Shannon's channel, a coding and a decoding device (neurophysiologically the decoding device corresponds to the afferent and the coding device to the efferent signal processing). $M_1$ and $M_2$ are connected by two transmission channels (corresponding to this connection is the external world, as, for example, an optical or acoustical linkage). These channels can generally, as in Shannon's case, be disturbed. However, it is not necessary to consider the disturbances isolated; their influence is contained in the statistical description of the model.

The essential characteristics of the information generation and transmission according to this conception are as follows.

1) The receiver is active and identical with the transmitter of the same side. It generates information continuously, even when both transmission channels are interrupted.

2) The transinformation transmitted during a linkage causes a stochastic synchronization of the receiver, and because of this it influences its information.

Fig. 3 shows for $M_1$ and $M_2$ the stochastic processes and the statistical coupling, which is shown by the dashed lines. The choice of the present message elements (symbols) is dependent on the past symbols of its own process and the past symbols of the other process. Influencing at the same time does not take place; with this, causality has been taken into account.

The following definitions are valid for Markov processes with decreasing statistical linkages; however, it has to be mentioned that all variables defined exist in a more general representation for which only stationarity is required. The entropy of the two processes is denoted $H_1$ and $H_2$. The directed mean transinformation is denoted $T_{12}$ for the direction $M_2 \to M_1$ and $T_{21}$ for $M_1 \to M_2$ (the first index refers to the receiver, the second to the transmitter).

Three cases can be distinguished.

1) Decoupling, $M_1 \mid M_2$: The linkage is interrupted in both directions. Therefore, $T_{12} = 0$ and $T_{21} = 0$. The two stochastic processes are independent of one another.

2) Monologue, $M_1 \to M_2$ or $M_2 \to M_1$: The linkage is interrupted only in one direction, for instance, the lower one of Fig. 2. Then $M_2$ is the transmitter and $M_1$ is the receiver. Now the process of $M_2$ is not influenced; the process of $M_1$ is stochastically synchronized. Consequently $T_{21} = 0$ and $T_{12}$ exists.

3) Dialogue, $M_1 \rightleftarrows M_2$: The linkage exists in both directions, and therefore the two processes influence each other. $T_{12}$ as well as $T_{21}$ exist. Generally, ...
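The three cases can be illustrated with a small simulation. The following is a minimal sketch under stated assumptions, not the author's model: the binary alphabet, the function name `simulate`, and the coupling parameters `c12` and `c21` are hypothetical. Each process either copies the other's previous symbol (coupling) or emits a fair random bit (free generation). Both updates use only past symbols, so no simultaneous influence occurs.

```python
import random


def simulate(n_steps, c12, c21, seed=0):
    """Simulate two coupled binary processes M1 (x) and M2 (y).

    c12: probability that M1 copies the previous symbol of M2 (M2 -> M1).
    c21: probability that M2 copies the previous symbol of M1 (M1 -> M2).
    Otherwise the process emits a fair random bit of its own.
    """
    rng = random.Random(seed)
    x = [rng.randint(0, 1)]
    y = [rng.randint(0, 1)]
    for _ in range(n_steps - 1):
        # Read both past symbols first: the new symbols depend on the past only.
        x_prev, y_prev = x[-1], y[-1]
        x_new = y_prev if rng.random() < c12 else rng.randint(0, 1)
        y_new = x_prev if rng.random() < c21 else rng.randint(0, 1)
        x.append(x_new)
        y.append(y_new)
    return x, y


# Decoupling: no influence in either direction.
x_dec, y_dec = simulate(1000, c12=0.0, c21=0.0)
# Monologue M2 -> M1: only M1 is stochastically synchronized.
x_mon, y_mon = simulate(1000, c12=0.8, c21=0.0)
# Dialogue: both processes influence each other.
x_dia, y_dia = simulate(1000, c12=0.5, c21=0.5)
```

In this toy setting, `c12 = c21 = 0` corresponds to decoupling, exactly one nonzero parameter to monologue, and two nonzero parameters to dialogue.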

Fig. 3. Time run of the stochastic processes of $M_1$ and $M_2$ and their statistical interdependence.

The last case (dialogue) is the most general: the first two cases are contained in it as special cases. The definitions of the following part therefore refer to this general case.

IV. MATHEMATICAL DESCRIPTION OF COMMUNICATION, DEFINITION OF THE DIRECTED TRANSINFORMATION AND THE FREE INFORMATION

The following conditional probabilities are to be defined: x is a symbol of $M_1$, and y is a symbol of $M_2$ at the same time.

$p(x \mid x_n)$: Conditional probability for the occurrence of x when n previous symbols $x_n$ of the own process are known.

$p(y \mid y_n)$: Similarly.

$p(x \mid x_n y_n)$: Conditional probability for the occurrence of x when n previous symbols of the own process $x_n$ as well as of the other process $y_n$ are known.

$p(y \mid y_n x_n)$: Similarly.

$p(xy \mid x_n y_n)$: Conditional probability for the occurrence of x and y when n previous symbols of both processes are known.

The equations

$$p(x \mid x_n y_n) = p(x \mid x_m y_m)$$

$$p(y \mid y_n x_n) = p(y \mid y_m x_m)$$

are valid for Markov processes of the order m when $n \ge m$. The transition probabilities $p(x \mid x_m y_m)$ and $p(y \mid y_m x_m)$ determine the two stochastic processes completely; therefore they can be designated as generator probabilities.

The symbol { } represents expectation values. The following equations represent mean information values per symbol (entropy), according to the following definitions.

Total information of $M_1$:

$$H_1 = \lim_{n\to\infty} \{ -\log p(x \mid x_n) \}. \tag{7}$$

Free information of $M_1$:

$$F_1 = \lim_{n\to\infty} \{ -\log p(x \mid x_n y_n) \}. \tag{8}$$

Directed transinformation $M_2 \to M_1$:

$$T_{12} = \lim_{n\to\infty} \left\{ \log \frac{p(x \mid x_n y_n)}{p(x \mid x_n)} \right\}. \tag{9}$$

Total information of $M_2$:

$$H_2 = \lim_{n\to\infty} \{ -\log p(y \mid y_n) \}. \tag{10}$$

Free information of $M_2$:

$$F_2 = \lim_{n\to\infty} \{ -\log p(y \mid y_n x_n) \}. \tag{11}$$

Directed transinformation $M_1 \to M_2$:

$$T_{21} = \lim_{n\to\infty} \left\{ \log \frac{p(y \mid y_n x_n)}{p(y \mid y_n)} \right\}. \tag{12}$$

Coincidence $M_1 \leftrightarrow M_2$:

$$K = \lim_{n\to\infty} \left\{ \log \frac{p(xy \mid x_n y_n)}{p(x \mid x_n)\, p(y \mid y_n)} \right\}. \tag{13}$$

According to these definitions, the total information is equal to the usual entropy of the single process. It can be shown, for example in [6], that (7) and (1) correspond; the same is valid for (10) and (2). The free information is the entropy of one process with knowledge of the other. Numerically it is naturally smaller than the entropy without this knowledge. The difference of entropy $T_{12} = H_1 - F_1$ designates the statistical influence of the second process on the first process and is defined as the directed transinformation. Furthermore, it can be shown [6] that the coincidence according to (13) agrees with Shannon's transinformation equation (6):

$$K = T = H(x) + H(y) - H(x, y)$$

with the assumption, as before, of decreasing statistical linkages. It could be called undirected or total transinformation. It couples the two processes in a symmetrical manner; therefore it does not have a special direction. The following important relation for the coincidence is valid:

$$K = T_{12} + T_{21}. \tag{14}$$

For the case monologue, one of the two transinformations vanishes; therefore the other transinformation is equal to the coincidence and to Shannon's transinformation. With this, it is proven that Shannon's channel represents a special case of the bidirectional communication.

All the variables defined above are positive. The directed transinformation represents the information gain for the next own produced symbol due to the received message. The total information is the entropy of the own process; it has one part which is due to the received transinformation, and another part, the free information; this can be shown from the definitions since

$$H_1 = T_{12} + F_1 \tag{15}$$

$$H_2 = T_{21} + F_2. \tag{16}$$

Finally, the following conditional entropies, called residual entropies, are introduced:

$$R_1 = H_1 - K = F_1 - T_{21} \tag{17}$$

$$R_2 = H_2 - K = F_2 - T_{12}. \tag{18}$$

Then the above relations can be represented clearly by the information flow diagram of Fig. 4. The two stochastical generators of $M_1$ and $M_2$ generate the free information. At the nodes, (15)-(18) are valid as Kirchhoff laws.
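The definitions of total information, free information, directed transinformation, and coincidence can be checked numerically on a small example. The sketch below is an illustration only and rests on assumptions that are not part of the paper: a hypothetical binary toy model in which $M_1$ copies the previous symbol of $M_2$ with probability `c12` and $M_2$ copies the previous symbol of $M_1$ with probability `c21` (otherwise a fair bit is emitted), and a finite memory of `n` past symbols in place of the limit. All function names are made up for this example.

```python
import itertools
import math
from collections import defaultdict


def entropy(dist):
    """Shannon entropy in bits of a {outcome: probability} mapping."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def cond_entropy(dist, target, given):
    """H(target | given) = H(target, given) - H(given)."""
    joint, cond = defaultdict(float), defaultdict(float)
    for seq, p in dist.items():
        joint[(target(seq), given(seq))] += p
        cond[given(seq)] += p
    return entropy(joint) - entropy(cond)


def information_flows(c12, c21, n=3):
    """Finite-memory values of H, F, T, K, R for the hypothetical toy model."""
    def p1(x, y_prev):  # generator probability of M1 (assumed form)
        return c12 * (x == y_prev) + (1 - c12) * 0.5

    def p2(y, x_prev):  # generator probability of M2 (assumed form)
        return c21 * (y == x_prev) + (1 - c21) * 0.5

    states = list(itertools.product((0, 1), repeat=2))
    # Joint distribution of n + 1 consecutive symbol pairs (x, y). The uniform
    # start distribution is stationary for this particular toy model.
    dist = {}
    for seq in itertools.product(states, repeat=n + 1):
        p = 1.0 / len(states)
        for (x_prev, y_prev), (x, y) in zip(seq, seq[1:]):
            p *= p1(x, y_prev) * p2(y, x_prev)
        dist[seq] = p

    x_now = lambda s: s[-1][0]
    y_now = lambda s: s[-1][1]
    x_past = lambda s: tuple(v[0] for v in s[:-1])
    y_past = lambda s: tuple(v[1] for v in s[:-1])
    both_past = lambda s: s[:-1]

    H1 = cond_entropy(dist, x_now, x_past)     # total information of M1
    F1 = cond_entropy(dist, x_now, both_past)  # free information of M1
    H2 = cond_entropy(dist, y_now, y_past)     # total information of M2
    F2 = cond_entropy(dist, y_now, both_past)  # free information of M2
    T12, T21 = H1 - F1, H2 - F2                # directed transinformations
    # Coincidence from its own definition, not as the sum T12 + T21.
    K = H1 + H2 - cond_entropy(dist, lambda s: s[-1], both_past)
    return {"H1": H1, "F1": F1, "H2": H2, "F2": F2,
            "T12": T12, "T21": T21, "K": K, "R1": H1 - K, "R2": H2 - K}


flows = information_flows(c12=0.5, c21=0.5)
```

For any choice of `c12` and `c21` in this toy model, `flows["K"]` should agree with `flows["T12"] + flows["T21"]` up to rounding, and `flows["R1"]` with `flows["F1"] - flows["T21"]`. Setting `c21 = 0` gives the monologue case, in which `T21` vanishes and `K` reduces to `T12`. Because the memory is truncated at `n` symbols, `H1` and `H2` are approximations of the limits in the definitions.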


Fig. 4. Information flow diagram with a bidirectional communication.

Fig. 4 shows, for example, that the total information $H_1$ is composed of the free generated information $F_1$ and the received transinformation $T_{12}$. In the transmission direction $T_{12}$ has to be subtracted, for it is already known by the counterpart. $F_1$ is then transmitted, but due to the absence of a strong coupling, the residual entropy $R_1$ is subtracted. This may be interpreted as the effect of disturbances, if desired. Finally, $T_{21}$ reaches the counterpart and, together with its free generated information $F_2$, yields its total information $H_2$. For the opposite direction, the same reasoning holds.

Fig. 5 represents the special case of monologue $M_2 \to M_1$. It corresponds to Shannon's unidirectional transmission channel because $T_{21} = 0$. Here $R_2$ represents the equivocation and $R_1 = F_1$ the irrelevancy.

Fig. 6 shows finally the information flow diagram for the special case of decoupling.

Now the laws which the information flows obey are considered. From Fig. 4 it follows that the sum of the arriving currents has to be equal to the sum of the leaving currents:

$$F_1 + F_2 = R_1 + R_2 + K = H_1 + H_2 - K. \tag{19}$$
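The balance (19) can be verified in two lines. The following is a short sketch of the algebra, assuming only the node relations (15)-(18) and the coincidence written as the sum of the two directed transinformations, $K = T_{12} + T_{21}$:

$$
\begin{aligned}
F_1 + F_2 &= (H_1 - T_{12}) + (H_2 - T_{21}) = H_1 + H_2 - K, \\
R_1 + R_2 + K &= (H_1 - K) + (H_2 - K) + K = H_1 + H_2 - K.
\end{aligned}
$$

Both sides therefore reduce to the same expression, which is the content of (19).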

From this we get an important statement. The larger the coincidence, that is, the total transmitted transinformation in both directions, the smaller is (for given entropies $H_1$ and $H_2$) the sum of the free informations. An effective communication limits the sum of the free generated information, which seems to be logical because of the strong coupling of the two processes in this case. There is another limitation for the magnitude of the transinformation flows which results from the identity of the total information defined here with Shannon's entropy. The following inequalities are valid, since Shannon's transinformation, here the coincidence, is never larger than $H(x)$ or $H(y)$:

$$H_1 \ge K \tag{20}$$

$$H_2 \ge K. \tag{21}$$

Inserting this in (15)-(18) yields

$$T_{12} \le F_2 \tag{22}$$

$$T_{21} \le F_1. \tag{23}$$

With this the directed transinformations are limited. Especially clear are (22) and (23). They state that the received transinformation at $M_1$ cannot be larger than the free generated information of $M_2$. It is not possible, for example, that the transinformation generated by $M_1$ can be reflected and retransmitted by $M_2$ because $M_1$ knows this information already. Because of this limitation, no loop current can develop in the loop of Fig. 4. The two transinformations as well as the coincidence become maximum if the inequalities become equations. This case is called maximum coupling. If the relations

$$T_{12} = F_2 \tag{26}$$

$$T_{21} = F_1 \tag{27}$$

exist, then the information flow diagram of Fig. 7 is valid. It can be seen that the free information of the opposite end appears as transinformation; that means it is accepted entirely. Both residual entropies vanish. The total information in both cases has the same magnitude and is composed of the sum of the two free informations.

Fig. 5. Information flow diagram with a monologue $M_2 \to M_1$.

Fig. 6. Information flow diagram with decoupling.

Fig. 7. Information flow diagram with maximum coupling.

It seems to be meaningful to define a stochastical degree of synchronization or, psychologically, a degree of perception, which is given by the received transinformation referred to the total information:

$$u_1 = \frac{T_{12}}{H_1}, \qquad u_2 = \frac{T_{21}}{H_2}.$$

$u_1$ as well as $u_2$ can vary between 0 and 1. The case $u_1 = 1$ is called suggestion. The free information at the receiver vanishes, and the total information is only determined by the received transinformation. It can be shown that either $u_1 = 1$ or $u_2 = 1$ can occur, but not both at the same time; i.e., a suggestion in both directions at the same time is impossible. For the sum of the two degrees of synchronization the following holds generally:

$$u_1 + u_2 = \frac{T_{12}}{T_{12} + F_1} + \frac{T_{21}}{T_{21} + F_2} \le \frac{T_{12}}{T_{12} + T_{21}} + \frac{T_{21}}{T_{21} + T_{12}} = 1. \tag{30}$$

It may be noted that the minimum values for $F_1$ and $F_2$ are introduced in this equation according to (22) and (23). The equality sign holds for the case of maximum coupling, according to (26) and (27). This means that $u_1 + u_2 = 1$ for maximum coupling. Given this case, $u_1 = 1$ corresponds to the case of suggestion $M_2 \to M_1$, and $u_2 = 1$ to the case of suggestion $M_1 \to M_2$.

Fig. 8. Possible values of the degrees of synchronization $u_1$ and $u_2$.

Fig. 9. Information flow diagram for bidirectional communication as group behavior between two monkeys. (All figures given in bit/action.) [Panels: "dictator" behaviour and "hero" behaviour, each between a dominant and a subdominant animal.]
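The special cases named in the text can be told apart numerically from the two degrees of synchronization. The following is a minimal sketch, assuming the degrees are the ratios of received transinformation to total information, as described above; the function names, the tolerance `tol`, the label for the general case, and the sample numbers are illustrative assumptions.

```python
def degrees_of_synchronization(F1, F2, T12, T21):
    """Return (u1, u2): received transinformation divided by total information."""
    if min(F1, F2, T12, T21) < 0:
        raise ValueError("all flows must be non-negative")
    if T12 > F2 or T21 > F1:
        # limits on the directed transinformations, cf. (26) and (27) for equality
        raise ValueError("requires T12 <= F2 and T21 <= F1")
    H1 = T12 + F1  # total information of M1, relation (15)
    H2 = T21 + F2  # total information of M2, relation (16)
    u1 = T12 / H1 if H1 > 0 else 0.0
    u2 = T21 / H2 if H2 > 0 else 0.0
    return u1, u2


def classify(u1, u2, tol=1e-12):
    """Name the special case for a point (u1, u2) of the triangle u1 + u2 <= 1."""
    if u1 <= tol and u2 <= tol:
        return "decoupling"        # origin
    if abs(u1 - 1.0) <= tol or abs(u2 - 1.0) <= tol:
        return "suggestion"        # corner points (1, 0) and (0, 1)
    if u1 <= tol or u2 <= tol:
        return "monologue"         # one transinformation vanishes
    if abs(u1 + u2 - 1.0) <= tol:
        return "maximum coupling"  # equality in (30)
    return "general bidirectional case"


# Illustrative flows (F1, F2, T12, T21); the numbers are not from the paper.
examples = {
    "general": (1.0, 0.75, 0.5, 0.25),
    "maximum": (0.5, 1.5, 1.5, 0.5),
    "suggest": (0.0, 1.0, 1.0, 0.0),
    "mono": (0.5, 1.0, 0.5, 0.0),
    "decoupled": (1.0, 1.0, 0.0, 0.0),
}
for name, flows in examples.items():
    u1, u2 = degrees_of_synchronization(*flows)
    print(name, round(u1, 3), round(u2, 3), classify(u1, u2))
```

The order of the tests in `classify` matters: the suggestion points also lie on the boundary lines of the triangle, so they are checked before the monologue and maximum-coupling cases.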

Fig. 8 shows the possible values of $u_1$ and $u_2$ according to (30). They are situated within a right triangle. The hypotenuse represents the case of maximum coupling. The two legs correspond to the monologue ($u_1 = 0$ or $u_2 = 0$, respectively) and therefore to Shannon's unidirectional channel. Two corner points describe the suggestion, and the origin means decoupling. The diagram contains all special cases, and shows in which manner the bidirectional communication is a generalization of Shannon's information theory.

With the definitions suggested in this paper, it seems to be possible to give a quantitative description of the communication between human beings in terms of a communication theory. The mathematical model requires the existence of two (or more) statistical processes, and describes their mutual coupling by means of a stochastical synchronization.

V. APPLICATIONS

The bidirectional communication theory has been applied to the social behavior of monkeys [11]. Two monkeys of a social group have been put together in one cage and their actions have been observed and registered for a period of 15 min. Five typical behavioral activities (seating, leaving, genital display, churr call [expressing aggressive motivation], pushing) formed two statistical time sequences to which the bidirectional communication theory has been applied. The results were typical for the social relation and agreed for each selected pair of two individuals. Two already known modifications of the dominant behavior showed typical values with regard to the communication quantities (see Fig. 9).

1) The behavior of "dictator," in which the free action as expressed by the free entropy of the dominant animal is greater as compared with the subdominant one. The major behavioral influence as expressed by the directed transinformation goes from the dominant to the subdominant animal.

2) The behavior of "hero," in which the free actions of both animals are about equal. Most of the behavioral influence goes in the opposite direction, namely, from the subdominant to the dominant animal.

The "dictator" modification is unstable. It occurred immediately after the establishment of the dominant relationship, and changed continuously in about 6 weeks' time into the "hero" modification, which proved to be the stable one.

The observations and evaluations have been performed as a cooperation between the Institut für Nachrichtentechnik of the Technische Hochschule, München, and Deutsche Forschungsanstalt für Psychiatrie, München, by W. Mayer.

In biology and sociology, communication theory seems to have wide applications due to the fact that living beings are (by their actions) sources of information which influence each other in all possible directions.

In order to avoid misunderstanding, it has to be mentioned that the present theory is just able to describe the communication between two persons or between two living beings only from an information theoretical point of view. The limited importance of this view results from the definition of the information based on the probability of the message. Furthermore, this conception is only a first approximation of this problem because stationarity is assumed, therefore excluding learning processes. An extension to the nonstationary, respectively, the quasi-stationary case considering learning and forgetting, seems to be possible and meaningful. Furthermore, an extension of this theory to communication relations within a group has been done in [9]. Using this, the investigation of multivariate systems, i.e., socioeconomical systems, seems to be possible in a similar way. The ability of distinguishing the direction of information flow with this theory may prove to be a useful tool for examining multivariate complex systems.
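To indicate how such quantities might be obtained from two registered action sequences, the following is a minimal sketch of a plug-in estimate. It is an assumption-laden illustration and not the evaluation procedure of [11]: the limit over the past is replaced by a finite memory `m`, probabilities are replaced by relative frequencies, and the function names and the toy sequences are hypothetical. For short sequences such estimates are biased.

```python
from collections import Counter
from math import log2


def conditional_entropy(samples):
    """Plug-in estimate of H(A | B) in bits from (b, a) samples."""
    samples = list(samples)
    n = len(samples)
    joint = Counter(samples)                 # counts of (context, symbol)
    context = Counter(b for b, _ in samples) # counts of the context alone
    return -sum(c / n * log2(c / context[b]) for (b, _), c in joint.items())


def directed_quantities(x, y, m=1):
    """Estimate H, F and T for both processes using the last m symbols as the past."""
    if len(x) != len(y):
        raise ValueError("both sequences must have the same length")
    if len(x) <= m:
        raise ValueError("sequences must be longer than the memory m")
    steps = range(m, len(x))
    past_x = [tuple(x[t - m:t]) for t in steps]
    past_y = [tuple(y[t - m:t]) for t in steps]
    both = list(zip(past_x, past_y))         # joint past of both processes
    H1 = conditional_entropy(zip(past_x, x[m:]))  # own past only
    F1 = conditional_entropy(zip(both, x[m:]))    # own and received past
    H2 = conditional_entropy(zip(past_y, y[m:]))
    F2 = conditional_entropy(zip(both, y[m:]))
    return {"H1": H1, "F1": F1, "T12": H1 - F1,
            "H2": H2, "F2": F2, "T21": H2 - F2}


# Toy data: x repeats y with a delay of one step, so x is fully driven by y.
y = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 0]
x = [0] + y[:-1]
q = directed_quantities(x, y, m=1)
print({name: round(value, 3) for name, value in q.items()})
```

In the toy data the next symbol of `x` is fixed by the previous symbol of `y`, so the estimated free information of the first process vanishes and its whole entropy appears as directed transinformation received from the second process.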

ACKNOWLEDGMENT

The author wishes to thank Dr. Neuburger, who helped with many fruitful discussions and mathematical proofs, and who extended the theory to the multidirectional case.

REFERENCES

[1] C. E. Shannon, "A mathematical theory of communication," Bell Syst. Tech. J., vol. 27, pp. 379-423, 623-652, 1948.

[2] H. Marko, "Informationstheorie und Kybernetik. Fortschr. d. Kybernetik," in Bericht über die Tagung Kiel 1965 der Deutschen Arbeitsgemeinschaft Kybernetik. Munich, Germany: Oldenbourg-Verlag, 1967, pp. 9-28.

[3] -, "Die Theorie der bidirektionalen Kommunikation und ihre Anwendung auf die Informationsübermittlung zwischen Menschen (subjective information)," Kybernetik, vol. 3, pp. 128-136, 1966.

[4] -, "Information theory and cybernetics," IEEE Spectrum, pp. 75-83, 1967.

[5] H. Marko and E. Neuburger, "A short review of the theory of bidirectional communication," Nachrichtentech. Z., vol. 6, pp. 320-323, 1970.

[6] -, "Über gerichtete Größen in der Informationstheorie. Untersuchungen zur Theorie der bidirektionalen Kommunikation," Arch. Elek. Übertragung, vol. 21, no. 2, pp. 61-69, 1967.

[7] E. Neuburger, "Zwei Fundamentalgesetze der bidirektionalen Kommunikation," Arch. Elek. Übertragung, no. 5, pp. 208-214, 1970.

[8] -, "Beschreibung der gegenseitigen Abhängigkeit von Signalfolgen durch gerichtete Informationsgrößen," Nachrichtentech. Fachberichte, vol. 33, pp. 49-59, 1967.

[9] -, Kommunikation der Gruppe (Ein Beitrag zur Informationstheorie). Munich, Germany: Oldenbourg-Verlag, 1970.

[10] G. Hauske and E. Neuburger, "Gerichtete Informationsgrößen zur Analyse gekoppelter Verhaltensweisen," Kybernetik, vol. 4, pp. 171-181, 1968.

[11] W. Mayer, "Gruppenverhalten von Totenkopfaffen unter besonderer Berücksichtigung der Kommunikationstheorie," Kybernetik, vol. 8, pp. 59-68, 1970.

[12] C. E. Shannon, "The zero-error capacity of a noisy channel," IRE Trans. Inform. Theory, vol. IT-2, pp. 8-19, Sept. 1956.

[13] J. P. M. Schalkwijk, "Recent developments in feedback communication," Proc. IEEE, vol. 57, pp. 1242-1249, July 1969.

[14] C. E. Shannon, "Two way communication channels," in Proc. 4th Berkeley Symp. Math. Stat. and Prob., vol. 1. Berkeley: Univ. California Press, pp. 611-644.

[15] W. J. McGill, "Multivariate information transmission," Psychometrica, vol. 19, pp. 97-116, 1954.

[16] W. R. Ashby, "Information flow within coordinated systems," Publ. 203, Int. Congr. Cybern., John Rose, Ed. London: 1969, pp. 57-64.

[17] S. Watanabe, "Information theoretical analysis of multivariate correlation," IBM J., pp. 66-82, 1960.
