
K-nearest neighbours

Javier Béjar (CC BY-NC-SA)

LSI - FIB

Term 2012/2013

Outline

Non Parametric Learning

K-nearest neighbours

K-nn Algorithm

K-nn Regression

Advantages and drawbacks

Application

Non Parametric Learning

Parametric vs Non parametric Models

In the models that we have seen so far, we select a hypothesis space and adjust a fixed set of parameters using the training data (h(x))

We assume that the parameters summarize the training data, so we can discard the data after training

These methods are called parametric models

When we have a small amount of data, it makes sense to have a small set of parameters and to constrain the complexity of the model (avoiding overfitting)

When we have a large quantity of data, overfitting is less of an issue

If the data show that the hypothesis has to be complex, we can try to adjust to that complexity

A non-parametric model is one that cannot be characterized by a fixed set of parameters

One family of non-parametric models is Instance Based Learning

Instance Based Learning

Instance based learning is based on memorizing the dataset

The number of parameters is unbounded and grows with the size of the data

There is no model associated with the learned concepts

The classification is obtained by looking into the memorized examples

The cost of the learning process is 0; all the cost is in the computation of the prediction

This kind of learning is also known as lazy learning

K-nearest neighbours

K-nearest neighbours uses the local neighbourhood to obtain a prediction

The K memorized examples most similar to the one being classified are retrieved

A distance function is needed to compare the similarity of the examples

Euclidean distance: $d(x_j, x_k) = \sqrt{\sum_i (x_{j,i} - x_{k,i})^2}$

Manhattan distance: $d(x_j, x_k) = \sum_i |x_{j,i} - x_{k,i}|$

This means that if we change the distance function, we change how examples are classified
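As an illustration of the last point, here is a minimal Python sketch of the two distances. The function names and the three sample points are made up for this example. With these points, the nearest neighbour of the query changes when the distance function changes.

```python
import math

def euclidean(xj, xk):
    """Euclidean distance: square root of the summed squared differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(xj, xk)))

def manhattan(xj, xk):
    """Manhattan distance: sum of the absolute differences."""
    return sum(abs(a - b) for a, b in zip(xj, xk))

# Hypothetical points chosen so that the two distances disagree
query = (0.0, 0.0)
a = (3.0, 3.0)  # lies on the diagonal
b = (0.0, 5.0)  # lies on one axis

# Euclidean: a is at sqrt(18) (about 4.24), b is at 5.0, so a is nearer
nearest_euclidean = min([a, b], key=lambda x: euclidean(query, x))
# Manhattan: a is at 6.0, b is at 5.0, so b is nearer
nearest_manhattan = min([a, b], key=lambda x: manhattan(query, x))
```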

[Figure: K-nearest neighbours - hypothesis space (1 neighbour)]

K-nn Algorithm

K-nearest neighbours - Algorithm

Training: store all the examples

Prediction: $h(x_{new})$

Let $x_1, \ldots, x_k$ be the k examples most similar to $x_{new}$

$h(x_{new})$ = combine_predictions($x_1, \ldots, x_k$)

The parameters of the algorithm are the number k of neighbours and the procedure for combining the predictions of the k examples

The value of k has to be adjusted (cross-validation)

We can overfit (k too low)

We can underfit (k too high)
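The algorithm above can be sketched in a few lines of Python. This is only an illustration: it assumes Euclidean distance and a majority vote as the combination procedure, and the name `knn_classify` and the toy data are invented for the example. The data contain one noisy example so that the effect of k is visible.

```python
import math
from collections import Counter

def knn_classify(train, x_new, k):
    """train is a list of (features, label) pairs (the stored examples).

    Returns the majority label among the k examples closest to x_new,
    using Euclidean distance (an assumption of this sketch)."""
    neighbours = sorted(train, key=lambda ex: math.dist(ex[0], x_new))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

# "Training" is just storing the examples
train = [((1.0, 1.0), "a"), ((1.2, 0.8), "a"), ((0.9, 1.1), "a"),
         ((4.0, 4.0), "b"), ((4.2, 3.9), "b"), ((3.8, 4.1), "b"),
         ((1.1, 1.0), "b")]  # a noisy "b" inside the "a" cluster

x_new = (1.1, 1.01)
label_k1 = knn_classify(train, x_new, 1)  # "b": follows the noisy example
label_k3 = knn_classify(train, x_new, 3)  # "a": the surrounding class
label_k7 = knn_classify(train, x_new, 7)  # "b": global majority of the data
```

With k = 1 the prediction follows the single noisy example (overfitting), while with k equal to the dataset size it ignores locality and returns the overall majority class (underfitting).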

[Figure: K-nearest neighbours - Prediction]

Looking for neighbours

Looking for the K nearest examples to a new example can be expensive

The straightforward algorithm has a cost of O(n log(k)) per query, which is not good if the dataset is large

We can use indexing with k-d trees (multidimensional binary search trees)

They are good only if we have around $2^{dim}$ examples, so not good for high dimensionality

We can use locality sensitive hashing (approximate k-nn)

Examples are inserted in multiple hash tables that use hash functions that, with high probability, put examples that are close together in the same bin

We retrieve from all the hash tables the examples that are in the bin of the query example

We compute the k-nn only with these examples
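One way to obtain the O(n log(k)) cost of the straightforward search is to scan all n examples while keeping the k best candidates in a heap. The sketch below assumes Euclidean distance and uses Python's `heapq` module. The function name `k_nearest` is invented for the example.

```python
import heapq
import math

def k_nearest(examples, query, k):
    """Return the k examples closest to query, nearest first.

    A heap of size k holds the best candidates seen so far. heapq is a
    min-heap, so distances are negated to keep the farthest candidate on
    top. Each of the n examples costs at most O(log k)."""
    heap = []  # entries are (-distance, index)
    for i, x in enumerate(examples):
        d = math.dist(x, query)
        if len(heap) < k:
            heapq.heappush(heap, (-d, i))
        elif d < -heap[0][0]:
            # Closer than the current farthest candidate: replace it
            heapq.heapreplace(heap, (-d, i))
    # Largest negated distance first, that is, nearest first
    return [examples[i] for _, i in sorted(heap, reverse=True)]

examples = [(0.0, 0.0), (5.0, 5.0), (1.0, 1.0), (9.0, 9.0), (2.0, 2.0)]
nearest_two = k_nearest(examples, (0.4, 0.4), 2)
```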

K-nearest neighbours - Variants

There are different possibilities for computing the class from the k nearest neighbours

Majority vote

Distance weighted vote

Inverse of the distance

Inverse of the square of the distance

Kernel functions (gaussian kernel, tricube kernel, ...)

Once we use weights for the prediction we can relax the constraint of using only k neighbours

1. We can use k examples (local model)

2. We can use all examples (global model)
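The voting variants above can be sketched as interchangeable weight functions. Everything named here is an assumption made for the illustration: the function names, the small `eps` constant that avoids division by zero, the kernel width of 1.0 and the one-dimensional toy data. Passing `k=None` uses all the examples (global model).

```python
import math
from collections import defaultdict

def uniform(d):
    return 1.0  # plain majority vote: every neighbour counts the same

def inverse(d, eps=1e-9):
    return 1.0 / (d + eps)  # inverse of the distance

def inverse_square(d, eps=1e-9):
    return 1.0 / (d * d + eps)  # inverse of the square of the distance

def gaussian(d, width=1.0):
    return math.exp(-(d * d) / (2.0 * width * width))  # gaussian kernel

def weighted_vote(train, x_new, weight, k=None):
    """Sum the weights per class over the k nearest examples.

    With k=None every stored example votes (global model)."""
    ranked = sorted(train, key=lambda ex: math.dist(ex[0], x_new))
    if k is not None:
        ranked = ranked[:k]
    scores = defaultdict(float)
    for features, label in ranked:
        scores[label] += weight(math.dist(features, x_new))
    return max(scores, key=scores.get)

train = [((0.0,), "a"), ((3.0,), "b"), ((3.5,), "b")]
x_new = (1.0,)

by_majority = weighted_vote(train, x_new, uniform, k=3)   # two "b" votes win
by_inverse = weighted_vote(train, x_new, inverse, k=3)    # the close "a" wins
by_gaussian_global = weighted_vote(train, x_new, gaussian)  # all examples vote
```

In this toy case the single close neighbour outweighs the two farther ones as soon as the vote is weighted by distance.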

K-nn Regression

K-nearest neighbours - Regression

We can extend this method from classification to regression

Instead of combining the discrete predictions of the k neighbours, we have to combine continuous predictions

These predictions can be obtained in different ways:

Simple interpolation

Averaging

Local linear regression

Local weighted regression

The time complexity of the prediction will depend on the method
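As a minimal sketch of the simplest of these options, averaging, the prediction is the mean target value of the k nearest examples. The name `knn_regress` and the toy data are invented for the example, and Euclidean distance is assumed.

```python
import math

def knn_regress(train, x_new, k):
    """train is a list of (features, target) pairs.

    Returns the mean target of the k examples closest to x_new."""
    neighbours = sorted(train, key=lambda ex: math.dist(ex[0], x_new))[:k]
    return sum(y for _, y in neighbours) / len(neighbours)

train = [((1.0,), 2.0), ((2.0,), 4.0), ((3.0,), 6.0),
         ((4.0,), 8.0), ((5.0,), 10.0)]

# The two nearest examples to 2.6 are x=3 (y=6) and x=2 (y=4)
prediction = knn_regress(train, (2.6,), k=2)  # mean of 6.0 and 4.0 is 5.0
```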

[Figure: two panels, "Simple interpolation" and "K-nn averaging"]
K-nearest neighbours - Regression (linear)

K-nn linear regression fits the best line through the neighbours

A linear regression problem has to be solved for each query (least squares regression)
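For a single input attribute, the per-query least squares problem has a simple closed form, which the following sketch uses. The restriction to scalar inputs, the function name and the toy data (samples of y = x²) are assumptions made to keep the example short.

```python
def local_linear_predict(train, x_new, k):
    """train is a list of (x, y) pairs with scalar x.

    Fits y = a + b*x to the k nearest examples by ordinary least squares
    and evaluates the fitted line at x_new."""
    neighbours = sorted(train, key=lambda p: abs(p[0] - x_new))[:k]
    n = len(neighbours)
    mean_x = sum(x for x, _ in neighbours) / n
    mean_y = sum(y for _, y in neighbours) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in neighbours)
    if sxx == 0.0:
        return mean_y  # all neighbours share one x: fall back to the mean
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in neighbours)
    slope = sxy / sxx
    return mean_y + slope * (x_new - mean_x)

# Samples of the curve y = x^2; its true value at 2.5 is 6.25
train = [(x, x * x) for x in (0.0, 1.0, 2.0, 3.0, 4.0, 5.0)]

local_fit = local_linear_predict(train, 2.5, k=2)   # line through (2,4), (3,9)
global_fit = local_linear_predict(train, 2.5, k=6)  # one line for all the data
```

Here the local line predicts 6.5, while a single line fitted to all six examples predicts about 9.17, much farther from the true value.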

K-nearest neighbours - Regression (LWR)

Local weighted regression uses a kernel function to weight the contribution of the neighbours depending on their distance

[Figure: plot of a kernel function]

Kernel functions have a width parameter that determines the decay of the weight (it has to be adjusted)

Too narrow ⇒ overfitting

Too wide ⇒ underfitting

A weighted linear regression problem has to be solved for each query (gradient descent search)
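The following sketch illustrates locally weighted regression with a gaussian kernel for a single input attribute. Note one substitution: the weighted problem is solved here with the closed-form weighted least squares solution for a line rather than with gradient descent, which is practical only because the input is scalar. The function names, the kernel and the toy data are assumptions of the example.

```python
import math

def gaussian_kernel(d, width):
    """Weight that decays with distance d; width controls how fast."""
    return math.exp(-(d * d) / (2.0 * width * width))

def lwr_predict(train, x_new, width):
    """train is a list of (x, y) pairs with scalar x.

    Every example is weighted by its kernel value, then a line is fitted
    by weighted least squares and evaluated at x_new."""
    weights = [gaussian_kernel(abs(x - x_new), width) for x, _ in train]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("kernel too narrow: all weights underflowed to 0")
    mean_x = sum(w * x for w, (x, _) in zip(weights, train)) / total
    mean_y = sum(w * y for w, (_, y) in zip(weights, train)) / total
    sxx = sum(w * (x - mean_x) ** 2 for w, (x, _) in zip(weights, train))
    if sxx == 0.0:
        return mean_y  # no spread in x: fall back to the weighted mean
    sxy = sum(w * (x - mean_x) * (y - mean_y)
              for w, (x, y) in zip(weights, train))
    return mean_y + (sxy / sxx) * (x_new - mean_x)

# Samples of the curve y = x^2; its true value at 2.5 is 6.25
train = [(x, x * x) for x in (0.0, 1.0, 2.0, 3.0, 4.0, 5.0)]

narrow = lwr_predict(train, 2.5, width=0.5)    # dominated by x=2 and x=3
wide = lwr_predict(train, 2.5, width=100.0)    # close to one global line
```

A very wide kernel weights all examples almost equally, so the prediction approaches that of a single global line, which corresponds to the underfitting case above.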

Advantages and drawbacks

K-nearest neighbours - Advantages

The cost of the learning process is zero

No assumptions have to be made about the characteristics of the concepts to learn

Complex concepts can be learned by local approximation using simple procedures

K-nearest neighbours - Drawbacks

The model cannot be interpreted (there is no description of the learned concepts)

It is computationally expensive to find the k nearest neighbours when the dataset is very large

Performance depends on the number of dimensions that we have (curse of dimensionality) ⇒ Attribute Selection

The Curse of dimensionality

The more dimensions we have, the more examples we need to approximate a hypothesis

The number of examples that we have in a volume of space decreases exponentially with the number of dimensions

This is especially bad for k-nearest neighbours

If the number of dimensions is very high, the nearest neighbours can be very far away
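Both effects can be checked numerically under the assumption that the examples are uniformly distributed in the unit hypercube. In that setting, a sub-cube that contains a fraction f of the examples needs an edge of length f^(1/dim). The simulation measures how far the nearest neighbour is as the dimension grows. The function names, sample sizes and seed are arbitrary choices for this sketch.

```python
import math
import random

def edge_for_fraction(fraction, dim):
    """Edge length of a sub-cube of the unit hypercube whose volume is
    the given fraction of the whole (uniform data assumed)."""
    return fraction ** (1.0 / dim)

def mean_nearest_distance(n_points, dim, n_queries=20, seed=0):
    """Average Euclidean distance from random queries to their nearest
    neighbour among n_points uniform random points."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    total = 0.0
    for _ in range(n_queries):
        q = [rng.random() for _ in range(dim)]
        total += min(math.dist(q, p) for p in points)
    return total / n_queries

for dim in (2, 10, 100):
    print(dim,
          round(edge_for_fraction(0.01, dim), 3),
          round(mean_nearest_distance(500, dim), 3))
```

With the number of examples fixed, both the edge length needed to capture 1% of the data and the distance to the nearest neighbour grow with the dimension.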

Application

Optical Character Recognition

OCR capital letters

14 Attributes (All continuous)

Attributes: horizontal position of box, vertical position of box, width of box, height of box, total number of on pixels, mean x of on pixels in box, ...

20000 instances

26 classes (A-Z)

Validation: 10 fold cross validation
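The validation scheme can be sketched as follows. This is not the OCR experiment: the data are a synthetic two-class toy set generated in the code, and the helper names, the fold assignment by stride and the seeds are assumptions of the sketch. It only shows how 10-fold cross-validation estimates the accuracy of k-nn for a given k.

```python
import math
import random
from collections import Counter

def knn_classify(train, x_new, k):
    """Majority vote among the k nearest examples (Euclidean distance)."""
    neighbours = sorted(train, key=lambda ex: math.dist(ex[0], x_new))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]

def cross_val_accuracy(data, k, folds=10, seed=0):
    """Each example is used for testing exactly once, with the examples
    of the other folds serving as the stored training set."""
    data = data[:]
    random.Random(seed).shuffle(data)
    correct = 0
    for f in range(folds):
        test = data[f::folds]  # every folds-th example, starting at f
        train = [ex for i, ex in enumerate(data) if i % folds != f]
        correct += sum(knn_classify(train, x, k) == y for x, y in test)
    return correct / len(data)

# Synthetic toy data: two gaussian clusters, 50 examples per class
rng = random.Random(1)
data = ([((rng.gauss(0, 1), rng.gauss(0, 1)), "A") for _ in range(50)] +
        [((rng.gauss(4, 1), rng.gauss(4, 1)), "B") for _ in range(50)])

for k in (1, 5, 15):
    print(k, cross_val_accuracy(data, k))
```

Running the same loop over several values of k is one way to choose k by cross-validation.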

Optical Character Recognition: Models

K-nn 1 (Euclidean distance, weighted): accuracy 96.0%

K-nn 5 (Manhattan distance, weighted): accuracy 95.9%

K-nn 1 (Correlation distance, weighted): accuracy 95.1%

Javier B

ejar c b ea (LSI - FIB)

K-nearest neighbours

Term 2012/2013

23 / 23

You might also like

- Health Insurance Off The GridDocument163 pagesHealth Insurance Off The GridDaryl Kulak100% (4)

- Chapter 9 Frequency Response of BJT & MosfetDocument54 pagesChapter 9 Frequency Response of BJT & Mosfetshubhankar palNo ratings yet

- 6 - KNN ClassifierDocument10 pages6 - KNN ClassifierCarlos LopezNo ratings yet

- Basics of Structured Finance PDFDocument22 pagesBasics of Structured Finance PDFNaomi Alberg-BlijdNo ratings yet

- OliverKNN PresentationDocument29 pagesOliverKNN Presentationlinux87sNo ratings yet

- K Nearest Neighbors: Probably A Duck."Document14 pagesK Nearest Neighbors: Probably A Duck."Daneil RadcliffeNo ratings yet

- 1694600817-Unit2.3 KNN CU 2.0Document25 pages1694600817-Unit2.3 KNN CU 2.0prime9316586191No ratings yet

- KNN Algorithm: Gnitc Mrs - Sumitra Mallick CSE DeptDocument12 pagesKNN Algorithm: Gnitc Mrs - Sumitra Mallick CSE Dept19-361 Sai PrathikNo ratings yet

- Isbm College of Engineering, Pune Department of Computer Engineering Academic Year 2021-22Document9 pagesIsbm College of Engineering, Pune Department of Computer Engineering Academic Year 2021-22Vaibhav SrivastavaNo ratings yet

- EE514 - CS535 KNN Algorithm: Overview, Analysis, Convergence and ExtensionsDocument53 pagesEE514 - CS535 KNN Algorithm: Overview, Analysis, Convergence and ExtensionsZubair KhalidNo ratings yet

- Distance Metric Learning For Large Margin Nearest Neighbor ClassificationDocument8 pagesDistance Metric Learning For Large Margin Nearest Neighbor Classificationgheorghe garduNo ratings yet

- T20 Cricket Score Prediction.Document17 pagesT20 Cricket Score Prediction.AvinashNo ratings yet

- Jntuk R20 ML Unit-IiDocument37 pagesJntuk R20 ML Unit-IiThanuja MallaNo ratings yet

- 17 Dimensionality ReductionDocument46 pages17 Dimensionality ReductionPhạm KhoaNo ratings yet

- Data Set Property Based K' in VDBSCAN Clustering AlgorithmDocument5 pagesData Set Property Based K' in VDBSCAN Clustering AlgorithmWorld of Computer Science and Information Technology JournalNo ratings yet

- Mathematical Foundations For Machine Learning and Data ScienceDocument25 pagesMathematical Foundations For Machine Learning and Data SciencechaimaeNo ratings yet

- Instance-Based Learning: K-Nearest Neighbour LearningDocument21 pagesInstance-Based Learning: K-Nearest Neighbour Learningfeathh1No ratings yet

- K NNDocument4 pagesK NNshivangjaiswal.222509No ratings yet

- Full Download PDF of Test Bank For Introductory Statistics, 9th Edition, Prem S. Mann All ChapterDocument32 pagesFull Download PDF of Test Bank For Introductory Statistics, 9th Edition, Prem S. Mann All Chapterryoukoprayet100% (3)

- A Hierarchical Approach To The A Posteriori Error Estimation of Isogeometric Kirchhoff Plates and Kirchhoff-Love ShellsDocument20 pagesA Hierarchical Approach To The A Posteriori Error Estimation of Isogeometric Kirchhoff Plates and Kirchhoff-Love ShellsJorge Luis Garcia ZuñigaNo ratings yet

- ML NotesDocument125 pagesML NotesAbhijit Das100% (2)

- K-Medoids For K-Means SeedingDocument9 pagesK-Medoids For K-Means Seedingphuc2008No ratings yet

- Machine Learning-Lecture 03Document19 pagesMachine Learning-Lecture 03Amna AroojNo ratings yet

- P. Balamurugan Deep Learning - Theory and PracticeDocument47 pagesP. Balamurugan Deep Learning - Theory and PracticeAnkit KumarNo ratings yet

- Full Download PDF of Test Bank For Introductory Statistics 9th by Mann All ChapterDocument32 pagesFull Download PDF of Test Bank For Introductory Statistics 9th by Mann All Chapterlusviabaduza100% (4)

- Introduction To K-Nearest Neighbors: Simplified (With Implementation in Python)Document125 pagesIntroduction To K-Nearest Neighbors: Simplified (With Implementation in Python)Abhiraj Das100% (1)

- A Complete Guide To K Nearest Neighbors Algorithm 1598272616Document13 pagesA Complete Guide To K Nearest Neighbors Algorithm 1598272616「瞳」你分享No ratings yet

- Pec-Cs 701eDocument4 pagesPec-Cs 701esuvamsarma67No ratings yet

- An Improved Local Search Algorithm For K-Median: Vincent Cohen-Addad Anupam Gupta Lunjia Hu Hoon Oh David SaulpicDocument68 pagesAn Improved Local Search Algorithm For K-Median: Vincent Cohen-Addad Anupam Gupta Lunjia Hu Hoon Oh David SaulpicAnupam GuptaNo ratings yet

- Econometrics - Session V-VI - FinalDocument43 pagesEconometrics - Session V-VI - FinalAparna JRNo ratings yet

- Instance Based Learning: November 2015Document11 pagesInstance Based Learning: November 2015Manu SNo ratings yet

- LR Ratios TestDocument12 pagesLR Ratios Testdr musafirNo ratings yet

- AIBSlide 15Document37 pagesAIBSlide 15Dimple RoheraNo ratings yet

- Lecture 13 KNNDocument14 pagesLecture 13 KNNharshita kasaundhanNo ratings yet

- A Survey of Adaptive Beamforming Strategy in Smart Antenna For Mobile CommunicationDocument4 pagesA Survey of Adaptive Beamforming Strategy in Smart Antenna For Mobile CommunicationAnonymous CUPykm6DZNo ratings yet

- ML Assignment No. 3: 3.1 TitleDocument6 pagesML Assignment No. 3: 3.1 TitlelaxmanNo ratings yet

- Week 7 Part 1KNN K Nearest Neighbor ClassificationDocument47 pagesWeek 7 Part 1KNN K Nearest Neighbor ClassificationMichael ZewdieNo ratings yet

- A Simple Introduction To K-Nearest Neighbors Algorithm: What Is KNN?Document7 pagesA Simple Introduction To K-Nearest Neighbors Algorithm: What Is KNN?Sailla Raghu rajNo ratings yet

- High Performance Data Mining Using The Nearest Neighbor JoinDocument8 pagesHigh Performance Data Mining Using The Nearest Neighbor JoinUpinder KaurNo ratings yet

- K-Nearest Neighbors AlgorithmDocument11 pagesK-Nearest Neighbors Algorithmwatson191No ratings yet

- Visit:: Join Telegram To Get Instant Updates: Contact: MAIL: Instagram: Instagram: Whatsapp ShareDocument20 pagesVisit:: Join Telegram To Get Instant Updates: Contact: MAIL: Instagram: Instagram: Whatsapp ShareMoldyNo ratings yet

- Rajan Dhabalia Netflix PrizeDocument9 pagesRajan Dhabalia Netflix Prizerajan_sfsu100% (1)

- KNN AlgorithmDocument3 pagesKNN Algorithmrr_adi94No ratings yet

- Example of Building and Using A Bivariate Regression ModelDocument3 pagesExample of Building and Using A Bivariate Regression ModelOlivera VukovicNo ratings yet

- CS 3035 (ML) - CS - End - May - 2023Document11 pagesCS 3035 (ML) - CS - End - May - 2023Rachit SrivastavNo ratings yet

- Background Subtraction Using Generalised Gaussian Family ModelDocument2 pagesBackground Subtraction Using Generalised Gaussian Family ModelMohammad Bassam El-HalabiNo ratings yet

- Chapter 7 - K-Nearest-Neighbor: Data Mining For Business AnalyticsDocument16 pagesChapter 7 - K-Nearest-Neighbor: Data Mining For Business AnalyticsEverett TuNo ratings yet

- Anguage Model Compression With Weighted LOW Rank FactorizationDocument15 pagesAnguage Model Compression With Weighted LOW Rank FactorizationLi DuNo ratings yet

- 05 K-Nearest NeighborsDocument15 pages05 K-Nearest NeighborsLalaloopsie The GreatNo ratings yet

- Adaptive Local RegressionDocument10 pagesAdaptive Local Regressionvanessahoang001No ratings yet

- Stat Guide MinitabDocument37 pagesStat Guide MinitabSeptiana RizkiNo ratings yet

- Nearest Neighbor Classification: Presented by Sam Brown Sbbrown@uvm - Edu DATA MINING - Xindong WuDocument39 pagesNearest Neighbor Classification: Presented by Sam Brown Sbbrown@uvm - Edu DATA MINING - Xindong WuHussainNo ratings yet

- Monday - The K-Nearest Neighbours (KNN) - DS Core 13 Machine LearningDocument4 pagesMonday - The K-Nearest Neighbours (KNN) - DS Core 13 Machine LearningMaureen GatuNo ratings yet

- K Nearest NeighbourDocument35 pagesK Nearest NeighbourSpiffyladd100% (1)

- Refrence Image Captured Image: Figure 5.1 Sequence DiagramDocument6 pagesRefrence Image Captured Image: Figure 5.1 Sequence Diagramvivekanand_bonalNo ratings yet

- LectureDocument39 pagesLectureSourav SenguptaNo ratings yet

- STAT 451: Introduction To Machine Learning Lecture NotesDocument22 pagesSTAT 451: Introduction To Machine Learning Lecture NotesSHUYUAN JIANo ratings yet

- Instance Based LearningDocument39 pagesInstance Based LearningDarshan R GowdaNo ratings yet

- ML Lecture 11 (K Nearest Neighbors)Document13 pagesML Lecture 11 (K Nearest Neighbors)Md Fazle RabbyNo ratings yet

- ML Unit 2 r20 JntukDocument34 pagesML Unit 2 r20 JntukRiya PuttiNo ratings yet

- Project 1 - Wifi PositioningDocument8 pagesProject 1 - Wifi Positioningthap_dinhNo ratings yet

- Students Tutorial Answers Week12Document8 pagesStudents Tutorial Answers Week12HeoHamHố100% (1)

- Dơnload Corporate Finance A Focused Approach 6th Edition Michael C Ehrhardt Eugene F Brigham Full ChapterDocument24 pagesDơnload Corporate Finance A Focused Approach 6th Edition Michael C Ehrhardt Eugene F Brigham Full Chapterkakucsdosth100% (6)

- Curriculum VitaeDocument1 pageCurriculum VitaetextNo ratings yet

- MSC in Mathematics and Finance Imperial College London, 2020-2021Document4 pagesMSC in Mathematics and Finance Imperial College London, 2020-2021Carlos CadenaNo ratings yet

- Structure Gad DrawingsDocument56 pagesStructure Gad Drawingsdheeraj sehgalNo ratings yet

- Manajemen Perioperatif Operasi Arterial Switch PadaDocument12 pagesManajemen Perioperatif Operasi Arterial Switch Padacomiza92No ratings yet

- COSHH Assessment FormDocument2 pagesCOSHH Assessment Formsupriyo sarkar100% (1)

- Lab Class Routine Spring 2020 Semester - 2Document1 pageLab Class Routine Spring 2020 Semester - 2Al-jubaer Bin Jihad PlabonNo ratings yet

- Study On Private Label Brand Vs National BrandDocument71 pagesStudy On Private Label Brand Vs National BrandPrerna Singh100% (3)

- Patricia Eldridge 4.19.23Document3 pagesPatricia Eldridge 4.19.23abhi kumarNo ratings yet

- Sample Front Desk Receptionist ResumeDocument5 pagesSample Front Desk Receptionist ResumeReyvie FabroNo ratings yet

- Doing Business in IndiaDocument20 pagesDoing Business in IndiarasheedNo ratings yet

- The Cathedral & The Bazaar: Eric S. RaymondDocument38 pagesThe Cathedral & The Bazaar: Eric S. RaymondAli SattariNo ratings yet

- SCHLETTER Solar Mounting Systems Roof EN WebDocument19 pagesSCHLETTER Solar Mounting Systems Roof EN WebcharlesbenwariNo ratings yet

- Media (Pads & Rolls) : Amerklean M80 Pads Frontline Gold Pads Airmat Type RDocument12 pagesMedia (Pads & Rolls) : Amerklean M80 Pads Frontline Gold Pads Airmat Type RBayu SamudraNo ratings yet

- Muffin Top WorkoutDocument4 pagesMuffin Top WorkoutMIBNo ratings yet

- RPU12W0C Restore Wage Types After UpgradDocument5 pagesRPU12W0C Restore Wage Types After Upgradyeison baqueroNo ratings yet

- Bhagyalaxmi Cotton: Bank Name - Icici Bankaccount No.-655705502528 IFCI CODE-ICIC0006557Document2 pagesBhagyalaxmi Cotton: Bank Name - Icici Bankaccount No.-655705502528 IFCI CODE-ICIC0006557patni psksNo ratings yet

- Laguna Lake Development Authority V CA GR No. 120865-71 December 7, 1995Document1 pageLaguna Lake Development Authority V CA GR No. 120865-71 December 7, 1995MekiNo ratings yet

- S TR GEN STC (Spec Tech Requ's) (Rev.2 2009) - LampsDocument10 pagesS TR GEN STC (Spec Tech Requ's) (Rev.2 2009) - LampsRaghu NathNo ratings yet

- IJAUD - Volume 2 - Issue 4 - Pages 11-18 PDFDocument8 pagesIJAUD - Volume 2 - Issue 4 - Pages 11-18 PDFAli jeffreyNo ratings yet

- Uv VarnishDocument4 pagesUv VarnishditronzNo ratings yet

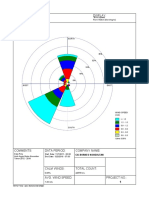

- Wind Rose Plot: DisplayDocument1 pageWind Rose Plot: Displaydhiecha309No ratings yet

- Rajendra N Shah Vs Union of India On 22 April, 2013Document29 pagesRajendra N Shah Vs Union of India On 22 April, 2013praveenaNo ratings yet

- Engine Performance Data at 1500 RPM: B3.9 1 Cummins IncDocument4 pagesEngine Performance Data at 1500 RPM: B3.9 1 Cummins IncJosé CarlosNo ratings yet

- Sankalp Research Paper 2023Document22 pagesSankalp Research Paper 2023Shivansh SinghNo ratings yet

- FFT - Constructing A Secure SD-WAN Architecture v6.2 r6Document91 pagesFFT - Constructing A Secure SD-WAN Architecture v6.2 r6Pepito CortizonaNo ratings yet

- TOUCHLESS VEHICLE WASH W NATSURF 265 HCAC20v1Document1 pageTOUCHLESS VEHICLE WASH W NATSURF 265 HCAC20v1rezaNo ratings yet