Physical Science: Fusion?

Uploaded by

ian3318

Physical science

Physical science is the systematic study of the inorganic world,

as distinct from the study of the organic

world, which is the province of

biological science. Physical science is

ordinarily thought of as consisting of four

broad areas: astronomy, physics, chemistry,

and the Earth sciences. Each of these is in

turn divided into fields and subfields. This

article discusses the historical development

(with due attention to the scope, principal

concerns, and methods) of the first three of

these areas. The Earth sciences are

discussed in a separate article.

Physics, in its modern sense, was founded in

the mid-19th century as a synthesis of

several older sciences, namely, those

of mechanics, optics, acoustics, electricity,

magnetism, heat, and the physical properties

of matter. The synthesis was based in large

part on the recognition that the different

forces of nature are related and are, in fact,

interconvertible because they are forms

of energy.

The boundary between physics and chemistry

is somewhat arbitrary. As it developed in the

20th century, physics is concerned with the

structure and behaviour of

individual atoms and their components,

while chemistry deals with the properties

and reactions of molecules. These latter

depend on energy, especially heat, as well as

on atoms; hence, there is a strong link

between physics and chemistry. Chemists

tend to be more interested in the specific

properties of

different elements and compounds, whereas

physicists are concerned with general

properties shared by all matter.

(See chemistry: The history of chemistry.)

FUSION?

Fusion is the process that powers the sun and the

stars. It is the reaction in which two atoms of

hydrogen combine together, or fuse, to form an atom

of helium. In the process some of the mass of the

hydrogen is converted into energy. The easiest fusion

reaction to make happen is combining deuterium (or

heavy hydrogen) with tritium (or heavy-heavy

hydrogen) to make helium and a neutron. Deuterium

is plentifully available in ordinary water. Tritium can be

produced by combining the fusion neutron with the

abundant light metal lithium. Thus fusion has the

potential to be an inexhaustible source of energy.
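
The statement that some of the mass is converted into energy can be checked with a short calculation. The sketch below is only an illustration: the atomic masses are rounded reference values that do not appear in the text above, and the function name `reaction_energy_mev` is made up for this example.

```python
# Rounded atomic masses in unified atomic mass units (u).
# These values are assumptions added for illustration, not from the text.
MASS_U = {
    "deuterium": 2.014102,
    "tritium": 3.016049,
    "helium4": 4.002603,
    "neutron": 1.008665,
}
MEV_PER_U = 931.494  # energy equivalent of one atomic mass unit, in MeV


def reaction_energy_mev(reactants, products):
    """Energy released (MeV) = (mass going in - mass coming out) * c^2."""
    mass_in = sum(MASS_U[name] for name in reactants)
    mass_out = sum(MASS_U[name] for name in products)
    return (mass_in - mass_out) * MEV_PER_U


dt_energy = reaction_energy_mev(["deuterium", "tritium"], ["helium4", "neutron"])
print(f"D + T -> He-4 + n releases about {dt_energy:.1f} MeV")
```

The products are lighter than the reactants by roughly 0.4 percent of the starting mass, and that missing mass is what appears as energy.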

To make fusion happen, the atoms of hydrogen must

be heated to very high temperatures (100 million

degrees) so they are ionized (forming a plasma) and

have sufficient energy to fuse, and then be held

together, i.e. confined, long enough for fusion to occur.

The sun and stars do this by gravity. More practical

approaches on earth are magnetic confinement,

where a strong magnetic field holds the ionized atoms

together while they are heated by microwaves or

other energy sources, and inertial confinement, where

a tiny pellet of frozen hydrogen is compressed and

heated by an intense energy beam, such as a laser,

so quickly that fusion occurs before the atoms can fly

apart.

Who cares? Scientists have sought to make fusion

work on earth for over 40 years. If we are successful,

we will have an energy source that is inexhaustible.

One out of every 6,500 atoms of hydrogen in ordinary

water is deuterium, giving a gallon of water the energy

content of 300 gallons of gasoline. In addition, fusion

would be environmentally friendly, producing no

combustion products or greenhouse gases. While

fusion is a nuclear process, the products of the fusion

reaction (helium and a neutron) are not radioactive,

and with proper design a fusion power plant would be

passively safe, and would produce no long-lived

radioactive waste. Design studies show that electricity

from fusion should be about the same cost as present

day sources.

We're getting close! While fusion sounds simple, the

details are difficult and exacting. Heating,

compressing and confining hydrogen plasmas at 100

million degrees is a significant challenge. It has taken

a lot of science and engineering research to get

fusion developments to where they are today. Both

magnetic and inertial fusion programs are conducting

experiments to develop a commercial application. If

all goes well, commercial application should be

possible by about 2020, providing humankind a safe,

clean, inexhaustible energy source for the future.

Stellar nucleosynthesis

Stellar nucleosynthesis is the process by which the

natural abundances of the chemical elements within

stars change due to nuclear fusion reactions in the

cores and their overlying mantles. Stars are said to

evolve (age) with changes in the abundances of the

elements within. Core fusion increases the atomic

weight of elements and reduces the number of

particles, which would lead to a pressure loss except

that gravitation leads to contraction, an increase of

temperature, and a balance of forces.[1] A star loses

most of its mass through ejection late in its

lifetime, thereby increasing the abundance of

elements heavier than helium in the interstellar

medium. The term supernova nucleosynthesis is used

to describe the creation of elements during the

evolution and explosion of a presupernova star,

as Fred Hoyle advocated presciently in 1954.[2] A

stimulus to the development of the theory of

nucleosynthesis was the discovery of variations in

the abundances of elements found in the universe.

Those abundances, when plotted on a graph as a

function of atomic number of the element, have a

jagged sawtooth shape that varies by factors of tens

of millions. This suggested a natural process rather

than a random one. Such a graph of the abundances can be

seen in the History of nucleosynthesis theory article. Of

the several processes of nucleosynthesis, stellar

nucleosynthesis is the dominating contributor to

elemental abundances in the universe.

A second stimulus to understanding the processes of

stellar nucleosynthesis occurred during the 20th

century, when it was realized that the energy released

from nuclear fusion reactions accounted for the

longevity of the Sun as a source of heat and light.[3]

The fusion of nuclei in a star, starting from its initial

hydrogen and helium abundance, provides it energy

and the synthesis of new nuclei is a byproduct of that

fusion process. This became clear during the decade

prior to World War II. The fusion-produced nuclei are

restricted to those only slightly heavier than the fusing

nuclei; thus they do not contribute heavily to the

natural abundances of the elements. Nonetheless,

this insight raised the plausibility of explaining all of

the natural abundances of elements in this way. The

prime energy producer in our Sun is

the fusion of hydrogen to form helium, which occurs at

a solar-core temperature of 14 million kelvin.

Triple-alpha process:

The triple-alpha process is a set of nuclear fusion reactions by which three helium-4 nuclei (alpha particles) are transformed into carbon.[1][2]

Helium accumulates in the core of stars as a result of the proton–proton chain reaction and the carbon–nitrogen–oxygen cycle. Further nuclear fusion reactions of helium with hydrogen or another alpha particle produce lithium-5 and beryllium-8 respectively. Both products are highly unstable and decay, almost instantly, back into smaller nuclei, unless a third alpha particle fuses with a beryllium-8 nucleus before that time to produce a stable carbon-12 nucleus.[3]

When a star runs out of hydrogen to fuse in its core, it begins to collapse until the central temperature rises to 10⁸ K,[4] six times hotter than the Sun's core. At this temperature and density, alpha particles are able to fuse rapidly enough (the half-life of ⁵Li is 3.7×10⁻²² s and that of ⁸Be is 6.7×10⁻¹⁷ s) to produce significant amounts of carbon and restore thermodynamic equilibrium in the core:

\[ {}^{4}_{2}\mathrm{He} + {}^{4}_{2}\mathrm{He} \rightarrow {}^{8}_{4}\mathrm{Be} \quad (-91.8\ \mathrm{keV}) \]

\[ {}^{8}_{4}\mathrm{Be} + {}^{4}_{2}\mathrm{He} \rightarrow {}^{12}_{6}\mathrm{C} \quad (+7.367\ \mathrm{MeV}) \]

The net energy release of the process is 7.275 MeV (1.166 pJ).

As a side effect of the process, some carbon nuclei fuse with additional helium to produce a stable isotope of oxygen and energy:

\[ {}^{12}_{6}\mathrm{C} + {}^{4}_{2}\mathrm{He} \rightarrow {}^{16}_{8}\mathrm{O} + \gamma \quad (+7.162\ \mathrm{MeV}) \]

See alpha process for more details about this reaction and further steps in the chain of stellar nucleosynthesis.

This creates a situation in which stellar nucleosynthesis produces large amounts of carbon and oxygen but only a small fraction of those elements is converted into neon and heavier elements. Both oxygen and carbon make up the 'ash' of helium-4 burning.

The triple-alpha process is highly dependent on carbon-12 and beryllium-8 having resonances with slightly more energy than helium-4, and before 1952, no such energy levels were known for carbon. The astrophysicist Fred Hoyle used the fact that carbon-12 is abundant in the universe as evidence for the existence of a carbon-12 resonance.
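
The net energy of the triple-alpha process follows from adding the two step energies quoted in this section. The sketch below assumes the first step absorbs about 91.8 keV (so it enters with a negative sign) and the second releases 7.367 MeV; the variable names are invented for this example.

```python
# Conversion factor: 1 MeV = 1.602176634e-13 J = 0.1602176634 pJ
MEV_TO_PJ = 0.1602176634

# Step energies in MeV. The sign of the first step is an assumption:
# forming beryllium-8 from two alpha particles costs a little energy.
step_energies_mev = [
    -0.0918,  # He-4 + He-4 -> Be-8
    7.367,    # Be-8 + He-4 -> C-12
]

net_mev = sum(step_energies_mev)
net_pj = net_mev * MEV_TO_PJ

print(f"Net release: {net_mev:.3f} MeV = {net_pj:.3f} pJ")
```

Because the first step is slightly endothermic, the beryllium-8 nucleus falls apart unless a third alpha particle arrives in time, which is why the process needs such high temperature and density.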

Alpha Ladder:

An Alpha Ladder is a simple structure that uses the alphabet to organise and

record information, such as definitions, factors, people

or events. Update your Alpha Ladder throughout your

studies. When assessment time comes around you

will have a pre-prepared and comprehensive resource

to study.

CNO cycle:

[Figure: Overview of the CNO-I cycle]

The CNO cycle (for carbon–nitrogen–oxygen) is one

of the two known sets of fusion reactions by

which stars convert hydrogen to helium, the other

being the proton–proton chain reaction. Unlike the

latter, the CNO cycle is a catalytic cycle. It is dominant

in stars that are more than 1.3 times as massive as

the Sun.[1]

In the CNO cycle, four protons fuse, using carbon,

nitrogen and oxygen isotopes as catalysts, to produce

one alpha particle, two positrons and two electron

neutrinos. Although there are various paths and

catalysts involved in the CNO cycles, all these cycles

have the same net result:

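\[ 4\,{}^{1}_{1}\mathrm{H} + 2\,e^{-} \;\rightarrow\; {}^{4}_{2}\mathrm{He} + 2\,e^{+} + 2\,e^{-} + 2\,\nu_{e} + 3\,\gamma + 24.7\ \mathrm{MeV} \;\rightarrow\; {}^{4}_{2}\mathrm{He} + 2\,\nu_{e} + 3\,\gamma + 26.7\ \mathrm{MeV} \]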

The positrons will almost instantly annihilate with

electrons, releasing energy in the form of gamma

rays. The neutrinos escape from the star carrying

away some energy. One nucleus is transformed in turn into

carbon, nitrogen, and oxygen isotopes

through a number of steps in an

endless loop.

Theoretical models suggest that the CNO cycle

is the dominant source of energy in stars whose

mass is greater than about 1.3 times that of

the Sun.[1] The proton–proton chain is more

important in stars with the mass of the Sun or less.

This difference stems from temperature

dependency differences between the two

reactions; pp-chain reactions start at

temperatures around 4×10⁶ K[2] (4 megakelvins),

making it the dominant energy source in smaller

stars. A self-maintaining CNO chain starts at

approximately 15×10⁶ K, but its energy output

rises much more rapidly with increasing

temperatures.[1] At approximately 17×10⁶ K, the

CNO cycle starts becoming the dominant source

of energy.[3] The Sun has a core temperature of

around 15.7×10⁶ K, and only 1.7% of ⁴He

nuclei produced in the Sun are born in the CNO

cycle. The CNO-I process was independently

proposed by Carl von Weizsäcker[4] and Hans

Bethe[5] in 1938 and 1939, respectively.
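
To see why the CNO cycle overtakes the proton–proton chain once the core gets only slightly hotter, it helps to model each energy output as a power law in temperature. The exponents below (about 4 for the pp chain and about 17 for the CNO cycle) are common textbook approximations and are not taken from the text above; treat them, and the function name `relative_output`, as assumptions for this sketch.

```python
def relative_output(temp_k, ref_temp_k, exponent):
    """Factor by which output grows when going from ref_temp_k to temp_k,
    assuming output is proportional to temperature ** exponent."""
    return (temp_k / ref_temp_k) ** exponent


PP_EXPONENT = 4    # assumed approximate scaling for the pp chain
CNO_EXPONENT = 17  # assumed approximate scaling for the CNO cycle

ref_temp = 15e6  # kelvin, where a self-maintaining CNO chain starts
hot_temp = 17e6  # kelvin, where the CNO cycle starts to dominate

pp_gain = relative_output(hot_temp, ref_temp, PP_EXPONENT)
cno_gain = relative_output(hot_temp, ref_temp, CNO_EXPONENT)

print(f"pp chain output grows by a factor of {pp_gain:.1f}")
print(f"CNO cycle output grows by a factor of {cno_gain:.1f}")
```

Under these assumed exponents, a temperature rise of about 13 percent raises the pp output by less than a factor of two but raises the CNO output several times over.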


Main sequence:

Main sequence stars

fuse hydrogen atoms to form helium atoms in

their cores. About 90 percent of the stars in

the universe, including the sun, are main

sequence stars. These stars can range from

about a tenth of the mass of the sun to up to

200 times as massive.

Stars start their lives as clouds of dust and

gas. Gravity draws these clouds together. A

small protostar forms, powered by the

collapsing material. Protostars often form in

densely packed clouds of gas and can be

challenging to detect.

"Nature doesn't form stars in isolation," Mark

Morris, of the University of California at Los

Angeles (UCLA), said in a statement. "It

forms them in clusters, out of natal clouds

that collapse under their own gravity."


Red giant:


[Figure: Hertzsprung–Russell diagram of absolute magnitude (MV) against spectral type, showing brown dwarfs, white dwarfs, red dwarfs, subdwarfs, main sequence ("dwarfs"), subgiants, giants, bright giants, supergiants, and hypergiants.]

A red giant is a luminous giant star of low or

intermediate mass (roughly 0.3–8 solar

masses (M☉)) in a late phase of stellar

evolution. The outer atmosphere is inflated

and tenuous, making the radius large and

the surface temperature around 5,000 K

or lower. The appearance of the red giant

is from yellow-orange to red, including

the spectral types K and M, but also class S

stars and most carbon stars.

The most common red giants are stars on

the red-giant branch (RGB) that are

still fusing hydrogen into helium in a shell

surrounding an inert helium core. Other red

giants are the red-clump stars in the cool half

of the horizontal branch, fusing helium into

carbon in their cores via the triple-alpha

process; and the asymptotic-giant-branch (AGB) stars with a helium burning

shell outside a degenerate carbon–oxygen

core, and a hydrogen burning shell just

beyond that.

Supernova explosion:

The brilliant point of light is the explosion of a

star that has reached the end of its life, otherwise

known as a supernova. Supernovas can briefly

outshine entire galaxies and radiate more energy

than our sun will in its entire lifetime. They're also

the primary source of heavy elements in the

universe.

Supernova nucleosynthesis:

Supernova nucleosynthesis is a theory of the production of many different chemical elements in supernova explosions, first advanced by Fred Hoyle in 1954.[1] The nucleosynthesis, or fusion of lighter elements into heavier ones, occurs during explosive oxygen burning and silicon burning.[2] Those fusion reactions create the elements silicon, sulfur, chlorine, argon, sodium, potassium, calcium, scandium, titanium and iron peak elements: vanadium, chromium, manganese, iron, cobalt, and nickel. These are called "primary elements", in that they can be fused from pure hydrogen and helium in massive stars. As a result of their ejection from supernovae, their abundances increase within the interstellar medium. Elements heavier than nickel are created primarily by a rapid capture of neutrons in a process called the r-process. However, these are much less abundant than the primary chemical elements. Other processes are thought to be responsible for some of the nucleosynthesis of underabundant heavy elements, notably a proton capture process known as the rp-process and a photodisintegration process known as the gamma (or p) process. The latter synthesizes the lightest, most neutron-poor, isotopes of the heavy elements.

r-process:

The r-process is a nucleosynthesis process that occurs in core-collapse supernovae (see also supernova nucleosynthesis) and is responsible for the creation of approximately half of the neutron-rich atomic nuclei heavier than iron. The process entails a succession of rapid neutron captures (hence the name r-process) by heavy seed nuclei, typically ⁵⁶Fe or other more neutron-rich heavy isotopes.

The other predominant mechanism for the production of heavy elements in the universe (and in the Solar System) is the s-process, which is nucleosynthesis by means of slow captures of neutrons, primarily occurring in AGB stars. The s-process is secondary, meaning that it requires preexisting heavy isotopes as seed nuclei to be converted into other heavy nuclei. Taken together, these two processes account for a majority of galactic chemical evolution of elements heavier than iron.

The r-process occurs to a slight extent in thermonuclear weapon explosions, and was responsible for the historical discovery of the elements einsteinium (element 99) and fermium (element 100).

s-process:

The s-process or slow-neutron-capture-process is a nucleosynthesis process that occurs at relatively low neutron density and intermediate temperature conditions in stars. Under these conditions heavier nuclei are created by neutron capture, increasing the atomic mass of the nucleus by one. A neutron in the new nucleus decays by beta-minus decay to a proton, creating a nucleus of higher atomic number. The rate of neutron capture by atomic nuclei is slow relative to the rate of radioactive beta-minus decay, hence the name. Thus if beta decay can occur at all, it almost always occurs before another neutron can be captured. This process produces stable isotopes by moving along the valley of beta-decay stable isobars in the chart of isotopes. The s-process produces approximately half of the isotopes of the elements heavier than iron, and therefore plays an important role in the galactic chemical evolution. The more rapid r-process differs from the s-process by its faster rate of neutron capture of more than one neutron before beta-decay takes place.
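
The s-process rule (capture one neutron, then beta-decay before the next capture if the new nucleus is unstable) can be traced step by step. The sketch below is a toy model: the small `STABLE` table is hand-entered for this example and covers only a few iron and cobalt isotopes, and the function name `s_process_path` is invented here.

```python
# Nuclides written as (Z, A): atomic number and mass number.
# A tiny hand-entered table of stable nuclides, enough for this example only.
STABLE = {(26, 56), (26, 57), (26, 58), (27, 59)}
NAMES = {26: "Fe", 27: "Co"}


def s_process_path(seed, captures):
    """Follow a seed nucleus through a number of slow neutron captures."""
    z, a = seed
    path = [(z, a)]
    for _ in range(captures):
        a += 1  # neutron capture raises the mass number by one
        path.append((z, a))
        # An unstable product beta-decays (a neutron becomes a proton)
        # before it has a chance to capture another neutron.
        while (z, a) not in STABLE:
            z += 1
            if z not in NAMES:
                raise ValueError("nuclide is outside the toy table")
            path.append((z, a))
    return path


path = s_process_path((26, 56), captures=3)
print(" -> ".join(f"{NAMES[z]}-{a}" for z, a in path))
```

Starting from iron-56, the path passes through the stable isotopes iron-57 and iron-58, reaches unstable iron-59, and then steps up to cobalt-59 by beta decay. In the r-process the captures come too quickly for that decay to intervene.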


History of the r-process

The need for some kind of rapid capture of neutrons

was seen from the relative abundances of isotopes of

heavy elements given in a newly published table

of abundances by Hans Suess and Harold Urey in

1956. Radioactive isotopes must capture another

neutron faster than they can undergo beta decay in

order to create abundance peaks

at germanium, xenon, and platinum. According to

the nuclear shell model, radioactive nuclei that would

decay into isotopes of these elements have closed

neutron shells near the neutron drip line, where more

neutrons cannot be added. Those abundance peaks

created by rapid neutron capture implied that other

nuclei could be accounted for by such a process. That

process of rapid neutron capture in neutron-rich

isotopes is called the r-process. A table apportioning

the heavy isotopes phenomenologically between s-process and r-process was published in the

famous B2FH review paper in 1957,[1] which named

that process and outlined the physics that guides it.

B2FH also elaborated the theory of stellar

nucleosynthesis and set a substantial framework for

contemporary nuclear astrophysics.

The r-process described by the B2FH paper was first

computed time-dependently at Caltech by Phillip

Seeger, William A. Fowler and Donald D. Clayton,[2]

who achieved the first successful characterization of

the r-process abundances and showed its evolution in

time. They were also able, using theoretical production

calculations, to construct a more quantitative

apportionment between s-process and r-process of

the abundance table of heavy isotopes, thereby

establishing a more reliable abundance curve for the

r-process isotopes than B2FH had been able to

define. Today, the r-process abundances are

determined using their technique of subtracting the

more reliable s-process isotopic abundances from the

total isotopic abundances and attributing the

remainder to the r-process nucleosynthesis. That r-process abundance curve (vs. atomic weight)

gratifyingly resembles computations of abundances

synthesized by the physical process.
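
The subtraction technique described above amounts to a per-isotope difference: total abundance minus the s-process share. The sketch below shows only the arithmetic; the isotope labels and numbers are placeholders invented for this example, not measured abundances, and `r_process_residuals` is a hypothetical helper name.

```python
def r_process_residuals(total, s_process):
    """Attribute to the r-process whatever the s-process does not account for."""
    return {
        isotope: total[isotope] - s_process.get(isotope, 0.0)
        for isotope in total
    }


# Placeholder values in arbitrary units (NOT real abundance data).
total_abundance = {"isotope_A": 1.00, "isotope_B": 0.40}
s_contribution = {"isotope_A": 0.75, "isotope_B": 0.10}

residuals = r_process_residuals(total_abundance, s_contribution)
for isotope, value in residuals.items():
    print(f"{isotope}: r-process residual = {value:.2f}")
```

The method is only as good as the s-process estimates fed into it, which is why the text stresses that those are the more reliable of the two.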

Most neutron-rich isotopes of elements heavier than

nickel are produced, either exclusively or in part, by

the beta decay of very radioactive matter synthesized

during the r-process by rapid absorption, one after

another, of free neutrons created during the

explosions. The creation of free neutrons by electron

capture during the rapid collapse to high density of

the supernova core along with assembly of some

neutron-rich seed nuclei makes the r-process

a primary process; namely, one that can occur even in

a star of pure H and He, in contrast to the B2FH

designation as a secondary process building on

preexisting iron.

Observational evidence of the r-process enrichment

of stars, as applied to the abundance evolution of the

galaxy of stars, was laid out by Truran in 1981.[3] He

and many subsequent astronomers showed that the

pattern of heavy-element abundances in the earliest

metal-poor stars matched that of the shape of the

solar r-process curve, as if the s-process component

were missing. This was consistent with the hypothesis

that the s-process had not yet begun in these young

stars, for it requires about 100 million years of galactic

history to get started. These stars were born earlier

than that, showing that the r-process emerges

immediately from quickly-evolving massive stars that

become supernovae. The primary nature of the r-

Different states of matter

There are five known phases,

or states, of matter: solids, liquids,

gases, plasma and Bose-Einstein

condensates. The main difference in

the structures of each state is in the

densities of the particles.

Characteristics of states of matter

Matter in the solid state maintains a fixed

volume and shape, with component particles

(atoms, molecules or ions) close together and

fixed into place. Matter in the liquid state

maintains a fixed volume, but has a variable

shape that adapts to fit its container. Its

particles are still close together but move

freely. Matter in the gaseous state has both

variable volume and shape, adapting both to

fit its container. Its particles are neither close

together nor fixed in place. Matter in the

plasma state has variable volume and shape,

but as well as neutral atoms, it contains a

significant number of ions and electrons, both

of which can move around freely. Plasma is

the most common form of visible matter in the

universe.[1]
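
The characteristics above can be laid out as a small lookup table. The sketch below is only one way to organise them; the class name `State`, the field names, and the `classify` helper are invented for this example, and Bose-Einstein condensates are left out because the paragraph above does not describe them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    name: str
    fixed_volume: bool
    fixed_shape: bool
    free_charges: bool  # significant number of free ions and electrons


STATES = [
    State("solid", fixed_volume=True, fixed_shape=True, free_charges=False),
    State("liquid", fixed_volume=True, fixed_shape=False, free_charges=False),
    State("gas", fixed_volume=False, fixed_shape=False, free_charges=False),
    State("plasma", fixed_volume=False, fixed_shape=False, free_charges=True),
]


def classify(fixed_volume, fixed_shape, free_charges=False):
    """Return the name of the state matching the given properties, or None."""
    for state in STATES:
        if (state.fixed_volume, state.fixed_shape, state.free_charges) == (
            fixed_volume,
            fixed_shape,
            free_charges,
        ):
            return state.name
    return None


print(classify(fixed_volume=True, fixed_shape=False))
print(classify(fixed_volume=False, fixed_shape=False, free_charges=True))
```

Gas and plasma share the same volume and shape behaviour; only the presence of free charges separates them in this table.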

Types of Graphic Organizers

Definition and Types

A graphic organizer is a visual and graphic

display that depicts the relationships

between facts, terms, and/or ideas within

a learning task. Graphic organizers are

also sometimes referred to as knowledge

maps, concept maps, story maps,

cognitive organizers, advance organizers,

or concept diagrams.

Types of Graphic Organizers

Graphic organizers come in many different

forms, each one best suited to organizing

a particular type of information. The

following examples are merely a sampling

of the different types and uses of graphic

organizers.

A Descriptive or Thematic Map works well

for mapping generic information, but

particularly well for mapping hierarchical

relationships.

Graphic organizer

A graphic organizer, also known as

a knowledge map, concept map, story

map (or storymap), cognitive

organizer, advance organizer, or concept

diagram, is a communication tool that uses

visual symbols to express knowledge, concepts,

thoughts, or ideas, and the relationships

between them.[1] The main purpose of a graphic

organizer is to provide a visual aid to facilitate

learning and instruction.

Organizing a hierarchical set of

information, reflecting superordinate or

subordinate elements, is made easier by

constructing a Network Tree.

When the information relating to a main

idea or theme does not fit into a hierarchy,

a Spider Map can help with organization.

A Sequential Episodic Map is useful for

mapping cause and effect.

When cause-effect relationships are

complex and non-redundant a Fishbone

Map may be particularly useful.

When information contains cause and

effect problems and solutions, a Problem

and Solution Map can be useful for

organizing.

A Comparative and Contrastive Map can

help students to compare and contrast

two concepts according to their features.

A Problem-Solution Outline helps students

to compare different solutions to a

problem.

Another way to compare concepts'

attributes is to construct a Compare-Contrast Matrix.

A Human Interaction Outline is effective

for organizing events in terms of a chain of

action and reaction (useful in social

sciences and humanities).

A Continuum Scale is effective for organizing

information along a dimension such as

less to more, low to high, and few to many.

Topic Outline:

Several aspects must be considered in writing a

topic outline.

Recall that all headings and subheadings

must be words or phrases, not sentences.

Also, the wording within each division must

be parallel.

Finally, as in any outline, remember that a

division or subdivision cannot be divided into one

part; therefore, if there is an "A" there must be a

"B," and if there is a "1" there must be a "2."

I. Family Problems

    A. Custodial: Non-custodial Conflicts

    B. Extended Family

    C. Adolescent's Age

II. Economic Problems

    A. Child Support

    B. Women's Job Training

    C. Lower Standard of Living

    D. Possible Relocation

        1. Poorer Neighborhood

        2. New School

III. Peer Problems

    A. Loss of Friends

    B. Relationships with Dates
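
The rule that a division cannot be divided into only one part is easy to check mechanically. The sketch below represents the example outline as nested dictionaries and lists any heading with exactly one subdivision; the representation and the function name `single_child_divisions` are choices made for this illustration.

```python
# The example topic outline as nested dictionaries: heading -> subdivisions.
outline = {
    "I. Family Problems": {
        "A. Custodial: Non-custodial Conflicts": {},
        "B. Extended Family": {},
        "C. Adolescent's Age": {},
    },
    "II. Economic Problems": {
        "A. Child Support": {},
        "B. Women's Job Training": {},
        "C. Lower Standard of Living": {},
        "D. Possible Relocation": {
            "1. Poorer Neighborhood": {},
            "2. New School": {},
        },
    },
    "III. Peer Problems": {
        "A. Loss of Friends": {},
        "B. Relationships with Dates": {},
    },
}


def single_child_divisions(divisions, path=""):
    """Return every heading that has exactly one subdivision."""
    problems = []
    for heading, children in divisions.items():
        here = f"{path} > {heading}" if path else heading
        if len(children) == 1:
            problems.append(here)
        problems.extend(single_child_divisions(children, here))
    return problems


print(single_child_divisions(outline))
```

An empty result means every "A" has a "B" and every "1" has a "2". A heading with no subdivisions at all is fine; only a lone subdivision breaks the rule.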

A Series of Events Chain can help students

organize information according to various

steps or stages.

A Cycle Map is useful for organizing

information that is circular or cyclical, with

no absolute beginning or ending.

Sentence Outline:

Several aspects must be considered in writing a

sentence outline.

If you have chosen to write a sentence

outline, all headings and sub-headings must be in

sentence form.

As in any outline, remember that a division

or subdivision cannot be divided into one part;

therefore, if there is an "A" there must be a "B,"

and if there is a "1" there must be a "2."

Negative Effects of Divorce on Adolescents

I. When family conflicts arise as a result of divorce,

adolescents suffer.

A. During the first year, these young people may

be depressed due to conflicts between the custodial

and non-custodial parents.

B. Grandparents, aunts, and uncles are often

restricted by visitation provisions.

C. Almost without exception, adolescents find

divorce very painful, but they react in differing

degrees depending on their age.

II. Some of the most negative effects on adolescents

may be associated with economic problems.

A. The family will most probably experience a

lower standard of living due to the cost of maintaining

two households.

B. Some female custodial parents have poor job

skills and must train before entering the job market.

C. The lower standard of living may result in

misunderstanding and conflicts within the family.

D. The decreased standard of living, particularly

for an untrained female custodial parent, often causes

relocation.

1. The family may have to move to a poorer

neighborhood in order to cut costs.

2. As a result, the adolescent may have to attend

a different school.

III. Adolescents from divorced families often

opinions, behaviors, and other defined variables and

generalize results from a larger sample population.

Quantitative Research uses measurable data to

formulate facts and uncover patterns in research.

Quantitative data collection methods are much more

structured than Qualitative data collection methods.

Quantitative data collection methods include various

forms of surveys online surveys, paper

surveys, mobile surveys and kiosk surveys, face-toface interviews, telephone interviews, longitudinal

studies, website interceptors, online polls, and

systematic

Cold Smoke:flavours the product

dependant on the type of wood used, but will

not cook the product. Smoking is usually

carried out between 10 and 29 degrees C (50F

and 85F). As you will discover Cold Smoking

temperatures are dependant on the outside

Qualitative Research:

temperature, and when temperatures are low,

it is possible to smoke your products

is primarily exploratory research. It is used to gain an

successfully, provided you are prepared to

understanding of underlying reasons, opinions, and

spend more time on the process. When

motivations. It provides insights into the problem or

helps to develop ideas or hypotheses for potential

ambient temperatures are high, the smoke

quantitative research. Qualitative Research is also

temperatures are to be kept as low as

used to uncover trends in thought and opinions, and

possible to avoid cooking the product

dive deeper into the problem. Qualitative data

collection methods vary using unstructured or semistructured techniques. Some common methods

include focus groups (group discussions), individual

and also causing the fish to disintegrate.

Hot

Smoking exposes the foods to smoke

interviews, and participation/observations. The

and heat in a controlled environment. Like Cold

sample size is typically small, and respondents are

Smoking, the item is hung first to develop a

selected to fulfill a given quota.

Quantitative Research:

pellicle, and then smoked. Although foods that

have been hot smoked are often reheated or

cooked, they are typically safe to eat without

is used to quantify the problem by way of generating

further cooking. Hot Smoking occurs within the

numerical data or data that can be transformed into

range of 52 to 82 C. Within this temperature

useable statistics. It is used to quantify attitudes,

range foods are fully cooked, moist, and

through a connecting pipe or opening into the cooking

chamber.

flavourfu

Upright drum smoker[edit]

Types of

Smokehouses

Charcoal Grill:

One of the least expensive methods to smoke small

amounts of meats and sausages is on your covered

charcoal grill. This will require an oven thermometer

to monitor the temperature. You can fill the bottom of

your grill with briquettes and burn them until gray

ash appears.

ertical Water and

Electrical Smokers:

A vertical water smoker is built with a bottom fire pan

that holds charcoal briquettes and generally has two

cooking racks near the top.

Barrel Smoker:

A clean, non-contaminated 50-gallon metal barrel,

with both ends removed, can be used as a smoker for

small quantities of meat, fowl, and fish. Set the openended barrel on the upper end of a shallow, sloping,

covered

trench

or

10-to12-foot

stovepipe.

A diagram of a typical upright drum smoker

The upright drum smoker (also referred to as an ugly

drum smoker or UDS) is exactly what its name

suggests; an upright steel drum that has been

modified for the purpose of pseudo-indirect hot

smoking. There are many ways to accomplish this,

but the basics include the use of a complete steel

drum, a basket to hold charcoal near the bottom, and

cooking rack (or racks) near the top; all covered by a

vented lid of some sort.

Vertical water smoker[edit]

A typical vertical water smoker

A vertical water smoker (also referred to as a bullet

smoker because of its shape)[9] is a variation of the

upright drum smoker. It uses charcoal or wood to

generate smoke and heat, and contains a water bowl

between the fire and the cooking grates

Propane smoker[edit]

A diagram of a propane smoker, loaded with country

style ribs and pork loin in foil.

Dig a pit at the lower end for the fire. Smoke rises

naturally, so having the fire lower than the barrel will

Offset smokers[edit]

An example of a common offset smoker.

The main characteristics of the offset smoker are that

the cooking chamber is usually cylindrical in shape,

with a shorter, smaller diameter cylinder attached to

the bottom of one end for a firebox. To cook the meat,

a small fire is lit in the firebox, where airflow is tightly

controlled. The heat and smoke from the fire is drawn

A propane smoker is designed to allow the smoking of

meat in a somewhat more controlled environment.

The primary differences are the sources of heat and

of the smoke. In a propane smoker, the heat is

generated by a gas burner directly under a steel or

iron box containing the wood or charcoal that provides

the smoke. The steel box has few vent holes, on the

top of the box only. By starving the heated wood of

oxygen, it smokes instead of burning. Any

combination of woods and charcoal may be used. This

method uses less wood.

Smoke box method

This more traditional method uses a two-box system:

a fire box and a food box. The fire box is typically

adjacent to or under the cooking box, and can be

controlled to a finer degree. The heat and smoke from

the fire box exhaust into the food box, where they are

used to cook and smoke the meat. These may be as

simple as an electric heating element with a pan of

wood chips placed on it, although more advanced

models have finer temperature controls.

properties: Smoke emission is one of the

basic elements for characterizing a fire

environment. The combustion conditions under

which smoke is produced (flaming, pyrolysis,

and smoldering) affect the amount and

character of the smoke. The smoke emission

from a flame represents a balance between

growth processes in the fuel-rich portion of the

flame and burnout with oxygen. While it is not

possible at the present time to predict the

smoke emission as a function of fuel chemistry

and combustion conditions, it is known that an

aromatic polymer, such as polystyrene,

produces more smoke than hydrocarbons with

single carbon-carbon bonds, such as

polypropylene. The smoke produced in flaming

combustion tends to have

Softwoods

Softwoods can also be identified by their leaf

shape: they typically have needles.

Pine trees, firs, spruces, and most other

general evergreens fall into this category.

Typically, this type of wood has less

potential BTU energy than hardwood. It also

tends to smoke more than hardwood, which

might be useful for the aforementioned

purpose of meat smoking. The one true

advantage that softwood has, though, is that

it lights very quickly due to its lower density.

For this reason, softwoods make excellent

kindling for any fire, even ones with

hardwood log

You might also like

- Sullair Supervisor Controller Manual - 02250146-049Document32 pagesSullair Supervisor Controller Manual - 02250146-049martin_jaitman82% (11)

- En-Handbook With ExerciseDocument45 pagesEn-Handbook With ExerciseEkosso CkastereandNo ratings yet

- Stellar - Nucleosynthesis - WikiDocument8 pagesStellar - Nucleosynthesis - Wikisakura sasukeNo ratings yet

- Science Week 1 - Origin of The Elements: 2 SemesterDocument8 pagesScience Week 1 - Origin of The Elements: 2 SemesterBOBBY AND KATESNo ratings yet

- How Elements Are FormedDocument6 pagesHow Elements Are Formedjoseph guerreroNo ratings yet

- Nuclear FusionDocument6 pagesNuclear FusionmokshNo ratings yet

- Stellar NucleosynthesisDocument7 pagesStellar Nucleosynthesisclay adrianNo ratings yet

- Ssci2 - Physical Science Lesson 1: Formation of Light and Heavy Elements in The UniverseDocument20 pagesSsci2 - Physical Science Lesson 1: Formation of Light and Heavy Elements in The UniverseMs. ArceñoNo ratings yet

- Quipper Lecture Physical ScienceDocument279 pagesQuipper Lecture Physical ScienceLoren Marie Lemana AceboNo ratings yet

- LH - Lesson 1.1 - 1.2Document3 pagesLH - Lesson 1.1 - 1.2joel gatanNo ratings yet

- Ch10 OriginElementsDocument4 pagesCh10 OriginElementsPrichindel MorocanosNo ratings yet

- Quipper Lecture Physical ScienceDocument326 pagesQuipper Lecture Physical ScienceLoren Marie Lemana AceboNo ratings yet

- Nu Cleo SynthesisDocument22 pagesNu Cleo SynthesisRaden Dave BaliquidNo ratings yet

- NucleosynthesisDocument49 pagesNucleosynthesisJeanine CristobalNo ratings yet

- Baguio College of Technology: Physical ScienceDocument7 pagesBaguio College of Technology: Physical ScienceKwen Cel MaizNo ratings yet

- Timeline: (Hide) 1 2 3 4 o 4.1 o 4.2 o 4.3 o 4.4 o 4.5 5 6 7 8 9 10Document7 pagesTimeline: (Hide) 1 2 3 4 o 4.1 o 4.2 o 4.3 o 4.4 o 4.5 5 6 7 8 9 10mimiyuhNo ratings yet

- Kelompok 2Document47 pagesKelompok 2Kiswan SetiawanNo ratings yet

- Formation of Elements in The UniverseDocument3 pagesFormation of Elements in The Universeclay adrianNo ratings yet

- Jonathan Tennyson Et Al - Molecular Line Lists For Modelling The Opacity of Cool StarsDocument14 pagesJonathan Tennyson Et Al - Molecular Line Lists For Modelling The Opacity of Cool Stars4534567No ratings yet

- Nucleosintese AstrobiologiaDocument9 pagesNucleosintese AstrobiologiaLym LyminexNo ratings yet

- Mitres 2 008su22 - ch1Document21 pagesMitres 2 008su22 - ch1Nguyen Pham HoangNo ratings yet

- Big Bang NucleosynthesisDocument3 pagesBig Bang NucleosynthesisMarjorie Del Mundo MataacNo ratings yet

- Reviewer Physical ScienceDocument17 pagesReviewer Physical ScienceMarian AnormaNo ratings yet

- Physical Sciences Lesson 1 NucleosynthesisDocument14 pagesPhysical Sciences Lesson 1 NucleosynthesisJustin BirdNo ratings yet

- Modern PhysicsDocument4 pagesModern PhysicsSamuel VivekNo ratings yet

- Jurnal ScientiDocument9 pagesJurnal ScientiSinta MardianaNo ratings yet

- Phy Sci NotesDocument8 pagesPhy Sci NotesReynalene ParagasNo ratings yet

- Physical Science 1Document25 pagesPhysical Science 1EJ RamosNo ratings yet

- NUCLEAR FUSION 1-WPS OfficeDocument3 pagesNUCLEAR FUSION 1-WPS OfficeHarshit ShuklaNo ratings yet

- Physical ScienceDocument13 pagesPhysical ScienceJoy CruzNo ratings yet

- Teachers Notes Booklet 5Document16 pagesTeachers Notes Booklet 5api-189958761No ratings yet

- Physical Science Powerpoint 1Document40 pagesPhysical Science Powerpoint 1Jenezarie TarraNo ratings yet

- A Piece of the Sun: The Quest for Fusion EnergyFrom EverandA Piece of the Sun: The Quest for Fusion EnergyRating: 3 out of 5 stars3/5 (4)

- DQWQWEDQWUKUDocument29 pagesDQWQWEDQWUKULuis Arturo CachiNo ratings yet

- 1 - Physical Science The Origin of The ElementsDocument25 pages1 - Physical Science The Origin of The ElementsTom FernandoNo ratings yet

- Grade 11 Confucius Pointers For Physical ScienceDocument3 pagesGrade 11 Confucius Pointers For Physical ScienceSuzette Ann MantillaNo ratings yet

- PHY Nuclear Fusion and FissionDocument36 pagesPHY Nuclear Fusion and FissionSarika KandasamyNo ratings yet

- Formation of ElementsDocument4 pagesFormation of ElementsPatotie CorpinNo ratings yet

- NucleosynthesisDocument5 pagesNucleosynthesisJm FloresNo ratings yet

- Primordial NucleosynthesisDocument6 pagesPrimordial NucleosynthesisScribdTranslationsNo ratings yet

- PhyScience Assignment ABM 211Document2 pagesPhyScience Assignment ABM 211jsemlpzNo ratings yet

- Chapter 1 - Nuclear ReactionsDocument15 pagesChapter 1 - Nuclear ReactionsFelina AnilefNo ratings yet

- The Suns Origin PDFDocument6 pagesThe Suns Origin PDFgamersgeneNo ratings yet

- Origin of ElementsDocument26 pagesOrigin of ElementsDocson Jeanbaptiste Thermitus Cherilus100% (1)

- Nuclear Fusion: Jump To Navigationjump To SearchDocument3 pagesNuclear Fusion: Jump To Navigationjump To SearchMikko RamiraNo ratings yet

- PHYS225 Lecture 02Document26 pagesPHYS225 Lecture 02ahmetalkan2021No ratings yet

- Reviewer Module 1-2Document12 pagesReviewer Module 1-2Kathrina HuertoNo ratings yet

- Formation of ElementsDocument17 pagesFormation of ElementsWinde SerranoNo ratings yet

- Physical Science Notes Grade 12Document9 pagesPhysical Science Notes Grade 12juliaysabelle.hongoNo ratings yet

- Handouts 1-8Document12 pagesHandouts 1-8Roma ConstanteNo ratings yet

- International Journal of Engineering Research and Development (IJERD)Document9 pagesInternational Journal of Engineering Research and Development (IJERD)IJERDNo ratings yet

- WEEK1Document23 pagesWEEK1marvin galvanNo ratings yet

- Formation of Heavy Elementsheavy ElementsDocument54 pagesFormation of Heavy Elementsheavy Elementsrhea mijaresNo ratings yet

- Astro NucDocument20 pagesAstro NucHiya MukherjeeNo ratings yet

- Lesson 2 We Are All Made of Star Stuff (Formation of The Heavy Elements)Document5 pagesLesson 2 We Are All Made of Star Stuff (Formation of The Heavy Elements)Cristina MaquintoNo ratings yet

- 11 Physical ScienceDocument8 pages11 Physical ScienceLovely Gracie88% (32)

- Lesson 1Document16 pagesLesson 1Meng GedragaNo ratings yet

- Nuclear Physic By: Susi Susanti: HistoryDocument8 pagesNuclear Physic By: Susi Susanti: HistoryAldi CakepsNo ratings yet

- History of The Theory: by John P. Millis, PH.DDocument2 pagesHistory of The Theory: by John P. Millis, PH.Dpriyaarumugam3010No ratings yet

- Alchemy: Ancient and Modern Being a Brief Account of the Alchemistic Doctrines, and Their Relations, to Mysticism on the One Hand, and ...From EverandAlchemy: Ancient and Modern Being a Brief Account of the Alchemistic Doctrines, and Their Relations, to Mysticism on the One Hand, and ...No ratings yet

- GATE Architecture Solved 2011Document12 pagesGATE Architecture Solved 2011Mohd SalahuddinNo ratings yet

- Robuschi BlowersDocument23 pagesRobuschi Blowers1977jul100% (1)

- Effects of Harmonics On Power SystemsDocument6 pagesEffects of Harmonics On Power SystemsLammie Sing Yew LamNo ratings yet

- Repair Information gx50 enDocument1 pageRepair Information gx50 engeorge e.bayNo ratings yet

- Tensile TestingDocument2 pagesTensile TestingFsNo ratings yet

- Banner Power BullDocument3 pagesBanner Power Bullanon_579800775No ratings yet

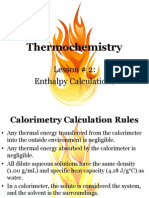

- 12 U Thermo Lesson 2 Enthalpy CalculationsDocument13 pages12 U Thermo Lesson 2 Enthalpy CalculationsAhmed AbdullahNo ratings yet

- The Economic ProblemDocument3 pagesThe Economic Problemisabellamasira999100% (1)

- 914K Scematic PDFDocument30 pages914K Scematic PDFgraham staines100% (1)

- 2013-g2.0 Dohc Mfi #1pdfDocument1 page2013-g2.0 Dohc Mfi #1pdfautocomputerNo ratings yet

- AHT87 Leaflet - Issue June 2005Document1 pageAHT87 Leaflet - Issue June 2005gmailNo ratings yet

- Engine: 8045.25 (3908 CC) 8041.25 / 8041 SI-25 (3908 CC) : QTY Item # DescriptionDocument2 pagesEngine: 8045.25 (3908 CC) 8041.25 / 8041 SI-25 (3908 CC) : QTY Item # DescriptionAhmed AbdalhadyNo ratings yet

- Aits 2014Document32 pagesAits 2014Hemlata GuptaNo ratings yet

- Terex: System Specification SheetDocument1 pageTerex: System Specification SheetMaylson SenaNo ratings yet

- Fundamentals of Power System Protection & Circuit Interrupting DevicesDocument50 pagesFundamentals of Power System Protection & Circuit Interrupting DevicesPrEmNo ratings yet

- Method Statement of Rectification of Defect No. E398Document13 pagesMethod Statement of Rectification of Defect No. E398Aous HNo ratings yet

- AVC63-7F Voltage Regulator - Instructions - 9302800994-D - March 2012 - BASLER ELECTRIC PDFDocument4 pagesAVC63-7F Voltage Regulator - Instructions - 9302800994-D - March 2012 - BASLER ELECTRIC PDFpevareNo ratings yet

- 基于CFD方法的高温热管特性研究 余清远Document7 pages基于CFD方法的高温热管特性研究 余清远小黄包No ratings yet

- Power Generation Through Speed BreakersDocument22 pagesPower Generation Through Speed BreakersSaikumar MysaNo ratings yet

- Ventilation-System CompressedDocument2 pagesVentilation-System CompressedchristianducuaraNo ratings yet

- PT FMDocument12 pagesPT FMNabil NizamNo ratings yet

- Mt1 (Physics)Document4 pagesMt1 (Physics)Amaranath GovindollaNo ratings yet

- FPRB Installation Manual For SPD Rev ABDocument46 pagesFPRB Installation Manual For SPD Rev ABEduardoUrueta50% (4)

- TransformersDocument33 pagesTransformersSachith Praminda Rupasinghe92% (12)

- Regulatory Updates April1 - 2023Document57 pagesRegulatory Updates April1 - 2023durgamadhabaNo ratings yet

- SH 60 TD enDocument98 pagesSH 60 TD enCésar David Pedroza DíazNo ratings yet

- 114 ALPcatDocument124 pages114 ALPcatJASHNo ratings yet

- 10 Lecture HydraulicsDocument38 pages10 Lecture HydraulicsAnoosha AnwarNo ratings yet

- Desert CoolerDocument18 pagesDesert CoolermanasNo ratings yet