Professional Documents

Culture Documents

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Uploaded by

IJIRSTCopyright:

Available Formats

You might also like

- Final 2015 Cookie Project Report The Cookie FactoryDocument16 pagesFinal 2015 Cookie Project Report The Cookie FactorySadat10162701100% (1)

- Introducer Agreement For IndiaDocument5 pagesIntroducer Agreement For Indiaaasim9609No ratings yet

- 802 1ad-2005Document74 pages802 1ad-2005lipe_sr100% (1)

- A Literature Survey of Image Descriptors in Content Based Image Retrieval PDFDocument11 pagesA Literature Survey of Image Descriptors in Content Based Image Retrieval PDFray_tan_11No ratings yet

- Tax Planning of RelianceDocument35 pagesTax Planning of RelianceDhaval DevmurariNo ratings yet

- Corporate Tax AdvisoryDocument2 pagesCorporate Tax AdvisoryParas MittalNo ratings yet

- Chocolate ReportDocument67 pagesChocolate ReportAkhil KwatraNo ratings yet

- Executive Summary: Sunsilk Is A Hair Care Brand, Primarily Aimed at Women, Produced by The UnileverDocument6 pagesExecutive Summary: Sunsilk Is A Hair Care Brand, Primarily Aimed at Women, Produced by The UnileverKarishma SaxenaNo ratings yet

- Lesson Plan Writing KSSR SJK Year 4Document12 pagesLesson Plan Writing KSSR SJK Year 4tamil arasi subrayanNo ratings yet

- FYP ThesisDocument83 pagesFYP ThesisHaris SohailNo ratings yet

- REPORT Aida ChocsDocument20 pagesREPORT Aida ChocsAnith Zahirah50% (2)

- Fast Track Hindi Songs LyricsDocument4 pagesFast Track Hindi Songs LyricsjasujunkNo ratings yet

- Eaa Presentation SP&CDocument16 pagesEaa Presentation SP&CGaabii Aaguilaar MoraalesNo ratings yet

- PGT 202E - Score Interpretation 2016 PDFDocument27 pagesPGT 202E - Score Interpretation 2016 PDFChinYcNo ratings yet

- An Intelligent Traffic Light System Using Object DDocument8 pagesAn Intelligent Traffic Light System Using Object DHo Wang FongNo ratings yet

- Note On The Asset Management IndustryDocument16 pagesNote On The Asset Management IndustryBenj DanaoNo ratings yet

- An Evaluation of The Effectiveness of Health and Safety Induction Practices in The Zambian Construction IndustryDocument5 pagesAn Evaluation of The Effectiveness of Health and Safety Induction Practices in The Zambian Construction IndustryYakub AndriyadiNo ratings yet

- 26302VNR 2020 Bangladesh Report PDFDocument201 pages26302VNR 2020 Bangladesh Report PDFMd. Mujibul Haque Munir100% (1)

- Break-Even SpreadsheetDocument9 pagesBreak-Even SpreadsheetioanaclaudiacapatinaNo ratings yet

- PM-Assignment 4Document23 pagesPM-Assignment 4raheel iqbalNo ratings yet

- School - Based Experience (SBE) ReportDocument4 pagesSchool - Based Experience (SBE) ReportKhairul AnwarNo ratings yet

- Fee Sharing Agreement Fix PointDocument2 pagesFee Sharing Agreement Fix PointAraceli GloriaNo ratings yet

- Itpm Discussion Question Chap 6 ShashiDocument3 pagesItpm Discussion Question Chap 6 ShashiShatiish Shaz0% (1)

- TSLB3023 English For Academic Purpose: Task 2:-Oral PresentationDocument9 pagesTSLB3023 English For Academic Purpose: Task 2:-Oral PresentationpreyangkaNo ratings yet

- Thesis On Content Based Image RetrievalDocument7 pagesThesis On Content Based Image Retrievalamberrodrigueznewhaven100% (2)

- Content Based Image Retrieval System Based On Neural NetworksDocument5 pagesContent Based Image Retrieval System Based On Neural NetworksInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Latest Research Paper On Content Based Image RetrievalDocument4 pagesLatest Research Paper On Content Based Image Retrievaldyf0g0h0fap3No ratings yet

- Thesis Content Based Image RetrievalDocument4 pagesThesis Content Based Image Retrievaltiffanycarpenterbillings100% (2)

- A User-Oriented Image Retrieval System Based On Interactive Genetic AlgorithmDocument8 pagesA User-Oriented Image Retrieval System Based On Interactive Genetic AlgorithmVasanth Prasanna TNo ratings yet

- Research Paper On Image RetrievalDocument7 pagesResearch Paper On Image Retrievalafmbvvkxy100% (1)

- An Efficient Perceptual of Content Based Image Retrieval System Using SVM and Evolutionary AlgorithmsDocument7 pagesAn Efficient Perceptual of Content Based Image Retrieval System Using SVM and Evolutionary AlgorithmsAnonymous lPvvgiQjRNo ratings yet

- Significance of DimensionalityDocument16 pagesSignificance of DimensionalitysipijNo ratings yet

- IJRAR1ARP043Document4 pagesIJRAR1ARP043Muhammad MuhammadiNo ratings yet

- Multi-Feature and Genetic AlgorithmDocument41 pagesMulti-Feature and Genetic AlgorithmVigneshInfotechNo ratings yet

- Budapest2020Document22 pagesBudapest2020Uyen NhiNo ratings yet

- PHD Thesis On Content Based Image RetrievalDocument7 pagesPHD Thesis On Content Based Image Retrievallizaschmidnaperville100% (2)

- 1 s2.0 S1047320320301115 MainDocument10 pages1 s2.0 S1047320320301115 MainizuddinNo ratings yet

- A User-Oriented Image Retrieval System Based On Interactive Genetic AlgorithmDocument8 pagesA User-Oriented Image Retrieval System Based On Interactive Genetic Algorithmakumar5189No ratings yet

- A User Oriented Image Retrieval System Using Halftoning BBTCDocument8 pagesA User Oriented Image Retrieval System Using Halftoning BBTCEditor IJRITCCNo ratings yet

- Image Segmentation For Object Detection Using Mask R-CNN in ColabDocument5 pagesImage Segmentation For Object Detection Using Mask R-CNN in ColabGRD JournalsNo ratings yet

- Feature Extraction Approach For Content Based Image RetrievalDocument5 pagesFeature Extraction Approach For Content Based Image Retrievaleditor_ijarcsseNo ratings yet

- Sketch4Match - Content-Based Image Retrieval System Using SketchesDocument17 pagesSketch4Match - Content-Based Image Retrieval System Using SketchesMothukuri VijayaSankarNo ratings yet

- Review of Image Recognition From Image RepositoryDocument5 pagesReview of Image Recognition From Image RepositoryIJRASETPublicationsNo ratings yet

- Image Quantification Learning Technique Through Content Based Image RetrievalDocument4 pagesImage Quantification Learning Technique Through Content Based Image RetrievalRahul SharmaNo ratings yet

- Content Based Remote-Sensing Image Retrieval With Bag of Visual Words RepresentationDocument6 pagesContent Based Remote-Sensing Image Retrieval With Bag of Visual Words RepresentationNikhil TengliNo ratings yet

- Content Based Image Retrieval Thesis PDFDocument7 pagesContent Based Image Retrieval Thesis PDFstephanierivasdesmoines100% (2)

- Content-Based Image RetrievalDocument4 pagesContent-Based Image RetrievalKuldeep SinghNo ratings yet

- Content-Based Image Retrieval Based On Corel Dataset Using Deep LearningDocument10 pagesContent-Based Image Retrieval Based On Corel Dataset Using Deep LearningIAES IJAINo ratings yet

- Content Based Image Retrieval Using Color, Multi Dimensional Texture and Edge OrientationDocument6 pagesContent Based Image Retrieval Using Color, Multi Dimensional Texture and Edge OrientationIJSTENo ratings yet

- Medical Images Retrieval Using Clustering TechniqueDocument8 pagesMedical Images Retrieval Using Clustering TechniqueEditor IJRITCCNo ratings yet

- Content Based Image Retrieval Literature ReviewDocument7 pagesContent Based Image Retrieval Literature Reviewaflspfdov100% (1)

- Content-Based Image Retrieval Based On ROI Detection and Relevance FeedbackDocument31 pagesContent-Based Image Retrieval Based On ROI Detection and Relevance Feedbackkhalid lmouNo ratings yet

- Content Based Image Retrieval System Using Sketches and Colored Images With ClusteringDocument4 pagesContent Based Image Retrieval System Using Sketches and Colored Images With ClusteringInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- 1002.2193 CBIR PublishedDocument5 pages1002.2193 CBIR PublishedAmr MausadNo ratings yet

- Thesis On Image RetrievalDocument6 pagesThesis On Image Retrievallanawetschsiouxfalls100% (2)

- Content Based Image Retrieval Methods Using Self Supporting Retrieval Map AlgorithmDocument7 pagesContent Based Image Retrieval Methods Using Self Supporting Retrieval Map Algorithmeditor_ijarcsseNo ratings yet

- Baby Manjusha - CBIR ReportDocument25 pagesBaby Manjusha - CBIR ReportFeroza MirajkarNo ratings yet

- A Review On Image Retrieval TechniquesDocument4 pagesA Review On Image Retrieval TechniquesBONFRINGNo ratings yet

- A Novel Approach For Feature Selection and Classifier Optimization Compressed Medical Retrieval Using Hybrid Cuckoo SearchDocument8 pagesA Novel Approach For Feature Selection and Classifier Optimization Compressed Medical Retrieval Using Hybrid Cuckoo SearchAditya Wiha PradanaNo ratings yet

- Patil New Project ReportDocument45 pagesPatil New Project ReportRavi Kiran RajbhureNo ratings yet

- Content Based Image Retrieval ThesisDocument8 pagesContent Based Image Retrieval ThesisDon Dooley100% (2)

- Arduino-UNO Based Magnetic Field Strength MeasurementDocument4 pagesArduino-UNO Based Magnetic Field Strength MeasurementIJIRSTNo ratings yet

- Patterns of Crop Concentration, Crop Diversification and Crop Combination in Thiruchirappalli District, Tamil NaduDocument10 pagesPatterns of Crop Concentration, Crop Diversification and Crop Combination in Thiruchirappalli District, Tamil NaduIJIRSTNo ratings yet

- Satellite Dish Positioning SystemDocument5 pagesSatellite Dish Positioning SystemIJIRST100% (1)

- Transmission of SAE BajaDocument5 pagesTransmission of SAE BajaIJIRST100% (1)

- Currency Recognition Blind Walking StickDocument3 pagesCurrency Recognition Blind Walking StickIJIRSTNo ratings yet

- Development of Tourism Near Loktak Lake (Moirang) in Manipur Using Geographical Information and Management TechniquesDocument4 pagesDevelopment of Tourism Near Loktak Lake (Moirang) in Manipur Using Geographical Information and Management TechniquesIJIRSTNo ratings yet

- Literature Review For Designing of Portable CNC MachineDocument3 pagesLiterature Review For Designing of Portable CNC MachineIJIRSTNo ratings yet

- Social Impact of Chukha Hydro Power On Its Local Population in BhutanDocument2 pagesSocial Impact of Chukha Hydro Power On Its Local Population in BhutanIJIRSTNo ratings yet

- Analysis and Design of Shear Wall For An Earthquake Resistant Building Using ETABSDocument7 pagesAnalysis and Design of Shear Wall For An Earthquake Resistant Building Using ETABSIJIRSTNo ratings yet

- Analysis of Fraudulent in Graph Database For Identification and PreventionDocument8 pagesAnalysis of Fraudulent in Graph Database For Identification and PreventionIJIRSTNo ratings yet

- Automated Online Voting System (AOVS)Document4 pagesAutomated Online Voting System (AOVS)IJIRSTNo ratings yet

- Modeled Sensor Database For Internet of ThingsDocument4 pagesModeled Sensor Database For Internet of ThingsIJIRSTNo ratings yet

- Biology CurriculumDocument2 pagesBiology CurriculummarkddublinNo ratings yet

- The Noble-Abel Equation of State Termodynamic Derivations For Ballistic ModellingDocument27 pagesThe Noble-Abel Equation of State Termodynamic Derivations For Ballistic Modellingsux2beedNo ratings yet

- BMW B4 R1 Retail Standards 2013 Reference Handbook June 2014 en 1Document329 pagesBMW B4 R1 Retail Standards 2013 Reference Handbook June 2014 en 1Duy Anh Quách ĐôngNo ratings yet

- 1.2. Entrepreneurial Functions and Tasks: Esbm NotesDocument7 pages1.2. Entrepreneurial Functions and Tasks: Esbm NotesAdil ZamiNo ratings yet

- 2016-IoT-Adoption-Benchmark-Report - Year of The Smart DeviceDocument19 pages2016-IoT-Adoption-Benchmark-Report - Year of The Smart DevicecooperNo ratings yet

- Load-Carrying Behaviour of Connectors Under Shear, Tension and Compression in Ultra High Performance ConcreteDocument8 pagesLoad-Carrying Behaviour of Connectors Under Shear, Tension and Compression in Ultra High Performance Concretesriki_neelNo ratings yet

- Literacy TestDocument6 pagesLiteracy TestNURUL AIN BT GHAZALI nurul565No ratings yet

- Ilovepdf MergedDocument3 pagesIlovepdf MergedKirill KalininNo ratings yet

- Chapter 2 Part A 2.6L Four-Cylinder EngineDocument20 pagesChapter 2 Part A 2.6L Four-Cylinder EngineFlorin FurculesteanuNo ratings yet

- Foundry TechnologyDocument28 pagesFoundry TechnologyVp Hari NandanNo ratings yet

- T-920 User Manual v1.2Document11 pagesT-920 User Manual v1.2Aung Kyaw OoNo ratings yet

- Free Sap Tutorial On Invoice VerificationDocument46 pagesFree Sap Tutorial On Invoice VerificationAnirudh SinghNo ratings yet

- Em-35-6 - Operator's Manual Rate of Turn Indicator Roti-310Document41 pagesEm-35-6 - Operator's Manual Rate of Turn Indicator Roti-310pawanNo ratings yet

- Compressed Air System Design BasisDocument11 pagesCompressed Air System Design Basiskaruna346100% (2)

- Cired 2023 Technical ProgrammeDocument69 pagesCired 2023 Technical Programme5p9r7jxp9fNo ratings yet

- Hashtag GuideDocument11 pagesHashtag GuideChithra ShineyNo ratings yet

- Injection Molding Mold DesignDocument26 pagesInjection Molding Mold DesignDiligence100% (1)

- Power Point View TabDocument17 pagesPower Point View TabAudi PrevioNo ratings yet

- 250 kVA Transformer Manufacturing and Transformer TestingDocument84 pages250 kVA Transformer Manufacturing and Transformer TestingPronoy Kumar100% (2)

- Eryigit Goldberg 120sDocument2 pagesEryigit Goldberg 120sElom Djadoo-ananiNo ratings yet

- XRV Digital Interface ManualDocument131 pagesXRV Digital Interface ManualAbrahamNo ratings yet

- Istanbul New Airport LTFM Procedure: IVAO TR ATC OperationsDocument3 pagesIstanbul New Airport LTFM Procedure: IVAO TR ATC OperationsArmağan B.No ratings yet

- FlowLabEOC2e CH03Document2 pagesFlowLabEOC2e CH03tomekzawistowskiNo ratings yet

- Study Plan For PHD StudiesDocument2 pagesStudy Plan For PHD StudiesAhmad Raza100% (3)

- Service-Oriented Computing:: State of The Art and Research ChallengesDocument19 pagesService-Oriented Computing:: State of The Art and Research Challengesapi-26748751No ratings yet

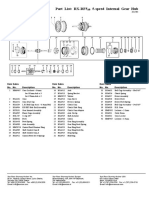

- Part List: RX-RF5 5-Speed Internal Gear HubDocument2 pagesPart List: RX-RF5 5-Speed Internal Gear HubTomislav KoprekNo ratings yet

- What Every Engineer Should Know About InventingDocument169 pagesWhat Every Engineer Should Know About InventingIlyah JaucianNo ratings yet

- MPD May 2012Document120 pagesMPD May 2012Brzata PticaNo ratings yet

- R290LC 3Document476 pagesR290LC 3PHÁT NGUYỄN THẾ100% (9)

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Uploaded by

IJIRSTOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Content-Based Image Retrieval Using Feature Extraction and K-Means Clustering

Uploaded by

IJIRSTCopyright:

Available Formats

IJIRST International Journal for Innovative Research in Science & Technology | Volume 3 | Issue 04 | September 2016

ISSN (online): 2349-6010

Content-Based Image Retrieval using Feature Extraction and K-Means Clustering

Geethu Varghese
PG Student
Department of Computer Science & Engineering
Federal Institute of Science & Technology

Dr. Arun Kumar M N
Associate Professor
Department of Computer Science & Engineering
Federal Institute of Science & Technology

Abstract

Many methods exist for retrieving images from a database collection to meet user demands, matching on attributes such as image content, edge pattern, and color. An image retrieval system offers an efficient way to access a set of similar images by computing image features directly from the images, using a variety of techniques and algorithms. Content-based image retrieval (CBIR) is a widely used recent technique for retrieving images from large image databases; its goal is to return accurate results quickly. Many CBIR techniques have been used for image retrieval, and Block Truncation Coding (BTC) is a well-known one. The proposed system uses an advanced variant of BTC, Ordered Dither Block Truncation Coding (ODBTC). The ODBTC encoded data stream is used to construct two image features, namely the Color Co-occurrence Feature and the Bit Pattern Feature. After these features are extracted, a similarity distance is computed to retrieve a set of similar images. To make the search more accurate, K-means clustering is then used: the images most similar to the query image are selected, their features are appended together, and k-means clustering is applied. This second stage retrieves images that are more similar to the query image than those of the first search. The proposed scheme is well suited to color image retrieval applications. The process is implemented in MATLAB 2014.

Keywords: Content-based image retrieval, bit pattern feature, color co-occurrence, K-means clustering, ordered dither block truncation coding

_______________________________________________________________________________________________________

I. INTRODUCTION

Recent years have seen a rapid increase in the size of digital image collections. Every day, both military and civilian equipment generates gigabytes of images. A large amount of information is available; however, we cannot access or make use of it unless it is organized to allow efficient browsing, searching and retrieval. Image retrieval has been a very active research area since the 1970s, and content-based image retrieval has been one since the early 1990s. Many image retrieval systems, both commercial and research, have been built. Most image retrieval systems support one or more of the following options: random browsing, search by sketch, search by text, and navigation with customized image categories. The most common approaches to searching image collections are based either on the textual metadata of an image or on the content information of an image. The most commonly used approach is called Content-Based Image Retrieval. Its main advantage is an automatic retrieval process, in contrast to the traditional keyword-based approach, which is time consuming. The main applications of Content-Based Image Retrieval technology are fingerprint identification, biodiversity information systems, digital libraries, crime prevention, medicine, and historical research.

Content-Based Image Retrieval (CBIR) is also known as Query by Image Content (QBIC) and Content-Based Visual Information Retrieval (CBVIR). In CBIR, "content-based" means that images are searched by their actual content rather than by their metadata. A CBIR system extracts features, indexes those features in an appropriate structure, and efficiently answers the user's query. To provide a satisfactory answer, CBIR follows a fixed workflow. First, the CBIR system takes an RGB image as input, performs feature extraction, computes similarities against the images stored in the database, and retrieves output images on the basis of the similarity computation. Next, the images most similar to the query image are selected, the features of these selected images are extracted and appended together, and k-means is applied to retrieve the images most similar to the query image.
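The first stage of the workflow above (compare the query's features against stored features and return the closest images) can be illustrated with a minimal Python sketch. The L1 distance, the function names and the toy feature vectors are assumptions made for illustration; this section does not specify the distance measure used by the proposed system.

```python
def l1_distance(a, b):
    """Sum of absolute differences between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b))


def retrieve(query_features, database, top_k=3):
    """Return the names of the top_k database images closest to the query.

    database maps an image name to its precomputed feature vector.
    """
    ranked = sorted(
        database,
        key=lambda name: l1_distance(query_features, database[name]),
    )
    return ranked[:top_k]


# Toy, made-up feature vectors (hypothetical data, for illustration only).
toy_database = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.8, 0.1],
    "sunset.jpg": [0.7, 0.2, 0.1],
}
closest = retrieve([0.85, 0.1, 0.05], toy_database, top_k=2)
print(closest)
```

In the proposed system, the features of the images returned by such a first search would then be appended together and passed to k-means for the second, refining stage.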

Relevance feedback (RF) is a commonly accepted technique for interactively improving the effectiveness of retrieval systems. It consists of three steps: (a) the system performs an initial search for a user-supplied query pattern and returns a small number of images; (b) the user indicates which of the retrieved images are relevant; (c) the system automatically reformulates the initial query based on the user's relevance judgments. This process continues until the user is satisfied. RF helps to alleviate the semantic gap problem, since it allows the CBIR system to learn the user's image perceptions. RF methods typically have to cope with small training samples, imbalance in the training samples, and real-time requirements. Another important issue concerns the design and implementation of the learning mechanisms.

All rights reserved by www.ijirst.org 291

Content-Based Image Retrieval using Feature Extraction and K-Means Clustering

(IJIRST/ Volume 3 / Issue 04/ 046)

Clustering is a method that separates a given dataset into homogeneous groups based on specific criteria. Similar objects are placed in the same cluster, whereas dissimilar objects are placed in different clusters. Clustering plays a vital role in various fields, including image processing, mobile communication, computational biology, medicine and economics. K-Means is a well-known clustering algorithm, also known as the Hard C-Means algorithm. It splits the given image into clusters of pixels in the feature space, each defined by its center. First, each pixel in the image is allocated to the closest cluster. Then the new centers are computed for the new clusters. These steps are repeated until convergence. The number of clusters K must be determined first, and initial centroids are then assumed for these clusters; either random objects or the first K objects in sequence can serve as the initial centroids. The algorithm follows these steps: (a) determine the centroid coordinates; (b) determine the distance of each object to the centroids; (c) group the objects based on minimum distance to the centroids.
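The steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' MATLAB implementation: it uses the "first K objects" initialisation mentioned in the text, squared Euclidean distance (an assumption), and hypothetical toy points.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def k_means(points, k, max_iter=100):
    """Cluster points (lists of floats) into k groups.

    The first k points serve as the initial centroids.
    Returns (labels, centroids).
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(max_iter):
        # Steps (b) and (c): assign each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Step (a): recompute each centroid as the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, new_labels) if lab == c]
            if members:
                dim = len(members[0])
                new_centroids.append(
                    [sum(m[d] for m in members) / len(members) for d in range(dim)]
                )
            else:
                # Keep the old centroid if a cluster ends up empty.
                new_centroids.append(centroids[c])
        # Converged when neither assignments nor centroids change.
        if new_labels == labels and new_centroids == centroids:
            break
        labels, centroids = new_labels, new_centroids
    return labels, centroids


# Hypothetical 2-D feature points forming two obvious groups.
toy_points = [[1.0, 1.0], [9.0, 9.0], [1.0, 2.0], [8.0, 9.0], [2.0, 1.0], [9.0, 8.0]]
labels, centroids = k_means(toy_points, k=2)
print(labels)
```

Choosing the first K objects as initial centroids keeps the example deterministic; random initialisation, the other option named in the text, can give different clusters from run to run.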

II. RELATED WORKS

There are many methods for content-based image retrieval. In [2], Roland Kwitt and Andreas Uhl proposed a framework for probabilistic texture retrieval in the wavelet domain, considered from the viewpoints of retrieval accuracy and computational performance. They propose a novel retrieval method based on image representation in the complex wavelet domain and on several statistical models for the magnitude of the complex transform coefficients. KL-divergences between the proposed statistical models are used for similarity measurement. The limitation of this method is that the incorporation of color information is still an open problem. In [3], B. Zhang, Y. Gao, S. Zhao, and Jia Liu proposed a novel high-order local pattern descriptor, the local derivative pattern (LDP), for face recognition. The LDP operator labels the pixels of an image by comparing two derivative directions at two neighboring pixels and concatenating the results as a 32-bit binary sequence. The procedure applies a high-order local feature operator to each pixel to extract discriminative features from its neighborhood, and the distribution of high-order local derivative patterns is then modeled by a spatial histogram. Spatial histograms can be extracted from circular regions with different radii. Among the many similarity measures proposed for histogram matching, that paper uses histogram intersection to measure the similarity between two histograms. The limitation of this method is that the performance of LDP drops when it reaches the fourth order.
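As a brief illustration of the histogram intersection measure mentioned above, the following Python sketch sums the bin-wise minima of two histograms. The function name and the toy bin counts are hypothetical and are not taken from [3].

```python
def histogram_intersection(h1, h2):
    """Sum of bin-wise minima; larger values mean more similar histograms."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    return sum(min(a, b) for a, b in zip(h1, h2))


# Two made-up 3-bin histograms holding 8 samples each.
similarity = histogram_intersection([4, 3, 1], [2, 3, 3])
print(similarity)
```

For histograms with the same total count, the result ranges from 0 (no overlap) to that total (identical histograms).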

In [4], Xiaoyang Tan and Bill Triggs proposed a method for recognizing faces under uncontrolled lighting conditions. The overall process can be viewed as a pipeline consisting of image normalization, feature extraction, and subspace representation. That paper presents a simple and efficient preprocessing chain that eliminates most of the effects of changing illumination while still preserving the essential appearance details needed for recognition, and it focuses mainly on robustness to lighting variations. The limitation of this method is that the filter removes both useful and incidental information. In [5], J. Chen, S. Shan, Chu He, Guoying Zhao, and M. Pietikäinen proposed a simple, yet very powerful and robust local descriptor called the Weber Local Descriptor (WLD). WLD consists of two components: differential excitation and orientation. The differential excitation component is a function of the ratio between two terms: one is the relative intensity difference of the current pixel against its neighbors, and the other is the intensity of the current pixel. A histogram is computed from the WLD features by encoding both differential excitations and orientations at certain locations. The limitation stated for this method is that it cannot be used as a descriptor in the domains of face recognition and object recognition.

In [6], Chih-Chin Lai and Ying-Chuan Chen proposed a user-oriented mechanism for CBIR based on an interactive genetic algorithm, which infers which images in the database would be of most interest to the user. Three visual features of an image (color, texture, and edge) are used in this approach. The method provides an interactive mechanism to bridge the gap between the visual features and human perception. The color distributions, the mean value, the standard deviation, and the image bitmap are used as the color information of an image. The limitation is that the algorithm has many restrictions when dealing with image databases of broad content. In [7], Subrahmanyam Murala, R. P. Maheshwari, and R. Balasubramanian proposed a novel image indexing and retrieval algorithm using local tetra patterns (LTrPs). This method encodes the relationship between the referenced pixel and its neighbors based on directions that are calculated using the first-order derivatives in the vertical and horizontal directions. The magnitude of the binary pattern is collected using the magnitudes of the derivatives. The effectiveness of the approach has also been analyzed by combining it with the Gabor transform (GT). This method does not take diagonal pixels into account for the derivative calculation.

In [8], J. J. de Mesquita, P. C. Cortez, and A. Ricardo Backes proposed a method for color texture classification using shortest paths in graphs. The first approach models an RGB color texture as a graph by considering each color channel as an independent graph. The second approach creates a single graph that represents the interaction among the three channels by connecting the vertices of pixels that share the same region, independent of the color channel. The shortest paths then represent not only the global information of the graph but also information about the transitions between channels. The shortest paths are computed by Dijkstra's algorithm. The main limitation of this method is that the window size must be small. In [9], Z. Liu, H. Li, W. Zhou, R. Zhao, and Qi Tian proposed to encode the spatial context of local features as binary codes and to achieve geometric verification implicitly through efficient comparison of those codes. In this approach, the features surrounding each single feature are clustered into different groups based on their spatial relationships with the center feature. In the illustration of the cited work, a circle is divided into three fan regions by lines originating from the circle center, and surrounding features located in the same fan region belong to the same group. Based on these grouped surrounding features, a binary code is generated to describe the spatial context of the center feature. The limitation of this method is that a large number of features are used for retrieval, so reducing the number of features needed for retrieval remains challenging.


In [10], Tanaya Guha and Rabab K. proposed an image similarity measure based on sparse representation and compression distance. The idea is to measure the similarity between two given images by quantifying how well each image can be represented using the information of the other. A sparse representation-based approach is used to encode the information content of an image, and the compactness of the representation is used as a measure of its compressibility. Two quantities are used: the dictionary learnt from the image itself and the dictionary extracted from the other image. Suitable features are extracted from the available training data; when new query data becomes available, similar features are extracted from the query. The obstacle lies in evaluating and approximating the conditional compression.

III. PROPOSED METHODOLOGY

The main objective of this method is to retrieve similar images using feature extraction and k-means clustering. First, an RGB color image of size M×N is taken. This image is divided into multiple non-overlapping image blocks of size m×n, and each image block can be processed independently. Each image block $b(i,j)$ is first converted into the inter-band average image $\bar{b}(i,j)$ by

$$\bar{b}_{k,l}(i,j) = \frac{1}{3}\left[ b^{red}_{k,l}(i,j) + b^{green}_{k,l}(i,j) + b^{blue}_{k,l}(i,j) \right]; \quad k = 1,2,\ldots,m;\ l = 1,2,\ldots,n,$$

where $(k,l)$ denotes the pixel coordinate on image block $(i,j)$. The inter-band average computation is applied to all image blocks. The BTC approach performs the thresholding operation with a single threshold value obtained from the mean value of the pixels in an image block. A pixel with a value smaller than the threshold is set to 0 (black pixel); otherwise it is set to 1 (white pixel), which constructs the bitmap image representation. ODBTC instead employs a void-and-cluster dither array of the same size as an image block to generate the bitmap image. Let $D(k,l)$ denote the dither array coefficient at position $(k,l)$, where $k = 1,2,\ldots,m$ and $l = 1,2,\ldots,n$. The scaled dither array can be easily computed as

$$D^{d}(k,l) = d \times \frac{D(k,l) - D_{min}}{D_{max} - D_{min}},$$

where $D_{min}$ and $D_{max}$ denote the minimum and maximum coefficient values in the dither array, respectively. The variable $d$ denotes the dither array index and is defined by $d = \bar{b}_{max}(i,j) - \bar{b}_{min}(i,j)$.
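The inter-band average, the BTC mean threshold and the scaled dither array above can be sketched in Python as follows. Several parts are assumptions and not taken from this section: the 2×2 ordered (Bayer-style) array is only a stand-in for a real void-and-cluster dither array, the ODBTC bitmap rule "1 where the pixel is at least the block minimum plus the scaled dither value" follows the usual ODBTC formulation in the literature, and all function names and pixel values are hypothetical.

```python
def inter_band_average(block_rgb):
    """block_rgb[k][l] is an (r, g, b) tuple; returns the m x n average block."""
    return [[(r + g + b) / 3 for (r, g, b) in row] for row in block_rgb]


def btc_bitmap(avg_block):
    """Classical BTC: 0 below the block mean, 1 otherwise."""
    pixels = [v for row in avg_block for v in row]
    mean = sum(pixels) / len(pixels)
    return [[1 if v >= mean else 0 for v in row] for row in avg_block]


def scaled_dither_array(dither, d):
    """D^d(k,l) = d * (D(k,l) - D_min) / (D_max - D_min)."""
    flat = [v for row in dither for v in row]
    d_min, d_max = min(flat), max(flat)
    if d_max == d_min:
        raise ValueError("dither array must contain at least two distinct values")
    return [[d * (v - d_min) / (d_max - d_min) for v in row] for row in dither]


def odbtc_bitmap(avg_block, dither):
    """Assumed ODBTC rule: bit is 1 where pixel >= b_min + D^d(k,l)."""
    flat = [v for row in avg_block for v in row]
    b_min, b_max = min(flat), max(flat)
    scaled = scaled_dither_array(dither, b_max - b_min)  # d = b_max - b_min
    return [
        [1 if avg_block[k][l] >= b_min + scaled[k][l] else 0
         for l in range(len(avg_block[k]))]
        for k in range(len(avg_block))
    ]


# Hypothetical 2 x 2 RGB block and a stand-in 2 x 2 ordered dither array.
toy_block = [[(30, 60, 90), (200, 220, 240)],
             [(10, 20, 30), (100, 100, 100)]]
toy_dither = [[0, 2],
              [3, 1]]

avg = inter_band_average(toy_block)
bitmap_btc = btc_bitmap(avg)
bitmap_odbtc = odbtc_bitmap(avg, toy_dither)
print(avg, bitmap_btc, bitmap_odbtc)
```

The two bitmaps differ because BTC compares every pixel with one block-wide threshold, whereas the dithered version uses a different threshold at each position in the block.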


Fig. 1: Block diagram of the proposed system

The minimum value $\bar{b}_{min}(i,j)$ and maximum value $\bar{b}_{max}(i,j)$ of the inter-band average image on image block $(i,j)$ can be computed as

$$\bar{b}_{min}(i,j) = \min_{\forall k,l} \bar{b}_{k,l}(i,j),$$

$$\bar{b}_{max}(i,j) = \max_{\forall k,l} \bar{b}_{k,l}(i,j).$$

The minimum and maximum quantizers from all image blocks are given as

$$X_{min} = \left\{ x_{min}(i,j);\ i = 1,2,\ldots,\frac{M}{m};\ j = 1,2,\ldots,\frac{N}{n} \right\},$$

$$X_{max} = \left\{ x_{max}(i,j);\ i = 1,2,\ldots,\frac{M}{m};\ j = 1,2,\ldots,\frac{N}{n} \right\},$$

where $x_{min}(i,j)$ and $x_{max}(i,j)$ denote the minimum and maximum values, respectively, over the red, green, and blue channels on the corresponding image block $(i,j)$. The two values can be formally formulated as

$$x_{min}(i,j) = \left[ \min_{\forall k,l} b^{red}_{k,l}(i,j),\ \min_{\forall k,l} b^{green}_{k,l}(i,j),\ \min_{\forall k,l} b^{blue}_{k,l}(i,j) \right],$$

$$x_{max}(i,j) = \left[ \max_{\forall k,l} b^{red}_{k,l}(i,j),\ \max_{\forall k,l} b^{green}_{k,l}(i,j),\ \max_{\forall k,l} b^{blue}_{k,l}(i,j) \right].$$
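A minimal Python sketch of the quantizer computation follows: the image is split into non-overlapping m×n blocks, and the per-channel minimum and maximum are taken inside each block. The function names and the toy 2×4 image are hypothetical, and the sketch assumes the image dimensions are exact multiples of the block size.

```python
def split_into_blocks(image, m, n):
    """Split an image (rows of RGB tuples) into a grid of m x n blocks."""
    rows, cols = len(image), len(image[0])
    return [
        [[row[j:j + n] for row in image[i:i + m]] for j in range(0, cols, n)]
        for i in range(0, rows, m)
    ]


def block_quantizers(block_rgb):
    """Return (x_min, x_max): per-channel minimum and maximum of one block."""
    pixels = [p for row in block_rgb for p in row]
    x_min = tuple(min(p[c] for p in pixels) for c in range(3))
    x_max = tuple(max(p[c] for p in pixels) for c in range(3))
    return x_min, x_max


def quantizers(image, m, n):
    """Return the grids X_min and X_max, indexed by block position (i, j)."""
    x_min_grid, x_max_grid = [], []
    for block_row in split_into_blocks(image, m, n):
        pairs = [block_quantizers(block) for block in block_row]
        x_min_grid.append([pair[0] for pair in pairs])
        x_max_grid.append([pair[1] for pair in pairs])
    return x_min_grid, x_max_grid


# Hypothetical 2 x 4 RGB image, split into two 2 x 2 blocks.
toy_image = [
    [(30, 60, 90), (200, 220, 240), (5, 5, 5), (50, 60, 70)],
    [(10, 20, 30), (100, 100, 100), (9, 1, 8), (40, 90, 20)],
]
X_min, X_max = quantizers(toy_image, 2, 2)
print(X_min, X_max)
```

Note that each quantizer is an RGB triple whose components may come from different pixels of the block, since the minimum and maximum are taken per channel.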

At the end of the ODBTC encoding, the bitmap image $bm$, the minimum quantizer $X_{min}$, and the maximum quantizer $X_{max}$ are obtained. The decoder simply replaces the elements of value 0 in the bitmap by the minimum quantizer, and the elements of value 1 in the bitmap by the maximum quantizer.
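The decoding rule described above is simple enough to show directly. The following Python sketch reconstructs one block from its bitmap and its two color quantizers; the function name and the sample values are hypothetical.

```python
def odbtc_decode_block(bitmap, x_min, x_max):
    """Replace each 0 bit by the minimum quantizer and each 1 bit by the maximum."""
    return [[x_max if bit else x_min for bit in row] for row in bitmap]


# Made-up bitmap and quantizers for a single 2 x 2 block.
decoded = odbtc_decode_block(
    [[1, 1], [0, 1]],
    x_min=(10, 20, 30),
    x_max=(200, 220, 240),
)
print(decoded)
```

Every decoded pixel therefore takes one of only two colors per block, which is why the bitmap and the two quantizers are sufficient to describe the block.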

Fig. 2: Block diagram of ODBTC encoding.

The ODBTC employed in the proposed method decomposes an image into a bitmap image and two color quantizers which are

subsequently exploited for deriving the image feature descriptor. Two image features are introduced in the proposed method to

characterize the image contents, i.e., Color Co-occurrence Feature (CCF) and Bit Pattern Feature (BPF). The CCF is derived

from the two color quantizers, and the BPF is from the bitmap image.

Color Co-Occurrence Feature (CCF):

The Color Co-occurrence Feature (CCF) is derived from the color co-occurrence matrix, which is computed from the two ODBTC color quantizers. The minimum and maximum color quantizers are first indexed using a specific color codebook. The color co-occurrence matrix is subsequently constructed from these indexed values, and the CCF is derived from this matrix at the end of the computation. The color indexing process on RGB space can be defined as mapping an RGB pixel of three tuples into a finite subset (single tuple) of codebook indices. The color indexing of the ODBTC minimum quantizer can be considered as the closest matching between the minimum quantizer value of each image block, $x_{\min}(i,j)$, and the codebook $C_{\min}$, which meets the following condition:

$$i_{\min}(i,j) = \arg\min_{q = 1, 2, \ldots, N_c} \left\| x_{\min}(i,j) - c_q^{\min} \right\|_2^2$$


for all $i = 1, 2, \ldots, \tfrac{M}{m}$ and $j = 1, 2, \ldots, \tfrac{N}{n}$. The index $i_{\max}(i,j)$ of the maximum quantizer is calculated similarly. The color co-occurrence matrix (i.e., the Color Co-occurrence Feature (CCF)) for a given image can be directly computed as

$$\mathrm{CCF}(t_1, t_2) = \Pr\left\{ i_{\min}(i,j) = t_1,\ i_{\max}(i,j) = t_2 \;\middle|\; i = 1, 2, \ldots, \tfrac{M}{m};\ j = 1, 2, \ldots, \tfrac{N}{n} \right\}$$

for $t_1, t_2 = 1, 2, \ldots, N_c$. The color co-occurrence matrix is a sparse matrix in which zeros dominate the entries. To reduce the feature dimensionality of the CCF and to speed up the image retrieval process, the color co-occurrence matrix can be binned along its columns or rows to form a 1D image feature descriptor.
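As a rough illustration of the indexing and co-occurrence steps, the following Python sketch maps each block's quantizers to their nearest codewords and accumulates the joint frequencies. The names `nearest_index`, `ccf_matrix`, and `bin_rows`, the two-entry codebook, and the choice of summing each row as the binning step are all assumptions made for the example; indices are 0-based here, whereas the text counts from 1.

```python
def nearest_index(vec, codebook):
    """Index of the codeword with the smallest squared Euclidean distance."""
    return min(
        range(len(codebook)),
        key=lambda q: sum((a - b) ** 2 for a, b in zip(vec, codebook[q])),
    )


def ccf_matrix(min_quantizers, max_quantizers, cb_min, cb_max):
    """Joint relative frequency of (min index, max index) over all blocks."""
    counts = [[0] * len(cb_max) for _ in range(len(cb_min))]
    for x_min, x_max in zip(min_quantizers, max_quantizers):
        counts[nearest_index(x_min, cb_min)][nearest_index(x_max, cb_max)] += 1
    total = len(min_quantizers)
    return [[c / total for c in row] for row in counts]


def bin_rows(matrix):
    """One possible 1D binning (an assumption): sum each row of the matrix."""
    return [sum(row) for row in matrix]


# Hypothetical two-colour codebook shared by both quantizers.
codebook = [(0, 0, 0), (255, 255, 255)]
min_q = [(10, 10, 10), (20, 5, 0), (200, 220, 240), (5, 5, 5)]
max_q = [(30, 30, 30), (250, 250, 250), (255, 255, 255), (240, 240, 240)]

matrix = ccf_matrix(min_q, max_q, codebook, codebook)
feature = bin_rows(matrix)
```

With a realistic codebook size most entries of `matrix` stay at zero, which is the sparsity the text refers to and the motivation for reducing it to a 1D descriptor.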

Fig. 3: Block diagram for Color Co-Occurrence Feature Extraction

Bit Pattern Feature (BPF):

Binary vector quantization produces a representative bit pattern codebook from a set of training bitmap images obtained from the ODBTC encoding process. These bit pattern codebooks are generated using binary vector quantization with soft centroids, and many bitmap images are involved in the training stage. The bitmap of each block, $bm(i,j)$, is simply indexed based on the similarity measurement between this bitmap and the codeword $Q_q$, which meets the following criterion:

$$\tilde{b}(i,j) = \arg\min_{q = 1, 2, \ldots, N_b} \delta_H\left\{ bm(i,j),\ Q_q \right\}$$

for all $i = 1, 2, \ldots, \tfrac{M}{m}$ and $j = 1, 2, \ldots, \tfrac{N}{n}$. The symbol $\delta_H\{\cdot,\cdot\}$ denotes the Hamming distance between the two binary patterns.
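The indexing criterion can be sketched in a few lines of Python. The sketch assumes that the trained codewords are already binary and flattened in row-major order; the names `hamming`, `bit_pattern_index`, and `bpf_histogram` are hypothetical, and building the feature as a normalised histogram of the indices is an assumption that the text does not spell out. Indices are 0-based.

```python
def hamming(a, b):
    """Number of positions at which two equal-length binary patterns differ."""
    return sum(x != y for x, y in zip(a, b))


def bit_pattern_index(bitmap_block, codebook):
    """Index of the codeword closest to the block's bitmap in Hamming distance."""
    flat = [bit for row in bitmap_block for bit in row]
    return min(range(len(codebook)), key=lambda q: hamming(flat, codebook[q]))


def bpf_histogram(bitmap_blocks, codebook):
    """Relative frequency of each codeword index over all blocks (assumed form)."""
    counts = [0] * len(codebook)
    for block in bitmap_blocks:
        counts[bit_pattern_index(block, codebook)] += 1
    return [c / len(bitmap_blocks) for c in counts]


# Hypothetical codebook of three 2 x 2 patterns, flattened row by row.
codebook = [[0, 0, 0, 0], [1, 1, 1, 1], [0, 0, 1, 1]]
blocks = [
    [[0, 0], [1, 1]],  # identical to codeword 2
    [[1, 1], [1, 0]],  # one bit away from codeword 1
    [[0, 0], [0, 0]],  # identical to codeword 0
    [[1, 1], [1, 1]],  # identical to codeword 1
]
indices = [bit_pattern_index(b, codebook) for b in blocks]
bpf = bpf_histogram(blocks, codebook)
```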

Fig. 4: Block diagram for Bit Pattern Feature Extraction

The Similarity Measure of the Feature:

The similarity between two images can be measured using the relative distance measure. The similarity distance plays an important role in retrieving a set of similar images. The query image is first encoded with the ODBTC, yielding the corresponding CCF and BPF. The two features are then compared with the features of the target images in the database. A set of images similar to the query image is returned and ordered by similarity distance score, i.e., the lowest score indicates the image most similar to the query image.


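$$\delta(\text{query}, \text{target}) = \alpha_1 \sum_{t=1}^{N_c} \frac{\left| \mathrm{CCF}^{\text{query}}(t) - \mathrm{CCF}^{\text{target}}(t) \right|}{\mathrm{CCF}^{\text{query}}(t) + \mathrm{CCF}^{\text{target}}(t) + \varepsilon} + \alpha_2 \sum_{t=1}^{N_b} \frac{\left| \mathrm{BPF}^{\text{query}}(t) - \mathrm{BPF}^{\text{target}}(t) \right|}{\mathrm{BPF}^{\text{query}}(t) + \mathrm{BPF}^{\text{target}}(t) + \varepsilon}$$

where $\alpha_1$ and $\alpha_2$ denote the similarity weighting constants, representing the percentage contributions of the CCF and BPF in the proposed image retrieval system. A small number $\varepsilon$ is placed in the denominator to avoid division by zero.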
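A minimal Python sketch of this weighted relative distance and of the resulting ranking follows. The function names, the default weights of 1.0, and the value of the small constant `eps` are assumptions chosen for the example, not values reported in the paper.

```python
def relative_distance(ccf_q, ccf_t, bpf_q, bpf_t,
                      alpha1=1.0, alpha2=1.0, eps=1e-12):
    """Weighted sum of per-bin relative differences of the CCF and BPF."""
    def term(u, v):
        # eps keeps the denominator non-zero when both bins are empty.
        return sum(abs(a - b) / (a + b + eps) for a, b in zip(u, v))

    return alpha1 * term(ccf_q, ccf_t) + alpha2 * term(bpf_q, bpf_t)


def rank_targets(query, targets):
    """Return target names ordered from most to least similar (lowest score first)."""
    return sorted(
        targets,
        key=lambda name: relative_distance(
            query["ccf"], targets[name]["ccf"],
            query["bpf"], targets[name]["bpf"],
        ),
    )


# Toy features (illustrative values only).
query = {"ccf": [0.5, 0.5], "bpf": [1.0, 0.0]}
targets = {
    "B": {"ccf": [1.0, 0.0], "bpf": [0.0, 1.0]},
    "A": {"ccf": [0.5, 0.5], "bpf": [1.0, 0.0]},
}
d_same = relative_distance(query["ccf"], targets["A"]["ccf"],
                           query["bpf"], targets["A"]["bpf"])
d_diff = relative_distance(query["ccf"], targets["B"]["ccf"],
                           query["bpf"], targets["B"]["bpf"])
ranking = rank_targets(query, targets)
```

Because each term is normalised by the sum of the two bin values, bins with small counts contribute as much as bins with large counts, which suits sparse histograms such as the CCF.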

After the similarity computation, a set of images related to the query image is retrieved from the database. This set may also contain some irrelevant images, so K-Means clustering is used to make the search more accurate. A feedback prompt is provided: the user presses 1 to search for more images similar to the query image, or 0 to exit. The user then selects, from the retrieved images, those most similar to the query image. The CCF and BPF features of all selected images are appended together, k-means clustering is applied, and the centroid is calculated. The similarity computation is then performed again against the remaining images in the database, which yields a more accurate result. This search can be repeated three times to further improve retrieval accuracy.
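The feedback round can be sketched as follows, under several stated assumptions: each selected image is represented by its CCF and BPF concatenated into one vector, k-means is a plain Lloyd iteration initialised with the first k points, the number of clusters k is chosen by hand, and the remaining database images are ranked by an unweighted relative distance to their nearest centroid. None of these choices, nor the function names, are confirmed by the paper.

```python
def sq_dist(u, v):
    """Squared Euclidean distance, used for cluster assignment."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def relative_dist(u, v, eps=1e-12):
    """Unweighted relative distance over a concatenated feature vector."""
    return sum(abs(a - b) / (a + b + eps) for a, b in zip(u, v))


def kmeans(points, k, iterations=20):
    """Plain Lloyd iteration; the first k points serve as initial centroids."""
    centroids = [list(p) for p in points[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            clusters[nearest].append(p)
        # An empty cluster keeps its previous centroid.
        updated = [
            [sum(col) / len(members) for col in zip(*members)]
            if members else centroids[c]
            for c, members in enumerate(clusters)
        ]
        if updated == centroids:
            break
        centroids = updated
    return centroids


def rerank(database, centroids):
    """Order database image names by distance to their nearest centroid."""
    return sorted(
        database,
        key=lambda name: min(relative_dist(database[name], c) for c in centroids),
    )


# Concatenated feature vectors of the images the user marked as relevant.
selected = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9]]
centroids = kmeans(selected, k=2)

# Remaining database images (toy values).
database = {"a": [0.85, 0.15], "b": [0.5, 0.5], "c": [0.12, 0.88]}
ranking = rerank(database, centroids)
```

Repeating the round means calling `kmeans` again on the newly selected images and reranking, up to the three repetitions mentioned in the text.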

IV. EXPERIMENTAL RESULTS

The method was implemented as a MATLAB 2014 prototype and tested with images collected from a database of 1000 images. All the images are stored in JPEG format with size 384 x 256 or 256 x 384, and the method was applied to RGB images. Both the image retrieval and the tests were performed on a desktop PC with the following characteristics: Intel Core i3 CPU, 3.4 GHz, 4 GB RAM. The database consists of color images of different varieties, in ten categories of 100 images each: people, rose flowers, beaches, old buildings, horses, dinosaurs, elephants, mountains, food, and buses.

A color image of size M x N is taken as the query image and divided into image blocks of size m x n, and ODBTC encoding is performed. The image blocks are converted into an inter-band average image, and the encoding is applied to each block independently. The BTC approach performs a thresholding operation with a single threshold value obtained from the mean value of the pixels in an image block. A pixel with a value smaller than the threshold is set to 0; otherwise it is set to 1 to construct the bitmap image. The maximum and minimum quantizers are also computed. ODBTC transmits the image bitmap and the two extreme color quantizers (the minimum and maximum quantizers) to the decoder module over the transmission channel. Figure 5 below shows the minimum and maximum quantizers of the query image.
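The mean-threshold rule of the basic BTC approach can be sketched in Python as below. The sketch assumes that the inter-band average of a pixel is the plain mean of its red, green, and blue values, and it covers only the single-threshold BTC case described in this paragraph, not the dither-array thresholding of ODBTC; the function names are hypothetical.

```python
def inter_band_average(block):
    """Average the three colour channels of every pixel (assumed definition)."""
    return [[sum(pixel) / 3 for pixel in row] for row in block]


def btc_bitmap(gray_block):
    """Threshold a block at its mean: below the mean gives 0, otherwise 1."""
    values = [v for row in gray_block for v in row]
    threshold = sum(values) / len(values)
    return [[0 if v < threshold else 1 for v in row] for row in gray_block]


# Toy 2 x 2 colour block (illustrative values only).
block = [[(30, 30, 30), (60, 60, 60)],
         [(200, 200, 200), (90, 90, 90)]]
gray = inter_band_average(block)
bitmap = btc_bitmap(gray)
```

Here the block mean is 95, so only the pixel with average 200 is mapped to 1.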

Fig. 5: Minimum and Maximum Quantizer of the query image.


Fig. 6: Bitmap image of the query image

Figure 6 shows the bitmap image of the query image obtained in ODBTC encoding. After the maximum and minimum quantizers and the bitmap image are computed, each value 0 in the bitmap is replaced with the value of the minimum quantizer and each value 1 is replaced with the value of the maximum quantizer to form the reconstructed image. Figure 7 below shows the reconstructed image of the query image.

Fig. 7: Original and reconstructed query image

The receiver decodes this data stream to reconstruct the image. The decoder replaces each element of value 0 in the bitmap with the value of the minimum quantizer and each element of value 1 with the value of the maximum quantizer. Two image features are extracted to characterize the image contents, i.e., the color co-occurrence feature and the bit pattern feature. The CCF uses the two color quantizers and the BPF uses the bitmap image. The similarity between the query image and the set of target images in the database is then measured using the relative distance measure, and a set of images is retrieved; this set also contains irrelevant images. The relevant images are therefore selected from the retrieved images. The CCF and BPF features of all selected images are extracted and appended together, and K-Means clustering is applied. The similarity is then computed again, which retrieves images more similar to the query image; this step can be repeated three times to yield better retrieval. Figure 8 shows a query image of people being tested and the similar images that are retrieved.


Fig. 8: Retrieving images similar to query image

Fig. 9: Providing relevant feedback

Fig. 10: Selecting the image more similar to the query image.

Fig. 11: More relevant images but providing relevant feedback

Fig. 12: More similar images to query image

Fig. 13: Retrieving images similar to images of elephant.

Fig. 14: Providing relevant feedback

Fig. 15: Selecting the images similar to image of an elephant.

Fig. 16: More relevant images but providing feedback.

Fig. 17: More similar images to image of an elephant after providing feedback.

Fig. 18: More similar image to elephant.

Content-based image retrieval using halftoning-based block truncation coding alone is compared with the same method followed by k-means clustering. The k-means clustering retrieves images better than halftoning-based block truncation coding alone. Images of people, beaches, elephants, horses, flowers, dinosaurs, etc. are each taken as a query image and tested using halftoning-based block truncation coding and k-means clustering. The accuracy with which correct images are retrieved using halftoning-based block truncation coding is calculated; then the more relevant images are selected, their features are extracted, k-means is applied, and the accuracy of retrieving correct images using k-means is also calculated.
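The text does not define how accuracy is computed. One common choice, assumed here, is the fraction of retrieved images that belong to the same category as the query. The Python sketch below uses made-up category labels purely to show the calculation; they are not the paper's results, and the function name is hypothetical.

```python
def retrieval_accuracy(retrieved_labels, query_label):
    """Fraction of retrieved images whose category matches the query's."""
    if not retrieved_labels:
        return 0.0
    hits = sum(1 for label in retrieved_labels if label == query_label)
    return hits / len(retrieved_labels)


# Made-up labels of the top five results before and after a feedback round.
before_feedback = ["elephant", "horse", "elephant", "bus", "elephant"]
after_feedback = ["elephant", "elephant", "elephant", "horse", "elephant"]

acc_before = retrieval_accuracy(before_feedback, "elephant")
acc_after = retrieval_accuracy(after_feedback, "elephant")
```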

Table 1

Comparing the halftonic based BTC and K-Means Clustering

V. CONCLUSION

This work presented a novel method for image retrieval using feature extraction and k-means clustering, which is easy to implement and shows promising performance. First, ODBTC encoding is performed, yielding the maximum and minimum quantizers and the bitmap image. The color co-occurrence and bit pattern features are then extracted from the query image. The similarity between two images (i.e., a query image and the set of images in the database as target images) is measured using the relative distance measure. The similarity distance plays an important role in retrieving a set of similar images. The images most relevant to the query image are then selected, their features are appended together, and k-means is applied, which provides images more similar to the query image.

The advantage of the proposed method is that it can effectively retrieve images more similar to the query image.

