Privacy-Preserving Utility Verification of The Data Published by Non-Interactive Differentially Private Mechanisms

Uploaded by Siva Kumar

Available Formats

You might also like

- Data Leakage Detection Complete Project ReportDocument59 pagesData Leakage Detection Complete Project Reportmansha9985% (33)

- Data Leakage Detection Complete Project ReportDocument59 pagesData Leakage Detection Complete Project ReportGokul krishnan100% (1)

- Encryption Privacy PreservationDocument2 pagesEncryption Privacy PreservationMonish KumarNo ratings yet

- Privacy in Online Social Networks: A SurveyDocument4 pagesPrivacy in Online Social Networks: A SurveyGRD JournalsNo ratings yet

- Review On Health Care Database Mining in Outsourced DatabaseDocument4 pagesReview On Health Care Database Mining in Outsourced DatabaseInternational Journal of Scholarly ResearchNo ratings yet

- Entrustment of Access Control in Public CloudsDocument7 pagesEntrustment of Access Control in Public CloudsIJCERT PUBLICATIONSNo ratings yet

- Public Integrity Auditing For Shared Dynamic Cloud Data With Group User RevocationDocument15 pagesPublic Integrity Auditing For Shared Dynamic Cloud Data With Group User RevocationSikkandhar Jabbar100% (1)

- Enforcing Secure and Privacy-Preserving Information Brokering in Distributed Information SharingDocument3 pagesEnforcing Secure and Privacy-Preserving Information Brokering in Distributed Information SharingIJRASETPublicationsNo ratings yet

- Database SecurityDocument3 pagesDatabase SecurityLoveNo ratings yet

- Evaluating The Utility of Anonymized Network Traces For Intrusion DetectionDocument18 pagesEvaluating The Utility of Anonymized Network Traces For Intrusion DetectionAgus Riki GunawanNo ratings yet

- (IJCST-V3I3P23) : Mr. J.RangaRajesh, Mrs. Srilakshmi - Voddelli, Mr. G.Venkata Krishna, Mrs. T.HemalathaDocument9 pages(IJCST-V3I3P23) : Mr. J.RangaRajesh, Mrs. Srilakshmi - Voddelli, Mr. G.Venkata Krishna, Mrs. T.HemalathaEighthSenseGroupNo ratings yet

- Protecting and Analysing Health Care Data On Cloud: Abstract-Various Health Care Devices Owned by Either HosDocument7 pagesProtecting and Analysing Health Care Data On Cloud: Abstract-Various Health Care Devices Owned by Either HosSathis Kumar KathiresanNo ratings yet

- Implementation On Health Care Database Mining in Outsourced DatabaseDocument5 pagesImplementation On Health Care Database Mining in Outsourced DatabaseInternational Journal of Scholarly ResearchNo ratings yet

- Data Sharing in Cloud Storage Using Key Aggregate: 2.1 Existing SystemDocument44 pagesData Sharing in Cloud Storage Using Key Aggregate: 2.1 Existing SystemUday SolNo ratings yet

- AbstractDocument42 pagesAbstractNarayanan.mNo ratings yet

- Application of Association Rule Hiding On Privacy Preserving Data MiningDocument4 pagesApplication of Association Rule Hiding On Privacy Preserving Data MiningerpublicationNo ratings yet

- Secured Data Lineage Transmission in Malicious Environment: K. IndumathiDocument3 pagesSecured Data Lineage Transmission in Malicious Environment: K. Indumathisnet solutionsNo ratings yet

- Data Leakage DetectionDocument48 pagesData Leakage DetectionSudhanshu DixitNo ratings yet

- ProjectDocument69 pagesProjectChocko AyshuNo ratings yet

- V3i205 PDFDocument5 pagesV3i205 PDFIJCERT PUBLICATIONSNo ratings yet

- Preventing Diversity Attacks in Privacy Preserving Data MiningDocument6 pagesPreventing Diversity Attacks in Privacy Preserving Data MiningseventhsensegroupNo ratings yet

- Privacy Levels For Computer Forensics: Toward A More Efficient Privacy-Preserving InvestigationDocument6 pagesPrivacy Levels For Computer Forensics: Toward A More Efficient Privacy-Preserving InvestigationluluamalianNo ratings yet

- ICETIS 2022 Paper 96Document6 pagesICETIS 2022 Paper 96Abdullah AlahdalNo ratings yet

- 1 Data LeakageDocument9 pages1 Data LeakageRanjana singh maraviNo ratings yet

- ML OppDocument9 pagesML OppNAGA KUMARI ODUGUNo ratings yet

- Privacy Preserving Query Processing Using Third PartiesDocument10 pagesPrivacy Preserving Query Processing Using Third PartiesAnonymous UAmZFqzZ3PNo ratings yet

- Siva SankarDocument6 pagesSiva SankarSiva SankarNo ratings yet

- Data Leakage in Cloud SystemDocument5 pagesData Leakage in Cloud SystemInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Ijramt V4 I6 12Document4 pagesIjramt V4 I6 12Banumathi MNo ratings yet

- Privacy Preserving Association Rule Mining Using ROBFRUGHAL AlgorithmDocument6 pagesPrivacy Preserving Association Rule Mining Using ROBFRUGHAL AlgorithmInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Maximize The Storage Space in Cloud Environment Using DeduplicationDocument3 pagesMaximize The Storage Space in Cloud Environment Using DeduplicationStartechnico TechnocratsNo ratings yet

- 160 1498417881 - 25-06-2017 PDFDocument4 pages160 1498417881 - 25-06-2017 PDFEditor IJRITCCNo ratings yet

- Access Control Aware Data Retrieval For Secret SharingDocument30 pagesAccess Control Aware Data Retrieval For Secret Sharings.bahrami1104No ratings yet

- Information Leakage Prevention Model Using MultiAgent Architecture in A Distributed EnvironmentDocument4 pagesInformation Leakage Prevention Model Using MultiAgent Architecture in A Distributed EnvironmentInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Decentralized Access Control Based Crime Analysis-Survey: A A A A A B CDocument3 pagesDecentralized Access Control Based Crime Analysis-Survey: A A A A A B CeditorinchiefijcsNo ratings yet

- A Survey On Privacy Preserving Data Mining TechniquesDocument5 pagesA Survey On Privacy Preserving Data Mining TechniquesIOSRjournalNo ratings yet

- A25-Article 1686738490Document9 pagesA25-Article 1686738490Safiye SencerNo ratings yet

- Secrecy Preserving Discovery of Subtle Statistic ContentsDocument5 pagesSecrecy Preserving Discovery of Subtle Statistic ContentsIJSTENo ratings yet

- Privacy Preserving DDMDocument5 pagesPrivacy Preserving DDMShanthi SubramaniamNo ratings yet

- Privacy Preserving Association Rule Mining: February 2002Document9 pagesPrivacy Preserving Association Rule Mining: February 2002Karina AuliaNo ratings yet

- 6.Privacy-Preserving Public Auditing For Secure Cloud StorageDocument7 pages6.Privacy-Preserving Public Auditing For Secure Cloud StorageVishal S KaakadeNo ratings yet

- Mona: Secure Multi-Owner Data Sharing For Dynamic Groups in The CloudDocument5 pagesMona: Secure Multi-Owner Data Sharing For Dynamic Groups in The CloudRajaRajeswari GanesanNo ratings yet

- Deduplication on Encrypted Data in Cloud ComputingDocument4 pagesDeduplication on Encrypted Data in Cloud ComputingInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Entrustment of Access Control in Public Clouds: A.Naga Bala, D.RavikiranDocument7 pagesEntrustment of Access Control in Public Clouds: A.Naga Bala, D.RavikiranijcertNo ratings yet

- A Review On "Privacy Preservation Data Mining (PPDM)Document6 pagesA Review On "Privacy Preservation Data Mining (PPDM)ATSNo ratings yet

- Information Reliquishment Using Big Data: A.P, India A.P, IndiaDocument8 pagesInformation Reliquishment Using Big Data: A.P, India A.P, IndiaEditor InsideJournalsNo ratings yet

- A Framework For Privacy-Preserving White-Box Anomaly Detection Using A Lattice-Based Access ControlDocument13 pagesA Framework For Privacy-Preserving White-Box Anomaly Detection Using A Lattice-Based Access Controlcr33sNo ratings yet

- Survey On Incentive Compatible Privacy Preserving Data Analysis TechniqueDocument5 pagesSurvey On Incentive Compatible Privacy Preserving Data Analysis TechniqueInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Data Leakage Detection: A Seminar Report ONDocument32 pagesData Leakage Detection: A Seminar Report ONPradeep Chauhan0% (1)

- Detecting SecretsDocument27 pagesDetecting SecretsvmadhuriNo ratings yet

- Ijser: Data Leakage Detection Using Cloud ComputingDocument6 pagesIjser: Data Leakage Detection Using Cloud ComputingHyndu ChowdaryNo ratings yet

- Improving Database Security in Cloud Computing by Fragmentation of DataDocument7 pagesImproving Database Security in Cloud Computing by Fragmentation of DataMadhuri M SNo ratings yet

- Data Security of Dynamic and Robust Role Based Access Control From Multiple Authorities in Cloud EnvironmentDocument5 pagesData Security of Dynamic and Robust Role Based Access Control From Multiple Authorities in Cloud EnvironmentIJRASETPublicationsNo ratings yet

- Data Security - Challenges and Research OpportunitiesDocument5 pagesData Security - Challenges and Research Opportunities22ec10va480No ratings yet

- UntitledDocument53 pagesUntitledAastha Shukla 22111188113No ratings yet

- Toaz - Info Data Leakage Detection Complete Project Report PRDocument59 pagesToaz - Info Data Leakage Detection Complete Project Report PRVardhan ThugNo ratings yet

- Basu 2015Document7 pagesBasu 2015727822TPMB005 ARAVINTHAN.SNo ratings yet

- A Secure Data Leakage Detection Protection Scheme Over Lan NetworkDocument13 pagesA Secure Data Leakage Detection Protection Scheme Over Lan NetworkkokomomoNo ratings yet

- Uncertainty Theories and Multisensor Data FusionFrom EverandUncertainty Theories and Multisensor Data FusionAlain AppriouNo ratings yet

- Pristine Pixcaptcha As Graphical Password For Secure Ebanking Using Gaussian Elimination and Cleaves AlgorithmDocument6 pagesPristine Pixcaptcha As Graphical Password For Secure Ebanking Using Gaussian Elimination and Cleaves AlgorithmSiva KumarNo ratings yet

- A Survey On Nutrition Monitoring and Dietary Management SystemsDocument7 pagesA Survey On Nutrition Monitoring and Dietary Management SystemsSiva KumarNo ratings yet

- Security Analysis and Improvement For Kerberos Based On Dynamic Password and DiffieDocument1 pageSecurity Analysis and Improvement For Kerberos Based On Dynamic Password and DiffieSiva KumarNo ratings yet

- Ec1x11 Electron Devices and Circuits3 0 2 100Document2 pagesEc1x11 Electron Devices and Circuits3 0 2 100Siva KumarNo ratings yet

- Core New ArchDocument1 pageCore New ArchSiva KumarNo ratings yet

- Provably Leakage-Resilient Password-Based Authenticated Key Exchange in The Standard ModelDocument10 pagesProvably Leakage-Resilient Password-Based Authenticated Key Exchange in The Standard ModelSiva KumarNo ratings yet

- A Study On The Impact ofDocument1 pageA Study On The Impact ofSiva KumarNo ratings yet

- Towards Privacy-Preserving Storage and Retrieval in Multiple CloudsDocument1 pageTowards Privacy-Preserving Storage and Retrieval in Multiple CloudsSiva KumarNo ratings yet

- Analysis of Employee Welfare: The Basic Features of Labor Welfare Measures Are As FollowsDocument1 pageAnalysis of Employee Welfare: The Basic Features of Labor Welfare Measures Are As FollowsSiva KumarNo ratings yet

- DEARER: A Distance-and-Energy-Aware Routing With Energy Reservation For Energy Harvesting Wireless Sensor NetworksDocument6 pagesDEARER: A Distance-and-Energy-Aware Routing With Energy Reservation For Energy Harvesting Wireless Sensor NetworksSiva KumarNo ratings yet

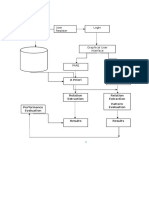

- Login: SVM Algorithm A Priori AlgorithmDocument1 pageLogin: SVM Algorithm A Priori AlgorithmSiva KumarNo ratings yet

- Fut EnhaDocument1 pageFut EnhaSiva KumarNo ratings yet

- MODULESDocument2 pagesMODULESSiva KumarNo ratings yet

- User Register Login: SVM A PrioriDocument1 pageUser Register Login: SVM A PrioriSiva KumarNo ratings yet

- CRDT: Correlation Ratio Based Decision Tree Model For Healthcare Data MiningDocument1 pageCRDT: Correlation Ratio Based Decision Tree Model For Healthcare Data MiningSiva KumarNo ratings yet

- Elm: An Extended Logic Matching Method On Record Linkage Analysis of Disparate Databases For Profiling Data MiningDocument1 pageElm: An Extended Logic Matching Method On Record Linkage Analysis of Disparate Databases For Profiling Data MiningSiva KumarNo ratings yet

- A Mining Algorithm For Frequent Closed Pattern On Data Stream Based On Sub-Structure Compressed in Prefix-TreeDocument1 pageA Mining Algorithm For Frequent Closed Pattern On Data Stream Based On Sub-Structure Compressed in Prefix-TreeSiva KumarNo ratings yet

- Ksf-Oabe: Outsourced Attribute-Based Encryption With Keyword Search Function For Cloud StorageDocument1 pageKsf-Oabe: Outsourced Attribute-Based Encryption With Keyword Search Function For Cloud StorageSiva KumarNo ratings yet

- An Encryption, Compression and Key (Eck) Management Based Data Security Framework For Infrastructure As A Service in CloudDocument1 pageAn Encryption, Compression and Key (Eck) Management Based Data Security Framework For Infrastructure As A Service in CloudSiva KumarNo ratings yet

- Authentication and Access ControlDocument1 pageAuthentication and Access ControlSiva KumarNo ratings yet


PRIVACY-PRESERVING UTILITY VERIFICATION OF THE DATA PUBLISHED BY NON-INTERACTIVE DIFFERENTIALLY PRIVATE MECHANISMS

ABSTRACT

In privacy-preserving collaborative data publishing, a central data publisher aggregates sensitive data from multiple parties and anonymizes it before publishing it for data mining. In such scenarios, data users may have a strong demand to measure the utility of the published data, since most anonymization techniques have side effects on data utility.

Nevertheless, this task is non-trivial, because measuring utility usually requires the aggregated raw data, which is not revealed to the data users due to privacy concerns. Furthermore, the data publisher may even cheat on the raw data, since no one, including the individual providers, knows the full data set.

In this paper, we first propose a privacy-preserving utility verification mechanism based on cryptographic techniques for DiffPart, a differentially private scheme designed for set-valued data. This proposal can measure the data utility based on the encrypted frequencies of the aggregated raw data instead of the plain values, which prevents privacy breaches. Moreover, it can privately check the correctness of the encrypted frequencies provided by the publisher, which helps detect dishonest publishers. We also extend this mechanism to DiffGen, another differentially private publishing scheme designed for relational data. Our theoretical and experimental evaluations demonstrate the security and efficiency of the proposed mechanism.
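The abstract does not name the cryptographic technique used, so the following is only a minimal sketch of the general idea of working with encrypted frequencies rather than plain values. It assumes, purely for illustration, an additively homomorphic scheme (a toy Paillier cryptosystem with tiny fixed primes). The function names, the sample counts, and the choice of Paillier are hypothetical and are not taken from the paper.

```python
import math
import secrets


def keygen(p=1789, q=1861):
    """Toy Paillier key pair. The small fixed primes are for illustration only."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid shortcut because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    """Encrypt an integer 0 <= m < n under the public modulus n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)


def decrypt(n, priv, c):
    """Recover the plaintext from ciphertext c with the private key."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


# Hypothetical per-party frequencies of one item (assumed values).
n, priv = keygen()
party_counts = [12, 7, 30]
ciphertexts = [encrypt(n, count) for count in party_counts]

# Aggregate without ever seeing the individual plain counts.
total = ciphertexts[0]
for c in ciphertexts[1:]:
    total = add_encrypted(n, total, c)

aggregated = decrypt(n, priv, total)
print(aggregated)
```

In this sketch only the holder of the private key can read the aggregated frequency, while anyone with the public modulus can combine ciphertexts. Which party holds which key, and how the correctness of the publisher's encrypted frequencies is checked, is specified in the paper itself and is not modeled here.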
