Communications in Statistics Theory and Methods

Bayes Estimation of Weibull Distribution Parameters Using Ranked Set Sampling

Journal: Communications in Statistics Theory and Methods
Manuscript ID: LSTA-2009-0199.R1
Manuscript Type: Original Paper
Date Submitted by the Author:
Complete List of Authors: Helu, Amal; University of Jordan
Abu-Salih, Muhammad; Amman Arab University for Graduate Studies
Alkam, Osama; University of Jordan
Keywords: Weibull Distribution, Ranked Set Sampling, Simple Random Sample, Modified Ranked Set Sampling


URL: http://mc.manuscriptcentral.com/lsta E-mail: comstat@univmail.cis.mcmaster.ca


Bayes Estimation of Weibull Distribution Parameters Using Ranked Set Sampling

Amal Helu
Department of Mathematics, University of Jordan
Amman, Jordan

Muhammad Abu-Salih
Amman Arab University for Graduate Studies
Amman, Jordan

Osama Alkam
Department of Mathematics, University of Jordan
Amman, Jordan

ABSTRACT

Estimation of the parameters of the Weibull distribution is considered using different methods of estimation based on different sampling schemes, namely Simple Random Sample (SRS), Ranked Set Sample (RSS), and Modified Ranked Set Sample (MRSS). The methods of estimation used are Maximum Likelihood (ML), Method of Moments (Mom), and Bayes. The estimators are compared through simulation via their biases, Relative Efficiency (RE), and Pitman Nearness probability (PN). Estimators based on RSS and MRSS have many advantages over those based on SRS.

Key Words: Weibull distribution; Bayes; Estimation; Ranked Set Sampling; Simple Random Sample; Modified Ranked Set Sampling.

e-mail: a.helu@ju.edu.jo

1 Introduction

The Weibull distribution has been widely used in many applications, including reliability, lifetime data analysis, climatology, finance, biology, medicine, and engineering.

Numerous methods have been suggested for estimating the scale and shape parameters of the Weibull distribution. Cohen (1965) and Harter and Moore (1965) used the maximum likelihood (ML) method to derive estimators for the two parameters of the Weibull distribution based on complete and censored samples. Bain and Antle (1967) gave estimators for the two-parameter Weibull distribution based on a modified version of the maximum likelihood method. Mann (1968) estimated the scale and shape parameters using the moments, maximum likelihood, and best linear unbiased estimator methods. Hossain and Zimmer (2003) compared the maximum likelihood estimator to the least squares estimator based on complete and censored samples. Recently, Soliman, Ellah and Sultan (2006) derived the Bayes estimates of the two parameters of the Weibull distribution using the conjugate prior for the scale parameter and a discrete prior for the shape parameter, and compared these estimators with the ML estimators. They applied their study to record values.

Ranked Set Sampling (RSS) was first introduced by McIntyre (1952) as a cost-efficient alternative to Simple Random Sampling (SRS). Takahasi and Wakimoto (1968) showed that the RSS mean is an unbiased estimator of the population mean with smaller variance than the SRS mean. Dell and Clutter (1972) showed that the RSS mean remains unbiased whether or not the ranking is perfect. A modified version of RSS known as Stratified Ranking Set Sample was introduced by Samawi (1996) to improve the accuracy of estimating the population mean.

Samawi et al. (1996) used Extreme Ranked Set Sample (ERSS) to define an estimator of the population mean in the case of a symmetric distribution. Samawi (1996) introduced Double Rank Set Sample (DERSS) as an extension of ERSS. Double Ranked Set Sampling (DRSS), an extension of the RSS method, was presented by Al-Saleh and Al-Kadiri (2000). Al-Saleh and Samawi (2004) introduced Bivariate Extreme Ranked Set Sampling as a procedure for better estimating the parameters of the bivariate normal distribution. Al-Saleh and Muttlak (1998) used RSS in Bayesian estimation for exponential and normal distributions to reduce the Bayes risk. Lavine (1999) examined the RSS procedure from a Bayesian point of view and explored some optimality questions.

In this article, Maximum Likelihood (ML), Method of Moments (Mom), and Bayes estimators of the shape and scale parameters of the Weibull distribution are provided using Simple Random Sampling (SRS), RSS and MRSS. For the Bayes methods we use the Inverse Gamma($\delta$, $\gamma$) distribution as the prior for the scale parameter and the non-informative prior for the shape parameter.

In addition to deriving the estimators, we investigate their performance and compare them through simulation with the most common methods, namely maximum likelihood and the method of moments. Bias, Relative Efficiency and Pitman Nearness probability are used as criteria for comparison.


2 Estimation of Parameters Using SRS

2.1 Maximum Likelihood Estimation (ML)

Let $X_1, \ldots, X_n$ be independent and identically distributed random variables (r.v.) from the two-parameter Weibull distribution with probability density function (p.d.f.)

\[
f(x) =
\begin{cases}
\dfrac{\alpha}{\beta}\, x^{\alpha-1} e^{-x^{\alpha}/\beta}, & x > 0,\ \alpha > 0,\ \beta > 0 \\
0, & \text{o.w.}
\end{cases}
\tag{1}
\]

where $\alpha$ is the shape parameter and $\beta$ is the scale parameter. The MLEs of the parameters $\alpha$ and $\beta$ are derived as follows:

\[
L = \prod_{i=1}^{n} \frac{\alpha}{\beta}\, x_i^{\alpha-1} e^{-x_i^{\alpha}/\beta}
\]

\[
\log(L) = n\log\alpha - n\log\beta + (\alpha-1)\sum_{i=1}^{n}\log x_i - \frac{1}{\beta}\sum_{i=1}^{n} x_i^{\alpha}
\]

Put

\[
\frac{\partial \log(L)}{\partial \alpha} = \frac{n}{\alpha} + \sum_{i=1}^{n}\log x_i - \frac{1}{\beta}\sum_{i=1}^{n} x_i^{\alpha}\log x_i = 0
\tag{2}
\]

and

\[
\frac{\partial \log(L)}{\partial \beta} = -\frac{n}{\beta} + \frac{1}{\beta^{2}}\sum_{i=1}^{n} x_i^{\alpha} = 0
\tag{3}
\]

Note that the system of equations (2) and (3) has no closed-form solution; therefore, numerical techniques are used to obtain the MLEs of the scale and shape parameters. Several authors have tackled this problem; for details see Cohen (1965).

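The numerical solution of equations (2) and (3) can be sketched in Python as follows. This is only an illustration and not the authors' code: the function names, the bisection bracket, the seed and the sample size are assumptions made here. For a fixed shape value, equation (3) gives the scale as the mean of the $x_i^{\alpha}$; substituting this into equation (2) leaves one equation in the shape parameter, which is solved by bisection.

```python
import math
import random


def weibull_sample(n, alpha, beta, rng):
    # Parametrization of equation (1): F(x) = 1 - exp(-x**alpha / beta),
    # i.e. a standard Weibull with scale beta**(1/alpha) and shape alpha.
    scale = beta ** (1.0 / alpha)
    return [rng.weibullvariate(scale, alpha) for _ in range(n)]


def profile_score(alpha, xs):
    # Equation (2) after substituting beta = mean(x_i**alpha) from equation (3).
    n = len(xs)
    s0 = sum(x ** alpha for x in xs)
    s1 = sum((x ** alpha) * math.log(x) for x in xs)
    return n / alpha + sum(math.log(x) for x in xs) - n * s1 / s0


def weibull_mle(xs, lo=1e-3, hi=50.0, tol=1e-10):
    # profile_score decreases in alpha, so bisection finds its single root.
    # The bracket [lo, hi] is an assumption suited to moderately scaled data.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if profile_score(mid, xs) > 0:
            lo = mid
        else:
            hi = mid
    alpha_hat = 0.5 * (lo + hi)
    beta_hat = sum(x ** alpha_hat for x in xs) / len(xs)  # equation (3)
    return alpha_hat, beta_hat


rng = random.Random(2009)                      # arbitrary seed
xs = weibull_sample(2000, alpha=3.0, beta=1.0, rng=rng)
alpha_hat, beta_hat = weibull_mle(xs)
print(alpha_hat, beta_hat)
```

Reducing the problem to one dimension avoids a two-parameter Newton iteration; any other root finder could be substituted for the bisection.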

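2.2 Moment Estimation

The k-th moment of the Weibull distribution is

\[
\mu_k = \int_0^{\infty} x^{k} f(x)\,dx = \beta^{k/\alpha}\,\Gamma\!\left(1+\frac{k}{\alpha}\right),
\]

where $\Gamma(t) = \int_0^{\infty} x^{t-1} e^{-x}\,dx$, $t > 0$. Let $\bar{X}$ and $S^2$ be the mean and variance of the random sample, respectively, and let cv denote the coefficient of variation $S/\bar{X}$. Applying the method of moments to the Weibull distribution, we get

\[
\bar{X} = \beta^{1/\alpha}\,\Gamma\!\left(1+\frac{1}{\alpha}\right)
\tag{4}
\]

\[
S^2 = \beta^{2/\alpha}\,\Gamma\!\left(1+\frac{2}{\alpha}\right) - \beta^{2/\alpha}\,\Gamma^{2}\!\left(1+\frac{1}{\alpha}\right)
\tag{5}
\]

This implies

\[
\frac{S}{\bar{X}} = \frac{\sqrt{\Gamma\!\left(1+\frac{2}{\alpha}\right) - \Gamma^{2}\!\left(1+\frac{1}{\alpha}\right)}}{\Gamma\!\left(1+\frac{1}{\alpha}\right)}.
\tag{6}
\]

Equation (6) gives $\hat{\alpha}$ as an estimator of $\alpha$ based on the cv of the sample, and from equation (4) we get

\[
\hat{\beta} = \left(\frac{\bar{X}}{\Gamma\!\left(1+\frac{1}{\hat{\alpha}}\right)}\right)^{\hat{\alpha}}.
\tag{7}
\]

We use numerical methods to solve equations (6) and (7).
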
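A possible way to solve equations (6) and (7) numerically is sketched below in Python. It is an illustration under stated assumptions rather than the procedure actually used in the paper: the helper names, the bracket for the shape parameter, the seed and the sample size are hypothetical. The right-hand side of equation (6) decreases in the shape parameter, so the sample cv can be matched by bisection, after which equation (7) gives the scale estimate.

```python
import math
import random
import statistics


def weibull_cv(alpha):
    # Right-hand side of equation (6), computed via log-gamma for stability:
    # cv**2 = Gamma(1 + 2/alpha) / Gamma(1 + 1/alpha)**2 - 1.
    log_ratio = math.lgamma(1 + 2 / alpha) - 2 * math.lgamma(1 + 1 / alpha)
    return math.sqrt(math.expm1(log_ratio))


def moment_estimates(xs, lo=0.05, hi=50.0, tol=1e-10):
    xbar = statistics.mean(xs)
    cv = statistics.stdev(xs) / xbar          # stdev uses the n - 1 divisor
    # weibull_cv decreases in alpha, so bisection solves equation (6).
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if weibull_cv(mid) > cv:
            lo = mid
        else:
            hi = mid
    alpha_hat = 0.5 * (lo + hi)
    beta_hat = (xbar / math.gamma(1 + 1 / alpha_hat)) ** alpha_hat  # equation (7)
    return alpha_hat, beta_hat


rng = random.Random(42)                        # arbitrary seed
# Shape 3 and beta = 1, so the standard Weibull scale is 1**(1/3) = 1.
xs = [rng.weibullvariate(1.0, 3.0) for _ in range(2000)]
alpha_mom, beta_mom = moment_estimates(xs)
print(alpha_mom, beta_mom)
```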

2.3 Bayes Estimation

Let $X_1, X_2, \ldots, X_n$ be distributed as in equation (1), where $\alpha$ and $\beta$ are now regarded as realizations of random variables. We study Bayes estimators under squared error loss. Let the shape and scale parameters be independent, with prior probability density functions given by the non-informative prior

\[
\pi_1(\alpha) = \frac{1}{\alpha}; \quad \alpha > 0
\tag{8}
\]

and the Inverse Gamma density, IG($\delta$, $\gamma$), given by

\[
\pi_2(\beta) = \frac{\gamma^{\delta}}{\Gamma(\delta)}\,\frac{e^{-\gamma/\beta}}{\beta^{\delta+1}}; \quad \beta > 0
\tag{9}
\]

Let the observed sample values be $\mathbf{x} = (x_1, \ldots, x_n)$. Then the conditional joint p.d.f. of the sample is given by

\[
L(\mathbf{x}\mid\alpha,\beta) = \frac{\alpha^{n}}{\beta^{n}} \prod_{i=1}^{n} x_i^{\alpha-1} \exp\!\left[-\sum_{i=1}^{n} x_i^{\alpha}/\beta\right]; \quad x_i > 0,\ i = 1, \ldots, n.
\]

Using the priors given in (8) and (9), we get the joint posterior p.d.f. of $\alpha$ and $\beta$:

\[
\pi(\alpha,\beta\mid\mathbf{x}) = \frac{\alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1} \exp\!\left[-\frac{1}{\beta}\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)\right]}{K\,\beta^{n+\delta+1}\,\Gamma(n+\delta)};
\tag{10}
\]

where

\[
K = \int_0^{\infty} \frac{\alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1}}{\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)^{n+\delta}}\,d\alpha
\]

and $\alpha, \beta > 0$, $x_i > 0$, $i = 1, \ldots, n$.

The marginal posterior p.d.f. of $\alpha$ is:

\[
\pi_1(\alpha\mid\mathbf{x}) = \frac{\alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1}}{K\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)^{n+\delta}}; \quad \alpha > 0,\ x_i > 0,\ i = 1, \ldots, n
\tag{11}
\]

The marginal posterior p.d.f. of $\beta$ is:

\[
\pi_2(\beta\mid\mathbf{x}) = \frac{1}{K\,\beta^{n+\delta+1}\,\Gamma(n+\delta)} \int_0^{\infty} \alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1} \exp\!\left[-\frac{1}{\beta}\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)\right] d\alpha;
\tag{12}
\]

where $\beta > 0$, $x_i > 0$, $i = 1, \ldots, n$.

Under the squared error loss function, the Bayes estimator of $\alpha$ is:

\[
E[\alpha\mid\mathbf{x}] = \int_0^{\infty} \frac{\alpha^{n} \prod_{i=1}^{n} x_i^{\alpha-1}}{K\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)^{n+\delta}}\,d\alpha
\tag{13}
\]

and the Generalized Bayes estimator of $\beta$ is

\[
E[\beta\mid\mathbf{x}] = \int_0^{\infty}\!\!\int_0^{\infty} \frac{\alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1} \exp\!\left[-\frac{1}{\beta}\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)\right]}{K\,\beta^{n+\delta}\,\Gamma(n+\delta)}\,d\beta\,d\alpha
= \int_0^{\infty} \frac{\alpha^{n-1} \prod_{i=1}^{n} x_i^{\alpha-1}}{(n+\delta-1)\,K\left(\sum_{i=1}^{n} x_i^{\alpha} + \gamma\right)^{n+\delta-1}}\,d\alpha
\tag{14}
\]

The estimates of $\alpha$ and $\beta$ are computed through numerical integration.

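The one-dimensional integrals in (13) and (14) can be approximated on a grid, as in the following Python sketch. It is an assumption-laden illustration, not the authors' implementation: the midpoint rule, the upper integration limit `a_max`, the number of grid points, the seed and the sample size are all choices made here, and the posterior mass of the shape parameter is assumed to lie below `a_max`. Working with the logarithm of the integrand of K avoids overflow for moderate n.

```python
import math
import random


def bayes_estimates(xs, delta, gamma_, a_max=20.0, steps=4000):
    n = len(xs)
    sum_log = sum(math.log(x) for x in xs)
    grid = [a_max * (j + 0.5) / steps for j in range(steps)]  # midpoints
    log_w, totals = [], []
    for a in grid:
        t = sum(x ** a for x in xs) + gamma_   # sum of x_i**alpha plus gamma
        totals.append(t)
        # log of the integrand of K defined below equation (10)
        log_w.append((n - 1) * math.log(a) + (a - 1) * sum_log
                     - (n + delta) * math.log(t))
    top = max(log_w)
    w = [math.exp(v - top) for v in log_w]     # rescaled to avoid overflow
    k = sum(w)                                 # grid width cancels in the ratios
    alpha_bayes = sum(a * wi for a, wi in zip(grid, w)) / k            # eq. (13)
    beta_bayes = sum(t * wi for t, wi in zip(totals, w)) / ((n + delta - 1) * k)  # eq. (14)
    return alpha_bayes, beta_bayes


rng = random.Random(5)                         # arbitrary seed
# Shape 3 and beta = 1, so the standard Weibull scale is 1.
xs = [rng.weibullvariate(1.0, 3.0) for _ in range(200)]
alpha_bayes, beta_bayes = bayes_estimates(xs, delta=3.0, gamma_=2.0)
print(alpha_bayes, beta_bayes)
```

The second form of equation (14) is used for the scale estimate, so only a single integral over the shape parameter is needed.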

3 Estimation Of Parameters Using RSS

Ranked Set Sampling (RSS) was designed by McIntyre (1952) to improve the estimation of the population mean. In many sampling situations where the variable of interest can be ranked more easily than it can be quantified, RSS is highly beneficial and much superior to standard Simple Random Sampling (SRS) for estimating some of the population parameters. In planning an RSS design, we choose a small set size, around 2 to 4, to minimize the error of ranking by visual methods.

The RSS procedure is described as follows:

Step 1. Randomly select $m^2$ sample units, denoted by $X_{ij}$, $i = 1, \ldots, m$ and $j = 1, \ldots, m$, from the population.

Step 2. Allocate the $m^2$ selected units randomly into m sets, each of size m. Denote the result by:

\[
\begin{pmatrix}
x_{11} & x_{21} & \cdots & x_{m1} \\
\vdots & \vdots & \ddots & \vdots \\
x_{1m} & x_{2m} & \cdots & x_{mm}
\end{pmatrix}
\]

Step 3. Without taking any measurements, rank the units within each row based on a criterion chosen by the researcher. The result, which forms one cycle, is presented as:

\[
\begin{pmatrix}
x_{(1)1} & x_{(2)1} & \cdots & x_{(m)1} \\
x_{(1)2} & x_{(2)2} & \cdots & x_{(m)2} \\
\vdots & \vdots & \ddots & \vdots \\
x_{(1)m} & x_{(2)m} & \cdots & x_{(m)m}
\end{pmatrix}
\]

Step 4. Choose a sample by taking the smallest ranked unit from the first row, then the second smallest ranked unit from the second row, and continue in this fashion until the largest ranked unit is selected from the last row. As a result, the ranked set sample associated with this cycle is $(x_{(1)1}, x_{(2)2}, \ldots, x_{(m)m})$. Note that $X_{(i)i}$ is the ith order statistic $X_{(i)}$ of a sample of size m.

Step 5. Repeat Steps 1 through 4 k times until the desired sample size $n = km$ is obtained for analysis. The RSS sample from k cycles, each of size m, is then $\{X_{(i)ic},\ i = 1, \ldots, m,\ c = 1, \ldots, k\}$.

To simplify notation, let $Y_{ic} = X_{(i)ic}$. Then, for fixed c, the $Y_{ic}$, $i = 1, \ldots, m$, are independent, with p.d.f. equal to the p.d.f. of $X_{(i)}$ and given by:

\[
g(y_{ic}) = \frac{m!}{(i-1)!\,(m-i)!}\,[F(y_{ic})]^{i-1} f(y_{ic})\,[1-F(y_{ic})]^{m-i}
\tag{15}
\]

Let $\mathbf{Y}_c = (Y_{1c}, Y_{2c}, \ldots, Y_{mc})$, i.e. the RSS sample of cycle c. Let $\mathbf{Y} = (\mathbf{Y}_1, \mathbf{Y}_2, \ldots, \mathbf{Y}_k)$. Then the conditional joint p.d.f. of $\mathbf{Y}$ given the parameter values $\alpha$ and $\beta$ is

\[
L(\mathbf{y}\mid\alpha,\beta) = \prod_{c=1}^{k}\prod_{i=1}^{m} g(y_{ic}\mid\alpha,\beta).
\]

Our interest is to derive Bayes estimators of the Weibull distribution parameters using RSS. Let $X_{ij}$ be distributed as in (1). Then the conditional p.d.f. of $\mathbf{Y}$ given $\alpha$ and $\beta$ is:

\[
L(\mathbf{y}\mid\alpha,\beta) = \prod_{c=1}^{k}\prod_{i=1}^{m} \frac{m!}{(i-1)!\,(m-i)!}\,\frac{\alpha}{\beta}\,y_{ic}^{\alpha-1}\left[1-e^{-y_{ic}^{\alpha}/\beta}\right]^{i-1} e^{-(m-i+1)\,y_{ic}^{\alpha}/\beta},
\tag{16}
\]

which can be written as

\[
L(\mathbf{y}\mid\alpha,\beta) = \left(\frac{m!\,\alpha}{\beta}\right)^{km} \exp\!\left[-\frac{1}{\beta}\sum_{c=1}^{k}\sum_{i=1}^{m}(m-i+1)\,y_{ic}^{\alpha}\right] \prod_{c=1}^{k}\prod_{i=1}^{m} \frac{y_{ic}^{\alpha-1}\left[1-e^{-y_{ic}^{\alpha}/\beta}\right]^{i-1}}{(i-1)!\,(m-i)!}.
\tag{17}
\]

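Steps 1 to 5 can be mimicked in a simulation, as in the Python sketch below. This is only an illustration: the function names and the `draw` callback are hypothetical, and ranking is done by sorting the actual values, which corresponds to the assumption of perfect ranking (in a field application the ranking in Step 3 is made without measurement).

```python
import random


def rss_cycle(m, draw, rng):
    # Steps 1-2: m sets (rows) of m units each.
    rows = [[draw(rng) for _ in range(m)] for _ in range(m)]
    # Step 3: rank within each row (perfect ranking assumed here).
    ranked = [sorted(row) for row in rows]
    # Step 4: keep the i-th smallest unit of the i-th row.
    return [ranked[i][i] for i in range(m)]


def rss_sample(m, k, draw, rng):
    # Step 5: repeat for k cycles; result[c][i] corresponds to Y_{(i+1)(c+1)}.
    return [rss_cycle(m, draw, rng) for _ in range(k)]


def draw_weibull(rng):
    # Equation (1) with shape 3 and beta = 1, i.e. standard Weibull scale 1.
    return rng.weibullvariate(1.0, 3.0)


rng = random.Random(1952)                      # arbitrary seed
sample = rss_sample(m=3, k=10, draw=draw_weibull, rng=rng)
print(sample[0])                               # the RSS sample of the first cycle
```

Only km of the km² drawn units are retained, which reflects the idea that ranking is cheap while measuring is costly.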

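3.1 Maximum Likelihood Estimation

Using the setup in Section 3, we get the MLEs of the parameters $\alpha$ and $\beta$ by differentiating the logarithm of the likelihood in equation (17) with respect to $\alpha$ and $\beta$ and equating to zero:

\[
\frac{\partial \log(L)}{\partial \alpha} = \frac{km}{\alpha} + \sum_{c=1}^{k}\sum_{i=1}^{m} \log y_{ic}\left[1 - \frac{(m-i+1)\,y_{ic}^{\alpha}}{\beta}\right] + \sum_{c=1}^{k}\sum_{i=1}^{m} \frac{(i-1)\,y_{ic}^{\alpha}\log y_{ic}}{\beta\left(e^{y_{ic}^{\alpha}/\beta} - 1\right)} = 0
\]

\[
\frac{\partial \log(L)}{\partial \beta} = \frac{1}{\beta^{2}}\left[\sum_{c=1}^{k}\sum_{i=1}^{m} (m-i+1)\,y_{ic}^{\alpha} - mk\beta - \sum_{c=1}^{k}\sum_{i=1}^{m} \frac{(i-1)\,y_{ic}^{\alpha}}{e^{y_{ic}^{\alpha}/\beta} - 1}\right] = 0
\]

These equations are solved numerically for $\alpha$ and $\beta$.
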
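One way to sanity-check the two likelihood equations of this subsection is to compare them with finite differences of the log-likelihood from equation (17). The Python sketch below does this on simulated RSS data; the function names, the seed, the choice m = 3 and k = 10, and the evaluation point are assumptions made for illustration, and the constant factorial terms of (17) are dropped because they do not affect the derivatives.

```python
import math
import random


def rss_loglik(y, alpha, beta):
    # log of equation (17) without the constant factorial terms;
    # y[c][i - 1] holds y_ic.
    m = len(y[0])
    total = 0.0
    for cycle in y:
        for i, v in enumerate(cycle, start=1):
            z = v ** alpha / beta
            total += (math.log(alpha / beta) + (alpha - 1) * math.log(v)
                      - (m - i + 1) * z
                      + (i - 1) * math.log(-math.expm1(-z)))
    return total


def rss_scores(y, alpha, beta):
    # The two partial derivatives stated in Section 3.1.
    m, k = len(y[0]), len(y)
    d_alpha, d_beta = k * m / alpha, -k * m / beta
    for cycle in y:
        for i, v in enumerate(cycle, start=1):
            z = v ** alpha / beta
            tail = (i - 1) * z / math.expm1(z)
            d_alpha += math.log(v) * (1 - (m - i + 1) * z + tail)
            d_beta += ((m - i + 1) * z - tail) / beta
    return d_alpha, d_beta


rng = random.Random(7)                         # arbitrary seed
m, k = 3, 10
# y_ic is the i-th smallest value of a fresh set of m draws (perfect ranking).
y = [[sorted(rng.weibullvariate(1.0, 3.0) for _ in range(m))[i]
      for i in range(m)] for _ in range(k)]

h = 1e-6
fd_alpha = (rss_loglik(y, 3.0 + h, 1.0) - rss_loglik(y, 3.0 - h, 1.0)) / (2 * h)
fd_beta = (rss_loglik(y, 3.0, 1.0 + h) - rss_loglik(y, 3.0, 1.0 - h)) / (2 * h)
d_alpha, d_beta = rss_scores(y, 3.0, 1.0)
print(d_alpha - fd_alpha, d_beta - fd_beta)    # both differences should be tiny
```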

3.2 Moment Estimation

Using the setup in Section 3, we get the method of moments estimators by using the moments of $Y_{ic} = X_{(i)ic}$. The lth moment of $Y_{ic}$ is

\[
\mu_l = \int_0^{\infty} \frac{m\alpha}{\beta}\, y_{ic}^{\,l+\alpha-1} e^{-m y_{ic}^{\alpha}/\beta}\,dy_{ic} = \left(\frac{\beta}{m}\right)^{l/\alpha} \Gamma\!\left(1+\frac{l}{\alpha}\right).
\]

Let $\bar{Y}$ and $S_y^2$ be the mean and variance of the sample, given by

\[
\bar{Y} = \frac{\sum_{c=1}^{k}\sum_{i=1}^{m} X_{(i)ic}}{mk}
\quad\text{and}\quad
S_y^2 = \frac{\sum_{c=1}^{k}\sum_{i=1}^{m} \left(X_{(i)ic} - \bar{Y}\right)^2}{mk-1},
\]

and let cv denote the coefficient of variation.

Equating the first and second moments of the population with those of the sample, we get

\[
\bar{Y} = \left(\frac{\beta}{m}\right)^{1/\alpha} \Gamma\!\left(1+\frac{1}{\alpha}\right)
\tag{18}
\]

\[
\frac{S_y}{\bar{Y}} = \frac{\sqrt{\Gamma\!\left(1+\frac{2}{\alpha}\right) - \Gamma^{2}\!\left(1+\frac{1}{\alpha}\right)}}{\Gamma\!\left(1+\frac{1}{\alpha}\right)}.
\tag{19}
\]

Solving equation (19), we get $\tilde{\alpha}$ as an estimator of $\alpha$ based on the cv of the sample, and from equation (18) we get

\[
\tilde{\beta} = m\left(\frac{\bar{Y}}{\Gamma\!\left(1+\frac{1}{\tilde{\alpha}}\right)}\right)^{\tilde{\alpha}}.
\tag{20}
\]

We use numerical methods to solve equations (19) and (20).


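3.3 Bayes Estimation

Using the setup in Section 3 and the priors of $\alpha$ and $\beta$ given by (8) and (9), we get the joint posterior p.d.f. of $\alpha$ and $\beta$ given $\mathbf{Y} = \mathbf{y}$ to be:

\[
\pi(\alpha,\beta\mid\mathbf{y}) = \frac{L(\mathbf{y}\mid\alpha,\beta)\,\dfrac{1}{\alpha}\,\dfrac{\gamma^{\delta}}{\Gamma(\delta)}\,\dfrac{e^{-\gamma/\beta}}{\beta^{\delta+1}}}{\displaystyle\int_0^{\infty}\!\!\int_0^{\infty} L(\mathbf{y}\mid\alpha,\beta)\,\frac{1}{\alpha}\,\frac{\gamma^{\delta}}{\Gamma(\delta)}\,\frac{e^{-\gamma/\beta}}{\beta^{\delta+1}}\,d\alpha\,d\beta}
\]

\[
= \frac{\left(\dfrac{m!\,\alpha}{\beta}\right)^{km} \exp\!\left[-\dfrac{1}{\beta}\displaystyle\sum_{c=1}^{k}\sum_{i=1}^{m}(m-i+1)\,y_{ic}^{\alpha}\right] \displaystyle\prod_{c=1}^{k}\prod_{i=1}^{m} \frac{y_{ic}^{\alpha-1}\left[1-e^{-y_{ic}^{\alpha}/\beta}\right]^{i-1}}{(i-1)!\,(m-i)!}\;\frac{e^{-\gamma/\beta}}{\alpha\,\beta^{\delta+1}}}{\displaystyle\int_0^{\infty}\!\!\int_0^{\infty} L(\mathbf{y}\mid\alpha,\beta)\,\frac{1}{\alpha}\,\frac{e^{-\gamma/\beta}}{\beta^{\delta+1}}\,d\alpha\,d\beta}, \quad \alpha > 0,\ \beta > 0
\tag{21}
\]

The marginal posterior p.d.f. of $\alpha$ is:

\[
\pi_1(\alpha\mid\mathbf{y}) = \int_0^{\infty} \pi(\alpha,\beta\mid\mathbf{y})\,d\beta; \quad \alpha > 0
\tag{22}
\]

The marginal posterior p.d.f. of $\beta$ is:

\[
\pi_2(\beta\mid\mathbf{y}) = \int_0^{\infty} \pi(\alpha,\beta\mid\mathbf{y})\,d\alpha; \quad \beta > 0
\tag{23}
\]

Under the squared error loss function, the Bayes estimator of $\alpha$ is

\[
E[\alpha\mid\mathbf{y}] = \int_0^{\infty} \alpha\,\pi_1(\alpha\mid\mathbf{y})\,d\alpha
\tag{24}
\]

and the Generalized Bayes estimator of $\beta$ is

\[
E[\beta\mid\mathbf{y}] = \int_0^{\infty} \beta\,\pi_2(\beta\mid\mathbf{y})\,d\beta
\tag{25}
\]

The estimates of $\alpha$ and $\beta$ are computed through numerical integration.
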

4 Estimation Of Parameters Using MRSS

As a special case of RSS, the Modified Ranked Set Sampling (MRSS) is used. It is obtained by applying the first three steps of RSS; the sample is then chosen by taking the smallest ranked unit from each of the m rows. The MRSS of one cycle is $(x_{(1)1}, x_{(1)2}, \ldots, x_{(1)m})$, and the MRSS of cycle c is denoted by $(x_{(1)1c}, x_{(1)2c}, \ldots, x_{(1)mc})$.

Next, we repeat the previous steps k times until the desired sample size $n = km$ is obtained.

The MRSS obtained from each cycle consists of independent and identically distributed variables, since $X_{(1)ic}$ is distributed as the first order statistic in a random sample of size m from the original distribution.

The required MRSS is $\{X_{(1)ic},\ i = 1, \ldots, m;\ c = 1, \ldots, k\}$. Let $U_{ic} = X_{(1)ic}$. Then the p.d.f. of $U_{ic}$ is given by

\[
h(u_{ic}) = \frac{m\alpha}{\beta}\,u_{ic}^{\alpha-1} e^{-m u_{ic}^{\alpha}/\beta}, \quad u_{ic} > 0,\ \alpha > 0,\ \beta > 0.
\]

Let $\mathbf{U}_c = (U_{1c}, \ldots, U_{mc})$ and $\mathbf{U} = (\mathbf{U}_1, \ldots, \mathbf{U}_k)$. Then the conditional joint p.d.f. of $\mathbf{U}$ given the parameter values $\alpha$ and $\beta$ is given by:

\[
L(\mathbf{u}\mid\alpha,\beta) = \prod_{c=1}^{k}\prod_{i=1}^{m} \frac{m\alpha}{\beta}\,u_{ic}^{\alpha-1} e^{-m u_{ic}^{\alpha}/\beta}, \quad u_{ic} > 0,\ i = 1, \ldots, m;\ c = 1, \ldots, k
\tag{26}
\]


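4.1 Maximum Likelihood Estimation

To get the MLEs of the parameters $\alpha$ and $\beta$ in the case of MRSS, we differentiate the logarithm of equation (26) with respect to $\alpha$ and $\beta$ and equate to zero:

\[
\frac{\partial \log(L)}{\partial \alpha} = \frac{km}{\alpha} + \sum_{c=1}^{k}\sum_{i=1}^{m} \log u_{ic}\left[1 - \frac{m}{\beta}\,u_{ic}^{\alpha}\right] = 0
\tag{27}
\]

\[
\frac{\partial \log(L)}{\partial \beta} = -\frac{km}{\beta} + \frac{m}{\beta^{2}}\sum_{c=1}^{k}\sum_{i=1}^{m} u_{ic}^{\alpha} = 0
\tag{28}
\]

These equations are solved numerically for $\alpha$ and $\beta$.
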
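Equations (27) and (28) can be solved along the following lines; the Python sketch is illustrative only, with hypothetical function names, bracket, seed and design (m = 4, k = 500). For a fixed shape value, equation (28) gives the scale as m times the mean of the $u_{ic}^{\alpha}$, and substituting this into equation (27) leaves a single equation in the shape parameter, solved here by bisection. Perfect ranking is assumed, so each retained unit is simply the minimum of a fresh set of m draws.

```python
import math
import random


def mrss_sample(m, k, draw, rng):
    # Each retained unit is the smallest of a fresh set of m draws.
    return [min(draw(rng) for _ in range(m)) for _ in range(k * m)]


def mrss_mle(us, m, lo=1e-3, hi=50.0, tol=1e-10):
    n = len(us)                                # n = k * m
    sum_log = sum(math.log(u) for u in us)

    def score(a):
        # Equation (27) with beta = m * mean(u**a) substituted from (28).
        s0 = sum(u ** a for u in us)
        s1 = sum((u ** a) * math.log(u) for u in us)
        return n / a + sum_log - n * s1 / s0

    while hi - lo > tol:                       # score decreases in a
        mid = 0.5 * (lo + hi)
        if score(mid) > 0:
            lo = mid
        else:
            hi = mid
    alpha_hat = 0.5 * (lo + hi)
    beta_hat = m * sum(u ** alpha_hat for u in us) / n   # equation (28)
    return alpha_hat, beta_hat


def draw_weibull(rng):
    # Equation (1) with shape 3 and beta = 1, i.e. standard Weibull scale 1.
    return rng.weibullvariate(1.0, 3.0)


rng = random.Random(28)                        # arbitrary seed
us = mrss_sample(m=4, k=500, draw=draw_weibull, rng=rng)
alpha_mrss, beta_mrss = mrss_mle(us, m=4)
print(alpha_mrss, beta_mrss)
```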

4.2 Moment Estimation

Using the setup in Section 4, we get the method of moments estimators by using the moments of $U_{ic} = X_{(1)ic}$. The lth moment of $U_{ic}$ is

\[
\mu_l = \int_0^{\infty} \frac{m\alpha}{\beta}\,u_{ic}^{\,l+\alpha-1} e^{-m u_{ic}^{\alpha}/\beta}\,du_{ic} = \left(\frac{\beta}{m}\right)^{l/\alpha} \Gamma\!\left(1+\frac{l}{\alpha}\right).
\]

Let

\[
\bar{U} = \frac{\sum_{c=1}^{k}\sum_{i=1}^{m} X_{(1)ic}}{mk}
\quad\text{and}\quad
S_u^2 = \frac{\sum_{c=1}^{k}\sum_{i=1}^{m} \left(X_{(1)ic} - \bar{U}\right)^2}{mk-1}
\]

be the mean and variance of the sample, respectively, and let cv denote the coefficient of variation.

Equating the first and second moments of the population with those of the sample, we get

\[
\bar{U} = \left(\frac{\beta}{m}\right)^{1/\alpha} \Gamma\!\left(1+\frac{1}{\alpha}\right)
\tag{29}
\]

\[
\frac{S_u}{\bar{U}} = \frac{\sqrt{\Gamma\!\left(1+\frac{2}{\alpha}\right) - \Gamma^{2}\!\left(1+\frac{1}{\alpha}\right)}}{\Gamma\!\left(1+\frac{1}{\alpha}\right)}.
\tag{30}
\]

Equation (30) gives $\hat{\alpha}$ as an estimator of $\alpha$ based on the cv of the sample, and from equation (29) we get

\[
\hat{\beta} = m\left(\frac{\bar{U}}{\Gamma\!\left(1+\frac{1}{\hat{\alpha}}\right)}\right)^{\hat{\alpha}}.
\tag{31}
\]

We use numerical methods to solve equations (30) and (31).

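The moment formula for $U_{ic}$ used in this subsection can be checked by simulation. The Python sketch below compares the first two empirical moments of simulated minima with $(\beta/m)^{l/\alpha}\,\Gamma(1+l/\alpha)$; the helper name, the seed, the number of replications and the parameter values are assumptions chosen for illustration.

```python
import math
import random


def min_moment(l, m, alpha, beta):
    # l-th moment of the smallest of m Weibull variables from equation (1).
    return (beta / m) ** (l / alpha) * math.gamma(1 + l / alpha)


rng = random.Random(11)                        # arbitrary seed
m, alpha, beta = 4, 3.0, 1.0
scale = beta ** (1 / alpha)                    # standard Weibull scale
us = [min(rng.weibullvariate(scale, alpha) for _ in range(m))
      for _ in range(20000)]

emp1 = sum(us) / len(us)                       # empirical first moment
emp2 = sum(u * u for u in us) / len(us)        # empirical second moment
print(emp1, min_moment(1, m, alpha, beta))
print(emp2, min_moment(2, m, alpha, beta))
```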

4.3 Bayes Estimation

The Bayes and the Generalized Bayes estimates of $\alpha$ and $\beta$, respectively, in the MRSS case are exactly as in formulas (24) and (25) for RSS, with the exception that equation (26) is used instead of equation (17) in deriving the posterior distributions of $\alpha$ and $\beta$.


5 Simulation Results

For fixed $\delta = 3$ and $\gamma = 2$, a random sample of size 1000 is chosen from Inverse Gamma($\delta$, $\gamma$). Let $\beta$ be the mean of this sample of size 1000 from IG($\delta$, $\gamma$). We choose $\alpha = 3$ and generate data from the Weibull distribution using sample sizes n = 10(10)60, 100, and 120. For each n, a set (m; k) is determined such that $n = mk$. For the chosen set of parameters and each sample size n, 10,000 data sets are simulated and nine estimators of $\alpha$ and $\beta$ are computed.

These estimators are compared through three different criteria, where $\theta$ denotes the parameter being estimated ($\alpha$ or $\beta$):

(i) Bias: computed as $\text{Bias} = \bar{\hat{\theta}} - \theta$, where $\bar{\hat{\theta}}$ is the average of the 10,000 estimates of $\theta$, and $\theta$ is the value used in the simulation.

(ii) Relative Efficiency (RE): computed as

\[
RE(\hat{\theta}_1, \hat{\theta}_2) = \frac{ER(\hat{\theta}_1)}{ER(\hat{\theta}_2)},
\quad\text{where}\quad
ER(\hat{\theta}_j) = \frac{1}{10{,}000}\sum_{i=1}^{10{,}000} \left(\hat{\theta}_{ji} - \theta\right)^2,
\]

$\hat{\theta}_{ji}$ being the estimate of $\theta$ for the ith simulated data set and $j = 1, \ldots, 9$. We say that $\hat{\theta}_1$ is a better estimator than $\hat{\theta}_2$ if RE < 1. In this paper the RE is computed as follows:

\[
RE(\hat{\theta}_j, \hat{\theta}_{MLE(SRS)}) = \frac{ER(\hat{\theta}_j)}{ER(\hat{\theta}_{MLE(SRS)})}, \quad j = 1, \ldots, 8.
\]

(iii) Pitman Nearness (PN) probability: computed as

\[
PN = P\{|\hat{\theta}_1 - \theta| < |\hat{\theta}_2 - \theta|\} = \frac{1}{10{,}000}\,\#\left[\,|\hat{\theta}_{1i} - \theta| < |\hat{\theta}_{2i} - \theta|\,\right],
\]

where $\hat{\theta}_{1i}$ and $\hat{\theta}_{2i}$ are the estimates of $\theta$ for the ith simulated data set for the pair $\hat{\theta}_1$, $\hat{\theta}_2$. We say $\hat{\theta}_1$ is a better estimator than $\hat{\theta}_2$ if PN > 0.5.

Due to the large number of tables of results, only results for two parameter sets are reported, namely $\alpha = 3$, $\delta = 3$, $\gamma = 2$ given $\beta = 0.9871$, and $\alpha = 1$, $\delta = 1$, $\gamma = 0.5$ given $\beta = 3.4036$.

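The three comparison criteria can be computed from arrays of simulated estimates as in the following Python sketch. The function names and the toy numbers are hypothetical and serve only to illustrate the definitions of Bias, RE and PN; they are not simulation results from this paper.

```python
def bias(estimates, true_value):
    # Average of the estimates minus the value used in the simulation.
    return sum(estimates) / len(estimates) - true_value


def er(estimates, true_value):
    # ER: mean squared deviation of the estimates from the true value.
    return sum((e - true_value) ** 2 for e in estimates) / len(estimates)


def relative_efficiency(est1, est2, true_value):
    # RE < 1 favours the first estimator.
    return er(est1, true_value) / er(est2, true_value)


def pitman_nearness(est1, est2, true_value):
    # Share of data sets in which the first estimate is strictly closer.
    closer = sum(1 for a, b in zip(est1, est2)
                 if abs(a - true_value) < abs(b - true_value))
    return closer / len(est1)


# Toy estimates of a parameter whose true value is 3 (made-up numbers).
est1 = [2.9, 3.2, 3.1, 2.8]
est2 = [2.5, 3.4, 3.0, 2.6]
theta = 3.0
re_12 = relative_efficiency(est1, est2, theta)
pn_12 = pitman_nearness(est1, est2, theta)
print(bias(est1, theta), re_12, pn_12)
```

In this toy example the first estimator is closer in three of the four data sets, so PN is 0.75, and RE is below 1, so both criteria favour the first estimator.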

6 Results and Remarks

Various results of the simulation are provided in Tables 1 to 8, which are shown at the end of this section; a summary of the results is provided below.

Shape parameter

In terms of bias, Table 1 shows that $\tilde{\alpha}_{Bayes(RSS)}$ has the smallest bias compared to $\hat{\alpha}_{Bayes(MRSS)}$ and $\hat{\alpha}_{Bayes(SRS)}$, while $\hat{\alpha}_{Bayes(MRSS)}$ has a bias that is slightly higher than that of $\hat{\alpha}_{Bayes(SRS)}$.

Moreover, the biases of MLE and Mom based on MRSS are equivalent to those of MLE and Mom based on SRS.

Table 2 shows that $\tilde{\alpha}_{Bayes(RSS)}$ is the most efficient compared to the other estimators. On the other hand, when n is small $\hat{\alpha}_{Bayes(MRSS)}$ is more efficient than $\hat{\alpha}_{MLE(SRS)}$, whereas when n is moderate to large $\hat{\alpha}_{Bayes(MRSS)}$ and $\hat{\alpha}_{MLE(SRS)}$ are comparable.

In terms of PN values, Tables 3 & 4 show that the Bayes estimators are better than both the MLE and Mom estimators under the same sampling method, i.e.

$\hat{\alpha}_{Bayes(SRS)}$ is better than both $\hat{\alpha}_{MLE(SRS)}$ and $\hat{\alpha}_{Mom(SRS)}$,
$\tilde{\alpha}_{Bayes(RSS)}$ is better than both $\tilde{\alpha}_{MLE(RSS)}$ and $\tilde{\alpha}_{Mom(RSS)}$,
$\hat{\alpha}_{Bayes(MRSS)}$ is better than both $\hat{\alpha}_{MLE(MRSS)}$ and $\hat{\alpha}_{Mom(MRSS)}$.

The Bayes estimator under RSS is the best estimator in terms of PN probability.

The estimators based on MRSS outperform those based on SRS when n is small, whereas the estimators based on SRS are slightly better than those based on MRSS when n is large (n > 30); see Tables 3 & 4.

$\hat{\alpha}_{MLE(MRSS)}$ and $\hat{\alpha}_{MLE(SRS)}$ are equivalent when n is large ($n \geq 40$), and when n is small $\hat{\alpha}_{MLE(MRSS)}$ outperforms $\hat{\alpha}_{MLE(SRS)}$.

Scale parameter

Table 5 shows that $\tilde{\beta}_{GBayes(RSS)}$ has the smallest bias, while $\hat{\beta}_{GBayes(MRSS)}$ has a bias that is relatively small and comparable with the bias of the estimators based on SRS.

In general, $\tilde{\beta}_{GBayes(RSS)}$ has the smallest RE values, which means that it is the most efficient compared to the other estimators; see Table 6. We also notice that for small sample sizes the estimators under MRSS are more efficient than $\hat{\beta}_{MLE(SRS)}$; moreover, they are equivalent for large sample sizes.

Under the SRS scheme, the GBayes and Mom estimators are more efficient than the MLE estimator (RE < 1).

From Table 7 we can clearly see that the PN probabilities of the estimators based on SRS relative to the estimators based on RSS are around 0.4, which indicates the poor performance of the estimators based on SRS. Moreover, $\tilde{\beta}_{GBayes(RSS)}$ outperforms both $\tilde{\beta}_{MLE(RSS)}$ and $\tilde{\beta}_{Mom(RSS)}$ (PN < 0.5); see Table 8.

Table 8 shows that the PN probabilities of $\tilde{\beta}_{GBayes(RSS)}$ relative to the estimators based on MRSS are all around 0.5, which indicates that although the estimators based on MRSS do not outperform the estimators based on RSS, they are comparable. In addition, for small sample sizes $\hat{\beta}_{MLE(MRSS)}$, $\hat{\beta}_{Mom(MRSS)}$ and $\hat{\beta}_{GBayes(MRSS)}$ outperform the estimators based on SRS.

In this paper we have used three estimation methods (MLE, Mom and Bayes) based on three sampling procedures (SRS, RSS, and MRSS). We studied the performance of these estimators based on three criteria: bias, RE and PN probability. One could suggest using any of the MLE, Mom or Bayes estimators based on the RSS procedure as long as there are no ranking errors caused by a large set size m. However, since MRSS has the advantage over RSS of reducing ranking errors in the case of a large set size m, as well as reducing cost, we recommend using the estimators based on MRSS.


Table 1: Bias comparison between estimators of $\alpha$ when $\delta = 3$, $\gamma = 2$, $\beta = 0.9871$ and $\alpha = 3$

| (m; k) | SRS $\hat{\alpha}_{MLE}$ | SRS $\hat{\alpha}_{Mom}$ | SRS $\hat{\alpha}_{Bayes}$ | RSS $\tilde{\alpha}_{MLE}$ | RSS $\tilde{\alpha}_{Mom}$ | RSS $\tilde{\alpha}_{Bayes}$ | MRSS $\hat{\alpha}_{MLE}$ | MRSS $\hat{\alpha}_{Mom}$ | MRSS $\hat{\alpha}_{Bayes}$ |
|---|---|---|---|---|---|---|---|---|---|
| (2; 5) | 0.5222 | 0.2957 | 0.3566 | 0.4071 | 0.1950 | 0.3010 | 0.4862 | 0.2624 | 0.3935 |
| (2; 10) | 0.2210 | 0.1158 | 0.1560 | 0.2010 | 0.0948 | 0.1547 | 0.2378 | 0.1332 | 0.2241 |
| (3; 10) | 0.1401 | 0.0715 | 0.0993 | 0.1151 | 0.0484 | 0.0922 | 0.1583 | 0.0887 | 0.1678 |
| (4; 10) | 0.1048 | 0.0521 | 0.0749 | 0.0759 | 0.0236 | 0.0620 | 0.1054 | 0.0541 | 0.1219 |
| (4; 15) | 0.0743 | 0.0401 | 0.0545 | 0.0439 | 0.0099 | 0.0348 | 0.0618 | 0.0371 | 0.0763 |
| (4; 25) | 0.0366 | 0.0151 | 0.0250 | 0.0297 | 0.0097 | 0.0241 | 0.0405 | 0.0216 | 0.0505 |
| (4; 30) | 0.0308 | 0.0139 | 0.0150 | 0.0195 | 0.0031 | 0.0113 | 0.0349 | 0.0169 | 0.0435 |


Table 2: RE comparison between estimators of $\alpha$ with respect to $\hat{\alpha}_{MLE(SRS)}$ when $\delta = 3$, $\gamma = 2$, $\beta = 0.9871$ and $\alpha = 3$

| (m; k) | SRS $\hat{\alpha}_{MLE}$ | SRS $\hat{\alpha}_{Mom}$ | SRS $\hat{\alpha}_{Bayes}$ | RSS $\tilde{\alpha}_{MLE}$ | RSS $\tilde{\alpha}_{Mom}$ | RSS $\tilde{\alpha}_{Bayes}$ | MRSS $\hat{\alpha}_{MLE}$ | MRSS $\hat{\alpha}_{Mom}$ | MRSS $\hat{\alpha}_{Bayes}$ |
|---|---|---|---|---|---|---|---|---|---|
| (2; 5) | 1.0000 | 0.7914 | 0.7466 | 0.6118 | 0.6461 | 0.5940 | 0.9440 | 0.7589 | 0.7088 |
| (2; 10) | 1.0000 | 0.8748 | 0.8765 | 0.6257 | 0.6643 | 0.6029 | 0.9459 | 0.8917 | 0.9034 |
| (3; 10) | 1.0000 | 0.9181 | 0.9129 | 0.6484 | 0.7068 | 0.6144 | 0.9467 | 0.8922 | 0.9357 |
| (4; 10) | 1.0000 | 0.9412 | 0.9339 | 0.6898 | 0.7450 | 0.6426 | 1.0176 | 0.9198 | 0.9655 |
| (4; 15) | 1.0000 | 0.9759 | 0.9521 | 0.7170 | 0.7647 | 0.6816 | 1.0310 | 0.9831 | 1.0331 |
| (4; 25) | 1.0000 | 0.9859 | 0.9727 | 0.7259 | 0.7749 | 0.6840 | 1.0441 | 1.0261 | 1.0369 |
| (4; 30) | 1.0000 | 0.9979 | 0.9774 | 0.8495 | 0.8318 | 0.7743 | 1.0773 | 1.0403 | 1.0456 |


Table 3: PN comparison between different estimators of $\alpha$ when $\delta = 3$, $\gamma = 2$, $\beta = 0.9871$ and $\alpha = 3$. Columns are indexed by (cycle size; number of cycles).

| PN probability of | (2; 5) | (4; 5) | (3; 10) | (4; 10) | (4; 15) | (4; 25) | (4; 30) |
|---|---|---|---|---|---|---|---|
| $\hat{\alpha}_{MLE(SRS)}$ vs $\hat{\alpha}_{Mom(SRS)}$ | 0.4758 | 0.5138 | 0.5210 | 0.5336 | 0.5337 | 0.5394 | 0.5396 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\hat{\alpha}_{Bayes(SRS)}$ | 0.3748 | 0.4156 | 0.4314 | 0.4524 | 0.4592 | 0.4686 | 0.4732 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\tilde{\alpha}_{MLE(RSS)}$ | 0.4260 | 0.4368 | 0.4409 | 0.4426 | 0.4430 | 0.4429 | 0.4433 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\tilde{\alpha}_{Mom(RSS)}$ | 0.4460 | 0.4466 | 0.4498 | 0.4552 | 0.4616 | 0.4572 | 0.4640 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\tilde{\alpha}_{Bayes(RSS)}$ | 0.4328 | 0.4380 | 0.4440 | 0.4450 | 0.4482 | 0.4484 | 0.4488 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\hat{\alpha}_{MLE(MRSS)}$ | 0.4902 | 0.4920 | 0.4950 | 0.5060 | 0.5104 | 0.5120 | 0.5162 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\hat{\alpha}_{Mom(MRSS)}$ | 0.4802 | 0.4836 | 0.498 | 0.5026 | 0.5030 | 0.5095 | 0.5122 |
| $\hat{\alpha}_{MLE(SRS)}$ vs $\hat{\alpha}_{Bayes(MRSS)}$ | 0.4758 | 0.4890 | 0.4922 | 0.4992 | 0.5034 | 0.5088 | 0.5126 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\hat{\alpha}_{Bayes(SRS)}$ | 0.4144 | 0.4302 | 0.4360 | 0.4392 | 0.4544 | 0.4564 | 0.4630 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\tilde{\alpha}_{MLE(RSS)}$ | 0.4256 | 0.4414 | 0.4422 | 0.4456 | 0.4468 | 0.4514 | 0.4916 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\tilde{\alpha}_{Mom(RSS)}$ | 0.4526 | 0.4570 | 0.4590 | 0.4592 | 0.4626 | 0.4708 | 0.4812 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\tilde{\alpha}_{Bayes(RSS)}$ | 0.4250 | 0.4390 | 0.4394 | 0.4424 | 0.4566 | 0.4686 | 0.4720 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\hat{\alpha}_{MLE(MRSS)}$ | 0.4852 | 0.4906 | 0.5006 | 0.5076 | 0.5162 | 0.5164 | 0.5206 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\hat{\alpha}_{Mom(MRSS)}$ | 0.4856 | 0.4948 | 0.4988 | 0.5044 | 0.5070 | 0.5106 | 0.5112 |
| $\hat{\alpha}_{Mom(SRS)}$ vs $\hat{\alpha}_{Bayes(MRSS)}$ | 0.4842 | 0.4908 | 0.4980 | 0.4984 | 0.5086 | 0.5098 | 0.5162 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\tilde{\alpha}_{MLE(RSS)}$ | 0.4312 | 0.4460 | 0.4464 | 0.4466 | 0.4496 | 0.4586 | 0.4938 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\tilde{\alpha}_{Mom(RSS)}$ | 0.4556 | 0.4562 | 0.4566 | 0.4672 | 0.4690 | 0.4740 | 0.4822 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\tilde{\alpha}_{Bayes(RSS)}$ | 0.4292 | 0.4426 | 0.4428 | 0.4446 | 0.4478 | 0.4532 | 0.4740 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\hat{\alpha}_{MLE(MRSS)}$ | 0.4938 | 0.4950 | 0.5112 | 0.5120 | 0.5226 | 0.5228 | 0.5312 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\hat{\alpha}_{Mom(MRSS)}$ | 0.4904 | 0.4982 | 0.5054 | 0.5098 | 0.5108 | 0.5164 | 0.5198 |
| $\hat{\alpha}_{Bayes(SRS)}$ vs $\hat{\alpha}_{Bayes(MRSS)}$ | 0.4930 | 0.4940 | 0.5082 | 0.5098 | 0.5106 | 0.5154 | 0.5206 |


Table 4: PN comparison between different estimators of when = 3, = 2, = 0.9871 and = 3

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(RSS) vs Mom(RSS)          0.5180   0.5410   0.5448   0.5502   0.5550   0.5662   0.5716
MLE(RSS) vs Bayes(RSS)        0.3876   0.4222   0.4302   0.4478   0.4634   0.4650   0.4810
MLE(RSS) vs MLE(MRSS)         0.5390   0.5598   0.5664   0.5710   0.5704   0.5810   0.5842
MLE(RSS) vs Mom(MRSS)         0.5362   0.5610   0.5656   0.5752   0.5756   0.5760   0.5824
MLE(RSS) vs Bayes(MRSS)       0.5190   0.5524   0.5636   0.5666   0.5674   0.5704   0.5778
Mom(RSS) vs Bayes(RSS)        0.4232   0.4326   0.4366   0.4384   0.4424   0.4440   0.4482
Mom(RSS) vs MLE(MRSS)         0.5302   0.5376   0.5386   0.5404   0.5506   0.5510   0.5682
Mom(RSS) vs Mom(MRSS)         0.5206   0.5326   0.5414   0.5418   0.5442   0.5552   0.5574
Mom(RSS) vs Bayes(MRSS)       0.5068   0.5363   0.5364   0.5374   0.5506   0.5518   0.5546
Bayes(RSS) vs MLE(MRSS)       0.5532   0.5582   0.5708   0.5716   0.5716   0.5820   0.5898
Bayes(RSS) vs Mom(MRSS)       0.5518   0.5560   0.5576   0.5616   0.5634   0.5674   0.5768
Bayes(RSS) vs Bayes(MRSS)     0.5380   0.5580   0.5674   0.5696   0.5736   0.5764   0.5820
MLE(MRSS) vs Mom(MRSS)        0.4318   0.4490   0.4494   0.4596   0.4614   0.4624   0.4698
MLE(MRSS) vs Bayes(MRSS)      0.3954   0.4034   0.4696   0.4682   0.4850   0.5268   0.5342
Mom(MRSS) vs Bayes(MRSS)      0.4506   0.4636   0.4777   0.4834   0.4819   0.4900   0.5003
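
The PN entries above are Pitman nearness probabilities obtained by simulation. As a minimal sketch, assuming the usual definition PN = P(|first estimate - true value| < |second estimate - true value|) and assuming paired replicate estimates are already available, such a probability could be approximated as follows. The function name `pitman_nearness` and the toy numbers are hypothetical illustrations, not the authors' code or results.

```python
def pitman_nearness(est_a, est_b, true_value):
    """Monte Carlo estimate of P(|est_a - theta| < |est_b - theta|).

    est_a, est_b: estimates of the same parameter from paired simulation
    replicates (hypothetical inputs); true_value: the parameter value
    used to generate the simulated samples.
    """
    if len(est_a) != len(est_b):
        raise ValueError("est_a and est_b must have the same length")
    # Count replicates in which the first estimator is strictly closer.
    closer = sum(
        abs(a - true_value) < abs(b - true_value)
        for a, b in zip(est_a, est_b)
    )
    return closer / len(est_a)


# Toy replicate values, made up purely for illustration.
a = [2.9, 3.2, 3.05, 2.5]
b = [3.3, 3.1, 2.80, 2.9]
pn = pitman_nearness(a, b, true_value=3.0)
print(pn)
```

Under this criterion a value above 0.5 favors the first estimator of the pair and a value below 0.5 favors the second.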


Table 5: Bias comparison between estimators of when = 3, = 2, = 0.9871, and = 3

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     GBayes    MLE     Mom     GBayes    MLE     Mom     GBayes
(2; 5)    0.0420  0.0416  0.0249    0.0264  0.0252  0.0244    0.0459  0.0464  0.0352
(2; 10)   0.0242  0.0237  0.0243    0.0251  0.0247  0.0230    0.0455  0.0459  0.0329
(3; 10)   0.0217  0.0209  0.0240    0.0249  0.0242  0.0202    0.0453  0.0435  0.0314
(4; 10)   0.0180  0.0167  0.0218    0.0244  0.0223  0.0153    0.0449  0.0395  0.0286
(4; 15)   0.0166  0.0155  0.0192    0.0221  0.0193  0.0146    0.0444  0.0363  0.0284
(4; 25)   0.0139  0.0119  0.0104    0.0139  0.0108  0.0102    0.0372  0.0352  0.0281
(4; 30)   0.0023  0.0094  0.0017    0.0128  0.0099  0.0012    0.0371  0.0346  0.0280


Table 6: RE comparison between estimators of with respect to MLE(SRS) when = 3, = 2, = 0.9871, and = 3

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     GBayes    MLE     Mom     GBayes    MLE     Mom     GBayes
(2; 5)    1.0000  0.5720  0.8878    0.5033  0.7821  0.6441    0.6809  0.6569  0.6706
(2; 10)   1.0000  0.7694  0.9384    0.7555  0.8205  0.7052    0.8894  0.8525  0.8242
(3; 10)   1.0000  0.8462  0.9519    0.8368  0.8849  0.8846    0.9286  0.9417  0.9317
(4; 10)   1.0000  0.8760  0.9587    0.8771  0.8999  0.9008    1.0231  0.9429  0.9897
(4; 15)   1.0000  0.9036  0.9608    0.9156  0.9159  0.9156    1.0149  0.9994  1.0054
(4; 25)   1.0000  0.9412  0.9638    0.9478  0.9431  0.9421    1.0120  1.1093  1.0141
(4; 30)   1.0000  0.9535  0.9767    0.9555  0.9559  0.9539    1.0129  1.1157  1.0149
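
The bias and RE tables summarize simulated estimates. A minimal sketch of how such summaries could be computed from replicate estimates is given below. It assumes that bias is the mean deviation from the true parameter value and that RE is the ratio of the reference estimator's mean squared error to the candidate's; these definitions, the direction of the ratio, the function names, and the toy numbers are all assumptions made for illustration rather than the authors' stated procedure.

```python
def bias(estimates, true_value):
    """Average signed deviation of the replicate estimates from the truth."""
    return sum(e - true_value for e in estimates) / len(estimates)


def mse(estimates, true_value):
    """Mean squared error of the replicate estimates."""
    return sum((e - true_value) ** 2 for e in estimates) / len(estimates)


def relative_efficiency(reference, candidate, true_value):
    """MSE(reference) / MSE(candidate); the ratio direction is an assumption."""
    return mse(reference, true_value) / mse(candidate, true_value)


# Toy replicate values, made up purely for illustration.
ref = [1.0, 3.0, 2.0, 2.0]
cand = [1.5, 2.5, 2.0, 2.0]
print(bias(cand, 2.0))
print(relative_efficiency(ref, cand, 2.0))
print(relative_efficiency(ref, ref, 2.0))
```

With this convention the reference estimator always has RE equal to 1 against itself, which is consistent with the MLE(SRS) column of 1.0000 in the RE table.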


Table 7: PN comparison between different estimators of when = 3, = 2, = 0.9871, and = 3

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(SRS) vs Mom(SRS)          0.1904   0.2802   0.3382   0.3658   0.3940   0.4398   0.4522
MLE(SRS) vs GBayes(SRS)       0.0478   0.0644   0.0836   0.0872   0.1054   0.1448   0.1494
MLE(SRS) vs MLE(RSS)          0.359    0.3656   0.3722   0.3736   0.3828   0.3920   0.431
MLE(SRS) vs Mom(RSS)          0.3494   0.3662   0.3720   0.3748   0.3816   0.3892   0.4158
MLE(SRS) vs GBayes(RSS)       0.3416   0.3576   0.3656   0.3700   0.3768   0.3790   0.3800
MLE(SRS) vs MLE(MRSS)         0.4944   0.5402   0.5498   0.5612   0.5620   0.5670   0.5678
MLE(SRS) vs Mom(MRSS)         0.4770   0.5278   0.5426   0.5566   0.5576   0.5661   0.5662
MLE(SRS) vs GBayes(MRSS)      0.4190   0.497    0.5126   0.5356   0.551    0.5524   0.5590
Mom(SRS) vs GBayes(SRS)       0.0696   0.1216   0.162    0.2028   0.2744   0.3444   0.3466
Mom(SRS) vs MLE(RSS)          0.3688   0.3748   0.3776   0.3778   0.3834   0.3990   0.4480
Mom(SRS) vs Mom(RSS)          0.3590   0.3738   0.3766   0.3768   0.3834   0.3950   0.4306
Mom(SRS) vs GBayes(RSS)       0.3516   0.3648   0.3686   0.3738   0.3790   0.3832   0.3968
Mom(SRS) vs MLE(MRSS)         0.4859   0.5488   0.5592   0.5644   0.5684   0.5696   0.5706
Mom(SRS) vs Mom(MRSS)         0.4999   0.5308   0.5520   0.5592   0.564    0.5676   0.5700
Mom(SRS) vs GBayes(MRSS)      0.4394   0.5068   0.5222   0.5434   0.5532   0.5554   0.5584
GBayes(SRS) vs MLE(RSS)       0.3828   0.3864   0.3880   0.3902   0.3960   0.4182   0.5058
GBayes(SRS) vs Mom(RSS)       0.3830   0.3856   0.3886   0.3892   0.3898   0.4160   0.4810
GBayes(SRS) vs GBayes(RSS)    0.3766   0.3784   0.3790   0.3800   0.3864   0.4036   0.4516
GBayes(SRS) vs MLE(MRSS)      0.5686   0.5704   0.5712   0.5736   0.5778   0.5864   0.5888
GBayes(SRS) vs Mom(MRSS)      0.5516   0.5554   0.5696   0.5732   0.5764   0.5768   0.5770
GBayes(SRS) vs GBayes(MRSS)   0.4978   0.5360   0.5410   0.5566   0.5586   0.5658   0.5668


Table 8: PN comparison between different estimators of when = 3, = 2, = 0.9871, and = 3

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(RSS) vs Mom(RSS)          0.3254   0.4548   0.4656   0.4912   0.4934   0.4934   0.5034
MLE(RSS) vs GBayes(RSS)       0.0666   0.1428   0.1480   0.184    0.2056   0.2364   0.2462
MLE(RSS) vs MLE(MRSS)         0.5756   0.6584   0.6924   0.6926   0.6976   0.7004   0.7038
MLE(RSS) vs Mom(MRSS)         0.5502   0.6496   0.6876   0.6894   0.6900   0.6946   0.6970
MLE(RSS) vs GBayes(MRSS)      0.4806   0.6298   0.6514   0.6780   0.6842   0.6850   0.6908
Mom(RSS) vs GBayes(RSS)       0.2600   0.4472   0.4480   0.4490   0.4688   0.4740   0.4802
Mom(RSS) vs MLE(MRSS)         0.594    0.6642   0.6912   0.6870   0.6960   0.6978   0.7010
Mom(RSS) vs Mom(MRSS)         0.5740   0.6510   0.684    0.6846   0.6884   0.6894   0.6896
Mom(RSS) vs GBayes(MRSS)      0.5028   0.6326   0.6552   0.6724   0.6810   0.6814   0.6842
GBayes(RSS) vs MLE(MRSS)      0.5647   0.5684   0.5692   0.5707   0.5739   0.5811   0.5933
GBayes(RSS) vs Mom(MRSS)      0.5198   0.5260   0.5328   0.5490   0.5510   0.5520   0.5740
GBayes(RSS) vs GBayes(MRSS)   0.5480   0.5568   0.5684   0.5864   0.5878   0.5892   0.5974
MLE(MRSS) vs Mom(MRSS)        0.4990   0.5020   0.5040   0.5088   0.5130   0.5358   0.5360
MLE(MRSS) vs GBayes(MRSS)     0.4722   0.4874   0.4942   0.5168   0.5260   0.5276   0.5536
Mom(MRSS) vs GBayes(MRSS)     0.5028   0.6326   0.6552   0.6724   0.6810   0.6814   0.6842


Table 9: Bias comparison between estimators of when = 1, = 0.5, = 3.40364 and = 1

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     Bayes     MLE     Mom     Bayes     MLE     Mom     Bayes
(2; 5)    0.1680  0.1662  0.0419    0.1388  0.1525  0.0328    0.1581  0.1604  0.07634
(2; 10)   0.0774  0.0878  0.0215    0.0631  0.0824  0.0177    0.0756  0.0878  0.0398
(3; 10)   0.0471  0.0589  0.0111    0.0390  0.0556  0.0109    0.0496  0.0620  0.0343
(4; 10)   0.0372  0.0473  0.0105    0.0246  0.0381  0.0088    0.0403  0.0501  0.0329
(4; 15)   0.0255  0.0346  0.0080    0.0130  0.0232  0.0026    0.0238  0.0357  0.0190
(4; 25)   0.0120  0.0187  0.0027    0.0092  0.0186  0.0020    0.0138  0.0194  0.0106
(4; 30)   0.0119  0.0168  0.0020    0.0077  0.0133  0.0017    0.0128  0.0169  0.0102


Table 10: RE comparison between estimators of with respect to MLE(SRS) when = 1, = 0.5, = 3.40364 and = 1

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     Bayes     MLE     Mom     Bayes     MLE     Mom     Bayes
(2; 5)    1.0000  0.8621  0.5025    0.5996  0.7921  0.3823    0.9273  0.8181  0.5989
(2; 10)   1.0000  0.8724  0.7054    0.6164  0.8118  0.6067    0.9579  0.8818  0.8185
(3; 10)   1.0000  0.8904  0.7953    0.6370  0.8513  0.6128    0.9859  0.9434  0.9517
(4; 10)   1.0000  0.8999  0.8366    0.6746  0.8620  0.5737    1.0158  1.0328  0.9664
(4; 15)   1.0000  0.9432  0.8852    0.7243  0.8811  0.6095    1.0298  1.0333  0.9830
(4; 25)   1.0000  0.9636  0.9363    0.7670  0.9033  0.6310    1.0379  1.0464  0.9881
(4; 30)   1.0000  0.9916  0.9369    0.8016  0.9438  0.7656    1.0451  1.0533  1.0135


Table 11: PN comparison between different estimators of when = 1, = 0.5, = 3.40364 and = 1

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(SRS) vs Mom(SRS)          0.4326   0.5376   0.5740   0.5794   0.5864   0.5974   0.6072
MLE(SRS) vs Bayes(SRS)        0.4202   0.4384   0.4602   0.4650   0.4682   0.4806   0.4848
MLE(SRS) vs MLE(RSS)          0.4272   0.4282   0.4328   0.4382   0.4418   0.4680   0.4734
MLE(SRS) vs Mom(RSS)          0.4800   0.4868   0.5168   0.5202   0.5222   0.5320   0.5486
MLE(SRS) vs Bayes(RSS)        0.3952   0.4198   0.4206   0.4248   0.4276   0.4314   0.4488
MLE(SRS) vs MLE(MRSS)         0.4918   0.4924   0.4966   0.5004   0.5008   0.5074   0.5090
MLE(SRS) vs Mom(MRSS)         0.4916   0.5204   0.5440   0.5524   0.5544   0.5678   0.5724
MLE(SRS) vs Bayes(MRSS)       0.4568   0.4822   0.4934   0.4974   0.5008   0.5016   0.5044
Mom(SRS) vs Bayes(SRS)        0.3670   0.3744   0.3758   0.3762   0.3794   0.3926   0.4009
Mom(SRS) vs MLE(RSS)          0.3764   0.3806   0.3848   0.3888   0.4036   0.4216   0.4816
Mom(SRS) vs Mom(RSS)          0.4558   0.4676   0.4704   0.4726   0.4744   0.4790   0.4914
Mom(SRS) vs Bayes(RSS)        0.3692   0.3704   0.3720   0.3794   0.3826   0.4062   0.4268
Mom(SRS) vs MLE(MRSS)         0.4372   0.4440   0.4470   0.4646   0.4650   0.4660   0.4958
Mom(SRS) vs Mom(MRSS)         0.4842   0.4998   0.5022   0.5022   0.5052   0.5084   0.5112
Mom(SRS) vs Bayes(MRSS)       0.4352   0.4444   0.4462   0.4554   0.4572   0.4582   0.4660
Bayes(SRS) vs MLE(RSS)        0.4410   0.4468   0.4506   0.4608   0.4798   0.4898   0.5380
Bayes(SRS) vs Mom(RSS)        0.5166   0.5260   0.5412   0.5430   0.5440   0.5530   0.5539
Bayes(SRS) vs Bayes(RSS)      0.4334   0.4356   0.4370   0.4410   0.4706   0.4800   0.4991
Bayes(SRS) vs MLE(MRSS)       0.4954   0.4990   0.5100   0.5242   0.5252   0.5458   0.5540
Bayes(SRS) vs Mom(MRSS)       0.4950   0.4973   0.5514   0.5610   0.5692   0.5794   0.5799
Bayes(SRS) vs Bayes(MRSS)     0.4965   0.4981   0.5064   0.5104   0.5218   0.5188   0.5219


Table 12: PN comparison between different estimators of when = 1, = 0.5, = 3.40364 and = 1

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(RSS) vs Mom(RSS)          0.4904   0.5802   0.5866   0.6220   0.6292   0.6394   0.6542
MLE(RSS) vs Bayes(RSS)        0.4344   0.4572   0.4622   0.4672   0.4884   0.4944   0.4950
MLE(RSS) vs MLE(MRSS)         0.5230   0.5542   0.5650   0.5696   0.5760   0.5756   0.5848
MLE(RSS) vs Mom(MRSS)         0.5210   0.5888   0.5974   0.6298   0.6322   0.6324   0.6430
MLE(RSS) vs Bayes(MRSS)       0.4920   0.5486   0.5642   0.5654   0.5698   0.5722   0.5834
Mom(RSS) vs Bayes(RSS)        0.3378   0.3578   0.3618   0.3660   0.3832   0.3876   0.3878
Mom(RSS) vs MLE(MRSS)         0.5420   0.5476   0.5487   0.5482   0.5494   0.5516   0.5523
Mom(RSS) vs Mom(MRSS)         0.5088   0.5230   0.5296   0.5308   0.5326   0.5384   0.5452
Mom(RSS) vs Bayes(MRSS)       0.5042   0.5428   0.5477   0.5482   0.5483   0.5484   0.5504
Bayes(RSS) vs MLE(MRSS)       0.5654   0.5762   0.5776   0.5816   0.5852   0.5878   0.5908
Bayes(RSS) vs Mom(MRSS)       0.5612   0.5612   0.5617   0.5634   0.5638   0.5642   0.5662
Bayes(RSS) vs Bayes(MRSS)     0.5382   0.5634   0.5742   0.5762   0.5816   0.5828   0.5864
MLE(MRSS) vs Mom(MRSS)        0.4766   0.4840   0.5076   0.5124   0.5178   0.5254   0.5480
MLE(MRSS) vs Bayes(MRSS)      0.4074   0.4240   0.4316   0.4378   0.4514   0.4594   0.4626
Mom(MRSS) vs Bayes(MRSS)      0.4502   0.4728   0.4778   0.4814   0.4818   0.4830   0.5042


Table 13: Bias comparison between estimators of when = 1, = 0.5, = 3.40364, and = 1

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     GBayes    MLE     Mom     GBayes    MLE     Mom     GBayes
(2; 5)    0.0707  0.0806  0.0525    0.0516  0.0603  0.0342    0.0495  0.041   0.0472
(2; 10)   0.0639  0.0708  0.04741   0.0472  0.0498  0.0291    0.0471  0.0342  0.0403
(3; 10)   0.0382  0.0476  0.0295    0.0468  0.0366  0.0264    0.0426  0.0296  0.0384
(4; 10)   0.0299  0.0374  0.0239    0.0458  0.0251  0.0222    0.0409  0.0254  0.0349
(4; 15)   0.0192  0.0259  0.0207    0.0321  0.0207  0.0156    0.0390  0.0234  0.0271
(4; 25)   0.0088  0.0133  0.0146    0.0264  0.0125  0.0125    0.0323  0.0229  0.0211
(4; 30)   0.0086  0.0040  0.0056    0.0157  0.0059  0.0019    0.0233  0.0117  0.0114


Table 14: RE comparison between estimators of with respect to MLE(SRS) when = 1, = 0.5, = 3.40364, and = 1

          SRS                       RSS                       MRSS
(m; k)    MLE     Mom     GBayes    MLE     Mom     GBayes    MLE     Mom     GBayes
(2; 5)    1.0000  0.6901  0.6779    0.8878  0.8126  0.6267    0.7046  0.6191  0.4491
(2; 10)   1.0000  0.7961  0.8188    0.9384  0.8886  0.7311    0.7767  0.8962  0.7542
(3; 10)   1.0000  0.8337  0.8345    0.9519  0.9319  0.8120    1.0294  0.9716  0.9888
(4; 10)   1.0000  0.8642  0.8779    0.9587  0.9278  0.8341    1.0296  0.9883  1.0029
(4; 15)   1.0000  0.9520  0.9205    0.9608  0.9625  0.8370    1.0347  0.9891  1.0231
(4; 25)   1.0000  0.9549  0.9565    0.9638  0.9866  0.8530    1.0352  1.0290  1.0333
(4; 30)   1.0000  0.9736  0.9663    0.9767  0.9892  0.8676    1.0362  1.0314  1.0363


Table 15: PN comparison between different estimators of when = 1, = 0.5, = 3.40364, and = 1

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(SRS) vs Mom(SRS)          0.4520   0.5272   0.5542   0.5588   0.5642   0.5808   0.5818
MLE(SRS) vs GBayes(SRS)       0.3278   0.3776   0.4230   0.4322   0.4704   0.4960   0.4968
MLE(SRS) vs MLE(RSS)          0.3640   0.3724   0.3788   0.3862   0.3908   0.43     0.4532
MLE(SRS) vs Mom(RSS)          0.4158   0.4237   0.4400   0.4434   0.4462   0.4478   0.4574
MLE(SRS) vs GBayes(RSS)       0.3656   0.3672   0.3712   0.3774   0.3834   0.415    0.4164
MLE(SRS) vs MLE(MRSS)         0.3498   0.3548   0.357    0.3606   0.3654   0.3934   0.4184
MLE(SRS) vs Mom(MRSS)         0.3576   0.3600   0.3608   0.3652   0.3680   0.3698   0.4136
MLE(SRS) vs GBayes(MRSS)      0.3494   0.3516   0.3546   0.3586   0.3644   0.3872   0.3946
Mom(SRS) vs GBayes(SRS)       0.4024   0.4068   0.4100   0.4128   0.4144   0.4166   0.4222
Mom(SRS) vs MLE(RSS)          0.3402   0.3426   0.3486   0.3584   0.3650   0.3694   0.3906
Mom(SRS) vs Mom(RSS)          0.3976   0.3984   0.4100   0.4160   0.4202   0.4502   0.4507
Mom(SRS) vs GBayes(RSS)       0.3280   0.3382   0.3426   0.3522   0.3560   0.3788   0.4220
Mom(SRS) vs MLE(MRSS)         0.4254   0.5312   0.5344   0.5349   0.5442   0.5564   0.5578
Mom(SRS) vs Mom(MRSS)         0.4329   0.5370   0.5384   0.5414   0.5560   0.5599   0.6002
Mom(SRS) vs GBayes(MRSS)      0.4322   0.5416   0.5516   0.5532   0.5542   0.5545   0.5554
GBayes(SRS) vs MLE(RSS)       0.2336   0.3314   0.3322   0.3332   0.3416   0.3516   0.3964
GBayes(SRS) vs Mom(RSS)       0.3896   0.4542   0.4550   0.4622   0.4764   0.4888   0.4892
GBayes(SRS) vs GBayes(RSS)    0.3326   0.3638   0.3820   0.3886   0.3908   0.4338   0.4530
GBayes(SRS) vs MLE(MRSS)      0.4221   0.4602   0.4654   0.5075   0.5376   0.5403   0.5453
GBayes(SRS) vs Mom(MRSS)      0.4526   0.4646   0.4996   0.5307   0.5453   0.5453   0.5455
GBayes(SRS) vs GBayes(MRSS)   0.4998   0.4580   0.5036   0.5372   0.5378   0.5401   0.5431


Table 16: PN comparison between different estimators of when = 1, = 0.5, = 3.40364, and = 1

                              (cycle size; number of cycles)
PN probability of             (2; 5)   (4; 5)   (3; 10)  (4; 10)  (4; 15)  (4; 25)  (4; 30)
MLE(RSS) vs Mom(RSS)          0.4798   0.5594   0.5958   0.6060   0.6136   0.6292   0.6326
MLE(RSS) vs GBayes(RSS)       0.3438   0.4510   0.4684   0.4686   0.4874   0.4984   0.5058
MLE(RSS) vs MLE(MRSS)         0.4480   0.4728   0.4732   0.4752   0.4774   0.4788   0.4828
MLE(RSS) vs Mom(MRSS)         0.4624   0.4774   0.4854   0.4866   0.4886   0.4944   0.4969
MLE(RSS) vs GBayes(MRSS)      0.4252   0.4436   0.4688   0.469    0.4736   0.4736   0.4794
Mom(RSS) vs GBayes(RSS)       0.3136   0.3680   0.3500   0.3798   0.3904   0.3998   0.4094
Mom(RSS) vs MLE(MRSS)         0.3962   0.4032   0.4066   0.4096   0.4233   0.4352   0.4658
Mom(RSS) vs Mom(MRSS)         0.3997   0.43     0.3998   0.4009   0.4032   0.4144   0.4602
Mom(RSS) vs GBayes(MRSS)      0.3892   0.4352   0.3892   0.3998   0.4020   0.4042   0.4274
GBayes(RSS) vs MLE(MRSS)      0.5460   0.5476   0.5483   0.5485   0.5587   0.5740   0.5972
GBayes(RSS) vs Mom(MRSS)      0.5606   0.5608   0.5617   0.5628   0.5699   0.5769   0.5899
GBayes(RSS) vs GBayes(MRSS)   0.5132   0.5467   0.5457   0.5476   0.5481   0.5483   0.5486
MLE(MRSS) vs Mom(MRSS)        0.4420   0.4480   0.4904   0.5309   0.5368   0.5401   0.5403
MLE(MRSS) vs Bayes(MRSS)      0.4332   0.4682   0.4910   0.5064   0.5419   0.5462   0.5486
Mom(MRSS) vs GBayes(MRSS)     0.5038   0.5399   0.6020   0.6042   0.6274   0.6502   0.6778


REFERENCES

Al-Saleh, M. F. and Al-Kadiri, M. (2000). Double ranked set sampling. Statistics and Probability Letters, 48, 205-212.

Al-Saleh, M. F. and Muttlak, H. A. (1998). A Note in Bayesian Estimation using Ranked Set Sampling. Pakistan Journal of Statistics, 14, 49-56.

Al-Saleh, M. F. and Samawi, H. (2004). Estimation Using Bivariate Extreme Ranked Set Sampling With Application to the Bivariate Normal Distribution. J. of Modern Applied Statistical Methods, 3, 1, 134-142.

Bain, L. J. and Antle, C. E. (1967). Estimation of Parameters in the Weibull Distribution. Technometrics, 9, 621-627.

Billman, B. R., Antle, C. E., and Bain, L. J. (1972). Statistical Inference from Censored Weibull Samples. Technometrics, 14, 831-840.

Cohen, A. C. (1965). Maximum likelihood estimation in the Weibull distribution based on complete and censored samples. Technometrics, 7, 579-588.

Dell, T. R. and Clutter, J. L. (1972). Ranked set sampling theory with order statistics background. Biometrics, 28, 545-555.

Harter, H. L. and Moore, A. H. (1965). Point and Interval Estimation, Based on m-order Statistics, for the scale Parameter of a Weibull Population with Known Shape Parameter. Technometrics, 7, 405-422.

Harter, H. L. and Moore, A. H. (1968). Maximum Likelihood Estimation from Doubly Censored Samples, of the Parameters of the First Asymptotic Distribution of Extreme Values. Journal of the American Statistical Association, 63, 889-901.

Hossain, A. M. and Zimmer, W. J. (2003). Comparison of estimation methods for Weibull parameters: complete and censored samples. J. Statist. Comput. Simulation, 73, 145-153.

Lavine, M. (1999). The Bayesics of Ranked Set Sampling. J. of Environmental & Ecological Statistics, 6, 47-57.

Mann, N. R. (1968). Point estimation procedures for the two-parameter Weibull distribution and extreme-value distributions. Technometrics, 10, 231-256.

McIntyre, G. A. (1952). A method of unbiased selective sampling, using ranked sets. Australian J. Agricultural Research, 3, 385-390.

Newby, M. J. (1980). The Properties of Moment Estimators for the Weibull Distribution Based on the Sample Coefficient of Variation. Technometrics, 22, 187-194.

Samawi, H. (1996). Stratified Ranked Set Sampling. Pakistan J. of Stat., 12, 9-16.

Samawi, H., Ahmed, M., and Abu-Dayyeh, W. (1996). Estimating the population mean using extreme ranked set sampling. Biometrical J., 38, 5, 577-586.

Samawi, H. and Muttlak, H. (1996). Estimation of ratio using rank set sampling. Biometrical J., 36, 6, 753-764.

Soliman, A. A., Abd Ellah, A. H., and Sultan, K. S. (2006). Comparison of estimates using record statistics from Weibull model: Bayesian and non-Bayesian approaches. Computational Statistics and Data Analysis, 51, 2065-2077.

Takahasi, K. and Wakimoto, K. (1968). On unbiased estimates of the population mean based on the sample stratified by means of ordering. Annals of Institute of Statistical Mathematics, 20, 1-31.

41

42

43

On

44

45

46

47

ly

48

49

50

51

52

53

54

55

56

57

29

58

59

60

URL: http://mc.manuscriptcentral.com/lsta E-mail: comstat@univmail.cis.mcmaster.ca

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5834)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (903)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (541)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (350)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (824)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (405)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- CostAccounting 2016 VanderbeckDocument396 pagesCostAccounting 2016 VanderbeckAngel Kitty Labor88% (32)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- General Revision For Treasury Management (Please See That You Can Answer The Following 32 Questions and The MCQS)Document14 pagesGeneral Revision For Treasury Management (Please See That You Can Answer The Following 32 Questions and The MCQS)RoelienNo ratings yet

- ScheduleDocument2 pagesScheduleJen NevalgaNo ratings yet

- Multi-Component FTIR Emission Monitoring System: Abb Measurement & Analytics - Data SheetDocument16 pagesMulti-Component FTIR Emission Monitoring System: Abb Measurement & Analytics - Data SheetRonaldo JuniorNo ratings yet

- Find Me Phoenix Book 6 Stacey Kennedy Full ChapterDocument67 pagesFind Me Phoenix Book 6 Stacey Kennedy Full Chaptercatherine.anderegg828100% (21)

- AP Practice Test - BreakdownDocument4 pagesAP Practice Test - BreakdowncheyNo ratings yet

- Presentation - Pragati MaidanDocument22 pagesPresentation - Pragati MaidanMohamed Anas100% (4)

- M Thermal Heat Pump A4Document34 pagesM Thermal Heat Pump A4Serban TiberiuNo ratings yet

- Hospice SynopsisDocument6 pagesHospice SynopsisPhalguna NaiduNo ratings yet

- The Body Productive Rethinking Capitalism Work and The Body Steffan Blayney Full ChapterDocument67 pagesThe Body Productive Rethinking Capitalism Work and The Body Steffan Blayney Full Chaptersharon.tuttle380100% (6)

- Hayden Esterak Resume 1Document1 pageHayden Esterak Resume 1api-666885986No ratings yet

- Title DefenseDocument3 pagesTitle DefenseLiezl Sabado100% (1)

- MT2OL-Ia6 2 1Document136 pagesMT2OL-Ia6 2 1QUILIOPE, JUSTINE JAY S.No ratings yet

- PR m1Document15 pagesPR m1Jazmyn BulusanNo ratings yet

- Air Cooled Flooded Screw ChillersDocument50 pagesAir Cooled Flooded Screw ChillersAhmed Sofa100% (1)

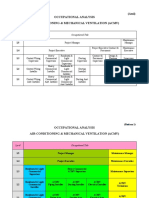

- Occupational StructureDocument3 pagesOccupational StructureEmirul FairuzNo ratings yet

- MTCNA Lab Guide INTRA 1st Edition - Id.en PDFDocument87 pagesMTCNA Lab Guide INTRA 1st Edition - Id.en PDFreyandyNo ratings yet

- Understanding Nuances and Commonalities of Job DesDocument10 pagesUnderstanding Nuances and Commonalities of Job DesAmrezaa IskandarNo ratings yet

- Indian Standard: Methods of Test For Stabilized SoilsDocument10 pagesIndian Standard: Methods of Test For Stabilized Soilsphanendra kumarNo ratings yet

- Nemo Complete Documentation 2017Document65 pagesNemo Complete Documentation 2017Fredy A. CastañedaNo ratings yet

- SoftOne BlackBook ENG Ver.3.3 PDFDocument540 pagesSoftOne BlackBook ENG Ver.3.3 PDFLiviu BuliganNo ratings yet

- SPB ClientDocument4 pagesSPB ClientRKNo ratings yet

- Cuadernillo de Trabajo: InglésDocument20 pagesCuadernillo de Trabajo: InglésFátima Castellano AlcedoNo ratings yet

- Housekeeping Management Practices and Standards of Selected Hotels and Restaurants of Ilocos Sur, PhilippinesDocument8 pagesHousekeeping Management Practices and Standards of Selected Hotels and Restaurants of Ilocos Sur, PhilippinesMehwish FatimaNo ratings yet

- Piping Stress AnalysisDocument10 pagesPiping Stress AnalysisM Alim Ur Rahman100% (1)

- Opening Your Own Bank Drops: Edited EditionDocument4 pagesOpening Your Own Bank Drops: Edited EditionGlenda83% (6)

- Kalsi® Building Board Cladding: Kalsi® Clad Standard DimensionsDocument1 pageKalsi® Building Board Cladding: Kalsi® Clad Standard DimensionsDenis AkingbasoNo ratings yet

- Ericka Joyce O. Reynera: PERSONAL - INFORMATIONDocument2 pagesEricka Joyce O. Reynera: PERSONAL - INFORMATIONdead insideNo ratings yet

- Wa0004.Document15 pagesWa0004.Sudhir RoyNo ratings yet

- Feasibility StudyyyDocument27 pagesFeasibility StudyyyMichael James ll BanawisNo ratings yet