SMALL FILE PACKING IN DISTRIBUTED PARALLEL FILE SYSTEMS

- PROJECT PROPOSAL

Srinivas Chellappa and Mikhail Chainani and Faraz Shaikh

{schellap, mchainan, fshaikh}@andrew.cmu.edu

Carnegie Mellon University

ABSTRACT

Distributed parallel file systems are typically optimized for accesses to large files. Small file performance in certain parallel file systems may potentially be improved by storing small files in their entirety along with the file metadata information. This paper proposes a design to do so on PVFS2 and explores how such a design could be evaluated.

1. INTRODUCTION AND BACKGROUND

Distributed parallel file systems are file systems that distribute file data across multiple storage components to provide higher concurrent performance. These typically appear as a single, persistent system to clients. Distributed parallel file systems exist for various reasons, the primary ones being the reduction of access bottlenecks and the delivery of high performance in concurrent environments.

Many current distributed parallel systems focus on optimizing performance for large files, and either support small files at lower performance levels or do not support small files at all [1, 2]. Though this is not an issue for applications that process vast amounts of data in large chunks, applications that would benefit from storing and accessing small files (say, less than 10k) either have to live with the reduced performance, or have to include an extra software or hardware layer to eke better performance out of the parallel file system.

The problem of low performance is exacerbated in systems like PVFS2 [3] that use separate systems for metadata and actual storage. This requires the clients to first contact a metadata system to acquire the correct storage location, and then make a second, separate access to the appropriate storage location to actually acquire the data or the file. Such accesses are typically bound in latency and bandwidth by local area networks like Ethernet. This is not an issue in terms of bandwidth performance for accessing large files, since the cost of the two accesses is amortized over the large size of the files. However, the latency of accessing small files on such a parallel file system might be large because the overhead costs would dominate.

The goal of this project is to propose and study a design to store small files in their entirety along with the associated metadata in such distributed parallel storage systems. The hope is to reduce the costs of accessing small files by eliminating the separate access to the storage system for small files.

Paper Organization. This section has provided a brief introduction and background. Section 2 presents related work. Section 3 motivates and presents the problem statement. Section 4 presents our proposed design. Section 5 presents our proposed evaluation methods.

2. RELATED WORK

The file system that we propose to implement and evaluate our design on is PVFS2 [3]. PVFS2 is an open source parallel file system designed for use in large scale cluster computing. PVFS2 is optimized for large dataset access performance [1, 2]. Increasing the performance of PVFS2 for small file/dataset access without affecting large dataset access performance is a desired outcome of this project.

Packing and storing small files along with metadata information is not a new idea, especially in the domain of traditional local file systems. NTFS packs small files (< 1500 bytes) into the metadata structure itself [4]. However, parallel file systems typically do not do this.

Some distributed parallel file systems take other approaches to optimizing small file access. MSFSS [5] is a distributed parallel file system that optimizes small file access by using an underlying file system (ReiserFS) that is optimized for small file access. However, the type of systems we are targeting are already optimized at all levels for large file access, and since changing that is not an option, this approach does not work.

Devulapalli et al. in [6] describe a method to decrease network traffic utilization during file creation in a parallel file system. A side effect of our approach is that it would achieve the same effect, thus increasing available bandwidth for large file accesses.

Project Proposal/FA07/15-712/CMU 1 S.Chellappa, M. Chainani, F. Shaikh

3. PROBLEM STATEMENT

As previously mentioned, certain distributed parallel systems like PVFS2 include separate metadata servers and storage systems. Since there is a fixed overhead associated with making multiple accesses to obtain a file, file sizes below a certain threshold might benefit from being stored on the metadata server itself. Our project proposes to implement such a design and determine such a threshold in addition to evaluating the general viability of such an approach.
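To make the idea of a size threshold concrete, the following is a minimal sketch of a first-order latency model. It is an illustration only, not part of the proposed implementation: the function names, the assumption that the metadata server delivers file bytes at a lower rate than the I/O server, and the example numbers are all hypothetical. Under these assumptions, the unmodified path costs two round trips plus a transfer from the iod, the packed path costs one round trip plus a transfer from the mgr, and the two are equal at a size of rtt / (1/mgr_bw - 1/io_bw).

```python
def baseline_latency(size_bytes, rtt_s, io_bw):
    """Unmodified path: one round trip to the mgr, one to the iod,
    plus the time to move the file bytes from the iod."""
    return 2 * rtt_s + size_bytes / io_bw


def packed_latency(size_bytes, rtt_s, mgr_bw):
    """Packed path: a single round trip to the mgr, which returns
    metadata and file bytes together."""
    return rtt_s + size_bytes / mgr_bw


def break_even_size(rtt_s, io_bw, mgr_bw):
    """File size (bytes) at which both paths cost the same.

    Returns None when the mgr is at least as fast as the iod, because
    in this simple model packing then wins for every file size."""
    if mgr_bw >= io_bw:
        return None
    return rtt_s / (1.0 / mgr_bw - 1.0 / io_bw)


if __name__ == "__main__":
    # Hypothetical numbers: 200 us round trip, iod at 10 MB/s, mgr at 5 MB/s.
    rtt, io_bw, mgr_bw = 200e-6, 10e6, 5e6
    print("break-even size (bytes):", break_even_size(rtt, io_bw, mgr_bw))
    for size in (512, 1024, 4096):
        print(size, baseline_latency(size, rtt, io_bw), packed_latency(size, rtt, mgr_bw))
```

With these made-up parameters the break-even point falls at roughly 2000 bytes. The model ignores server load, caching, and database overheads, so a real threshold would have to be measured rather than computed this way.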

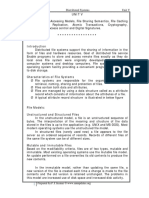

4. APPROACH AND DESIGN Fig. 1. A PVFS client first accesses the mgr (metadata

server) (From [7])

PVFS2 uses a database to maintain and serve metadata. Our approach is to modify PVFS2 to store files with sizes under a given threshold in the metadata server system. Below, we present an overview of PVFS2 and expected modifications.
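The approach can be illustrated with a small in-memory model. This is a sketch under stated assumptions and does not use any PVFS2 code or API: `MetaRecord`, `ToyFileSystem`, and the dictionaries standing in for the mgr database and the iod store are hypothetical names introduced only for this example. Files under the threshold are stored inside the metadata record, so a read completes after one server access instead of two.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class MetaRecord:
    size: int
    inline_data: Optional[bytes] = None  # present only for packed (small) files
    iod_key: Optional[str] = None        # present only for files kept on the iod


class ToyFileSystem:
    """In-memory stand-in for one mgr and one iod; not PVFS2 code."""

    def __init__(self, threshold: int) -> None:
        self.threshold = threshold
        self.mgr: Dict[str, MetaRecord] = {}  # stands in for the metadata database
        self.iod: Dict[str, bytes] = {}       # stands in for the bulk data store

    def write(self, name: str, data: bytes) -> None:
        if len(data) < self.threshold:
            # Small file: pack the bytes next to the metadata on the mgr.
            self.mgr[name] = MetaRecord(size=len(data), inline_data=data)
            self.iod.pop(name, None)  # drop any stale copy left on the iod
        else:
            # Large file: data goes to the iod, the mgr keeps only its location.
            self.iod[name] = data
            self.mgr[name] = MetaRecord(size=len(data), iod_key=name)

    def read(self, name: str) -> Tuple[bytes, int]:
        """Return (data, number of server accesses needed)."""
        record = self.mgr[name]              # access 1: metadata server
        if record.inline_data is not None:
            return record.inline_data, 1     # packed file: no second access
        return self.iod[record.iod_key], 2   # access 2: I/O server


if __name__ == "__main__":
    fs = ToyFileSystem(threshold=1024)  # threshold value chosen arbitrarily
    fs.write("small.txt", b"x" * 100)
    fs.write("large.bin", b"y" * 4096)
    print(fs.read("small.txt")[1], fs.read("large.bin")[1])
```

The sketch also shows one design question a real implementation would face: when a file grows past or shrinks below the threshold, its data has to migrate between the two servers.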

Implementation Platform. We selected PVFS2 [3] as our implementation and evaluation platform. Apart from being an open source project, as mentioned earlier, PVFS2 focuses mainly on large file performance, and our hope is to increase small file performance in PVFS2 while leaving large file performance untouched (or increasing it).

There are four major components to the PVFS system:

• Metadata server (mgr)

• I/O server (iod)

• PVFS native API (libpvfs)

• PVFS Linux kernel support

The daemon servers run on the cluster nodes. The metadata server (mgr) manages all the metadata for the files, including the file name, directory hierarchy, and information about how the file is distributed across servers. The metadata is stored in a database on the mgr. Our proposed design would pack the file data along with its metadata at the mgr for small files.

The second daemon is the I/O server (iod). The I/O servers manage the data storage and retrieval from local disks. The nodes run a local file system which is leveraged by these servers. No modifications are expected to be required on the iod.

Clients can access the PVFS filesystem using two methods: via a user-space library or through Linux kernel support. In either case, the client has to be modified appropriately to support small file packing.

PVFS does not cache metadata due to the complexity of maintaining consistency in a parallel access environment. Thus for small files, reads and writes will force the client to make at least two network accesses: one to the metadata server (mgr) and the other to the I/O server (iod). Figure 1 and Figure 2 illustrate file access in PVFS. Our design is aimed at eliminating the access shown in Figure 2.

Fig. 2. A PVFS client uses information from the mgr to access the iod (actual file data store) (From [7])

Issues. There are also several issues that we will attempt to answer during the course of this project. Metadata servers might be replicated for performance, and our design might cause undesired replication of small files along with metadata replication, thus increasing storage requirements. Some distributed file systems [8] allow clients to cache metadata, and this has to be handled appropriately.

5. EVALUATION

Evaluation System. We will evaluate our implementation on a simple setup consisting of three machines: a client, an iod (I/O server), and an mgr (metadata server), running on 100MBps Ethernet. If available, we will also evaluate our implementation on larger deployments of PVFS2.

Evaluation Metrics. Our project primarily targets increasing the performance of small file accesses in distributed file systems. Hence, our primary metrics will compare the latency of single accesses and the sustained throughput of several small file accesses on our system against the baseline. The baseline will be an unmodified PVFS2 setup.

A major goal of this project is to determine a threshold or a range of file sizes for which file packing increases performance. Measuring the access latencies and bandwidths of a range of file sizes would provide an idea of such a threshold. Note that such a threshold or range would depend on the actual distributed parallel file system under consideration, and other configuration parameters of an instance (e.g., network latencies, bandwidths).

Next, we will compare the overall performance of an actual workload across our modified and baseline systems. We will choose an appropriate set of workloads, preferably providing various mixtures of small and large file accesses.

Secondary metrics that we will use, time permitting, are the extra load on the metadata servers and the network utilization of the connections to these servers, for specific workloads. Though client access performance is ultimately what matters, these secondary metrics might provide insight into the costs and bottlenecks of our design.

6. REFERENCES

[1] R. Latham, N. Miller, R. Ross, and P. Carns, “A next generation parallel file system for Linux clusters,” LinuxWorld, 2004.

[2] R. Latham, N. Miller, R. Ross, and P. Carns, “Shared parallel filesystems in heterogeneous Linux multi-cluster environments,” in Proceedings of the 6th LCI International Conference on Linux Clusters: The HPC Revolution, 2004.

[3] The parallel virtual file system, version 2. [Online]. Available: http://www.pvfs.org/pvfs2

[4] NTFS.COM. NTFS. [Online]. Available: http://www.ntfs.com/ntfs-mft.htm

[5] L. Yu, G. Chen, W. Wang, and J. Dong, “MSFSS: A storage system for mass small files,” in Proceedings of the 2007 11th International Conference on Computer Supported Cooperative Work in Design, 2007.

[6] A. Devulapalli and P. Wyckoff, “File creation strategies in a distributed metadata file system,” in IEEE International Parallel and Distributed Processing Symposium (IPDPS 2007), 2007.

[7] The parallel virtual file system. [Online]. Available: http://www.parl.clemson.edu/pvfs/desc.html

[8] S. Ghemawat, H. Gobioff, and S. Leung, “The Google file system,” in ACM SOSP, Oct. 2003.

