Professional Documents

Culture Documents

Securing Sensitive Business Data in Non-Production Environment Using Non-Zero Random Replacement Masking Method

Securing Sensitive Business Data in Non-Production Environment Using Non-Zero Random Replacement Masking Method

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Securing Sensitive Business Data in Non-Production Environment Using Non-Zero Random Replacement Masking Method

Securing Sensitive Business Data in Non-Production Environment Using Non-Zero Random Replacement Masking Method

Copyright:

Available Formats

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Securing Sensitive Business Data in Non-

Production Environment Using Non-Zero

Random Replacement Masking Method

Ruby Bhuvan Jain1, Dr. Manimala Puri2

1

Research Scholar, JSPM’s Abacus Institute of Computer Applications, Pune, Maharashtra, India

2

Director, JSPM Group of Institutes, Survey No.80, Pune-Mumbai Bypass Highway, Tathawade, Pune, Maharashtra,

India

Abstract:

The main objective is to protect the privacy of individuals and security of society which is becoming crucial for

effective functioning over the business. Privacy enforcement today is being handled primarily through governments

legally. Authors aim is to provide uniform data masking architecture for masking the sensitive data like finance

retails etc.There is a growing need to protect sensitive employee, customer, and business data across the enterprise

wherever such data may reside. Until recently, most data theft occurred from malicious individuals hacking into

production databases that were made available across the non-production environment. An important tier of

computer data remains practically untouched and unprotected by today’s new data security procedures:

nonproduction systems used for in-house development, testing, and training purposes are generally open systems

and leave a large hole in the data privacy practices at companies of all sizes. These environments leverage real data

to test applications, housing some of the most confidential or sensitive information in an organization, such as

Social Security numbers, bank records, and financial documents. This research paper discusses data privacy

procedures in nonproduction environments, using a proven commercial solution for masking sensitive data in all

nonproduction environments, and integrating these privacy processes and technology across the enterprise.

The paper concentrates on data shuffling, substitution, and proposed model Non-Zero Random Replacement

masking algorithms. Our results strongly augmented non-zero random replacement method can be used across the

domains starting from critical business like finance, banking, and health care.

Keywords: Structured data, Un-structured data, Sensitive Data and Data Masking.

1. Introduction:

Every company has sensitive data, whether it is trade secrets, intellectual property, critical business information,

business partners’ information, or its customers’ information. All of this data must be protected based on company

policy, regulatory requirements, and industry standards. This section will cover a number of important elements of

protecting this data.

Confidentiality, integrity, and availability are the cornerstones of information privacy, as well as a sound business

practice. They are essential to the following:

• Compliance with existing regulations and industry standards

• Reliable, accurate, high-performance services

• Competitive positioning

• Reputation of the firm

• Customer trust.

Another important aspect in the protection of knowledge, information, and data is how they are used within the

operations of the firm and in what form the data resides (hard copy, electronic, or human). In addition, protection

requirements will also vary by type of operational environment, such as production, preproduction test, development,

quality assurance (QA), or third party. The requirements for protecting this knowledge, information, and data must be

clearly defined and reflect the specific requirements within the appropriate regulatory and industry rules and standards.

Specific data elements must be labelled as sensitive and should never be used within their factual state in development,

QA, or other nonproduction environments. The data classification policy should clearly identify the requirements for

data masking. Note that knowledge and information can be broken down into specific data elements.

Volume 8, Issue 3, March 2019 Page 382

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Data elements within a domain, a built-in data type, or an object field within data objects are real data and must comply

with the data definition of the data element. However, this information can be factual—an actual Social Security

number—or it can be nonfactual—a random collection of numbers conforming to the data definition for that particular

data element.

Some data elements alone may not contain sensitive data; however, once an association with other data elements

occurs, they all become sensitive data. So data masking must quickly become very sophisticated to ensure that all the

sensitive data is protected (masked), the data is still “real,” and a record of the association is retained. Today, with all

of the regulations and risk of fines, the negative impact on reputation, and the possibility of criminal convictions,

organizations have moved toward third-party masking technology that is regularly updated according to evolving

standards and regulations. This technology is used to mask sensitive data in the general open development and QA

environments.

The term “unauthorized access” is very familiar to us, but who authorizes access? We as consumers and customers play

a role in defining that has access to our “non-public personal information” and under what conditions they can use this

information. We sign and click on agreements every day that define the terms and conditions of granting access to and

use of our non-public personal information. However, the service providers we grant these privileges to be required to

follow security practices to ensure that “need-to-know” concepts are followed and all unauthorized parties are denied

access to our non-public personal information. Some other very important best practices are to keep up to date with

agency guidance on GLBA; it’s just like keeping up with operating system patches, but with less frequency. You must

protect PFI in all environments and including development, QA, and test environments; the regulations do not

differentiate among these environments. Remember: masking is the safest way to protect sensitive information in

development and QA environments.

Special Regulatory and Industry Requirements: Depending on the countries that your organization does business in,

there will be some unique requirements for the protection of sensitive data. Nearly every country in the world has a data

privacy law, so you should always do a comprehensive review of each of the pertinent laws and their supporting

requirements.

The Gramm-Leach-Bliley Act (GLBA) applies to financial institutions that offer financial products or services such as

loans, financial or investment advice, or insurance to individuals. Compliance is mandatory for all nonbank mortgage

lenders, loan brokers, financial or investment advisers, tax preparers, debt collectors, and providers of real estate

settlements. The law requires that financial institutions protect information that is collected about individuals; it does

not apply to information that is collected in business or commercial activities.

There are three basic rules to understand to ensure compliance with GLBA: 1. Ensure the security and confidentiality

of customer records and information 2. Protect against any anticipated threats or hazards to the security or integrity of

such records 3. Protect against unauthorized access to or use of such records or information that could result in

substantial harm or inconvenience to any customer.

Health Insurance Portability and Accountability Act (HIPAA) The HIPAA Security and Privacy Standard defines

administrative, physical, and technical safeguards to protect the confidentiality, integrity, and availability of electronic

protected health information (PHI), sometimes referred to as personal health information. HIPAA has three major

purposes:

• To protect and enhance the rights of consumers by providing them access to their health information and

controlling the inappropriate use of that information

• To improve the quality of health care in the United States by restoring trust in the health care system among

consumers, health care professionals, and the multitude of organizations and individuals that are committed to

the delivery of care

• To increase the efficiency and effectiveness of health care delivery by creating a national framework for health

privacy protection that builds on efforts by states, health systems, and individual organizations and

individuals.

Understanding the HIPAA Security and Privacy Standard requirements is the key to interpreting what the covered

entities must do:

1. Ensure the confidentiality, integrity, and availability of all electronic protected health information that the

covered entity creates, receives, maintains, or transmits

2. Protect against any reasonably anticipated threats or hazards to the security or integrity of such information

3. Protect against any reasonably anticipated uses or disclosures of such information that are not permitted or

required under subpart E of this part

4. Ensure compliance with this subpart by its workforce Payment Card Industry Data Security Standard (PCI DSS)

PCI DSS originally began as five different programs: VISA Card Information Security Program, MasterCard

Volume 8, Issue 3, March 2019 Page 383

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Site Data Protection, American Express Data Security Operating Policy, Discover Information and

Compliance, and JCB Data Security Program. Each company’s intentions were roughly similar: to create an

additional level of protection for customers by ensuring that merchants meet minimum levels of security when

they store, process, and transmit cardholder data.

2. Related Work:

Data masking is the process of obscuring -masking specific data elements within data stores. It ensures that sensitive

data is replaced with realistic but not real data. The goal is that sensitive customer information is not available outside

of the authorized environment.

In white paper by informatica[1], focus is on sound practices to secure data. To protect sensitive data (trade secrets,

intellectual property, critical business information, business partners’ information, or its customers’ information)

company policy, regulatory requirements, and industry standards need to be followed. Data classification policies are

used to store sensitive data. The data is mainly classified in three categories—Public, Internal use only and

Confidential. Sensitive data occurs in two forms: structured and unstructured. The requirements for protecting this

knowledge, information, and data must be clearly defined and reflect the specific requirements within the appropriate

regulatory and industry rules and standards. Specific data elements must be labelled as sensitive and should never be

used within their factual state in development, QA, or other nonproduction environments. The data classification policy

should clearly identify the requirements for data masking. Note that knowledge and information can be broken down

into specific data elements. Organizations must define, implement, and enforce their data classification policy and

provide procedures and standards to protect both structured and unstructured sensitive data.

S.Vijayarani, Dr.A.Tamilarasi[14],In this research paper authors proposed a model to analyze the performance of Data

transformation and Bit transformation technique to protect numeric sensitive data and proved that Data transformation

is better than Bit transformation.

Kamlesh Kumar Hingwe , S. Mary SairaBhanu[13], In this research paper authors proposed framework to support data

protection in form of double layer encryption method using FPE followed by OPE.

John Haldeman[12],In this research paper author briefed about Optim Data Masking option for Test Data Management

allows you to build new data privacy functions using column map exits in C/C++, or by creating scripts in the Lua

language. Data Stage can be extended using C/C++ or BASIC in Transformer Stages, or by creating custom operators

in C/C++.[12]

XiaolingXia ,Qiang Xiao and Wei Ji [21] , In this research paper authors analyzed the progress of m-invariance

method and proposed a method called as NCm-invariance which converts the numerical data into categorical data to

overcome from the defects of m-invariance method.

Jun Liao, Chaohui Jiang, Chun Guo[11], In this paper authors proposed an algorithm called as SDUPPA algorithm

used to analyze dynamic updating algorithm on sensitive data to the other non-production users.

Securosis[9] ,In this paper focused on data masking, how the data masking technique work. Different types of Static

and dynamic data masking techniques are discussed. Effective data masking requires the alteration of data in such a

way that the actual values cannot be determined or re-engineered.

ChiragGoyal [17] , in this paper focused on the laws of data masking, need and implementation details of data

masking, types and order used in data masking. Research only focused on static masking whereas dynamic data

masking is also very important to cover.

I-Cheng Yeh, Che-hui Lien[23], they focused on risk management and prediction about the credit card client that the

client is credible or not credible after observing the behaviour of client account for six months.

3. Research Methodology:

Sensitive data occurs in two forms: structured and unstructured. Structured sensitive data is easier to find because it

typically resides within business applications, databases, enterprise resource planning (ERP) systems, storage devices,

third-party service providers, back-up media, and off-site storage. Unstructured sensitive data is much more difficult to

find because it is usually dispersed throughout the firm’s infrastructure (desktops), employees’ portable devices (laptops

and handhelds), and external business partners. That dissemination is not the problem; what increases the level of

difficultly substantially is that this sensitive data is typically contained within the messaging infrastructure (email,

instant messaging, voice-over-IP), personal production tools (Excel spreadsheets), unmanaged portable devices, and

portable media. So if these various unstructured environments are “unmanaged,” then the sensitive data can propagate

in an uncontrolled manner. For example, if users are permitted to archive local data and/or move it to a personal (home

computer) or portable storage device (USB memory stick), it becomes uncontrolled and at risk.

Volume 8, Issue 3, March 2019 Page 384

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Data classification policy organizations that collect, use, and store sensitive information should establish an

information classification policy and standard. This classification policy and standard should contain a small number of

classification levels that will meet the business needs of the firm. Most organizations have at least three categories such

as public, internal use only, and confidential.

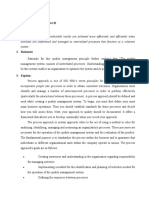

The below figure1, represents four phases of masking life cycle– Profiling, Environment Sizing, Sensitive field

discovery and Masking. The main objective of this work is to design an effective data masking tool to secure sensitive

data from unauthorized access.

3.1. Profiling:

Profiling as a process recognizes the detail that data can be derived, inferred and predicted from other data. Profiling

occurs in a range of contexts and for a variety of purposes. This can be used to score, rank and evaluate and assess

people, and to make and inform decisions about individuals that may or may not be automated. Through profile,

sensitive data can be inferred from other non-sensitive data.

Profiling, just as any form of data processing also needs a legal basis. Since misidentification, misclassification and

misjudgment are an inevitable risk associated to profiling, controllers should also notify the data subject about these

risks and their rights, including to access, rectification and deletion. Individual’s rights need to be applied to derived,

inferred and predicted data, to the extent that they qualify as personal data.

3.2. Extract into hard end environment:

The masking environment should be hardened. Hardened environment is where access is protected by a workflow

like a production, software’s are licenses as a production, anti viruses are updated like production, log files are

generated so that in case if somebody add/removes we can have a watch. For masking process we need size of 2x.

Required environment size = (Original data size)*2

For Example:

If original production data needs 1GB storage space then as per infrastructure team, we need to have 2GB storage space

for the masking process.

3.3. Sensitive Diligence: This step is the preliminary step of masking. In this process two files will be prepared

that will be used as input in masking process, Sensitive diligence file and master masking file. The Sensitive diligence

file will contain information about the column name in first row, whether the specific column needs to be masked in

second row and if specific condition needs to be applied while masking if in the third row. For example date field

should follow date rules. The master masking file will contain name of the file which contains original data in first

row, file separator in second row, type of file in third row and output file name in fourth row.

3.4. Masking Method:

There are various masking techniques are available described in earlier in this paper. All the techniques have some

pros and cons. In this study two most popular masking techniques substitution and Shuffling compared with the

proposed Non-Zero Random Replacement technique.

CSV TX XM

Production

Data High End Infrastructure

Data Extract into

Profiling hard end

Information Technology

Application Owner

System Integration

Data Sensitive

Non- Masking Diligence

Production

CSV TX XM

Figure 1 Phases of data masking life cycle

Volume 8, Issue 3, March 2019 Page 385

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

4. Results and Findings:

The present work considers business data of UCI repository, i.e. financial[3] and bank marketing dataset [2]. It is a real

data. The data sets are openly available and downloadable at free of cost. UCI states that, Machine learning community

donates data sets to UCI, for promoting research & development activities. The structure of data sets is suitable for

machine learning applications.

Table 1: (Financial Data) credit card clients Data Set

Data Set Multivariate Number of Instances: 3000 Area: Business

Characteristics: 0

Attribute Characteristics: Integer, Real Number of Attributes: 24 Date Donated 2016-01-

26

Associated Tasks: Classificatio Missing Values? N/A Number of Web 312599

n Hits:

Table 1 contains financial data set of credit card clients and the data sets shown in table 2 do not have name of the

patient and SSN (Social Security Number) as they reveal identity of the patient. Each of the data sets listed in the table

2 are analysed. The researcher advice readers to see structure of the data sets on UCI repository site if required.

Table 2: Bank Marketing Data Set

Data Set Multivariate Number of Instances: 4521 Area: Business

Characteristics: 1

Attribute Characteristics: Real Number of Attributes: 17 Date Donated 2012-02-

14

Associated Tasks: Classificatio Missing Values? N/A Number of Web 800176

n Hits:

The proposed Non-Zero Random Replacement method is implemented in Python programming language. The current

experiment uses two datasets for the processing masking, which are: Financial and bank marketing datasets. The whole

experiment is carried out on a PC with an Intel i3 processor having 8GB of main memory as this is commonly available

configuration.

The experiment enabled the measurement of classification performance values by different masking algorithms on two

experimental real data sets. Results displayed general variations in performance parameters. The performance

measured by Non-Zero Random Replacement masking algorithm is consistently showed superior values over those

delivered by Substitution and shuffling algorithm. Information gain rankings of the attributes in the initial data set,

performed as part of the data pre-processing and transformation phase, had confirmed that the select attributes were

among the most influential in predicting the value of the outcome class variable.

To evaluate the masking algorithm researcher used classification algorithm. In this study ID3 (Iterative Dichotomiser

3) classification algorithm is applied. The algorithm is used on training and test dataset to produce decision tree which

is stored in memory. In financial training dataset 24000 sample with 23 predictors and two classes ‘0’ and ‘1’ were

present. After repeating the algorithm 3 times with 10 fold approach the results satisfied the proposed method is

performing as accurate as the original data as compared to other masking techniques.

Comparing the resulting performance metric values: classification accuracy, precision, recall, f-measure, and

Specificity delivered by the original data and masked data produced by three algorithms, it was found that they all

figured within a percentage point of each other when measured within the control across all groups. Authors have

developed a uniform data masking architecture for various data types and comparison of non-zero random replacement

method with other methods like Substitution and shuffling algorithm. The results strongly emphasize the proposed

method as one of the standard method for data masking with highest order of accuracy and usability. Observations also

showed that original data and Non-Zero Random Replacement data masking algorithm, on average, delivered matching

classification performance metrics. This was a surprising finding.

Table 3 lists weighted classification performance metrics achieved by each of the masking algorithms used to predict

the outcome variable within the data set.Values are weighted because they are based on the set of attributes determined

to carry highest influence on the outcome class variable that they were used to predict.Original data and Non-Zero

Volume 8, Issue 3, March 2019 Page 386

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Random Replacement delivered the highest values with almost matching 95% accuracy and precision. This was

followed by substitution at 73%. Shuffling delivered the lowest performance metrics at 68%.

Table 3: Financial Group Real dataset

Number of Instance: 24,000

Number of attributes: 23

Algorithm Original Non-Zero Random Substitution Shuffling

Replacement

Accuracy 82.13 82.09 81.87 82.00

Precision 0.8401 0.8400 0.8360 0.8361

Recall 0.9531 0.9530 0.9132 0.9058

CP Value 0.00112 0.00111 0.00110 0.00132

Sensitivity 0.9532 0.9531 0.9557 0.9582

Specificity 0.3476 0.3474 0.3262 0.3465

F1 0.8966 0.8965 0.8746 0.8795

Plotting the metrics obtained for each of the classification algorithms allowed a more practical way to view the relative

performance between classifiers. A schematic representation is shown in Figure below:

Figure 2 Comparison plot of various masking method on Credit card client dataset

Table 4 lists weighted classification performance metrics achieved by each of the masking algorithms used to predict

the outcome variable within the data set. Values are weighted because they are based on the set of attributes determined

to carry highest influence on the outcome class variable that they were used to predict. The classification algorithm is

applied on 36170 instances with 13 attributes. Original data and Non-Zero Random Replacement delivered the highest

values with almost matching 97% accuracy and precision. This was followed by substitution at 80%. Shuffling

delivered the lowest performance metrics at 76%.

Table 4 Bank Marketing Group Real dataset

Number of Instance: 36170

Number of attributes: 13

Algorithm Original Non-Zero Random Substitution Shuffling

Replacement

Accuracy 0.896 0.8959 0.8955 0.8965

Precision 0.9153 0.9191 0.9166 0.9161

Recall 0.9723 0.9533 0.9563 0.9571

Sensitivity 0.9723 0.9672 0.9698 0.9717

Specificity 0.3198 0.3576 0.334 0.3283

Volume 8, Issue 3, March 2019 Page 387

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

F1 0.94377 0.9362 0.9348 0.9365

Plotting the metrics obtained for each of the classification algorithms allowed a more practical way to view the relative

performance between classifiers. A schematic representation is shown in Figure below:

Figure 3 Comparison plot of various masking method on bank marketing dataset

5. Conclusion:

As a final point, as appeals for preserving privacy and confidentiality rises, the needs for masking techniques speed up

quickly. Data swapping techniques appeal automatically because they do not modify original sensitive values. Data

swapping is also easier to explain to the common user than other techniques. Among data swapping methods, only data

shuffling delivers the same data usefulness and disclosure risks as other advanced masking techniques, particularly for

large data sets where the asymptotic requirements are likely to be satisfied.

Using data shuffling in these situations would not only provide optimal results, but would also foster greater user

acceptance of masked data for analysis. The result showed by classification model evaluation metric says that the

accuracy produce by original financial data is 0.8213 with 95% CI, whereas accuracy produce by Non-Zero

randomized data is 0.8209 with 95% CI, accuracy produce by shuffled data is 0.820 with 95% CI, accuracy produce by

substituted data is 0.8187 with 95% CI. This represent that the proposed model is giving 99% accuracy as of original

data. Precision, recall, Specificity and F1 values also represent similar results as per original data compared to other

masking algorithm like shuffling and substitution. The same set of result is showed when the model is applied on bank

marketing dataset. Thus the results show that proposed model is a more efficient overall solution, making it a valid

alternative for protecting sensitive data.

As future work, we will develop our technique to accomplish masking unstructured data also, to provide a complete

data protection solution. We will also strive to prove and improve its security strength without exposing database

performance. We also intend to take advantage of the inclusion of the extra column in the masked tables for detecting

malicious or incorrect data changes in each row, broadening the scope of our solution to embrace data integrity issues.

Acknowledgment:

Thanks to the Research Center, Abacus Institute of Computer Applications, SavitribaiPhule Pune University for

supporting me for pursuing research in the defensibly protect sensitive data topic. Thanks to, I-Cheng Yeh -UCI center

for Machine Learning Repository, for his timely response to queries regarding data sets.

References:

Volume 8, Issue 3, March 2019 Page 388

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

[1] Data Privacy Best Practices for Data Protection in Nonproduction Environments, Informatica White Paper (2009).

[2] [Moro et al., 2014] S. Moro, P. Cortez and P. Rita. A Data-Driven Approach to Predict the Success of Bank

Telemarketing. Decision Support Systems, Elsevier, 62:22-31, June 2014

[3] Yeh, I. C., & Lien, C. H. (2009). The comparisons of data mining techniques for the predictive accuracy of

probability of default of credit card clients. Expert Systems with Applications, 36(2), 2473-2480.

[4] Ruby JAIN, Dr.Manimala PURI.A Dynamic Model To Identify Sensitive Data And Suggest Masking Technique,

Proceedings of the 4th IETEC Conference, Hanoi, Vietnam 2017.

[5] Ruby BhuvanJain , Dr. ManimalaPuri,Umesh Jain. A Robust Dynamic Data Masking Transformation approach To

Safeguard Sensitive Data, International Journal on Future Revolution in Computer Science &Communication

Engineering, Volume: 4 Issue: 2, ISSN: 2454-4248, Pg no. 366 – 370 , IJFRCSC-E | February 2018, Available @

http://www.ijfrcsce.org

[6] Ruby BhuvanJain , Dr. ManimalaPuri,Umesh Jain. A Robust Approach to Secure Structured Sensitive Data using

Non-Deterministic Random Replacement Algorithm, International Journal of Computer Applications (0975 –

8887) Volume 179 – No.50, June 2018.

[7] Oracle Corporation. Data Masking Best Practices JULY 2013 Oracle White Paper.

[8] Adrian Lane (2012). Understanding and Selecting Data Masking Solutions: Creating Secure and Useful Data,

Securosis Version 1.0, August 10, 2012

[9] Securosis – Understanding and Selecting Data Masking Solutions: Creating Secure and Useful Data, Version 1.0

Released: August 10, 2012

[10] 2016 Identity Theft Resource Center Data Breach Category Summary, www.IDTC911.com

[11] Jun Liao, Chaohui Jiang, Chun Guo, DATA PRIVACY PROTECTION BASED ON SENSITIVE ATTRIBUTES

DYNAMIC UPDATE, Proceedings of CCIS2016.

[12] Compare IBM data masking solutions: InfoSphereOptim and DataStage, Options for depersonalizing sensitive

production data for use in your test environments, John Haldeman (john.haldeman@infoinsightsllc.com)

Information Management Consultant Information Insights LLC

[13] Kamlesh Kumar Hingwe, S. Mary SairaBhanu (2014). Sensitive Data Protection of DBaaS using OPE and FPE,

2014 Fourth International Conference of Emerging Applications of Information Technology, 978-1-4799-4272-

5/14 $31.00 © 2014 IEEE DOI 10.1109/EAIT.2014.22 pg no. 320-327

[14] S. Vijayarani, Dr. A. Tamilarasi (2011). An Efficient Masking Technique for Sensitive Data Protection, IEEE-

International Conference on Recent Trends in Information Technology, ICRTIT 2011 978-1-4577-0590-

8/11/$26.00 ©2011 IEEE, MIT, Anna University, Chennai. June 3-5, 2011

[15] Muralidhar, K. and R.Sarathy,(1999). Security of Random Data Perturbation Methods, ACM Transactions on

Database Systems, 24(4), 487-493.

[16] Data Masking: What You Need to Know What You Really Need To Know Before You Begin A Net 2000 Ltd.

White Paper.

[17] ChiragGoyal. Data Masking: Need, Techniques & Solutions, International Research Journal of Management

Science & Technology (IRJMST)Vol 6 Issue 5 [Year 2015] ISSN 2250 – 1959 (0nline) 2348 – 9367 (Print) Pg no

221-229

[18] Data Sanitization Techniques. A Net 2000 Ltd. White Paper. Copyright © Net 2000 Ltd. 2010 Available at

http://www.DataMasker.com

[19] Muralidhar and Sarathy. Data Shuffling—A New Masking Approach for Numerical Data, MANAGEMENT

SCIENCE Vol. 52, No. 5, May 2006, pp. 658–670 ISSN 0025-1909 ©2006 INFORMS

[20] https://www.red-gate.com/hub/product-learning/sql-provision/masking-data-practice

[21] XiaolingXia ,Qiang Xiao and Wei Ji (2012). An Efficient Method to Implement Data Private Protection for

dynamic Numerical Sensitive Attributes, The 7th International Conference on Computer Science & Education

(ICCSE 2012) July 14-17, 2012. Melbourne, Australia.

[22]Report on data masking Tools available on https://www.bloorresearch.com/research/data-masking-2017/

[23] Yeh, I. C., & Lien, C. H. (2009). The comparisons of data mining techniques for the predictive accuracy of

probability of default of credit card clients. Expert Systems with Applications, 36(2), 2473-2480.

AUTHOR

Ruby Bhuvan Jain received the BCA and M.Sc.(I.T.) degrees from MCRPV in 2003 and 2005 respectively. In 2006, received

MCA from GJU, Hisar. Having 10 years of teaching experience in various UG and PG colleges under SPPU. Published and

presented multiple research papers in national and international journals and conferences.

Dr. Manimal Puri

Volume 8, Issue 3, March 2019 Page 389

International Journal of Application or Innovation in Engineering & Management (IJAIEM)

Web Site: www.ijaiem.org Email: editor@ijaiem.org

Volume 8, Issue 3, March 2019 ISSN 2319 - 4847

Over 20 years of experience in Strategic Planning, Academic Operations, Corporate Communication, Teaching and Tutoring and

General Administration with reputed organisations in the education sector. Proficient in managing successful business operations

with proven ability of achieving Service Delivery/Business Targets. Extensive experience of facilitating/coaching students.

Distinction of being deputed at All India Council for Technical Education- An apex body in field of technical education under

Mininstry of Human Resources and Development,India. A keen planner and strategist with proven abilities in corporate

communication image and brand

building. Adept at handling day to day activities in co-ordination with internal/external departments for smooth business operations.

A skilled communicator with exceptional presentation skills and abilities.

Volume 8, Issue 3, March 2019 Page 390

You might also like

- Mother Ourselves ManualDocument26 pagesMother Ourselves ManualAlexis Pauline Gumbs100% (9)

- Information Security Policies and Procedures: Corporate Policies-Tier 1, Tier 2 and Tier3 PoliciesDocument42 pagesInformation Security Policies and Procedures: Corporate Policies-Tier 1, Tier 2 and Tier3 PoliciesSanskrithi TigerNo ratings yet

- EmergingDocument8 pagesEmergingSani MohammedNo ratings yet

- Maina Assignment1Document19 pagesMaina Assignment1Peter Osundwa KitekiNo ratings yet

- Application of Data Masking in Achieving Information PrivacyDocument9 pagesApplication of Data Masking in Achieving Information PrivacyIOSRJEN : hard copy, certificates, Call for Papers 2013, publishing of journalNo ratings yet

- Group 6 MSCSCM626Document24 pagesGroup 6 MSCSCM626Tinotenda ChiveseNo ratings yet

- International Journal of Multimedia and Ubiquitous EngineeringDocument10 pagesInternational Journal of Multimedia and Ubiquitous EngineeringPeter Osundwa KitekiNo ratings yet

- RohitDocument75 pagesRohitRobin PalanNo ratings yet

- Firestone Data Security 071118Document26 pagesFirestone Data Security 071118shashiNo ratings yet

- IJMIE5April24 33214Document9 pagesIJMIE5April24 33214editorijmieNo ratings yet

- The DAMA Guide To The Data Management 7a 8Document61 pagesThe DAMA Guide To The Data Management 7a 8jorgepr4d4No ratings yet

- Bereket H - MDocument24 pagesBereket H - MHailemickael GulantaNo ratings yet

- Security and Privacy - A Big Concern in Big Data A Case Study On Tracking and Monitoring SystemDocument4 pagesSecurity and Privacy - A Big Concern in Big Data A Case Study On Tracking and Monitoring SystemabswaronNo ratings yet

- Session 3 4 Data Literacy Privacy EthicsDocument19 pagesSession 3 4 Data Literacy Privacy EthicsNikhila LakkarajuNo ratings yet

- Chapter 7 Data Security - DONE DONE DONEDocument41 pagesChapter 7 Data Security - DONE DONE DONERoche ChenNo ratings yet

- Data Protection and PrivacyDocument10 pagesData Protection and PrivacyKARTIK DABASNo ratings yet

- InfoDocument5 pagesInfoprojectssupervision70No ratings yet

- B Cyber Security Report 20783021 en - UsDocument7 pagesB Cyber Security Report 20783021 en - UsAbizer HiraniNo ratings yet

- The 12 Elements of An Information Security Policy - Reader ViewDocument7 pagesThe 12 Elements of An Information Security Policy - Reader ViewHoney DhaliwalNo ratings yet

- Lopiga, Hermanio A. COMPUTER ENGINEERINGDocument4 pagesLopiga, Hermanio A. COMPUTER ENGINEERINGLOPIGA, HERSHA MHELE A.No ratings yet

- ACC705 Short Answers #3 S1 2020Document8 pagesACC705 Short Answers #3 S1 2020Shazana KhanNo ratings yet

- 8 Components of IM ACTIVITYDocument5 pages8 Components of IM ACTIVITYJohn Louie IrantaNo ratings yet

- Dataguise WP Why Add Masking PDFDocument8 pagesDataguise WP Why Add Masking PDFdubillasNo ratings yet

- Module 6 EETDocument27 pagesModule 6 EETPratheek PoojaryNo ratings yet

- Big Data and Security ChallengesDocument2 pagesBig Data and Security ChallengesBilal Bin TaifoorNo ratings yet

- Privacy - by - Design 10.1007/s12394 010 0055 XDocument8 pagesPrivacy - by - Design 10.1007/s12394 010 0055 XscribrrrrNo ratings yet

- Big Data and Data Security 2Document6 pagesBig Data and Data Security 2Adeel AhmedNo ratings yet

- Information Security Communication PlanDocument10 pagesInformation Security Communication PlanScribdTranslationsNo ratings yet

- Big Data SecurityDocument4 pagesBig Data SecurityIbrahim MezouarNo ratings yet

- Business Value of Security ControlDocument3 pagesBusiness Value of Security ControlTowhidul IslamNo ratings yet

- EMERGING TECHNOLOGIES (DATA PROCESSING) UpdatedDocument14 pagesEMERGING TECHNOLOGIES (DATA PROCESSING) UpdatedDARIAN FRANCIS 19213031No ratings yet

- ATARC AIDA Guidebook - FINAL ZDocument5 pagesATARC AIDA Guidebook - FINAL ZdfgluntNo ratings yet

- Assgnment FridayDocument7 pagesAssgnment FridayrobertbillatteNo ratings yet

- Consumer Behaviour ProjectDocument9 pagesConsumer Behaviour Projectsumit ranjanNo ratings yet

- Information Security Means Protecting Information and Information Systems From Unauthorized AccessDocument1 pageInformation Security Means Protecting Information and Information Systems From Unauthorized AccessJohn MasikaNo ratings yet

- Authenticated Medical Documents Releasing With Privacy Protection and Release ControlDocument4 pagesAuthenticated Medical Documents Releasing With Privacy Protection and Release ControlInternational Journal of Trendy Research in Engineering and TechnologyNo ratings yet

- Big Data and Security ChallengesDocument2 pagesBig Data and Security ChallengesBilal Ahmad100% (1)

- Maintaining Your Data: Internet SecurityDocument20 pagesMaintaining Your Data: Internet SecuritySenathipathi RamkumarNo ratings yet

- Blue Doodle Project Presentation 20240507 195451 0000Document18 pagesBlue Doodle Project Presentation 20240507 195451 0000enemecio eliNo ratings yet

- Why Is BIG Data Important?: A Navint Partners White PaperDocument5 pagesWhy Is BIG Data Important?: A Navint Partners White PaperparasharaNo ratings yet

- Sample MootDocument5 pagesSample MootMaha PrabhuNo ratings yet

- Itech Finish CW EditiedDocument12 pagesItech Finish CW EditiedTroy LoganNo ratings yet

- CSS L-5Document6 pagesCSS L-5Utkarsh Singh MagarauthiyaNo ratings yet

- "Final Requirement in Pharmacy Informatics" (Laboratory) : Submitted By: Angeline Karylle C. Mejia, Bs-PharmacyDocument10 pages"Final Requirement in Pharmacy Informatics" (Laboratory) : Submitted By: Angeline Karylle C. Mejia, Bs-PharmacyAngeline Karylle MejiaNo ratings yet

- Ch6 - EthicsDocument21 pagesCh6 - EthicsmutgatkekdengNo ratings yet

- BSC Degree in Information TechnologyDocument15 pagesBSC Degree in Information Technologysenarathgayani95No ratings yet

- A Systematic Approach To E-Business SecurityDocument5 pagesA Systematic Approach To E-Business SecurityIRJCS-INTERNATIONAL RESEARCH JOURNAL OF COMPUTER SCIENCENo ratings yet

- Big Data and Security ChallengesDocument3 pagesBig Data and Security ChallengesHasnain Khan AfridiNo ratings yet

- ISCA Chap 9Document24 pagesISCA Chap 9Abhishek ChaudharyNo ratings yet

- Data Security - Challenges and Research OpportunitiesDocument5 pagesData Security - Challenges and Research Opportunities22ec10va480No ratings yet

- Big Data PrivacyDocument30 pagesBig Data Privacymr_ton1e46No ratings yet

- IG Workbook V7 2017-18 20170411 PDFDocument21 pagesIG Workbook V7 2017-18 20170411 PDFjanushan05No ratings yet

- What Is Data PrivacyDocument13 pagesWhat Is Data PrivacyyemaneNo ratings yet

- Who Is Watching YouDocument3 pagesWho Is Watching YouMercy MhazoNo ratings yet

- IASDocument11 pagesIASAsna AbdulNo ratings yet

- Data Residency. Your Organization Is Full of Sensitive Data AndDocument3 pagesData Residency. Your Organization Is Full of Sensitive Data AndAllaiza SantosNo ratings yet

- Information SystemsDocument5 pagesInformation SystemsDumisani NyathiNo ratings yet

- ATARC AIDA Guidebook - FINAL XDocument4 pagesATARC AIDA Guidebook - FINAL XdfgluntNo ratings yet

- Security of Information in Commercial and Business OrganizationsDocument14 pagesSecurity of Information in Commercial and Business OrganizationsAssignmentLab.com100% (1)

- Data-Driven Business Strategies: Understanding and Harnessing the Power of Big DataFrom EverandData-Driven Business Strategies: Understanding and Harnessing the Power of Big DataNo ratings yet

- Study of Customer Experience and Uses of Uber Cab Services in MumbaiDocument12 pagesStudy of Customer Experience and Uses of Uber Cab Services in MumbaiInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- THE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSDocument7 pagesTHE TOPOLOGICAL INDICES AND PHYSICAL PROPERTIES OF n-HEPTANE ISOMERSInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Detection of Malicious Web Contents Using Machine and Deep Learning ApproachesDocument6 pagesDetection of Malicious Web Contents Using Machine and Deep Learning ApproachesInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Analysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyDocument6 pagesAnalysis of Product Reliability Using Failure Mode Effect Critical Analysis (FMECA) - Case StudyInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Design and Detection of Fruits and Vegetable Spoiled Detetction SystemDocument8 pagesDesign and Detection of Fruits and Vegetable Spoiled Detetction SystemInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewDocument9 pagesAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- An Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewDocument9 pagesAn Importance and Advancement of QSAR Parameters in Modern Drug Design: A ReviewInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Soil Stabilization of Road by Using Spent WashDocument7 pagesSoil Stabilization of Road by Using Spent WashInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- The Mexican Innovation System: A System's Dynamics PerspectiveDocument12 pagesThe Mexican Innovation System: A System's Dynamics PerspectiveInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Performance of Short Transmission Line Using Mathematical MethodDocument8 pagesPerformance of Short Transmission Line Using Mathematical MethodInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Deep Learning Based Assistant For The Visually ImpairedDocument11 pagesA Deep Learning Based Assistant For The Visually ImpairedInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)Document10 pagesA Comparative Analysis of Two Biggest Upi Paymentapps: Bhim and Google Pay (Tez)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Staycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityDocument10 pagesStaycation As A Marketing Tool For Survival Post Covid-19 in Five Star Hotels in Pune CityInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Synthetic Datasets For Myocardial Infarction Based On Actual DatasetsDocument9 pagesSynthetic Datasets For Myocardial Infarction Based On Actual DatasetsInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Design and Manufacturing of 6V 120ah Battery Container Mould For Train Lighting ApplicationDocument13 pagesDesign and Manufacturing of 6V 120ah Battery Container Mould For Train Lighting ApplicationInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Impact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryDocument8 pagesImpact of Covid-19 On Employment Opportunities For Fresh Graduates in Hospitality &tourism IndustryInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Ijaiem 2021 01 28 6Document9 pagesIjaiem 2021 01 28 6International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Anchoring of Inflation Expectations and Monetary Policy Transparency in IndiaDocument9 pagesAnchoring of Inflation Expectations and Monetary Policy Transparency in IndiaInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- The Effect of Work Involvement and Work Stress On Employee Performance: A Case Study of Forged Wheel Plant, IndiaDocument5 pagesThe Effect of Work Involvement and Work Stress On Employee Performance: A Case Study of Forged Wheel Plant, IndiaInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Swot Analysis of Backwater Tourism With Special Reference To Alappuzha DistrictDocument5 pagesSwot Analysis of Backwater Tourism With Special Reference To Alappuzha DistrictInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- KoohejiCo Portfolio 2017Document31 pagesKoohejiCo Portfolio 2017Ahmed AlkoohejiNo ratings yet

- CHNDocument42 pagesCHNvinwaleed50% (2)

- Business English VocabularyDocument28 pagesBusiness English VocabularyputriNo ratings yet

- Lesson 06 Past Perfect SimpleDocument17 pagesLesson 06 Past Perfect SimpleAhmed EltohamyNo ratings yet

- Public Sector AccountingDocument415 pagesPublic Sector AccountingZebedy Victor ChikaNo ratings yet

- Case Study 1Document2 pagesCase Study 1shwetauttam0056296No ratings yet

- Art in Pre-Colonial PhilippinesDocument3 pagesArt in Pre-Colonial PhilippinesBacaro JosyndaNo ratings yet

- en 20220825141030Document2 pagesen 20220825141030mokhtarNo ratings yet

- MN88472 PDFDocument1 pageMN88472 PDFthagha mohamedNo ratings yet

- Chapter 12Document31 pagesChapter 12KookieNo ratings yet

- Tracking, Delivery Status For DHL Express Shipments - MyDHL+Document2 pagesTracking, Delivery Status For DHL Express Shipments - MyDHL+Linda tonyNo ratings yet

- Thesis Statement For Twelfth NightDocument8 pagesThesis Statement For Twelfth Nightafhbexrci100% (2)

- 4 Process ApproachDocument6 pages4 Process ApproachBãoNo ratings yet

- IEP - Individualized Education PlanDocument9 pagesIEP - Individualized Education PlanCho Madronero LagrosaNo ratings yet

- Science, Technology, and Nation BuildingDocument19 pagesScience, Technology, and Nation BuildingElla Mae Kate GoopioNo ratings yet

- Moot ProblemsDocument20 pagesMoot ProblemsAryan KumarNo ratings yet

- BDO Remit Counters in SM For WebsiteDocument3 pagesBDO Remit Counters in SM For WebsiteArchAngel Grace Moreno BayangNo ratings yet

- CONVENTION No. 169Document160 pagesCONVENTION No. 169Wanderer leeNo ratings yet

- Singian Jr. Vs Sandiganbayan Rule 33Document2 pagesSingian Jr. Vs Sandiganbayan Rule 33Theodore0176100% (4)

- The Beatles With A Little Help From My Friends LyricsDocument23 pagesThe Beatles With A Little Help From My Friends Lyricswf34No ratings yet

- Grade 7, Quarter 4Document81 pagesGrade 7, Quarter 4che fhe100% (1)

- Grilled Almond Barfi (Sugar Free) RecipeDocument5 pagesGrilled Almond Barfi (Sugar Free) Reciperishisap2No ratings yet

- Statement of Account: Date Transaction Type Amount NAV in INR (RS.) Price in INR (RS.) Number of Units Balance UnitDocument3 pagesStatement of Account: Date Transaction Type Amount NAV in INR (RS.) Price in INR (RS.) Number of Units Balance Unitkousik chakrabortiNo ratings yet

- Term Paper On Income TaxDocument8 pagesTerm Paper On Income Taxdajev1budaz2100% (1)

- Lesson I Finalizing Evaluated Business Plan What Is This Lesson About?Document17 pagesLesson I Finalizing Evaluated Business Plan What Is This Lesson About?AshMere MontesinesNo ratings yet

- Zambales Chromite Mining Co., Inc. v. Leido PDFDocument2 pagesZambales Chromite Mining Co., Inc. v. Leido PDFNorie SapantaNo ratings yet

- Morfemas BrayanDocument3 pagesMorfemas BrayanSttefaNy Peña AlvarezNo ratings yet

- A Study of Accounting Standard: As 6 DepreciationDocument55 pagesA Study of Accounting Standard: As 6 DepreciationNehaNo ratings yet

- Strategies Improving Adult Learning: European GuideDocument160 pagesStrategies Improving Adult Learning: European GuideBudulan RaduNo ratings yet