Micro Design

For

ABC Framework

Owner: IBM

Project

Creation Date: 20/05/12

Last Updated: 18/09/12

Version: V1.3

Authors: IBM

Version: V1.3 Page 1 of 26

Date: 20/09/12

File Name Micro Design Document for ETL Framework and Generic ABC validation

Document Revision History

Revision Number Revision Date Revision Made by Summary of Changes

Draft 20/05/12 IBM New document

V1.1 08/06/12 IBM 1. Added new table GLOBAL_CONFIG

2. Added new fields in OBJECT_DATA_METADATA

3. Modified the field lengths in table BATCH_JOB_AUDIT

V1.2 IBM 1. Autosys process updated

2. Minor modifications to metadata tables

V1.3 18/05/2012 IBM 1. Custom ABC check metadata tables added

2. New key field (ABC_CHECK_ID) added to BATCH_JOB_AUDIT table

3. Mapping picture changed for generic check

Approval List

Serial# Name Position Sign-off Status Sign-off Date

1

2

3

4

Distribution List

Serial# Name Email id Position

1

2

3

Table of Contents

1 INTRODUCTION

2 PROGRAM SPECIFICATION

2.1 Tables used for ETL framework and ABC verification

2.2 INFORMATICA ETL Objects Created for ABC checks

3 UNIX SHELL SCRIPTS

3.1 Autosys Dependency Jobs

4 UTILITIES

1 Introduction

The purpose of this document is to provide detailed information about the ETL process control framework and the audit-balance-check (ABC) functionality, which supports the following:

1. Tracking ETL batch execution

2. Maintaining an audit trail of the files / database extracts received and used in each batch execution cycle

3. Maintaining an audit trail of each job executed and its associated job statistics

2 Program Specification

As part of the process flow, the ETL framework performs audit, balance and control for all source systems, whether the source is a flat file or a relational database.

Data Load to Landing Area:

Data loading is audited differently depending on the type of interface between the source system and the landing area:

For systems that deliver files onto the ETL server, audit-balance-checks are done using shell scripts.

For systems whose files are pulled via sFTP from the source system server, audit-balance-checks are done using shell scripts.

For systems whose data is pulled from the source system database using Informatica mappings, audit-balance-checks are done using Informatica worklets.

Data Load to Staging Area:

Data is loaded from the landing area to the staging area by Informatica workflows called from the ETL framework (Unix shell script) programs. In these cases, audit-balance-checks are done using an Informatica worklet.

The ABC framework maintains the job stream and job execution history, and the results of the ABC checks performed, in a set of metadata and transaction tables, as listed below.

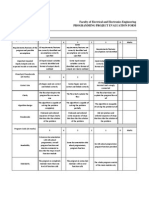

2.1 Tables used for ETL framework and ABC verification

ABC Metadata tables:

SYSTEM_CONFIG_METADATA - This table maintains the metadata related to the various folders and the FTP location details for each source system. Data from this table is used during job execution to set Informatica parameters for source and target files, log files, bad files, etc.

GLOBAL_CONFIG - This table maintains the metadata related to success/failure email IDs / group IDs and other parameters, such as the waiting period for a dependent process to search for parent job completion.

JOB_STREAM_METADATA – This table is used to hold the metadata for job streams. Appropriate values need to be

populated in this table for each job stream during code deployment.

OBJECT_DATA_METADATA – This table contains the metadata related to each job and each table loaded. It holds metadata such as the source object name, target object name, source object pattern, acquisition type, acquisition frequency, and which generic ABC checks apply to each job name.

The generic ABC checks are:

Record count

Checksum

Range checks vis-a-vis previous period data

Checks for zero record count

Checks for same count and checksum on consecutive periods

Appropriate values need to be populated manually in this table for each job.

ABC_CHECK_METADATA – This table keeps the metadata for each custom ABC check to be performed against a job. These checks are used primarily during loads from Staging to SOR and from SOR to Mart.

JOB_PARAM_DATA – This table holds all parameters and their values for all workflows/sessions, organised by section: the “Global” section and the object-specific sections.

Detailed transaction / ABC tables:

JOB_STREAM – This table keeps the audit data for each job stream execution. It records details such as the date and time of the job stream execution, its status, and the business processing date associated with the job stream.

BATCH_JOB_STATUS – This table keeps the audit data for the execution of each job defined in the OBJECT_DATA_METADATA table.

BATCH_JOB_AUDIT – This table keeps the audit data for the results of the generic as well as the custom ABC checks defined against each job in the OBJECT_DATA_METADATA table. A new field, ABC_CHECK_ID, identifies which ABC rule each record corresponds to.
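The generic checks listed above reduce to a handful of comparisons per job. The Python sketch below shows one way to turn the OBJECT_DATA_METADATA flags ("ABORT" / "NA") into BATCH_JOB_AUDIT-style result columns. The class and function names are hypothetical, and the range formula (change of the current checksum versus the previous period's, as a percentage for 'PERCENT' or as an absolute difference for 'ABS') is an assumption, since the document does not spell it out.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class GenericCheckFlags:
    """Per-job flags mirroring OBJECT_DATA_METADATA ("ABORT" or "NA")."""
    count_check: str = "NA"
    checksum_check: str = "NA"
    count_zero: str = "NA"
    checksum_zero: str = "NA"
    prev_cnt_csm_same: str = "NA"
    range_check: str = "NA"
    range_type: str = "PERCENT"         # "ABS" or "PERCENT"
    range_min: Optional[float] = None   # None -> no lower limit
    range_max: Optional[float] = None   # None -> no upper limit


def _result(flag: str, passed: bool) -> Optional[str]:
    # A check flagged "NA" is not performed, so it records no result.
    if flag != "ABORT":
        return None
    return "SUCCESS" if passed else "FAIL"


def within_range(prev: float, curr: float, flags: GenericCheckFlags) -> bool:
    # Assumption: PERCENT bounds the change versus the previous period as a
    # percentage of the previous value; ABS bounds the absolute change.
    if flags.range_type == "PERCENT":
        if prev == 0:
            return curr == 0  # no percentage change can be computed from zero
        change = (curr - prev) / abs(prev) * 100.0
    else:
        change = curr - prev
    if flags.range_min is not None and change < flags.range_min:
        return False
    if flags.range_max is not None and change > flags.range_max:
        return False
    return True


def evaluate_generic_checks(
    flags: GenericCheckFlags,
    ctl_cnt: int, ctl_chksum: float,
    curr_cnt: int, curr_chksum: float,
    prev_cnt: int, prev_chksum: float,
) -> Tuple[Dict[str, Optional[str]], bool]:
    """Return result columns (SUCCESS/FAIL/None) and whether the job should abort."""
    results = {
        "COUNT_RESULT": _result(flags.count_check, ctl_cnt == curr_cnt),
        "CHECKSUM_RESULT": _result(flags.checksum_check, ctl_chksum == curr_chksum),
        "CNT_ZERO_RESULT": _result(flags.count_zero, curr_cnt != 0),
        "CHKSUM_ZERO_RESULT": _result(flags.checksum_zero, curr_chksum != 0),
        "CNT_CSM_SAME_RESULT": _result(
            flags.prev_cnt_csm_same,
            not (curr_cnt == prev_cnt and curr_chksum == prev_chksum),
        ),
        "RANGE_RESULT": _result(
            flags.range_check, within_range(prev_chksum, curr_chksum, flags)
        ),
    }
    abort = any(r == "FAIL" for r in results.values())
    return results, abort
```

Returning None for "NA" checks keeps "not performed" distinct from "passed", which matters when the audit row is later reviewed.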

Description of the columns of each table used:

Control Famework

DDL.zip

Data Model

JOB_STREAM_METADATA :

Column Name Description

JOB_STREAM_NAME Name of the job stream, such as STG_VP_LOAD, STG_PRIMA_LOAD, etc.

RUN_FREQUENCY Run frequency: 'D' for daily, 'W' for weekly, 'M' for monthly, etc.

BUSINESS_DATE Date to which the data belongs, in 'dd-MMM-yy' format

JOB_STREAM:

Column Name Description

JOB_STREAM_ID Sequence-generated unique number created for each job stream execution

JOB_STREAM_NAME Name of the job stream, such as STG_VP_LOAD, STG_PRIMA_LOAD, SOR_VP_LOAD, etc.

EDW_AREA Warehouse area: 'STG' for staging, 'SOR' for the enterprise data warehouse, 'MART' for the mart area

BUSINESS_PROCESSING_DATE Date to which the data belongs, in 'dd-MMM-yy' format

JOB_STREAM_START_DATETIME Start time of the job stream execution

JOB_STREAM_END_DATETIME Completion time of the job stream execution

JOB_STREAM_PROCESS_STATUS Status of the job stream: 'SUCCESS', 'FAIL', 'RUN' or 'SKIP', depending on the job stream execution

OBJECT_DATA_METADATA :

Column Name Description

OBJECT_ID Unique number created for each job name

SYSTEM_NAME Name of the source system, such as VP, PRIMA, LAS, LAG, etc.

SOURCE_OBJECT_NAME Name of the source entity (a table or a flat file) for the job

TARGET_OBJECT_NAME Name of the target entity (a table or a flat file) for the job

ACQUISITION_TYPE Acquisition type: PUSH or PULL, depending on the system

ACQUISITION_FREQUENCY Acquisition frequency: 'DAILY', 'WEEKLY' or 'MONTHLY'

HEADER_LINE_CNT Number of header records

TRAILER_LINE_CNT Number of trailer records

JOB_NAME Name of the job; for Informatica jobs it is the workflow name.

COUNT_CHECK Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the record count from the control file does not match the actual number of records inserted into the table.

NA – the count check is not performed for the job.

CHECKSUM_CHECK Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the checksum value from the control file does not match the actual checksum value of the same column for the records inserted into the table.

NA – the checksum check is not performed for the job.

COUNT_ZERO Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the actual number of records inserted into the table is zero.

NA – the zero-count check is not performed for the job.

CHECKSUM_ZERO Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the checksum value of the column is zero.

NA – the zero-checksum check is not performed for the job.

PREV_CNT_CSM_SAME Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the previous and current record counts and checksum values for the table are the same.

NA – this check is not performed for the job.

RANGE_CHECK Holds “ABORT” or “NA”.

ABORT – the job's ABC check fails if the checksum does not fall within the defined range.

NA – the range check is not performed for the job.

RANGE_TYPE 'ABS' for absolute or 'PERCENT' for percentage

RANGE_MIN Zero/NULL if RANGE_CHECK is 'NA'; otherwise the lower limit of the range, in the unit given by RANGE_TYPE (a percentage when RANGE_TYPE is 'PERCENT'). NULL → no lower limit

RANGE_MAX Zero/NULL if RANGE_CHECK is 'NA'; otherwise the upper limit of the range, in the unit given by RANGE_TYPE (a percentage when RANGE_TYPE is 'PERCENT'). NULL → no upper limit

INPUT_FILE_PATTERN Pattern used by the sFTP mechanism to identify which files to transfer from the remote server

SOURCE_OBJECT_TYPE Object type of the source entity (FILE / TABLE)

TARGET_OBJECT_TYPE Object type of the target entity (FILE / TABLE)

CHKSUM_EXPR Checksum expression, for example SUM(ABS(col_name))

CHKSUM_WHERECLAUSE WHERE clause applied with the checksum expression, for example WHERE RECORD_ID='2' (as done for HTSM in the VP system)

RANGE_EXPR Range expression

RANGE_WHERECLAUSE WHERE clause applied with the range expression

HDR_CHECKSUM_DIV Divisor applied to the header checksum where a file type requires it (default 1; for some file types, such as HTSM, ATH2 and ATH4, it is 100)
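To make the interplay of CHKSUM_EXPR, CHKSUM_WHERECLAUSE and HDR_CHECKSUM_DIV concrete, here is a small Python sketch that composes the target-side checksum query and scales the header checksum before comparison. The function names and the table name STG_VP_HTSM are hypothetical; the real composition happens inside the Informatica mapping through $$CHECKSUM_EXPR, $$WHERECLAUSE and $$CHECKSUM_DIV.

```python
from decimal import Decimal
from typing import Optional


def build_checksum_sql(table: str, chksum_expr: str,
                       chksum_whereclause: Optional[str] = None) -> str:
    """Compose the target-side checksum query for one job.

    CHKSUM_WHERECLAUSE is documented with its WHERE keyword included
    (e.g. "WHERE RECORD_ID='2'"), so it is appended verbatim.
    """
    sql = f"SELECT {chksum_expr} FROM {table}"
    if chksum_whereclause:
        sql += " " + chksum_whereclause.strip()
    return sql


def scaled_header_checksum(raw_value: str, hdr_checksum_div: int = 1) -> Decimal:
    """Divide the header checksum by HDR_CHECKSUM_DIV before comparison."""
    if hdr_checksum_div == 0:
        raise ValueError("HDR_CHECKSUM_DIV must not be zero")
    # Decimal avoids binary floating-point rounding on monetary checksums.
    return Decimal(raw_value.strip()) / Decimal(hdr_checksum_div)
```

For example, a header checksum of "123456" with a divisor of 100 becomes Decimal("1234.56"), which is then compared with the result of the checksum query.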

BATCH_JOB_STATUS :

Column Name Description

JOB_RUN_ID Sequence-generated unique number created for each job execution

JOB_STREAM_ID Taken from the JOB_STREAM table for the job stream being executed

OBJECT_ID Object ID associated with the job name

BUSINESS_PROCESSING_DATE Date for which the job stream/job is being run

JOB_START_DATETIME Start timestamp of the job

JOB_END_DATETIME Completion timestamp of the job

JOB_RUN_STATUS Status of the job: 'SUCCESS', 'FAIL', 'RUN' or 'SKIP', depending on the job execution

BATCH_JOB_AUDIT :

Column Name Description

JOB_RUN_ID Sequence-generated unique number created for each job execution

JOB_STREAM_ID Taken from the JOB_STREAM table for the job stream being executed

OBJECT_ID Object ID associated with the job name being executed

SOURCE_RECORD_EXTRACTED Actual number of source records extracted during the job run

TARGET_RECORD_INSERTED Actual number of records inserted into the target table during the job run

TARGET_RECORD_UPDATED Actual number of records updated in the target table during the job run

RUN_DATE Date for which the job is being run

PREV_CNT Actual record count of the previous period's run of the job

PREV_CHKSUM Actual checksum value of the previous period's run of the job

CURR_CNT Actual record count of the current run of the same job

CURR_CHKSUM Actual checksum value of the current run of the same job

RANGE_MIN_VAL Minimum value of the range for the job being run

RANGE_MAX_VAL Maximum value of the range for the job being run

COUNT_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'COUNT' check for the job

CHECKSUM_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'CHECKSUM' check for the job

CNT_ZERO_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'CNT_ZERO' check for the job

CHKSUM_ZERO_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'CHKSUM_ZERO' check for the job

CNT_CSM_SAME_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'CNT_CSM_SAME' check for the job

RANGE_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC 'RANGE' check for the job

HEADER_CHKSUM Checksum value shown in the header line of the flat file source

HEADER_CNT Record count shown in the header line of the flat file source

HEADER_BUSINESS_DATE Business date shown in the header line of the flat file source

BUSINESS_DATE_CHK_RESULT 'SUCCESS' or 'FAIL', depending on the outcome of the ABC business date check for the job

ABC_CHECK_ID ABC_CHECK_ID of each custom ABC check performed for each SOR or MART workflow; for the STG area the value is “NA”

ABC_CHECK_METADATA:

Column Name Description

ABC_CHECK_ID Number assigned when the metadata is populated

TARGET_OBJECT_NAME Target object name related to the job

MINIMUM_THRESHOLD Minimum threshold value for the target column in the range expression

MAXIMUM_THRESHOLD Maximum threshold value for the target column in the range expression

THRESHOLD_TYPE Type of threshold: PERCENT or ABS (absolute value)

SOURCE_CUSTOMS_SQL Complex source-side SQL stored for performing any kind of ABC check

SOURCE_COUNT_EXPR Expression that computes the actual count on the source side for the target table's column, e.g. COUNT(DISTINCT ACCT)

SOURCE_CHECKSUM_EXPR Expression that computes the actual checksum on the source side for the target table's column, e.g. SUM(LOGO)

TARGET_RANGE_FROM_CLAUSE FROM clause used to determine the actual range value of a particular target column, e.g. $$SOR_DB.$$TGT_OWNER.STG_VP_ATH1

SOURCE_FROM_CLAUSE FROM clause used to determine the checksum or count value of a particular source column, e.g. $$SOR_DB.$$TGT_OWNER.STG_VP_ATH1

SOURCE_WHERE_CLAUSE WHERE clause used to determine the checksum or count value of a particular source column, e.g. BUSINESS_DT=$$BUSINESS_DT

TARGET_COUNT_EXPR Expression that computes the actual count on the target table's column, e.g. COUNT(DISTINCT ACCT)

TARGET_CHECKSUM_EXPR Expression that computes the actual checksum on the target table's column, e.g. SUM(LOGO)

TARGET_RANGE_EXPR Expression on the target column whose range is to be determined, e.g. COUNT(DISTINCT ACCT)

TARGET_FROM_CLAUSE FROM clause used to determine the checksum or count value of a particular target column, e.g. $$SOR_DB.$$TGT_OWNER.STG_VP_ATH1

TARGET_WHERE_CLAUSE WHERE clause used to determine the checksum or count value of a particular target column, e.g. BUSINESS_DT=$$BUSINESS_DT

OBJECT_ID Object ID for which the ABC check is evaluated, e.g. 14 for ATH1

TARGET_RANGE_WHERE_CLAUSE WHERE clause used to determine the range value of a particular target column, e.g. BUSINESS_DT=$$BUSINESS_DT

TARGET_CUSTOM_SQL Complex target-side SQL stored for performing any kind of ABC check on the target table
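The expression, FROM and WHERE columns above are building blocks for one side (source or target) of a custom ABC query, with $$ parameters resolved at run time. The Python sketch below assembles and resolves such a query. The function names are hypothetical, and two behaviours are assumptions: a populated custom SQL column (SOURCE_CUSTOMS_SQL / TARGET_CUSTOM_SQL) replaces the assembled pieces, and the WHERE keyword is added because the *_WHERE_CLAUSE examples omit it.

```python
import re
from typing import Mapping, Optional

# Matches $$NAME parameters embedded in the stored clauses.
_PARAM = re.compile(r"\$\$(\w+)")


def resolve_params(text: str, params: Mapping[str, str]) -> str:
    """Replace each $$NAME with params[NAME]; a missing name raises KeyError."""
    return _PARAM.sub(lambda m: params[m.group(1)], text)


def build_abc_sql(expr: str, from_clause: str, where_clause: Optional[str],
                  params: Mapping[str, str],
                  custom_sql: Optional[str] = None) -> str:
    """Build one side (source or target) of a custom ABC check query."""
    if custom_sql:
        # Assumption: a populated custom SQL column takes precedence.
        return resolve_params(custom_sql, params)
    sql = f"SELECT {expr} FROM {from_clause}"
    if where_clause:
        # The *_WHERE_CLAUSE examples omit the WHERE keyword, so add it here.
        sql += f" WHERE {where_clause}"
    return resolve_params(sql, params)
```

Raising KeyError on an unknown parameter is deliberate in this sketch: silently leaving a literal "$$NAME" in the SQL would only surface later as a database error.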

SYSTEM_CONFIG_METADATA

Column Name Description

SYSTEM_NAME Name of the source system, such as VP, PRIMA, FINONE

INBOUND_DIR Directory where the incoming source files are placed

REJECT_DIR Directory for data files rejected by file validation

ARCHIVE_DIR Directory for archiving data files that passed file validation, before the Informatica job runs for that file

SRC_FILE_DIR Directory path where source files are kept

TGT_FILE_DIR Directory path where target files are kept

FTP_SERVER Hostname of the FTP server

FTP_SRC_DIR Source file location on the FTP server

FTP_USER User name used to log in to the FTP server

BAD_FILE_DIR Directory for files rejected during the Informatica load

SESS_LOG_DIR Directory for session log files created during Informatica execution

GLOBAL_CONFIG

Column Name Description

SUCCESS_EMAILID Email ID notified when jobs succeed

FAILURE_EMAILID Email ID notified when jobs fail

FILE_WATCHER_WAIT_INTERVAL Waiting period (in seconds) during which an Autosys dependent job searches for the file that signals its parent job's completion
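FILE_WATCHER_WAIT_INTERVAL bounds how long a dependent job keeps looking for its parent's completion file. A minimal Python sketch of that bounded polling loop follows; the function name, the marker-file path and the 5-second polling step are hypothetical, and in this framework the wait is driven by Autosys rather than by Python.

```python
import os
import time


def wait_for_file(path: str, wait_interval: float, poll_every: float = 5.0) -> bool:
    """Poll for `path` until it exists or `wait_interval` seconds have elapsed.

    Returns True if the file appeared in time, False on timeout.
    """
    deadline = time.monotonic() + wait_interval
    while True:
        if os.path.exists(path):
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Never sleep past the deadline.
        time.sleep(min(poll_every, remaining))
```

time.monotonic() is used so that a system clock change during the wait cannot shorten or extend the interval.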

JOB_PARAM_DATA

Column Name Description

OBJECT_ID Object ID of a particular job name

PARAM_SECTION Section for the job name, such as “GLOBAL” or “[ORGNAME_STG_VP.WF:wf_stg_VP_ATH1.ST:s_m_stg_VP_ATH1]”

PARAM_NAME Name of a parameter used during workflow (job name) execution, such as $$JOB_STRM_ID or $PMSessionLogFile

PARAM_VALUE Actual value of the parameter used during workflow (job name) execution, such as STG_VP_ATH1_JSID for $$JOB_STRM_ID (replaced dynamically with the actual value, e.g. 1, 1001, etc.) or “s_m_stg_VP_ATH1.log” for $PMSessionLogFile
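The JOB_PARAM_DATA rows map naturally onto a parameter file: the GLOBAL rows (no OBJECT_ID) followed by the rows of one job, grouped by PARAM_SECTION. The Python sketch below renders such a file from rows already fetched from the table. The function name and sample values are hypothetical, and wrapping an unbracketed section value such as GLOBAL in square brackets is an assumption about how that section is written out.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# (OBJECT_ID, PARAM_SECTION, PARAM_NAME, PARAM_VALUE); OBJECT_ID is None for GLOBAL rows.
ParamRow = Tuple[Optional[int], str, str, str]


def render_param_file(rows: Iterable[ParamRow], object_id: int) -> str:
    """Render the template parameter file for one job.

    Mirrors a "union all": rows without an OBJECT_ID (GLOBAL section) first,
    then the rows belonging to this job's OBJECT_ID.
    """
    rows = list(rows)
    selected = [r for r in rows if r[0] is None] + [r for r in rows if r[0] == object_id]
    sections: Dict[str, List[str]] = {}  # dicts keep insertion order
    for _, section, name, value in selected:
        # Assumption: unbracketed section values such as "GLOBAL" get brackets.
        header = section if section.startswith("[") else f"[{section}]"
        sections.setdefault(header, []).append(f"{name}={value}")
    lines: List[str] = []
    for header, entries in sections.items():
        lines.append(header)
        lines.extend(entries)
    return "\n".join(lines) + "\n"
```

Values such as STG_VP_ATH1_JSID are left as placeholders at this stage; they are substituted with run-time values in a separate step.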

Relation of Tables within ETL Framework (diagram; recoverable labels: JOB_PARAM_DATA, Global_Config)

2.2 INFORMATICA ETL Objects Created for ABC checks

a) Generic ABC check mapping used during staging area population:

b) Custom ABC check mapping used during SOR and MART area population:

(a) Mapping Details

Specification Type Specification

Mapping Name m_Generic_ABC_Check

Mapping Location ORGNAME_Shared

Processing Logic Performs the generic ABC checks that are defined as “ABORT” against each entity in the OBJECT_DATA_METADATA table.

It compares the record count and the checksum of a column, as given in the control file (generated from the header record of the source file), with the actual record count and the actual checksum of that column in the target table (once loaded from the source file/table).

Input Tables OBJECT_DATA_METADATA

Actual staging table

BATCH_JOB_AUDIT

Sequence of this mapping Runs once after each target table load from file

Parameter/ Variables $$TABLENAME → Name of the stage table

$$CHECKSUM_EXPR → Checksum expression, such as SUM(ABS(col_name)) or SUM(col_name)

$$ABCRESULT → To be used later for the generic ABC checks

$$JOB_NAME → Job name for which the ABC checks are being performed

$$JS_ID → Job stream ID for the job

$$JOB_RUN_ID → Current job run ID

$$SRCROW → Number of source records extracted, fetched from an Informatica metadata variable during execution of the mapping

$$TGTINSROW → Number of target records inserted, fetched from an Informatica metadata variable during execution of the mapping

$$BUS_DT_CTL_FILE → Business date from the source control file

$$CNT_CTL_FILE → Record count from the control file

$$CHKSUM_CTL_FILE → Checksum value from the control file

$$WHERECLAUSE → WHERE clause used to compute the checksum for files such as HTSM of the VP system

$$CHECKSUM_EXPR → Holds values such as SUM(ABS(COL_VAL1))

$$RANGE_EXPR → Range expression

$$RANGE_WHERECLAUSE → WHERE clause for the range expression

$$CHECKSUM_DIV → Divisor applied to the checksum value provided in the file header (as for ATH2, ATH4, HTSM)

Output Tables BATCH_JOB_AUDIT

DUMMY_ABC_TARGET → This flow is added to the mapping to abort the session if any of the ABC checks fails

NB – A shortcut to this mapping is created in each of the folders specific to each source system.

b) Worklet and Session Details for Generic ABC Check

One worklet (described below) needs to be created in each folder specific to a source system, and this worklet is added after each target entity load session of the workflow.

Session Details:

Specification Type Specification

Informatica server used to execute the mapping IS_ORGNAME_EDW_DEV

Session name s_m_Generic_ABC_Check_STG

Mapping name m_Generic_ABC_Check

Session Type non-reusable

Session Log File <sessionname>.log

Parameter File /edw/infa_shared/ParamFiles/

<system_name>_<Target_entity_Name>.prm

Source NA

Record Treatment Target Data driven

Pre Pre_session_variable_Assignment Gets all the variables from the previous session

Session specific to source systems (wherever a flat file is the source, for example VP)

Specification Type Specification

Informatica server used to execute the mapping IS_ORGNAME_EDW_DEV

Session name s_m_READ_VP_CONTROL_FILE

Mapping name m_READ_VP_CONTROL_FILE

Session Type non-reusable

Session Log File <sessionname>.log

Parameter File /edw/infa_shared/ParamFiles/

<system_name>_<Target_entity_Name>.prm

Source NA

Record Treatment Target Insert

Post Post_session_Success_Variable_assignments Assigns all the variables from the control file related to the job name to the next session

Work-let Name Specification

Worklet Name wklt_Generic_ABC_Check_STG

Worklet Variables $$CTRL_FILE → Name of the control file to be used

$$P_TGT_CNT → Target record count from the earlier session (actual target table load)

$$P_BUS_DT_CTL_FILE → Business date value from the control file

$$P_COUNT_CTL_FILE → Record count value from the control file

$$P_CHKSUM_CTL_FILE → Checksum value from the control file

Control Task Name Fail_ABC_CHECK → Fails the worklet and the parent workflow if any of the checks is not satisfied

Parameter file entry for each workflow for the ABC check session:

Sample entry for the ABC check related to ATH2:

[_STG_VP.WF:wf_stg_VP_ATH2.WT:wklt_Generic_ABC_Check_STG]

$PMSessionLogFile=s_m_Generic_ABC_Check.log

$DBConnection_Src=CONN_EDW_NZ

$DBConnection_ABC=Oracle_repo

$$TABLENAME=STG_VP_ATH2

$$CHECKSUM_EXPR=SUM(ABS(MT_AMOUNT))

$$JS_ID=STG_VP_ATH2_JSID

$$JOB_RUN_ID=STG_VP_ATH2_JOBID

$$JOB_NAME=STG_VP_ATH2_JOBNM

$$CTRL_FILE=ATH2_20120515.ctl

$$COUNT_VALUE=0

$$CHECKSUM_VALUE=0

$$CHECKSUM_EXPR=VAR_CHKSUMEXPR

$$WHERECLAUSE=VAR_WHERECLAUSE

$$RANGE_EXPR=VAR_RANGEEXPR

$$RANGE_WHERECLAUSE=VAR_RANGEWHERECLAUSE

$$CHECKSUM_DIV=VAR_CHECKSUMDIV
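A parameter file entry like the sample above is a bracketed section heading followed by name=value lines. The Python sketch below parses such text into a dictionary per section and reports names that appear more than once in a section; the sample above, for instance, sets $$CHECKSUM_EXPR twice. The function name is hypothetical, and keeping the last value for a duplicate is only this sketch's choice, not necessarily how Informatica resolves repeated entries.

```python
from typing import Dict, List, Optional, Tuple


def parse_param_file(text: str) -> Tuple[Dict[str, Dict[str, str]], List[Tuple[str, str]]]:
    """Parse '[section]' headings and 'name=value' lines.

    Returns (sections, duplicates). A duplicated name keeps its last value here.
    """
    sections: Dict[str, Dict[str, str]] = {}
    duplicates: List[Tuple[str, str]] = []
    current: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            sections.setdefault(current, {})
            continue
        if current is None or "=" not in line:
            continue  # ignore lines outside a section or without '='
        name, value = line.split("=", 1)  # values may themselves contain '='
        if name in sections[current]:
            duplicates.append((current, name))
        sections[current][name] = value
    return sections, duplicates
```

Running a check like this over each generated file before the workflow starts is one cheap way to catch conflicting entries early.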

II>> Custom ABC check mapping:

Mapping Details

Specification Type Specification

Mapping Name m_Custom_ABC_Check

Mapping Location ORGNAME_Shared

Processing Logic Performs the custom ABC checks defined in the ABC_CHECK_METADATA table for a particular workflow against each entity as “ABORT”.

It compares count, checksum and range values computed on the source side with expressions such as “SOURCE_COUNT_EXPR” and “SOURCE_CHECKSUM_EXPR” against the actual values computed on the specific columns of the target table with expressions such as “TARGET_COUNT_EXPR” and “TARGET_CHECKSUM_EXPR”.

Input Tables ABC_CHECK_METADATA

Actual SOR/MART table

BATCH_JOB_AUDIT

Sequence of this mapping Runs once after each workflow load (a workflow may contain multiple target tables)

Parameter/ Variables $$STG_DB – Staging database name

$$SOR_DB – SOR database name

$$SRC_OWNER – Owner of the source-side database

$$TGT_OWNER – Owner of the target-side database

$$TABLENAME → Name of the stage table

$$CHECKSUM_EXPR → Checksum expression, such as SUM(ABS(col_name)) or SUM(col_name)

$$ABCRESULT → To be used later for the generic ABC checks

$$JOB_NAME → Job name for which the ABC checks are being performed

$$JS_ID → Job stream ID for the job

$$JOB_RUN_ID → Current job run ID

$$BUSINESS_DT → Date for which the job is being executed

$$SRC_RECORD_EXTRACTED – Number of records extracted from the set of sources

$$TGT_RECORD_INSERTED – Number of records inserted into the target table

Output Tables BATCH_JOB_AUDIT

DUMMY_ABC_TARGET → This flow is added to the mapping to abort the session if any of the ABC checks fails
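Once the source-side and target-side queries have returned their values, the custom check is a comparison plus an optional threshold test. The Python sketch below shows that decision step. The names are hypothetical, and the threshold semantics are assumptions: 'ABS' thresholds bound the range value itself, while 'PERCENT' thresholds bound its percentage deviation from a caller-supplied base value, because the document does not state what a percentage is measured against.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomCheckResult:
    count_result: str
    checksum_result: str
    range_result: Optional[str]  # None when no thresholds are configured

    @property
    def abort(self) -> bool:
        # Per the mapping description, any failed check aborts the session.
        return "FAIL" in (self.count_result, self.checksum_result, self.range_result)


def _status(ok: bool) -> str:
    return "SUCCESS" if ok else "FAIL"


def evaluate_custom_check(src_count: int, tgt_count: int,
                          src_chksum: float, tgt_chksum: float,
                          range_value: Optional[float] = None,
                          min_threshold: Optional[float] = None,
                          max_threshold: Optional[float] = None,
                          threshold_type: str = "ABS",
                          base_value: Optional[float] = None) -> CustomCheckResult:
    range_result = None
    if range_value is not None and (min_threshold is not None or max_threshold is not None):
        if threshold_type == "PERCENT":
            # Assumption: deviation of range_value from base_value, in percent.
            if not base_value:
                raise ValueError("PERCENT thresholds need a non-zero base_value")
            measured = (range_value - base_value) / abs(base_value) * 100.0
        else:
            measured = range_value  # ABS: thresholds bound the value itself
        ok = ((min_threshold is None or measured >= min_threshold)
              and (max_threshold is None or measured <= max_threshold))
        range_result = _status(ok)
    return CustomCheckResult(
        count_result=_status(src_count == tgt_count),
        checksum_result=_status(src_chksum == tgt_chksum),
        range_result=range_result,
    )
```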

Session Details:

Specification Type Specification

Informatica server used to execute the mapping IS_ORGNAME_EDW_DEV or IS_ORGNAME_EDW_UAT

Session name s_m_Custom_ABC_Check

Mapping name m_Custom_ABC_Check

Session Type non-reusable

Session Log File <sessionname>.log

Parameter File /edw/infa_shared/ParamFiles/

<system_name>_<Target_entity_Name>.prm

Source NA

Record Treatment Target Data driven

Pre Pre_session_variable_Assignment NA

NB - One worklet is to be defined for “s_m_Custom_ABC_Check” in each SOR/MART folder, based on subject area. This worklet is attached after each SOR or MART workflow so that all the ABC checks defined in ABC_CHECK_METADATA are executed after each workflow (job) runs.

3 UNIX Shell Scripts

Scripts.zip Backup_14Sep.zip

Unix Shell Script Name Description

initialize_jobstream.sh This shell script does the following:

Starts the ETL control process

Initiates a job stream process

Inserts a record into the JOB_STREAM table with 'RUN' status

Predecessor job Called from Autosys

Input Arguments jobstream_name

edw_area

SQL files Involved initialize_JobStream_GetStatus.sql

initialize_JobStream_Insert_Rec.sql

get_business_date.sql
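The steps of initialize_jobstream.sh can be sketched against an in-memory SQLite database, standing in for the real repository. In this Python sketch the schema is trimmed to the columns used, INTEGER PRIMARY KEY stands in for the JOB_STREAM_ID sequence, and the function names are hypothetical. The rule that a new run is refused while the latest run of the same stream is still 'RUN' is an assumed reading of initialize_JobStream_GetStatus.sql, whose contents are not given here.

```python
import sqlite3
from datetime import datetime


def create_demo_schema(conn: sqlite3.Connection) -> None:
    """Minimal stand-in for the two tables used here (columns trimmed)."""
    conn.executescript("""
        CREATE TABLE JOB_STREAM_METADATA (
            JOB_STREAM_NAME TEXT PRIMARY KEY,
            RUN_FREQUENCY TEXT,
            BUSINESS_DATE TEXT);
        CREATE TABLE JOB_STREAM (
            JOB_STREAM_ID INTEGER PRIMARY KEY,  -- stands in for the sequence
            JOB_STREAM_NAME TEXT,
            EDW_AREA TEXT,
            BUSINESS_PROCESSING_DATE TEXT,
            JOB_STREAM_START_DATETIME TEXT,
            JOB_STREAM_END_DATETIME TEXT,
            JOB_STREAM_PROCESS_STATUS TEXT);
    """)


def initialize_jobstream(conn: sqlite3.Connection, jobstream_name: str, edw_area: str) -> int:
    """Open a new job stream run with status 'RUN' and return its JOB_STREAM_ID."""
    # Stand-in for get_business_date.sql: current business date of the stream.
    row = conn.execute(
        "SELECT BUSINESS_DATE FROM JOB_STREAM_METADATA WHERE JOB_STREAM_NAME = ?",
        (jobstream_name,),
    ).fetchone()
    if row is None:
        raise LookupError(f"no JOB_STREAM_METADATA row for {jobstream_name}")
    business_date = row[0]

    # Assumed status rule: refuse to start while the latest run is still 'RUN'.
    last = conn.execute(
        "SELECT JOB_STREAM_PROCESS_STATUS FROM JOB_STREAM "
        "WHERE JOB_STREAM_NAME = ? ORDER BY JOB_STREAM_ID DESC LIMIT 1",
        (jobstream_name,),
    ).fetchone()
    if last is not None and last[0] == "RUN":
        raise RuntimeError(f"{jobstream_name} is already running")

    # Stand-in for initialize_JobStream_Insert_Rec.sql.
    cur = conn.execute(
        "INSERT INTO JOB_STREAM (JOB_STREAM_NAME, EDW_AREA, BUSINESS_PROCESSING_DATE, "
        "JOB_STREAM_START_DATETIME, JOB_STREAM_PROCESS_STATUS) VALUES (?, ?, ?, ?, 'RUN')",
        (jobstream_name, edw_area, business_date, datetime.now().isoformat(timespec="seconds")),
    )
    conn.commit()
    return cur.lastrowid
```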

Unix Shell Script Name Description

job_exec_lnd_vp.sh This shell script is specific to systems (such as VP) where the source is a flat file with header/trailer records. It does the following:

Checks whether a landing job can be started, based on the status of the job stream and the status of the job

Initiates the specific landing job passed as an argument

Gets the system configuration folder structure

Inserts a record into BATCH_JOB_STATUS with 'RUN' status

Also inserts a record into the BATCH_JOB_AUDIT table

Transfers the specific flat file by sFTP from the remote server to the local server

Validates the file for the record count check and updates the result in the BATCH_JOB_AUDIT table for that job

Validates that the date provided in the header record matches the business date

If validation succeeds, moves the source file from the “Inbound” folder to the “SrcFiles” folder defined for that system

If validation fails, moves the file to the “Reject” folder defined for that system

Calls job_afterrun.sh based on the status of the sFTP and file validation

Predecessor job(if any) Will be called from Autosys

initialize_jobstream.sh

Input Arguments jobstream_name

edw_area

job_name

SQL Files Used job_execution_Get_JobId.sql

job_execution_Get_Status.sql

job_execution_Insert_Rec.sql

job_vf_update_bja.sql

get_business_date.sql
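The header validation and routing performed by job_exec_lnd_vp.sh can be sketched in Python as below. The header layout (`H|<business date>|<record count>|...`), the pipe delimiter, the file names and the function name are all hypothetical, since the actual VP header format is not given in this document; HEADER_LINE_CNT and TRAILER_LINE_CNT would supply the header/trailer counts.

```python
import shutil
from pathlib import Path
from typing import Optional, Tuple


def validate_and_route(src: Path, business_date: str, src_dir: Path, reject_dir: Path,
                       header_lines: int = 1, trailer_lines: int = 0,
                       delim: str = "|") -> Tuple[bool, Optional[str]]:
    """Check header date and record count, then move the file.

    Valid files go to src_dir ("SrcFiles"), invalid ones to reject_dir ("Reject").
    Returns (ok, reason); reason is None when the file is valid.
    """
    lines = src.read_text().splitlines()
    # Hypothetical header layout: H|<business date>|<record count>|...
    fields = lines[0].split(delim) if lines else []
    reason: Optional[str] = None
    if len(fields) < 3:
        reason = "malformed header"
    else:
        hdr_date, hdr_count = fields[1], fields[2]
        data_count = len(lines) - header_lines - trailer_lines
        if hdr_date != business_date:
            reason = f"header date {hdr_date} != business date {business_date}"
        elif not hdr_count.isdigit() or int(hdr_count) != data_count:
            reason = f"header count {hdr_count} != data records {data_count}"
    target_dir = src_dir if reason is None else reject_dir
    target_dir.mkdir(parents=True, exist_ok=True)
    shutil.move(str(src), str(target_dir / src.name))
    return reason is None, reason
```

Returning the reason string makes it easy to record why a file was rejected alongside the BATCH_JOB_AUDIT update.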

Unix Shell Script Name Description

job_execution.sh This shell script does the following:

Checks whether a job can be started, based on the status of the job stream and the status of the job

Gets the system configuration folder structure

Initiates the specific job passed as an argument

Inserts a record into BATCH_JOB_STATUS with 'RUN' status

Also inserts a record into the BATCH_JOB_AUDIT table

Copies the source file from the “SrcFiles” folder to the “Archive” folder defined for that system

Creates a template parameter file from the entries in the JOB_PARAM_DATA table, using a “union all” of the sections with

No object ID (GLOBAL section)

A specific object ID (job-specific section)

Replaces the parameters in the template parameter file for the specific job, producing one ETL parameter file per job for use by the Informatica ETL jobs

Calls the Informatica ETL job for that job name

Calls job_afterrun.sh based on the execution status of the Informatica job

Predecessor job(if any) Will be called from Autosys

initialize_jobstream.sh

Input Arguments jobstream_name

edw_area

job_name

SQL Files Used job_execution_Get_JobId.sql

job_execution_Get_Status.sql

job_execution_Insert_Rec.sql

get_business_date.sql
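The "replaces the parameters of the template parameter file" step can be sketched as a whole-value substitution: placeholder values such as STG_VP_ATH2_JSID or VAR_CHKSUMEXPR are swapped for run-time values. The Python function name and the sample values are hypothetical. Matching the complete right-hand side, rather than doing substring replacement, avoids accidentally rewriting a placeholder that merely contains another one.

```python
from typing import Mapping


def fill_template(template: str, values: Mapping[str, str]) -> str:
    """Replace placeholder values (right-hand side of name=value) with run-time values.

    Section headings and lines whose value is not a known placeholder are kept as-is.
    """
    out = []
    for line in template.splitlines():
        name, sep, value = line.partition("=")
        if sep and value in values:
            line = f"{name}={values[value]}"
        out.append(line)
    return "\n".join(out) + "\n"
```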

Unix Shell Script Name Description

job_afterrun.sh This shell script does the following

Removes the source file and control file from the “SrcFiles” directory defined for that system for that job

Updates the status of that job in the “Batch_Job_status” table based on the status of the job that just ran

Predecessor job Either job_execution.sh or job_exec_lnd_vp.sh

Input Arguments jobstream_name

job_name

Job Status

Business_date


SQL Files Used job_afterrun_Get_Status.sql

job_afterrun_Update_Rec.sql
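The file-handling side of job_afterrun.sh, together with the touch file described in section 3.1, could look roughly like the sketch below. The status value SUCCESS, the argument order, the afterrun_files function name and the separate touch-file directory are assumptions; the status update in “Batch_Job_status” (done through the SQL files above) is not shown.

```shell
#!/bin/sh
# Hypothetical sketch of the file handling in job_afterrun.sh.
# Assumed usage:
#   afterrun_files <job_status> <srcfiles_dir> <source_file> <control_file> \
#                  <touch_dir> <object_id> <business_date>

afterrun_files() {
    af_status=$1
    af_src_dir=$2
    af_src_file=$3
    af_ctl_file=$4
    af_touch_dir=$5
    af_object_id=$6
    af_bus_date=$7

    # Remove the processed source and control files from SrcFiles
    rm -f "$af_src_dir/$af_src_file" "$af_src_dir/$af_ctl_file"

    # Only a successful parent job leaves a file watcher touch file,
    # named <object_id>_<BUSDATE>.suc (e.g. 1_20120807.suc)
    if [ "$af_status" = "SUCCESS" ]; then
        touch "$af_touch_dir/${af_object_id}_${af_bus_date}.suc"
    fi
}
```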


Unix Shell Script Name Description

jobstream_wrapup.sh This shell script does the following

Updates the jobstream record based on the status of all the jobs under that jobstream

Advances the jobstream metadata table based on the frequency defined for the jobstream

Predecessor job initialize_jobstream.sh and/or either job_execution.sh or job_exec_lnd_vp.sh, and job_afterrun.sh

This script is called from Autosys

Input Arguments Jobstream Name

SQL Files Used(If Any) jobstream_wrapup_Get_Status.sql

jobstream_wrapup_Update_Rec.sql

get_business_date.sql

update_business_date.sql

Configuration File (if any) NA
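The rule for deriving a jobstream's status from its jobs is not spelled out here; one plausible rule is sketched below, assuming hypothetical status values SUCCESS and FAILED alongside the 'RUN' status used by job_execution.sh. Any failed job fails the jobstream, any running job keeps it in RUN, and only an all-SUCCESS set completes it. The function name jobstream_status is illustrative.

```shell
#!/bin/sh
# Hypothetical aggregation rule for jobstream_wrapup.sh.
# Usage: jobstream_status <job_status>...
# Prints FAILED if any job failed, else RUN if any job is still running,
# else SUCCESS.

jobstream_status() {
    js_result=SUCCESS
    for js_st in "$@"; do
        case $js_st in
            SUCCESS) ;;                                   # finished OK, no change
            RUN) if [ "$js_result" = SUCCESS ]; then
                     js_result=RUN                        # still running
                 fi ;;
            *) js_result=FAILED ;;                        # any other status is a failure
        esac
    done
    echo "$js_result"
}
```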

Unix Shell Script Name Description

sftp_files.sh This shell script does the following

Transfers the source file via sFTP from the “FTP source” directory to the “Inbound” directory defined for that system for that job

Predecessor job Either job_execution.sh or job_exec_lnd_vp.sh

Input Arguments SOURCE_SYSTEM

INPUT_FILE_PATTERN

OUTPUT_FILE_PATTERN

SQL Files Used(If Any) NA

Configuration File (if any) VP_FTP.cfg (system-specific)

RSA keys to be set up on the remote server for passwordless sFTP
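Passwordless sFTP with RSA keys is typically driven by an sftp batch file. The sketch below assumes the remote directory, Inbound directory and file pattern have already been read from the system-specific config (e.g. VP_FTP.cfg, whose actual keys are not documented here); build_sftp_batch and run_sftp are hypothetical helper names. The -b option of OpenSSH sftp runs the commands non-interactively, which only works with key-based authentication.

```shell
#!/bin/sh
# Hypothetical sketch of the transfer in sftp_files.sh.

# Print an sftp batch script that pulls files matching a pattern into Inbound
# Usage: build_sftp_batch <remote_dir> <inbound_dir> <file_pattern>
build_sftp_batch() {
    printf 'cd %s\n' "$1"     # remote "FTP source" directory
    printf 'lcd %s\n' "$2"    # local Inbound directory
    printf 'get %s\n' "$3"    # remote file name or glob pattern
}

# Run the batch non-interactively; requires the RSA key to be in place
# Usage: run_sftp <user@host> <batch_file>
run_sftp() {
    sftp -b "$2" "$1"
}
```

Keeping batch generation separate from the actual transfer makes the command list easy to inspect or log before sftp is invoked.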


Unix Shell Script Name Description

Validatefile.sh This shell script does the following

Checks whether the input file is empty

Validates that the business date matches the header date and updates the “batch_job_audit” table accordingly

Validates the file's record count and updates the result in the “batch_job_audit” table for that job

If validation succeeds, moves the source file from the “Inbound” folder to the “SrcFiles” folder defined for that system

If validation fails, moves the file to the “Reject” folder defined for that system

Predecessor job Either job_execution.sh or job_exec_lnd_vp.sh

Input Arguments <jobstream_name>

<file_name>

<Job_name>

SQL Files Used(If Any) /checkfile_get_head_tail_cnt.sql

get_business_date.sql

job_afterrun_Get_Status.sql

job_vf_update_bja.sql

Configuration File (if any)
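The three Validatefile.sh checks and the Inbound/SrcFiles/Reject routing can be sketched as a single shell function. This is a sketch under stated assumptions: the header is taken to be a pipe-delimited first line H|<YYYYMMDD>, the trailer a last line T|<detail count>, and the business date is passed in rather than fetched with get_business_date.sql; the audit-table updates are omitted. validate_file is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical sketch of the checks in Validatefile.sh.
# Assumed layout: first line "H|<YYYYMMDD>|...", last line "T|<detail count>".
# Usage: validate_file <file> <business_date> <srcfiles_dir> <reject_dir>
# Returns 0 and moves the file to SrcFiles if all checks pass,
# otherwise returns 1 and moves it to Reject.

validate_file() {
    vf_file=$1
    vf_bus_date=$2
    vf_src_dir=$3
    vf_rej_dir=$4
    vf_ok=1

    if [ ! -s "$vf_file" ]; then
        vf_ok=0                                   # 1. empty file
    else
        # 2. header date must match the business date
        vf_hdr_date=$(head -n 1 "$vf_file" | cut -d'|' -f2)
        [ "$vf_hdr_date" = "$vf_bus_date" ] || vf_ok=0

        # 3. trailer count must equal the detail records (all lines minus header/trailer)
        vf_trl_cnt=$(tail -n 1 "$vf_file" | cut -d'|' -f2)
        vf_lines=$(wc -l < "$vf_file")
        vf_detail=$((vf_lines - 2))
        [ "$vf_trl_cnt" -eq "$vf_detail" ] 2>/dev/null || vf_ok=0
    fi

    if [ "$vf_ok" -eq 1 ]; then
        mv "$vf_file" "$vf_src_dir/"
        return 0
    fi
    mv "$vf_file" "$vf_rej_dir/"
    return 1
}
```

wc -l counts newline characters, so a file whose trailer lacks a final newline would be counted one short; a production version would need to allow for that.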

Unix Shell Script Name Description

runSQL.sh This shell script does the following

Executes the SQL scripts

Predecessor job NA

Input Arguments SQL File

RPT file

Variable number of arguments

SQL Files Used(If Any) NA

Configuration File (if any) NA

Unix Shell Script Name Description

parse_header.sh This shell script does the following

Parses the header/trailer records of the different source files

Predecessor job NA

Input Arguments NA

SQL Files Used(If Any) NA

Configuration File (if any) NA


Unix Shell Script Name Description

rmhdrtrl.sh This shell script removes the header and trailer records from the input source flat file, using the Unix sed command

Predecessor job NA

Input Arguments Input_Filename

SQL Files Used(If Any) NA

Configuration File (if any) NA
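A minimal sketch of the sed-based removal, assuming the header is exactly the first line and the trailer exactly the last line, and that the result goes to a separate output file (rm_header_trailer is a hypothetical name):

```shell
#!/bin/sh
# Hypothetical sketch of rmhdrtrl.sh.
# Usage: rm_header_trailer <input_file> <output_file>

rm_header_trailer() {
    # "1d" deletes line 1 (header); "$d" deletes the last line (trailer)
    sed '1d;$d' "$1" > "$2"
}
```

With GNU sed the same expression can edit the file in place: sed -i '1d;$d' <file>.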

Unix Shell Script Name Description

cmn_const.sh Holds the common constants

Also stores the Informatica user's password in encrypted form

comm_func_lib.sh Common function library (includes WriteToLog)

proc_lnd_prm_<source_entity_name>.sh Shell script to run the PRIMA landing ETL jobs for <source_entity_name>

proc_stg_prm_<source_entity_name>.sh Shell script to run the PRIMA staging ETL jobs for <source_entity_name>

proc_lnd_vp_<source_entity_name>.sh Shell script to run the VP landing ETL jobs for <source_entity_name>

proc_stg_vp_<source_entity_name>.sh Shell script to run the VP staging ETL jobs for <source_entity_name>

proc_init_stg_<source_system_name>.sh Initializes the jobstream for <source_system_name>

proc_wrp_<source_system_name>.sh Wraps up the jobstream for <source_system_name>

3.1 Autosys Dependency Jobs [file_watcher_autosys_touch_file.sh]:

Process purpose:

Autosys cannot create a dependency between jobs in two separate flows. To overcome this constraint, the following strategy is used:

1. Create a touch file after the execution of each parent job

2. Create an Object Dependency table for all interdependent jobs

3. Allow dependent jobs to execute only if the touch file created by the successful parent job(s) is present

4. Remove the touch file (created by the successful parent job execution) at the appropriate point in processing

Metadata Table creation:

Metadata table to be created: Object_Dependency_Metadata


Fields:

Parent_Job_Object_Id Integer

Child_Job_Object_id Integer

File_Watcher_Ind Char(1)

Process Description:

1. Create the touch file (the “file watcher” file), named per the standard <object_id>_BUSDATE.suc, e.g. 1_20120807.suc. The touch file is created in the existing shell script <job_afterrun.sh>

2. Create a file watcher job that performs the following steps:

1. Read the “Object_Dependency_Metadata” table to check whether a dependency exists for the job's object; if one exists, check for the respective file watcher file

2. If the file watcher file for the parent job is found, allow the child job to execute

3. If the file watcher file for the parent job is not found, wait 30 seconds (configurable in the global config metadata column “File_Watcher_Wait_Interval”) and check again

3. Create a shell script to remove the file watcher file related to the parent job
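The file watcher steps can be sketched as a polling loop plus a cleanup helper. To keep the sketch self-contained, the parent object ids are passed as arguments instead of being read from Object_Dependency_Metadata, and a max_checks limit (not part of the design above) stops the loop from waiting forever; the function names, the touch-file directory argument and the limit are all assumptions. The wait argument stands in for File_Watcher_Wait_Interval (30 seconds by default).

```shell
#!/bin/sh
# Hypothetical sketch of the file watcher job and touch-file cleanup.
# Usage: wait_for_parents <touch_dir> <business_date> <wait_secs> <max_checks> <parent_id>...
# Returns 0 once every <parent_id>_<business_date>.suc exists,
# 1 if max_checks polls pass without all of them appearing.

wait_for_parents() {
    fw_dir=$1
    fw_date=$2
    fw_wait=$3
    fw_max=$4
    shift 4
    fw_check=1
    while :; do
        fw_missing=0
        for fw_parent in "$@"; do
            [ -f "$fw_dir/${fw_parent}_${fw_date}.suc" ] || fw_missing=1
        done
        if [ "$fw_missing" -eq 0 ]; then
            return 0          # every parent's touch file found: child may run
        fi
        if [ "$fw_check" -ge "$fw_max" ]; then
            return 1          # give up after max_checks polls
        fi
        fw_check=$((fw_check + 1))
        sleep "$fw_wait"      # not found yet: wait, then recheck
    done
}

# Remove the touch files of the given parents once they are no longer needed
# Usage: remove_touch_files <touch_dir> <business_date> <parent_id>...
remove_touch_files() {
    rt_dir=$1
    rt_date=$2
    shift 2
    for rt_parent in "$@"; do
        rm -f "$rt_dir/${rt_parent}_${rt_date}.suc"
    done
}
```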

4 Utilities:

1. Bulkwriter: An awk script written specifically to change the target table writer from “Netezza Relational writer” to “Netezza Bulk writer”. The utility is applied to an XML file (exported Informatica workflows) and generates a changed XML file. The changed XML can be imported in Informatica Workflow Manager, where it will contain the properties set for “Netezza Bulk writer”.

Use: awk -v v_pattern=VP -f parseWorkflow.awk VP.XML > VP.Changed.XML

2. Session Config: An awk script written specifically to change the following session properties. The utility is applied to an XML file (exported Informatica workflows) and generates a changed XML file, which can be imported in Informatica Workflow Manager with these session properties set:

1. Save session log by: By Run

2. Save session log for these runs: 10

3. Recovery strategy: Restart task

4. Session on Grid: Enabled (checkbox checked)

Use: awk -f parseWorkflow.awk PRIMA.XML > PRIMA.Changed.XML

3. Parameter File Conversion: An awk script written specifically to upload a “conventional parameter file” to the target table “Job_Param_Data”. The utility is applied to a text file (containing parameter name-value pairs) and uploads those parameter values into the “Job_Param_Data” table using SQL*Loader and a control file.

Control file: load_param.ctl

load data

infile './vp_template_out.prm'

append

into table tmp_job_param_data

fields terminated by '~' optionally enclosed by '"'

(filename, param_section, wf_name, param_name, param_value)

Step 1. Use: awk -f Convert_global_section.awk vp_template.prm > vp_template_out.prm

vp_template_out.prm is a '~'-separated flat file that is used in the second step

Step 2. Use: sqlldr userid/password@infarepo CONTROL=load_param.ctl BAD=load_param.bad

4. Repository Backup: This shell script is used to back up the repository

Use:

sh repository_backup.sh
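Convert_global_section.awk itself is not reproduced in this document. As an illustration only, the sketch below shows one way the parameter file conversion could work: it reads a conventional Informatica parameter file ([section] headings followed by name=value lines) and emits '~'-separated rows in the column order of load_param.ctl. Deriving wf_name from a WF:<name> token in the section heading, using the bare file name for the filename column, and the convert_param_file name are all assumptions.

```shell
#!/bin/sh
# Hypothetical illustration of a parameter-file-to-rows conversion.
# Output row: filename~param_section~wf_name~param_name~param_value
# Usage: convert_param_file <parameter_file>

convert_param_file() {
    awk -v fname="$(basename "$1")" '
        /^[ \t]*$/ { next }                         # skip blank lines
        /^\[/ {                                     # new [section] heading
            section = substr($0, 2, length($0) - 2)
            wf = ""
            if (match(section, /WF:[^.]*/))         # e.g. VP.WF:wf_x.ST:s_y gives wf_x
                wf = substr(section, RSTART + 3, RLENGTH - 3)
            next
        }
        {
            eq = index($0, "=")                     # split on the first = only
            if (eq == 0) next                       # ignore lines without =
            print fname "~" section "~" wf "~" substr($0, 1, eq - 1) "~" substr($0, eq + 1)
        }
    ' "$1"
}
```

Because the fields are '~'-separated, a parameter value that itself contains '~' would need to be wrapped in double quotes for the optionally enclosed by clause in load_param.ctl to load it correctly; the sketch does not do this.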
