Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Uploaded by

jojotitiCopyright:

Available Formats

You might also like

- Bernoulli's Principle Demonstration (Lab Report)Document17 pagesBernoulli's Principle Demonstration (Lab Report)Arey Ariena94% (110)

- Cloud Computing Interview Questions You'll Most Likely Be Asked: Second EditionFrom EverandCloud Computing Interview Questions You'll Most Likely Be Asked: Second EditionNo ratings yet

- Computing PradigmDocument10 pagesComputing PradigmHtun Aung LynnNo ratings yet

- Unit-1 & Ii GCCDocument37 pagesUnit-1 & Ii GCCpabithasNo ratings yet

- Cloud Technologies: Grid ComputingDocument13 pagesCloud Technologies: Grid ComputingNarmatha ThiyagarajanNo ratings yet

- Differences Unit 1Document7 pagesDifferences Unit 1manpreet singhNo ratings yet

- Distributed Operating SystemDocument18 pagesDistributed Operating SystemRajni ShelkeNo ratings yet

- CSE 423 Virtualization and Cloud Computinglecture0Document16 pagesCSE 423 Virtualization and Cloud Computinglecture0Shahad ShahulNo ratings yet

- Assignment: Parallel and Distributed Computing Submitted To: Sir Shoaib Date: 25-03-2019Document5 pagesAssignment: Parallel and Distributed Computing Submitted To: Sir Shoaib Date: 25-03-2019UmerNo ratings yet

- Study and Applications of Cluster Grid and Cloud Computing: Umesh Chandra JaiswalDocument6 pagesStudy and Applications of Cluster Grid and Cloud Computing: Umesh Chandra JaiswalIJERDNo ratings yet

- Unit 1 Introduction To Cloud Computing: StructureDocument17 pagesUnit 1 Introduction To Cloud Computing: StructureRaj SinghNo ratings yet

- A Comparative Analysis Grid Cluster and Cloud ComputingDocument5 pagesA Comparative Analysis Grid Cluster and Cloud ComputingMaddula PrasadNo ratings yet

- U1cc Updated-NpDocument16 pagesU1cc Updated-Npkisu kingNo ratings yet

- Data Storage DatabasesDocument6 pagesData Storage DatabasesSrikanth JannuNo ratings yet

- Distributed ComputingDocument13 pagesDistributed Computingpriyanka1229No ratings yet

- CC Question Bank All UnitsDocument28 pagesCC Question Bank All Unitsasmitha0567No ratings yet

- The Distributed Computing Model Based On The Capabilities of The InternetDocument6 pagesThe Distributed Computing Model Based On The Capabilities of The InternetmanjulakinnalNo ratings yet

- It CC1 LN R18Document75 pagesIt CC1 LN R18Sàñdèép SûññyNo ratings yet

- Unit - 1: Cloud Architecture and ModelDocument9 pagesUnit - 1: Cloud Architecture and ModelRitu SrivastavaNo ratings yet

- The Poor Man's Super ComputerDocument12 pagesThe Poor Man's Super ComputerVishnu ParsiNo ratings yet

- Introduction To Distributed SystemsDocument45 pagesIntroduction To Distributed Systemsaderaj getinetNo ratings yet

- Different Types of Computing - Grid, Cloud, Utility, Distributed and Cluster ComputingDocument4 pagesDifferent Types of Computing - Grid, Cloud, Utility, Distributed and Cluster ComputingSandeep MotamariNo ratings yet

- CC Notes UNIT 1Document21 pagesCC Notes UNIT 1amanbabu839383607No ratings yet

- Recent Trends in ComputingDocument5 pagesRecent Trends in ComputingAkashNo ratings yet

- Unit - 1 Systems Modelling, Clustering and Virtualization: 1. Scalable Computing Over The InternetDocument28 pagesUnit - 1 Systems Modelling, Clustering and Virtualization: 1. Scalable Computing Over The Internetsuryavamsi kakaraNo ratings yet

- GCC QBDocument16 pagesGCC QBSherril Vincent100% (1)

- Grid - and - Cloud Computing - Important - Questions - Unit - 1 - Part - ADocument5 pagesGrid - and - Cloud Computing - Important - Questions - Unit - 1 - Part - ASridevi SivakumarNo ratings yet

- Srinivas Institute of Management Studies: Pandeswar, Mangalore - 575 001Document101 pagesSrinivas Institute of Management Studies: Pandeswar, Mangalore - 575 001SAJIN PNo ratings yet

- Third Year Sixth Semester CS6601 Distributed System 2 Mark With AnswerDocument25 pagesThird Year Sixth Semester CS6601 Distributed System 2 Mark With AnswerPRIYA RAJI86% (7)

- MCA 502 - Cloud: Computing Theory 80 + 20 Marks Lab: 80 + 20 MarksDocument33 pagesMCA 502 - Cloud: Computing Theory 80 + 20 Marks Lab: 80 + 20 MarkskvrNo ratings yet

- Distributed Computing: Information TechnologyDocument19 pagesDistributed Computing: Information TechnologySanskrit KavuruNo ratings yet

- Compusoft, 3 (10), 1149-1156 PDFDocument8 pagesCompusoft, 3 (10), 1149-1156 PDFIjact EditorNo ratings yet

- TopologyiesDocument18 pagesTopologyieslahiruaioitNo ratings yet

- Grid ComputingDocument31 pagesGrid ComputingCutiepiezNo ratings yet

- Unit - 1 Architecture of Distributed SystemsDocument22 pagesUnit - 1 Architecture of Distributed SystemsAnjna SharmaNo ratings yet

- Job Scheduling in Cloud Computing: A Review of Selected TechniquesDocument5 pagesJob Scheduling in Cloud Computing: A Review of Selected TechniquesInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Cloud ComputingDocument167 pagesCloud ComputingdoctoraseenNo ratings yet

- Chapter One 1.1 Background of StudyDocument26 pagesChapter One 1.1 Background of StudyAaron Paul Oseghale OkosunNo ratings yet

- UNIT 1 DS Question Bank With AnswersDocument4 pagesUNIT 1 DS Question Bank With Answersanish.t.p100% (1)

- ITC4205 Introduction To Cloud Computing Assignment: ON 30 December, 2021Document11 pagesITC4205 Introduction To Cloud Computing Assignment: ON 30 December, 2021Aisha kabirNo ratings yet

- Parallel Computing - Unit IVDocument40 pagesParallel Computing - Unit IVshraddhaNo ratings yet

- 1 Evolution of Cloud ComputingDocument8 pages1 Evolution of Cloud ComputingSantanuNo ratings yet

- Cloud Computing As An Evolution of Distributed ComputingDocument6 pagesCloud Computing As An Evolution of Distributed ComputingVIVA-TECH IJRINo ratings yet

- Chapter OneDocument33 pagesChapter OneUrgessa GedefaNo ratings yet

- Cloud Computing 30 66Document37 pagesCloud Computing 30 66Rizky MuhammadNo ratings yet

- Evolution of Cloud ComputingDocument3 pagesEvolution of Cloud ComputingMa. Jessabel AzurinNo ratings yet

- Cloud Computing AssignmentDocument11 pagesCloud Computing AssignmentYosief DagnachewNo ratings yet

- Distributed Computing Management Server 2Document18 pagesDistributed Computing Management Server 2Sumanth MamidalaNo ratings yet

- CCunit 1Document69 pagesCCunit 1aman kumarNo ratings yet

- Introduction To Cloud ComputingDocument9 pagesIntroduction To Cloud ComputingSowdha MiniNo ratings yet

- Introduction To Distributed SystemsDocument8 pagesIntroduction To Distributed SystemsMahamud elmogeNo ratings yet

- Unit IDocument53 pagesUnit INameis GirishNo ratings yet

- Models of Network ComputingDocument2 pagesModels of Network ComputingMohammad Waqas JauharNo ratings yet

- Cluster ComputingDocument7 pagesCluster ComputingBalachandar KrishnaswamyNo ratings yet

- Overview of Computing ParadigmDocument1 pageOverview of Computing Paradigmchirayush chakrabortyNo ratings yet

- 555 PGDocument49 pages555 PGbivakarmahapatra7872No ratings yet

- Cloud Computing Is Changing How We Communicate: By-Ravi Ranjan (1NH06CS084)Document32 pagesCloud Computing Is Changing How We Communicate: By-Ravi Ranjan (1NH06CS084)ranjan_ravi04No ratings yet

- Cloud Computing Unit 1 - 230927 - 111849Document16 pagesCloud Computing Unit 1 - 230927 - 111849varundhawan241285No ratings yet

- Cloud Computing Made Simple: Navigating the Cloud: A Practical Guide to Cloud ComputingFrom EverandCloud Computing Made Simple: Navigating the Cloud: A Practical Guide to Cloud ComputingNo ratings yet

- Computer Science Self Management: Fundamentals and ApplicationsFrom EverandComputer Science Self Management: Fundamentals and ApplicationsNo ratings yet

- Cloud: Get All The Support And Guidance You Need To Be A Success At Using The CLOUDFrom EverandCloud: Get All The Support And Guidance You Need To Be A Success At Using The CLOUDNo ratings yet

- DTE UserGuideDocument521 pagesDTE UserGuidebidyut_iitkgpNo ratings yet

- Quality Manual For Hydraulically Bound Mixtures.53d0d866.8046Document18 pagesQuality Manual For Hydraulically Bound Mixtures.53d0d866.8046Anonymous PeFQLw19No ratings yet

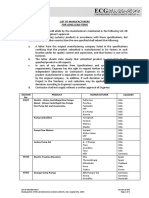

- Ecg Long Lead Items Vendor ListDocument2 pagesEcg Long Lead Items Vendor Listamr abdelmageedNo ratings yet

- Fases Hilos F.D. F.P. F.T. F.A. M VDocument56 pagesFases Hilos F.D. F.P. F.T. F.A. M VariakinerakNo ratings yet

- Ovum Decision Matrix CRM Telcos 2183241Document16 pagesOvum Decision Matrix CRM Telcos 2183241SilvioNo ratings yet

- Aws D1.3-D1.3M 2018Document6 pagesAws D1.3-D1.3M 2018Lee Chong EeNo ratings yet

- ISSUE Tracking DocumentDocument64 pagesISSUE Tracking DocumentAishwarya SairamNo ratings yet

- CSP 520N 1BDocument18 pagesCSP 520N 1BfredtornayNo ratings yet

- OM 002 Belt Conveyor Idler Instruct 6E74091AB9993Document9 pagesOM 002 Belt Conveyor Idler Instruct 6E74091AB9993gopi_ggg20016099No ratings yet

- Quotation 132kv CT PT 33kv PTDocument5 pagesQuotation 132kv CT PT 33kv PTSharafat AliNo ratings yet

- Iron-Iron Carbide Phase Diagram ExampleDocument3 pagesIron-Iron Carbide Phase Diagram ExampleBenjamin Enmanuel Mango DNo ratings yet

- Canadian Solar KuPower HiKu CS3L 370W MS - Super High PowerDocument2 pagesCanadian Solar KuPower HiKu CS3L 370W MS - Super High PowercristiNo ratings yet

- Ford Supplement K TemplateDocument3 pagesFord Supplement K TemplateelevendotNo ratings yet

- Dry Transformers - Schneider Electric - Trihal - 15 or 20kV-400VDocument9 pagesDry Transformers - Schneider Electric - Trihal - 15 or 20kV-400VVăn Đạt Nguyễn VănNo ratings yet

- Title: Solo's Coders-Page: Amiga Advanced Graphics Architecture (AGA) DocumentationDocument32 pagesTitle: Solo's Coders-Page: Amiga Advanced Graphics Architecture (AGA) DocumentationddaaggNo ratings yet

- Certificado Internacional Bel 15k G LVDocument2 pagesCertificado Internacional Bel 15k G LVmarcelo__182182No ratings yet

- Lorry Report Month of August 2015Document22 pagesLorry Report Month of August 2015PrasantaKumarMallikaNo ratings yet

- Waste WaterDocument60 pagesWaste Waterbarhooom100% (1)

- Amplifier Control Unit: Not EquipmentDocument2 pagesAmplifier Control Unit: Not Equipmentariadi supriyantoNo ratings yet

- 0801 B2 BSolutionDocument3 pages0801 B2 BSolutionsajuhereNo ratings yet

- Zadar s85Document33 pagesZadar s85ZeeNo ratings yet

- Basic Computer Model and Different Units of Computer 1Document15 pagesBasic Computer Model and Different Units of Computer 1sheetalNo ratings yet

- F 2296 - 03 RjiyotyDocument3 pagesF 2296 - 03 RjiyotyfrostestNo ratings yet

- NIBCO - Copper FittingsDocument56 pagesNIBCO - Copper Fittingsjpdavila205No ratings yet

- TF100 47C - Sure MateDocument8 pagesTF100 47C - Sure MatechaurandNo ratings yet

- Mr. Talha Alam Khan: Personal InfoDocument2 pagesMr. Talha Alam Khan: Personal InfoTalhaNo ratings yet

- C 452 - 95 Qzq1mi1sruq - PDFDocument4 pagesC 452 - 95 Qzq1mi1sruq - PDFAlejandro MuñozNo ratings yet

- Loading Instruction A340-600Document2 pagesLoading Instruction A340-600rustedangel1976No ratings yet

- ES 102 Long Exam 2 Formulas: 1 Second Area Moment 5 TorsionDocument1 pageES 102 Long Exam 2 Formulas: 1 Second Area Moment 5 TorsionGela LageNo ratings yet

Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Uploaded by

jojotitiOriginal Description:

Original Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Overview of The Computing Paradigm: 1.1 Recent Trends in Distributed Computing

Uploaded by

jojotitiCopyright:

Available Formats

CHAPTER 1

OVERVIEW OF THE COMPUTING PARADIGM

Automatic computing has changed both the problems humans can solve and the ways in which they can solve them. In the recent past, computing has changed our perceptions, and indeed the world, more than any other innovation, and much more change in computing is still to come. Understanding computing provides deep insights into our universe and shapes how we reason about it.

Over the last couple of years, there has been increased interest in reducing computing processors' power. This chapter examines distributed computing technologies such as peer-to-peer, cluster, utility, grid, cloud, fog, and jungle computing, and compares them.

1.1 RECENT TRENDS IN DISTRIBUTED COMPUTING

Distributed computing is a method of computer processing in which different parts of a program execute simultaneously on two or more computers that communicate with each other over a network. In distributed computing, the program must be divided into sections that can run simultaneously, and the division must take into account the different environments on which those sections will execute. Three significant characteristics of distributed systems are concurrency of components, lack of a global clock, and independent failure of components.
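The idea of segmenting a program into sections that run simultaneously can be sketched in Python. This is a minimal illustration under stated assumptions, not a real distributed system: threads from `concurrent.futures` stand in for the networked computers (and, because of CPython's global interpreter lock, they do not give true CPU parallelism). The function names `split_into_sections`, `process_section`, and `distributed_sum_of_squares` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_sections(data, n_sections):
    """Divide data into n_sections contiguous, nearly equal slices."""
    size, extra = divmod(len(data), n_sections)
    sections, start = [], 0
    for i in range(n_sections):
        # The first `extra` sections take one additional element each.
        end = start + size + (1 if i < extra else 0)
        sections.append(data[start:end])
        start = end
    return sections


def process_section(section):
    """Work done independently by one 'computer': the sum of squares of its slice."""
    return sum(x * x for x in section)


def distributed_sum_of_squares(data, n_workers=4):
    sections = split_into_sections(data, n_workers)
    # Each worker thread stands in for a separate machine on the network.
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(process_section, sections))
    # Combine the partial results into the final answer.
    return sum(partials)


if __name__ == "__main__":
    numbers = list(range(1, 101))
    print(distributed_sum_of_squares(numbers))  # 338350
```

In a real deployment each section would be shipped to a different machine, and the combining step would have to cope with a section that never returns, since components fail independently.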

2 • CLOUD COMPUTING BASICS

A program that runs in a distributed system is called a distributed program, and distributed programming is the process of writing such programs. Distributed computing also refers to solving computational problems using distributed systems. Distributed computing is a model in which the resources of a system are shared among multiple computers to improve efficiency and performance, as shown in Figure 1.1.

FIGURE 1.1 Workflow of distributed systems

A distributed computing system has the following characteristics:

- It consists of several independent computers connected via a communication network.
- The computers communicate by exchanging messages over the network.
- Each computer has its own memory and clock and runs its own operating system.
- Remote resources are accessed through the network.
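A minimal sketch of these characteristics, assuming nothing beyond the Python standard library: each hypothetical `Node` keeps private memory and a private event counter, and the only way to affect another node is to put a message in its inbox. Here `queue.Queue` stands in for the network, and a thread stands in for an independently running computer.

```python
import queue
import threading


class Node:
    """One independent computer: private memory, a private clock, and an inbox."""

    def __init__(self, name):
        self.name = name
        self.memory = {}             # private state; no other node reads it directly
        self.clock = 0               # local event counter; there is no shared global clock
        self.inbox = queue.Queue()   # stands in for this node's network endpoint

    def send(self, other, key, value):
        """Communicate only by placing a message in another node's inbox."""
        self.clock += 1
        other.inbox.put((self.name, key, value))

    def receive_one(self, timeout=1.0):
        """Block until one message arrives, then copy its payload into local memory."""
        sender, key, value = self.inbox.get(timeout=timeout)
        self.clock += 1
        self.memory[key] = value
        return sender, key, value


if __name__ == "__main__":
    a, b = Node("A"), Node("B")
    listener = threading.Thread(target=b.receive_one)  # B runs independently of A
    listener.start()
    a.send(b, "temperature", 21.5)
    listener.join()
    print(b.memory)  # {'temperature': 21.5}
    print(a.memory)  # {}
```

Note that the two clocks advance separately: each node only knows how many events it has seen itself.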

Various classes of distributed computing are shown in Figure 1.2 and will be

discussed further in the subsequent sections.

FIGURE 1.2 Taxonomy of distributed computing

OVERVIEW OF THE COMPUTING PARADIGM • 3

1.1.1 Peer to Peer Computing

When computers moved into mainstream use, personal computers (PCs) were connected through LANs (Local Area Networks) to central servers. These central servers were much more powerful than the PCs, so any large-scale data processing took place on them. PCs have since become much more powerful and are capable of handling data processing locally rather than on central servers. As a result, peer-to-peer (P2P) computing can now occur: individual computers bypass central servers and connect and collaborate directly with each other.

A peer is a computer that behaves as a client in the client/server model but also runs an additional layer of software that allows it to perform server functions. A peer can therefore respond to requests from other peers by sending messages over the network.
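The dual client/server role of a peer can be illustrated with a small in-memory sketch. The `Peer` class, its `handle_request` (server side) and `fetch` (client side) methods, and the neighbour list are hypothetical; real P2P systems add networking, peer discovery, and security on top of this idea.

```python
class Peer:
    """A peer: a client that also runs a small server layer (hypothetical sketch)."""

    def __init__(self, name, files=None):
        self.name = name
        self.files = dict(files or {})  # content this peer can serve to others
        self.neighbours = []            # peers it can contact directly

    # Server role: answer a request that arrives from another peer.
    def handle_request(self, filename):
        return self.files.get(filename)

    # Client role: ask neighbouring peers directly; no central server is involved.
    def fetch(self, filename):
        if filename in self.files:
            return self.files[filename]
        for peer in self.neighbours:
            data = peer.handle_request(filename)
            if data is not None:
                self.files[filename] = data  # keep a copy so this peer can now serve it
                return data
        return None


if __name__ == "__main__":
    alice = Peer("alice", {"notes.txt": "P2P basics"})
    bob = Peer("bob")
    carol = Peer("carol")
    bob.neighbours.append(alice)
    carol.neighbours.append(bob)
    print(bob.fetch("notes.txt"))    # bob acts as a client of alice
    print(carol.fetch("notes.txt"))  # bob now acts as a server for carol
```

Because `bob` keeps a copy of what it fetched, the same machine switches from client to server without any central coordinator.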

P2P computing refers to a class of systems and applications that employ distributed resources to perform a critical function in a decentralized manner. The resources encompass computing power, data (storage and content), network bandwidth, and presence (computers, humans and other resources) [3]. P2P computing is a network-based computing model for applications where computers share resources and services via direct exchange, as shown in Figure 1.3.

FIGURE 1.3 Peer to peer network

Technically, P2P makes it possible to use vast resources that would otherwise go untapped. These resources include processing power for large-scale computations and enormous storage potential. Because there is no central server, the P2P mechanism can also be used to eliminate the risk of a single point of failure. When P2P is used within an enterprise, it may be able to replace some costly data center functions with distributed services between clients; for example, storage for data retrieval and backup can be placed on clients. P2P applications build up functions such as storage, computation, messaging, security, and file distribution through direct exchanges between peers.
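One way peers can avoid a single point of failure is to replicate each item on several peers. The sketch below uses rendezvous (highest-random-weight) hashing to decide which peers hold a key; this is one common placement technique chosen for illustration, not a method described in this chapter, and the `ReplicatedStore` class and `choose_replicas` function are hypothetical.

```python
import hashlib


def score(peer, key):
    """Deterministic pseudo-random weight for a (peer, key) pair."""
    digest = hashlib.sha256(f"{peer}:{key}".encode()).hexdigest()
    return int(digest, 16)


def choose_replicas(peers, key, r=3):
    """Rendezvous hashing: the r highest-scoring peers hold copies of the key."""
    return sorted(peers, key=lambda p: score(p, key), reverse=True)[:r]


class ReplicatedStore:
    def __init__(self, peers, r=3):
        self.r = r
        self.storage = {p: {} for p in peers}  # each peer's local storage
        self.alive = set(peers)

    def put(self, key, value):
        for p in choose_replicas(list(self.storage), key, self.r):
            self.storage[p][key] = value

    def get(self, key):
        # Any surviving replica holder can answer; no single peer is essential.
        for p in choose_replicas(list(self.storage), key, self.r):
            if p in self.alive and key in self.storage[p]:
                return self.storage[p][key]
        return None  # every replica holder is down

    def fail(self, peer):
        self.alive.discard(peer)


if __name__ == "__main__":
    store = ReplicatedStore(["p1", "p2", "p3", "p4", "p5"], r=3)
    store.put("backup.tar", b"archive bytes")
    first_holder = choose_replicas(["p1", "p2", "p3", "p4", "p5"], "backup.tar")[0]
    store.fail(first_holder)
    print(store.get("backup.tar"))  # still retrievable from another replica
```

With `r` copies, the data survives the failure of up to `r - 1` of its holders; larger `r` buys more resilience at the cost of more storage.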

1.1.2 Cluster Computing

Cluster computing uses a collection of interconnected standalone computers that work cooperatively as a single integrated computing resource, taking advantage of the parallel processing power of those standalone computers. In a computer cluster, each node is set to carry out the same tasks, controlled and scheduled by software. The components of a cluster are connected to each other through fast local area networks, as shown in Figure 1.4. Clustered computer systems have proven effective in handling heavy workloads with large datasets. Deploying a cluster increases performance and fault tolerance.

FIGURE 1.4 Cluster computing
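The software that controls and schedules work across cluster nodes, and the fault tolerance a cluster provides, can be sketched as a round-robin dispatcher that retries a task on the next node when one is down. Node health is simulated with a flag; `ClusterNode` and `schedule` are hypothetical names, and production cluster schedulers are far more elaborate.

```python
from itertools import cycle


class ClusterNode:
    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy  # simulated health; a real node could crash at any time

    def run(self, task):
        if not self.healthy:
            raise RuntimeError(f"{self.name} is down")
        return task()


def schedule(tasks, nodes):
    """Dispatch tasks round-robin; a failed attempt is retried on the next node."""
    results = []
    ring = cycle(nodes)
    for task in tasks:
        for _ in range(len(nodes)):
            node = next(ring)
            try:
                results.append((node.name, node.run(task)))
                break
            except RuntimeError:
                continue  # this node failed; try the next one in the ring
        else:
            raise RuntimeError("no healthy node available")
    return results


if __name__ == "__main__":
    nodes = [ClusterNode("n1"), ClusterNode("n2", healthy=False), ClusterNode("n3")]
    tasks = [lambda i=i: i * i for i in range(4)]
    print(schedule(tasks, nodes))  # [('n1', 0), ('n3', 1), ('n1', 4), ('n3', 9)]
```

The failed node `n2` never produces a result, yet every task completes, which is the fault tolerance the paragraph above refers to.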

Major advantages of cluster computing include manageability, a single system image, and high availability. Cluster software is installed and configured automatically, and nodes can be added to the cluster and managed easily. A cluster is therefore an open system that is easy to deploy and cost-effective to acquire and manage. Cluster computing also has disadvantages. A cluster is hard to manage without experience. When a cluster is large, it is difficult to detect that something has failed. Its programming environment is also hard to improve when the software on some nodes differs from that on others.
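Detecting failures in a large cluster is commonly approached with heartbeats: each node reports in periodically, and a node that stays silent for too long is suspected of having failed. A minimal sketch, with a hypothetical `HeartbeatMonitor` class and timestamps passed in explicitly (in practice they might come from `time.monotonic()`):

```python
class HeartbeatMonitor:
    """Suspect a node of failure if no heartbeat arrived within `timeout` seconds."""

    def __init__(self, timeout):
        self.timeout = timeout
        self.last_seen = {}  # node name -> time of its most recent heartbeat

    def heartbeat(self, node, now):
        self.last_seen[node] = now

    def suspected(self, now):
        """Return the nodes that have been silent longer than the timeout."""
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)


if __name__ == "__main__":
    monitor = HeartbeatMonitor(timeout=5.0)
    for node in ("n1", "n2", "n3"):
        monitor.heartbeat(node, now=0.0)
    monitor.heartbeat("n1", now=4.0)
    monitor.heartbeat("n2", now=4.0)
    monitor.heartbeat("n1", now=8.0)
    print(monitor.suspected(now=8.0))   # ['n3']
    print(monitor.suspected(now=10.0))  # ['n2', 'n3']
```

A timeout can only mark a node as suspected, because a slow node and a crashed node look the same from outside; choosing the timeout trades detection speed against false alarms.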

The use of clusters as a computing platform is not limited to scientific and engineering applications; many business applications also benefit from clusters. Cluster technology improves application performance by running computations in parallel on different machines, and it also enables the shared use of distributed resources.

1.1.3 Utility Computing

Utility computing is a service provisioning model in which a service provider makes computing resources and infrastructure management available to customers as needed and charges them for actual usage rather than a fixed rate. Its advantage is low cost: customers pay no initial setup cost to obtain computing resources. This repackaging of computing services is the foundation of the shift to on-demand computing, software as a service, and cloud computing models.
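Charging for specific usage rather than a fixed rate amounts to multiplying each metered quantity by a unit price and summing the results. The resource names, the `UsageRecord` type, and the rates below are hypothetical, chosen only to make the arithmetic concrete.

```python
from dataclasses import dataclass

# Hypothetical unit prices; real providers publish their own rate cards.
RATES = {
    "vcpu_hours": 0.04,         # per virtual-CPU hour
    "storage_gb_months": 0.02,  # per gigabyte stored for a month
    "egress_gb": 0.09,          # per gigabyte transferred out
}


@dataclass
class UsageRecord:
    resource: str
    quantity: float


def metered_bill(records, rates=RATES):
    """Pay-per-use: the bill is the sum of quantity x unit price over all records."""
    total = sum(r.quantity * rates[r.resource] for r in records)
    return round(total, 2)


if __name__ == "__main__":
    busy_month = [
        UsageRecord("vcpu_hours", 720),        # 720 x 0.04 = 28.80
        UsageRecord("storage_gb_months", 100), # 100 x 0.02 =  2.00
        UsageRecord("egress_gb", 50),          #  50 x 0.09 =  4.50
    ]
    quiet_month = [UsageRecord("vcpu_hours", 10)]  # 10 x 0.04 = 0.40
    print(metered_bill(busy_month))   # 35.3
    print(metered_bill(quiet_month))  # 0.4
```

The quiet month costs almost nothing, which is the economic appeal of the model compared with paying a flat fee for capacity that sits idle.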

Customers need not buy all the hardware, software, and licenses required to do business; instead, they rely on another party to provide these services. Utility computing is one of the most popular IT service models, primarily because of the flexibility and economy it provides. The model is based on the one used by conventional utilities such as telephone services, electricity, and gas. Customers have access to a virtually unlimited supply of computing solutions over the Internet or a virtual private network (VPN), which they can use whenever and wherever required. The provider manages the back-end infrastructure and governs the management and delivery of computing resources. Utility computing solutions can include virtual software, virtual servers, virtual storage, backup, and many other IT solutions. Multiplexing, multitasking, and virtual multitenancy have made the utility computing business possible, as shown in Figure 1.5.

FIGURE 1.5 Utility computing

1.1.4 Grid Computing

A scientist studying proteins logs into a computer and uses an entire network of computers to analyze data. A businessman accesses his company's network through a Personal Digital Assistant in order to forecast the future of a particular stock. An army official accesses and coordinates computer resources on three different military networks to formulate a battle strategy. All these scenarios have one thing in common: they rely on a concept called grid computing. At its most basic level, grid computing is a computer network in which each computer's resources are shared with every other computer in the system. Processing power, memory, and data storage are all community resources that authorized consumers can tap into and leverage for specific tasks. A grid computing system can be as simple as a collection of similar computers running the same operating system or as complex as internetworked systems composed of every computer platform you can think of.
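The idea of a shared pool of resources that authorized consumers draw from can be sketched as follows. The `GridNode` and `Grid` classes, the authorization set, and the largest-free-node-first allocation policy are all hypothetical simplifications; real grid middleware also handles security credentials, scheduling across administrative domains, and data movement.

```python
from dataclasses import dataclass, field


@dataclass
class GridNode:
    name: str
    platform: str   # grids may mix operating systems and hardware
    free_cpus: int


@dataclass
class Grid:
    nodes: list
    authorized: set = field(default_factory=set)  # consumers allowed to use the pool

    def total_free_cpus(self):
        return sum(n.free_cpus for n in self.nodes)

    def allocate(self, user, cpus_needed):
        """Gather CPUs from any nodes in the pool for an authorized user."""
        if user not in self.authorized:
            raise PermissionError(f"{user} is not authorized to use the grid")
        if cpus_needed > self.total_free_cpus():
            raise ValueError("not enough free CPUs in the grid")
        allocation = {}
        # Draw from the nodes with the most free capacity first.
        for node in sorted(self.nodes, key=lambda n: n.free_cpus, reverse=True):
            if cpus_needed == 0:
                break
            take = min(node.free_cpus, cpus_needed)
            if take:
                node.free_cpus -= take
                allocation[node.name] = take
                cpus_needed -= take
        return allocation


if __name__ == "__main__":
    grid = Grid(
        nodes=[
            GridNode("lab-linux", "Linux", 8),
            GridNode("office-windows", "Windows", 4),
            GridNode("hpc-node", "Linux", 16),
        ],
        authorized={"protein-lab"},
    )
    print(grid.allocate("protein-lab", 20))  # {'hpc-node': 16, 'lab-linux': 4}
    print(grid.total_free_cpus())            # 8
```

A single request is satisfied by CPUs on two different machines, which is the "community resource" view of the grid described above.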
