Feature Selection CHI

Uploaded by sofia gupta

The document describes a proposed algorithm that uses particle swarm optimization (PSO) with a surrogate model to solve high-dimensional optimization problems. It initializes particles with randomly selected features from a feature space, then updates velocities and positions using standard PSO equations to optimize feature selection. The algorithm is tested on feature selection for classification with dimensionality ranging from 600 to 1200 features. Results show the proposed algorithm achieves better classification accuracy than other feature selection methods. An analysis suggests the algorithm's increased computation time for surrogate training is negligible compared to time for real-world problem evaluations.




Algorithm 1: Particle Swarm Optimization

1: Initialize the parameters of PSO
2: Randomly initialize the particles
3: WHILE stopping criterion not met DO
4:     calculate each particle's fitness value
5:     FOR i = 1 to population size DO
6:         update the Pbest of Pi
7:         update the Gbest of the swarm
8:     END
9:     FOR i = 1 to population size DO
10:        FOR j = 1 to dimension of particle DO
11:            update the velocity of Pi according to equation (1)
12:            update the position of Pi according to equation (2)
13:        END
14:    END
15: END
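Algorithm 1 can be sketched in a few lines of Python. Equations (1) and (2) are not reproduced in this document, so the sketch assumes the commonly used inertia-weight form, v = w·v + c1·r1·(Pbest − x) + c2·r2·(Gbest − x) followed by x = x + v. The function names `pso` and `sphere`, the parameter values, the search bounds, and the clamping of positions to those bounds are all illustrative assumptions, not details taken from the source.

```python
import random


def pso(fitness, dim, pop_size=20, iters=100, w=0.7, c1=1.5, c2=1.5,
        lo=-5.0, hi=5.0, seed=0):
    """Minimise `fitness` with a basic PSO (assumed inertia-weight update)."""
    rng = random.Random(seed)
    # Steps 1-2: initialise positions randomly and velocities to zero.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    vel = [[0.0] * dim for _ in range(pop_size)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(p) for p in pos]
    g = min(range(pop_size), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(iters):  # stopping criterion: fixed iteration budget
        # Evaluate fitness, then update personal and global bests.
        for i in range(pop_size):
            f = fitness(pos[i])
            if f < pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f < gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
        # Update velocity (assumed eq. 1) and position (assumed eq. 2).
        for i in range(pop_size):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][j] = (w * vel[i][j]
                             + c1 * r1 * (pbest[i][j] - pos[i][j])
                             + c2 * r2 * (gbest[j] - pos[i][j]))
                # Clamping to the bounds is an added assumption.
                pos[i][j] = min(hi, max(lo, pos[i][j] + vel[i][j]))
    return gbest, gbest_fit


def sphere(x):
    """Toy objective used only for illustration: sum of squares."""
    return sum(v * v for v in x)


best, best_fit = pso(sphere, dim=5)
```

Because the global best is only replaced by a strictly better position, `best_fit` can never be worse than the best fitness in the initial swarm.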

Algorithm 2: Proposed Algorithm

1: Load feature space set F of unique features f
2: Set the desired number of features d
3: Initialize the parameters of PSO
4: Randomly initialize particles with dimension d over the set F
5: WHILE stopping criterion not met DO
6:     calculate each particle's fitness value as the accuracy of the underlying classifier
7:     FOR i = 1 to population size DO
8:         update the Pbest of Pi
9:         update the Gbest of the swarm
10:    END
11:    FOR i = 1 to population size DO
12:        FOR j = 1 to d DO
13:            update the velocity of Pi according to equation (1)
14:            update the position of Pi according to equation (2)
15:        END
16:    END
17: END
18: Return the best feature subset of d features found by the PSO
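The following is a minimal sketch of how Algorithm 2 might look in Python. The source does not say how a continuous particle position is mapped back to features in F, so the sketch assumes each coordinate is rounded to the nearest feature index. It also replaces the classifier accuracy with a toy fitness function so that the example stays self-contained. The names `decode`, `pso_select`, `toy_fitness`, and `INFORMATIVE`, and all parameter values, are hypothetical.

```python
import random

N_FEATURES = 50                 # size of the feature space F (illustrative)
D = 5                           # desired number of features d
INFORMATIVE = set(range(10))    # hypothetical "useful" features for the toy fitness


def decode(position, n_features):
    """Round each coordinate to a feature index (assumed mapping).

    Duplicates collapse, so a subset may hold fewer than d features;
    the source does not say how this case is handled.
    """
    return {min(n_features - 1, max(0, round(x))) for x in position}


def toy_fitness(subset):
    """Stand-in for classifier accuracy: share of d slots that are informative."""
    return len(subset & INFORMATIVE) / D


def pso_select(fitness, n_features, d, pop_size=15, iters=50,
               w=0.7, c1=1.5, c2=1.5, seed=0):
    """Maximise `fitness` over feature subsets of size at most d."""
    rng = random.Random(seed)
    hi = float(n_features - 1)
    # Step 4: each particle starts from d distinct, randomly chosen features.
    pos = [[float(f) for f in rng.sample(range(n_features), d)]
           for _ in range(pop_size)]
    vel = [[0.0] * d for _ in range(pop_size)]
    pbest = [p[:] for p in pos]
    pbest_fit = [fitness(decode(p, n_features)) for p in pos]
    g = max(range(pop_size), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(iters):
        # Evaluate fitness, then update personal and global bests.
        for i in range(pop_size):
            f = fitness(decode(pos[i], n_features))
            if f > pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], f
                if f > gbest_fit:
                    gbest, gbest_fit = pos[i][:], f
        # Assumed inertia-weight velocity and position updates.
        for i in range(pop_size):
            for j in range(d):
                r1, r2 = rng.random(), rng.random()
                vel[i][j] = (w * vel[i][j]
                             + c1 * r1 * (pbest[i][j] - pos[i][j])
                             + c2 * r2 * (gbest[j] - pos[i][j]))
                pos[i][j] = min(hi, max(0.0, pos[i][j] + vel[i][j]))
    # Step 18: return the best subset found.
    return sorted(decode(gbest, n_features)), gbest_fit


selected, score = pso_select(toy_fitness, N_FEATURES, D)
```

In a real experiment, `toy_fitness` would be replaced by a function that trains the underlying classifier (NB, SVM, or KNN in the table below) on the selected columns and returns its accuracy.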

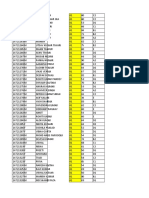

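Columns give the desired number of features D.

| Feature selection | Classifier | D=600 | D=700 | D=800 | D=900 | D=1000 | D=1100 | D=1200 |
|---|---|---|---|---|---|---|---|---|
| CHI | NB | 0.78 | 0.792 | 0.813 | 0.825 | 0.838 | 0.837 | 0.844 |
| CHI | SVM | 0.803 | 0.813 | 0.835 | 0.843 | 0.865 | 0.874 | 0.878 |
| CHI | KNN | 0.771 | 0.783 | 0.795 | 0.802 | 0.813 | 0.824 | 0.835 |
| MI | NB | 0.827 | 0.843 | 0.841 | 0.846 | 0.845 | 0.85 | 0.854 |
| MI | SVM | 0.835 | 0.856 | 0.867 | 0.869 | 0.87 | 0.882 | 0.893 |
| MI | KNN | 0.817 | 0.834 | 0.844 | 0.846 | 0.853 | 0.863 | 0.865 |
| TF-IDF | NB | 0.762 | 0.774 | 0.783 | 0.786 | 0.802 | 0.814 | 0.824 |
| TF-IDF | SVM | 0.782 | 0.785 | 0.789 | 0.806 | 0.825 | 0.834 | 0.843 |
| TF-IDF | KNN | 0.827 | 0.843 | 0.841 | 0.846 | 0.845 | 0.85 | 0.854 |
| Proposed Algorithm | NB | 0.862 | 0.883 | 0.886 | 0.8910 | 0.894 | 0.905 | 0.915 |
| Proposed Algorithm | SVM | 0.886 | 0.876 | 0.8841 | 0.895 | 0.901 | 0.913 | 0.924 |
| Proposed Algorithm | KNN | 0.857 | 0.863 | 0.874 | 0.885 | 0.865 | 0.87 | 0.893 |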

The main motivation of this work is to push the boundary of surrogate-assisted metaheuristics for solving high-dimensional, computationally expensive problems. In the following, we examine the performance of SA-COSO and the compared algorithms on 100-dimensional test problems. As we can see, SA-COSO shows search dynamics similar to those observed on the 50-dimensional test problems. The RBF network helps the SA-COSO algorithm locate the region where the optimum lies and keep improving the solution, indicating that the cooperative search of SL-PSO and PSO is very effective in finding an optimal solution. The experimental results on the 100-dimensional problems confirm the competitive performance of SA-COSO on high-dimensional problems.

To further evaluate our method on higher-dimensional problems, we have also conducted comparisons on 200-dimensional problems; the results are provided in the Supplementary materials. These results confirm the good performance of the proposed method on high-dimensional problems.

D. Empirical Analysis of the Computational Complexity

The computational complexity of the proposed SA-COSO is composed of three main parts, namely, the computation time for fitness evaluations, for training the RBF network, and for calculating the distances in updating the archive DB. In this section, we empirically compare the computation time needed by the compared algorithms for solving the 50-D and 100-D optimization problems. All algorithms are implemented on a computer with a 2.50 GHz processor and 8 GB of RAM.

Table V presents the computation time of the algorithms under comparison, averaged over 20 independent runs, when a maximum of 1000 fitness evaluations using the real fitness function is allowed. From Table V, we find that PSO, whose computation time is mainly dedicated to fitness evaluations, requires the least computation time. For convenience, we use the time PSO takes to search for an optimum on a fixed budget of 1000 fitness evaluations as the baseline for comparison. From Table V, we can also see that the RBF-assisted SL-PSO requires the longest time, meaning that training the surrogate model takes more time than calculating the distances in updating the archive DB. The average time required by the proposed SA-COSO for solving the 50-D and 100-D test problems is approximately 0.6 and 1.4 seconds per fitness evaluation using the expensive fitness function, respectively. Compared to the most time-consuming fitness evaluations in real-world applications, where each fitness evaluation may take tens of minutes to hours, this increase in computation time for surrogate training and distance calculation can still be considered negligible.
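To make the "negligible" claim concrete, the short calculation below compares the reported per-evaluation times (0.6 s and 1.4 s) with a hypothetical expensive evaluation. The 10-minute evaluation time is an assumed value at the lower end of the "tens of minutes to hours" range mentioned above; the function name `overhead_share` is illustrative.

```python
EVALS = 1000                      # fitness evaluation budget from the text
OVERHEAD_S = {"50-D": 0.6, "100-D": 1.4}   # reported seconds per evaluation
REAL_EVAL_S = 10 * 60             # assumed cost of one expensive evaluation


def overhead_share(overhead_s, eval_s):
    """Fraction of total run time spent on algorithm overhead."""
    return overhead_s / (overhead_s + eval_s)


for name, t in OVERHEAD_S.items():
    total_overhead_min = t * EVALS / 60
    share = overhead_share(t, REAL_EVAL_S)
    print(f"{name}: {total_overhead_min:.1f} min overhead in total, "
          f"{share:.2%} of run time")
```

Under this assumption the overhead stays well below one percent of the total run time for both problem sizes, and it shrinks further as the real evaluation becomes more expensive.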
