Capacity and Throughput Planning and Monitoring

Introduction

In any backup environment, it is critical to plan capacity and throughput adequately. Planning ensures that your backups complete within the required time and are securely retained for the required periods. Data growth in backups is also a reality as business needs change. Inadequate capacity and bandwidth can cause backups to lag or fail to complete. Unplanned growth can fill a backup device sooner than expected and choke backup processes.

The main goal in capacity planning is to design your system with a model and configuration that can store the required data for the required retention period.

When planning for throughput requirements, the goal is to ensure that the bandwidth is sufficient to perform daily and weekly backups within the allotted backup window. Effective throughput planning considers network bandwidth sharing and allows adequate timeframes (windows) for both backups and system housekeeping.

Upon completing this module, you will be able to:

Describe capacity planning and its benefits

Perform basic capacity-planning calculations

Describe throughput planning

Perform basic throughput-planning calculations and analysis

Identify throughput tuning steps

Cover Page Title

© Copyright 2019 Dell Inc. Page 1

Capacity and Throughput Planning Overview


Introduction

This lesson covers the process used to determine the capacity requirements of a Data Domain system, including collecting information about the backup environment and determining capacity needs.

Dell EMC Sales uses detailed software tools and formulas when working with its customers to identify backup environment capacity and throughput needs. Such tools help systems architects recommend systems with appropriate capacities and correct throughput to meet those needs. This lesson discusses the most basic considerations for capacity and throughput planning.

This lesson covers the following topics:

• Determining capacity and throughput needs

• Typical data reduction expectations over time

• Calculating the required capacity

• Calculating the required throughput capacity


Determining Capacity Needs


Using information collected about the backup system, you calculate capacity needs by understanding the amount of data (data size) to be backed up, the types of data, the size of a full (complete) backup, the number of copies of the data backed up, and the expected data reduction rates (deduplication).

Data Domain system internal indices and other product components use extra, variable amounts of storage, depending on the type of data and the sizes of files. If you send different datasets to otherwise identical systems, one system may, over time, have room for more or less actual backup data than another.


Data reduction factors depend on the type of data being backed up. Challenging (deduplication-unfriendly) data types include:

• Compressed data (multimedia, .mp3, .zip, and .jpg files)

• Encrypted data

Retention policies greatly determine the amount of deduplication that can be realized on a Data Domain system. The longer data is retained, the greater the data reduction that can be realized. A backup schedule where retained data is repeatedly replaced with new data results in little data reduction.


Typical Data Reduction Expectations Over Time


The reduction factors listed in this slide are examples of how longer retention periods can improve the amount of data reduction over time. The reduction rates shown are approximate.

A daily full backup that is retained for only one week on a Data Domain system may result in a compression factor of only 5x. However, retaining weekly backups plus daily incrementals for up to 90 days may result in 20x or higher reduction.

Data reduction rates depend on several variables, including data types, the amount of similar data, and the length of storage. It is difficult to determine exactly what rates to expect from any given system. The highest rates are achieved when many full backups are stored.


When performing capacity planning, use average reduction rates as a starting point for your calculations, and refine them after real data is available.


Calculating the Required Capacity


Calculate the required capacity by adding up the space required in this manner:

• First full backup +

• Incremental backups (the number of days incrementals are run, typically 4 to 6) +

• Weekly cycle (one weekly full and 4 to 6 incrementals) multiplied by the number of weeks data is retained

For example, assume 1 TB of data is backed up and a conservative reduction rate is estimated at 5x. This gives 200 GB needed for the initial backup. With a 10 percent daily change rate, incremental backups are 100 GB each, and with an estimated reduction of 10x, each incremental backup requires 10 GB of space.


Subsequent full backups are likely to yield a higher data reduction rate. Assuming a 25x reduction rate for subsequent full backups, 1 TB of data reduces to 40 GB.

Four daily incremental backups requiring 10 GB each, plus one weekly full backup requiring 40 GB, yield a burn rate of 80 GB per week. Over the full 8-week retention period, this 80-GB weekly burn rate means that an estimated 640 GB is needed to store the daily incremental and weekly full backups. Adding the 200-GB initial full backup gives a total of 840 GB. On a Data Domain system with 1 TB of usable capacity, the unit therefore operates at about 84% of capacity. A 16% buffer may be adequate for current needs, but you might want to consider a system with a larger capacity, or one that can have extra storage added, to compensate for data growth.
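The worked example above is simple arithmetic, so it can be reproduced in a few lines to test other assumptions. The following is a minimal Python sketch, assuming 1 TB = 1,000 GB (as the example's 1 TB / 25 = 40 GB implies) and following the example's structure: one initial full backup plus a weekly cycle of incrementals and one full, repeated over the retention period. The function and parameter names are hypothetical and for illustration only; this is not a Dell EMC sizing tool.

```python
def required_capacity_gb(full_gb, first_full_factor, daily_change_rate,
                         incremental_factor, later_full_factor,
                         incrementals_per_week, retention_weeks):
    """Estimate post-reduction capacity (GB), mirroring the worked example.

    Hypothetical helper: the reduction factors are planning assumptions,
    not measured values.
    """
    first_full = full_gb / first_full_factor                        # initial full backup
    incremental = full_gb * daily_change_rate / incremental_factor  # one daily incremental
    weekly_full = full_gb / later_full_factor                       # each later weekly full
    weekly_burn = incrementals_per_week * incremental + weekly_full
    return first_full + weekly_burn * retention_weeks


total_gb = required_capacity_gb(
    full_gb=1000, first_full_factor=5, daily_change_rate=0.10,
    incremental_factor=10, later_full_factor=25,
    incrementals_per_week=4, retention_weeks=8,
)
usable_gb = 1000
print(f"Required: {total_gb:.0f} GB")               # Required: 840 GB
print(f"Utilization: {total_gb / usable_gb:.0%}")   # Utilization: 84%
print(f"Buffer: {1 - total_gb / usable_gb:.0%}")    # Buffer: 16%
```

Changing one parameter at a time (for example, retention_weeks=12) shows how sensitive the estimate is to each assumption.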

Again, these calculations are for estimation purposes only. To determine true capacity, use an analysis of real data gathered from your system as part of a Dell EMC BRS sizing evaluation.


Calculating Required Throughput


While capacity is one part of the sizing calculation, it is important not to neglect data throughput during backups.

Assume that the greatest backup need is to process a full 200-GB backup within a 10-hour backup window. Incremental backups should require less time to complete, so you can safely presume that they also complete within the backup window.

Dividing 200 GB by 10 hours yields a raw processing requirement of at least 20 GB per hour.

Over an unrestricted 1-Gb (Gigabit Ethernet) network with maximum bandwidth available (roughly 270 GB per hour of effective throughput), this backup would take less than 1 hour to complete. If the network were sharing throughput resources during the backup window, the time required to complete the backup would increase considerably.
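The throughput reasoning above reduces to two divisions. This minimal Python sketch reproduces it; the available_share parameter is a hypothetical way to model a shared network (1.0 means the whole link is dedicated to the backup) and is not a Data Domain setting.

```python
def required_rate_gb_per_hr(backup_gb, window_hr):
    """Minimum sustained rate needed to finish backup_gb inside the window."""
    return backup_gb / window_hr


def transfer_hours(backup_gb, link_gb_per_hr, available_share=1.0):
    """Hours to move backup_gb when only `available_share` of the link is free."""
    if not 0 < available_share <= 1:
        raise ValueError("available_share must be in (0, 1]")
    return backup_gb / (link_gb_per_hr * available_share)


print(required_rate_gb_per_hr(200, 10))          # 20.0
print(round(transfer_hours(200, 270), 2))        # 0.74
print(round(transfer_hours(200, 270, 0.25), 2))  # 2.96
```

With only a quarter of the link available, the same backup takes roughly four times as long, which is why shared networks deserve measurement rather than assumption.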

It is important to note the effective throughput of both the Data Domain system and the network on which it runs. Both points in the data transfer determine whether the required speeds are reliably feasible. Feasibility can be assessed by running network testing software such as iperf.


Model Capacity and Throughput Performance


Introduction

This lesson applies the formulae from the previous two lessons to selecting the best Data Domain system to fit specific capacity and throughput requirements.

This lesson covers the following topics:

• System model capacity and throughput performance

• Selecting a model

• Calculating capacity buffer for selected models

• Matching required capacity to model specifications

• Calculating throughput buffer for selected models

• Matching required performance to model specifications


System Model Capacity and Throughput Performance


The system capacity numbers of a Data Domain system assume a mix of typical enterprise backup data, such as file systems, databases, mail, and developer files. How often data is backed up determines the low and high ends of the range.

The maximum capacity for each Data Domain model assumes the maximum number of drives (either internal or external) supported for that model.

Maximum throughput for each Data Domain model depends mostly on the number and speed of the network interfaces being used to transfer data. Some Data Domain systems have more and faster processors, so they can process incoming data faster.


Advertised capacity and throughput ratings for Data Domain products are based on tests conducted under laboratory conditions. Your actual throughput will vary depending on your network conditions.

The number of network streams you can expect to use depends on your hardware model. For the maximum supported stream counts, see the system guide for your specific Data Domain model.


Data Domain Performance Factors


External and internal factors affect Data Domain system performance in backup environments. External factors in the backup environment often gate how fast data is sent to the Data Domain system; external-factor bottlenecks do not affect the potential throughput of the Data Domain system itself. Internal factors reduce the potential throughput of the Data Domain system, and internal-factor bottlenecks must be addressed for the DD system to reach its potential sustained performance.

The number of simultaneous streams to a Data Domain system contributes significantly to its potential throughput. Each Data Domain system model has a range of simultaneous stream counts for optimal throughput and peak efficiency.


For each Data Domain system model and protocol, throughput increases as more streams are used, up to the point where peak performance occurs. After that, adding more streams usually reduces performance. Data Domain systems are designed so that performance does not drop below 85% of peak throughput when more streams are used, up to the maximum number supported for the model and protocol. Running with more streams than the maximum supported values is not recommended, because such configurations are not tested and can reduce system performance.
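The stream behavior described above can be expressed as a small classification. This is a minimal Python sketch; peak_streams and max_supported are hypothetical inputs standing in for the model- and protocol-specific values found in the system guide, and the 85% floor is the design goal stated in the text, not a guarantee computed by the system.

```python
def classify_stream_count(active, peak_streams, max_supported):
    """Place a stream count on the throughput curve described in the text."""
    if active > max_supported:
        return "unsupported"   # untested; can reduce system performance
    if active <= peak_streams:
        return "ramping"       # throughput still rising toward peak
    return "past-peak"         # design goal: at least 85% of peak throughput


def past_peak_floor(peak_throughput):
    """Lowest throughput the 85% design goal allows beyond the peak point."""
    return 0.85 * peak_throughput


# Hypothetical model: peak at 30 streams, 90 streams maximum supported.
print(classify_stream_count(60, peak_streams=30, max_supported=90))  # past-peak
```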

The number of disks in a Data Domain system is an important factor in the level of performance the system can achieve.

Garbage collection (GC), also known as cleaning, reclaims space used by unreferenced data segments. Data segments become unreferenced when backup application deletion policies remove the backups that referenced them. The cleaning process impacts Data Domain performance.

Other internal factors include initial dataset backup speeds, compression, high replication load, and RAID rebuilds. Ensure that you follow the most current best practices for each protocol.


Collecting Customer Site Information to Determine Needs


To determine the required performance and capacity, site information must be collected. Collected information can cover unstructured file data, rich media, Exchange, SQL, SharePoint, and other databases.

“Amount in GB” is the size of a SINGLE FULL BACKUP (before compression).

The recovery point objective (RPO) and recovery time objective (RTO) must also be defined.


Selecting a Model


Standard practice is to be conservative when calculating the capacity and throughput required for a specific backup environment: estimate a need for more throughput and capacity rather than less. Apply your requirements against conservative ratings (not the maximums) of a Data Domain system. Allow for a minimum 20% buffer in both capacity and throughput requirements:

• Dividing the required capacity by the maximum capacity of a particular model and multiplying by 100 yields the capacity percentage.

• Dividing the required throughput by the maximum throughput of a particular model and multiplying by 100 yields the throughput percentage.

If the capacity or throughput for a particular model does not provide at least a 20% buffer, calculate the capacity and throughput for the Data Domain model with the next higher capacity. For example, if the capacity calculation for a DD6300 yields a capacity percentage of 91%, only a 9% buffer is available, so you should calculate the capacity of the DD6800 next.

Sometimes one model provides adequate capacity but not enough throughput, or vice versa. The selected model must accommodate both the throughput and the capacity requirements with an appropriate buffer.
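The selection procedure above (compute both percentages, require at least a 20% buffer on each, and step up to the next larger model otherwise) can be sketched as a loop. This is a minimal Python sketch; the Model type, the catalog, and its ratings are hypothetical and illustrative only, not real Data Domain specifications. Supply whichever ratings you have chosen to plan against.

```python
from typing import NamedTuple, Optional, Sequence


class Model(NamedTuple):
    name: str
    capacity_tb: float        # capacity rating to compare against
    throughput_tb_hr: float   # throughput rating to compare against


def percent_of(required: float, rating: float) -> float:
    """Required value as a percentage of a model's rating."""
    return required / rating * 100


def pick_model(models: Sequence[Model], need_tb: float, need_tb_hr: float,
               min_buffer_pct: float = 20.0) -> Optional[Model]:
    """Return the smallest model leaving at least min_buffer_pct on BOTH axes."""
    for m in sorted(models, key=lambda m: m.capacity_tb):
        cap_buffer = 100 - percent_of(need_tb, m.capacity_tb)
        thr_buffer = 100 - percent_of(need_tb_hr, m.throughput_tb_hr)
        if cap_buffer >= min_buffer_pct and thr_buffer >= min_buffer_pct:
            return m
    return None  # no listed model fits; revisit requirements or add models


# Illustrative ratings only; NOT real Data Domain specifications.
catalog = [Model("Model A", 100, 5), Model("Model B", 200, 10)]
print(pick_model(catalog, need_tb=91, need_tb_hr=3).name)  # Model B
```

A 91-TB requirement uses 91% of Model A (a 9% buffer), so the loop moves on to Model B, matching the DD6300/DD6800 reasoning in the text.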


Best Practices for Selecting a Data Domain Model


With capacity, performance, and buffer all taken into consideration, this slide shows the best practice for selecting the Data Domain model to implement.


Matching Customer Requirements with a Model

Discussion

Question/Discussion Topic:

Given the current Data Domain hardware offerings, determine which model is best suited to the needs of each customer.

1. A customer estimates that they require 70 TB of usable storage for backups over the next 5 years. They require at least 3.25 TB/hour throughput to ensure that all data is backed up within their backup window.

2. A customer estimates that they require 275 TB of usable storage for backups over the next 5 years. They require at least 15 TB/hour throughput to ensure that all data is backed up within their backup window.

3. A customer estimates that they require 575 TB of usable storage for backups over the next 5 years. They require at least 25 TB/hour throughput to ensure that all data is backed up within their backup window.


Discussion:

Accept answers, and discuss. Ensure that the following points are covered:

1. The customer could use the DD3300 if Cloud Tier is used. Otherwise, the DD6300 would be the better choice.

2. The customer could use the DD6800 if both DD Boost and Cloud Tier are used. Otherwise, the DD9300 would be the better choice.

3. The customer could use the DD9300 if both DD Boost and Cloud Tier are used. Otherwise, the DD9800 would be the better choice.


Throughput Monitoring and Tuning


Introduction

This lesson covers basic throughput monitoring and tuning on a Data Domain system.

This lesson covers the following topics:

• Throughput bottlenecks

• Data Domain system performance metrics: Network and process utilization

• Data Domain system performance metrics: CPU and disk utilization

• Data Domain system stats metrics: Throughput

• Tuning solutions


Throughput Bottlenecks


Integrating Data Domain systems into an existing backup architecture can change the responsiveness of the backup system. Bottlenecks can restrict the flow of data being backed up.

Some possible bottlenecks are:

• Clients: disk issues, configuration, and connectivity

• Network: wire speeds, switches and routers, and routing protocols and firewalls

As demand shifts among system resources (such as the backup host, client, network, and the Data Domain system itself), the source of the bottlenecks can shift.


Eliminating bottlenecks where possible, or at least mitigating the causes of reduced performance through system tuning, is essential to a productive backup system. Data Domain systems collect and report performance metrics, both in real time and in log files, to help identify potential bottlenecks and their causes.


Performing Daily Monitoring


Monitoring is the first step in finding and removing the causes of performance bottlenecks. Performing daily monitoring with DD Management Center helps prevent serious problems. Monitoring capacity is also important, because capacity problems sometimes cause performance issues.

Dashboard widgets provide an overview of key performance indicators for the monitored Data Domain systems, including Health Status, Active Alerts, Capacity Thresholds, Capacity Used, Replication Status, Lag Thresholds, High Availability Readiness, and Cloud Health.

The Capacity Thresholds widget displays systems that have crossed warning or critical storage capacity levels.


The Capacity Used widget lets you monitor aggregate storage totals for all the Data Domain systems that DD Management Center is configured to manage. The widget shows the total storage capacity (used and available space) of all systems, or of a selected group if a filter is set.


Evaluating Customer Data and Actual Performance


Several commands and tools can be used to evaluate customer data and performance in a Data Domain environment:

• replication show status checks the status of MTree/directory replication contexts.

• Replication Data Transferred over 24 hr reports replication data over a 24-hour period.

• Replication History/Replication Detailed History shows an hourly breakdown of replication over a 24-hour period.

• Replication Throttle is used to check the Data Domain replication throttle.


• Optimized Duplication (Opt-dup) Data Transfer History is used to check DD Boost MFR (Managed File Replication) statistics, that is, DD Boost replication.

• ddboost file-replication show stats reports MFR statistics; its counters accumulate from the time the system last came up.

• ddboost stats provides additional counters, which are useful when troubleshooting live.

• system show performance provides a 24-hour report of system performance, including the throughput of replication traffic.


Network and Process Utilization


If you notice backups running slower than expected, review the system performance metrics.

From the command line, use the system show performance command.

The command syntax is: system show performance [duration {hr | min | sec} [interval {hr | min | sec}]]

For example, system show performance 24 hr 10 min shows the system performance for the last 24 hours at 10-minute intervals. The minimum interval is 1 minute.
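To see how a duration and an interval combine, the following minimal Python sketch builds the command text from the example above and counts how many intervals the window spans. It is a hypothetical formatting helper only: it does not connect to or run anything on a Data Domain system, and the interval count is simple arithmetic, not a statement about the exact number of rows the command prints.

```python
UNIT_SECONDS = {"hr": 3600, "min": 60, "sec": 1}


def perf_command(duration, duration_unit, interval, interval_unit):
    """Return (command text, number of intervals in the requested window)."""
    window_s = duration * UNIT_SECONDS[duration_unit]
    step_s = interval * UNIT_SECONDS[interval_unit]
    if step_s < 60:
        raise ValueError("the minimum interval is 1 minute")
    if step_s > window_s:
        raise ValueError("interval cannot be longer than the duration")
    command = (f"system show performance "
               f"{duration} {duration_unit} {interval} {interval_unit}")
    return command, window_s // step_s


cmd, intervals = perf_command(24, "hr", 10, "min")
print(cmd)        # system show performance 24 hr 10 min
print(intervals)  # 144
```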

Servicing a file system request consists of three steps: receiving the request over the network, processing the request, and sending a reply to the request.


Utilization is reported using four measures:

• ops/s: Operations per second

• load: Load percentage (pending ops / total RPC ops × 100)

• data (MB/s in/out): Protocol throughput, that is, the amount of data the file system can read from and write to the kernel socket buffer

• wait (ms/MB in/out): Time taken to send and receive 1 MB of data between the file system and the kernel socket buffer
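The load and wait measures above are ratios, and a short sketch makes their units concrete. This is a minimal Python illustration, assuming hypothetical sample counts; the Data Domain system computes these values itself, and treating wait as elapsed milliseconds divided by megabytes moved is an assumption based on the ms/MB unit.

```python
def load_percent(pending_ops, total_rpc_ops):
    """load = pending ops / total RPC ops * 100 (definition above)."""
    if total_rpc_ops == 0:
        return 0.0  # no RPC activity in the sample
    return pending_ops / total_rpc_ops * 100


def wait_ms_per_mb(elapsed_ms, mb_moved):
    """Average milliseconds spent per MB moved to or from the socket buffer."""
    return elapsed_ms / mb_moved


print(load_percent(25, 200))     # 12.5
print(wait_ms_per_mb(500, 250))  # 2.0
```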


CPU and Disk Utilization


An important section of the system show performance output is CPU and disk utilization.

CPU avg/max: The average and maximum CPU utilization. The ID of the most-loaded CPU is shown in brackets.

Disk max: The maximum disk utilization across all disks. The ID of the most-loaded disk is shown in brackets.

If CPU utilization is 80% or greater, or disk utilization is 60% or greater, for an extended period, the Data Domain system is likely reaching the limit of its disk I/O or CPU processing capacity. Check that no cleaning or disk reconstruction is in progress; both are shown in the State section of the system show performance report.
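The thresholds above apply only when utilization stays high for an extended period, so a single spike should not trigger concern. This minimal Python sketch flags a sustained run of high samples; the sample lists are assumed to be percentages transcribed from the report, and min_consecutive is a hypothetical definition of "extended period" (6 samples is one hour at 10-minute intervals).

```python
CPU_LIMIT_PCT = 80   # thresholds from the text above
DISK_LIMIT_PCT = 60


def sustained(samples, limit, min_consecutive):
    """True if at least min_consecutive successive samples are >= limit."""
    run = 0
    for value in samples:
        run = run + 1 if value >= limit else 0
        if run >= min_consecutive:
            return True
    return False


def near_limits(cpu_samples, disk_samples, min_consecutive=6):
    """Flag extended high CPU or disk utilization."""
    return (sustained(cpu_samples, CPU_LIMIT_PCT, min_consecutive)
            or sustained(disk_samples, DISK_LIMIT_PCT, min_consecutive))
```

Counting consecutive samples (rather than averaging) keeps short bursts from masking, or being mistaken for, a sustained load.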


The following states, and their meanings, can appear in the system show performance output:

• C – Cleaning

• D – Disk reconstruction

• B – GDA (also known as multinode cluster [MNC] balancing)

• V – Verification (used in the deduplication process)

• M – Fingerprint merge (used in the deduplication process)

• F – Archive data movement (active to archive)

• S – Summary vector checkpoint (used in the deduplication process)

• I – Data integrity

The processes listed in the State section of the system show performance report typically consume CPU, reducing the CPU available for handling backup and replication activity.
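When reading many report lines, a lookup table for the state letters listed above saves repeated reference checks. This minimal Python sketch assumes the State field is a run of the single-letter codes (for example, "CV"); that field format is an assumption, so non-letter characters are ignored and unknown letters are reported rather than guessed.

```python
STATE_CODES = {
    "C": "Cleaning",
    "D": "Disk reconstruction",
    "B": "GDA (MNC balancing)",
    "V": "Verification",
    "M": "Fingerprint merge",
    "F": "Archive data movement",
    "S": "Summary vector checkpoint",
    "I": "Data integrity",
}


def decode_state(field):
    """Translate a State field such as "CV" into process names."""
    return [STATE_CODES.get(ch, f"Unknown ({ch})") for ch in field if ch.isalpha()]


def cleaning_or_rebuild(field):
    """True if cleaning (C) or disk reconstruction (D) is in progress."""
    return any(ch in "CD" for ch in field)


print(decode_state("CV"))  # ['Cleaning', 'Verification']
```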


Throughput Monitoring


Besides watching disk utilization, you should monitor the rate at which data is being received and processed. These throughput statistics are measured at several points in the system to help analyze performance and identify bottlenecks.

If slow performance is happening in real time, you can also run the system show stats interval [interval in seconds] command. For example, system show stats interval 2 produces a new line of data every two seconds.

The system show stats command reports CPU activity and disk read/write amounts.

In the example report shown, you can see a high and steady amount of data inbound on the network interface, which indicates that the backup host is writing data. This is backup traffic and not replication traffic, because the Repl column reports no activity.

Low disk-write rates relative to steady inbound network activity are likely because many of the incoming data segments are duplicates of segments already stored on disk. The Data Domain system identifies duplicates in real time as they arrive and writes only the new segments it detects.
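The interpretation above compares two columns from one stats line. This minimal Python sketch expresses that comparison; the sample values are hypothetical, and the ratio is only a rough indicator, since disk writes likely also reflect local compression and metadata, so it is not the compression factor the system reports.

```python
def net_to_disk_ratio(net_in_mb_s, disk_write_mb_s):
    """Inbound network rate divided by disk write rate for one sample."""
    if disk_write_mb_s == 0:
        return float("inf")  # data arriving, nothing new written
    return net_in_mb_s / disk_write_mb_s


def is_backup_ingest(net_in_mb_s, repl_mb_s):
    """Steady inbound data with an idle Repl column points to backup traffic."""
    return net_in_mb_s > 0 and repl_mb_s == 0


sample = {"net_in": 400.0, "disk_write": 40.0, "repl": 0.0}  # hypothetical values
print(net_to_disk_ratio(sample["net_in"], sample["disk_write"]))  # 10.0
print(is_backup_ingest(sample["net_in"], sample["repl"]))         # True
```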


Tuning Solutions


If you experience system performance concerns, for example, you are exceeding your backup window or throughput is slower than expected, consider the following:

Check the Streams columns of the system show performance command to ensure that the system is not exceeding the recommended write and read stream counts. Look under rd (active read streams) and wr (active write streams) to determine the stream count, and compare the active stream count with the recommended number of streams supported for your system. If you are unsure about the recommended number of streams, contact Data Domain Support for assistance.


Check that CPU utilization (1 – process) is not unusually high. If CPU utilization is at or above 80%, the CPU may be underpowered for the load it is required to process.

Check the State output of the system show performance command. Confirm that no cleaning (C) or disk reconstruction (D) is in progress.

Check the output of the replication show performance all command to confirm that no replication is in progress. If there is no replication activity, the output reports zeros. Press Ctrl+C to stop the command. If replication occurring during data ingestion is causing slower-than-expected performance, consider separating these two activities in your backup schedule.

If CPU utilization is unusually high for an extended period and you cannot determine the cause, contact Data Domain Support for further assistance.

When identifying performance problems, document the time when poor performance was observed so that you know where to look in the system show performance output.
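The tuning checks above can be collected into one checklist so that none is skipped. This minimal Python sketch assumes the values have already been read from the system show performance and replication show performance all output; the Observation fields and the recommended stream counts are hypothetical inputs, not a Data Domain API.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Values read by hand from the performance and replication output."""
    read_streams: int         # rd column
    write_streams: int        # wr column
    max_read_streams: int     # recommended for the model (hypothetical input)
    max_write_streams: int
    cpu_pct: float
    state: str                # State letters, e.g. "C" or "CD"
    replication_active: bool  # replication output shows non-zero values


def tuning_findings(obs: Observation) -> list:
    """Return the checklist items that need attention."""
    findings = []
    if (obs.read_streams > obs.max_read_streams
            or obs.write_streams > obs.max_write_streams):
        findings.append("stream count exceeds the recommended value")
    if obs.cpu_pct >= 80:
        findings.append("CPU utilization at or above 80%")
    if "C" in obs.state or "D" in obs.state:
        findings.append("cleaning or disk reconstruction in progress")
    if obs.replication_active:
        findings.append("replication overlaps ingest; consider rescheduling")
    return findings


print(tuning_findings(Observation(4, 70, 30, 60, 85.0, "C", False)))
```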


Monitoring File System Space Usage


Introduction

This lesson covers how to monitor Data Domain file system space usage.

This lesson covers the following topics:

• Monitoring space usage

• File system summary

• Space usage

• Space consumption with capacity indicator

• Physical Capacity Measurement

• Daily written


Monitoring File System Space Usage


When a disk-based deduplication system such as a Data Domain system is used as the primary destination storage device for backups, it must be sized appropriately. Presuming the correctly sized system is installed, monitor usage to ensure that data growth does not exceed system capacity.

The factors affecting how fast data grows on a Data Domain system include:

• The size and number of datasets being backed up

An increase in the number of backups, or in the amount of data being backed up and retained, causes space usage to increase.

• The compressibility of data being backed up

Pre-compressed data formats do not compress or deduplicate as well as uncompressed files and thus increase the amount of space used on the system.

• The retention period specified in the backup software

The longer the retention period, the more space is required.

If any of these factors increases beyond the original sizing plan, your backup system could overrun its capacity.

There are several ways to monitor space usage on a Data Domain system to help prevent system-full conditions.


File System Summary


Data Management > File System > Summary displays current space usage and availability. It also provides an up-to-the-minute indication of the compression factor.

The Space Usage section shows two panes. The first pane shows the amount of disk space available, based on the last cleaning.

Size: The total amount of disk space available for data.

Used: The physical space used for compressed data. Warning messages go to the system log, and an email alert is generated, when usage reaches 90%, 95%, and 100%. At 100%, the Data Domain system accepts no more data from backup hosts.


Available: The total amount of space available for data storage. This number can

change because an internal index may expand as the Data Domain system fills

with data. The index expansion takes space from the Available amount.

Cleanable: The estimated amount of space that could be reclaimed if a cleaning

operation were run.
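Given a current Used value and an assumed constant growth rate, the arithmetic below estimates how many days remain before each alert threshold described for the Used field (90%, 95%, and 100%) is reached. It is a planning sketch only: it does not read anything from the system, the constant growth rate is an assumption, and the function name is hypothetical. Cleaning and changes in backup load make real usage nonlinear.

```python
import math
from typing import Dict

# Used levels at which the system logs a warning and sends an email alert.
ALERT_THRESHOLDS_PCT = (90, 95, 100)


def days_until_alerts(used_tb: float, size_tb: float,
                      growth_tb_per_day: float) -> Dict[int, float]:
    """Days until each alert threshold is reached at a constant growth rate.

    0.0 means the threshold has already been reached; math.inf means it is
    never reached because usage is not growing.
    """
    result: Dict[int, float] = {}
    for pct in ALERT_THRESHOLDS_PCT:
        limit_tb = size_tb * pct / 100
        if used_tb >= limit_tb:
            result[pct] = 0.0
        elif growth_tb_per_day <= 0:
            result[pct] = math.inf
        else:
            result[pct] = (limit_tb - used_tb) / growth_tb_per_day
    return result
```

For example, with 80 TB used of 100 TB and 1 TB/day of growth, the 90%, 95%, and 100% thresholds are 10, 15, and 20 days away. Because the system stops accepting backup data at 100%, the last figure is effectively the number of days of backups left.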

The bottom pane displays compression information:

• Pre-Compression: Logical size of the data written, before compression

• Post-Compression: Physical storage used after compression

• Global-Comp Factor: Pre-Compression/(Size after global compression)

• Local-Comp Factor: (Size after global compression)/Post-Compression

• Total-Comp Factor: Pre-Compression/Post-Compression

• Reduction %: [(Pre-Compression - Post-Compression)/Pre-Compression] × 100
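The formulas above can be checked with a short calculation. The class and field names are hypothetical, and any consistent unit (GiB, TiB) works. The sketch also shows that the total factor is the product of the global and local factors, because the size after global compression cancels out.

```python
from dataclasses import dataclass


@dataclass
class CompressionStats:
    pre_comp: float      # logical data written, before compression
    after_global: float  # size after global compression (deduplication)
    post_comp: float     # physical storage used after local compression

    @property
    def global_comp_factor(self) -> float:
        return self.pre_comp / self.after_global

    @property
    def local_comp_factor(self) -> float:
        return self.after_global / self.post_comp

    @property
    def total_comp_factor(self) -> float:
        # Equals global_comp_factor * local_comp_factor.
        return self.pre_comp / self.post_comp

    @property
    def reduction_pct(self) -> float:
        return (self.pre_comp - self.post_comp) / self.pre_comp * 100
```

With 100 units written, 20 units after global compression, and 10 units stored, the global factor is 5, the local factor is 2, the total factor is 10, and the reduction is 90%.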


Space Usage


Data Management > File System > Charts displays graphs depicting space

usage and consumption on the Data Domain system.

The Space Usage view contains a graph that displays a visual representation of

data usage for the system. The Date Range choices are one week, one month,

three months, one year and All. Custom date ranges can be entered.

The lines of the graph denote measurement for:

Pre-comp Used (blue): The total amount of data that backup servers have sent to the Data Domain system. This is the total uncompressed data that a backup server sees as stored on the Data Domain system. It is plotted against the Space Used (left) vertical axis of the graph.

Post-comp Used (red): The total amount of disk storage in use on the Data Domain system. It is plotted against the Space Used (left) vertical axis of the graph.

Comp Factor (green): The amount of compression (compression ratio) the Data Domain system has achieved on the data it received. It is plotted against the Compression Factor (right) vertical axis of the graph.


Consumption (Space Used)


The Consumption view contains a graph that displays space used over time in relation to total system capacity.

It displays Post-Comp in red, Comp Factor in green, Cleaning in yellow, and Data Movement in purple.

Data Movement refers to the amount of disk space that is moved to the archiving

storage area.

With the Capacity option disabled, as shown on the slide, the scale is adjusted to

present a clear view of space used.


This view is useful to see trends in space availability on the Data Domain system,

such as changes in space availability and compression in relation to cleaning

processes.


Consumption (Capacity)


The Consumption view with the Capacity option enabled, as shown on the slide,

displays the total amount of disk storage available on the Data Domain system.

The amount is shown with the Space Used (left) vertical axis of the graph.

Clicking the Capacity checkbox switches this line on and off. The scale now

displays Space Used relative to the total capacity of the system, with a blue

Capacity line indicating the storage limit.

This view also displays cleaning start and stop data points. This graph is set for

one week and displays one cleaning event. The cleaning schedule on this Data

Domain system is at the default of one day per week.


This view is useful to see trends in space availability on the Data Domain system,

such as changes in space availability and compression in relation to cleaning

processes.
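The Consumption graphs are meant to be read visually. If you have post-comp usage samples (for example, values read from the graph at regular intervals), a rough version of the same trend analysis is a least-squares line extrapolated to the capacity line, as sketched below. The linear model, the day-numbered samples, and the function names are assumptions for illustration. Because cleaning periodically reclaims space, real usage is not a straight line, so treat the projection as an early warning rather than a forecast.

```python
from typing import Optional, Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) samples of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("samples must cover more than one point in time")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def projected_full_day(days: Sequence[float], post_comp_used_tb: Sequence[float],
                       capacity_tb: float) -> Optional[float]:
    """Day on which the fitted trend meets the capacity line, or None if usage
    is flat or shrinking over the sampled period."""
    slope, intercept = linear_fit(days, post_comp_used_tb)
    if slope <= 0:
        return None
    return (capacity_tb - intercept) / slope
```

For samples of 10, 11, 12, and 13 TB on days 0 to 3 and a 20 TB capacity line, the fitted trend reaches capacity on day 10.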


Physical Capacity Measurement


Physical capacity measurement (PCM) provides space usage information for a

subset of storage space. From the System Manager, PCM provides space usage

information for MTrees. From the command line interface you can view space

usage information for MTrees, Tenants, Tenant Units, and pathsets.

For more information about using PCM from the command line, see the Dell EMC

Data Domain Operating System Command Reference Guide.

At the system level, shared data is counted only once. For each namespace that shares data, the report includes that shared data along with the namespace's own unique data.
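This accounting rule (shared data counted once for the system, but reported to every subset that shares it) can be illustrated with a toy model. This is not how PCM is implemented. The segment IDs, sizes, and function name are hypothetical. The example shows why per-subset figures can add up to more than the system total.

```python
from typing import Dict, Set, Tuple


def pcm_report(subsets: Dict[str, Set[str]],
               segment_size: Dict[str, int]) -> Tuple[Dict[str, Dict[str, int]], int]:
    """Toy physical-capacity accounting.

    subsets: subset name -> IDs of the segments it references (hypothetical)
    segment_size: segment ID -> physical bytes
    Returns per-subset unique/shared bytes and the system total, in which
    shared segments are counted only once.
    """
    report: Dict[str, Dict[str, int]] = {}
    for name, segments in subsets.items():
        # Segments referenced by any *other* subset.
        others: Set[str] = set()
        for other_name, other_segments in subsets.items():
            if other_name != name:
                others |= other_segments
        report[name] = {
            "unique": sum(segment_size[s] for s in segments - others),
            "shared": sum(segment_size[s] for s in segments & others),
        }
    # System level: each distinct segment is counted exactly once.
    all_segments: Set[str] = set()
    for segments in subsets.values():
        all_segments |= segments
    system_total = sum(segment_size[s] for s in all_segments)
    return report, system_total
```

For example, if `mtree_a` references segments s1 (10 bytes) and s2 (20 bytes), and `mtree_b` references s2 and s3 (30 bytes), the subsets report 30 and 50 bytes (80 in total), while the system total is only 60 because s2 is counted once.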

Physical Capacity Measurement can answer questions such as:

• How much physical space is each subset using?

• How much total compression is each subset reporting?

• How does physical space utilization for a subset grow and shrink over time?

• How can one tell whether a subset has reached its physical capacity quota?

• What proportion of the data is unique, and what proportion is shared with other subsets?

With IT-as-a-service (ITAAS), Physical Capacity Measurement can be used to

calculate chargeback details for internal customers, or billing details for third-party

customers sharing space.

Using physical capacity measurement, it is now possible to enforce data capacity

quotas for physical space use where previously only logical capacity could be

calculated. These types of measurements are essential for customer chargeback

and billing.

Through physical capacity measurement, IT management can view trends in physical storage, plan capacity needs, identify poorly compressing datasets, and identify accounts that might benefit from migrating to a different storage space for growth purposes.

The Data Domain System Manager can configure and run physical capacity

measurement operations at the MTree level only.

You add physical capacity measurement schedules in the Data Management >

MTree window by selecting an MTree and then clicking the Manage Schedules

button.

Click the plus, pencil, or X button to add, edit, or delete a schedule, respectively.

When a measurement job completes, the results are graphed and are viewed

under the selected MTree in the Space Usage tab.

The Data Domain Management Center version 1.4 and later is enhanced to

perform all physical capacity measurement operations except defining pathsets.


Daily Written


The Daily Written view contains a graph of the data written to the system each day, showing both pre-compression and post-compression amounts over time.

This view is useful for reviewing data ingestion and compression factor results over a selected duration, and for spotting trends in compression factor and ingestion rates.
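One way to act on the trends this view shows is to compute each day's compression factor from the daily pre- and post-compression amounts and flag days that fall below an expected value. The sketch below does this under stated assumptions: you supply the daily amounts, and `min_factor` is a hypothetical threshold you would choose from your own baseline. A sustained drop might point to a new source of already-compressed data, one of the growth factors described earlier in this module.

```python
from typing import List, Sequence


def daily_comp_factors(pre_comp: Sequence[float],
                       post_comp: Sequence[float]) -> List[float]:
    """Total compression factor for each day (pre-comp written / post-comp written)."""
    factors = []
    for pre, post in zip(pre_comp, post_comp):
        # A day with no physical data written has no meaningful ratio; use inf
        # so that it is never flagged as low.
        factors.append(pre / post if post > 0 else float("inf"))
    return factors


def low_compression_days(pre_comp: Sequence[float], post_comp: Sequence[float],
                         min_factor: float) -> List[int]:
    """Indexes of days whose compression factor fell below `min_factor`."""
    factors = daily_comp_factors(pre_comp, post_comp)
    return [day for day, factor in enumerate(factors) if factor < min_factor]
```

With 100 units written on each of three days, stored as 10, 50, and 20 units, the daily factors are 10, 2, and 5, and only the second day (index 1) falls below a threshold of 5.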

Global-Comp Factor refers to the compression of the files after deduplication.

Local-Comp Factor refers to the compression of the files as they are written to disk.

The default local compression algorithm is lz, which gives the best throughput. Data Domain recommends the lz option.


gzfast is a zip-style compression that uses less space for compressed data than lz, but about twice as many CPU cycles. gzfast is the recommended alternative for sites that want more compression at the cost of lower performance.

gz is a zip-style compression that uses the least amount of space for data storage: on average 10% to 20% less than lz, although some datasets compress even further. gz also uses the most CPU cycles, up to five times as many as lz.

The gz compression type is commonly used for nearline storage applications in

which performance requirements are low.

For more detailed information about these compression types, see the Data

Domain Operating System Administration Guide.
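The relative figures quoted above can be turned into a quick estimate when weighing gz against the default lz. The sketch encodes only the averages stated in this lesson: gz uses 10% to 20% less space than lz, and gzfast and gz use about two and up to five times the CPU cycles of lz. Actual results depend on the dataset, and the names are hypothetical.

```python
from typing import Tuple

# Relative figures quoted in this lesson; real results vary by dataset.
CPU_CYCLES_VS_LZ = {"lz": 1.0, "gzfast": 2.0, "gz": 5.0}  # gz: "up to" 5x, an upper bound
GZ_SPACE_SAVING_VS_LZ = (0.10, 0.20)                      # gz: 10% to 20% less space than lz


def gz_space_range(lz_post_comp_tb: float) -> Tuple[float, float]:
    """Expected (smallest, largest) physical usage with gz, given usage with lz."""
    low_saving, high_saving = GZ_SPACE_SAVING_VS_LZ
    return lz_post_comp_tb * (1 - high_saving), lz_post_comp_tb * (1 - low_saving)


def relative_cpu(algorithm: str) -> float:
    """CPU cycles relative to lz (lz = 1.0)."""
    return CPU_CYCLES_VS_LZ[algorithm]
```

For data that occupies 100 TB with lz, gz would be expected to occupy roughly 80 TB to 90 TB, at up to five times the CPU cost.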


File System Cleaning

Introduction

This lesson covers an introduction to file system cleaning and its operation.

This lesson covers the following topics:

• Process

• Benefits

• Executing process

• Scheduling

• Throttling

• Considerations and practices


File System Cleaning


When your backup application expires data, the Data Domain system marks the data for deletion. The data is not deleted immediately; it is removed during a cleaning operation. The file system remains available during cleaning for all normal operations, including backup (write) and restore (read).

Although cleaning uses a significant amount of system resources, cleaning is self-

throttling and gives up system resources in the presence of user traffic.

Depending on the amount of space the file system must clean, file system cleaning

can take from several hours to several days to complete.


Cleaning Process

Instructor Note: Important Points to Cover

Ensure that the following points are covered:

• Performance starts to drop off once usage rises above 75% of active tier capacity. At 90%, performance has already dropped off significantly. Alerts at multiple thresholds warn the customer that the system is nearing capacity. The actual amount of cleanable space is not known until that part of enumeration is complete, which depends on how the data is actually being aged.

-----------------------------------------End of Instructor Note----------------------------------------

Data invulnerability requires that data be written only into new, empty containers –

data that is already written in existing containers cannot be overwritten. This

requirement also applies to file system cleaning. During file system cleaning, the

system reclaims space that is used by expired data so you can use it for new data.

The example on this slide refers to dead and valid segments. Dead segments are segments in containers that the system no longer needs; for example, segments that were referenced only by a file that has since been deleted. Valid segments contain unexpired data that backup files still reference.

When files in a backup expire, the pointers to their segments are removed. Dead segments cannot be overwritten with new data, because this could put valid data at risk of corruption. Instead, valid segments are copied forward into free containers so that the remaining valid segments are grouped together. When the data is safe and reorganized, the original containers are returned to the pool of available disk space.
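The copy-forward process described above can be modeled in a few lines. This is a deliberately simplified sketch, not the Data Domain implementation. Containers are plain dictionaries of hypothetical segment IDs and sizes, the set of valid segments is given as input, and every container is cleaned, which a real system need not do. What the sketch does show is the key rule: existing containers are never modified. Valid segments are written into new containers, and the old containers are released only afterward.

```python
from typing import Dict, List, Set, Tuple

Container = Dict[str, int]  # hypothetical: segment ID -> size in bytes


def copy_forward_clean(containers: List[Container], valid: Set[str],
                       container_capacity: int) -> Tuple[List[Container], int]:
    """Copy valid segments into new containers.

    Returns (new containers, net number of containers freed). The input
    containers are only read, never modified, mirroring the rule that
    existing containers are not overwritten.
    """
    new_containers: List[Container] = []
    current: Container = {}
    used = 0
    for container in containers:
        for seg_id, size in container.items():
            if seg_id not in valid:
                continue  # dead segment: simply not copied forward
            if current and used + size > container_capacity:
                new_containers.append(current)  # current new container is full
                current, used = {}, 0
            current[seg_id] = size
            used += size
    if current:
        new_containers.append(current)
    # All old containers are released once copying is complete.
    freed = len(containers) - len(new_containers)
    return new_containers, freed
```

With two full containers `[{"a": 4, "b": 4}, {"c": 4, "d": 4}]`, a capacity of 8, and only `a` and `d` still valid, the valid segments fit into one new container and one container's worth of space is freed. Because the new containers are written before the old ones are released, the system needs free space to hold the copied-forward data while cleaning runs.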

Because the Data Domain system uses a log-structured file system, space that held deleted data is not reused in place and must be reclaimed. Reclamation runs automatically as part of file system cleaning.

Cleaning requires enough free capacity to store the cleanable containers until they

are verified.

During the cleaning process, a Data Domain system is available for all normal

operations, including accepting data from backup systems.

Cleaning does require a significant amount of system processing resources and

might take several hours, or under extreme circumstances days, to complete.

By default, cleaning applies a processing throttle of 50% when other operations are running, so that it shares system resources with them. The system administrator can adjust the throttle percentage up or down.


Running File System Cleaning


Using the Data Domain System Manager, go to Data Management > File System

> View Status of File System Services to see the Active Tier Cleaning Status. This

page displays the time when the last cleaning finished. To begin an immediate

cleaning session, select Start.

Access the Clean Schedule section by selecting Settings > Cleaning. This page displays the current cleaning schedule and throttle setting. In this example, you can see the default settings: cleaning every Tuesday at 6 a.m. with a 50% throttle. The schedule can be edited.


Cleaning Considerations


Schedule cleaning for times when system traffic is lowest. Cleaning is a file system

operation that impacts overall system performance.

Adjusting the cleaning throttle higher than 50% consumes more system resources

during the cleaning operation and can potentially slow down other system

processes.

Data Domain recommends running a cleaning operation after the first full backup to

a Data Domain system. The initial local compression on a full backup is generally a

factor of 1.5 to 2.5. An immediate cleaning operation gives extra compression by

another factor of 1.15 to 1.2 and reclaims a corresponding amount of disk space.
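The ranges quoted above can be combined with a little arithmetic. The sketch multiplies the low ends and the high ends of the two ranges. Treating the two factors as independent is an assumption, so the combined range is only indicative. The sketch also converts an extra compression factor into the fraction of disk space reclaimed. The names are hypothetical.

```python
from typing import Tuple

LOCAL_COMP_FIRST_FULL = (1.5, 2.5)    # typical local compression on the first full backup
EXTRA_FROM_FIRST_CLEAN = (1.15, 1.2)  # extra factor from cleaning right after it


def combined_factor_range() -> Tuple[float, float]:
    """Indicative (low, high) compression after the first full plus one cleaning."""
    return (LOCAL_COMP_FIRST_FULL[0] * EXTRA_FROM_FIRST_CLEAN[0],
            LOCAL_COMP_FIRST_FULL[1] * EXTRA_FROM_FIRST_CLEAN[1])


def size_after_clean(physical_tb: float, extra_factor: float) -> float:
    """Physical size once cleaning improves compression by `extra_factor`."""
    return physical_tb / extra_factor


def reclaimed_fraction(extra_factor: float) -> float:
    """Fraction of physical space freed when data shrinks by `extra_factor`."""
    return 1 - 1 / extra_factor
```

For example, a first full backup that occupies 10 TB on disk after local compression would shrink to about 10/1.2 ≈ 8.3 TB to 10/1.15 ≈ 8.7 TB after the first cleaning. That reclaims roughly 13% to 17% of the space, and the combined factor ranges from about 1.7 to 3.0.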

Any operation that shuts down the Data Domain file system or turns off the device (a system power-off, reboot, or filesys disable command) stops the clean operation. File system cleaning does not continue when the Data Domain system or file system restarts.

Encryption and gz compression require more time than normal to complete cleaning, as all existing data must be read, uncompressed, and compressed again.

Expiring files from your backup does not guarantee that space will be freed after

cleaning. If active pointers exist to any segments related to the data you expire,

such as snapshots or fast copies, those data segments are still considered valid

and remain on the system until all references to those segments are removed.
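The effect of remaining references can be shown with a small model. In the sketch below, files, snapshots, and fast copies are all treated as named referrers of segment IDs, and both the names and the IDs are hypothetical. A segment becomes reclaimable only when no remaining referrer points to it. This illustrates the rule stated above, not how the system tracks references internally.

```python
from typing import Dict, Iterable, Set


def reclaimable_after_expiry(referrers: Dict[str, Set[str]],
                             expired: Iterable[str]) -> Set[str]:
    """Segments that cleaning could free once the `expired` referrers are gone.

    referrers: referrer name (backup file, snapshot, fast copy) -> segment IDs
    """
    expired_names = set(expired)
    # Everything still pointed to by a non-expired referrer stays valid.
    still_referenced: Set[str] = set()
    for name, segments in referrers.items():
        if name not in expired_names:
            still_referenced |= segments
    # Candidate segments are those that the expired referrers used.
    released: Set[str] = set()
    for name in expired_names:
        released |= referrers[name]
    return released - still_referenced
```

Suppose `backup_mon` references s1 and s2, `backup_tue` references s2 and s3, and `snapshot_mon` references s1 and s2. Expiring `backup_mon` alone frees nothing, because the snapshot still references both of its segments. Expiring the snapshot as well frees only s1, because `backup_tue` still references s2.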

Daily file system cleaning is not recommended as frequent cleaning can lead to

increased file fragmentation. File fragmentation can result in poor data locality and,

among other things, higher-than-normal disk utilization.

If the retention period of your backups is short, you might be able to run cleaning

more often than once weekly. The more frequently the data expires, the more

frequently file system cleaning can operate. Work with Dell EMC Data Domain

Support to determine the best cleaning frequency under unusual circumstances.

When the cleaning operation finishes, a message is sent to the system log giving

the percentage of storage space that was reclaimed.


Configuring File System Cleaning Lab Exercise

Introduction

In this lab, you configure and run file system cleaning on a Data Domain system

using the System Manager and note the effect file system cleaning has on deleted

MTrees.

Upon completion of this lab, you will be able to:

Delete and attempt to re-create an MTree

Run and verify file system cleaning

Configuring File System Cleaning


Summary

Cover Page Title

Page 60 © Copyright 2019 Dell Inc.

Cover Page Title

© Copyright 2019 Dell Inc. Page 61

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5825)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1093)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (852)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (903)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (541)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (349)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (823)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (403)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- 2.6.1.2 Lab - Securing The Router For Administrative Access (Filled)Document38 pages2.6.1.2 Lab - Securing The Router For Administrative Access (Filled)true0soul80% (5)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- NFC Reader Design - How To Build Your Own ReaderDocument42 pagesNFC Reader Design - How To Build Your Own Readersalah.elmrabetNo ratings yet

- Module+8 - Data Domain Cloud TierDocument56 pagesModule+8 - Data Domain Cloud TierMurad SultanzadehNo ratings yet

- Module+7 - Data Domain Virtual Tape LibraryDocument64 pagesModule+7 - Data Domain Virtual Tape LibraryMurad SultanzadehNo ratings yet

- Module+12 - Data Domain System AdministrationDocument4 pagesModule+12 - Data Domain System AdministrationMurad SultanzadehNo ratings yet

- Module+9 - Data SecurityDocument33 pagesModule+9 - Data SecurityMurad SultanzadehNo ratings yet

- Vostro 5510 LaptopDocument9 pagesVostro 5510 LaptopanasqumsiehNo ratings yet

- User's ManualDocument50 pagesUser's ManualbukanaqNo ratings yet

- Additional Info: Version: 6.38 Build 2 Retail + PortableDocument6 pagesAdditional Info: Version: 6.38 Build 2 Retail + Portabledownload fileNo ratings yet

- Azure Mobile Services BattlecardDocument6 pagesAzure Mobile Services BattlecardSergeyNo ratings yet

- Network Access Control Quiz3 PDFDocument2 pagesNetwork Access Control Quiz3 PDFDaljeet SinghNo ratings yet

- Eos MCQDocument189 pagesEos MCQshruti patilNo ratings yet

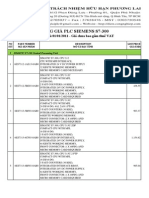

- Bang Gia PLC Siemens s7 300Document11 pagesBang Gia PLC Siemens s7 300916153No ratings yet

- PM Debug InfoDocument361 pagesPM Debug InfoEduardo kriguerNo ratings yet

- Arista ConfigGuideDocument2,242 pagesArista ConfigGuidesgourisNo ratings yet

- Trademarks: Mainboard User's ManualDocument39 pagesTrademarks: Mainboard User's Manualhenry barbozaNo ratings yet

- Transactions and Concurrency ControlDocument7 pagesTransactions and Concurrency Controlbhavesh agrawal100% (1)

- Virtual Machine MigrationDocument10 pagesVirtual Machine Migrationnitin goswamiNo ratings yet

- plt-04029 b.3 - Hid Biometric Manager Administration GuideDocument124 pagesplt-04029 b.3 - Hid Biometric Manager Administration GuidePablo OliveiraNo ratings yet

- PricelistDocument5 pagesPricelistParteep JangraNo ratings yet

- Comenzi LinuxDocument7 pagesComenzi LinuxleulgeorgeNo ratings yet

- Computer Network Assignment SolutionDocument6 pagesComputer Network Assignment SolutionpriyanksamdaniNo ratings yet

- Manual Digital SignatureDocument16 pagesManual Digital SignatureSuraj OjhaNo ratings yet

- Canteen Food Ordering & Management SystemDocument4 pagesCanteen Food Ordering & Management SystemHASSAN INAYATNo ratings yet

- Tenda Wireless Router - User Guide EnglishDocument106 pagesTenda Wireless Router - User Guide EnglishAdrian MGNo ratings yet

- Traing On HadoopDocument123 pagesTraing On HadoopShubhamNo ratings yet

- Used CPU's InquiryDocument8 pagesUsed CPU's InquiryRaresNo ratings yet

- C# - If A Folder Does Not Exist, Create It - Stack OverflowDocument11 pagesC# - If A Folder Does Not Exist, Create It - Stack Overflowjoao WestwoodNo ratings yet

- Panelview 5510 Panelview 5310 Panelview Plus 7 Performance Panelview Plus 7 Standard Mobileview Panelview 800 Panelview Plus 6 Panelview Plus 6 CompactDocument2 pagesPanelview 5510 Panelview 5310 Panelview Plus 7 Performance Panelview Plus 7 Standard Mobileview Panelview 800 Panelview Plus 6 Panelview Plus 6 CompactakbarmulangathNo ratings yet

- Experiment No: 8: Advanced System Security & Digital Forensics Lab Manual Sem 7 DlocDocument18 pagesExperiment No: 8: Advanced System Security & Digital Forensics Lab Manual Sem 7 DlocRAHUL GUPTANo ratings yet

- GP66 Leopard 11UH (20220119)Document17 pagesGP66 Leopard 11UH (20220119)Alex RomNo ratings yet

- Cisc vs. RiscDocument53 pagesCisc vs. RiscnikitaNo ratings yet

- IoT (15CS81) Module 5 Arduino UnoDocument30 pagesIoT (15CS81) Module 5 Arduino UnoRijo Jackson TomNo ratings yet

- Lenovo Legion5 17ach6 82k00016rmDocument2 pagesLenovo Legion5 17ach6 82k00016rmoanaNo ratings yet