Professional Documents

Culture Documents

Analysis of Student Academics Performance Using Hadoop: ISSN: 2454-132X Impact Factor: 4.295

Analysis of Student Academics Performance Using Hadoop: ISSN: 2454-132X Impact Factor: 4.295

Uploaded by

Vijay PatilOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Analysis of Student Academics Performance Using Hadoop: ISSN: 2454-132X Impact Factor: 4.295

Analysis of Student Academics Performance Using Hadoop: ISSN: 2454-132X Impact Factor: 4.295

Uploaded by

Vijay PatilCopyright:

Available Formats

Baliarsingh Diptimayee et.

al; International Journal of Advance Research, Ideas and Innovations in Technology

ISSN: 2454-132X

Impact factor: 4.295

(Volume 4, Issue 2)

Available online at: www.ijariit.com

Analysis of student academics performance using Hadoop

Diptimayee Baliarsingh Samiksha Hemant Parab Vijay N. Patil

baliarsinghdiptimayee@gmail.com samikshaprb@gmail.com vijay.patil.karad@gmail.com

Bharati Vidyapeeth College of Bharati Vidyapeeth College of Bharati Vidyapeeth College of

Engineering, Mumbai, Maharashtra Engineering, Mumbai, Maharashtra Engineering, Mumbai, Maharashtra

ABSTRACT

In recent years the amount of data generated in the educational sector is growing rapidly. In order to gain deeper insights from

the available data and extract useful knowledge to support decision making and improve the education service efficient storage

management and fast processing analytics is needed.

Academic data of a student helps institute to measure their progress. Students facing severe academic challenges are often

recognized too late. Analytics play a critical role in performing a thorough analysis of student and learning data to make an

informed decision. Big Data solution enables to analyze a wider variety of data sources and data types which improves the

accuracy of predictions. Hadoop platforms provide highly scalable platforms and can store a much greater volume of data at

lower cost.

The purpose of the proposed Project is to help in identifying “at risk” students who are not progressing towards graduation early

in order to get them back on track. The cause of lack of adequate progression can be identified and addressed. The system

proposed will be helpful for educational decision-makers to reduce the failure rate among students. The implementation is done

in Hadoop framework. The PAMAE algorithm is implemented for analyzing student’s academic data.

Keywords: Big data analytics, Educational data mining, Hadoop, MapReduce, HDFS, Clustering, Prediction.

1. INTRODUCTION

The amount of data generated in recent years in the educational sector is growing rapidly. It is required for institutes to extract

knowledge from huge amount of data collected for better decision making. Data Mining is defined as extracting information or

mining knowledge from huge sets of data. Data mining in the field of educational environment is known as Educational Data Mining.

Educational Data Mining (EDM) is defined on site http://educationaldatamining.org/ as: “Educational Data Mining is an emerging

discipline, concerned with developing methods for exploring the unique and increasingly large-scale data that come from

educational settings and using those methods to better understand students, and the settings which they learn in.”

A student’s performance is measured by how well they perform academically. In the proposed system, academically weak students

will be identified and the faculty can decide on necessary steps to be taken to help those students so that by developing proper plans

or making a change in their plans they can improve their skills. One of the most important responsibilities of educational institution

is to provide quality education. Quality education means that education is produced to students in an efficient manner so that they

learn without any problem. For this purpose, quality education includes features like methodology of teaching, continuous

evaluation, categorization of the student into similar type, so that students have similar objectives, educational background etc.

In the proposed system Hadoop framework is used. Hadoop is an open-source framework that allows to store and process big data

in a distributed environment across clusters of computers using simple programming models. Since student data generated can be

in petabytes it is considered as big datasets. In big data, traditional data mining algorithm is translated to MapReduce algorithms to

run them on Hadoop clusters. In a distributed computing environment, a Hadoop cluster is a special type of cluster used specifically

for storing and analyzing huge amounts of data. Hadoop MapReduce is a software framework which processes vast amounts of data

in-parallel on large clusters of commodity hardware in a reliable, fault-tolerant manner. The MapReduce algorithm contains two

important and distinct tasks, namely Map and Reduce. Map phase takes a set of data and converts it into another set of data, where

© 2018, www.IJARIIT.com All Rights Reserved Page | 2420

Baliarsingh Diptimayee et.al; International Journal of Advance Research, Ideas and Innovations in Technology

individual elements are broken down into tuples (key/value pairs). Reduce phase takes the output from Map phase as an input and

combines those data tuples into a smaller set of tuples.

2. LITERATURE REVIEW

A number of literature are taken into account.

Midhun Mohan M G & Siju K Augustin (2015) [1] this paper proposes a new approach called Learning Analytics and Predictive

analytics to identify academically at-risk students and to predict students learning outcomes in educational institutions. In this paper

they take the student data which is clustered using k-means algorithm and prediction of marks is done using multiple linear

regression.

Dr. N. Tajunisha, M. Anjali (2015) [2] this paper proposes a system that is useful in identifying weak students who are likely to

perform poorly in their studies. This paper identifies that in using MapReduce accuracy of the classification is increased than when

it is not. Time complexity of using MapReduce is also reduced. The proposed system which uses MapReduce can be used to process

big data too.

Hwanjun Song, Jae-Gil Lee & Wook-Shin Han (2017) [3] this paper proposes an algorithm Parallel k-Medoids Clustering with High

Accuracy and Efficiency (PAMAE). PAMAE significantly outperforms other algorithms like GREEDI I n terms of accuracy. In

terms of efficiency, PAMAE generates significantly lower clustering error as compared to other algorithms like CLARA-MR.

3. PROPOSED SYSTEM

Fig-1: System Architecture

3.1: Hadoop framework

Hadoop is an open-source software framework which is used to store huge amount of data. Hadoop provides background for running

applications on clusters for parallel processing on commodity hardware. It provides massive storage for data and enormous processing

power. Hadoop is composed of four core components—Hadoop Common, Hadoop Distributed File System (HDFS), MapReduce and

YARN. Hadoop framework lowers the risk of huge system failure and unexpected data loss. Hadoop can be easily scaled up from a

single server to thousands of hardware machines, each of which offers local computation and storage.

3.2: MapReduce

MapReduce is a software framework which provides ease of writing software applications whose function is to process a huge amount

of data on a number of clusters of DataNodes. The data can be structured or unstructured form. The term MapReduce is actually a

combination of two phases-Map phase and Reduce phase. The Map phase is designed for sorting and filtering the input data and

Reduce phase which is designed for summing up the data. The MapReduce framework operates exclusively on <key, value> pairs.

Each map task reads the input as a set of (key, value) pairs and produces a transformed set of (key, value) pairs as the output. The

framework shuffles and sorts outputs of the map tasks, sending the intermediate (key, value) pairs to reduce task, which groups them

into final results.

3.3: HDFS

HDFS stands for Hadoop Distributed File System, it provides data storage across Hadoop clusters. The data stored in HDFS can be

accessed across all the nodes in a Hadoop cluster. It connects together the file systems on many local nodes to create a single file

system. The datasets need to be processed and uploaded to Hadoop Distributed File System (HDFS). It can be used by various nodes

with Mappers and Reducers in Hadoop clusters. HDFS uses two types of nodes-NameNode and DataNode. NameNode acts as the

master node. It is responsible to manage the file system metadata, mapping of blocks to DataNodes. DataNodes act as slave nodes.

Datanode store the actual data. The DataNodes takes care of reading and writes operation with the file system.

Parallel k-Medoids Clustering with High Accuracy and Efficiency (PAMAE) algorithm

© 2018, www.IJARIIT.com All Rights Reserved Page | 2421

Baliarsingh Diptimayee et.al; International Journal of Advance Research, Ideas and Innovations in Technology

Phase I: PAMAE starts by simultaneously performing random sampling with replacement. That is, it creates m random samples

whose size is n. Then, a k-medoids algorithm A is performed in parallel against each sample. We note that any existing algorithm of

finding medoids globally can be a candidate for A. Among them sets of medoids, each of which was obtained from each sample,

PAMAE searches for the set of medoids that minimizes the clustering error. This set of medoids, denoted by {θˆ1, . . . , θˆk}, is

regarded as the best set of seeds and is fed to Phase II.

Fig-2.1: Phase I of PAMAE algorithm

Phase II: Using the seeds derived in Phase I, PAMAE simultaneously partitions the entire data set just like the assignment step of the

k-means algorithm. That is, it assigns each of non-medoid objects to the closest medoid. Then, the algorithm updates the medoid of

each partition (i.e., cluster) by choosing the most central object, similar to the update step of the k-means algorithm. Last, the entire

set of data objects is partitioned into clusters if needed.

Fig-2.2: Phase II of PAMAE algorithm

4. CONCLUSION

The user can identify academically at-risk students in educational institutes. This study will help to the students and the teachers to

improve the division of the student. This study will also work to identify those students which needed special attention to reducing

the failure rate and taking appropriate action for the next semester examination. Hadoop MapReduce framework provides parallel

distributed processing and reliable data storage for large volumes of data files.

5. ACKNOWLEDGEMENT

We would like to express our special thanks of gratitude to our guide Prof Vijay Patil, our Project Co-coordinator Prof S. M. Mhatre,

HOD Prof. S. M. Patil, and our Principal Dr. M. Z. Shaikh for their guidance. We also thank Bharati Vidyapeeth College of

Engineering for providing us an opportunity to embark on this project.

6. REFERENCES

[1] Midhun Mohan M G & Siju K Augustin, “A bigdata approach for classification and prediction of student result using

MapReduce,” 2015 IEEE Recent Advances in Intelligent Computational Systems (RAICS).

[2] Dr. N. Tajunisha, M. Anjali “Predicting student performance using MapReduce”, International Journal of Engineering and

Computer Science ISSN:2319-7242 Volume 4 Issue 1 January 2015

[3] Hwanjun Song, Jae-Gil Lee & Wook-Shin Han (2017), “PAMAE: Parallel k-Medoids Clustering with High Accuracy and

Efficiency”, KDD 2017 Research Paper.

© 2018, www.IJARIIT.com All Rights Reserved Page | 2422

You might also like

- Big Data AnalyticsDocument12 pagesBig Data AnalyticsGautam PrajapatiNo ratings yet

- Splunk-7 2 1-IndexerDocument446 pagesSplunk-7 2 1-IndexerRaghavNo ratings yet

- Improved Frequent Pattern Mining For Educational DataDocument8 pagesImproved Frequent Pattern Mining For Educational DataManjari UshaNo ratings yet

- Analysis of Student Performance Based On Classification and Mapreduce Approach in BigdataDocument8 pagesAnalysis of Student Performance Based On Classification and Mapreduce Approach in BigdataBHAKTI YOGANo ratings yet

- Data Warehouse On Hadoop Platform For Processing of Big Educational DataDocument4 pagesData Warehouse On Hadoop Platform For Processing of Big Educational DataPiyuSh AngRaNo ratings yet

- Data Mining: Analysis of Student Database Using Classification TechniquesDocument7 pagesData Mining: Analysis of Student Database Using Classification TechniquesNicholasRaheNo ratings yet

- Extending The Student's Performance Via K Means and Blended LearningDocument4 pagesExtending The Student's Performance Via K Means and Blended LearningIJEACS UKNo ratings yet

- 1 s2.0 S1877050915019018 MainDocument9 pages1 s2.0 S1877050915019018 MainAnna JonesNo ratings yet

- Literature Review On Educational Data MiningDocument5 pagesLiterature Review On Educational Data Miningafdtynfke100% (1)

- Cctedfrom K Means To Rough Set Theory Using Map Reducing TechniquesupdatedDocument10 pagesCctedfrom K Means To Rough Set Theory Using Map Reducing TechniquesupdatedUma MaheshNo ratings yet

- Prediction of Student Performance Using Linear RegressionDocument5 pagesPrediction of Student Performance Using Linear RegressionWisnuarynNo ratings yet

- Classifying Students Performance Using Gradient Boosting Algorithm TechniqueDocument7 pagesClassifying Students Performance Using Gradient Boosting Algorithm Techniqueayesha awanNo ratings yet

- Badr 2016Document10 pagesBadr 2016teelk100No ratings yet

- Article2013 MAC201310050Document6 pagesArticle2013 MAC201310050Jeena MathewNo ratings yet

- Literature ReviewDocument11 pagesLiterature ReviewM. AwaisNo ratings yet

- A Survey On The Result Based Analysis of Student Performance Using Data Mining TechniquesDocument5 pagesA Survey On The Result Based Analysis of Student Performance Using Data Mining TechniquesIIR indiaNo ratings yet

- E10380585S19Document6 pagesE10380585S19Akram PashaNo ratings yet

- Big Data Analytics Litrature ReviewDocument7 pagesBig Data Analytics Litrature ReviewDejene DagimeNo ratings yet

- Mapreduce: Simplified Data Analysis of Big Data: SciencedirectDocument9 pagesMapreduce: Simplified Data Analysis of Big Data: Sciencedirectmfs coreNo ratings yet

- Student Performance Analysis System Using Data Mining IJERTCONV5IS01025Document3 pagesStudent Performance Analysis System Using Data Mining IJERTCONV5IS01025abduNo ratings yet

- SSRN Id3243704Document6 pagesSSRN Id3243704deannete05No ratings yet

- Regression Analysis of Student Academic Performance Using Deep LearningDocument16 pagesRegression Analysis of Student Academic Performance Using Deep LearningSergio AlumendaNo ratings yet

- University Admission PredictionDocument18 pagesUniversity Admission PredictionRani LakshmanNo ratings yet

- Irjet V7i2688 PDFDocument4 pagesIrjet V7i2688 PDFdileepvk1978No ratings yet

- Proposition of An Employability PredictionDocument14 pagesProposition of An Employability PredictionLoganathan RamasamyNo ratings yet

- Performance Enhancement Using Combinatorial Approach of Classification and Clustering in Machine LearningDocument8 pagesPerformance Enhancement Using Combinatorial Approach of Classification and Clustering in Machine LearningInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Prediction ClusteringDocument16 pagesPrediction ClusteringisaacNo ratings yet

- A Study On Prediction Techniques of Data Mining in Educational DomainDocument4 pagesA Study On Prediction Techniques of Data Mining in Educational DomainijcnesNo ratings yet

- Educational Data Mining: A State-Of-The-Art Survey On Tools and Techniques Used in EDMDocument7 pagesEducational Data Mining: A State-Of-The-Art Survey On Tools and Techniques Used in EDMEditor IJCAITNo ratings yet

- Article 3Document4 pagesArticle 3Bam NoiceNo ratings yet

- Data Mining Application On StudentDocument1 pageData Mining Application On StudentdrvipinlaltNo ratings yet

- Reasearch PperDocument13 pagesReasearch PperDheeraj PranavNo ratings yet

- An Evaluation of Ensemble and Non-Ensemble Data Mining Techniques in Predicting Students' Performance On E-Learning DatasetDocument7 pagesAn Evaluation of Ensemble and Non-Ensemble Data Mining Techniques in Predicting Students' Performance On E-Learning DatasetInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- ICETIC Full Paper Submission3 JBuschDocument7 pagesICETIC Full Paper Submission3 JBuschArnob TanjimNo ratings yet

- Predicting Students Performance Using Data Mining Technique With Rough Set Theory ConceptsDocument7 pagesPredicting Students Performance Using Data Mining Technique With Rough Set Theory Conceptspro_naveenNo ratings yet

- Educational Data Mining For Student Placement Prediction Using Machine Learning AlgorithmsDocument4 pagesEducational Data Mining For Student Placement Prediction Using Machine Learning AlgorithmsshivuNo ratings yet

- Comparative Study of Classification Algorithms Based On Mapreduce ModelDocument4 pagesComparative Study of Classification Algorithms Based On Mapreduce Modelpradeepkraj22No ratings yet

- A Survey On Data Mining Algorithms On Apache Hadoop PlatformDocument3 pagesA Survey On Data Mining Algorithms On Apache Hadoop PlatformlakhwinderNo ratings yet

- Data Mining Research Papers Free Download 2014Document6 pagesData Mining Research Papers Free Download 2014egvhzwcd100% (1)

- Educational Data Mining and Analysis of Students' Academic Performance Using WEKADocument13 pagesEducational Data Mining and Analysis of Students' Academic Performance Using WEKAAgus ZalchNo ratings yet

- Data Analytics Data Analytics in HigherDocument13 pagesData Analytics Data Analytics in HighermukherjeesNo ratings yet

- Na BIC20122Document8 pagesNa BIC20122jefferyleclercNo ratings yet

- Final Survey Paper 17-9-13Document5 pagesFinal Survey Paper 17-9-13Yathish143No ratings yet

- Research Papers in Data Mining 2014Document6 pagesResearch Papers in Data Mining 2014linudyt0lov2100% (1)

- Data AnalyticsDocument191 pagesData AnalyticsKalpit SahooNo ratings yet

- Information Sciences: Junbo Zhang, Tianrui Li, Da Ruan, Zizhe Gao, Chengbing ZhaoDocument15 pagesInformation Sciences: Junbo Zhang, Tianrui Li, Da Ruan, Zizhe Gao, Chengbing ZhaoTapan ChowdhuryNo ratings yet

- Using ID3 Decision Tree Algorithm To The Student Grade Analysis and PredictionDocument4 pagesUsing ID3 Decision Tree Algorithm To The Student Grade Analysis and PredictionEditor IJTSRDNo ratings yet

- Student's Placement Eligibility Prediction Using Fuzzy ApproachDocument5 pagesStudent's Placement Eligibility Prediction Using Fuzzy ApproachInternational Journal of Engineering and TechniquesNo ratings yet

- (IJCST-V5I6P21) :babasaheb .S. Satpute, Dr. Raghav YadavDocument4 pages(IJCST-V5I6P21) :babasaheb .S. Satpute, Dr. Raghav YadavEighthSenseGroupNo ratings yet

- Expert System For Student Placement PredictionDocument5 pagesExpert System For Student Placement PredictionATSNo ratings yet

- Prediction of Higher Education AdmissibilityDocument7 pagesPrediction of Higher Education AdmissibilityAbiy MulugetaNo ratings yet

- Predictive Analytics For Big Data ProcessingDocument3 pagesPredictive Analytics For Big Data ProcessingInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Hybrid Machine Learning Algorithms For PDocument10 pagesHybrid Machine Learning Algorithms For PNicholasRaheNo ratings yet

- Assessing Naive Bayes and Support Vector Machine Performance in Sentiment Classification On A Big Data PlatformDocument7 pagesAssessing Naive Bayes and Support Vector Machine Performance in Sentiment Classification On A Big Data PlatformIAES IJAINo ratings yet

- Dissertation Report On Data MiningDocument8 pagesDissertation Report On Data MiningHelpWriteMyPaperCanada100% (1)

- The Machine Learning: The Method of Artificial IntelligenceDocument4 pagesThe Machine Learning: The Method of Artificial Intelligencearif arifNo ratings yet

- Performance Evaluation of Feature Selection Algorithms in Educational Data MiningDocument9 pagesPerformance Evaluation of Feature Selection Algorithms in Educational Data MiningIIR indiaNo ratings yet

- Cyber Cafe Management System DEEPAK SHINDEDocument36 pagesCyber Cafe Management System DEEPAK SHINDEMAYURESH KASARNo ratings yet

- Scalable Machine-Learning Algorithms For Big Data Analytics: A Comprehensive ReviewDocument21 pagesScalable Machine-Learning Algorithms For Big Data Analytics: A Comprehensive ReviewvikasbhowateNo ratings yet

- Machine Learning Algorithms for Data Scientists: An OverviewFrom EverandMachine Learning Algorithms for Data Scientists: An OverviewNo ratings yet

- Bre 2018Document24 pagesBre 2018Vijay PatilNo ratings yet

- FiveSemester IF PDFDocument77 pagesFiveSemester IF PDFVijay PatilNo ratings yet

- Cover Letter PDFDocument1 pageCover Letter PDFVijay PatilNo ratings yet

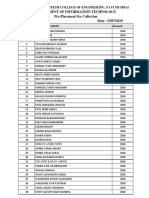

- Pre-Placement Fee Collection Department of Information TechnologyDocument1 pagePre-Placement Fee Collection Department of Information TechnologyVijay PatilNo ratings yet

- Websecurity 091201224753 Phpapp02 PDFDocument44 pagesWebsecurity 091201224753 Phpapp02 PDFVijay PatilNo ratings yet

- WEB SecurityDocument44 pagesWEB SecurityVijay PatilNo ratings yet

- Report 2Document33 pagesReport 2AdarshaNo ratings yet

- Introduction To Data Mining, 2 Edition: by Tan, Steinbach, Karpatne, KumarDocument95 pagesIntroduction To Data Mining, 2 Edition: by Tan, Steinbach, Karpatne, KumarsunilmeNo ratings yet

- QualityStage - Investigate Stage Intro - PR3 Systems BlogDocument6 pagesQualityStage - Investigate Stage Intro - PR3 Systems BlogMuraliKrishnaNo ratings yet

- Chap 06Document23 pagesChap 06anayaNo ratings yet

- Swathi Parupalli - OBIEEDocument10 pagesSwathi Parupalli - OBIEEMohamed AbrarNo ratings yet

- Learn SQL Quickly Using 30 ScenariosDocument107 pagesLearn SQL Quickly Using 30 ScenariosDrum CodeNo ratings yet

- Restrict References PragmaDocument2 pagesRestrict References PragmabskrbvkrNo ratings yet

- Redaction of PDF Files Using Adobe Acrobat Professional XDocument13 pagesRedaction of PDF Files Using Adobe Acrobat Professional XjimscotchdaleNo ratings yet

- Rohit Ranan CV UpdatedDocument3 pagesRohit Ranan CV UpdatedUjjwal TarwayNo ratings yet

- Access 1581305305Document24 pagesAccess 1581305305fake2222rdNo ratings yet

- ADO LectureDocument57 pagesADO LectureNikolay ChankovNo ratings yet

- Big DataDocument15 pagesBig DataVISHNUNATH MSNo ratings yet

- Oracle Business Intelligence Foundation Suite 11g Essentials Exam Study GuideDocument27 pagesOracle Business Intelligence Foundation Suite 11g Essentials Exam Study Guidekaushik4endNo ratings yet

- Imc 451 Quiz Test ExamDocument13 pagesImc 451 Quiz Test ExamLopee RahmanNo ratings yet

- SyllabusDocument2 pagesSyllabusnsavi16eduNo ratings yet

- Sap Ad-Hoc QueryDocument54 pagesSap Ad-Hoc Queryapi-3852248100% (7)

- Programming in Oracle With PL/SQLDocument26 pagesProgramming in Oracle With PL/SQLhshaiNo ratings yet

- Database Design Theory: Introduction To Databases CSCC43 Winter 2011 Ryan JohnsonDocument10 pagesDatabase Design Theory: Introduction To Databases CSCC43 Winter 2011 Ryan JohnsonPritam GuptaNo ratings yet

- Sample ThesisDocument40 pagesSample ThesisShehreen SyedNo ratings yet

- LinqDocument10 pagesLinqIdelfonzo VelásquezNo ratings yet

- How To Use EWM Product Master Fields and Enhancement - Brightwork - SAP PlanningDocument4 pagesHow To Use EWM Product Master Fields and Enhancement - Brightwork - SAP PlanningS BanerjeeNo ratings yet

- Lab Plan 2Document16 pagesLab Plan 2Mariam ChuhdryNo ratings yet

- Oracle Day 2Document8 pagesOracle Day 2hrudayNo ratings yet

- Storage AccountDocument6 pagesStorage AccountmicuNo ratings yet

- Unit1: Database Update With Open SQLDocument11 pagesUnit1: Database Update With Open SQL3vilcatNo ratings yet

- Data Integration Overview May 2020Document37 pagesData Integration Overview May 2020zaymounNo ratings yet

- Bahasa Inggris Tugas 2Document7 pagesBahasa Inggris Tugas 2kimbiofor.pkuNo ratings yet

- Ora4sap Database Update.2021 07Document16 pagesOra4sap Database Update.2021 07Mohamed AfeilalNo ratings yet