
Submitted to Proceedings of DETC 2000

2000 ASME Design Engineering Technical Conferences

September 10 - 14, 2000, Baltimore, Maryland

DETC2000/RSAFP-14478

SCENARIO-BASED FMEA: A LIFE CYCLE COST PERSPECTIVE

Steven Kmenta and Kosuke Ishii

Design Division, Mechanical Engineering Dept.

Stanford University, Stanford, CA 94305-4022

kmenta@stanford.edu, ishii@cdr.stanford.edu

Copyright 2000 by ASME

ABSTRACT

Failure Modes and Effects Analysis (FMEA) is a method to identify and prioritize potential failures of a product or process. The traditional FMEA uses three factors, Occurrence, Severity, and Detection, to determine the Risk Priority Number (RPN). This paper addresses two major problems with the conventional FMEA approach: 1) The Detection index does not accurately measure contribution to risk, and 2) The RPN is an inconsistent risk-prioritization technique.

The authors recommend two deployment strategies to address these shortcomings: 1) Organize the FMEA around failure scenarios rather than failure modes, and 2) Evaluate risk using probability and cost. The proposed approach uses consistent and meaningful risk evaluation criteria to facilitate life cycle cost-based decisions.

KEYWORDS: FMEA, FMECA, Risk Priority Number (RPN), reliability, risk management

1. INTRODUCTION

While FMEA is considered a tool to help improve reliability, it can also guide improvements to the serviceability and diagnosability of a system (Di Marco et al., 1995; Lee, 1999). However, product development teams must balance risk reduction strategies (improved reliability, serviceability and diagnostics) with cost.

Companies are aware of warranty costs associated with product failures. Additionally, many companies now offer long-term service contracts, making the manufacturer responsible for costs formerly borne by the customer. Now more than ever, companies need to make cost-driven decisions when compromising between the risk of failure and the cost of abatement. The Risk Priority Number (RPN), used to evaluate risk in FMEA, is not sufficient for making cost-driven decisions. At best, the RPN provides a qualitative assessment of risk. In addition, components of the RPN (Severity, Detection, and Occurrence) have inconsistent definitions, resulting in questionable risk priorities. This paper introduces a scenario-based FMEA to delineate and evaluate risk events more accurately.

Scenario-based FMEA is an FMEA organized around undesired cause–effect chains of events rather than failure modes. Scenario-based FMEA uses probability and cost to evaluate the risk of failure.

The purpose of scenario-based FMEA is to improve the representation of failures, and to evaluate these failures with consistent and meaningful metrics. This approach facilitates economic decision-making about the system design. Table 1 shows a rough comparison between traditional FMEA, serviceability design, and scenario-based FMEA.

Table 1 Comparison of risk-reduction techniques

| method | strategy | failure probability | cost of failure | product cost | total life cost |
|---|---|---|---|---|---|
| traditional FMEA | ↑ reliability | ↓ | same | ↑ | ? |
| serviceability design | ↓ service cost | same | ↓ | ↑ | ? |
| scenario-based FMEA | ↓ life-cycle cost (failure cost and product cost) | cost-based decision | cost-based decision | cost-based decision | ↓ |

Traditional FMEA is usually focused on improving product reliability, often at the expense of product cost. Serviceability design reduces the cost of the failure (if failure does occur) but generally adds to product cost through design changes. Scenario-based FMEA can be used to weigh the expected cost of the failure against the estimated cost of the solution strategy.

The next section covers definitions of the Risk Priority Number. Section 3 discusses shortcomings of traditional FMEA and the RPN. Section 4 compares scenario-based FMEA to traditional FMEA using a hair dryer and video projector as illustrative examples. Section 5 concludes with advantages of scenario-based FMEA and lists promising opportunities for future research.

2. INTRODUCTION TO FMEA

FMEA is a method to help identify and rank potential failures of a design or a process. Table 2 outlines the three major phases of the FMEA.

Table 2 Three major categories of FMEA Tasks

| FMEA Task | Result |
|---|---|
| Identify Failures | Describe failures: Causes → Failure Modes → Effects |
| Prioritize Failures | Assess Risk Priority Numbers (RPN): RPN = failure occurrence × effects severity × detection difficulty |
| Reduce Risk | Reduce risk through: reliability, test plans, manufacturing changes, inspection, etc. |

The FMEA team identifies, evaluates, and prioritizes potential failures. The method helps focus resources on high “risk” failure modes. Next, the team attempts to lessen risk by reducing failure frequency and severity.

The FMEA emerged in the 1960s as a formal methodology in the aerospace and defense industries. Since then, it has been adopted and standardized by many industries worldwide. Table 3 lists several FMEA procedures published since 1964.

Table 3 A partial list of FMEA Publications

| year | FMEA Document |
|---|---|
| 1964 | “Failure Effect Analysis” Transactions of the NY Acad. of Sciences (J.S. Coutinho) |
| 1974 | US Department of Defense (DoD) Mil-Std-1629 (ships) “Procedures for Performing a Failure Mode, Effects, and Criticality Analysis” |
| 1980 | US DoD Mil-Std-1629A |
| 1984 | US DoD Mil-Std-1629A/Notice 2 |
| 1985 | International Electrotechnical Commission (IEC) 812 “Analysis techniques for system reliability − procedure for failure mode and effects analysis” |
| 1988 | Ford published “Potential Failure Modes and Effects Analysis in Design and for Manufacturing and Assembly Processes Instruction Manual” |
| 1994 | SAE J1739 Surface Vehicle Recommended Practice |
| 1995 | “FMEA: from theory to execution” (D.H. Stamatis) |
| 1995 | “FMEA: predicting and preventing problems before they occur” (P. Palady) |
| 1996 | Verband der Automobilindustrie, Germany, VDA Heft 4 Teil 2 |
| 1996 | “The Basics of FMEA” (R.E. McDermott et al.) |
| 1998 | Proposal for a new FMECA standard for SAE (J. Bowles) |

2.1 What is a Failure Mode?

The Automotive Industry Action Group (1995) definition of a failure mode is similar to definitions used in many FMEA standards (SAE 1994, Stamatis 1995).

Failure Mode – the manner in which a component, subsystem, or system could potentially fail to meet the design intent. The potential failure mode could also be the cause of a potential failure mode in a higher level subsystem, or system, or the effect of a lower level effect.

This definition helps distinguish between causes, failure modes, and effects, depending on the level of the analysis. A failure mode can simultaneously be considered a cause and an effect and is therefore a “link” in the cause-effect chain.

2.2 The Risk Priority Number (RPN)

The RPN is used by many FMEA procedures to assess risk using these three criteria:

• Occurrence (O) – how likely is the cause to occur and result in the failure mode?
• Severity (S) – how serious are the end effects?
• Detection (D) – how likely is the failure to be detected before it reaches the customer?

The RPN is the product of these three elements.

RPN = O × S × D (1)

The definitions of the RPN elements will be described in the following sections.

2.2.1 Probability of Occurrence (O)

Occurrence is related to the probability of the failure mode and cause. Occurrence is not related to the probability of the end effects. The Occurrence values are arbitrarily related to probabilities or failure rates (Table 4).

Table 4 Occurrence criteria (adapted from AIAG, 1995)

| Probability of Failure | Failure Rates | Occurrence |
|---|---|---|
| Very High: Failure is almost inevitable | ≥ 1 in 2 | 10 |
| | 1 in 3 | 9 |
| High: Repeated failures | 1 in 8 | 8 |
| | 1 in 20 | 7 |
| Moderate: Occasional failures | 1 in 80 | 6 |
| | 1 in 400 | 5 |
| | 1 in 2,000 | 4 |
| Low: Relatively few failures | 1 in 15,000 | 3 |
| | 1 in 150,000 | 2 |
| Remote: Failure unlikely | 1 in 1,500,000 | 1 |

2.2.2 Severity of Effect (S)

Severity measures the seriousness of the effects of a failure mode. Severity categories are estimated using a 1 to 10 scale, for example:


10 Total lack of function and a safety risk
...
8 Total lack of function
...
4 Moderate degradation of performance
...
1 Effect almost not noticeable

Severity scores are assigned only to the effects and not to the failure mode or cause.

2.2.3 Detection (D)

The definition of Detection usually depends on the scope of the analysis. Definitions usually fall into one of three categories:

i) Detection during the design & development process
ii) Detection during the manufacturing process
iii) Detection during operation

Two interpretations are described in this section.

Detection Definition 1: The assessment of the ability of the “design controls” to identify a potential cause or design weakness before the component or system is released for production.

Detection scores are generated on the basis of likelihood of detection by the relevant company design review, testing programs, or quality control measures. This definition evaluates the internal quality and reliability systems of an organization (Palady, 1995).

Detection Definition 2: What is the chance of the customer catching the problem before the problem results in catastrophic failure?

The rating decreases as the chance of detecting the problem increases, for example:

10 – Almost impossible to detect
...
1 – Almost certain to detect

This definition measures how likely the customer is to detect the failure based on the system's diagnostics and warnings, or inherent indications of pending “catastrophic” failure.

3. SHORTCOMINGS OF TRADITIONAL FMEA

3.1 What is Risk?

The purpose of FMEA is to prioritize potential failures in accordance with their “risk.” There are many definitions of risk, and we have listed a few below:

• The possibility of incurring damage (Hauptmanns and Werner, 1991)
• Exposure to chance of injury or loss (Morgan and Henrion, 1990)
• Possibility of loss or injury,… uncertain danger (Webster’s Dictionary, 1988)

Most definitions of risk contain two basic elements: 1) chance: possibility, uncertainty, probability, etc., and 2) consequences: cost, hazard, injury, etc. Kaplan and Garrick (1981) maintain that risk quantification should evaluate the following questions:

• How likely is the scenario to happen?
• (If it does happen) what are the consequences?

The first question addresses the possibility of an undesired event. The second question attempts to quantify the loss or hazard associated with the failure. We will use these principles to examine the elements of the Risk Priority Number.

3.2 “Occurrence” Evaluates the Probability of the Failure Mode and its Cause

The Occurrence rating reflects the probability of the cause and the immediate failure mode and not the probability of the end effect (AIAG, 1995; Stamatis, 1995). Consider two possible situations associated with a grease fire in a kitchen:

i) a grease fire resulting in damage to the kitchen only, and
ii) a grease fire where the building burns down.

The Occurrence rating for these two scenarios would be identical although, intuitively, we know that damage to the kitchen is more likely than the building burning down. According to the definitions of risk, we are ultimately interested in the probability of the cause and resulting effect. The two scenarios related to the fire have different probabilities (and outcomes) and therefore pose different risks. The different probabilities would not be reflected using the Occurrence index.

3.3 Confusion Associated with the “Detection” Index

One definition of Detection relates to the quality processes of an organization. This definition quantifies how likely the company’s “controls” are to detect the failure mode during the product development process. There is some confusion associated with this definition, for example: If a “design control” detects a failure mode, does this imply it will be prevented? How can we quantify the competency of quality processes? If a potential failure is identified as a line item on an FMEA, has it already been “detected”?

Controls used by the organization can help identify potential failures (e.g. design reviews, reliability estimation, modeling, testing) and estimate the probability of a failure. However, this is an organizational issue, rather than a product issue. Assessing the effectiveness of an entire development effort is extremely difficult and highly subjective.

Another definition of Detection relates to the detectability of a failure once the product is in the hands of the customer. Once the product is in operation, “Detection” reflects the probability that the failure will occur in one mode (detected early) versus another (undetected until ‘catastrophic’ failure). For example, FMEA might list an oil leak resulting in engine failure with a low Detection rating (easy to detect) due to a prominent oil warning light. However, the RPN will give a low priority to a very frequent oil leak that is easy to detect, even if this is the most costly failure over the life of the engine.

Having multiple definitions for Detection raises the question: which definition, if any, measures contribution to risk? Risk is composed of chance and consequences: the chance component should reflect the probability of the cause and the specified consequences. If “probability of Occurrence” rates the probability of the cause, then Detection should represent the conditional probability of realizing the ultimate consequences (given the cause).

We propose that the Detection rating should either be redefined as a conditional probability or omitted from the FMEA procedure altogether. Some alternative rankings of risk recommend using only combinations of Occurrence vs. Severity to prioritize risk (SAE, 1994; Palady, 1995); Detection is conspicuously absent from these prioritization schemes.

3.4 Inconsistent Values are Assigned to the RPN Elements

The numbers used for Occurrence, Severity, and Detection do not carry any special meaning and so their basic definitions vary. Figure 1 shows four recommended relationships between Occurrence and probability.

[Figure 1: Occurrence Rating (0 to 10) plotted against Approximate Probability (log scale, 1.E-11 to 1.E-01) for four sources: AIAG, 1995; Palady, 1995; McDermott et al., 1996; Humphries, 1994]

Figure 1 Occurrence rankings and probabilities

An Occurrence rating of “5” could correspond to failure rates which span several orders of magnitude (e.g. from around 0.1 to 0.001 in Figure 1).

The ratings for Severity are not related to any standard measure. These 1-10 values can only provide a relative ranking of “consequences” or “hazard” for a particular FMEA.

Detection is not related directly to probability or any other standard measure (with the exception of some “process FMEA” manuals). In addition, Detection has several disparate definitions as we’ve discussed.

3.5 Measure-Theoretic Perspective on the RPN

The scales for Severity, Occurrence, and Detection are ordinal. Ordinal scales are used to rank-order items such as the size of eggs, or quality of hotels. Ordinal measures preserve transitivity (order) but their magnitude is not “meaningful.” For example, a failure with an Occurrence rating of 8 is more likely than one rated 4, but it is not twice as likely. The 1-10 numbers are categories for which letter categories would also be appropriate. It is valid to rank failures along a single ordinal dimension (e.g., “Severity”) but multiplying ordinal scales is not an “admissible” transformation (Mock and Grove, 1979).

The magnitude of the RPN is used to prioritize failure risks. However, the magnitude of the RPN is not meaningful since it is the product of three ordinal indices. Perhaps using letter categories would be favorable since we would not be tempted to multiply categories. The definitions and calculations associated with the RPN raise the question: Is the RPN a valid measure of risk? Section 4 demonstrates the inconsistencies of the RPN with respect to another risk metric: expected cost.

4. SCENARIO-BASED FMEA USING EXPECTED COST

The focus of FMEA should center on identifying undesired causes and effects. If a “cause” has a cause, then the cause-effect chain should be lengthened to accommodate the additional information. Traditional FMEA spreadsheets limit failure representation by only providing a few columns to describe an entire fault chain (Lee, 1999). In addition, the analysis is organized around failure modes, which are an arbitrary “link” in the cause-effect chain. Ultimately, we are not so concerned with whether “cracked pipe” is a cause, failure mode, or an effect, but rather:

• What could cause the failure?
• What are the potential effects on the system?
• How likely is the cause to occur and result in the specified effects?

There is a risk associated with the scenario, that is, the series of causes and effects.

A failure scenario is an undesired cause-and-effect chain of events. Each scenario can happen with some probability and results in negative consequences.

The cause-effect chain can be lengthened when new effects and causes of “causes” are identified. Failure scenarios are used frequently in the Risk Analysis field (Kaplan and Garrick, 1981; Modarres, 1992). The difference between failure modes and scenarios is as follows:

• A failure mode describes a cause and the immediate effect.
• A failure scenario is an undesired cause-effect chain of events, from the initiating cause to end effect, including all intermediate effects (Figure 2).

[Figure 2: chain of cause → immediate effect → next-level effect → end effect. The failure mode covers the cause and immediate effect; the failure scenario covers the whole chain.]

Figure 2 Failure modes and scenarios

Each failure scenario happens with some probability and results in negative consequences. For example, brake failure in an automobile could cause an accident while carrying one passenger or four: these are different scenarios with distinct probabilities and consequences. It may not be necessary to differentiate between “accident with one passenger” and “accident with four passengers,” but scenario-based FMEA can accommodate this granularity. Different causes can be listed for each scenario, such as “low fluid pressure” or “linkage failure” and causes for these events can be added. Figure 3 shows a map of potential failure scenarios for a brake system failure.

[Figure 3: scenario map. Node labels recoverable from the figure: design flaw, inadequate material, mfg. defect, excessive force, linkage failure, leak in system, frozen brake line, clogged brake line, low fluid pressure, reduced braking ability, no braking ability.]

Figure 3 Scenario map for brake failures

There are 12 independent paths, or failure scenarios, and each is listed as a line item on the FMEA. Risk ratings measure the probability and consequences of each scenario. For instance, an oil leak could result in equipment failure. Traditional FMEA would list this as a single line item with an Occurrence (assigned to the cause), Severity (assigned to the equipment damage) and Detection rating (assigned to how likely the oil leak would be discovered before the end effect is realized). Scenario-based FMEA would list the failures as three line items, each with an assessed probability and consequence:

i) Oil leak, warning light goes on, signal is detected and operation is ceased (consequence1, probability1)
ii) Oil leak, warning light goes on, signal goes undetected, operation continues and equipment is damaged (consequence2, probability2)
iii) Oil leak, no warning light, operation continues and equipment is damaged (consequence2, probability3)

Risk should be evaluated separately since each scenario has an associated probability and consequence. Similarly, defects in a manufacturing line result in different failure scenarios: they can be “detected” at discrete points during production or after they have been shipped, with differing probabilities and consequences.

According to Bayes’ theorem, scenario probability is the product of the probability of the cause and the conditional probability of the end effect given that cause (Lindley, 1965). Scenario-based FMEA accommodates a) multiple causes that could result in a single end effect, b) single causes that result in multiple effects. Figure 4 shows examples of failure scenarios throughout a product's life cycle.

[Figure 4: life-cycle timeline with phases Design, Manufacturing, Shipping & Installation, and Operation, and points a (design flaw), b (prototype testing), c (fabrication / assembly flaw), d (manufacturing inspection), e (shipping), f (installation), g (operation), h (field failure). Markers distinguish where a failure (cause) is introduced from where a failure (effect) is discovered.]

Potential failure scenarios (point introduced → point discovered: probability):

a → h: p(a) [1-p(b|a)] [1-p(d|a)] p(h|a)
a → d: p(a) [1-p(b|a)] p(d|a)
a → b: p(a) p(b|a)
c → d: p(c) p(d|c)
c → h: p(c) [1-p(d|c)] p(h|c)
e → h: p(e) p(h|e)
f → h: p(f) p(h|f)
g → h: p(g) p(h|g)

Figure 4 Failure scenarios in a product’s life cycle

Failures can be introduced at many points and discovered at various instances downstream. For example, a design flaw (point a in Figure 4) might be discovered during prototype testing (point b), during manufacturing inspection (point d), or during operation (point h). Each scenario has a “risk” associated with its probability and consequences.

4.1 “Expected Cost” as a Measure of Risk

In section 3 we explained how risk contains two basic elements: chance and consequences. Probability is a universal measure of chance, and cost is an accepted measure of consequences (Gilchrist, 1993). For a given failure scenario, risk is calculated as expected cost: the product of probability and failure cost (Rasmussen, 1981; Modarres, 1992). Expected cost is used extensively in the fields of Risk Analysis, Economics, Insurance, Decision Theory, etc. Both probability and cost are ratio scales, for which multiplication is an admissible operation.

For any scenario i

$$\text{expected cost}_i = p_i \times c_i \qquad (2)$$

Total risk for n scenarios can be expressed as follows

$$\text{total expected failure cost} = \sum_{i=1}^{n} p_i c_i \qquad (3)$$

Figure 5 shows the composition of the expected cost equation for scenario a–d (from Figure 4).

$$ec_{a\text{-}d} = p(a)\,[1 - p(b \mid a)]\,p(d \mid a)\;c_{a\text{-}d}$$

Here $ec_{a\text{-}d}$ is the measure of risk, $p(a)$ is the probability of the cause, $[1 - p(b \mid a)]\,p(d \mid a)$ is the conditional probability of the consequences, their product is the probability of the scenario, and $c_{a\text{-}d}$ is the measure of consequences.

Figure 5 Composition of expected cost
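As a minimal sketch of equations (2) and (3), the Python snippet below builds the three scenario probabilities that Figure 4 lists for a design flaw (point a) and sums their expected costs. Every numeric value here is a hypothetical placeholder chosen for illustration, not data from the paper, and the names `expected_cost` and `total_expected_cost` are assumptions of this sketch.

```python
# All numbers below are HYPOTHETICAL placeholders, not values from the paper.
p_a = 0.02          # probability that a design flaw (point a) is introduced
p_b_given_a = 0.6   # flaw is discovered in prototype testing (point b)
p_d_given_a = 0.5   # flaw is discovered in manufacturing inspection (point d)
p_h_given_a = 0.9   # an escaped flaw shows up as a field failure (point h)

# Scenario probabilities, following the expressions listed in Figure 4.
probabilities = {
    "a-b": p_a * p_b_given_a,
    "a-d": p_a * (1 - p_b_given_a) * p_d_given_a,
    "a-h": p_a * (1 - p_b_given_a) * (1 - p_d_given_a) * p_h_given_a,
}

# Hypothetical failure cost of each scenario, in dollars.
costs = {"a-b": 1_000, "a-d": 10_000, "a-h": 250_000}


def expected_cost(probability, cost):
    """Equation (2): expected cost of a single scenario."""
    return probability * cost


def total_expected_cost(probabilities, costs):
    """Equation (3): sum of p_i * c_i over all scenarios."""
    return sum(expected_cost(probabilities[s], costs[s]) for s in probabilities)


for scenario in probabilities:
    ec = expected_cost(probabilities[scenario], costs[scenario])
    print(f"{scenario}: p = {probabilities[scenario]:.4f}, expected cost = ${ec:,.2f}")
print(f"total: ${total_expected_cost(probabilities, costs):,.2f}")
```

With placeholder inputs like these, the late-discovered scenario can dominate the total even though it is the least likely of the three, which is the kind of trade-off the expected cost metric is meant to expose.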

Expected costs for the eight scenarios introduced in Figure 4 are

expressed as:


$$
\begin{aligned}
ec_{a\text{-}b} &= p(a)\,p(b \mid a)\,c_{a\text{-}b} && (4)\\
ec_{a\text{-}d} &= p(a)\,[1 - p(b \mid a)]\,p(d \mid a)\,c_{a\text{-}d} && (5)\\
ec_{a\text{-}h} &= p(a)\,[1 - p(b \mid a)]\,[1 - p(d \mid a)]\,p(h \mid a)\,c_{a\text{-}h} && (6)\\
ec_{c\text{-}d} &= p(c)\,p(d \mid c)\,c_{c\text{-}d} && (7)\\
ec_{c\text{-}h} &= p(c)\,[1 - p(d \mid c)]\,p(h \mid c)\,c_{c\text{-}h} && (8)\\
ec_{e\text{-}h} &= p(e)\,p(h \mid e)\,c_{e\text{-}h} && (9)\\
ec_{f\text{-}h} &= p(f)\,p(h \mid f)\,c_{f\text{-}h} && (10)\\
ec_{g\text{-}h} &= p(g)\,p(h \mid g)\,c_{g\text{-}h} && (11)
\end{aligned}
$$

Total risk for this example is the sum of the expected costs for all failure scenarios. In addition, failure costs associated with a specific cause can be calculated easily. For example, if a cause (i) has (n) potential effects, we could calculate the expected cost associated with this cause as

$$ec_i = p(i) \sum_{j=1}^{n} p(j \mid i)\,c_{i\text{-}j} \qquad (12)$$

From equations 4-6, the total risk associated with cause “a” would be

$$
\begin{aligned}
ec_a &= ec_{a\text{-}b} + ec_{a\text{-}d} + ec_{a\text{-}h} \qquad (13)\\
&= p_{a\text{-}b}\,c_{a\text{-}b} + p_{a\text{-}d}\,c_{a\text{-}d} + p_{a\text{-}h}\,c_{a\text{-}h}\\
&= p(a)\,\bigl[\,p(b \mid a)\,c_{a\text{-}b} + [1 - p(b \mid a)]\,p(d \mid a)\,c_{a\text{-}d} + [1 - p(b \mid a)]\,[1 - p(d \mid a)]\,p(h \mid a)\,c_{a\text{-}h}\,\bigr]
\end{aligned}
$$

Similarly, expected costs could be calculated for a given end event, using the probability of all contributing causes. This technique yields cost estimates that aren’t possible using the Risk Priority Number. For comparison, Figure 6 shows the composition of the RPN.

[Figure 6: RPN = O × D × S, annotated as follows. RPN: measure of risk. O: 1-10 rating corresponding to the probability of failure cause & mode. D: difficulty of detecting failure during the design, manufacturing, OR operation (1-10). S: 1-10 rating of consequences.]

Figure 6 Composition of the Risk Priority Number

Expected cost has an infinite range of values, but the RPN is restricted to integer values between 1 and 1000. The RPN effectively expands a 0-1 probability into a 1-100 component (Occurrence × Detection) and compresses the measure of consequences into a 1-10 range (Severity).

Gilchrist (1993) was among the first to recommend supplanting the RPN with expected cost. We build on Gilchrist's ideas in the next section by performing a detailed comparison between expected cost and the RPN.

4.2 Comparing the RPN to “Expected Cost”

This section shows a detailed comparison of the Risk Priority Number and expected cost. Our analysis is based on the following assumptions:

Assumption 1: A table relating Occurrence to probability

We assume a table mapping probability to a 1-10 Occurrence scale, similar to the relationships shown in Figure 1.

Assumption 2: A table relating Severity to cost

We assume there is a cost metric that is a continuous measure of consequences, and this cost is mapped to a 1-10 Severity rating. Figure 7 shows examples of hypothetical cost-Severity relationships for different industries.

[Figure 7: Cost ($) plotted against Severity Rating (1 to 10), with separate curves for an Aerospace Company, an Automotive Company, and an Appliance Company]

Figure 7 Hypothetical Cost-Severity relationships

Other “cost” metrics could be substituted, such as toxicity levels, fatalities, etc., as long as the magnitude of the measure is meaningful. Specific examples of cost functions are shown in Table 5.

Table 5 Examples of cost-Severity tables

| Severity | linear: Cost ($) | exponential: Cost ($) | hybrid: Cost ($) |
|---|---|---|---|
| 1 | 50 | 10 | 20 |
| 2 | 100 | 50 | 100 |
| 3 | 150 | 200 | 400 |
| 4 | 200 | 700 | 1000 |
| 5 | 250 | 2500 | 2000 |
| 6 | 300 | 10000 | 3500 |
| 7 | 350 | 35000 | 6000 |
| 8 | 400 | 130000 | 10000 |
| 9 | 450 | 500000 | 15000 |
| 10 | 500 | 2000000 | 20000 |

Assumption 3: Detection is omitted from RPN calculations

For this example, we will set the Detection rating equal to 1 for all failures. We assume that the “probability of detection” has been rolled into the “probability of occurrence” rating.

Theoretical Example: RPN vs. Expected Cost

This example uses a standard probability-Occurrence relationship (from Table 4) and a linear cost-Severity relationship (from Table 5). These two tables yield 100 pairs of {probability, cost} and their corresponding {Occurrence, Severity} pair. We can calculate the Risk Priority Number (O×S) and expected cost (p×c) for all 100 failure combinations.

[Figure 8: RPN (0 to 100) plotted against Expected Cost (log scale), with points a, b, c, d, and e marked]

Figure 8 RPN vs. expected cost for 100 pairs of probability and cost

The RPN has a 1-to-many relationship to expected cost (Figure 8); the points don’t fall in a monotonically increasing line as we might have expected. The results reveal some interesting facts:

Failures with the same RPN have different expected costs

Points a and b in Figure 8 depict the wide range of expected costs associated with a single RPN value (Table 6).

Table 6 RPN can have different expected costs

| Scenario | Probability | Cost | Expected cost | Occurrence Rank, O | Severity Rank, S | RPN O×S |
|---|---|---|---|---|---|---|
| a | 0.75 | $50 | $37.50 | 10 | 1 | 10 |
| b | 6.66×10⁻⁷ | $500 | $0.00033 | 1 | 10 | 10 |

Failures with the same expected cost have different RPNs

For this set of data, an expected cost of $6.25 has a range of RPN from 8 to 60 (Table 7).

Table 7 Expected-cost can have different RPN values

| Scenario | Probability | Cost | Expected cost | Occurrence Rank, O | Severity Rank, S | RPN O×S |
|---|---|---|---|---|---|---|
| d | 0.125 | $50 | $6.25 | 8 | 1 | 8 |
| c | 0.0125 | $500 | $6.25 | 6 | 10 | 60 |

Two different RPN priorities would be given to failures with the same expected cost. This situation is shown in Figure 8 by points c and d.

RPN and expected cost give conflicting priorities

Situations exist in which RPN and expected cost give conflicting priorities (points a and e in Figure 8, Table 8). Scenario e has the higher RPN, yet its expected cost is roughly three orders of magnitude lower than that of scenario a.

Table 8 Conflicting priorities of RPN and expected cost

| Scenario | Probability | Cost | Expected cost | Occurrence Rank, O | Severity Rank, S | RPN O×S |
|---|---|---|---|---|---|---|
| a | 0.75 | $50 | $37.50 | 10 | 1 | 10 |
| e | 6.66×10⁻⁵ | $500 | $0.033 | 3 | 10 | 30 |

This could lead to “myopic” decisions when prioritizing risk.

For a linear cost function, we have demonstrated that the Risk Priority Number is an inconsistent measure of risk with respect to expected cost. The two methods will not produce the same risk priorities for a given set of failures. Using the Detection index will magnify the differences in priorities between expected cost and the RPN.

4.3 Other Occurrence and Severity Functions

Figure 9 displays expected cost-RPN relationships for nine combinations of Severity-cost relationships (from Table 5) and Occurrence-probability mappings (from Figure 1).

[Figure 9: three-by-three grid of RPN (0 to 100) vs. expected cost (log scale) plots. Columns: AIAG, McDermott, Palady. Rows: linear, hybrid, logarithmic.]

Figure 9 RPN vs. expected cost for several Occurrence-probability & Severity-cost relationships

All combinations result in a 1-to-many relationship between the RPN and expected cost. Ordering risks using the RPN will result in a different priority compared to expected cost for all combinations listed above.

4.4 Example: Hair Dryer

This section compares RPN to expected cost using data from a hand-held hair dryer FMEA. Failure probabilities are listed with a corresponding Occurrence rating. In addition, failure cost estimates are listed with an associated Severity rating (Table 9).
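The hair dryer comparison of Section 4.4 can be sketched in a few lines of Python. The snippet below takes the probability, Occurrence, cost, and Severity values reported for the thirteen scenarios in Table 9 and ranks them both by RPN and by expected cost. Detection is fixed at 1, following Assumption 3 of Section 4.2. The names `SCENARIOS`, `rpn`, `expected_cost`, and `rank` are assumptions of this sketch rather than anything defined in the paper.

```python
# Hair dryer failure scenarios as reported in Table 9:
# scenario id -> (probability, Occurrence rating, cost in dollars, Severity rating)
SCENARIOS = {
    "g": (1e-3, 6, 100, 8),
    "c": (1e-2, 8, 30, 6),
    "d": (1e-4, 4, 1000, 9),
    "i": (1e-3, 6, 30, 6),
    "j": (1e-3, 6, 30, 6),
    "k": (1e-3, 6, 30, 6),
    "a": (1e-1, 10, 10, 3),
    "b": (1e-1, 10, 10, 3),
    "f": (1e-2, 8, 10, 3),
    "l": (1e-4, 4, 30, 6),
    "e": (1e-5, 2, 10000, 10),
    "m": (1e-4, 4, 10, 3),
    "h": (1e-2, 8, 5, 1),
}


def rpn(occurrence, severity, detection=1):
    """Risk Priority Number; Detection defaults to 1 (Assumption 3, Section 4.2)."""
    return occurrence * severity * detection


def expected_cost(probability, cost):
    """Expected cost of one scenario: probability times failure cost."""
    return probability * cost


def rank(score):
    """Scenario ids sorted from highest to lowest score; ties keep table order."""
    return sorted(SCENARIOS, key=score, reverse=True)


by_rpn = rank(lambda s: rpn(SCENARIOS[s][1], SCENARIOS[s][3]))
by_expected_cost = rank(lambda s: expected_cost(SCENARIOS[s][0], SCENARIOS[s][2]))

for position, (r, e) in enumerate(zip(by_rpn, by_expected_cost), start=1):
    print(f"{position:2d}  by RPN: {r}   by expected cost: {e}")
```

On this data, scenarios a and b have the highest expected cost but appear only seventh and eighth in the RPN ordering, which illustrates how the two metrics can disagree.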


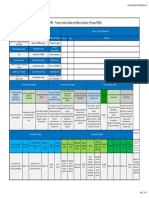

Table 9 RPN and expected cost associated with the failure modes of a hair dryer

| Scenario | Function/Requirement | Potential Failure Modes | Potential Causes of Failure | Probability | Occurrence | Local Effects | End Effects | Cost | Severity | Exp. Cost | RPN |
|---|---|---|---|---|---|---|---|---|---|---|---|
| g | convert power to rotation | no rotation | motor failure | 0.001 | 6 | no air flow | hair not dried | 100 | 8 | 0.1 | 48 |
| c | convert rotation to flow | no fan rotation | loose fan connection | 0.01 | 8 | no air flow | hair not dried | 30 | 6 | 0.3 | 48 |
| d | convert power to rotation | no rotation | obstruction impeding fan | 1E-04 | 4 | motor overheat | melt casing | 1000 | 9 | 0.1 | 36 |
| i | supply power to fan | no power to fan | broken fan switch | 0.001 | 6 | no air flow | hair not dried | 30 | 6 | 0.03 | 36 |
| j | supply power to fan | no power to fan | loose switch connection | 0.001 | 6 | no air flow | hair not dried | 30 | 6 | 0.03 | 36 |
| k | supply power to fan | no power to fan | short in power cord | 0.001 | 6 | no air flow | hair not dried | 30 | 6 | 0.03 | 36 |
| a | convert power to rotation | low rotation | foreign matter-friction | 0.1 | 10 | reduced air flow | inefficient drying | 10 | 3 | 1 | 30 |
| b | convert power to rotation | no rotation | obstruction impeding fan | 0.1 | 10 | no air flow | hair not dried | 10 | 3 | 1 | 30 |
| f | supply power to fan | no power to fan | no source power | 0.01 | 8 | no air flow | hair not dried | 10 | 3 | 0.1 | 24 |
| l | convert power to rotation | low rotation | rotor/stator misalignment | 1E-04 | 4 | reduced air flow | hair not dried | 30 | 6 | 0.003 | 24 |
| e | supply power to fan | no power to fan | short in power cord | 1E-05 | 2 | no air flow | potential injury | 10000 | 10 | 0.1 | 20 |
| m | supply power to fan | low power to fan | low source power | 1E-04 | 4 | reduced air flow | inefficient drying | 10 | 3 | 0.001 | 12 |
| h | convert power to rotation | low rotation | rotor/stator misalignment | 0.01 | 8 | noise | noise | 5 | 1 | 0.05 | 8 |

The prioritization given by decreasing expected cost does not match the RPN ordering. Figure 10 shows how the RPN can give lower priority to failures that have high expected cost.

[Figure 10: chart of RPN (left axis, 0 to 60) and expected cost (right axis, 0 to 1.2) for the failure scenarios, ordered h, m, e, l, f, b, a, k, j, i, d, c, g]

Figure 10 Failures prioritized by expected cost have different priorities than the RPN

Understandably, engineers are not inclined to make probability or cost estimates without any substantiating data. Apparently there is less apprehension about using 1-10 point estimates for probability and severity. However, probability and cost carry more meaning than their RPN counterparts. Both risk criteria are based on educated estimates; the set containing probability and cost contains more information. Additionally, probability and cost are ratio scales, which can be legitimately multiplied into a composite risk measure.

4.5 Other methods of Prioritizing Failures in FMEA

The most popular alternative to the RPN is the “criticality matrix,” introduced in the Failure Modes Effects and Criticality Analysis (FMECA) standards (SAE, 1994). The criticality matrix plots probability vs. Severity (Figure 11).

[Figure 11: criticality matrix with probability P on a log scale (10^-1 to 10^-6) on the vertical axis and Severity Classification (IV, III, II, I; increasing severity) on the horizontal axis; plotted points are labeled A1 to A4, B2, B3, C1, C2, D1, D2, E1, and E2]

Figure 11 Criticality matrix for prioritizing failures (adapted from Bowles, 1998)

FMECA standards use probability to measure the chance of a failure and plot it on a log scale against severity categories. This technique is preferable to the RPN for several reasons:

• failure frequency is measured with probability,

• the Detection index is eliminated, and

• ordinal measures are not multiplied.

However, the heuristics used to calculate equivalent risk failures are meaningless without knowing the relative magnitude of the Severity classifications. For example, E1, A2, and B3 in Figure 11 are considered “equivalent rank.” Depending on the scale of the severity classifications, these points may or may not have equivalent risk priority.

4.6 Using Expected Cost to Make Design Decisions: Projector Bulb Example

Consider a computer video projector used for presentations: bulb failure is a known problem and occurs with probability 0.01. The cost of bulb replacement is $50. The bulb can fail either A) during a presentation or B) while “idle” (i.e., failure without an audience), with respective probabilities of 0.05 and 0.95. The cost of failure during a presentation is estimated to be $500 more than the $50 cost of replacing the bulb. Expected cost for the two failure scenarios is as follows:

I) Current bulb failure rate (P_bulb failure = 0.01)

$$
\begin{aligned}
EC_{\text{bulb failure}} &= EC_{\text{presentation}}\ (\text{scenario A}) + EC_{\text{idle}}\ (\text{scenario B}) \\
&= P_{\text{bulb failure}}\,\bigl[\,P_{\text{presentation}}\,C_{\text{presentation}} + P_{\text{idle}}\,C_{\text{idle}}\,\bigr] \\
&= 0.01\,\bigl[(0.05)(\$550) + (0.95)(\$50)\bigr] \\
&= \$0.75
\end{aligned}
$$
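The expected cost of the current design can be reproduced numerically. The sketch below is illustrative only: `expected_failure_cost` is a hypothetical helper name, and it assumes the conditional outcomes (presentation vs. idle) are mutually exclusive and exhaustive, as in the example above.

```python
def expected_failure_cost(p_failure, outcomes):
    """Expected cost of a failure that can end in one of several outcomes.

    p_failure: probability that the failure occurs at all.
    outcomes:  list of (conditional probability, cost in dollars) pairs,
               one per scenario; the probabilities must sum to 1.
    """
    total_p = sum(p for p, _ in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError("conditional probabilities must sum to 1")
    return p_failure * sum(p * cost for p, cost in outcomes)


REPLACEMENT_COST = 50.0       # cost of replacing the bulb
PRESENTATION_PENALTY = 500.0  # extra cost when the bulb fails in front of an audience

ec_current = expected_failure_cost(
    0.01,
    [
        (0.05, REPLACEMENT_COST + PRESENTATION_PENALTY),  # scenario A: during a presentation
        (0.95, REPLACEMENT_COST),                         # scenario B: while idle
    ],
)
print(f"expected failure cost per unit: ${ec_current:.2f}")
```

Writing each scenario as a (probability, cost) pair keeps the calculation tied to the cause-effect chain rather than to a single failure mode.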


From here, one can use a decision analytic approach to make design trade-offs. Consider three risk abatement strategies:

II) Lower bulb failure rate (P_bulb failure = 0.005)

$$
\begin{aligned}
EC_{\text{reliable bulb}} &= P'_{\text{bulb failure}}\,\bigl[\,P_{\text{presentation}}\,C_{\text{presentation}} + P_{\text{idle}}\,C_{\text{idle}}\,\bigr] \\
&= 0.005\,\bigl[(0.05)(\$550) + (0.95)(\$50)\bigr] \\
&= \$0.375
\end{aligned}
$$

III) Lower bulb replacement cost (cost of replacement = $30)

$$
\begin{aligned}
EC_{\text{serviceable bulb}} &= P_{\text{bulb failure}}\,\bigl[\,P_{\text{presentation}}\,(\$530) + P_{\text{idle}}\,C'_{\text{idle}}\,\bigr] \\
&= 0.01\,\bigl[(0.05)(\$530) + (0.95)(\$30)\bigr] \\
&= \$0.55
\end{aligned}
$$

IV) Early warning of bulb failure (75% of “in presentation” failures eliminated, i.e., P_detect = 0.75)

$$
\begin{aligned}
EC_{\text{detectable bulb}} &= EC_{\text{presentation}} + EC_{\text{idle}} \\
&= P_{\text{bulb failure}}\,\bigl[\,P_{\text{presentation}}\bigl((1 - P_{\text{detect}})\,C_{\text{presentation}} + P_{\text{detect}}\,C_{\text{idle}}\bigr) + P_{\text{idle}}\,C_{\text{idle}}\,\bigr] \\
&= 0.01\,\bigl[(0.05)(0.25)(\$550) + \bigl((0.75)(0.05) + 0.95\bigr)(\$50)\bigr] \\
&= \$0.5625
\end{aligned}
$$

These calculations are useful for deciding whether to implement specific solutions. Consider that additional product costs for design strategies II, III, and IV are $0.50, $0.15, and $0.10 respectively. We can compare life cycle cost (product cost + failure cost) for the four alternatives (Table 10).

Table 10 Life cycle cost comparison for design alternatives

| Strategy | P_bulb | P_idle | P_presentation | C_idle | C_presentation | Exp. failure cost | Extra cost per unit | Life cycle cost |
|---|---|---|---|---|---|---|---|---|
| I. current | 0.01 | 0.95 | 0.05 | $50 | $550 | $0.75 | $0 | $0.75 |
| II. reliable bulb | 0.005 | 0.95 | 0.05 | $50 | $550 | $0.375 | $0.50 | $0.875 |
| III. easy to service | 0.01 | 0.95 | 0.05 | $30 | $530 | $0.55 | $0.15 | $0.70 |
| IV. early warning | 0.01 | 0.988 | 0.013 | $50 | $550 | $0.563 | $0.10 | $0.663 |

The strategies are compared to the current design in Figure 12.

[Figure 12: stacked chart of cost per unit ($), 0 to 0.9, split into additional product cost and expected failure cost, for the current design, reliable design, easy service design, and early warning design]

Figure 12 Life cycle cost comparison of design alternatives

By examining both expected failure cost and estimated product cost, the design team can decide which strategy has the lowest overall life cycle cost.

5. CONCLUSIONS AND FUTURE WORK

This paper addressed two major shortcomings of the conventional FMEA: the ambiguous definition of the detection index and the inconsistent RPN scoring scheme. The authors proposed two deployment strategies to improve the effectiveness of FMEA.

1. Organize the FMEA around failure scenarios rather than failure modes.

2. Evaluate risk using probability and cost.

The method can help attribute cost to failure scenarios and guide design decisions with more precision. Table 11 lists some advantages and disadvantages of scenario-based FMEA and traditional FMEA.
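The life cycle cost comparison of Table 10 for the projector bulb example can be reproduced with a short script. This is a sketch under stated assumptions: the function and dictionary names are hypothetical, strategy III uses the $30 replacement cost (and $530 presentation cost) given in the text, and strategy IV models early warning as a probability `p_detect` that an in-presentation failure is converted into an idle failure.

```python
def expected_failure_cost(p_bulb, c_presentation, c_idle,
                          p_presentation=0.05, p_detect=0.0):
    """Expected bulb-failure cost per unit for the projector example.

    p_detect is the fraction of in-presentation failures that an early
    warning converts into ordinary (idle) replacements.
    """
    p_idle = 1.0 - p_presentation
    in_presentation = p_presentation * (
        (1.0 - p_detect) * c_presentation + p_detect * c_idle
    )
    return p_bulb * (in_presentation + p_idle * c_idle)


# Inputs per strategy; "extra" is the additional product cost per unit.
STRATEGIES = {
    "I. current":           dict(p_bulb=0.01,  c_presentation=550.0, c_idle=50.0, p_detect=0.0,  extra=0.00),
    "II. reliable bulb":    dict(p_bulb=0.005, c_presentation=550.0, c_idle=50.0, p_detect=0.0,  extra=0.50),
    "III. easy to service": dict(p_bulb=0.01,  c_presentation=530.0, c_idle=30.0, p_detect=0.0,  extra=0.15),
    "IV. early warning":    dict(p_bulb=0.01,  c_presentation=550.0, c_idle=50.0, p_detect=0.75, extra=0.10),
}


def life_cycle_costs(strategies):
    """Return {strategy: expected failure cost + extra product cost}."""
    result = {}
    for name, params in strategies.items():
        inputs = dict(params)        # copy so the table above is not mutated
        extra = inputs.pop("extra")
        result[name] = expected_failure_cost(**inputs) + extra
    return result


costs = life_cycle_costs(STRATEGIES)
best = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name:22s} life cycle cost = ${cost:.4f}")
print("lowest life cycle cost:", best)
```

With these inputs the early warning design has the lowest life cycle cost, and the more reliable bulb costs more over the life cycle than the current design because its added product cost outweighs the reduction in failure cost.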


Table 11 Comparison of scenario-based and traditional FMEA

| Scenario-based FMEA (using expected cost) | Traditional FMEA (using the Risk Priority Number) |
|---|---|
| Disadvantages | Disadvantages |
| cost and probability are difficult to estimate without data | O, S, D values do not have meaning, definitions vary |
| there is some aversion to using probability and cost estimates | multiplication of ordinal scales is not valid |
| Advantages | Advantages |
| probability and cost have universal meaning, consistent definitions | 1-10 scales are familiar and “quick” |
| rules for manipulating probabilities are well established; sub-system FMEA using probability can be more easily integrated into system FMEA | there is a large existing base of FMEA software and procedures that use the RPN |
| estimated ratio scales contain more information than estimated ordinal scales | |
| FMEA can be used for cost-based decisions | |
| using standard metrics facilitates sharing of FMEA data | |
| can incorporate uncertainty into probability and costs | |
| costs can reflect the concept of utility theory | |

The examples in this paper have used deterministic point estimates for probability and cost. There is an opportunity to include uncertainty in the estimates of the cost and probability parameters. Without detailed information, using a range of probability and cost estimates is preferable to using point estimates. In addition, a more formal procedure could be used to trace causal chains and their probabilities, such as Bayesian Nets (Lee, 1999).

Our future challenges include: a) validating the utility of scenario-based FMEA through more case studies, and b) applying the cost-based evaluation of failure scenarios to the simultaneous design of hardware, monitoring and controls, and service logistics. The second topic presents an enormous challenge and opportunity as more companies provide long-term service support as a business strategy. For example, aircraft engine manufacturers are now leasing engines rather than selling, i.e., providing customers with “thrust” as opposed to just hardware. Such a business strategy requires an effective means of managing the life-cycle costs of the system by balancing failure cost (risk) and long term maintenance cost against up-front product cost. The authors firmly believe that scenario-based FMEA will assist in the design challenges of long-term support of complex products.

ACKNOWLEDGMENTS

The authors would like to thank the Department of Energy and General Electric for funding this research. We would also like to give our appreciation to Kurt Beiter and Mark Martin for their assistance. Finally, sincere thanks to Burton Lee for his valuable insight and technical input.

REFERENCES

Aerospace Recommended Practice ARP926A (1979).

Automotive Industry Action Group (1995) Potential Failure Modes and Effects Analysis (FMEA) Reference Manual, Detroit, MI.

Bowles, J. (1998) “The New SAE FMECA Standard,” Proceedings of the 1998 IEEE Annual Reliability and Maintainability Symposium, pp. 48-53.

Di Marco, P., C. Eubanks and K. Ishii (1995) “Service Modes and Effect Analysis: Integration of Failure Analysis and Serviceability Design,” Proc. of the 15th Annual International Computers in Engineering Conference, Boston, MA, pp. 833-840.

Ford Motor Company (1988) “Potential Failure Modes and Effects Analysis in Design and for Manufacturing and Assembly Processes Instruction Manual,” Sept. 1988.

Gilchrist, W. (1993) “Modeling failure modes and effects analysis,” International Journal of Quality and Reliability Management, 10(5), 16-23.

Hauptmanns, U., and W. Werner (1991) Engineering Risks: Evaluation and Valuation, Springer-Verlag New York, Inc., March 1991.

Humphries, N. (1994) Murphy’s Law Overruled: FMEA in Design, Manufacture and Service, B&N Humphries Learning Service Pty Ltd, Victoria, Australia.

Kaplan, S. and B.J. Garrick (1981) “On the Quantitative Definition of Risk,” Risk Analysis, 1(1), pp. 11-27.

Lee, B.H. (1999) “Design FMEA for Mechatronic Systems Using Bayesian Network Causal Models,” ASME Design Engineering Technical Conference, Las Vegas, NV.

Lindley, D.V. (1965) Introduction to Probability and Statistics from a Bayesian Viewpoint, 2 Volumes, Cambridge Press, Cambridge.

McDermott, R. E., Mikulak, R. J., and Beauregard, M. R. (1996) The Basics of FMEA, New York: Quality Resources.

Modarres, M. (1992) What Every Engineer Should Know about Reliability and Risk Analysis, Marcel Dekker, Inc., New York, NY.

Morgan, M. G., and M. Henrion (1990) Uncertainty: A Guide to Dealing With Uncertainty in Quantitative Risk and Policy Analysis, Cambridge Press, 1990.

Palady, P. (1995) Failure Modes and Effects Analysis: Predicting & Preventing Problems Before They Occur, PT Publications, West Palm Beach, FL, 1995.

Rasmussen, N.C. (1981) “The Application of Probabilistic Risk Assessment Techniques to Energy Technologies,” Prog. Energy Combust. Sci., 6, pp. 123-138.

Society of Automotive Engineers (SAE) (1994) “Potential Failure Mode and Effects Analysis in Design (Design FMEA) and Potential Failure Mode and Effects Analysis in Manufacturing and Assembly Processes (Process FMEA) Reference Manual,” Surface Vehicle Recommended Practice J1739, July 1994.

Stamatis, D.H. (1995) Failure Mode and Effect Analysis: FMEA from Theory to Execution, ASQC Quality Press, Milwaukee, WI.

United States Department of Defense (USDoD) (1974/80/84) “Procedures for Performing A Failure Mode, Effects and Criticality Analysis,” US MIL-STD-1629(ships), November 1974, US MIL-STD-1629A, November 1980, US MIL-STD-1629A/Notice 2, November 1984, Washington, DC.

Voetter, M. and T. Mashhour (1996) “Verbesserung der FMEA durch eine Systematische Erfassung von Kausalzusammenhaengen,” VDI-Z 138, Heft 4.

Webster’s II New Riverside University Dictionary (1988) Houghton Mifflin, Boston, MA, p. 1013.
