Generalization Capacity in Artificial Neural Networks

- the ballistic trajectory learning case -

M. Roisenberg¹, J. M. Barreto², F. M. Azevedo²

¹ Instituto de Informática, Departamento de Informática Aplicada, Universidade Federal do Rio Grande do Sul, Porto Alegre, Brazil

e-mail: roisenb@inf.ufrgs.br

(Currently in a doctoral program at address 2)

² Grupo de Pesquisas em Engenharia Biomédica, Departamento de Engenharia Elétrica, Universidade Federal de Santa Catarina, Florianópolis, Brazil

e-mail: barreto@gpeb.ufsc.br, azevedo@gpeb.ufsc.br

Abstract

This paper presents the use of an Artificial Neural Network (ANN) to learn the features of a dynamic system: specifically, the development of an ANN as the controller for a device designed to launch objects in ballistic motion. We discuss the generalization capacity of both living beings and the network. We also present the network topology and configuration, the learning technique, and the precision obtained. Finally, this work shows the importance of the choice of activation function in learning some critical points.

1. Introduction

The ability to intercept objects in ballistic motion is common to many living beings. Judging from everyday situations, intercepting an object in ballistic motion seems a trivial action. Consider, for example, throwing a wooden stick to a dog, or playing football, basketball, or other games. In these situations the interception task is always present, but we rarely think about how it is done. One difficulty in performing this action lies in the required response time: when someone throws you a ball, you have just a few seconds to predict its trajectory and act to intercept it at the right point. Another major difficulty is that the ballistic movement, whose trajectory is given by the equations

$$x = v_0 \cos(\theta)\, t \tag{1}$$

and

$$y = v_0 \sin(\theta)\, t - \frac{g\, t^2}{2} \tag{2}$$

depends on the initial launching conditions, that is, the initial launching angle $\theta$ and velocity $v_0$ (here $t$ is the time since launch and $g$ the acceleration due to gravity). Combinations of these two variables can generate trajectories that fill the entire state space $\Re^2$.

Since there is an infinite number of possible trajectories, it is not trivial to train a neural network to solve this problem (which human beings solve so easily). Because it is impossible to train a neural network with the whole set of possible trajectories, we must rely on the network's generalization capacity: we train the network with some trajectories and expect it to correctly predict all the others.

2. Generalization vs. Specialization

Artificial Neural Networks can estimate continuous functions by observing how the output data relate to the process inputs, without this relationship being specified mathematically. Due to this feature,

neural networks are becoming widely used by the engineering community in identification problems and in the control of dynamic systems. Once a neural network has learned the desired relationship between the input and output data presented during the training phase, we hope that, in the execution phase, it gives right answers to other problems of the same type that it has never seen, thus showing the expected generalization.

One of the most interesting features of intelligent living beings is the specialization versus generalization dilemma. Here, specialization means being precise and accurate in producing the right answers to some given questions. Generalization, on the other hand, means the ability to give right answers (not necessarily accurate ones) to questions not previously seen in the examples.

Some animals are highly specialized in aspects of their behavior or anatomy that help them survive in their natural environment, like the panda bear, which feeds only on certain kinds of bamboo. On the other hand, this specialization makes adapting to other conditions or learning other tasks very difficult. Less specialized animals, like humans, have a higher capacity for adaptation and survival, perhaps due to their generalization capacity. In computing, traditional computer programs perform very well on algorithmic problems but much worse on ill-defined, imprecise, fuzzy problems. Humans, in contrast, are very poor at solving algorithmic problems, yet they can predict the ballistic trajectory of an object even if it has been launched with an angle or velocity never seen before. We expect other computational approaches, like the neural computing paradigm, to perform better on this class of ill-defined and imprecise problems.

3. Implementation

As already said, it is impossible to train the neural network with all the ballistic trajectories generated by different launching velocities and launching angles. We must therefore choose an adequate set of velocities and launching angles to train the network. Once it is trained on these trajectories, we expect the neural network to generalize, i.e., to predict the real trajectory for angles and velocities not seen in the training examples.

To implement the interception device, we had to study several questions: the generalization capacity of neural networks for this problem, how precise the responses are, and what the network must learn (which trajectories must be presented to the network during the training phase). We therefore implemented two networks and selected two situations in which to train them. In the first situation, the direct model of the ballistic movement was presented to the network: for a given launching angle, velocity, and time after launch, we want to obtain the position (x, y) of the launched object in the plane. In the second situation, the problem was inverted and the inverse model was presented to the network: the input was a target position and the output was the launching angle and velocity needed to reach that target.

Consider a device designed to intercept objects in ballistic motion, for example a basketball player like Michael Jordan. The two situations described above take place almost at the same time. As soon as he ("Air" Jordan) detects an object in ballistic motion (the ball), the interception device tries to predict the trajectory and chooses the interception point where the object will be at a certain time in the future. Following this choice, the interception device (Michael Jordan) launches himself on a ballistic trajectory with some velocity and launching angle in order to intercept the object at the predicted point, and then scores by throwing the ball into the basket (another ballistic movement).

The neural network used to implement the direct and the inverse models has a feed-forward architecture with one hidden layer. The learning algorithm used was back propagation. For the first model, the network has three neurons in the input layer and two neurons in the output layer, as shown in Figure 1a. The input neurons receive the time instant considered, the launching angle, and the velocity. At the

output layer, we obtain the corresponding position (x, y).

For the second case, the two input neurons of the network receive the target point coordinates (x, y), and the two output neurons produce the launching angle and velocity needed to reach the desired point, as shown in Figure 1b.

Another question we faced while implementing the neural network for the experiments was the choice of the activation function (also known as the threshold, squashing, signal, or output function) to be implemented in the neurons. Traditionally, in many commercial products, the logistic function seems to be preferred because of its simple derivative, which is used in the back propagation algorithm. However, the image of this function lies in the range (0, 1), so the network output can only take positive values in this range. That is acceptable for horizontal distances from the launching point (x coordinates), but it is a serious difficulty when we have negative height values (y coordinates). A more natural choice is an activation function whose image lies in the range (-1, 1). This allows the network to be trained with trajectories that reach negative height values, as happens when the launching angle or velocity is small. For this case, we chose an activation function of the hyperbolic tangent form.

We ran experiments with both activation functions in the network neurons. First we implemented the logistic function:

$$f(x) = \left(1 + e^{-x}\right)^{-1}$$

and second, the hyperbolic tangent function:

$$f(x) = \frac{1 - e^{-x}}{1 + e^{-x}}$$

(this expression is identical to $\tanh(x/2)$).

Our experiments confirmed the statement by Gallant [3]. The learning performance of the network using the hyperbolic tangent activation function was higher than with the logistic function. This can be explained as follows: in the first moments after launch, the output values the network must produce are near zero. The logistic function produces outputs near zero only in its saturated region, where its derivative is also very small, so the gradient method used by the back propagation algorithm leaves a larger residual error. With the hyperbolic tangent activation function this does not happen: its derivative is largest precisely for points near zero.

4. Experiments

In this section we describe the experiments carried out to study the generalization capacity of the network, the features of the training set, and the influence of the number of neurons in the hidden layer.

4.1. Experiment no. 1

To analyze the generalization capacity, the neural network was trained to learn the ballistic trajectory of an object launched with one initial velocity (10 m/s) and two launching angles (30° and 60°). Point coordinates (x, y) along the path of an object launched under these two initial conditions, over 2 seconds, were presented to the network during the training phase, as shown in Figure 2. In the execution phase, we recalled from the trained network the 2-second trajectory of an object launched with the same initial velocity but with the launching angle varying from 0° to 90°. We plotted the maximum error between the real trajectory and the one given by the trained network. These error values are shown in Figure 3.

For launching angles inside the range spanned by the angles used to train the network, the interpolation performed by the network was very good. However, the extrapolation capacity for angles outside the trained interval was very poor.

4.2. Experiment no. 2

In the second experiment we changed the training set. Here we wanted to observe the interpolation capacity when the network is trained with two trajectories that are far apart. We trained the network with the same initial velocity but with other launching angles (15° and 75°). As shown in Figure 4, the interpolation performance of the network during the execution phase for

launching angles within the trained interval was not good. This poorer performance occurs because the "concepts" (in this case, the two different trajectories presented to the network during the training phase) are too "distant" from each other, exceeding the generalization capacity of the neural network. In this experiment we also varied the number of neurons in the hidden layer, but could not observe any significant change in the generalization capacity. Finally, we trained the network with an additional intermediate angle (15°, 45°, and 75°). The result is shown in Figure 4.

4.3. Experiment no. 3

For the third experiment, the inverse model described earlier was implemented. Our goal was to teach the neural network some features of the inverse model: given a target point (x, y) at the network input, we want the network output to give the right launching angle and velocity to reach that point. Since there are infinitely many solutions that reach the target point, we gave the network, as the solution, the smaller angle (in 15° intervals) and the corresponding launching velocity. In this example the number and complexity of the "concepts" to be taught to the network are much greater. Moreover, for many points in the coordinate space, mainly those located near the launching point, the solution angle and velocity must vary constantly during the training phase.

The following example illustrates the variability of the "concepts" presented to the network, mainly for target points near the launching point. In some situations we must "increase" the launching angle while "reducing" the launching velocity to reach a target point farther from the launching point than another: to reach the point (1.5, 2.0), the launching conditions taught to the network are $\theta_0 = 60°$ and $v_0 = 8.6$ m/s, while to reach the farther point (1.5, 2.5) the launching angle grows to $\theta_0 = 75°$ but the initial velocity decreases to $v_0 = 7.3$ m/s. In other situations the relation changes: to reach the point (1.5, 0.5), the launching conditions taught to the network are $\theta_0 = 30°$ and $v_0 = 6.34$ m/s, while to reach the farther point (1.5, 1.0) the launching angle grows to $\theta_0 = 45°$ and the initial velocity also grows, to $v_0 = 6.64$ m/s.

For points more distant from the launching point, the "launching angle" parameter varies less often, and we only have to increase the "launching velocity" parameter to reach far targets. The "concepts" to be taught to the network are more "homogeneous".

After a long training phase, the results produced by the network are shown in Figure 5. For target points near the launching point, the errors are large and the interpolation capacity of the network is poor. For target points far from the origin, where the relationship "greater distance, greater launching velocity" holds more consistently, both the generalization capacity and the precision obtained by the network are greater.

5. Conclusions

The results of the experiments lead us to conclude that, in general, artificial neural networks have a good generalization capacity, in the sense that they can produce correct (though not exactly precise) responses to questions that do not belong to the training set. However, we note that this generalization occurs in the sense of interpolation between two or more previously learned "concepts". It is important to remark that the "distance" between the concepts, i.e., how different the "concepts" are, plays an essential role in the generalization capacity of the network.

This shows the great importance of the selection of examples for the training set. Extrapolation is a much more complex task for neural networks, as it is for humans, and, like humans, neural networks tend to produce wrong results.

In this work, the notions of "concept taught to a neural network" and "distance between concepts" were presented in an informal and intuitive way. We intend to formalize these notions with a mathematically based approach in future work.

Finally, this work tries to show the importance of the choice of the neurons' activation function for the learning performance at some critical points. Moreover, we presented an application in which some features of a dynamic system were learned by a static neural network.
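The point about the activation function can be checked numerically. The sketch below is only an illustration, not the authors' implementation: the function names are hypothetical, and it relies on two standard identities, namely that the logistic derivative equals f(1 - f) and that (1 - e^-x)/(1 + e^-x) equals tanh(x/2), whose derivative is (1 - f^2)/2. It compares the slope available to gradient descent when the desired output is close to zero.

```python
import math


def logistic(x: float) -> float:
    """Logistic activation 1 / (1 + e^-x); outputs lie in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def bipolar(x: float) -> float:
    """The paper's second activation, (1 - e^-x) / (1 + e^-x) = tanh(x / 2)."""
    return (1.0 - math.exp(-x)) / (1.0 + math.exp(-x))


def logistic_slope(out: float) -> float:
    """Derivative of the logistic, expressed through its output value."""
    return out * (1.0 - out)


def bipolar_slope(out: float) -> float:
    """Derivative of (1 - e^-x) / (1 + e^-x), expressed through its output."""
    return 0.5 * (1.0 - out * out)


if __name__ == "__main__":
    # Slopes seen by back propagation when the unit's output is near zero.
    for out in (0.01, 0.05, 0.1):
        print(out, logistic_slope(out), bipolar_slope(out))
```

For an output of 0.01 the logistic slope is below 0.01, while the slope of the hyperbolic tangent form is close to its maximum of 0.5, which is consistent with the larger residual error reported for the logistic function.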

This fact is very important. In fact,

suppose the control of a plant performed by

conventional frequency response methods. It is

well known that it is impossible to control the

plant with even a non-linear gain in the

feedback loop in several cases, for exemple a

system with two integrators and without zeros.

In this case for any gain the frequency response

will have the -1 point inside the Nyquist loop,

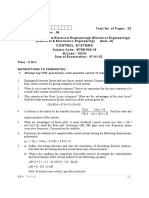

Figure 2. Real and neural network generated

indicating instability of the closed loop system.

trajectory for some launching angles and

In this case it is necessary to use a dynamic

launching velocity of 10 m/s.

system in the controller, in such a way to

reshape the frequency response and to leave the

-1 point outside the Nyquist loop. Or, using a

ANN in this case must include some sort of

dynamics.

In our case, however, we are learning

not the dynamics of the system, but the

trajectories that are functions in a space where

time is not a parameter, but a coordinate

variable, and it is possible to learn functions

with a static system implemented by the ANN.
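The static mapping of the direct model, (time, angle, velocity) to (x, y), follows directly from Eqs. (1) and (2). The sketch below shows how reference trajectories such as those of Experiment 1 could be sampled. Several details are assumptions rather than facts from the paper: the value g = 9.81 m/s², the number of samples per trajectory, the function names, and the use of the Euclidean distance as the error measure (the paper does not say which norm was used for the "maximum error").

```python
import math

G = 9.81  # assumed gravitational acceleration in m/s^2 (not stated in the paper)


def position(t, angle_deg, v0, g=G):
    """Direct model: (time, launching angle, velocity) -> (x, y), Eqs. (1)-(2)."""
    theta = math.radians(angle_deg)
    x = v0 * math.cos(theta) * t
    y = v0 * math.sin(theta) * t - g * t * t / 2.0
    return x, y


def training_set(angles_deg, v0=10.0, duration=2.0, samples=21):
    """Sample (input, target) pairs along each trajectory.

    The sample count is a hypothetical choice made for illustration.
    """
    pairs = []
    for angle in angles_deg:
        for i in range(samples):
            t = duration * i / (samples - 1)
            pairs.append(((t, angle, v0), position(t, angle, v0)))
    return pairs


def max_error(real_points, predicted_points):
    """Largest Euclidean distance between corresponding trajectory points."""
    return max(
        math.hypot(xr - xp, yr - yp)
        for (xr, yr), (xp, yp) in zip(real_points, predicted_points)
    )


if __name__ == "__main__":
    data = training_set([30.0, 60.0])  # the two angles of Experiment 1
    print(len(data), data[-1])
```

With these assumptions, the 30° trajectory at 10 m/s is already well below the launching height after 2 seconds, which is why the network outputs must be able to represent negative y values.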

Figure 1a. Inputs: time, angle, velocity. Outputs: X position, Y position.

Figure 1b. Inputs: X position, Y position. Outputs: angle, velocity.

Figure 1. Topology of the networks used to learn the ballistic movement in an interception device.

Figure 3. Maximum error between the real and the neural-network-calculated trajectory for various launching angles and a launching velocity of 10 m/s. The network was trained for 30° and 60° launching angles.
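For the inverse model (Figure 1b, Experiment no. 3), the velocity that goes with a chosen launching angle can be obtained in closed form by eliminating t from Eqs. (1) and (2), which gives $v_0 = \sqrt{g x^2 / \left(2 \cos^2\theta \,(x \tan\theta - y)\right)}$. The sketch below is an illustration under assumptions, not the procedure used by the authors: g = 9.81 m/s² is assumed, the function name is hypothetical, and the launching angle is taken as given rather than selected by any rule.

```python
import math

G = 9.81  # assumed gravitational acceleration in m/s^2 (not stated in the paper)


def launch_velocity(x, y, angle_deg, g=G):
    """Velocity needed to pass through (x, y) when launched at angle_deg.

    Obtained by eliminating t from x = v0 cos(theta) t and
    y = v0 sin(theta) t - g t^2 / 2.
    """
    theta = math.radians(angle_deg)
    # Vertical gap between the straight launch line and the target at abscissa x.
    drop = x * math.tan(theta) - y
    if x <= 0 or drop <= 0:
        raise ValueError("target cannot be reached at this launching angle")
    return math.sqrt(g * x * x / (2.0 * math.cos(theta) ** 2 * drop))


if __name__ == "__main__":
    # Targets and angles quoted in Experiment no. 3.
    for target, angle in (((1.5, 2.0), 60.0), ((1.5, 2.5), 75.0),
                          ((1.5, 0.5), 30.0), ((1.5, 1.0), 45.0)):
        print(target, angle, round(launch_velocity(*target, angle), 2))
```

Under these assumptions the computed velocities agree, to the precision quoted, with the values given in Experiment no. 3 (8.6, 7.3, 6.34, and 6.64 m/s), including the non-monotonic relation between target height and velocity near the launching point.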

Figure 4. Maximum error between the real and the neural-network-calculated trajectory for various launching angles and a launching velocity of 10 m/s. The network was first trained for 15° and 75° launching angles and then for 15°, 45°, and 75° launching angles.

Figure 5. Minimum distance between the given target point and the closest point reached by an object launched with the initial conditions calculated by the network.

References

[1] J. A. Anderson, "Neural-Network Learning and Mark Twain's Cat," IEEE Communications Magazine, pp. 16-23, Sept. 1992.

[2] D. E. Rumelhart, G. E. Hinton and R. J. Williams, "Learning Internal Representations by Error Propagation," in D. E. Rumelhart and J. L. McClelland (Eds.), Parallel Distributed Processing, Vol. 1 (MIT Press, 1989).

[3] S. I. Gallant, Neural Network Learning and Expert Systems (MIT Press, 1993).

[4] R. Resnick, D. Halliday, Physics, Part I (John Wiley & Sons, 1973).

[5] Z. Schreter, "Connectionism: A Link Between Psychology and Neuroscience?" in J. Stender, T. Addis (Eds.), Symbols versus Neurons? (IOS Press, 1990).

[6] J. Barreto, T. Proychev, "Control of the Standing Position: A Neural Network Approach," Technical Report, Lab. of Neurophysiology, Medicine Faculty, University of Louvain, Brussels, prepared for the MUCOM (Multisensory Control of Movement, EU project), 1994.

[7] C. C. Klimasauskas, "Neural Networks: An Engineering Perspective," IEEE Communications Magazine, pp. 50-53, Sept. 1992.

[8] J. E. Moody, "The Effective Number of Parameters: An Analysis of Generalization and Regularization in Nonlinear Learning Systems," in J. E. Moody and S. J. Hanson (Eds.), Neural Information Processing Systems 4 (Morgan Kaufmann, 1992).

[9] J. M. Barreto, F. M. de Azevedo, C. I. Zanchin, "Neural Network Identification of Resonance Frequencies from Noise," in 9th Brazilian Congress on Automatics, pp. 840-844, Aug. 1992.
