
BUSINESS STATISTICS CLASS Lecturer: Dr. Phan Nguyen Ky Phuc

Regression Analysis

Problem: given a data set with one output (Y) and multiple inputs (X1, X2):

Y    72  76  78  70  68  80  82  65  62  90
X1   12  11  15  10  11  16  14   8   8  18
X2    5   8   6   5   3   9  12   4   3  10

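Assume that the relationship between the output and the inputs is linear, so that it can be expressed as

$$Y = \beta_0 + \sum_{i=1}^{k} \beta_i X_i + \varepsilon$$

where $\varepsilon \sim N(0, \sigma^2)$ can be interpreted as noise. In this model, $\beta_0, \beta_1, \beta_2, \ldots, \beta_k$ are unknown.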

Our objective is therefore to build a prediction model of Y that produces the smallest possible error on the given data set.

Assume that our forecasting model is

$$\hat{Y} = b_0 + \sum_{i=1}^{k} b_i X_i$$

The squared error between the forecast and the observed output of a single record is therefore

$$\text{error}^2 = (\hat{Y} - Y)^2 = \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right)^2$$

For example, for the 1st record ($Y = 72$, $X_1 = 12$, $X_2 = 5$) the squared error is

$$\text{error}^2 = (\hat{Y} - Y)^2 = (b_0 + 12 b_1 + 5 b_2 - 72)^2$$
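A tiny Python sketch of this single-record error follows. The function name `first_record_sq_error` is hypothetical, and the coefficient values plugged in are the fitted values reported later in these notes, used here only to show that the error for this record is small.

```python
def first_record_sq_error(b0: float, b1: float, b2: float) -> float:
    """Squared error (Y_hat - Y)^2 for record 1: Y = 72, X1 = 12, X2 = 5."""
    y_hat = b0 + 12 * b1 + 5 * b2  # forecast for record 1
    return (y_hat - 72) ** 2


# With the fitted coefficients the forecast is 72.0964, so the error is small.
print(round(first_record_sq_error(47.1649, 1.5990, 1.1487), 5))  # prints 0.00929
```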

Since we consider the whole data set, the squared errors must be summed over all records:

$$L = \sum_{n} \text{error}^2 = \sum_{n} (\hat{Y} - Y)^2 = \sum_{n} \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right)^2$$

where the outer sum runs over the $n$ records in the data set. For the above data set, $n = 10$.
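The total loss $L$ can be sketched in plain Python as below (the names are illustrative, not from the notes). It loops over the ten records and sums the squared errors; evaluating it at the fitted coefficients reported later gives roughly the SSE of the ANOVA table, while any other coefficient choice gives a larger value.

```python
# Data set from the problem statement (n = 10 records, k = 2 inputs).
Y = [72, 76, 78, 70, 68, 80, 82, 65, 62, 90]
X1 = [12, 11, 15, 10, 11, 16, 14, 8, 8, 18]
X2 = [5, 8, 6, 5, 3, 9, 12, 4, 3, 10]


def loss(b0: float, b1: float, b2: float) -> float:
    """L = sum over all records of (b0 + b1*X1 + b2*X2 - Y)^2."""
    total = 0.0
    for y, x1, x2 in zip(Y, X1, X2):
        total += (b0 + b1 * x1 + b2 * x2 - y) ** 2
    return total


print(loss(47.1649, 1.5990, 1.1487))  # about 25.56, the minimum of L
print(loss(50.0, 1.5, 1.0))           # another choice gives a larger L
```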


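$$\frac{\partial L}{\partial b_0} = 2 \sum_{n} \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right), \qquad \frac{\partial L}{\partial b_j} = 2 \sum_{n} X_j \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right), \quad j = 1, \ldots, k$$

$$\sum_{n} \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right) = 0, \qquad \sum_{n} X_j \left(b_0 + \sum_{i=1}^{k} b_i X_i - Y\right) = 0$$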

To minimize the sum of squared errors, we take the first derivative of $L$ with respect to each coefficient and set it equal to zero, as above. Solving the resulting system of $k + 1$ linear equations gives the values of $b_0, b_1, \ldots, b_k$.
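For the example data set ($n = 10$, $k = 2$), these conditions become three linear equations in $b_0, b_1, b_2$. Their coefficients are $n$, $\sum X_1 = 123$, $\sum X_2 = 65$, $\sum X_1^2 = 1615$, $\sum X_1 X_2 = 869$, $\sum X_2^2 = 509$, and the right-hand sides are $\sum Y = 743$, $\sum X_1 Y = 9382$, $\sum X_2 Y = 5040$, all computed from the data table:

$$
\begin{aligned}
10\,b_0 + 123\,b_1 + 65\,b_2 &= 743 \\
123\,b_0 + 1615\,b_1 + 869\,b_2 &= 9382 \\
65\,b_0 + 869\,b_1 + 509\,b_2 &= 5040
\end{aligned}
$$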

For the example data set this gives

$b_0 = 47.1649$, $b_1 = 1.5990$, $b_2 = 1.1487$

so the fitted model is $\hat{Y} = 47.1649 + 1.5990 X_1 + 1.1487 X_2$.
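The notes do not say which software produced these values. As an assumption, here is a NumPy sketch that builds the design matrix (a column of ones for $b_0$ plus one column per input) and solves the normal equations $(X^T X)\,b = X^T Y$ directly:

```python
import numpy as np

# Data set from the problem statement.
y = np.array([72, 76, 78, 70, 68, 80, 82, 65, 62, 90], dtype=float)
x1 = np.array([12, 11, 15, 10, 11, 16, 14, 8, 8, 18], dtype=float)
x2 = np.array([5, 8, 6, 5, 3, 9, 12, 4, 3, 10], dtype=float)

# Design matrix: a column of ones for b0, then one column per input.
X = np.column_stack([np.ones_like(x1), x1, x2])

# Normal equations (X^T X) b = X^T y, i.e. the zero-derivative conditions.
b = np.linalg.solve(X.T @ X, X.T @ y)
print(np.round(b, 4))  # approximately b0 = 47.1649, b1 = 1.5990, b2 = 1.1487
```

In practice, `np.linalg.lstsq(X, y, rcond=None)` solves the same least-squares problem without forming $X^T X$ explicitly, which is numerically safer when the inputs are strongly correlated.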

The next two concerns are:

- Whether this regression model is valid (i.e., good enough to explain the data).
- Whether we can exclude some inputs, i.e., simplify the current model while still explaining the data.

To answer the 1st concern, we use the F-test.

ANOVA Table for Multiple Regression

Source of Variation   Sum of Squares   Dof         Mean Square               F Ratio
Regression            SSR              k           MSR = SSR / k             F = MSR / MSE
Error                 SSE              n - (k+1)   MSE = SSE / (n - (k+1))
Total                 SST              n - 1

n: number of data records

k: number of inputs

$$R^2 = 1 - \frac{SSE}{SST}, \qquad R^2_{adj} = 1 - \frac{MSE}{MST}, \quad \text{where } MST = \frac{SST}{n-1}$$

In the above example k=2, n=10.

$$\sum_{i=1}^{n} (y_i - \bar{y})^2 = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 + \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2$$

SST (total sum of squares) = SSE (sum of squares for error) + SSR (sum of squares for regression)
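A short NumPy sketch (an illustration, not the software used in these notes) that computes the three sums of squares for the example and checks that SST = SSE + SSR. This identity relies on the model including the intercept $b_0$.

```python
import numpy as np

y = np.array([72, 76, 78, 70, 68, 80, 82, 65, 62, 90], dtype=float)
x1 = np.array([12, 11, 15, 10, 11, 16, 14, 8, 8, 18], dtype=float)
x2 = np.array([5, 8, 6, 5, 3, 9, 12, 4, 3, 10], dtype=float)

# Least-squares fit of Y_hat = b0 + b1*X1 + b2*X2.
X = np.column_stack([np.ones_like(x1), x1, x2])
b, *_ = np.linalg.lstsq(X, y, rcond=None)
y_hat = X @ b
y_bar = y.mean()

sst = np.sum((y - y_bar) ** 2)      # total sum of squares
sse = np.sum((y - y_hat) ** 2)      # sum of squares for error
ssr = np.sum((y_hat - y_bar) ** 2)  # sum of squares for regression

print(round(sst, 2), round(sse, 2), round(ssr, 2))
print(np.isclose(sst, sse + ssr))   # decomposition check
```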


ANOVA table

Source       Dof   SS       MS       F       p
Regression   2     630.54   315.27   86.34   0.000
Error        7     25.56    3.65
Total        9     656.10

R-sq = 96.1%   R-sq(adj) = 95.0%
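The entries of this table follow directly from the definitions of MSR, MSE, F, $R^2$ and $R^2_{adj}$. The sketch below is an assumption-based NumPy illustration; it leaves out the p-value because that needs the upper tail of an F distribution with $k$ and $n - (k+1)$ degrees of freedom (with SciPy, `scipy.stats.f.sf(f_ratio, k, n - k - 1)` would compute it).

```python
import numpy as np

y = np.array([72, 76, 78, 70, 68, 80, 82, 65, 62, 90], dtype=float)
x1 = np.array([12, 11, 15, 10, 11, 16, 14, 8, 8, 18], dtype=float)
x2 = np.array([5, 8, 6, 5, 3, 9, 12, 4, 3, 10], dtype=float)
X = np.column_stack([np.ones_like(x1), x1, x2])

n, p = X.shape  # p = k + 1 coefficients
k = p - 1       # number of inputs

b, *_ = np.linalg.lstsq(X, y, rcond=None)
sst = np.sum((y - y.mean()) ** 2)
sse = np.sum((y - X @ b) ** 2)
ssr = sst - sse

msr = ssr / k
mse = sse / (n - (k + 1))
f_ratio = msr / mse
r_sq = 1 - sse / sst
r_sq_adj = 1 - mse / (sst / (n - 1))  # MST = SST / (n - 1)

print(f"MSR = {msr:.2f}, MSE = {mse:.2f}, F = {f_ratio:.2f}")
print(f"R-sq = {r_sq:.1%}, R-sq(adj) = {r_sq_adj:.1%}")
```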

To answer the 2nd concern, we use a t-test for each coefficient. In the t-test for coefficient $i$, the hypotheses are $H_0: \beta_i = 0$ versus $H_1: \beta_i \neq 0$. Running the regression software on the above data set, we obtain:

Predictor   Coef     Stdev    t-ratio   p
Constant    47.165   2.470    19.09     0.000
X1          1.5990   0.2810   5.69      0.000
X2          1.1487   0.3052   3.76      0.007

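The t-ratio is each coefficient divided by its standard deviation. To look up the critical value in the t-table, we use the degrees of freedom of SSE, here $n - (k + 1) = 7$.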
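The Stdev column can be reproduced with the standard OLS result $\operatorname{Var}(b) = MSE \cdot (X^T X)^{-1}$, which these notes do not derive; the NumPy sketch below assumes it.

```python
import numpy as np

y = np.array([72, 76, 78, 70, 68, 80, 82, 65, 62, 90], dtype=float)
x1 = np.array([12, 11, 15, 10, 11, 16, 14, 8, 8, 18], dtype=float)
x2 = np.array([5, 8, 6, 5, 3, 9, 12, 4, 3, 10], dtype=float)
X = np.column_stack([np.ones_like(x1), x1, x2])

n, p = X.shape
b, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ b
mse = residuals @ residuals / (n - p)  # dof of SSE = n - (k + 1) = 7

# Var(b) = MSE * (X^T X)^{-1}; standard deviations are the sqrt of its diagonal.
cov_b = mse * np.linalg.inv(X.T @ X)
stdev = np.sqrt(np.diag(cov_b))
t_ratio = b / stdev  # t = coefficient / standard deviation

for name, coef, sd, t in zip(["Constant", "X1", "X2"], b, stdev, t_ratio):
    print(f"{name:<9} {coef:8.4f} {sd:7.4f} {t:6.2f}")
```

With 7 degrees of freedom, the two-sided 5% critical value from the t-table is about 2.365. All three |t| values exceed it, which agrees with the p-values below 0.05 in the table above.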

You might also like

- Bayesian Inference For Categorical Data PDFDocument48 pagesBayesian Inference For Categorical Data PDFLili CRNo ratings yet

- SolutionsManual-Statistical and Adaptive Signal ProcessingDocument467 pagesSolutionsManual-Statistical and Adaptive Signal ProcessingkedirNo ratings yet

- Data Mining CaseDocument8 pagesData Mining CaseRoh MerNo ratings yet

- Test Bank For Foundations in Strategic Management 6th Edition Jeffrey S. HarrisonDocument9 pagesTest Bank For Foundations in Strategic Management 6th Edition Jeffrey S. HarrisonNhibinhNo ratings yet

- Do You Think That Hello Kitty Will Continue To Rule The World? What Are The Pros and Cons?Document6 pagesDo You Think That Hello Kitty Will Continue To Rule The World? What Are The Pros and Cons?NhibinhNo ratings yet

- Materi 3 - Multiple Regression-FixedDocument68 pagesMateri 3 - Multiple Regression-FixedThe Sun Will RiseNo ratings yet

- Linear Regression With One RegressionDocument42 pagesLinear Regression With One RegressionRa'fat JalladNo ratings yet

- MLR ProbsDocument45 pagesMLR Probstshilidzimulaudzi73No ratings yet

- Probability and Statistics Project 1 - Topic 6Document23 pagesProbability and Statistics Project 1 - Topic 6Thảo Ly NgNo ratings yet

- Linear ModelsDocument92 pagesLinear ModelsCART11No ratings yet

- Multivariate RegressionDocument20 pagesMultivariate RegressionTosharChaudharyNo ratings yet

- TCH442E Quantitative Methods For Finance: Last Lecture: NextDocument13 pagesTCH442E Quantitative Methods For Finance: Last Lecture: NextNgọc Mai VũNo ratings yet

- 2092 4033 1 SMDocument20 pages2092 4033 1 SMyonomasNo ratings yet

- 50 Years of FFT Algorithms and Applications.Document34 pages50 Years of FFT Algorithms and Applications.Alvaro CotaquispeNo ratings yet

- Multiple Linear RegressionDocument18 pagesMultiple Linear RegressionWERU JOAN NYOKABINo ratings yet

- QM All PDFDocument69 pagesQM All PDFtuhina27No ratings yet

- EconometricsII ExercisesDocument27 pagesEconometricsII ExercisesAkriti TrivediNo ratings yet

- A5 Coal MineDocument7 pagesA5 Coal MineBridge Iit At RanchiNo ratings yet

- Re Ning The Stern Diatomic Sequence - Richard Stanley, Herbert WilfDocument10 pagesRe Ning The Stern Diatomic Sequence - Richard Stanley, Herbert Wilfgauss202No ratings yet

- Wavelets Based On Orthogonal PolynomialsDocument26 pagesWavelets Based On Orthogonal PolynomialsShabbir ChaudharyNo ratings yet

- Multiple Hypotesis TestingDocument4 pagesMultiple Hypotesis Testingfrapass99No ratings yet

- Mathematical Tripos: at The End of The ExaminationDocument27 pagesMathematical Tripos: at The End of The ExaminationDedliNo ratings yet

- Experiment-11: Question: The Effect of 5 Ingredients (A, B, C, D, E) On The Reaction Time of A Chemical ProcessDocument6 pagesExperiment-11: Question: The Effect of 5 Ingredients (A, B, C, D, E) On The Reaction Time of A Chemical ProcessQuoraNo ratings yet

- V. - A. - Marchenko - A. - Boutet - de - Monvel - H. - McKean - ( (BookFi) PDFDocument403 pagesV. - A. - Marchenko - A. - Boutet - de - Monvel - H. - McKean - ( (BookFi) PDFHerman HermanNo ratings yet

- Chapter 02Document14 pagesChapter 02Joe Di NapoliNo ratings yet

- 2101 F 17 Assignment 1Document8 pages2101 F 17 Assignment 1dflamsheepsNo ratings yet

- 0802 0691 PDFDocument21 pages0802 0691 PDFGalina AlexeevaNo ratings yet

- Prueba de Hipótesis en La Regresión Lineal Simple: Universidad Industrial de SantanderDocument4 pagesPrueba de Hipótesis en La Regresión Lineal Simple: Universidad Industrial de Santandermafe veraNo ratings yet

- MT1 F13 v2 SolvedDocument8 pagesMT1 F13 v2 SolvedPPPNo ratings yet

- Linear Regression: March 28, 2013Document17 pagesLinear Regression: March 28, 2013dulanjaya jayalathNo ratings yet

- D Linear Regression With RDocument9 pagesD Linear Regression With RBùi Nguyên HoàngNo ratings yet

- The DC Power Flow EquationsDocument25 pagesThe DC Power Flow EquationsDaryAntoNo ratings yet

- Dyadic Wavelets and Refinable Functions On A Half-LineDocument30 pagesDyadic Wavelets and Refinable Functions On A Half-LineukoszapavlinjeNo ratings yet

- Stochastic Calculus Midterm Exam: Prof. D. Filipovi C, E. Hapnes 29.10.2019Document2 pagesStochastic Calculus Midterm Exam: Prof. D. Filipovi C, E. Hapnes 29.10.2019UasdafNo ratings yet

- CombinepdfDocument8 pagesCombinepdfkattarhindu1011No ratings yet

- Midterm 2019Document2 pagesMidterm 2019UasdafNo ratings yet

- Dimension Reduction and Hidden Structure: 1.1 Principal Component Analysis (PCA)Document40 pagesDimension Reduction and Hidden Structure: 1.1 Principal Component Analysis (PCA)SNo ratings yet

- JpegDocument28 pagesJpegSayem HasanNo ratings yet

- PT 3 Su 05 SolnsDocument5 pagesPT 3 Su 05 SolnsjustanaltaccntNo ratings yet

- Exam - Time Series AnalysisDocument8 pagesExam - Time Series AnalysisSheehan Dominic HanrahanNo ratings yet

- HW 0Document2 pagesHW 0Tushar GargNo ratings yet

- Simple Linear Regression 69Document69 pagesSimple Linear Regression 69Härêm ÔdNo ratings yet

- hw1 PDFDocument3 pageshw1 PDF何明涛No ratings yet

- Quantitative Methods 2018-2021Document32 pagesQuantitative Methods 2018-2021Gabriel RoblesNo ratings yet

- Assignment 1 (Concept) : Solutions: Note, Throughout Exercises 1 To 4, N Denotes The Input Size of A Problem. (10%)Document6 pagesAssignment 1 (Concept) : Solutions: Note, Throughout Exercises 1 To 4, N Denotes The Input Size of A Problem. (10%)Aijin JiangNo ratings yet

- Metrics Aug 2023Document10 pagesMetrics Aug 2023Ahmed leoNo ratings yet

- Second Order TransientsDocument6 pagesSecond Order Transientsmusy1233No ratings yet

- MultipleRegression 1Document40 pagesMultipleRegression 1Silmi AzmiNo ratings yet

- Least Squares TechniqueDocument9 pagesLeast Squares TechniqueBigNo ratings yet

- Wave Eq 2Document9 pagesWave Eq 2nithila bhaskerNo ratings yet

- Topic 3: Simple Linear RegressionDocument19 pagesTopic 3: Simple Linear RegressionSouleymane CoulibalyNo ratings yet

- Regression: Building Experimental ModelsDocument47 pagesRegression: Building Experimental ModelsOnur KirpiciNo ratings yet

- Math644 - Chapter 1 - Part2 PDFDocument14 pagesMath644 - Chapter 1 - Part2 PDFaftab20No ratings yet

- Reading 11: Correlation and Simple Regression: Calculate and Interpret The FollowingDocument15 pagesReading 11: Correlation and Simple Regression: Calculate and Interpret The FollowingACarringtonNo ratings yet

- Ecn 2311Document6 pagesEcn 2311Misheck muwawaNo ratings yet

- InterpolationDocument54 pagesInterpolationEdwin Okoampa BoaduNo ratings yet

- Computer Vision: Spring 2006 15-385,-685Document58 pagesComputer Vision: Spring 2006 15-385,-685minh_neuNo ratings yet

- Business Stat & Emetrics - Inference in RegressionDocument7 pagesBusiness Stat & Emetrics - Inference in RegressionkasimNo ratings yet

- Project 3Document3 pagesProject 3igerhard23No ratings yet

- Lecture 13. The 2 Factorial Design: Jesper Ryd enDocument22 pagesLecture 13. The 2 Factorial Design: Jesper Ryd enchashyNo ratings yet

- Finite Difference Methods Mixed Boundary ConditionDocument6 pagesFinite Difference Methods Mixed Boundary ConditionShivam SharmaNo ratings yet

- Slides 3Document38 pagesSlides 3creation portalNo ratings yet

- E9 205 - Machine Learning For Signal Processing: Midterm ExamDocument3 pagesE9 205 - Machine Learning For Signal Processing: Midterm ExamSaurav SinghNo ratings yet

- Green's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)From EverandGreen's Function Estimates for Lattice Schrödinger Operators and Applications. (AM-158)No ratings yet

- HomeworkW4 BADocument1 pageHomeworkW4 BANhibinhNo ratings yet

- 3 Circles AnalysisDocument3 pages3 Circles AnalysisNhibinhNo ratings yet

- Chap 14 Interviewing and Follow UpDocument20 pagesChap 14 Interviewing and Follow UpNhibinhNo ratings yet

- Chap 9 Informal ReportsDocument28 pagesChap 9 Informal ReportsNhibinhNo ratings yet

- Culture and Strategy ReadingsDocument6 pagesCulture and Strategy ReadingsNhibinhNo ratings yet

- Chap 13 The Job Search, Resumes, and Cover LetterDocument77 pagesChap 13 The Job Search, Resumes, and Cover LetterNhibinh100% (1)

- HRMDocument7 pagesHRMNhibinhNo ratings yet

- ilbfilDersNotu53 PDFDocument21 pagesilbfilDersNotu53 PDFNhibinhNo ratings yet

- DesignDocument33 pagesDesignNhibinhNo ratings yet

- Chapter 7 - TrainingDocument32 pagesChapter 7 - TrainingNhibinhNo ratings yet

- Chapter 7 - TrainingDocument32 pagesChapter 7 - TrainingNhibinhNo ratings yet

- Gosser D. R For Quantitative Chemistry 2023Document119 pagesGosser D. R For Quantitative Chemistry 2023jobpei2No ratings yet

- Thirty Years of Heteroskedasticity-Robust Inference: James G. MackinnonDocument25 pagesThirty Years of Heteroskedasticity-Robust Inference: James G. MackinnonKadirNo ratings yet

- Kinetics of PolyesterificationDocument7 pagesKinetics of Polyesterificationbmvogel95No ratings yet

- Empirical Research On Perceived Popularity of Tiktok in IndiaDocument6 pagesEmpirical Research On Perceived Popularity of Tiktok in IndiaGrasiawainNo ratings yet

- Chapter 7 (I) Correlation and Regression Model - Oct21Document23 pagesChapter 7 (I) Correlation and Regression Model - Oct21Nguyễn LyNo ratings yet

- Syllabus STA 36-202 - Statistics & Data Science Methods Spring 2018Document15 pagesSyllabus STA 36-202 - Statistics & Data Science Methods Spring 2018Bao GanNo ratings yet

- AI-900 Exam - Free Actual Q&As, Page 1 - ExamTopicsDocument88 pagesAI-900 Exam - Free Actual Q&As, Page 1 - ExamTopicsalexamariaa4No ratings yet

- Factors Associated With Obstetric Fistula Among ReDocument15 pagesFactors Associated With Obstetric Fistula Among Releta jimaNo ratings yet

- Statistical Analysis Using Gnumeric: Entering Data and Calculating ValuesDocument18 pagesStatistical Analysis Using Gnumeric: Entering Data and Calculating ValuesandriuzNo ratings yet

- Review Jurnal - Cost of Capital - Junan MutamadraDocument11 pagesReview Jurnal - Cost of Capital - Junan MutamadraJunan MutamadraNo ratings yet

- QAM Chapter04 Regression ModelsDocument82 pagesQAM Chapter04 Regression ModelsdavidpamanNo ratings yet

- Business Fundamentals Course - CORe - HBS OnlineDocument7 pagesBusiness Fundamentals Course - CORe - HBS OnlineWong kang xianNo ratings yet

- Homework #3 - Answers Economics 113 Introduction To Econometrics Professor Spearot Due Wednesday, October 29th, 2008 - Beginning of ClassDocument2 pagesHomework #3 - Answers Economics 113 Introduction To Econometrics Professor Spearot Due Wednesday, October 29th, 2008 - Beginning of ClassCheung TiffanyNo ratings yet

- Rosin Rammler RegressionDocument5 pagesRosin Rammler RegressionPEDRO HERNANDEZ RANGELNo ratings yet

- Tidyverse: Core Packages in TidyverseDocument8 pagesTidyverse: Core Packages in TidyverseAbhishekNo ratings yet

- Demand Estimation and ForcastingDocument43 pagesDemand Estimation and ForcastingKunal TayalNo ratings yet

- PDF Introductury Econometrics A Modern Approach 7Th Edition Jeffrey M Wooldridge Ebook Full ChapterDocument53 pagesPDF Introductury Econometrics A Modern Approach 7Th Edition Jeffrey M Wooldridge Ebook Full Chaptersue.madruga801100% (3)

- Regression: NotesDocument5 pagesRegression: NotesBENNY WAHYUDINo ratings yet

- BT307 Biological Data Analysis Assignment 1Document2 pagesBT307 Biological Data Analysis Assignment 1Karthik KashalNo ratings yet

- Determination of Metal From Various Samples Using Atomic Absorption SpectrosDocument8 pagesDetermination of Metal From Various Samples Using Atomic Absorption SpectrosPatricia DavidNo ratings yet

- Chapter 3 Cost Behavior Analysis and UseDocument45 pagesChapter 3 Cost Behavior Analysis and UseMarriel Fate Cullano100% (1)

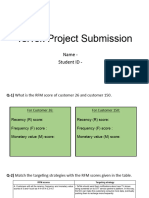

- Teltek Project SubmissionDocument12 pagesTeltek Project Submissionanuj.sarabhaiNo ratings yet

- Mplus Users Guide v6Document758 pagesMplus Users Guide v6Marco BardusNo ratings yet

- Oracle Demantra Advanced Forecasting and Demand Modeling: Key FeaturesDocument3 pagesOracle Demantra Advanced Forecasting and Demand Modeling: Key FeaturesjhlaravNo ratings yet

- Advanced StatisticsDocument125 pagesAdvanced StatisticsJose GallardoNo ratings yet

- Crop Yield Prediction Using ML AlgorithmsDocument8 pagesCrop Yield Prediction Using ML Algorithms385swayamNo ratings yet

- Econometric Analysis of Panel Data: William Greene Department of Economics Stern School of BusinessDocument37 pagesEconometric Analysis of Panel Data: William Greene Department of Economics Stern School of BusinessWaleed Said SolimanNo ratings yet

- Unit 4 FR ReviewDocument5 pagesUnit 4 FR Reviewapi-646571488No ratings yet