Root Split For The Iris Data Set

Uploaded by Muhammad Bassam


1. Explain precisely what is being decreased (I don’t mean “impurity”) when we select the best split using the Gini index. Be precise and to the point. (“Decrease in impurity” or any variation of that is not the answer.)

2. Show that the Gini impurity function for binary classification with two classes C1 and C2 can be simplified to Gini(T) = 2p(1−p), where p is the relative frequency of class C1 in T.
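A quick numerical sanity check can complement the algebraic proof the problem asks for, though it does not replace it. The sketch below assumes the standard definition of Gini impurity, one minus the sum of squared class frequencies, and compares it with the two-class form on a grid of values of p. The function names `gini` and `gini_binary` are illustrative and do not come from the assignment.

```python
import math


def gini(probabilities):
    """General Gini impurity: 1 minus the sum of squared class frequencies."""
    return 1.0 - sum(p * p for p in probabilities)


def gini_binary(p):
    """Two-class form from the problem statement; p is the frequency of C1."""
    return 2.0 * p * (1.0 - p)


# Compare both forms for p = 0.0, 0.1, ..., 1.0.
for k in range(11):
    p = k / 10
    assert math.isclose(gini([p, 1.0 - p]), gini_binary(p), abs_tol=1e-12)
```

Agreement on a grid of points is only evidence; the exercise still requires expanding the definition and simplifying.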

3. Calculate the maximum entropy for a set containing a mixture of four classes. Repeat the calculation for n classes; simplify the final formula as much as possible (down to a single “log”).
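To check a hand calculation, entropy can be evaluated numerically. The sketch below assumes Shannon entropy with base-2 logarithms and relies on the standard fact that entropy is largest when all classes are equally frequent. The helper names `entropy` and `uniform` are illustrative, not from the assignment.

```python
import math


def entropy(probabilities):
    """Shannon entropy in bits; zero-probability classes contribute nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


def uniform(n):
    """Equal mixture of n classes."""
    return [1.0 / n] * n


# Print the entropy of an equal mixture for a few class counts.
for n in (2, 4, 8):
    print(n, entropy(uniform(n)))

# A skewed four-class mixture has lower entropy than the equal mixture.
print(entropy([0.7, 0.1, 0.1, 0.1]) < entropy(uniform(4)))
```

Comparing the printed values with the closed form derived by hand is a simple way to catch algebra slips.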

4. Explain why gain ratio is the preferred measure for selecting the best split when using Gini or entropy as the purity function. What problem can arise if we don’t use it, and why is that problem bad? Is gain ratio needed in all types of decision trees that use Gini or entropy, or are some versions immune?
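For readers who want to experiment before answering, here is a minimal sketch of gain ratio. It assumes the C4.5-style definition: information gain divided by split information, where split information is the entropy of the partition sizes. The class counts are hypothetical and are not taken from any figure in this assignment; `id_like` imitates an attribute that gives every record its own branch.

```python
import math


def entropy(counts):
    """Shannon entropy in bits, computed from raw counts."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the children."""
    n = sum(parent)
    weighted = sum(sum(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


def split_information(children):
    """Entropy of the partition sizes (assumed C4.5-style definition)."""
    return entropy([sum(child) for child in children])


def gain_ratio(parent, children):
    return information_gain(parent, children) / split_information(children)


parent = [4, 4]                        # hypothetical class counts
two_way = [[4, 1], [0, 3]]             # hypothetical binary split
id_like = [[1, 0]] * 4 + [[0, 1]] * 4  # one record per branch

for name, split in (("two_way", two_way), ("id_like", id_like)):
    print(name, information_gain(parent, split), gain_ratio(parent, split))
```

On these counts, information gain ranks `id_like` higher, while gain ratio ranks `two_way` higher.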

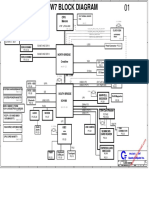

5. The split in Figure 1 is the split at the root of the decision tree for the Iris data set generated by the Scikit-learn DecisionTreeClassifier().

Please calculate the following measures for this split. Note: Keep in mind that the Iris data set has three classes. In the figure, they are reported in the order [setosa, versicolor, virginica].

a. Purity gain using the Gini impurity function.

b. Information gain.

Figure 1: Root split for the Iris data set
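Both measures in Problem 5 follow the same pattern: the impurity of the parent minus the size-weighted impurity of the children. The sketch below shows that pattern for three classes. The counts are hypothetical and deliberately are not the values in Figure 1, and the helper names are illustrative.

```python
import math


def gini(counts):
    """Gini impurity from raw class counts."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


def entropy(counts):
    """Shannon entropy in bits from raw class counts."""
    total = sum(counts)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


def gain(impurity, parent, children):
    """Parent impurity minus the size-weighted impurity of the children."""
    n = sum(parent)
    weighted = sum(sum(child) / n * impurity(child) for child in children)
    return impurity(parent) - weighted


# Hypothetical three-class node and binary split (NOT the Figure 1 values).
parent = [6, 3, 3]
children = [[6, 0, 0], [0, 3, 3]]

print(gain(gini, parent, children))     # 0.375
print(gain(entropy, parent, children))  # 1.0
```

Substituting the class counts reported in the figure for `parent` and `children` gives a way to check the hand calculation.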

6. Use the Gini index to build a decision tree with multi-way splits using the training examples in Figure 2 below. Solve the problem by providing responses to the following prompts.

a. Explain why Customer ID should not be used as an attribute test condition.

b. Select the best split for the root. List/show all the splits you considered together with their corresponding values of the Gini index. Justify your selection for the root split condition.

c. Find all the remaining splits to construct a full decision tree where all leaves contain only a single class. Show all the splits that you considered and include the Gini index for each one. Assign a class to each leaf.

d. Did you have difficulty assigning classes to leaves in part (c)? Note that we cannot split a leaf if the records have identical attributes (see slide 78 “Pre-pruning: Stopping Criteria”). This situation is called a “clash” and there are various methods of dealing with it. In this assignment we just leave it as is, but how would you handle it if you were to encounter it in a real project?

e. Use the final tree to classify the record (F, Family, Small).

f. Suppose we use a stopping criterion that disallows leaves with fewer than two examples. Modify the tree accordingly and reclassify the record (F, Family, Small). (Note that there is no need to re-build the tree from scratch!)

g. Find the overall impurity of the tree in part (f).

Figure 2: Training set for Problem 6
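The Gini index of a multi-way split is the size-weighted average of the Gini impurity of each branch. The sketch below computes it for a categorical attribute. The records, the column names `id`, `size`, and `label`, and the helper names are all hypothetical; they are not the training set in Figure 2.

```python
from collections import Counter, defaultdict


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def gini_index(records, attribute, target):
    """Size-weighted Gini impurity after a multi-way split on `attribute`."""
    groups = defaultdict(list)
    for record in records:
        groups[record[attribute]].append(record[target])
    n = len(records)
    return sum(len(group) / n * gini(group) for group in groups.values())


# Hypothetical toy data (not Figure 2).
records = [
    {"id": 1, "size": "S", "label": "A"},
    {"id": 2, "size": "S", "label": "A"},
    {"id": 3, "size": "M", "label": "A"},
    {"id": 4, "size": "M", "label": "B"},
    {"id": 5, "size": "L", "label": "B"},
    {"id": 6, "size": "L", "label": "B"},
]

print(gini_index(records, "size", "label"))
print(gini_index(records, "id", "label"))
```

Running the same function over each candidate attribute gives the list of splits and Gini values that part (b) asks for. The value for the `id` column is worth comparing with the answer to part (a).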

7. Consider the set of training examples in Figure 3 below.

Figure 3: Training set for Problem 7

a. Compute a two-level decision tree (use tree depth/height as the stopping criterion) using the classification error rate as the splitting criterion. Calculate the overall error rate of the induced tree.

b. Repeat part (a) using X as the first splitting attribute and then choose the best remaining attribute for splitting at each of the two successor nodes. What is the error rate of the induced tree?

c. Compare the error rates of the trees induced in parts (a) and (b). Comment on the difference. [Hint: The error rates should be different.] What important property of decision tree algorithms does it illustrate?
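Classification error as a splitting criterion can be sketched as follows: the error of a node is one minus the frequency of its majority class, and a split is scored by the size-weighted error of its children. The counts below are hypothetical and are not the training set in Figure 3. Gini impurity is included only to show that the two criteria can score the same split differently.

```python
def error_rate(counts):
    """Classification error of a node: 1 minus the majority-class frequency."""
    return 1.0 - max(counts) / sum(counts)


def gini(counts):
    """Gini impurity from raw class counts."""
    total = sum(counts)
    return 1.0 - sum((c / total) ** 2 for c in counts)


def weighted(impurity, children):
    """Size-weighted impurity of the children of a split."""
    n = sum(sum(child) for child in children)
    return sum(sum(child) / n * impurity(child) for child in children)


# Hypothetical two-class node and binary split (NOT Figure 3).
parent = [7, 3]
children = [[3, 0], [4, 3]]

print(error_rate(parent), weighted(error_rate, children))
print(gini(parent), weighted(gini, children))
```

With these counts the weighted error rate of the children equals that of the parent, while the weighted Gini impurity drops.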

8. As a data scientist, you will have to use software and programming documentation on a daily basis. If you have not used online documentation in the past, the Scikit-learn documentation is a great place to start. It is truly excellent! For this problem, consult the Scikit-learn online documentation and describe the type of decision tree that it implements. Make sure to list which algorithm it implements, the accepted type of input, the type of target variable, the split selection function (impurity or error), the stopping criteria, the pruning method, the type of splits, etc.
