ISSN:2229-6093

Shridhar Kamble et al, Int.J.Computer Technology & Applications,Vol 5 (3),1112-1117

Sales Forecasting using Kernel Based Support Vector Machine Algorithm

Shridhar Kamble
Thakur College of Engineering, Mumbai, India
shridharkamble1@gmail.com

Aaditya Desai
Assistant Professor (IT), TCET, Mumbai, India
aaditya1982@gmail.com

Priya Vartak
Thakur College of Engineering, Mumbai, India
priyanvartak@gmail.com

ABSTRACT

Because of their wide range of applications, machine learning techniques are being researched more and more widely. This paper establishes a sales prediction model based on improved support vector machines to forecast sales data. Support vector regression provides globally optimal solutions, which helps avoid overtraining. To check the efficiency of the forecasting model, several accuracy measures are used, including Mean Absolute Percentage Error (MAPE), Mean Absolute Deviation (MAD) and Mean Squared Error (MSE).

An improved sales forecasting model was developed based on a kernel based support vector machine and other optimization parameters. A traditional SVM is a linear classifier; the kernel approach yields a non-linear classifier by fitting the maximum-margin hyperplane in a transformed feature space. Because of this non-linear capability, a kernel based SVM was used to build the forecasting model in order to improve forecast precision.

The forecasting model was tested on apparel sales data covering the period from 2009/01/01 to 2013/12/30. The same dataset was also run through several classical techniques alongside the kernel based support vector machine algorithm so that the results are comparable. The results show that the forecast efficiency of the kernel based support vector machine was better than that of the classical approaches and the traditional SVM.

Keywords
Simple Moving Average, Naïve Bayes, Exponential Smoothing, Adaptive Rate Smoothing, Weighted Moving Average, Machine Learning, SVM, MLP, Time series prediction, MAPE, MAD, MSE.

1. INTRODUCTION
Machine learning refers to a system that can automatically learn knowledge from experience and other sources. Classification and prediction are two forms of data analysis that can be used to extract models describing important data classes or to predict future data trends [3].

This paper carries out a performance analysis of classical and machine learning algorithms, including neural networks and SVM. These algorithms are used to classify actual sales data collected from an apparel manufacturing firm. The experiments use code implemented in C# in Visual Studio. The goal of this research is to find the classifier that outperforms the others in all respects and to use that algorithm to build a forecasting model that gives better forecasting results.

2. FORECASTING TECHNIQUES
Classification and prediction are two forms of data analysis that can be used to extract models describing important data classes or to predict future data trends. Such analysis can provide a better understanding of large data sets. Classification predicts categorical (discrete, unordered) labels, while prediction models continuous-valued functions. Classification techniques can process a wider variety of data than regression and are growing in popularity. Classification is also called supervised learning, because the instances are given with known labels, in contrast to unsupervised learning, in which the labels are not known. Each instance in the dataset used by a supervised or unsupervised learning method is represented by a set of features or attributes, which may be categorical or continuous [1] [2].

Classification is the process of building a model from a training set made up of database instances and their associated class labels. The resulting model is then used to predict the class labels of test instances for which the values of the predictor features are known. Supervised classification is one of the tasks most frequently carried out by intelligent techniques, and a large number of techniques have been developed for it.

2.1 Classical Techniques

2.1.1 Simple Moving Average
Simple Moving Average is the most basic forecasting method. The procedure for forecasting with a Simple Moving Average is as follows:

Take the data from the last N periods.
Average the data.
Use the average to forecast the next period.

$$ F_t = \frac{1}{N} \sum_{i=1}^{N} S_{t-i} \tag{1} $$

where $F_t$ is the forecast for period t, $S_{t-i}$ is the observed data at period t - i, and N is the number of time periods.

2.1.2 Weighted Moving Average
The simple moving average gives equal weight to all the data, whereas the weighted moving average can give more weight to more recent data.

IJCTA | May-June 2014 1112

Available online@www.ijcta.com

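$$ F_t = \sum_{i=1}^{N} w_{t-i} \, S_{t-i}, \qquad \sum_{i=1}^{N} w_{t-i} = 1 \tag{2} $$

where $w_{t-i}$ is the weight given to the data at period t - i.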
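A minimal Python sketch of the two moving-average forecasts, equations (1) and (2), is given below. It is an illustration under stated assumptions, not the authors' C# implementation: the function names, the list-based history (oldest value first) and the choice to normalise the weights inside the function are all hypothetical. The last weight applies to the most recent observation.

```python
from typing import Sequence


def simple_moving_average(history: Sequence[float], n: int) -> float:
    """Eq. (1): forecast F_t as the mean of the last n observations S_{t-1}..S_{t-n}."""
    if not 1 <= n <= len(history):
        raise ValueError("n must be between 1 and len(history)")
    window = history[-n:]  # the n most recent observations
    return sum(window) / n


def weighted_moving_average(history: Sequence[float], weights: Sequence[float]) -> float:
    """Eq. (2): forecast F_t as a weighted sum of the last len(weights) observations.

    weights[0] applies to the oldest value in the window and weights[-1] to the
    most recent one. The weights are divided by their total so that they sum to 1.
    """
    n = len(weights)
    if not 1 <= n <= len(history):
        raise ValueError("need between 1 and len(history) weights")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    window = history[-n:]
    return sum(w * s for w, s in zip(weights, window)) / total


if __name__ == "__main__":
    # Hypothetical monthly unit sales, oldest first (not data from the paper).
    units = [120, 130, 125, 140, 150, 145]
    print(simple_moving_average(units, 3))            # (140 + 150 + 145) / 3 = 145.0
    print(weighted_moving_average(units, [1, 2, 3]))  # (140 + 300 + 435) / 6, about 145.83
```

Weighting recent periods more heavily, as with [1, 2, 3], lets the forecast follow recent changes more closely than the equal-weight simple moving average.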

2.1.3 Exponential Smoothing
Exponential smoothing is a forecasting technique that weights the last forecast and the most recent data. It can be applied to time series data either to produce smoothed data for presentation or to make forecasts.

$$ F_t = F_{t-1} + \alpha \left( S_{t-1} - F_{t-1} \right), \qquad 0 < \alpha < 1 \tag{3} $$

where $\alpha$ is the smoothing parameter. If the actual data point was above the last forecast, the next forecast moves up; if the data point was below the last forecast, the next forecast moves down.

2.1.4 Adaptive Rate Smoothing
Adaptive rate smoothing modifies the exponential smoothing technique by adjusting the alpha smoothing parameter for each period's forecast through the inclusion of a tracking signal. This technique has been shown to respond much more quickly to step changes while retaining the ability to filter out random noise.

2.1.5 Naïve Bayes
A naive Bayes classifier is a simple probabilistic classifier based on applying Bayes' theorem with strong (naive) independence assumptions. A more descriptive term for the underlying probability model would be "independent feature model". Depending on the precise nature of the probability model, naive Bayes classifiers can be trained very efficiently in a supervised learning setting. In many practical applications, parameter estimation for naive Bayes models uses the method of maximum likelihood; in other words, one can work with the naive Bayes model without believing in Bayesian probability or using any Bayesian methods [2][3].

2.2 Neural Networks

2.2.1 ANN
An Artificial Neural Network (ANN) is a machine learning technique that is widely used in forecasting and supports multivariate analysis [7]. Much research over the past decade has aimed to optimize the use of ANNs for forecasting. ANNs show a great deal of promise, but their behavior is also uncertain: researchers are still not certain about the effect of key factors on the forecasting performance of ANNs.

Fig 1: Artificial Neural Network Architecture

The neural architecture consists of three or more layers: an input layer, one or more hidden layers and an output layer, as shown in Fig 1. The function of this network is described as follows:

$$ Y_j = f\left( \sum_{i} w_{ij} X_{ij} \right) \tag{4} $$

where $Y_j$ is the output of node j, f(.) is the transfer function, $w_{ij}$ is the connection weight between node j and node i in the lower layer, and $X_{ij}$ is the input signal from node i in the lower layer to node j.

Because ANNs support multivariate analysis, multivariate models can rely on richer information: not only the lagged values of the time series being forecast but also other indicators (such as technical, fundamental and inter-market indicators for financial markets) are combined to act as predictors. In addition, ANNs are more effective at describing the dynamics of non-stationary time series because of their non-parametric, assumption-free, noise-tolerant and adaptive properties. ANNs are universal function approximators that can approximate any nonlinear function without prior assumptions about the data [2].

2.3 Support Vector Machines (SVM)

2.3.1 Support Vector Machines (SVM)
The Support Vector Machine (SVM) is a machine learning technique from the family of classification methods, based on the construction of hyperplanes in a multidimensional space. SVM is a useful technique for data classification, regression and prediction. Given a set of training examples, each marked as belonging to one of two categories, an SVM training algorithm builds a model that predicts whether a new example falls into one category or the other. An SVM model represents the examples as points in space, mapped so that the examples of the separate categories are divided by a clear gap that is as wide as possible. New examples are then mapped into the same space and predicted to belong to a category based on which side of the gap they fall on.

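A linear support vector machine is composed of a set of given support vectors z and a set of weights w. The output of an SVM with N support vectors $z_1, z_2, \ldots, z_N$ and weights $w_1, w_2, \ldots, w_N$ is then given by

$$ f(x) = \sum_{i=1}^{N} w_i \langle z_i, x \rangle + b \tag{5} $$

where $\langle \cdot, \cdot \rangle$ denotes the inner product and b is a bias term.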
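A minimal Python sketch of the linear SVM output in equation (5) follows. It assumes the support vectors, weights and bias are already known from training (in a trained SVM each weight is typically the Lagrange multiplier times the class label). The names `svm_output`, `classify` and the toy model are hypothetical; the `inner` parameter marks the place where a kernel function would replace the plain dot product.

```python
from typing import Callable, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Ordinary inner product <a, b>."""
    return sum(x * y for x, y in zip(a, b))


def svm_output(x: Vector,
               support_vectors: Sequence[Vector],
               weights: Sequence[float],
               bias: float,
               inner: Callable[[Vector, Vector], float] = dot) -> float:
    """Eq. (5): f(x) = sum_i w_i * <z_i, x> + b over the N support vectors z_i."""
    return sum(w * inner(z, x) for w, z in zip(weights, support_vectors)) + bias


def classify(x: Vector, support_vectors: Sequence[Vector],
             weights: Sequence[float], bias: float) -> int:
    """Return +1 or -1 depending on which side of the hyperplane f(x) = 0 x falls on."""
    return 1 if svm_output(x, support_vectors, weights, bias) >= 0 else -1


if __name__ == "__main__":
    # Hypothetical, hand-picked model: one support vector per class.
    z = [(1.0, 1.0), (-1.0, -1.0)]
    w = [0.5, -0.5]
    b = 0.0
    print(svm_output((2.0, 1.0), z, w, b))  # 0.5*3 + (-0.5)*(-3) = 3.0
    print(classify((2.0, 1.0), z, w, b))    # 1
    print(classify((-1.0, -2.0), z, w, b))  # -1
```

The sign of f(x) decides the category, which matches the "side of the gap" description above; the magnitude of f(x) grows with the distance from the separating hyperplane.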

2.3.2 Kernel Based Support Vector Machines
The original optimal hyperplane algorithm proposed by Vladimir Vapnik in 1963 was a linear classifier. However, in 1992, Bernhard Boser, Isabelle Guyon and Vapnik suggested a way to create non-linear classifiers by applying the kernel trick (originally proposed by Aizerman et al.) to maximum-margin hyperplanes. The resulting algorithm is formally similar, except that every dot product is replaced by a non-linear kernel function. This allows the algorithm to fit the maximum-margin hyperplane in a transformed feature space. The transformation may be non-linear and the transformed space high-dimensional; thus, although the classifier is a hyperplane in the high-dimensional feature space, it may be non-linear in the original input space.

Using kernels, the output of an SVM with support vectors $z_1, z_2, \ldots, z_N$ and weights $w_1, w_2, \ldots, w_N$ is given by

$$ f(x) = \sum_{i=1}^{N} w_i \, k(z_i, x) + b \tag{6} $$

where $k(\cdot, \cdot)$ is the kernel function.

2.3.3 Standard Kernels

2.3.3.1 Linear Kernel
The linear kernel is the simplest kernel function. It is given by the common inner product <x, y> plus an optional constant c:

$$ k(x, y) = x^{\top} y + c \tag{7} $$

2.3.3.2 Polynomial Kernel
The polynomial kernel is a non-stationary kernel. It is well suited to problems where all the data is normalized:

$$ k(x, y) = \left( \alpha \, x^{\top} y + c \right)^{d} \tag{8} $$

where $\alpha$ is the slope, c is a constant term and d is the polynomial degree.

2.3.3.3 Gaussian Kernel
The Gaussian kernel is one of the most versatile kernels. It is a radial basis function kernel, and it is the preferred kernel when little is known about the data being modeled:

$$ k(x, y) = \exp\left( -\frac{\lVert x - y \rVert^{2}}{2\sigma^{2}} \right) \tag{9} $$

where $\sigma$ controls the width of the kernel.

3. FORECASTING METHODOLOGY
Predictive modeling is the process by which a model is created to predict an outcome. If the outcome is categorical, the task is called classification; if the outcome is numerical, it is called regression. A good forecasting system has qualities that distinguish it from other systems, and these qualities provide a useful basis for understanding why good forecasting systems outperform others over time. If users understand the rationale of a forecast, they can appraise its uncertainty and will know when to revise it in light of changing circumstances. The more accurate a forecast is, the better the decisions that depend on it.

Inaccurate forecasts lead to too much or too little capacity and can be very costly. Forecasts cost money, time and effort, so added expense must buy added accuracy, flexibility or insight. A sophisticated forecasting system requires ample staff resources and technical skills for maintenance. The choice of a forecasting system must therefore include a commitment to the resources necessary to maintain it, and should avoid systems the utility will be unable or unwilling to maintain. Earlier, statistical methods were used for forecasting: a time series model was constructed solely from the past values of the variables to be forecast.

Fig.2 Predictive Modeling using Data Mining

3.1 Learning Algorithm
John Platt proposed the Sequential Minimal Optimization (SMO) algorithm in 1998 for solving the quadratic programming (QP) problem that arises during the training of support vector machines. SMO is a simple algorithm that can quickly solve the SVM QP problem without any extra matrix storage and without using numerical QP optimization steps at all. It decomposes the overall QP problem into the smallest possible QP sub-problems, each involving only two Lagrange multipliers and solvable analytically, while still ensuring convergence [7].

4. EVALUATION PARAMETERS
To evaluate the performance of the forecasting techniques, several evaluation parameters are used, namely MAPE, MAD and MSE. The forecast error is the difference between the actual value and the forecast value for the corresponding period:

$$ E_t = Y_t - F_t \tag{7} $$

where $E_t$ is the forecast error at period t, $Y_t$ is the actual value at period t and $F_t$ is the forecast for period t.
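The forecast error and the three aggregate measures named in this section can be sketched in Python as follows. This assumes the usual textbook definitions: MAPE as the mean of |E_t / Y_t| (returned as a fraction; multiply by 100 for a percentage), MAD as the mean of |E_t|, and MSE as the mean of E_t squared. The function names and sample values are hypothetical, and MAPE assumes no actual value Y_t is zero.

```python
from typing import List, Sequence


def forecast_errors(actual: Sequence[float], forecast: Sequence[float]) -> List[float]:
    """E_t = Y_t - F_t for every period t."""
    if len(actual) != len(forecast) or len(actual) == 0:
        raise ValueError("actual and forecast must be non-empty and of equal length")
    return [y - f for y, f in zip(actual, forecast)]


def mape(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute percentage error as a fraction; undefined if any Y_t is 0."""
    errors = forecast_errors(actual, forecast)
    return sum(abs(e / y) for e, y in zip(errors, actual)) / len(errors)


def mad(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean absolute deviation: average size of the errors, in sales units."""
    errors = forecast_errors(actual, forecast)
    return sum(abs(e) for e in errors) / len(errors)


def mse(actual: Sequence[float], forecast: Sequence[float]) -> float:
    """Mean squared error: penalises large errors more heavily than MAD."""
    errors = forecast_errors(actual, forecast)
    return sum(e * e for e in errors) / len(errors)


if __name__ == "__main__":
    # Hypothetical actual and forecast values for three periods.
    actual = [100, 200, 400]
    forecast = [110, 190, 400]
    print(forecast_errors(actual, forecast))  # [-10, 10, 0]
    print(mad(actual, forecast))              # 20 / 3
    print(mse(actual, forecast))              # 200 / 3
    print(mape(actual, forecast))             # (0.10 + 0.05 + 0) / 3, about 0.05
```

MAPE is scale-free, so it allows comparison across products with different sales volumes, whereas MAD is expressed in sales units and MSE in squared sales units.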

The following are the measures of aggregate error.

Mean Absolute Percentage Error (MAPE)

$$ \mathrm{MAPE} = \frac{1}{n} \sum_{t=1}^{n} \left| \frac{E_t}{Y_t} \right| \tag{8} $$

Mean Absolute Deviation (MAD)

$$ \mathrm{MAD} = \frac{1}{n} \sum_{t=1}^{n} \left| E_t \right| \tag{9} $$

Mean Squared Error (MSE)

$$ \mathrm{MSE} = \frac{1}{n} \sum_{t=1}^{n} E_t^{2} \tag{10} $$

where n is the number of periods evaluated.

5. DATA PREPARATION
The classical algorithms (Simple Moving Average, Weighted Moving Average, Exponential Smoothing, Adaptive Rate Smoothing and Naïve Bayes) and the machine learning schemes (SVM and Artificial Neural Networks) are implemented in the C# programming language on the .NET platform using the Visual Studio IDE. A machine with the Windows 7 operating system and 2 GB of RAM is used to conduct the experiments and analyze the results. All experiments were rerun to ensure that the results are comparable. A detailed survey was carried out at "Creative Handicrafts", Mumbai, and data were collected on the actual sales of apparel manufactured by the company.

Fig. 3 Quarterly apparel's sales data

Three categories of apparel are manufactured, namely menswear, womenswear and kidswear. We selected the men's category from the product details. The product details along with the category are as follows:

TABLE I. PRODUCT DETAILS

Product   Category 1 (Men's)   Category 2 (Women's)   Category 3 (Kids)
P1        Shirts               Shirts                 Shirts
P2        Trousers             Trousers               Trousers
P3        T-Shirts             Dress                  T-shirts
P4        Jeans                Jeans                  Jeans
P5        Kurtas               Kurtas & Kurtis        Dress
P6        Jackets              Sarees                 Shorts

6. EXPERIMENTAL RESULTS
The proposed methodology is applied to real-world sales data: the company wants to predict the number of units that will be sold in the following month, quarter or year for 6 different products, depending on category. Consequently, we analyzed 5 time series representing monthly sales data, each covering the period from January 2009 to December 2013. Figure 3 shows one of these 5 series. We applied the proposed methodology to the 5 time series using the initial features (independent variables). As a result, we obtained for each series a different set of parameters describing the respective model.

Along with the application of our methodology using kernel based support vector machines (K-SVM), we applied the same framework to neural networks and to the classical techniques (of all the classical methods we selected only the Naïve approach, because its results were consistent with those of the other classical approaches). Tables II, III and IV show the accuracy error measures of mean absolute percentage error (MAPE), mean absolute deviation (MAD) and mean squared error (MSE), obtained over the test set, by using the 3 forecasting methods for predicting sales for six products. The best (lowest-error) result for each product is marked with an asterisk (*).

TABLE II. MEAN ABSOLUTE PERCENTAGE ERROR

Product   Classical (Naive)   ANN           K-SVM
P1        0.0792286           0.09923459    0.0743525*
P2        0.08635483          0.0874248     0.0542286*
P3        0.0992286           0.09835635    0.0835635*
P4        0.0862286           0.1032769     0.0785831*
P5        0.0892286           0.07792624*   0.0794248
P6        0.0972286           0.10841386    0.0941381*
Avg.      0.0842286           0.09559379    0.08291446

As Table II shows, the observed MAPE for K-SVM is lower than that of the other techniques except in a few cases.

TABLE III. MEAN ABSOLUTE DEVIATION

Product   Classical (Naive)   ANN           K-SVM
P1        0.2774248           0.25823926    0.2313461*
P2        0.2491538           0.29823926    0.2487574*
P3        0.2844138           0.2728239*    0.2918824
P4        0.2774248*          0.32823926    0.3182393
P5        0.2974148           0.30296282    0.2762824*
P6        0.2874871           0.29782393    0.2678239*
Avg.      0.2788865           0.29305473    0.27238858

In Table III, the observed MAD for K-SVM is lower than that of the classical technique and ANN except in a few cases.

TABLE IV. MEAN SQUARED ERROR

Product   Classical (Naive)   ANN           K-SVM
P1        119.11536           108.886481    99.886481*
P2        117.41536           102.64577*    104.936977
P3        118.17436           111.123645    89.123645*
P4        109.94336           116.239836    98.239836*
P5        109.88536*          111.465725    113.465725
P6        118.41536           106.917582    104.78652*
Avg.      115.49152           109.546506    101.739864

In Table IV, the observed MSE for K-SVM is lower than that of the classical technique and ANN except in a few cases.

Although the results are contradictory for some specific products, the average error levels show that the proposed K-SVM methodology outperforms the ANN and classical results for all three error measures: MAPE, MAD and MSE. This indicates the ability of the proposed methodology to provide accurate forecasts.

7. CONCLUSION
In this research paper, several time series forecasting methods are discussed, and a number of widely used techniques for time series modeling and forecasting (prediction) are compared. We implemented each technique and observed how each method works. The methods covered include pure statistical models such as SMA, Naïve, WMA and ES, and machine learning based methods such as Support Vector Machines and Neural Networks. Each method has its own strengths and weaknesses, depending on the approach it uses.

Different methods suit different situations, so the problem must be understood and interpreted before a technique is chosen to predict data. In particular, understanding the data prior to algorithm selection is a major rule of thumb in data mining and related fields. The performance of these algorithms can be improved in various ways: pruning decision spaces, cleaning and preprocessing the time series data, improving coding standards and distributing computation among different nodes are a few general improvements.

The conclusion at this point is that no single technique is perfect: there are situations where one method fits a problem better than another, but this may be different for another problem. So, to select a proper technique to analyze and forecast time series data, one should have a sound and comprehensive understanding of the problem domain.

Because forecasting is significant in many fields, such as stock prediction, medicine, biology, economics and many other cutting-edge research areas, researchers are contributing towards developing better approaches for time series prediction. The importance and impact of better time series prediction models are obvious, as making decisions about the future is becoming increasingly important and critical in many competitive organizational structures.

8. ACKNOWLEDGMENTS
This research paper was made possible through the help and support of Mr. Aaditya Desai. I would like to thank him for his valuable advice, guidance and help. Special thanks to Mr. Johny Joseph, Executive Officer of Creative Handicrafts, Mumbai, who gave us the opportunity to explore the company's procedures and to conduct surveys in the company.

9. REFERENCES

[1] Ian H. Witten, Eibe Frank, "Data Mining: Practical Machine Learning Tools and Techniques", 2nd Edition, Elsevier, 2005.
[2] Efraim Turban, Linda Volonino, "Information Technology for Management", 8th Edition, Wiley, 2009.
[3] Sunil Chopra and Peter Meindl, "Supply Chain Management", 2nd Edition, Upper Saddle River: Pearson Prentice Hall, 2004.
[4] John Geweke and Charles Whiteman, "Bayesian Forecasting", Chapter 1 in Handbook of Economic Forecasting, vol. 1, pp. 3-80, Elsevier, 2006.
[5] Réal Carbonneau, Rustam Vahidov, Kevin Laframboise, "Forecasting Supply Chain Demand Using Machine Learning Algorithms", Chapter 6.9.
[6] Trigg and Leach, "Exponential Smoothing with an Adaptive Response Rate", Operational Research, Vol. 18, No. 1, pp. 53-59, Mar. 1967.
[7] V. Vapnik, S. Golowich, and A. Smola, "Support vector method for function approximation, regression estimation, and signal processing", Neural Information Processing Systems, vol. 9, MIT Press, Cambridge, MA, 1997.
[8] L. Cao and F. Tay, "Financial forecasting using support vector machines", Neural Computing & Applications, vol. 10, pp. 184-192, 2001.
[9] D. C. Sansom, T. Downs and T. K. Saha, "Evaluation of support vector machine based forecasting tool in electricity price forecasting for Australian national electricity market participants", Journal of Electrical and Electronics Engineering, Australia, 22(3), pp. 227-234, 2003.
[10] Joarder Kamruzzaman and Ruhul A. Sarker, "ANN-Based Forecasting of Foreign Currency Exchange Rates", Neural Information Processing - Letters and Reviews, Vol. 3, No. 2, May 2004.
[11] C. D. Neagu, G. Guo, P. R. Trundle, M. T. D. Cronin, "A comparative study of machine learning algorithms applied to predictive toxicology data mining", Alternatives to Laboratory Animals: ATLA 35:1, pp. 25-32, 2007.
[12] Karpagavalli, Jamuna K and Vijaya MS, "Machine Learning Approach for Preoperative Anaesthetic Risk Prediction", International Journal of Recent Trends in Engineering, Vol. 1, No. 2, 2009.
[13] Cem Kadilar, Muammer Simsek and Cagdas Hakan Aladag, "Forecasting the exchange rate series with ANN: the case of Turkey", Istanbul University Econometrics and Statistics e-Journal, pp. 17-29, 2009.
[14] J. Shahrabi, S. S. Mousavi and M. Heydar, "Supply Chain Demand Forecasting: A Comparison of Machine Learning Techniques and Traditional Methods", Journal of Applied Sciences, Vol. 9, Issue 3, pp. 521-527, 2009.
[15] Hamid R. S. Mojaveri, Seyed S. Mousavi, Mojtaba Heydar, and Ahmad Aminian, "Validation and Selection between Machine Learning Technique and Traditional Methods to Reduce Bullwhip Effects: a Data Mining Approach", World Academy of Science, Engineering and Technology, Vol. 25, 2009.
[16] Yang Lan Qin and Xu Xin, "Research on the Price Prediction in Supply Chain based on Data Mining Technology", International Symposium on Instrumentation & Measurement, Sensor Network and Automation (Vol. 2), IEEE, 2012.
[17] Karin Kandananond, "Consumer Product Demand Forecasting based on Artificial Neural Network and Support Vector Machine", World Academy of Science, Engineering and Technology, 2012.
[18] John B. Guerard, Jr., "Introduction to Financial Forecasting in Investment Analysis", Springer New York, Online ISBN: 978-1-4614-5239-3, 2013.

Fig.4 Mean Absolute Percentage Error

Fig.5 Mean Absolute Deviation

Fig.6 Mean Squared Error


- Bayesian Learning: Salma Itagi, SvitDocument14 pagesBayesian Learning: Salma Itagi, SvitSuhas NSNo ratings yet

- COMP 6930 Topic01 Classification BasicsDocument190 pagesCOMP 6930 Topic01 Classification BasicsPersonNo ratings yet

- Real Estate Price Prediction With Regression and ClassificationDocument5 pagesReal Estate Price Prediction With Regression and Classificationsk3146No ratings yet

- Fusionex ADA (Day1) v3.1 2022Document106 pagesFusionex ADA (Day1) v3.1 2022izzudinrozNo ratings yet

- DMDW Case Study FinishedDocument28 pagesDMDW Case Study FinishedYOSEPH TEMESGEN ITANANo ratings yet

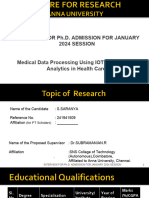

- S Saranya - (Ref No-241941509) - PH D Presentation Jan24Document20 pagesS Saranya - (Ref No-241941509) - PH D Presentation Jan24Shyam SundarNo ratings yet

- Automated Plant Disease Analysis (APDA) Performance Comparison of MachineDocument6 pagesAutomated Plant Disease Analysis (APDA) Performance Comparison of MachineSasi S INDIANo ratings yet

- Data Mining FullDocument19 pagesData Mining FullTejaswini ReddyNo ratings yet

- Advanced Python Training Content 2022Document4 pagesAdvanced Python Training Content 2022Rajat PugaliaNo ratings yet

- "Sentiment Analysis of Imdb Movie Reviews": A Project ReportDocument27 pages"Sentiment Analysis of Imdb Movie Reviews": A Project ReportAnimesh Kumar TilakNo ratings yet

- Spam Detection Framework For Android Twitter Application Using Naïve Bayes and K-Nearest Neighbor ClassifiersDocument6 pagesSpam Detection Framework For Android Twitter Application Using Naïve Bayes and K-Nearest Neighbor ClassifiersHaniAzzahraNo ratings yet

- Spam Detection Using Machine LearningDocument4 pagesSpam Detection Using Machine LearningLaxman BharateNo ratings yet

- Detecting Sarcasm in Text - An Obvious Solution To A Trivial ProblemDocument5 pagesDetecting Sarcasm in Text - An Obvious Solution To A Trivial ProblemsiewyukNo ratings yet

- ML QuestionsDocument56 pagesML QuestionsPavan KumarNo ratings yet

- BookSlides 11 The Art of Machine Learning For Predictive Data AnalyticsDocument27 pagesBookSlides 11 The Art of Machine Learning For Predictive Data AnalyticsMba NaniNo ratings yet

- Elsa Putri Supha Dewintha - 21421426 - HeaderFooterDocument18 pagesElsa Putri Supha Dewintha - 21421426 - HeaderFooterdewiNo ratings yet

- Multiple Disease Prediction Using Different Machine Learning Algorithms ComparativelyDocument5 pagesMultiple Disease Prediction Using Different Machine Learning Algorithms ComparativelyNeha BhatiNo ratings yet

- Ain Shams Engineering Journal: Eman M. Bahgat, Sherine Rady, Walaa Gad, Ibrahim F. MoawadDocument11 pagesAin Shams Engineering Journal: Eman M. Bahgat, Sherine Rady, Walaa Gad, Ibrahim F. MoawadSudeshna KunduNo ratings yet

- Chronic Kidney Disease Detection With Appropriate Diet PlanDocument9 pagesChronic Kidney Disease Detection With Appropriate Diet PlanIJRASETPublicationsNo ratings yet

- Unit 3 - DM FULLDocument46 pagesUnit 3 - DM FULLmintoNo ratings yet

- Kim 2016Document5 pagesKim 2016Anjani ChairunnisaNo ratings yet

- Essentials of Machine Learning Algorithms (With Python and R Codes) PDFDocument20 pagesEssentials of Machine Learning Algorithms (With Python and R Codes) PDFTeodor von Burg100% (1)