Chapter 5: Mind Map: Mathematical Functions

Uploaded by Amir Rashidee

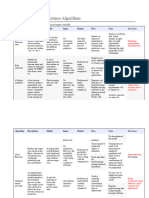

Linear

Classes are distinct and separable

Iris model from other chapter

Better fit = more attributes

Patterns that do not generalize: over-fitting

Mathematical Functions

Over-fitting and its Avoidance

Adding more xi's makes the function more complex

Allows for flexibility when searching data

wi = a learned parameter
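The linear-function nodes above can be made concrete with a short sketch. It assumes the usual form f(x) = w0 + w1*x1 + ... + wn*xn; the weights, the attribute values, and the helper names `linear_score` and `classify` are made up for illustration and do not come from the mind map.

```python
def linear_score(weights, intercept, x):
    """Return w0 + sum(w_i * x_i) for one example."""
    if len(weights) != len(x):
        raise ValueError("need exactly one weight per attribute")
    return intercept + sum(w * xi for w, xi in zip(weights, x))


def classify(weights, intercept, x):
    """Linear classifier: report which side of the boundary x falls on."""
    return "+" if linear_score(weights, intercept, x) > 0 else "-"


# Hypothetical learned parameters for a model with two attributes.
w = [2.0, -1.0]
w0 = -1.0

print(linear_score(w, w0, [1.5, 0.5]))  # 2*1.5 - 1*0.5 - 1 = 1.5
print(classify(w, w0, [1.5, 0.5]))      # positive side of the boundary
print(classify(w, w0, [0.0, 1.0]))      # negative side of the boundary
```

Each extra attribute adds one more weight to learn, which is where the extra flexibility (and the extra room to over-fit) comes from.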

Measure accuracy on training and test set

If not pure: estimate based on average

Sweet spot: where it starts to over-fit

Generalization

Sectioning to get "pure" data

Does not fit other data: over-fit

Over-fitting in Tree Induction

For previously unseen data

memorizes training data and doesn't generalize

Number of nodes = complexity of the tree

Sampling approach = table model
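A table model can be sketched in a few lines to show what "memorizes training data and doesn't generalize" looks like. This is only an illustration: the customer rows, the labels, and the choice of "stay" as the default answer for unseen rows are all invented here.

```python
def fit_table_model(rows, labels):
    """Memorize every training row: the 'model' is just a lookup table."""
    return {tuple(row): label for row, label in zip(rows, labels)}


def predict(table, row, default):
    """Return the memorized label, or a fixed default for unseen rows."""
    return table.get(tuple(row), default)


def accuracy(table, rows, labels, default):
    """Fraction of rows whose predicted label matches the true label."""
    hits = sum(predict(table, r, default) == y for r, y in zip(rows, labels))
    return hits / len(rows)


# Hypothetical churn data: (age band, plan) -> outcome.
train_X = [("young", "basic"), ("young", "premium"), ("old", "basic")]
train_y = ["churn", "stay", "stay"]
holdout_X = [("old", "premium"), ("mid", "basic"), ("young", "basic")]
holdout_y = ["churn", "churn", "churn"]

table = fit_table_model(train_X, train_y)
train_acc = accuracy(table, train_X, train_y, default="stay")
holdout_acc = accuracy(table, holdout_X, holdout_y, default="stay")
print(train_acc, holdout_acc)  # perfect on training rows, poor on holdout
```

Training accuracy is perfect because every training row is looked up exactly, while only the one holdout row that happens to repeat a training row is predicted correctly.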

Growing trees until the leaves are pure: how to over-fit

If fails: more realistic models will fail too

All data models can, and do, do this

Recognize and manage it in a principled way

Based on how complex you allow the model to be

Tendency to tailor models to the training data

Overfitting

At the expense of Generalization

Shows accuracy as a function of model complexity

Fitting Graph

Comparing predicted values with hidden true values

Increases when you allow more flexibility

Generalization Performance

Why is it bad?

estimated performance

estimates all data

Must mistrust accuracy measured on the training set

Cross-validation:

More sophisticated
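Cross-validation can be sketched as repeated holdout testing in which every example is held out exactly once. The code below is a minimal illustration in plain Python; the function names, the toy data, and the majority-label stand-in model are assumptions made for the example, and a real analysis would normally shuffle the data before splitting.

```python
def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    base, extra = divmod(n, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_validate(X, y, k, fit, score):
    """Train on k-1 folds and score on the held-out fold, once per fold."""
    scores = []
    for test_idx in k_fold_indices(len(X), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        scores.append(
            score(model, [X[i] for i in test_idx], [y[i] for i in test_idx])
        )
    return scores


# Toy stand-in model: always predict the most common training label.
def fit_majority(X, y):
    return max(set(y), key=y.count)


def score_majority(label, X, y):
    return sum(t == label for t in y) / len(y)


X = list(range(10))
y = ["a"] * 7 + ["b"] * 3  # unshuffled on purpose, so folds differ a lot
scores = cross_validate(X, y, k=5, fit=fit_majority, score=score_majority)
print(scores, sum(scores) / len(scores))
```

Looking at the spread of the per-fold scores, and not only their mean, gives a sense of how much the estimate depends on which examples were held out.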

Churn data set

Model will pick up harmful correlations

all models are susceptible to over-fitting effects

Tree induction

Stop growing the tree
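One way to stop growing a tree early is to require a minimum number of training instances in every leaf. The sketch below uses a single numeric attribute and a hypothetical `min_leaf` parameter; the data, the function names, and the error-count split rule are simplifications chosen for the example, not a description of any particular tree-induction library.

```python
from collections import Counter


def majority(labels):
    """Most common label (ties go to the label seen first)."""
    return Counter(labels).most_common(1)[0][0]


def errors(labels):
    """Training errors if this group became a majority-vote leaf."""
    return len(labels) - Counter(labels).most_common(1)[0][1]


def grow(points, min_leaf=1):
    """Grow a tree over (x, label) pairs that are sorted by x.

    Splitting stops when a node is pure, or when no split would leave
    at least `min_leaf` training instances on each side.
    """
    if min_leaf < 1:
        raise ValueError("min_leaf must be at least 1")
    labels = [label for _, label in points]
    if len(set(labels)) == 1:
        return {"predict": labels[0]}
    best = None
    for i in range(min_leaf, len(points) - min_leaf + 1):
        if points[i - 1][0] == points[i][0]:
            continue  # equal x values cannot be separated by a threshold
        cost = errors(labels[:i]) + errors(labels[i:])
        if best is None or cost < best[0]:
            best = (cost, i)
    if best is None:
        return {"predict": majority(labels)}
    i = best[1]
    return {
        "threshold": (points[i - 1][0] + points[i][0]) / 2,
        "left": grow(points[:i], min_leaf),
        "right": grow(points[i:], min_leaf),
    }


def predict(tree, x):
    """Walk from the root to a leaf and return that leaf's label."""
    while "predict" not in tree:
        tree = tree["left"] if x < tree["threshold"] else tree["right"]
    return tree["predict"]


def count_leaves(tree):
    if "predict" in tree:
        return 1
    return count_leaves(tree["left"]) + count_leaves(tree["right"])


def accuracy(tree, points):
    return sum(predict(tree, x) == label for x, label in points) / len(points)


# Made-up noisy training data on one attribute.
train = [(1, "a"), (2, "a"), (3, "b"), (4, "a"),
         (5, "b"), (6, "b"), (7, "a"), (8, "b")]

full = grow(train, min_leaf=1)     # grown until every leaf is pure
stopped = grow(train, min_leaf=4)  # growth stopped early

print(count_leaves(full), accuracy(full, train))
print(count_leaves(stopped), accuracy(stopped, train))
```

The fully grown tree fits the training data perfectly but needs many more leaves to do so; whether the smaller tree generalizes better would have to be checked on holdout data, which this sketch does not include.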

Avoidance

Grow until it is too large, then prune it back

Estimate the generalization performance of each model

Find the right balance

Equations

Parameter optimization
