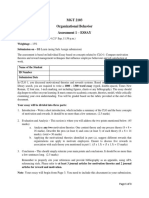

Professional Documents

Culture Documents

Time Series Analysis - Data Exploration and Visualization. - by Himani Gulati - Jovian - Medium


Time Series Analysis - Data Exploration and Visualization.

A simple walkthrough to handle time-series data and the statistics involved.

Himani Gulati Jan 5 · 12 min read

A picture is worth a thousand words, as the saying goes, and it definitely holds true in data analysis.

As a beginner, I really struggled to put the pieces of the time series puzzle together, so I have tried to cover everything from the most basic concepts to (hopefully) the bigger ones, which once again makes this a beginner-friendly project. You can find the notebook with the source code here.

I would still suggest that you pick up statistics as a subject if this is the field you're headed toward. But don't forget: Machine Learning != Statistics.

Statistics is key… well, at least one of the keys.

Time series data is simply a sequence of data points in chronological order (i.e., following the order of occurrence). Businesses use it to analyze past data and make better decisions. This project aims to build a basic understanding of how to handle and visualize time series data.

I have used stock data, so I also tried to reach a buy or sell decision by implementing one of the trend-following strategies, and explored a few others, though only theoretically. I am still not sure whether machine learning models can be used to predict movements in the stock market. I found this in one of the articles I read, and I couldn't agree more.

Note: There are many forms of analysis to determine the worth of an investment or trade. Here I have focused only on technical analysis of the stock market, which takes into account only the historical data directly related to the particular stock. You can find out how ML helps with fundamental analysis, which involves understanding a stock's intrinsic value, here in this set of articles by Marco Santos.

Data Exploration:

The data I have used in this project was scraped by Prakhar Goel and can be found here and here. These are two CSV files containing data from the Indian stock market on about 65 instruments (mostly stocks, plus a few indices, futures and options contracts), spanning late February to early September 2020 (the first rows are timestamped 2020-02-24 and the last 2020-09-03). The first CSV file contains open_price (opening price), close_price (closing price), high_price (highest price), low_price (lowest price), volume, the timestamp, and the scrip_id used to identify each stock. The second one contains the name of each stock and its exchange.

import pandas as pd

stocks_df = pd.read_csv(data_directory)  # data_directory: path to the first CSV file (set in the notebook)
print(len(stocks_df))
stocks_df

2321232

id timestamp open_price high_price low_price close_price volume scrip_id
0 1 2020-02-24 09:15:00+05:30 1170.00 1170.00 1149.25 1164.60 104528 2
1 13008 2020-02-24 09:15:00+05:30 318.00 318.00 311.65 312.30 22036 1
2 26015 2020-02-24 09:15:00+05:30 828.95 828.95 825.45 825.85 22222 3
3 39022 2020-02-24 09:15:00+05:30 1672.00 1672.00 1665.00 1667.10 15844 4
4 52029 2020-02-24 09:15:00+05:30 1469.75 1469.75 1463.10 1465.05 150673 5
... ... ... ... ... ... ... ... ...
2321227 2666522 2020-09-03 15:29:00+05:30 108.40 108.70 108.40 108.70 1317 41
2321228 2683019 2020-09-03 15:29:00+05:30 497.00 497.00 496.10 497.00 1752 42
2321229 2699517 2020-09-03 15:29:00+05:30 827.00 828.00 826.95 828.00 54 43
2321230 2716011 2020-09-03 15:29:00+05:30 765.70 766.45 764.00 766.00 606 44
2321231 2731697 2020-09-03 15:29:00+05:30 1840.00 1840.00 1837.30 1840.00 197 45

2321232 rows × 8 columns


First DataFrame

df = pd.read_csv(data_directory_two)

df.head()

id name zerodha_id exchange

0 1 GLENMARK 1895937 NSE

1 2 INDUSINDBK 1346049 NSE

2 3 TECHM 3465729 NSE

3 4 KOTAKBANK 492033 NSE

4 5 RELIANCE 738561 NSE


Second DataFrame

I joined the two to get a single DataFrame called merged_df, which also lets us see the names of the unique stocks whose information is in the datasets.

df.rename(columns = {'id':'scrip_id'}, inplace=True)

merged_df = stocks_df.merge(df, on='scrip_id')

merged_df.head()

timestamp open_price high_price low_price close_price volume scrip_id name zerodha_id
0 2020-02-24 09:15:00+05:30 1170.00 1170.00 1149.25 1164.60 104528 2 INDUSINDBK 1346049
1 2020-02-24 09:16:00+05:30 1165.60 1168.50 1159.00 1160.75 109720 2 INDUSINDBK 1346049
2 2020-02-24 09:17:00+05:30 1162.35 1164.45 1161.00 1163.05 48984 2 INDUSINDBK 1346049
3 2020-02-24 09:18:00+05:30 1164.10 1168.85 1163.65 1168.85 67387 2 INDUSINDBK 1346049
4 2020-02-24 09:19:00+05:30 1168.35 1173.00 1166.45 1173.00 78541 2 INDUSINDBK 1346049


Merged Dataframe.

merged_df['name'].unique()

array(['INDUSINDBK', 'GLENMARK', 'TECHM', 'KOTAKBANK', 'RELIANCE',

'HINDUNILVR', 'HDFCBANK', 'INFY', 'ADANIPORTS', 'WIPRO', 'ONGC',

'HDFC', 'TCS', 'RECLTD', 'NLCINDIA', 'PTC', 'GAIL', 'ITC', 'SBIN',

'SUNPHARMA', 'POWERGRID', 'CIPLA', 'ZEEL', 'M&M', 'IBULHSGFIN',

'VEDL', 'AXISBANK', 'HINDALCO', 'ICICIBANK', 'MUTHOOTFIN',

'MARUTI', 'BHARTIARTL', 'INFRATEL', 'PNBHOUSING', 'PFC',

'NIFTY 50', 'SENSEX', 'RELINFRA', 'BEL', 'DABUR', 'AUROPHARMA',

'JBCHEPHARM', 'VMART', 'PVR', 'AARTIIND', 'ESCORTS', 'IOC',

'ABFRL', 'HCLTECH', 'IGL', 'LUPIN', 'APOLLOHOSP', 'TORNTPHARM',

'NIFTY20DEC9000PE', 'NIFTY20DEC8000PE', 'NIFTY20DEC10000CE',

'NIFTY20DEC9000CE', 'NIFTY20DEC10000PE', 'NIFTY20DEC8000CE',

'HDFCBANK20AUGFUT', 'NIFTY20AUGFUT', 'NIFTY20AUG10000CE',

'RELIANCE20AUGFUT', 'NIFTY20AUG10000PE', 'RELIANCE20SEPFUT',

'HDFCBANK20SEPFUT'], dtype=object)


List of all the stocks (instruments) in the data.

DON'T FORGET to convert the timestamp column from the object dtype to the pandas datetime dtype. This is important so that we can aggregate our data and resample it as needed. You can find the code for everything mentioned but not shown here in the notebook itself.
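Since that conversion isn't shown here, below is a minimal sketch of what it might look like. It assumes the raw timestamps are strings in the same format as the CSV above; the four closing prices are copied from the first rows of the Nifty 50 April data, and the 5-minute OHLC resample is just one example of the aggregation this enables.

```python
import pandas as pd

# Sample rows in the same string format as the scraped CSV
# (closing prices copied from the first Nifty 50 April rows)
raw = pd.DataFrame({
    "timestamp": [
        "2020-04-20 09:15:00+05:30",
        "2020-04-20 09:16:00+05:30",
        "2020-04-20 09:17:00+05:30",
        "2020-04-20 09:18:00+05:30",
    ],
    "close_price": [9308.40, 9291.40, 9281.50, 9290.30],
})

# Before parsing, the column holds plain strings
print(raw["timestamp"].dtype)

# Parse the strings into timezone-aware datetimes (+05:30 offset)
raw["timestamp"] = pd.to_datetime(raw["timestamp"])

# A DatetimeIndex enables time-based resampling and aggregation
series = raw.set_index("timestamp")["close_price"]

# Aggregate the 1-minute closes into a single 5-minute OHLC bar
five_min = series.resample("5min").ohlc()
print(five_min)
```

The same `set_index(...).resample(...)` pattern works for daily or hourly bars by changing the rule string.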

Exploratory Data Analysis:

The data above has about 2.3 million rows for about 65 different instruments and spans late February to early September 2020. But because I want to perform exploratory data analysis, I narrowed it down to the data of one particular series: the Nifty 50 index.

Below are two graphs representing the data I curated from the entire dataset, going from about 2 million rows down to about 35K rows covering the Nifty 50's movement over the selected period.

The two graphs look different, but they represent the same closing-price values. You will notice some straight, continuous segments in the first graph. This is because in the first plot I used the timestamp as the index, while in the second plot I plotted the closing prices in their order of occurrence. The straight segments appear because there is no data for the periods when the market is closed, so the first plot simply draws a line across each of those gaps.
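To see where those gaps are, one option is to look at the time elapsed between consecutive rows. Here is a small sketch on made-up 1-minute timestamps (the end of one session and the start of the next); anything longer than the 1-minute sampling interval marks a period when the market was closed.

```python
import pandas as pd

# Hypothetical 1-minute timestamps: the last minutes of one session
# and the first minutes of the next day's session
idx = pd.DatetimeIndex([
    "2020-04-20 15:28", "2020-04-20 15:29",
    "2020-04-21 09:15", "2020-04-21 09:16",
])
closes = pd.Series([9250.0, 9255.0, 9100.0, 9110.0], index=idx)  # made-up prices

# Time elapsed since the previous observation (NaT for the first row)
step = closes.index.to_series().diff()

# Steps longer than the sampling interval are gaps (market closed)
gaps = step[step > pd.Timedelta(minutes=1)]
print(gaps)
```

Plotting `closes` against its DatetimeIndex draws a straight line across the overnight gap, whereas plotting `closes.values` (a positional x-axis) places the 15:29 and 09:15 points side by side.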

For further analysis, observations over the entire period weren't very helpful, so I narrowed the data down further to a single month, April, with about 3,400 data points.
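The month filter itself isn't shown, so here is a minimal sketch assuming a parsed `timestamp` column; it derives the `month` column that appears in the table below and keeps only April. The frame and prices are made up for illustration.

```python
import pandas as pd

# Hypothetical frame standing in for the Nifty 50 rows (made-up prices)
nifty = pd.DataFrame({
    "timestamp": pd.to_datetime([
        "2020-03-31 15:29", "2020-04-01 09:15",
        "2020-04-30 15:29", "2020-05-04 09:15",
    ]),
    "close_price": [8600.0, 8550.0, 9850.0, 9300.0],
})

# Derive a month column from the datetime values
nifty["month"] = nifty["timestamp"].dt.month

# Keep only April; .copy() avoids SettingWithCopyWarning when adding columns later
nifty_april = nifty[nifty["month"] == 4].copy()
print(nifty_april)
```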

nifty_50_april

timestamp open_price high_price low_price close_price volume month

0 2020-04-20 09:15:00+05:30 9390.20 9390.20 9306.25 9308.40 0 4

1 2020-04-20 09:16:00+05:30 9310.75 9319.35 9289.80 9291.40 0 4

2 2020-04-20 09:17:00+05:30 9292.00 9292.00 9270.70 9281.50 0 4

3 2020-04-20 09:18:00+05:30 9280.95 9296.55 9278.35 9290.30 0 4

4 2020-04-20 09:19:00+05:30 9288.25 9297.15 9280.75 9291.95 0 4

... ... ... ... ... ... ... ...

3370 2020-04-30 15:25:00+05:30 9866.00 9866.20 9860.05 9861.75 0 4

3371 2020-04-30 15:26:00+05:30 9861.65 9862.85 9857.10 9858.70 0 4

3372 2020-04-30 15:27:00+05:30 9858.50 9858.60 9856.20 9857.60 0 4

3373 2020-04-30 15:28:00+05:30 9857.25 9857.95 9853.80 9856.20 0 4

3374 2020-04-30 15:29:00+05:30 9856.15 9858.45 9848.10 9851.85 0 4

3375 rows × 7 columns


DataFrame on which analysis has been performed.

Moving on to the important analysis plots for a time series.

Correlation Plot/Matrix:

The correlation coefficient measures the linear relationship between two variables. It is bounded between -1 and 1. Make sure you understand what correlation means here, because the correlation plot itself is not very useful for time series, while the autocorrelation and partial autocorrelation plots are, and they help determine the model we use to forecast our time series data.

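A coefficient close to 1 means a strong positive association between the two variables.

A coefficient close to -1 means a strong negative association between the two variables. In the terms I understood it: when one goes up, the other tends to go down (an inverse relationship, though not "inversely proportional" in the strict y = k/x sense).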
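A short sketch of both extremes with NumPy, followed by a correlation matrix like the one plotted for the Nifty 50 data. The two lines are made up; the price columns are the first five open/close values from the April table.

```python
import numpy as np
import pandas as pd

x = np.arange(10, dtype=float)

# Perfect positive linear relationship
print(np.corrcoef(x, 2 * x + 1)[0, 1])   # ≈ 1.0
# Perfect negative linear relationship
print(np.corrcoef(x, -3 * x + 5)[0, 1])  # ≈ -1.0

# Correlation matrix for several columns at once
prices = pd.DataFrame({
    "open_price":  [9390.20, 9310.75, 9292.00, 9280.95, 9288.25],
    "close_price": [9308.40, 9291.40, 9281.50, 9290.30, 9291.95],
})
print(prices.corr())
```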

Correlation matrix plot for the Nifty 50 time series data.

Autocorrelation and Partial Autocorrelation Plots (ACF & PACF):

These are important plots for time series. They graphically summarize the strength of the relationships between observations in a time series.

In autocorrelation, we calculate the correlation of time series observations with observations at previous time steps, called lags. Because the correlation is calculated between values of the same series at different times, it is called serial correlation or autocorrelation.
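To make the definition concrete, here is a hand-rolled sketch of the sample autocorrelation at lag k. As far as I know, statsmodels' default (non-adjusted) ACF uses this same estimator, but treat that as an assumption. The two test series are made up.

```python
import numpy as np

def autocorrelation(x, nlags):
    """Sample autocorrelation r_k for k = 0..nlags.

    r_k = sum_t (x_t - mean)(x_{t+k} - mean) / sum_t (x_t - mean)^2
    """
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    denom = np.sum(dev ** 2)
    return np.array([np.sum(dev[: len(x) - k] * dev[k:]) / denom for k in range(nlags + 1)])

# A trending series (like a rising price) stays highly autocorrelated over many lags
trend = np.arange(100, dtype=float)
print(autocorrelation(trend, 5).round(3))

# A series that flips sign every step is strongly negatively correlated at lag 1
alternating = np.array([1.0, -1.0] * 50)
print(autocorrelation(alternating, 2))  # lag 1 is -0.99, lag 2 is 0.98
```

The trending series keeps a high, slowly decaying autocorrelation, which is the same pattern described for the Nifty 50 closing prices.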

Below I have plotted the ACF for the Nifty 50 April data with the help of the statsmodels library.

import matplotlib.pyplot as plt
import statsmodels.api as sm

plt.rc("figure", figsize=(10,6))
sm.graphics.tsa.plot_acf(nifty_50_april['close_price'], lags=50);


High Autocorrelation graph

The horizontal axis of an autocorrelation plot shows the size of the lag between elements of the time series. In simple terms, the k-th lag is the observation that occurred k time points before time t. You can optionally set how many lags you want to observe (the lags argument).

Observe that our data has a very high autocorrelation. You will see below why this happens and how it can be remedied. Both of these plots also help us with modeling the data, which you will also see.

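The autocorrelation plot is used to choose the order of a time series forecasting model called the Moving Average (MA) model, which you will read about later in this article. (This is different from the moving-average smoothing, or rolling mean, that is also used later.)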

Partial Autocorrelation Plot:

A partial autocorrelation summarizes the relationship between an observation in a time series and observations at prior time steps, with the relationships of the intervening observations removed. In other words, the effects of the lags in between are removed, and we can see the direct impact a previous observation has on the value to be predicted at time t.

The PACF can be computed by regression.

Regression is a statistical method for determining the strength and character of the relationship between one dependent variable and one or more independent variables.
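To make "computed by regression" concrete, here is a small sketch (my own, not the statsmodels implementation) that estimates the PACF with ordinary least squares: for each lag k, regress x(t) on x(t-1), ..., x(t-k) and keep the coefficient on x(t-k). I believe statsmodels' `pacf` offers a similar regression-based option (`method="ols"`), but check its documentation. The AR(1) data is simulated, not the Nifty data.

```python
import numpy as np

def pacf_by_regression(x, nlags):
    """Partial autocorrelation at lags 0..nlags, estimated with OLS regressions.

    For each lag k, regress x(t) on an intercept and x(t-1), ..., x(t-k);
    the coefficient on x(t-k) is the partial autocorrelation at lag k.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    result = [1.0]  # lag 0 is 1 by definition
    for k in range(1, nlags + 1):
        y = x[k:]  # x(t) for t = k..n-1
        lags = [x[k - j : n - j] for j in range(1, k + 1)]  # x(t-1), ..., x(t-k)
        design = np.column_stack([np.ones(n - k)] + lags)
        coef, *_ = np.linalg.lstsq(design, y, rcond=None)
        result.append(coef[k])  # coef[0] is the intercept, coef[k] belongs to x(t-k)
    return np.array(result)

# Simulated AR(1) process x(t) = 0.7 * x(t-1) + noise (made-up data)
rng = np.random.default_rng(0)
n = 10_000
noise = rng.standard_normal(n)
x = np.empty(n)
x[0] = noise[0]
for t in range(1, n):
    x[t] = 0.7 * x[t - 1] + noise[t]

print(pacf_by_regression(x, 3).round(2))  # roughly [1, 0.7, 0, 0]
```

For an AR(1) process only lag 1 has a direct effect, so the PACF cuts off after lag 1 even though the ACF decays gradually.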

Below I have plotted the PACF for the Nifty 50 April data, again directly with the help of the statsmodels library.

plt.rc("figure", figsize=(10,6))

sm.graphics.tsa.plot_pacf(nifty_50_april['close_price']);


Plot for Partial Autocorrelation of my data

The PACF plot is used to choose the order of the AutoRegressive (AR) forecasting model, which you will again see later in the article.

Stationarity of Our Data:

Stationarity is an essential concept in time series analysis. If our data is stationary, its summary statistics such as the mean and variance (or rather, those of the process generating it) are consistent and do not change over time. It is important to check for stationarity because many useful analytical tools and statistical models rely on it. I have tried two methods below:

1) Summary Statistics:

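One of the most basic methods to check whether our data is stationary is to compare summary statistics. Below, I split the series into two halves and compare the mean and variance of each half; if they differ noticeably, the series is probably not stationary. This is not a very accurate method, and sometimes the outcome can be a statistical fluke.

Histogram of closing prices.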

X = nifty_50['close_price'].values

split = round(len(X) / 2)

X1, X2 = X[0:split], X[split:]

mean1, mean2 = X1.mean(), X2.mean()

var1, var2 = X1.var(), X2.var()

print('mean1=%f, mean2=%f' % (mean1, mean2))

print('variance1=%f, variance2=%f' % (var1, var2))

mean1=9603.421572, mean2=11105.933332

variance1=223878.109347, variance2=114346.102623


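Comparing means and variances: the second half has a clearly higher mean (about 11106 vs. 9603) and roughly half the variance, which suggests the series is not stationary.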

2) Dickey-Fuller test:

Another way to check for stationarity is a statistical test. I used the Augmented Dickey-Fuller (ADF) test, which is a unit root test. The null hypothesis of the test is that the time series has a unit root, i.e., that it is not stationary.

p-value > 0.05: Fail to reject the null hypothesis (H0), the data has a

unit root and is non-stationary.

p-value <= 0.05: Reject the null hypothesis (H0), the data does not

have a unit root and is stationary.

from statsmodels.tsa.stattools import adfuller

X = nifty_50['close_price'].values
result = adfuller(X)
print('ADF Statistic: %f' % result[0])
print('p-value: %f' % result[1])
print('Critical Values:')
for key, value in result[4].items():  # result[4] is the dict of critical values
    print('\t%s: %.3f' % (key, value))

ADF Statistic: -0.663206

p-value: 0.856051

Critical Values:

1%: -3.431

5%: -2.862

10%: -2.567


p-value = 0.856 > 0.05, so we fail to reject the null hypothesis: the data is non-stationary.

As I expected, the data is non-stationary, so I used a basic technique to remove the trend from my data: the DIFFERENCE TRANSFORM.

Differencing can be used to remove the series' dependence on time, also called temporal dependence, which includes structures like trends and seasonality. It is performed by simply subtracting the previous observation from the current observation:

difference(t) = observation(t) - observation(t-1)

Using this formula, I performed differencing on my data with the code below:

data = nifty_50_april['close_price'] - nifty_50_april['close_price'].shift(1)
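A toy illustration of the difference transform on made-up numbers: the `shift(1)` version and pandas' built-in `diff()` produce the same series, and a steady upward trend disappears after differencing.

```python
import numpy as np
import pandas as pd

# Hypothetical series: a linear upward trend (slope 2) plus a small alternating wiggle
t = np.arange(12, dtype=float)
prices = pd.Series(100 + 2.0 * t + np.tile([0.5, -0.5], 6))

# difference(t) = observation(t) - observation(t-1)
manual = prices - prices.shift(1)
built_in = prices.diff()  # pandas shortcut for the same first difference

# The first value is NaN because there is no observation before t = 0
print(manual.head())

# The trend is gone: what remains just alternates between 1 and 3 around the slope of 2
print(built_in.dropna().unique())
```

Because of that leading NaN, the differenced series needs `.dropna()` (or slicing off the first row) before it is passed to `adfuller`.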

Using the differenced data, I ran the Dickey-Fuller test again, which gave the following results:

X = data.dropna().values  # drop the NaN that shift(1) leaves in the first row
result = adfuller(X)
print('ADF Statistic: %f' % result[0])
print('p-value: %f' % result[1])
print('Critical Values:')
for key, value in result[4].items():
    print('\t%s: %.3f' % (key, value))

ADF Statistic: -58.045640

p-value: 0.000000

Critical Values:

1%: -3.432

5%: -2.862

10%: -2.567


p ≤ 0.05, meaning the differenced data is now stationary.

Below I have plotted my data before and after removing the temporal dependence.

Plots of my data before and after removing the trend/seasonality.

Further, I plotted the ACF and PACF for the differenced data.

Note: Our earlier ACF plot showed no obvious seasonality; its high, slowly decaying autocorrelation is, however, typical of a trending (non-stationary) series, which is consistent with the ADF result. By plotting the ACF and PACF after differencing, we should be able to get a decent start on what kind of model to use. Note that these tools give us a starting point for understanding the time series we are dealing with, not our final answer.

data = nifty_50_april['close_price'] - nifty_50_april['close_price'].shift(1)

plt.rc("figure", figsize=(10,6))

sm.graphics.tsa.plot_acf(data[1:], lags=50);


Autocorrelation plot after differencing

plt.rc("figure", figsize=(10,6))

sm.graphics.tsa.plot_pacf(data[1:]);


Partial Autocorrelation Plot after differencing

Moving Averages to Smooth Our Data:

Time series data is very noisy, so it's difficult to gauge a trend or pattern in it. This is where moving averages (rolling means) help. A moving average slides a fixed-size window over the data and takes the mean of the values in each window, producing a constantly updated, smoothed-out series. The code for it is very straightforward:

df.rolling(window).mean()

Moving averages with upper and lower bounds, for clearer movement.

plot_moving_average(nifty_50_april, 30, column='small_ma', plot_intervals=True)


Moving average: 30

plot_moving_average(nifty_50_april, 100, column='large_ma', plot_intervals=True)


Moving average: 100
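The `plot_moving_average` helper lives in the notebook and isn't shown here. Below is a hypothetical sketch of how a rolling mean with upper and lower bands could be computed. The band definition (rolling mean ± 1.96 × standard deviation of the residuals) is an assumption on my part and one common choice, not necessarily what the notebook does. The random-walk data is made up.

```python
import numpy as np
import pandas as pd

def moving_average_bands(series, window, scale=1.96):
    """Rolling mean plus illustrative upper/lower bands.

    Assumption: bands = rolling mean +/- scale * std of the residuals
    (series - rolling mean); the notebook's helper may compute them differently.
    """
    rolling_mean = series.rolling(window=window).mean()
    residual_std = (series - rolling_mean).std()  # NaNs from the first window are skipped
    lower = rolling_mean - scale * residual_std
    upper = rolling_mean + scale * residual_std
    return pd.DataFrame({"value": series, "ma": rolling_mean, "lower": lower, "upper": upper})

rng = np.random.default_rng(42)
noisy = pd.Series(100 + np.cumsum(rng.normal(0, 1, 500)))  # made-up random walk
bands = moving_average_bands(noisy, window=30)
print(bands.iloc[28:32])  # the first 29 rows of "ma" are NaN (window not yet full)
```

Calling `bands.plot()` draws the raw series, the moving average and both bands on one chart.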

Simple Exponential Smoothing:

Forecasts produced using exponential smoothing methods are weighted averages of past observations, with the weights decaying exponentially as the observations get older. Let me explain that in simple terms, along with how it differs from a simple moving average.

Exponential smoothing weights past data in a particular order: the most recent observation gets a little more weight than the second most recent observation, the second most recent gets a little more weight than the third most recent, and so on. Hopefully the graph below helps.

The difference in how moving averages and exponential smoothing weight past observations.
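A small sketch comparing the weights that a 5-period simple moving average and exponential smoothing with α = 0.5 put on past observations (both numbers chosen arbitrarily). For exponential smoothing, the observation k steps back gets weight α(1 - α)^k, which is what unrolling its recursion gives.

```python
import numpy as np

window, alpha = 5, 0.5

# Simple moving average: the last `window` observations share the weight equally,
# and everything older gets zero weight
sma_weights = np.full(window, 1 / window)

# Exponential smoothing: weight alpha * (1 - alpha)**k on the observation k steps back
ses_weights = alpha * (1 - alpha) ** np.arange(8)

print(sma_weights)        # five weights of 0.2 each
print(ses_weights)        # weights 0.5, 0.25, 0.125, ... (halving each step back)
print(ses_weights.sum())  # approaches 1 as more lags are included
```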

Another important advantage of the simple exponential smoothing (SES) model over the simple moving average (SMA) model is that SES uses a smoothing parameter that can vary continuously, so it can easily be optimized with a “solver” algorithm to minimize the mean squared error.

Mathematically, if F(t) is the forecast at time t and A(t) is the actual value at time t, then

F(t+1) = F(t) + α(A(t) - F(t))

where α is the smoothing constant (0 ≤ α ≤ 1).
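A minimal NumPy sketch of that recursion, plus a crude grid search as a stand-in for the "solver": it picks the α with the lowest mean squared one-step-ahead error. Assumptions: the first forecast F(0) is set to the first actual value, and the grid search is only illustrative (statsmodels' SimpleExpSmoothing does its own optimization). Both test series are made up.

```python
import numpy as np

def ses_forecasts(actual, alpha):
    """One-step-ahead forecasts with F(t+1) = F(t) + alpha * (A(t) - F(t)).

    Assumption: the first forecast F(0) is initialised to the first actual value.
    """
    actual = np.asarray(actual, dtype=float)
    forecasts = np.empty_like(actual)
    forecasts[0] = actual[0]
    for t in range(len(actual) - 1):
        forecasts[t + 1] = forecasts[t] + alpha * (actual[t] - forecasts[t])
    return forecasts

def best_alpha(actual, grid=np.linspace(0.01, 1.0, 100)):
    """Return the alpha in `grid` with the lowest mean squared one-step-ahead error."""
    actual = np.asarray(actual, dtype=float)
    mse = [np.mean((actual[1:] - ses_forecasts(actual, a)[1:]) ** 2) for a in grid]
    return grid[int(np.argmin(mse))]

rng = np.random.default_rng(0)
random_walk = np.cumsum(rng.normal(0, 1, 2000))  # made-up trending data
noise = 50 + rng.normal(0, 1, 2000)              # made-up constant level plus noise

print(best_alpha(random_walk))  # expected near 1: the latest value is the best guess
print(best_alpha(noise))        # expected small: averaging over many past values helps
```

The contrast shows why α matters: for price-like data that wanders like a random walk, heavy smoothing (small α) lags behind, which is one reason the smoothed April curves can look unhelpful.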

I used two ways to get my exponentially smoothed column. First, I again used the statsmodels library (from statsmodels.tsa.api import SimpleExpSmoothing), trying three different settings:

α (alpha) = 0.2

α (alpha) = 0.8

α (alpha) optimized automatically by statsmodels itself, which is the recommended option.

I used the Nifty 50 April data for exponential smoothing, but the results weren't very helpful. Have a look :/

So, to get graphs with visible results, I narrowed my DataFrame down to a single day's data, and then the results were :)) SPECTACULAR!!!

In this plot, the black line is the actual data, while the red line, plotted with the α value optimized by statsmodels itself, follows the data most closely.

Next, I applied the mathematical formula directly with this piece of code:

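def exponential_smoothing(series, alpha):
    series = list(series)  # positional access, whatever index the input Series has
    result = [series[0]]  # first value is same as series
    for n in range(1, len(series)):
        # smoothed value = alpha * current observation + (1 - alpha) * previous smoothed value
        result.append(alpha * series[n] + (1 - alpha) * result[n-1])
    return result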


plot_exponential_smoothing(stock_one_day['close_price'], [0.05, 0.3])


Simple ES for two alpha values.

Trend-Following Strategies in Algorithmic Trading.

Here's a brief overview of what I think I should have known before I started, and what you should know too if you're a beginner. I was clueless about time series analysis when I started, and statistics turned out to be a pretty emotional subject… but don't give up! It's all coming to you!

There are mainly five traditional models that are most commonly used in time series forecasting:

AR: Autoregressive models. These models express the current value of a time series linearly in terms of its previous values and the current residual. In case you're wondering, residual values (in a time series model) are what is left over after fitting a model.

In simple terms, in an autoregressive model, the value Y(t) that we want to predict at time t depends on its own past values. Secondly, it involves a regression of Y(t) on the past values we are considering for our forecast. This is why we use the PACF to determine the order of our AR model, i.e., how many past values we will consider when predicting Y(t). I hope that made sense!!!

F(t) = f[y(t-1), y(t-2), ..., y(t-p)], where p is the order of the AR model.

Also, for an AR model, we expect the ACF to decay gradually, exactly like the one in my data. Normally we would then plot the PACF for further evidence; in my data, I did see a decaying ACF, but the PACF I plotted wasn't very informative. This is one of the reasons why stock prices are among the most challenging time series to predict.
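A minimal sketch of an AR(p) model where f is linear, fitted by ordinary least squares with NumPy on simulated AR(2) data (made up, not the Nifty data). It is only meant to show the idea; statsmodels has dedicated AR/ARIMA classes for real work.

```python
import numpy as np

def fit_ar(x, p):
    """Fit y(t) = c + phi_1*y(t-1) + ... + phi_p*y(t-p) by ordinary least squares."""
    x = np.asarray(x, dtype=float)
    n = len(x)
    # Columns: intercept, y(t-1), ..., y(t-p) for t = p..n-1
    X = np.column_stack([np.ones(n - p)] + [x[p - j:n - j] for j in range(1, p + 1)])
    coef, *_ = np.linalg.lstsq(X, x[p:], rcond=None)
    return coef  # [c, phi_1, ..., phi_p]

def forecast_next(x, coef):
    """One-step-ahead forecast F(t) from the last p observed values."""
    p = len(coef) - 1
    recent = np.asarray(x[-p:], dtype=float)[::-1]  # y(t-1), y(t-2), ..., y(t-p)
    return coef[0] + np.dot(coef[1:], recent)

# Simulated AR(2) process y(t) = 0.5*y(t-1) + 0.3*y(t-2) + noise (made-up data)
rng = np.random.default_rng(1)
n = 5000
e = rng.normal(size=n)
y = np.zeros(n)
for t in range(2, n):
    y[t] = 0.5 * y[t - 1] + 0.3 * y[t - 2] + e[t]

coef = fit_ar(y, p=2)
print(coef.round(2))           # estimates of [c, phi_1, phi_2]
print(forecast_next(y, coef))  # forecast for the next time step
```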

MA: Moving Average models. These express the current value of the time series linearly in terms of its current and previous residual values. Rather than using past values of the forecast variable, the moving average model uses past forecast errors, so this time the value to be forecast is a function of the errors from previous forecasts.

F(t) = f[E(t-1), E(t-2), ..., E(t-q)], where E denotes past forecast errors and q is the order of the MA model.

As I mentioned before, we use the ACF plot to determine the order of the model, i.e., how many past errors to take into account.
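A quick simulation (made-up data) showing why the ACF helps pick the MA order: for an MA(1) process y(t) = E(t) + θ·E(t-1), the theoretical lag-1 autocorrelation is θ / (1 + θ²) and every lag beyond 1 is zero, so the ACF "cuts off" after lag q. The autocorrelation helper is a hand-rolled sketch, repeated here so the block runs on its own.

```python
import numpy as np

def autocorrelation(x, nlags):
    """Sample autocorrelation r_k for k = 0..nlags."""
    x = np.asarray(x, dtype=float)
    dev = x - x.mean()
    denom = np.sum(dev ** 2)
    return np.array([np.sum(dev[: len(x) - k] * dev[k:]) / denom for k in range(nlags + 1)])

rng = np.random.default_rng(7)
errors = rng.normal(size=20001)
theta = 0.8

# MA(1): y(t) = E(t) + theta * E(t-1)
y = errors[1:] + theta * errors[:-1]

r = autocorrelation(y, 4)
print(r.round(3))
print(theta / (1 + theta ** 2))  # theoretical lag-1 value, about 0.488
```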

ARMA: AutoRegressive Moving Average models. This is a combination of the autoregressive and moving average models: the current value of the time series is expressed linearly in terms of its previous values and of both current and previous residual values.

NOTE: The above three models are for STATIONARY PROCESSES.

ARIMA: AutoRegressive Integrated Moving Average models, and SARIMA: Seasonal AutoRegressive Integrated Moving Average models. The two are closely related. ARIMA fits non-stationary time series using an ARMA model plus a differencing step that transforms the non-stationary data into stationary data, whereas SARIMA adds seasonal differencing (and seasonal AR and MA terms) to ARIMA to handle time series data with periodic characteristics.
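A small made-up example of the two differencing steps: first-order differencing (the "I" in ARIMA) removes the trend but leaves the repeating pattern, while seasonal differencing at the period length (the extra step in SARIMA) removes both. It also shows that differencing is reversible, which is how forecasts made on the differenced scale are "integrated" back.

```python
import numpy as np

# Hypothetical series: upward trend plus a repeating pattern with period 4
period = 4
t = np.arange(16)
season = np.tile([3.0, -1.0, -2.0, 0.0], 4)
y = 10.0 + 0.5 * t + season

# First-order differencing (d = 1): y(t) - y(t-1)
d1 = np.diff(y)
print(d1)  # trend removed, but the period-4 pattern still oscillates

# Seasonal differencing at lag = period: y(t) - y(t-4)
seasonal_diff = y[period:] - y[:-period]
print(seasonal_diff)  # constant 2.0: both trend and seasonality removed

# Differencing is reversible: the first value plus a cumulative sum restores the series
restored = np.concatenate([[y[0]], y[0] + np.cumsum(d1)])
print(np.allclose(restored, y))  # True
```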

Sorry, I have covered a lot of theory here, but, as you know…

"The best practice is inspired by theory." - Donald Knuth

Now, as I mentioned, I tried to reach a buy/sell decision using moving averages. I applied a very simple moving-average crossover rule with two scenarios: when the small moving average rises above the large moving average, a BUY signal is generated, and when it falls below the large moving average, a SELL signal is generated. In both cases, the signal is recorded at that minute's closing price. The small MA has a window of 30, and the large MA has a window of 100.

def small_ma(df, column='close_price'):
    # short-term trend: 30-period rolling mean
    df['small_ma'] = df[column].rolling(window=30).mean()
    return df

def large_ma(df, column='close_price'):
    # long-term trend: 100-period rolling mean
    df['large_ma'] = df[column].rolling(window=100).mean()
    return df

def choose_month(df, month):
    # .copy() so that adding the MA columns later doesn't raise SettingWithCopyWarning
    df = df[(df.month == month)].copy()
    return df


Code for calculating Moving averages

Generating a BUY/SELL signal:

I created two scenarios: when the small moving average crosses above the large moving average, the code generates a buy signal, and when it crosses below, it generates a sell signal, each recorded at the closing price.

Plotting the generated buy/sell signal.

import numpy as np

# this function will tell me what price to buy at and what price to sell at.
def buy_sell(signal):
    sigPriceBuy = []
    sigPriceSell = []
    flag = -1  # -1: no trade yet, 1: last action was a buy, 0: last action was a sell
    for i in range(len(signal)):
        # .iloc for positional access, since a month-filtered frame doesn't start at index 0
        small = signal['small_ma'].iloc[i]
        large = signal['large_ma'].iloc[i]
        close = signal['close_price'].iloc[i]
        if small > large:  # buying condition
            if flag != 1:  # in case we haven't bought already
                sigPriceBuy.append(close)
                sigPriceSell.append(np.nan)
                flag = 1  # meaning now we have bought
            else:
                sigPriceBuy.append(np.nan)
                sigPriceSell.append(np.nan)
        elif small < large:  # selling condition
            if flag != 0:  # in case we haven't sold already
                sigPriceSell.append(close)
                sigPriceBuy.append(np.nan)
                flag = 0  # now we have sold
            else:
                sigPriceBuy.append(np.nan)
                sigPriceSell.append(np.nan)
        else:  # NaN values (rolling windows not yet full) or equal MAs
            sigPriceBuy.append(np.nan)
            sigPriceSell.append(np.nan)
    return (sigPriceBuy, sigPriceSell)


Generation of the buy/sell signal.
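For comparison, here is a vectorized sketch of the same crossover idea with pandas, using the 30/100 windows as defaults. It should behave like `buy_sell` on typical data; one difference is that if the two averages are ever exactly equal, this version treats the next crossing as a new signal. The toy prices and tiny windows in the usage example are made up so the crossovers are easy to follow.

```python
import numpy as np
import pandas as pd

def crossover_signals(close, small_window=30, large_window=100):
    """Closing prices where the small MA crosses above (buy) or below (sell) the large MA."""
    small = close.rolling(window=small_window).mean()
    large = close.rolling(window=large_window).mean()
    # +1 when small > large, -1 when small < large, 0 while either MA is NaN (or they are equal)
    state = pd.Series(np.sign(small - large), index=close.index).fillna(0)
    # A signal fires only on the rows where the state changes to +1 or -1
    changed = state != state.shift(1)
    buy = close.where(changed & (state == 1))
    sell = close.where(changed & (state == -1))
    return buy, sell

# Toy example with tiny windows (made-up prices)
close = pd.Series([1.0, 2.0, 3.0, 4.0, 5.0, 4.0, 3.0, 2.0, 1.0, 2.0, 3.0, 4.0])
buy, sell = crossover_signals(close, small_window=2, large_window=3)
print(buy.dropna())   # buys at positions 2 and 10
print(sell.dropna())  # sell at position 6
```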

Possible Profits after using Buy/Sell signal generated on the basis of Moving Averages:

I created a transaction table based on the buy/sell signals and then applied a profit formula (without taxes).
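The exact profit formula from the notebook isn't shown, so here is a hypothetical sketch of one way to build such a transaction table from the two lists returned by `buy_sell`. Assumptions: one unit per trade, each buy is paired with the next sell, a sell without an open position is ignored, and there are no taxes or fees. The signal lists are made up.

```python
import numpy as np
import pandas as pd

def transaction_table(buy_prices, sell_prices):
    """Pair each buy with the next sell and compute per-unit profit (no taxes or fees).

    Assumption: one unit per trade; a sell with no open position is ignored.
    """
    trades = []
    entry = None
    for buy, sell in zip(buy_prices, sell_prices):
        if not np.isnan(buy) and entry is None:
            entry = buy  # open a position
        elif not np.isnan(sell) and entry is not None:
            trades.append({"buy_price": entry, "sell_price": sell, "profit": sell - entry})
            entry = None  # position closed
    return pd.DataFrame(trades, columns=["buy_price", "sell_price", "profit"])

# Hypothetical signal lists in the same shape as the output of buy_sell()
buys = [np.nan, 100.0, np.nan, np.nan, 98.0, np.nan]
sells = [95.0, np.nan, np.nan, 104.0, np.nan, 101.0]
table = transaction_table(buys, sells)
print(table)
print("Total profit per unit:", table["profit"].sum())  # 4.0 + 3.0 = 7.0
```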


WORDS (that might be helpful):

The trend in data

Summary statistics provide information about our sample data: they tell us about the location of the average and the skewness/kurtosis of the data, communicating a large amount of information in simple terms.

The null hypothesis is a typical statistical hypothesis stating that no statistical relationship or significance exists within a single observed variable, or between two sets of observed data or measured phenomena.

Residual values, in time series analysis, are what is left over after fitting a model.

Hope this article helps!! Find me on LinkedIn here.

Also, hope you are doing well :)


- IQMB TiemeSeriesAnalysisDocument2 pagesIQMB TiemeSeriesAnalysisMohan RajNo ratings yet

- MaterialsDocument30 pagesMaterialsGowsik GNo ratings yet

- ML0101EN Clas Logistic Reg Churn Py v1Document13 pagesML0101EN Clas Logistic Reg Churn Py v1banicx100% (1)

- Big Data Business Analytics SAS Paython Courses in PuneDocument23 pagesBig Data Business Analytics SAS Paython Courses in PuneDefour AnalyticsNo ratings yet

- Data InterpretationDocument42 pagesData Interpretationsumit3010075% (4)

- T SQL TutorialDocument13 pagesT SQL TutorialAnusha ReddyNo ratings yet

- 1 50pm - Rich Jordan PPTX 1584619927Document21 pages1 50pm - Rich Jordan PPTX 1584619927Ashish MathurNo ratings yet

- Business Analytics ModuleDocument22 pagesBusiness Analytics ModuleMarjon DimafilisNo ratings yet

- Why Do We Use Story Points For EstimatingDocument6 pagesWhy Do We Use Story Points For EstimatingRenato Barbieri JuniorNo ratings yet

- Business Analytics Using TableauDocument2 pagesBusiness Analytics Using TableauChinmaya PatilNo ratings yet

- Analisis de Abandonos RetencionDocument12 pagesAnalisis de Abandonos Retenciongbonilla9295No ratings yet

- BigData NptelDocument813 pagesBigData NptelJagat ChauhanNo ratings yet

- 09 Handout 1Document4 pages09 Handout 1oracion.rovjaphethNo ratings yet

- BCG Internship Task 2Document26 pagesBCG Internship Task 2yomolojaNo ratings yet

- Simulation and Forecasting Solutions: (From Table) (No. of Cars)Document18 pagesSimulation and Forecasting Solutions: (From Table) (No. of Cars)Debbie DebzNo ratings yet

- Oreilly Technical Guide Understanding EtlDocument107 pagesOreilly Technical Guide Understanding EtlrafaelpdsNo ratings yet

- How Do I Profile C++ Code Running On Linux - Stack OverflowDocument30 pagesHow Do I Profile C++ Code Running On Linux - Stack Overflowciuciu.denis.2023No ratings yet

- Data Science: What the Best Data Scientists Know About Data Analytics, Data Mining, Statistics, Machine Learning, and Big Data – That You Don'tFrom EverandData Science: What the Best Data Scientists Know About Data Analytics, Data Mining, Statistics, Machine Learning, and Big Data – That You Don'tRating: 5 out of 5 stars5/5 (1)

- How To Prevent Data Leakage in Pandas and Scikit-LearnDocument3 pagesHow To Prevent Data Leakage in Pandas and Scikit-LearnnimaNo ratings yet

- CCW331 Business Analytics Lecture Notes 1Document286 pagesCCW331 Business Analytics Lecture Notes 1hakunamatata071203No ratings yet

- The Big Data Revolution and How To Extract Value From Big DataDocument16 pagesThe Big Data Revolution and How To Extract Value From Big DataSalim MehenniNo ratings yet

- BI Seminarski RadDocument21 pagesBI Seminarski RadRijadNo ratings yet

- Why Do AI Initiatives FailDocument5 pagesWhy Do AI Initiatives FailMd Ahsan AliNo ratings yet

- Week5 ModifiedDocument25 pagesWeek5 ModifiedturbonstreNo ratings yet

- 7QC ToolsDocument62 pages7QC ToolsRaiham EffendyNo ratings yet

- PYTHON DATA SCIENCE: Harnessing the Power of Python for Comprehensive Data Analysis and Visualization (2023 Guide for Beginners)From EverandPYTHON DATA SCIENCE: Harnessing the Power of Python for Comprehensive Data Analysis and Visualization (2023 Guide for Beginners)No ratings yet

- PYTHON DATA SCIENCE: A Practical Guide to Mastering Python for Data Science and Artificial Intelligence (2023 Beginner Crash Course)From EverandPYTHON DATA SCIENCE: A Practical Guide to Mastering Python for Data Science and Artificial Intelligence (2023 Beginner Crash Course)No ratings yet

- Python - How Do I Find Numeric Columns in Pandas - Stack OverflowDocument6 pagesPython - How Do I Find Numeric Columns in Pandas - Stack OverflowvaskoreNo ratings yet

- Narrowing The Search: Which Hyperparameters Really Matter?Document9 pagesNarrowing The Search: Which Hyperparameters Really Matter?vaskoreNo ratings yet

- Organisational Restructure Excel Dashboard - Excel Dashboards VBADocument1 pageOrganisational Restructure Excel Dashboard - Excel Dashboards VBAvaskoreNo ratings yet

- R - How Dnorm Works? - Stack OverflowDocument1 pageR - How Dnorm Works? - Stack OverflowvaskoreNo ratings yet

- Python - Display Number With Leading Zeros - Stack OverflowDocument8 pagesPython - Display Number With Leading Zeros - Stack OverflowvaskoreNo ratings yet

- For-Loops in R (Optional Lab) : This Is A Bonus Lab. You Are Not Required To Know This Information For The Final ExamDocument2 pagesFor-Loops in R (Optional Lab) : This Is A Bonus Lab. You Are Not Required To Know This Information For The Final ExamvaskoreNo ratings yet

- Mboxcox, Interpreting Difficult Regressions: 2 AnswersDocument1 pageMboxcox, Interpreting Difficult Regressions: 2 AnswersvaskoreNo ratings yet

- Autofilter With Column Formatted As Date: 10 AnswersDocument1 pageAutofilter With Column Formatted As Date: 10 AnswersvaskoreNo ratings yet

- Problems With Stepwise RegressionDocument1 pageProblems With Stepwise RegressionvaskoreNo ratings yet

- Three Reasons That You Should NOT Use Deep Learning - by George Seif - Towards Data ScienceDocument1 pageThree Reasons That You Should NOT Use Deep Learning - by George Seif - Towards Data SciencevaskoreNo ratings yet

- VBA - String Parsing. String Parsing Involves Looking Through - by Breakcorporate - MediumDocument1 pageVBA - String Parsing. String Parsing Involves Looking Through - by Breakcorporate - MediumvaskoreNo ratings yet

- 3 Must-Have Projects For Your Data Science Portfolio - by Aakash N S - Jovian - Jan, 2021 - MediumDocument1 page3 Must-Have Projects For Your Data Science Portfolio - by Aakash N S - Jovian - Jan, 2021 - MediumvaskoreNo ratings yet

- MS Excel PivotTable Deleted Items Remain - Excel and AccessDocument1 pageMS Excel PivotTable Deleted Items Remain - Excel and AccessvaskoreNo ratings yet

- Semi-Automated Exploratory Data Analysis (EDA) in Python - by Destin Gong - Mar, 2021 - Towards DataDocument3 pagesSemi-Automated Exploratory Data Analysis (EDA) in Python - by Destin Gong - Mar, 2021 - Towards DatavaskoreNo ratings yet

- Excel - Selecting A Specific Column of A Named Range For The SUMIF Function - Stack OverflowDocument1 pageExcel - Selecting A Specific Column of A Named Range For The SUMIF Function - Stack OverflowvaskoreNo ratings yet

- Excel VBA - Message and Input Boxes in Excel, MsgBox Function, InputBox Function, InputBox MethodDocument2 pagesExcel VBA - Message and Input Boxes in Excel, MsgBox Function, InputBox Function, InputBox MethodvaskoreNo ratings yet

- Refer To Excel Cell in Table by Header Name and Row Number: 7 AnswersDocument1 pageRefer To Excel Cell in Table by Header Name and Row Number: 7 AnswersvaskoreNo ratings yet

- TreeSheets: App Reviews, Features, Pricing & Download - AlternativeToDocument1 pageTreeSheets: App Reviews, Features, Pricing & Download - AlternativeTovaskoreNo ratings yet

- Excel - Can Advanced Filter Criteria Be in The VBA Rather Than A Range? - Stack OverflowDocument1 pageExcel - Can Advanced Filter Criteria Be in The VBA Rather Than A Range? - Stack OverflowvaskoreNo ratings yet

- Sorting Arrays in VBADocument2 pagesSorting Arrays in VBAvaskoreNo ratings yet

- VBA - Bubble Sort. A Bubble Sort Is A Technique To Order - by Breakcorporate - MediumDocument1 pageVBA - Bubble Sort. A Bubble Sort Is A Technique To Order - by Breakcorporate - MediumvaskoreNo ratings yet

- Excel VBA Type Mismatch Error Passing Range To Array - Stack OverflowDocument1 pageExcel VBA Type Mismatch Error Passing Range To Array - Stack OverflowvaskoreNo ratings yet

- Yin 1981 Knowledge Utilization As A Networking ProcessDocument27 pagesYin 1981 Knowledge Utilization As A Networking ProcessKathy lNo ratings yet

- MGT 2103 - Assessment 1 - EssayDocument3 pagesMGT 2103 - Assessment 1 - EssayYousef Al HashemiNo ratings yet

- Group Members Name Anas Iqbal Awais Younas Roll No: 133 167 Subject: Principal of Management Submitted To: Mam Shumila NazDocument18 pagesGroup Members Name Anas Iqbal Awais Younas Roll No: 133 167 Subject: Principal of Management Submitted To: Mam Shumila NazAdeel AhmadNo ratings yet

- Readiness, Barriers and Potential Strenght of Nursing in Implementing Evidence-Based PracticeDocument9 pagesReadiness, Barriers and Potential Strenght of Nursing in Implementing Evidence-Based PracticeWahyu HidayatNo ratings yet

- How To Think Like Leonardo Da VinciDocument6 pagesHow To Think Like Leonardo Da VinciBassam100% (1)

- Final Thesis ResearchDocument32 pagesFinal Thesis ResearchEdelyn Baluyot95% (22)

- Design and Implementation Options For Digital Library SystemsDocument5 pagesDesign and Implementation Options For Digital Library SystemsChala GetaNo ratings yet

- Anggara 2018 J. Phys. Conf. Ser. 1013 012116Document7 pagesAnggara 2018 J. Phys. Conf. Ser. 1013 012116RENELYN BULAGANo ratings yet

- Articulo Margarita 2018-2Document7 pagesArticulo Margarita 2018-2Oscar EsparzaNo ratings yet

- Nacije I InteligencijaDocument9 pagesNacije I InteligencijaToni JandricNo ratings yet

- The Singapore Statistical SystemDocument11 pagesThe Singapore Statistical SystemFIRDA AZZAHROTUNNISANo ratings yet

- Research Design and Development Lectures 1-1Document26 pagesResearch Design and Development Lectures 1-1georgemarkNo ratings yet

- A Digital Future For Planning Recom HeadlinesDocument1 pageA Digital Future For Planning Recom Headlinestakmid0505No ratings yet

- Data Strategy Roadmap ENGDocument57 pagesData Strategy Roadmap ENGHillmer VallenillaNo ratings yet

- Corporate Governance & The Director (As Provided Undercompanies Act 2013)Document13 pagesCorporate Governance & The Director (As Provided Undercompanies Act 2013)Parth BindalNo ratings yet

- Appendices: A B C DDocument14 pagesAppendices: A B C Dayu gustianingsihNo ratings yet

- The Neuroscience of Mindfulness MeditationDocument13 pagesThe Neuroscience of Mindfulness MeditationDEIVIDI GODOYNo ratings yet

- Solomons ParadoxDocument10 pagesSolomons ParadoxSorin FocsanianuNo ratings yet

- To Study The Impact of Social Media andDocument23 pagesTo Study The Impact of Social Media andAradhana SinghNo ratings yet

- Social DialectologyDocument46 pagesSocial DialectologyKatia LeliakhNo ratings yet

- 216biomass Gasification in Fluidized Bed Gasifiersmodeling and SimulationguilnazDocument84 pages216biomass Gasification in Fluidized Bed Gasifiersmodeling and SimulationguilnaznahomNo ratings yet

- Introduction To Biostatistics SyllabusDocument8 pagesIntroduction To Biostatistics SyllabusKasparov RepedroNo ratings yet

- Spatial Analysis of Human Population Distribution and Growth in Marinduque Island, PhilippinesDocument3 pagesSpatial Analysis of Human Population Distribution and Growth in Marinduque Island, PhilippinesMarinela DaumarNo ratings yet

- Reynolds03 JSSC Vol38no9 Pp1555-1560 ADirectConvReceiverICforWCDMAMobileSystemsDocument6 pagesReynolds03 JSSC Vol38no9 Pp1555-1560 ADirectConvReceiverICforWCDMAMobileSystemsTom BlattnerNo ratings yet

- Couvy Duchesne 2018Document12 pagesCouvy Duchesne 2018spaciugNo ratings yet

- Final AssignmentDocument25 pagesFinal AssignmentSana50% (2)

- Biological Child PsychiatryDocument264 pagesBiological Child Psychiatrydejoguna126100% (2)