Implementation of The Data in Rapidminer

Uploaded by Ali

You might also like

- Data-Analytics ABDocument5 pagesData-Analytics ABSadeel YousefNo ratings yet

- Richard v. McCarthy - Applying Predictive Analytics - Finding Value in Data-Springer (2021)Document282 pagesRichard v. McCarthy - Applying Predictive Analytics - Finding Value in Data-Springer (2021)darko mc0% (1)

- Statistics For Psychologists (Calculating and Interpreting Basic Statistics Using SPSS) - Craig A. WendorfDocument96 pagesStatistics For Psychologists (Calculating and Interpreting Basic Statistics Using SPSS) - Craig A. WendorfsashasalinyNo ratings yet

- Forma Scientific - 86 Freezer Models 916 - 917 - 923 - 925 - and 926 Manual ENGDocument63 pagesForma Scientific - 86 Freezer Models 916 - 917 - 923 - 925 - and 926 Manual ENGNiKo100% (1)

- A Novel Hybrid Classification Model For The Loan Repayment Capability Prediction SystemDocument6 pagesA Novel Hybrid Classification Model For The Loan Repayment Capability Prediction SystemRahul SharmaNo ratings yet

- Experiment 4 Create A Test Plan Document For Any Application (E.g. Library Management System)Document3 pagesExperiment 4 Create A Test Plan Document For Any Application (E.g. Library Management System)John SinghNo ratings yet

- User Manual (Mental Health Issue Among University StudentDocument19 pagesUser Manual (Mental Health Issue Among University StudentANIS NABIHAH BINTI MOHD JAISNo ratings yet

- Ibrahim Zitouni Data Modeling Ibrahim Zitouni Update 64904 173936102Document22 pagesIbrahim Zitouni Data Modeling Ibrahim Zitouni Update 64904 173936102Ibrahim ZitouniNo ratings yet

- Anomaly Detection in Social Networks Twitter BotDocument11 pagesAnomaly Detection in Social Networks Twitter BotMallikarjun patilNo ratings yet

- 3903-Article Text-15481-4-10-20230905Document5 pages3903-Article Text-15481-4-10-20230905Honoratus Irpan Sinurat Honoratus Irpan SinuratNo ratings yet

- QuizDocument5 pagesQuizFatma Zohra OUIRNo ratings yet

- Take Assessment: Exercise 6: Index Choice and Query OptimizationDocument7 pagesTake Assessment: Exercise 6: Index Choice and Query OptimizationxgdsmxyNo ratings yet

- DSBDA Lab ManualDocument167 pagesDSBDA Lab ManualB34-Samruddhi LatoreNo ratings yet

- Performance Evaluation of Ontology Andfuzzybase CbirDocument7 pagesPerformance Evaluation of Ontology Andfuzzybase CbirAnonymous IlrQK9HuNo ratings yet

- Data Preprocessing Solution-24-37Document14 pagesData Preprocessing Solution-24-37gurudevpasupuleti09No ratings yet

- Research ProposalDocument4 pagesResearch ProposalNeha MuleNo ratings yet

- Data - Science Final ReportDocument8 pagesData - Science Final Reportshachidevmahato121No ratings yet

- Property Rental Price Prediction Using The Extreme Gradient BoostingDocument6 pagesProperty Rental Price Prediction Using The Extreme Gradient BoostingClara ANo ratings yet

- Ijcirv13n8 08Document8 pagesIjcirv13n8 08Aina RaksNo ratings yet

- FDS Unit 2Document8 pagesFDS Unit 2Amit AdhikariNo ratings yet

- Machine Learning - Project Group 3Document17 pagesMachine Learning - Project Group 3Tangirala AshwiniNo ratings yet

- Fake News ClassificationDocument8 pagesFake News ClassificationAnton LushkinNo ratings yet

- Lab Assignment 1 Title: Data Wrangling I: Problem StatementDocument12 pagesLab Assignment 1 Title: Data Wrangling I: Problem StatementMr. LegendpersonNo ratings yet

- Data Modeling ExamDocument4 pagesData Modeling ExammbpasumarthiNo ratings yet

- Experiment No. 03: Sr. No: 39 Name: Vanraj PardeshiDocument5 pagesExperiment No. 03: Sr. No: 39 Name: Vanraj PardeshiAnurag SinghNo ratings yet

- Python by Example Book 2 (Data Manipulation and Analysis)Document105 pagesPython by Example Book 2 (Data Manipulation and Analysis)Aamir MehmoodNo ratings yet

- Dwdmsem 6 QBDocument13 pagesDwdmsem 6 QBSuresh KumarNo ratings yet

- Analysis of Common Supervised Learning Algorithms Through ApplicationDocument20 pagesAnalysis of Common Supervised Learning Algorithms Through Applicationacii journalNo ratings yet

- Main Dock PinDocument31 pagesMain Dock PinPaul WalkerNo ratings yet

- 1stpaper NanlConf Textbook Rec Om MenderDocument3 pages1stpaper NanlConf Textbook Rec Om MenderKalyan RoyNo ratings yet

- A Strategy To Compromise Handwritten Documents Processing and Retrieving Using Association Rules MiningDocument6 pagesA Strategy To Compromise Handwritten Documents Processing and Retrieving Using Association Rules MiningUbiquitous Computing and Communication JournalNo ratings yet

- Unit04 CS04 DatabaseDesignDevelopment CourseworkTemplate HNDinComputingDocument9 pagesUnit04 CS04 DatabaseDesignDevelopment CourseworkTemplate HNDinComputingNicK VNo ratings yet

- Pergunta 1: 1 / 1 PontoDocument22 pagesPergunta 1: 1 / 1 PontoBruno CuryNo ratings yet

- ML Theory QuestionsDocument2 pagesML Theory QuestionsRamyashree Gs Dept. of Computer ApplicationsNo ratings yet

- Data Analysis MethodsDocument4 pagesData Analysis MethodsDorminic Wentworth Purcel100% (1)

- Bourne, DavidW. A. - Strauss, Steven - Mathematical Modeling of Pharmacokinetic Data (2018, CRC Press - Routledge)Document153 pagesBourne, DavidW. A. - Strauss, Steven - Mathematical Modeling of Pharmacokinetic Data (2018, CRC Press - Routledge)GuillermoNo ratings yet

- Lab 01Document5 pagesLab 01nayyabkanwal2004No ratings yet

- Method of Research and System AnalysisDocument27 pagesMethod of Research and System AnalysisCRISTINE JOY ATIENZANo ratings yet

- C45 AlgorithmDocument12 pagesC45 AlgorithmtriisantNo ratings yet

- Interplay Between Probabilistic Classifiers and Boosting Algorithms For Detecting Complex Unsolicited EmailsDocument5 pagesInterplay Between Probabilistic Classifiers and Boosting Algorithms For Detecting Complex Unsolicited EmailsShrawan Trivedi100% (1)

- Talend Examples DataQuality EN 7.2.1Document29 pagesTalend Examples DataQuality EN 7.2.1kunja4No ratings yet

- 04-DDD - Assignment Brief 2Document3 pages04-DDD - Assignment Brief 2Doan Thien An (FGW HCM)No ratings yet

- Teit Cbgs Dmbi Lab Manual FH 2015Document60 pagesTeit Cbgs Dmbi Lab Manual FH 2015Soumya PandeyNo ratings yet

- An Efficient Classification Algorithm For Real Estate DomainDocument7 pagesAn Efficient Classification Algorithm For Real Estate DomainIJMERNo ratings yet

- How To Start Your ProjectDocument12 pagesHow To Start Your ProjectNza HawramyNo ratings yet

- Important QuestionsDocument4 pagesImportant QuestionsAdilrabia rslNo ratings yet

- Data Mining Course OverviewDocument38 pagesData Mining Course OverviewharishkodeNo ratings yet

- BA Notes From LectureDocument9 pagesBA Notes From Lectureakashsharma9011328268No ratings yet

- 18-Article Text-61-1-10-20200510Document6 pages18-Article Text-61-1-10-20200510Ghi.fourteen Ghi.fourteenNo ratings yet

- Exp OlapDocument44 pagesExp OlapprashantNo ratings yet

- Current Trends in SoftwareDocument26 pagesCurrent Trends in SoftwareDinesh SamanNo ratings yet

- Unit II - DW&DMDocument19 pagesUnit II - DW&DMRanveer SehedevaNo ratings yet

- BDA Mini Project ReportDocument27 pagesBDA Mini Project ReportRajshree BorkarNo ratings yet

- Assignment 2Document1 pageAssignment 2khokharianNo ratings yet

- Data MningDocument10 pagesData MningrapinmystyleNo ratings yet

- Unit 1Document43 pagesUnit 1amrutamhetre9No ratings yet

- Project Report: A Report Submitted ToDocument13 pagesProject Report: A Report Submitted ToPuvvula Bhavya Sri chandNo ratings yet

- Lab #5 Sig FigsDocument2 pagesLab #5 Sig FigsRebekah MarchilenaNo ratings yet

- Crisp-DmDocument4 pagesCrisp-Dmshravan kumarNo ratings yet

- E Book Management Uml DiagramsDocument11 pagesE Book Management Uml DiagramsVarun Karra100% (1)

- Hotel Property Management SystemDocument12 pagesHotel Property Management SystemOlivia KateNo ratings yet

- Oracle Database 12c: SQL Workshop I: D80190GC10 Edition 1.0 August 2013 D83124Document144 pagesOracle Database 12c: SQL Workshop I: D80190GC10 Edition 1.0 August 2013 D83124Ndiogou diopNo ratings yet

- LogDocument84 pagesLogputri sulungNo ratings yet

- 10 Induction Motor ProtectionDocument19 pages10 Induction Motor ProtectionklicsbcmostNo ratings yet

- Canatal Series 6 - BrochureDocument8 pagesCanatal Series 6 - BrochureOscar A. Pérez MissNo ratings yet

- Form 182 For Name CorrectionDocument4 pagesForm 182 For Name CorrectionH ANo ratings yet

- Introduction To Microdevices and Microsystems: Module On Microsystems & MicrofabricaDocument31 pagesIntroduction To Microdevices and Microsystems: Module On Microsystems & MicrofabricaBanshi Dhar GuptaNo ratings yet

- HR Analytics 3rd ChapterDocument16 pagesHR Analytics 3rd ChapterAppu SpecialNo ratings yet

- Motiv Letter - Taltech - CSDocument2 pagesMotiv Letter - Taltech - CSJora Band7No ratings yet

- Submission Myname 3.sqlDocument3 pagesSubmission Myname 3.sqlRajendra LaddaNo ratings yet

- Initial PagesDocument7 pagesInitial PagesLaxmisha GowdaNo ratings yet

- egrzvs4-65d-r6n43-product-specificationsDocument6 pagesegrzvs4-65d-r6n43-product-specificationsantlozNo ratings yet

- XHHW 2 PDFDocument2 pagesXHHW 2 PDFNaveedNo ratings yet

- Leather Sofa Set Royal Sofa Set For Living Room Casa FurnishingDocument1 pageLeather Sofa Set Royal Sofa Set For Living Room Casa Furnishingalrickbarwa2006No ratings yet

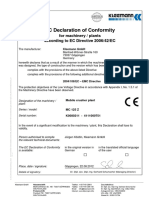

- EG Konformitätserklärung - K0080211 4111000751 - en GB PDFDocument1 pageEG Konformitätserklärung - K0080211 4111000751 - en GB PDFsfeNo ratings yet

- General Medical Merate S.PaDocument21 pagesGeneral Medical Merate S.PaFrancisco AvilaNo ratings yet

- Vesa CVT 1.2Document26 pagesVesa CVT 1.2Tvrtko LovrićNo ratings yet

- Chapter 7 Exam Style QuestionsDocument6 pagesChapter 7 Exam Style QuestionsUmm ArafaNo ratings yet

- ChartDocument1 pageChartFeoteNo ratings yet

- p01 04 Individual Drive Functions v9 Tud 0719 enDocument64 pagesp01 04 Individual Drive Functions v9 Tud 0719 enasphalto87No ratings yet

- DTV Group: Positions Job Description CertificationDocument6 pagesDTV Group: Positions Job Description CertificationhdnutzNo ratings yet

- KCET 2020 Cut Off Engineering General Round 1Document34 pagesKCET 2020 Cut Off Engineering General Round 1Adithya SRKianNo ratings yet

- The Youth Foreign Language School: 9/25/21 Unit 5: What Are You Watching? 1Document15 pagesThe Youth Foreign Language School: 9/25/21 Unit 5: What Are You Watching? 1Hoàng Khắc HuyNo ratings yet

- 3BUR000570R401B - en Advant Controller 460 User S GuideDocument334 pages3BUR000570R401B - en Advant Controller 460 User S Guidejose_alberto2No ratings yet

- © 2017 Interview Camp (Interviewcamp - Io)Document6 pages© 2017 Interview Camp (Interviewcamp - Io)abhi74No ratings yet

- Querying With Transact-SQL: Lab 7 - Using Table ExpressionsDocument2 pagesQuerying With Transact-SQL: Lab 7 - Using Table ExpressionsaitlhajNo ratings yet

- Mathematical Logic or ConnectivesDocument16 pagesMathematical Logic or ConnectivesMerrypatel2386No ratings yet

- Socio-Organizational Issues and Stakeholder Requirements - Part 3Document58 pagesSocio-Organizational Issues and Stakeholder Requirements - Part 3sincere guyNo ratings yet

- 2019 List of Govt ITIDocument41 pages2019 List of Govt ITISunny DuggalNo ratings yet

Analysis and Discussion

Dataset

This study uses a dataset from Kaggle entitled Book Data. It consists of 460 records containing book titles, publisher names and publication years. Each record (example) is described by these attributes, or features.

Fig 4.1 Book Data

The attributes used in this study are:

1. Book titles
2. Publisher names
3. Publication years (numeric, from 2007 to 2020)

Implementation of the data in RapidMiner

To implement classification in RapidMiner, the first step is to import the data. Two sets are imported: the training data, prepared in Excel, and the test data, which contains all 460 records from the database.

When importing the data, make sure that each column is assigned the correct attribute type. If a column is given the wrong type, the final result will also be wrong.
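The same precaution can be illustrated outside RapidMiner. The sketch below is a minimal Python example and not part of the original RapidMiner process: it assumes the book data has been exported to CSV with the hypothetical column names `title`, `publisher` and `year`, and it converts each column to its declared type on import, so a wrongly typed value raises an error instead of silently producing a wrong result.

```python
import csv
import io

# Hypothetical column names; the types mirror the ones used in this study
# (title and publisher are nominal text, year is an integer).
SCHEMA = {"title": str, "publisher": str, "year": int}


def load_books(csv_text: str) -> list[dict]:
    """Parse CSV text and cast every column to the type declared in SCHEMA."""
    rows = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for record_no, raw in enumerate(reader, start=1):
        row = {}
        for column, cast in SCHEMA.items():
            try:
                row[column] = cast(raw[column].strip())
            except (KeyError, AttributeError, ValueError) as exc:
                # KeyError: column missing from the header;
                # AttributeError: value missing in this record (None);
                # ValueError: value cannot be converted, e.g. "twenty" as int.
                raise ValueError(
                    f"record {record_no}: invalid value for {column!r}"
                ) from exc
        rows.append(row)
    return rows


sample = "title,publisher,year\nBook A,Publisher X,2015\n"
print(load_books(sample))  # [{'title': 'Book A', 'publisher': 'Publisher X', 'year': 2015}]
```

Failing on the first bad value is a deliberate choice here: it surfaces a type mismatch at import time, which is the point the paragraph above makes about assigning correct attribute types.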

In pattern recognition, information retrieval and classification, precision is the fraction of relevant samples among the retrieved samples, while recall is the fraction of the total number of relevant samples that were actually retrieved. Both precision and recall are therefore based on an understanding and measure of relevance. In this study, they are evaluated with cross-validation, to check how accurately the model classifies the data and to check for predictions made with low probability.

Fig 4.2 Cross-Validation of the Book Data
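The two definitions reduce to simple counts. With TP the relevant samples that were retrieved, FP the retrieved samples that are not relevant, and FN the relevant samples that were missed, precision is TP / (TP + FP) and recall is TP / (TP + FN). A minimal Python sketch, treating one class label as "relevant"; the labels in the example are made up for illustration and do not come from the book data.

```python
def precision_recall(actual, predicted, positive):
    """Return (precision, recall) for the class `positive`."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if p == positive and a == positive)
    fp = sum(1 for a, p in pairs if p == positive and a != positive)
    fn = sum(1 for a, p in pairs if p != positive and a == positive)
    # Guard against division by zero when nothing is predicted or relevant.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Illustrative labels only: "X" is treated as the relevant class.
actual = ["X", "X", "Y", "X", "Y"]
predicted = ["X", "Y", "X", "X", "X"]
print(precision_recall(actual, predicted, "X"))  # (0.5, 0.6666666666666666)
```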

Several attribute types are used, namely:

a) Binominal: for attributes that take exactly two values, such as YES/NO or 0/1.
b) Polynominal: for attributes that take more than two values.
c) Real: for numeric attributes whose values have a decimal part.
d) Integer: for numeric attributes whose values are whole numbers, without decimals.

Fig 4.3 Attributes of the Book Data
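The four type descriptions can be read as a simple decision rule. The following hypothetical Python helper applies that rule to a column of raw text values. It only mirrors the descriptions in this section; it is not RapidMiner's own type-guessing logic, which may behave differently.

```python
def _all_parse(values, cast):
    """True if every value can be converted with `cast` (int or float)."""
    try:
        for value in values:
            cast(value)
    except ValueError:
        return False
    return True


def infer_attribute_type(values):
    """Suggest binominal, polynominal, integer or real for a column of strings."""
    if not values:
        raise ValueError("cannot infer a type from an empty column")
    if len(set(values)) == 2:          # exactly two values, e.g. YES/NO or 0/1
        return "binominal"
    if _all_parse(values, int):        # whole numbers without decimals
        return "integer"
    if _all_parse(values, float):      # numbers with a decimal part
        return "real"
    return "polynominal"               # anything else, e.g. text with many values


print(infer_attribute_type(["2007", "2015", "2020"]))  # integer
print(infer_attribute_type(["YES", "NO", "YES"]))      # binominal
```

The two-value check comes first so that a 0/1 column is classed as binominal, as in the description of binominal attributes, rather than as integer.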

The following types are assigned to the attributes in both the training data and the testing data:

1. Book Titles: Polynominal
2. Publisher Names: Polynominal
3. Publication Years: Integer

At the processing stage, the accuracy for each publisher, book title and year of publication is shown. Cross-validation gives accurate results covering the book titles from those in highest demand to those in least demand, from year to year.

Modeling is the stage that directly involves data mining techniques: a technique is selected and its micro-averaged accuracy is determined.

Fig 4.4 Cross-Validation Performance on the Book Data
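In k-fold cross-validation, accuracy can be summarized in two ways: the per-fold accuracies can be averaged (the mean), or all test predictions from every fold can be pooled before computing a single accuracy (the micro average). The two differ when the folds have different sizes. A minimal Python sketch follows; it uses contiguous, unshuffled folds and a hypothetical majority-class model as a stand-in for the decision tree, purely to show how the two averages are computed.

```python
from collections import Counter
from statistics import mean


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def train_majority_model(train_labels):
    """Hypothetical stand-in learner: always predicts the most common label."""
    label = Counter(train_labels).most_common(1)[0][0]
    return lambda example: label


def cross_validate(examples, labels, k):
    """Return (mean of per-fold accuracies, micro-averaged accuracy)."""
    if not 2 <= k <= len(examples):
        raise ValueError("k must be between 2 and the number of examples")
    fold_accuracies, total_correct = [], 0
    for test_idx in k_fold_indices(len(examples), k):
        held_out = set(test_idx)
        train_labels = [y for i, y in enumerate(labels) if i not in held_out]
        model = train_majority_model(train_labels)
        correct = sum(model(examples[i]) == labels[i] for i in test_idx)
        fold_accuracies.append(correct / len(test_idx))
        total_correct += correct
    # Mean: every fold counts equally, whatever its size.
    # Micro average: every test example counts equally.
    return mean(fold_accuracies), total_correct / len(examples)


print(cross_validate(list(range(5)), ["A", "A", "B", "A", "A"], k=2))
```

With five examples split into folds of three and two, the example call gives a mean of about 0.833 but a micro average of 0.8, because the smaller, perfectly classified fold weighs more in the mean than in the pooled count.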

In this study, the modeling stage uses the decision tree technique to describe the books by year of publication, title and publisher.

Fig 4.5 Decision Tree for the Book Data
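A decision tree is grown by repeatedly choosing the attribute that best separates the examples. One common criterion is information gain, the reduction in label entropy after splitting on an attribute. RapidMiner's Decision Tree operator lets the user choose the split criterion, and this section does not state which one was used. The Python sketch below computes information gain for nominal attributes on a tiny made-up example, in which `period` (a bucketed publication year) and the `demand` label are hypothetical.

```python
from collections import Counter, defaultdict
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * log2(c / total) for c in Counter(labels).values())


def information_gain(rows, attribute, label):
    """Entropy reduction from splitting `rows` on the nominal `attribute`."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[attribute]].append(row[label])
    weighted = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy([row[label] for row in rows]) - weighted


def best_split(rows, attributes, label):
    """Attribute with the highest information gain, i.e. the root test."""
    return max(attributes, key=lambda a: information_gain(rows, a, label))


# Made-up rows for illustration only.
rows = [
    {"publisher": "P1", "period": "early", "demand": "high"},
    {"publisher": "P1", "period": "late", "demand": "high"},
    {"publisher": "P2", "period": "early", "demand": "low"},
    {"publisher": "P2", "period": "late", "demand": "low"},
]
print(best_split(rows, ["publisher", "period"], "demand"))  # publisher
```

Splitting on `publisher` yields two pure groups (gain of 1 bit), while `period` leaves both groups fully mixed (gain of 0), so `publisher` becomes the root test.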

The decision tree also breaks down the book data by showing how many books each publisher has published, according to the listed book titles.

Fig 4.6 Specification of the Decision Tree
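Per-publisher counts like those described by the tree can be cross-checked with a plain tally. A minimal Python sketch, assuming each record is a dict with hypothetical `title` and `publisher` keys; it counts records per publisher and lists each publisher's distinct titles. The records in the example are made up.

```python
from collections import Counter, defaultdict


def count_by_publisher(rows):
    """Number of book records per publisher."""
    return Counter(row["publisher"] for row in rows)


def titles_by_publisher(rows):
    """Sorted list of distinct titles for each publisher."""
    titles = defaultdict(set)
    for row in rows:
        titles[row["publisher"]].add(row["title"])
    return {publisher: sorted(names) for publisher, names in titles.items()}


books = [
    {"title": "Book A", "publisher": "Publisher X"},
    {"title": "Book B", "publisher": "Publisher X"},
    {"title": "Book C", "publisher": "Publisher Y"},
]
print(count_by_publisher(books).most_common())  # [('Publisher X', 2), ('Publisher Y', 1)]
```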

Process implementation using RapidMiner

Open RapidMiner, create a new repository in the Repository panel and add the book data to it. Place a Retrieve operator in the Design view and set it to load the book data. Next, add a Decision Tree operator and connect the output of the Retrieve operator to it. Then add the Apply Model and Performance operators: connect the model produced by the Decision Tree operator and the testing data to Apply Model, and connect the output of Apply Model to the Performance operator, as shown in Figure 4.7.

Fig 4.7 RapidMiner Operators
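The operator chain Retrieve → Decision Tree → Apply Model → Performance can be mirrored with four small functions to show how data flows between the steps. This is a hypothetical Python sketch, not RapidMiner's API: the decision tree step is simplified to a one-level tree (a decision stump) on a single nominal attribute, and the attribute and label names are made up.

```python
from collections import Counter, defaultdict


def retrieve(rows):
    """Stand-in for Retrieve: return a copy of the stored example set."""
    return [dict(r) for r in rows]


def train_stump(examples, attribute, label):
    """Stand-in for Decision Tree: a one-level tree (decision stump) that
    predicts the majority label for each value of `attribute`."""
    by_value = defaultdict(Counter)
    for ex in examples:
        by_value[ex[attribute]][ex[label]] += 1
    default = Counter(ex[label] for ex in examples).most_common(1)[0][0]
    rules = {value: counts.most_common(1)[0][0] for value, counts in by_value.items()}
    return attribute, rules, default


def apply_model(model, examples):
    """Stand-in for Apply Model: add a 'prediction' field to every example."""
    attribute, rules, default = model
    return [{**ex, "prediction": rules.get(ex[attribute], default)} for ex in examples]


def performance(labeled, label):
    """Stand-in for Performance: accuracy of predictions against the label."""
    correct = sum(ex["prediction"] == ex[label] for ex in labeled)
    return correct / len(labeled)


train = [
    {"publisher": "P1", "demand": "high"},
    {"publisher": "P1", "demand": "high"},
    {"publisher": "P2", "demand": "low"},
]
test = [
    {"publisher": "P1", "demand": "high"},
    {"publisher": "P2", "demand": "high"},
    {"publisher": "P3", "demand": "high"},  # unseen value, falls back to default
]
model = train_stump(retrieve(train), "publisher", "demand")
print(performance(apply_model(model, retrieve(test)), "demand"))  # 0.6666666666666666
```

Keeping the model as plain data (attribute, rules, default) mirrors how the model port of the Decision Tree operator feeds Apply Model separately from the testing data.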
