Professional Documents

Culture Documents

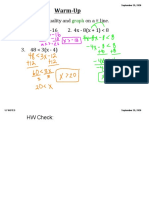

Tele Immersion Documentation

Uploaded by

naveenjawalkar

Tele-Immersion

INTRODUCTION:

Tele-immersion, a new medium for human interaction enabled by digital technologies, approximates the illusion that a user is in the same physical space as other people, even though the other participants might in fact be hundreds or thousands of miles away. It combines the display and interaction techniques of virtual reality with new vision technologies that transcend the traditional limitations of a camera. Rather than merely observing people and their immediate environment from one vantage point, tele-immersion stations convey them as “moving sculptures,” without favoring a single point of view. The result is that all the participants, however distant, can share and explore a life-size space.

Beyond improving on videoconferencing, tele-immersion was conceived as an ideal application for driving network-engineering research, specifically for Internet2, the primary research consortium for advanced network studies in the U.S. If a computer network can support tele-immersion, it can probably support any other application. This is because tele-immersion demands as little delay as possible from flows of information (and as little inconsistency in delay), in addition to the more common demands for very large and reliable flows.

WHAT IS TELE-IMMERSION?

Tele-immersion enables users at geographically distributed sites to collaborate in real time in a shared, simulated, hybrid environment as if they were in the same physical room.

It is the ultimate synthesis of media technologies:

3D environment scanning.

Projective and display technologies.

Tracking technologies.

Audio technologies.

Powerful networking.

The considerable requirements of a tele-immersion system, such as high bandwidth, low latency and low latency variation, make it one of the most challenging network applications. This application is therefore considered to be an ideal driver for the research agendas of the Internet2 community.

Department of ECE, MRITS

Tele-immersion is that sense of shared presence with distant individuals and their environments that feels substantially as if they were in one’s own local space. This kind of tele-immersion differs significantly from conventional video teleconferencing in that the user’s view of the remote environment changes dynamically as the user’s head moves.

VIDEOCONFERENCING VS TELE-IMMERSION:

Human interaction has both verbal and nonverbal elements, and videoconferencing seems precisely configured to confound the nonverbal ones. It is impossible to make eye contact perfectly, for instance, in today’s videoconferencing systems, because the camera and the display screen cannot be in the same spot. This usually leads to a deadened and formal affect in interactions, eye contact being a nearly ubiquitous subconscious method of affirming trust. Furthermore, participants aren’t able to establish a sense of position relative to one another and therefore have no clear way to direct attention, approval or disapproval.

Tele-immersion is designed to overcome these limitations. Participants can make eye contact, which helps establish a feeling of trust. The system approximates the illusion that a user is in the same physical space as other people, even though they may be far apart. Rather than merely observing people and their immediate environment from one vantage point, tele-immersion stations convey them as “moving sculptures”, without favoring a single point of view. Participants can share a life-size space and convey emotions at their true intensity. A three-dimensional view of the room is obtained, and shared models can also be simulated.

NEW CONCEPTS AND CHALLENGES

In a tele-immersive environment, computers recognize the presence and movements of individuals and of both physical and virtual objects, track those individuals and objects, and project them in realistic, multiple, geographically distributed immersive environments on stereo-immersive surfaces. This requires sampling and resynthesis of the physical environment as well as of the users’ faces and bodies, which is a new challenge that will move the range of emerging technologies, such as scene depth extraction and warp rendering, to the next level.

Tele-immersive environments will therefore facilitate not only interaction between users themselves but also between users and computer-generated models and simulations. This will require expanding the boundaries of computer vision, tracking, display, and rendering technologies. As a result, all of this will enable users to achieve a compelling experience and it will lay the groundwork for a higher degree of their inclusion into the entire system.

To fully exploit tele-immersion, we need to provide interaction that is as seamless as in the real world yet allows even more effective communication. For example, in the real world someone involved in a meeting might draw a picture on paper and then show the paper to the other people in the meeting. In tele-immersion spaces people have the opportunity to communicate in fundamentally new ways.

REQUIREMENTS OF TELE-IMMERSION

Tele-immersion is the ultimate synthesis of media technologies. It needs the best of every media technology. The requirements are given below.

3D environment scanning:

To explore the environment properly, a stereoscopic view is required, and this calls for a 3D environment-scanning method. Multiple cameras are used to produce a separate image for each eye. With polarized glasses, each eye sees only its own image, which yields a 3D view.

The key is that in tele-immersion, each participant must have a personal viewpoint of remote scenes; in fact, two of them, because each eye must see from its own perspective to preserve a sense of depth. Furthermore, participants should be free to move about, so each person’s perspective will be in constant motion. Tele-immersion demands that each scene be sensed in a manner that is not biased toward any particular viewpoint (a camera, in contrast, is locked into portraying a scene from its own position). Each place, and the people and things in it, has to be sensed from all directions at once and conveyed as if it were an animated three-dimensional sculpture. Each remote site receives information describing the whole moving sculpture and renders viewpoints as needed locally. The scanning process has to be accomplished fast enough to take place in real time, within at most a small fraction of a second.

The sculpture representing a person can then be updated quickly enough to achieve the illusion of continuous motion. This illusion starts to appear at about 12.5 frames per second (fps) but becomes robust at about 25 fps and better still at faster rates.
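The frame rates quoted above translate directly into a time budget within which each update of the sculpture must be captured, reconstructed and delivered. The following is a minimal Python sketch of that arithmetic; the function name `frame_budget_ms` is purely illustrative and not part of any tele-immersion system.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Frame rates taken from the text: 12.5 fps (illusion starts) and 25 fps (robust).
    for fps in (12.5, 25.0):
        print(f"{fps} fps -> {frame_budget_ms(fps):.0f} ms per frame")
```

At 12.5 fps the whole pipeline has 80 ms per update; at 25 fps it has only 40 ms.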

Measuring the moving three-dimensional contours of the inhabitants of a room and its other contents can be accomplished in a variety of ways. In 1993, Henry Fuchs of the University of North Carolina at Chapel Hill proposed one method, known as the “sea of cameras” approach, in which the viewpoints of many cameras are compared. In typical scenes in a human environment, there will tend to be visual features, such as a fold in a sweater, that are visible to more than one camera. By comparing the angle at which these features are seen by different cameras, algorithms can piece together a three-dimensional model of the scene.
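The angle comparison described above is, at its core, triangulation. The sketch below illustrates the idea for the simplest case of two cameras and one feature in a plane. It assumes (these are illustrative assumptions, not details from the source) that camera A sits at the origin, camera B sits at distance `baseline_m` along the x-axis, and each angle is measured between the baseline and the ray toward the feature. A real sea-of-cameras system would also need feature matching and camera calibration, which are not shown.

```python
import math


def triangulate(baseline_m: float, angle_a_deg: float, angle_b_deg: float):
    """Locate a feature seen by two cameras on a common baseline.

    Returns (x, depth): position along the baseline from camera A, and the
    perpendicular distance from the baseline, both in metres.
    """
    a = math.radians(angle_a_deg)
    b = math.radians(angle_b_deg)
    if not (0 < a < math.pi and 0 < b < math.pi and a + b < math.pi):
        raise ValueError("rays do not intersect in front of the cameras")
    # Law of sines applied to the triangle camera A, camera B, feature.
    depth = baseline_m * math.sin(a) * math.sin(b) / math.sin(a + b)
    x = depth / math.tan(a)
    return x, depth


if __name__ == "__main__":
    x, depth = triangulate(2.0, 45.0, 45.0)
    print(f"feature at x = {x:.2f} m, depth = {depth:.2f} m")
```

With a 2 m baseline and both cameras seeing the feature at 45 degrees, the feature lies midway between the cameras at a depth of 1 m.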

This technique had been explored in non-real-time configurations, which later culminated in the “Virtualized Reality” demonstration at Carnegie Mellon University, reported in 1995. That setup consisted of 51 inward-looking cameras mounted on a geodesic dome. Because it was not a real-time device, it could not be used for tele-immersion.

Ruzena Bajcsy, head of the GRASP (General Robotics, Automation, Sensing and Perception) Laboratory at the University of Pennsylvania, was intrigued by the idea of real-time seas of cameras. Starting in 1994, she began working with small-scale “puddles” of two or three cameras to gather real-world data for virtual-reality applications.

But a sea of cameras in itself isn’t a complete solution. Suppose a sea of cameras is looking at a clean white wall. Because there are no surface features, the cameras have no information with which to build a sculptural model. A person can look at a white wall without being confused. Humans don’t worry that a wall might actually be a passage to an infinitely deep white chasm, because we don’t rely on geometric cues alone; we also have a model of a room in our minds that can rein in errant mental interpretations. Unfortunately, to today’s digital cameras, a person’s forehead or T-shirt can present the same challenge as a white wall, and today’s software isn’t smart enough to undo the confusion that results.

Researchers at Chapel Hill came up with a novel method that has shown promise for overcoming this obstacle, called “imperceptible structured light” or ISL. Conventional light bulbs flicker 50 or 60 times a second, fast enough for the flickering to be generally invisible to the human eye. Similarly, ISL appears to the human eye as a continuous source of white light, like an ordinary light bulb, but in fact it is filled with quickly changing patterns visible only to specialized, carefully synchronized cameras. These patterns fill in voids such as white walls with imposed features that allow a sea of cameras to complete the measurements. If imperceptible structured light is not used, then there may be holes in the reconstruction data that result from occlusions, areas that aren’t seen by enough cameras, or areas that don’t provide distinguishing surface features.
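One widely used way to impose distinguishable features with projected patterns is to show a sequence of binary Gray-code stripe images, so that every projector column receives a unique on/off sequence over time. The sketch below illustrates that general idea only. The source does not say which patterns the Chapel Hill ISL system projects, so Gray coding is an assumption made for illustration, and the function names are hypothetical.

```python
def column_code(column, n_bits):
    """On/off sequence (most significant pattern first) projected onto one column."""
    gray = column ^ (column >> 1)  # binary-reflected Gray code of the column index
    return [(gray >> i) & 1 for i in range(n_bits - 1, -1, -1)]


def decode_column(bits):
    """Recover the projector column from the sequence a camera pixel observed."""
    gray = 0
    for bit in bits:
        gray = (gray << 1) | bit
    column = 0
    while gray:  # Gray-to-binary: XOR together all right shifts of the code
        column ^= gray
        gray >>= 1
    return column


if __name__ == "__main__":
    for col in range(8):
        print(col, column_code(col, 3))
```

Neighbouring columns differ in exactly one pattern, so a single misread bit at a stripe boundary shifts the decoded column by at most one.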

To accomplish simultaneous capture and display, an office of the future is envisioned in which the ceiling lights are replaced by computer-controlled cameras and “smart” projectors that are used to capture dynamic image-based models with imperceptible structured light techniques, and to display high-resolution images on designated display surfaces. By doing both simultaneously on the designated display surfaces, one can dynamically adjust or auto-calibrate for geometric, intensity, and resolution variations resulting from irregular or changing display surfaces, or overlapped projector images.

The current approach to dynamic image-based modeling is to use an optimized structured light scheme that can capture per-pixel depth and reflectance at interactive rates. The approach to rendering on the designated (potentially irregular) display surface is to employ a two-pass projective texture scheme to generate images that, when projected onto the surfaces, appear correct to a moving head-tracked observer.

Image processing:

At the transmitting end, the scanned 3D image is generated using one of two techniques:

Shared table approach

Here, the depth of the 3D image is calculated using 3D wire frames. This technique uses various camera views and complex image analysis algorithms to calculate the depth.

IC3D (incomplete 3D) approach

In this case, a common texture surface is extracted from the available camera views and the depth information is coded in an associated disparity map. This representation can be encoded into an MPEG-4 video object, which is then transmitted.

Fig:1 Left and Right Camera Views of Stereo Test Sequence

Fig:2 Texture and Disparity Maps Extracted from Stereo Test Sequence

Reconstruction in a holographic environment:

The image is reconstructed in a holographic environment. The reconstruction process differs between the shared table and IC3D approaches.

Shared Table Approach

Assuming that the geometrical parameters of the multi-view capture device, the virtual scene and the virtual camera are well fitted to each other, the scene is guaranteed to be viewed in the right perspective, even while the viewing position changes.

IC3D Approach

The decoded disparities are scaled according to the user’s 3D viewpoint in the virtual scene, and a disparity-controlled projection is carried out. The 3D perspective of the person changes with the movement of the virtual camera.
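The disparity-controlled projection described above can be pictured as shifting each texture pixel sideways by its disparity multiplied by a viewpoint-dependent factor. The following is a minimal sketch for a single image row, not the IC3D implementation. It assumes non-negative disparities in pixels, a hypothetical scale factor `alpha` derived from the viewer's position (0 reproduces the reference view), and a simple rule that nearer pixels (larger disparity) win when two pixels land on the same target.

```python
def synthesize_row(texture, disparity, alpha):
    """Forward-warp one image row to a new viewpoint.

    texture:   pixel values of the reference view
    disparity: per-pixel disparity in pixels (assumed >= 0; larger means nearer)
    alpha:     viewpoint scale factor; 0.0 returns the reference view unchanged
    Returns a new row in which uncovered pixels (holes) are None.
    """
    width = len(texture)
    out = [None] * width
    out_disp = [-1.0] * width  # disparity of the pixel currently stored at each target
    for x, (value, d) in enumerate(zip(texture, disparity)):
        target = x + round(alpha * d)
        # Keep the nearest surface when several source pixels map to one target.
        if 0 <= target < width and d > out_disp[target]:
            out[target] = value
            out_disp[target] = d
    return out


if __name__ == "__main__":
    row = ["a", "b", "c", "d"]
    disp = [0, 0, 2, 2]
    print(synthesize_row(row, disp, 0.5))
```

The holes left behind a shifted foreground object are exactly the regions a practical renderer would have to fill from other camera views or by interpolation.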

In both approaches, at the receiving end the entire composed 3D scene is rendered onto the 2D display of the terminal by using a virtual camera. The position of the virtual camera coincides with the current position of the conferee’s head. For this purpose the head position is continuously registered by a head tracker and the virtual camera is moved with the head.
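Moving the virtual camera with the head amounts to re-projecting every scene point onto the display from the tracked eye position. The sketch below shows that projection for one point. The coordinate convention is an assumption made for illustration: the display lies in the plane z = 0, the viewer's head is at z > 0, the remote scene is at z < 0, and all units are metres.

```python
def project_to_screen(head, point):
    """Project a 3D scene point onto the display plane z = 0 as seen from the head.

    head, point: (x, y, z) tuples; the head must be in front of the screen (z > 0)
    and the point must be farther from the viewer than the head is.
    Returns the (x, y) position on the screen where the point should be drawn.
    """
    hx, hy, hz = head
    px, py, pz = point
    if hz <= 0:
        raise ValueError("head must be in front of the screen (z > 0)")
    if pz >= hz:
        raise ValueError("point must lie beyond the head along the viewing direction")
    # Parameter along the ray head -> point at which the ray crosses z = 0.
    t = hz / (hz - pz)
    return (hx + t * (px - hx), hy + t * (py - hy))


if __name__ == "__main__":
    scene_point = (0.0, 0.0, -1.0)  # one metre "behind" the screen
    for head in [(0.0, 0.0, 1.0), (0.2, 0.0, 1.0)]:
        print(head, "->", project_to_screen(head, scene_point))
```

When the head moves 0.2 m sideways, the drawn position of the point shifts by 0.1 m; this motion parallax is what makes the remote scene appear to sit behind the screen.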

Projective & display technologies:

With tele-immersion, users must feel that they are immersed in the other person’s world. This requires a projected view of the other user’s world, which in turn needs a large screen. For better projection, the screen should be curved and special projection cameras are to be used.

Tracking technologies:

Every object in the immersive environment must be tracked so that the experience feels like the real world. This is done by tracking the movement of the user and adjusting the camera accordingly.

Moving Sculptures:

Tele-immersion combines the display and interaction techniques of virtual reality with new vision technologies that transcend the traditional limitations of a camera. Rather than merely observing people and their immediate environment from one vantage point, tele-immersion stations convey them as “moving sculptures”, without favoring a single point of view. The result is that all the participants, however distant, can share and explore a life-size space.

Head & Hand tracking:

The UNC and Utah sites collaborated on several joint design-and-manufacture efforts, including the design and rapid production of a head-tracker component (HiBall), now used in the experimental UNC wide-area ceiling tracker. Precise, unencumbered tracking of a user’s head and hands over a room-sized working area has been an elusive goal in modern technology and the weak link in most virtual reality systems. Current commercial offerings based on magnetic technologies perform poorly around such ubiquitous, magnetically noisy computer components as CRTs, while optical-based products have a very small working volume and require illuminated beacon targets (LEDs). Lack of an effective tracker has crippled a host of augmented reality applications in which the user’s views of the local surroundings are augmented by synthetic data (e.g., the location of a tumor in a patient’s breast or the removal path of a part from within a complicated piece of machinery).

Audio technologies:

For a truly immersive effect the audio system has to be extended to another dimension, i.e., a 3D sound capture and reproduction method has to be used. This is necessary to track each sound source’s relative position.

Powerful networking:

If a computer network can support tele-immersion, it can probably support any other application. This is because tele-immersion demands as little delay as possible from flows of information (and as little inconsistency in delay), in addition to the more common demands for very large and reliable flows. The considerable requirements of a tele-immersion system, such as high bandwidth, low latency and low latency variation (jitter), make it one of the most challenging network applications.
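The two delay requirements named above, low latency and low jitter, can both be estimated from a series of measured one-way packet delays. The sketch below uses a deliberately simple definition of jitter, the mean absolute difference between consecutive delays; standardized measures such as the smoothed interarrival jitter used by RTP differ in detail. The function name and sample values are illustrative assumptions.

```python
from statistics import mean


def latency_stats(delays_ms):
    """Return (mean delay, jitter) in milliseconds for a list of packet delays.

    Jitter is taken here as the mean absolute change between consecutive delays.
    """
    if len(delays_ms) < 2:
        raise ValueError("need at least two delay samples")
    avg = mean(delays_ms)
    jitter = mean([abs(b - a) for a, b in zip(delays_ms, delays_ms[1:])])
    return avg, jitter


if __name__ == "__main__":
    steady = [40.0, 40.5, 39.5, 40.0]  # made-up samples: similar mean, low jitter
    bursty = [10.0, 70.0, 15.0, 65.0]  # made-up samples: same mean, high jitter
    print("steady:", latency_stats(steady))
    print("bursty:", latency_stats(bursty))
```

Both sample series have the same average delay of 40 ms, but only the steady one would let a receiver update the sculpture at an even pace.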

Internet2: the driving force behind tele-immersion

Internet2 is the next-generation Internet. Tele-immersion was conceived as an ideal application for driving network-engineering research. Internet2 is a consortium consisting of the US government, industries and around 200 universities and colleges.

It offers high bandwidth and speed, and it enables revolutionary Internet applications.

Need for speed:

If a computer network can support tele-immersion, it can probably support any other application. This is because tele-immersion demands as little delay as possible from flows of information (and as little inconsistency in delay), in addition to the more common demands for very large and reliable flows.

Strain on the Network:

In tele-immersion, not only each participant’s motion but also the entire surface of each participant has to be sent, which puts a heavy strain on the network. Bandwidth is a crucial concern. Our demand for bandwidth varies with the scene and application; a more complex scene requires more bandwidth. Conveying a single person at a desk, without the surrounding room, at a slow frame rate of about two frames per second has proved to require around 20 megabits per second, with peaks of up to 80 megabits per second.

Network backbone:

A backbone is a network within a network that lets information travel over exceptionally powerful, widely shared connections to go long distances more quickly. Some notable backbones designed to support research were the NSFnet in the late 1980s and the vBNS in the mid-1990s. Each of these played a part in inspiring new applications for the Internet, such as the World Wide Web. Another backbone research project, called Abilene, began in 1998, and it was to serve a university consortium called Internet2. Abilene now reaches more than 170 American research universities. If the only goal of Internet2 were to offer a high level of bandwidth (that is, a large number of bits per second), then the mere existence of Abilene and related resources would be sufficient. But Internet2 research targeted additional goals, among them the development of new protocols for handling applications that demand very high bandwidth and very low, controlled latencies (delays imposed by processing signals en route).

The “last mile” of network connection that runs into computer science departments currently tends to be an OC3 line, which can carry 155 megabits per second, just about right for sustaining a three-way conversation at a slow frame rate. But an OC3 line has approximately 100 times more capacity than what is usually considered a broadband connection now, and it is correspondingly more expensive.

Computational Needs

Beyond the scene-capture system, the principal components of a tele-immersion setup are the computers, the network services, and the display and interaction devices. Each of these components has been advanced in the cause of tele-immersion and must advance further. Tele-immersion is a voracious consumer of computer resources. Literally dozens of processors are currently needed at each site to keep up with the demands of tele-immersion. Roughly speaking, a cluster of eight two-gigahertz Pentium processors with shared memory should be able to process a trio of cameras within a sea of cameras in approximately real time. Such processor clusters are expected to become available shortly.

One promising avenue of exploration in the next few years will be routing tele-immersion processing through remote supercomputer centers in real time to gain access to superior computing power. In this case, a supercomputer will have to be fast enough to compensate for the extra delay caused by the travel time to and from its location.

Bandwidth is a crucial concern. Our demand for bandwidth varies with the scene and application; a more complex scene requires more bandwidth. We can assume that much of the scene, particularly the background walls and such, is unchanging and does not need to be resent with each frame.

Conveying a single person at a desk, without the surrounding room, at a slow frame rate of about two frames per second has proved to require around 20 megabits per second, with peaks of up to 80 megabits per second. With time, however, that number will fall as better compression techniques become established. Each site must receive the streams from all the others, so in a three-way conversation the bandwidth requirement must be multiplied accordingly. The “last mile” of network connection that runs into computer science departments currently tends to be an OC3 line, which can carry 155 megabits per second, just about right for sustaining a three-way conversation at a slow frame rate. But an OC3 line is approximately 100 times more capacious than what is usually considered a broadband connection now, and it is correspondingly more expensive.
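The multiplication mentioned above can be made concrete with the figures given in the text (about 20 megabits per second per person, peaks of up to 80, and a 155-megabit OC3 line). The sketch below is only a rough estimate: it assumes each site receives exactly one stream from every other site and, for the worst case, that all peaks coincide. The function name is illustrative.

```python
OC3_MBPS = 155  # OC3 line capacity quoted in the text


def inbound_mbps(sites: int, per_stream_mbps: float) -> float:
    """Bandwidth one site needs to receive a stream from every other site."""
    if sites < 2:
        raise ValueError("a conversation needs at least two sites")
    return (sites - 1) * per_stream_mbps


if __name__ == "__main__":
    average = inbound_mbps(3, 20)  # about 20 Mbit/s per person at ~2 frames per second
    peak = inbound_mbps(3, 80)     # peaks of up to 80 Mbit/s per person
    print(f"average: {average} Mbit/s, fits OC3: {average <= OC3_MBPS}")
    print(f"worst-case peak: {peak} Mbit/s, fits OC3: {peak <= OC3_MBPS}")
```

Under these assumptions a three-way session averages 40 Mbit/s inbound per site, well within an OC3 line, while coinciding peaks (160 Mbit/s) would slightly exceed it. That is consistent with the text describing the line as just about right.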

Tele Cubicle

The tele-cubicle represents the next-generation immersive interface. It can also be seen as a subset of all possible immersive interfaces. An office appears as one quadrant in a larger shared virtual office space. The canvases onto which the imagery can be displayed are a stereo-immersive desk surface as well as at least two stereo-immersive wall surfaces. Such a system represents the unification of Virtual Reality and videoconferencing, and it provides an opportunity for the full integration of VR into the workflow. Physical and virtual environments appear united for both input and display. This combination, we believe, offers a new paradigm for human communications and collaboration.

RESULTS OF THE DEMO IN OCTOBER 2000:

In the demo in October 2000, most of the confetti was gone and the overall quality and speed of the system had increased, but the most important improvement came from researchers at Brown University. The demo showed a unified system with 3D real-time acquisition data (“real” data), 3D synthetic objects (“virtual” data) and user interaction with 3D objects using a virtual laser pointer.

The participants in the session were not only able to see each other in 3D but were also able to engage in collaborative work, here a simple example of interior office design. The remote site in the demo was Advanced Network & Services, Armonk, NY, and the local site where images were taken was the University of North Carolina at Chapel Hill, NC. The data were sent over Internet2 links (Abilene backbone) at a rate of 15-20 Mb/sec (no compression applied), with 3D real-time acquisition data combined with a static 3D background and synthetic 3D graphics objects. For the interactive part, a magnetic tracker was used to mimic a virtual laser pointer, as well as a mouse.

All synthetic objects were either downloaded or created on the fly. Both users could move objects around the scene and collaborate in the design process. In between the two people are virtual objects (the furniture models). These are objects that don’t come from either physical place. They can be created and manipulated on the fly; there is a deep architecture behind them (which was written at Brown University).

Fig:3 Three-way video conferencing using tele-immersion

APPLICATIONS

1) Collaborative Engineering Works

Teams of engineers might collaborate at great distances on computerized designs for new machines that can be tinkered with as though they were real models on a shared workbench. Archaeologists from around the world might experience being present during a crucial dig. Rarefied experts in building inspection or engine repair might be able to visit locations without losing time to air travel.

2) Video Conferencing

In fact, tele-immersion, unlike videoconferencing, might come to be seen as real competition for air travel. Although few would claim that tele-immersion will be absolutely as good as “being there” in the near term, it might be good enough for business meetings, professional consultations, training sessions, trade show exhibits and the like. Business travel might be replaced to a significant degree by tele-immersion in 10 years. This is not only because tele-immersion will become better and cheaper but also because air travel will face limits to growth because of safety, land use and environmental concerns.

3) Immersive Electronic Book:

Applications of tele-immersion will include immersive electronic books that in effect blend a “time machine” with 3D hypermedia. They add an important dimension: the ability to record experiences in which a viewer, immersed in the 3D reconstruction, can literally walk through the scene or move backward and forward in time. While there are many potential application areas for such novel technologies (e.g., design and virtual prototyping, maintenance and repair, paleontological and archaeological reconstruction), the focus here will be on a socially important and technologically challenging driving application: teaching surgical management of difficult, potentially lethal injuries.

4) Collaborative mechanical CAD:

A group of designers will be able to collaborate from remote sites in an interactive design process. They will be able to manipulate a virtual model starting from the conceptual design, review and discuss the design at each stage, perform desired evaluation and simulation, and even finish off the cycle with the production of the concrete part on the milling machines.

CONCLUSION

Tele-immersion is a dynamic concept that will transform the way humans interact with each other and with the world in general.

Tele-immersion takes videoconferencing to the next level. It helps simulate an office environment, so it can reduce business travel.

Tele-immersion is expected to be very expensive when implemented, which will make it hard for it to compete with other communication technologies.


- Obstacle Avoidance Using Haptics and A Laser Rangefinder: Daniel Innala Ahlmark, Håkan Fredriksson, Kalevi HyyppäDocument6 pagesObstacle Avoidance Using Haptics and A Laser Rangefinder: Daniel Innala Ahlmark, Håkan Fredriksson, Kalevi HyyppäagebsonNo ratings yet

- The Concept Remains The SameDocument7 pagesThe Concept Remains The SameMayuri TalrejaNo ratings yet
