Heteroscedasticity

Uploaded by

Md Azim

Heteroscedasticity:

Heteroscedasticity means unequal scatter. In regression analysis, we talk about heteroscedasticity in the context of the residuals, or error term. Specifically, heteroscedasticity is a systematic change in the spread of the residuals over the range of measured values.

Heteroscedasticity is a problem because ordinary least squares (OLS) regression assumes that all residuals are drawn from a population that has a constant variance (homoscedasticity). To satisfy this assumption and be able to trust the results of a regression analysis, the residuals should not be heteroscedastic.

What Causes Heteroscedasticity?

Heteroscedasticity, also spelled heteroskedasticity, occurs more often in datasets that have a large range between the largest and smallest observed values. While there are numerous reasons why heteroscedasticity can exist, a common explanation is that the error variance changes proportionally with a factor. This factor might be a variable in the model.

In some cases, the spread of the errors grows in absolute terms with this factor but remains constant as a percentage of it. For instance, a 10% change in a number such as 100 (a change of 10) is much smaller than a 10% change in a large number such as 100,000 (a change of 10,000). In this scenario, you expect to see larger residuals associated with higher values. That's why you need to be careful when working with wide ranges of values!
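The 10% example can be checked with a small simulation. This is only a sketch using made-up numbers: the helper `simulate_errors`, the sample size of 500, and the normally distributed errors are all assumptions for illustration, not part of the original note.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

def simulate_errors(true_value, pct=0.10, n=500):
    """Draw n errors whose standard deviation is pct * true_value."""
    return [random.gauss(0.0, pct * true_value) for _ in range(n)]

# Same 10% relative noise, very different absolute scatter.
errors_small = simulate_errors(100)
errors_large = simulate_errors(100_000)

spread_small = statistics.pstdev(errors_small)
spread_large = statistics.pstdev(errors_large)

print(f"error SD around 100:     {spread_small:,.1f}")
print(f"error SD around 100,000: {spread_large:,.1f}")
```

The first spread should come out near 10 and the second near 10,000, which is the pattern of larger residuals at higher values described above.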

Because large ranges are associated with this problem, some types of models are more prone to heteroscedasticity. Cross-sectional studies often include both very small and very large values, and this larger disparity between the largest and smallest values gives them a higher risk of residuals with non-constant variance.

How to Detect Heteroscedasticity?

A residual plot can suggest (but not prove) heteroscedasticity. Residual plots are created by:

1. Calculating the squared residuals from the fitted regression.

2. Plotting the squared residuals against an explanatory variable (one that you think is related to the errors).

3. Making a separate plot for each explanatory variable you think is contributing to the errors.

You don't have to do this manually; most statistical software (e.g., SPSS, Maple) has commands to create residual plots.
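For readers who want to see the steps above spelled out, here is a minimal sketch in plain Python. The data are invented (noise standard deviation set to 20% of x), and `fit_line` is a hypothetical helper written for this example. Instead of drawing the plot, it prints the average squared residual in the lower and upper halves of x as a text stand-in.

```python
import random

random.seed(1)  # fixed seed so the sketch is repeatable

def fit_line(xs, ys):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b

# Made-up data: noise SD is 20% of x, so the scatter widens as x grows.
xs = [float(x) for x in range(1, 201)]
ys = [3.0 + 2.0 * x + random.gauss(0.0, 0.2 * x) for x in xs]

# Step 1: squared residuals from the fitted line.
a, b = fit_line(xs, ys)
squared_residuals = [(y - (a + b * x)) ** 2 for x, y in zip(xs, ys)]

# Step 2: these (x, squared residual) pairs are what would go on the plot.
plot_points = list(zip(xs, squared_residuals))

# Text stand-in for the plot: average squared residual in each half of x.
half = len(xs) // 2
low_mean = sum(squared_residuals[:half]) / half
high_mean = sum(squared_residuals[half:]) / (len(xs) - half)
print(f"mean squared residual, lower half of x: {low_mean:.1f}")
print(f"mean squared residual, upper half of x: {high_mean:.1f}")
```

The `plot_points` pairs can be handed to any plotting tool. With several explanatory variables, the same pairing would be repeated once per variable (step 3).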

Several tests can also be run:

1. Park Test.

2. White Test.
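As an illustration of the first test, the sketch below follows the usual textbook form of the Park test: fit the regression, then regress the log of the squared residuals on the log of the explanatory variable and check whether that slope is significant. The data, the helper `fit_line`, and the variable names are assumptions made for this example. It reports the t-statistic only and leaves the p-value lookup to a table or a statistics package.

```python
import math
import random

random.seed(2)  # fixed seed so the sketch is repeatable

def fit_line(xs, ys):
    """OLS for y = a + b*x; returns (a, b, standard error of b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    ssr = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return a, b, math.sqrt(ssr / (n - 2) / sxx)

# Made-up data: noise SD is 20% of x (heteroscedastic by construction).
xs = [float(x) for x in range(20, 420)]
ys = [3.0 + 2.0 * x + random.gauss(0.0, 0.2 * x) for x in xs]

# Stage 1: fit the original regression and keep its residuals.
a, b, _ = fit_line(xs, ys)
residuals = [y - (a + b * x) for x, y in zip(xs, ys)]

# Stage 2: regress ln(residual^2) on ln(x).
log_x = [math.log(x) for x in xs]
log_e2 = [math.log(e ** 2) for e in residuals]
_, park_slope, park_se = fit_line(log_x, log_e2)
park_t = park_slope / park_se

print(f"Park slope: {park_slope:.2f}, t-statistic: {park_t:.2f}")
```

A slope that is clearly different from zero suggests the error variance depends on x. The White test is built on a similar auxiliary regression but is not shown here.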

Consequences of Heteroscedasticity?

Severely heteroscedastic data can cause a variety of problems:

- OLS will no longer give you the estimator with the smallest variance. The coefficient estimates remain unbiased, but they are less precise than they could be.

- Significance tests can come out either too high or too low, so p-values become unreliable.

- Standard errors will be biased, along with their corresponding test statistics and confidence intervals.
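The point about standard errors can be illustrated with a small Monte Carlo sketch. Everything here is assumed for the example: the noise model (standard deviation growing with x squared), the 2,000 repetitions, and the helper `slope_and_se`. It compares how much the OLS slope actually varies across repeated samples with the standard error that the usual constant-variance formula reports.

```python
import math
import random
import statistics

random.seed(3)  # fixed seed so the sketch is repeatable

def slope_and_se(xs, ys):
    """OLS slope and its conventional (constant-variance) standard error."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    a = mean_y - b * mean_x
    ssr = sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))
    return b, math.sqrt(ssr / (n - 2) / sxx)

xs = [float(x) for x in range(1, 51)]
slopes, reported_ses = [], []
for _ in range(2000):
    # Error SD grows with x squared: strongly heteroscedastic by construction.
    ys = [1.0 + 0.5 * x + random.gauss(0.0, 0.01 * x * x) for x in xs]
    b, se = slope_and_se(xs, ys)
    slopes.append(b)
    reported_ses.append(se)

mean_slope = statistics.mean(slopes)              # compare with the true 0.5
actual_sd = statistics.stdev(slopes)              # how much the slope really varies
mean_reported_se = statistics.mean(reported_ses)  # what the formula claims

print(f"mean slope: {mean_slope:.3f}")
print(f"actual SD of slope: {actual_sd:.3f}")
print(f"mean reported SE:   {mean_reported_se:.3f}")
```

In this setup the average slope stays close to the true value of 0.5, while the reported standard error understates the real variability. Other variance patterns can push the bias in the opposite direction.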

How to Deal with Heteroscedastic Data?

If your data is heteroscedastic, it is inadvisable to run an ordinary regression on the data as is. There are a couple of things you can try if you need to run a regression:

1. Give observations that produce a large scatter less weight (weighted least squares).

2. Transform the Y variable to achieve homoscedasticity. For example, use a Box-Cox transformation; a Box-Cox normality plot can help you choose the transformation parameter.
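The first option can be sketched as a weighted least squares fit. The example below assumes the error standard deviation is proportional to x, so each observation gets weight 1 / x^2. That variance model, the invented data, and the helper `weighted_fit` are illustrative assumptions; in practice the weights have to come from what you know or estimate about the error variance.

```python
import random

random.seed(4)  # fixed seed so the sketch is repeatable

def weighted_fit(xs, ys, weights):
    """Weighted least squares for y = a + b*x; returns (a, b)."""
    total_w = sum(weights)
    mean_x = sum(w * x for w, x in zip(weights, xs)) / total_w
    mean_y = sum(w * y for w, y in zip(weights, ys)) / total_w
    sxx = sum(w * (x - mean_x) ** 2 for w, x in zip(weights, xs))
    sxy = sum(w * (x - mean_x) * (y - mean_y)
              for w, x, y in zip(weights, xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b

# Made-up data: true line is y = 3 + 2x, noise SD is 20% of x.
xs = [float(x) for x in range(1, 201)]
ys = [3.0 + 2.0 * x + random.gauss(0.0, 0.2 * x) for x in xs]

# Assumed variance model: error SD proportional to x, so weight = 1 / x^2.
weights = [1.0 / (x * x) for x in xs]

a_ols, b_ols = weighted_fit(xs, ys, [1.0] * len(xs))  # equal weights = OLS
a_wls, b_wls = weighted_fit(xs, ys, weights)

print(f"OLS: intercept {a_ols:.2f}, slope {b_ols:.3f}")
print(f"WLS: intercept {a_wls:.2f}, slope {b_wls:.3f}")
```

Passing equal weights reproduces ordinary least squares, so the same function gives both fits for comparison. The weighted fit leans on the low-scatter observations, which is the effect described in item 1.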

Sources:

https://statisticsbyjim.com/regression/heteroscedasticity-regression

https://www.statisticshowto.datasciencecentral.com/heteroscedasticity-simple-definition-examples

You might also like

- Multiple Regression Analysis Using SPSS LaerdDocument14 pagesMultiple Regression Analysis Using SPSS LaerdsonuNo ratings yet

- Axioms of Data Analysis - WheelerDocument7 pagesAxioms of Data Analysis - Wheelerneuris_reino100% (1)

- Example How To Perform Multiple Regression Analysis Using SPSS StatisticsDocument14 pagesExample How To Perform Multiple Regression Analysis Using SPSS StatisticsMunirul Ula100% (1)

- Multiple Regression Analysis Using SPSS StatisticsDocument9 pagesMultiple Regression Analysis Using SPSS StatisticsAmanullah Bashir GilalNo ratings yet

- Introduction To GraphPad PrismDocument33 pagesIntroduction To GraphPad PrismHening Tirta KusumawardaniNo ratings yet

- HeteroscedasticityDocument16 pagesHeteroscedasticityKarthik BalajiNo ratings yet

- Heteros Ce Dasti CityDocument17 pagesHeteros Ce Dasti CityjazibscribNo ratings yet

- Heteroscedasticity: What Heteroscedasticity Is. Recall That OLS Makes The Assumption ThatDocument20 pagesHeteroscedasticity: What Heteroscedasticity Is. Recall That OLS Makes The Assumption Thatbisma_aliyyahNo ratings yet

- Econometrics AssignmentDocument6 pagesEconometrics AssignmentHammad SheikhNo ratings yet

- BRM StatwikiDocument55 pagesBRM StatwikiMubashir AnwarNo ratings yet

- HomoscedasticityDocument4 pagesHomoscedasticitycallanantNo ratings yet

- How Do I Test The Normality of A Variable's Distribution?Document6 pagesHow Do I Test The Normality of A Variable's Distribution?ouko kevinNo ratings yet

- Descriptive Statistics PDFDocument24 pagesDescriptive Statistics PDFKrishna limNo ratings yet

- Heteroscedasticity NotesDocument9 pagesHeteroscedasticity NotesDenise Myka TanNo ratings yet

- 1.1 - Statistical Analysis PDFDocument10 pages1.1 - Statistical Analysis PDFzoohyun91720No ratings yet

- 7-Multiple RegressionDocument17 pages7-Multiple Regressionحاتم سلطانNo ratings yet

- 10 Most Common Analytical Errors - MCAPSDocument1 page10 Most Common Analytical Errors - MCAPSUnnamed EntityNo ratings yet

- Multiple Regression Analysis Using SPSS StatisticsDocument5 pagesMultiple Regression Analysis Using SPSS StatisticssuwashiniNo ratings yet

- HeteroscedasticityDocument12 pagesHeteroscedasticityarmailgmNo ratings yet

- The Detection of Heteroscedasticity in Regression Models For Psychological DataDocument26 pagesThe Detection of Heteroscedasticity in Regression Models For Psychological DataMagofrostNo ratings yet

- REPORTING ReliabilityDocument5 pagesREPORTING ReliabilityCheasca AbellarNo ratings yet

- Data SreeningDocument9 pagesData SreeningAli razaNo ratings yet

- HeteroscedasticityDocument4 pagesHeteroscedasticityAditya GogoiNo ratings yet

- Emerging Trends & Analysis 1. What Does The Following Statistical Tools Indicates in ResearchDocument7 pagesEmerging Trends & Analysis 1. What Does The Following Statistical Tools Indicates in ResearchMarieFernandesNo ratings yet

- Statistical Methods For Cross-Sectional Data AnalysisDocument1 pageStatistical Methods For Cross-Sectional Data AnalysisQueen ANo ratings yet

- HeteroskedasticityDocument2 pagesHeteroskedasticityRupok ChowdhuryNo ratings yet

- 1preparing DataDocument6 pages1preparing DataUkkyNo ratings yet

- HeteroscedasticityDocument7 pagesHeteroscedasticityBristi RodhNo ratings yet

- Hypothesis Testing - A Visual Introduction To Statistical Significance (Scott Hartshorn)Document137 pagesHypothesis Testing - A Visual Introduction To Statistical Significance (Scott Hartshorn)laskano aborbiNo ratings yet

- Chapter 10: Multicollinearity Chapter 10: Multicollinearity: Iris WangDocument56 pagesChapter 10: Multicollinearity Chapter 10: Multicollinearity: Iris WangОлена БогданюкNo ratings yet

- Part ADocument16 pagesPart ASaumya SinghNo ratings yet

- Measure of VariabilityDocument3 pagesMeasure of VariabilityZari NovelaNo ratings yet

- Name: Adewole Oreoluwa Adesina Matric. No.: RUN/ACC/19/8261 Course Code: Eco 307Document13 pagesName: Adewole Oreoluwa Adesina Matric. No.: RUN/ACC/19/8261 Course Code: Eco 307oreoluwa adewoleNo ratings yet

- Mathematical Exploration StatisticsDocument9 pagesMathematical Exploration StatisticsanhhuyalexNo ratings yet

- 09.the Gauss-Markov Theorem and BLUE OLS Coefficient EstimatesDocument10 pages09.the Gauss-Markov Theorem and BLUE OLS Coefficient Estimatesmalanga.bangaNo ratings yet

- BRM Unit 4 ExtraDocument10 pagesBRM Unit 4 Extraprem nathNo ratings yet

- Heteroscedasticity and MulticollinearityDocument4 pagesHeteroscedasticity and MulticollinearitydivyakantmevaNo ratings yet

- Statistics - The Big PictureDocument4 pagesStatistics - The Big PicturenaokiNo ratings yet

- Do You Have Leptokurtophobia - WheelerDocument8 pagesDo You Have Leptokurtophobia - Wheelertehky63No ratings yet

- Stats Mid TermDocument22 pagesStats Mid TermvalkriezNo ratings yet

- 049 Stat 326 Regression Final PaperDocument17 pages049 Stat 326 Regression Final PaperProf Bilal HassanNo ratings yet

- CH - 5 - Econometrics UGDocument24 pagesCH - 5 - Econometrics UGMewded DelelegnNo ratings yet

- Descriptive Statistics in SpssDocument14 pagesDescriptive Statistics in SpssCarlo ToledooNo ratings yet

- Linear Models BiasDocument17 pagesLinear Models BiasChathura DewenigurugeNo ratings yet

- Notes Data AnalyticsDocument19 pagesNotes Data AnalyticsHrithik SureshNo ratings yet

- SMOGD User Manual Vsn2.6Document5 pagesSMOGD User Manual Vsn2.6Jaqueline Figuerêdo RosaNo ratings yet

- Notes 14Document33 pagesNotes 14zenith6505No ratings yet

- Spss Training MaterialDocument117 pagesSpss Training MaterialSteve ElroyNo ratings yet

- Chapter 4-Volation Final Last 2018Document105 pagesChapter 4-Volation Final Last 2018Getacher NiguseNo ratings yet

- Robust RegressionDocument7 pagesRobust Regressionharrison9No ratings yet

- Statistics For Data Science: What Is Normal Distribution?Document13 pagesStatistics For Data Science: What Is Normal Distribution?Ramesh MudhirajNo ratings yet

- 8614 (1) - 1Document17 pages8614 (1) - 1Saqib KhalidNo ratings yet

- 20151113141143introduction To Statistics-7Document29 pages20151113141143introduction To Statistics-7thinagaranNo ratings yet

- Statistical TreatmentDocument7 pagesStatistical TreatmentKris Lea Delos SantosNo ratings yet

- Standard Deviation and Its ApplicationsDocument8 pagesStandard Deviation and Its Applicationsanon_882394540100% (1)

- Heteros Kedasti CityDocument26 pagesHeteros Kedasti CitypranshuNo ratings yet

- Return On Investment: Example of The ROI Formula CalculationDocument3 pagesReturn On Investment: Example of The ROI Formula CalculationMd Azim100% (1)

- Throughput Accounting and The Theory of ConstraintsDocument8 pagesThroughput Accounting and The Theory of ConstraintsMd AzimNo ratings yet

- What Is Transfer Pricing?Document2 pagesWhat Is Transfer Pricing?Md AzimNo ratings yet

- Method of Predicting Corporate FailuresDocument1 pageMethod of Predicting Corporate FailuresMd AzimNo ratings yet

- Problems of Target CostingDocument2 pagesProblems of Target CostingMd AzimNo ratings yet

- Accounting For Long Term AssetsDocument8 pagesAccounting For Long Term AssetsMd AzimNo ratings yet

- Probability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyDocument2 pagesProbability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyMd AzimNo ratings yet

- Types of RANDOM SAMPLINGDocument2 pagesTypes of RANDOM SAMPLINGMd AzimNo ratings yet

- How To Know Fraud in AdvanceDocument6 pagesHow To Know Fraud in AdvanceMd AzimNo ratings yet

- Probability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyDocument2 pagesProbability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyMd AzimNo ratings yet

- Deductive Reasoning Vs Inductive ReasoningDocument2 pagesDeductive Reasoning Vs Inductive ReasoningMd AzimNo ratings yet

- Probability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyDocument2 pagesProbability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyMd AzimNo ratings yet

- Probability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyDocument2 pagesProbability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyMd AzimNo ratings yet

- Probit Model: Conceptual FrameworkDocument1 pageProbit Model: Conceptual FrameworkMd AzimNo ratings yet

- Probability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyDocument4 pagesProbability and Statistics: To P, or Not To P?: Module Leader: DR James AbdeyMd AzimNo ratings yet

- Artificial Neural Network Model For Business Failure Prediction of Distressed Firms in Colombo Stock Exchanged - Sujeewa - 2014 PDFDocument18 pagesArtificial Neural Network Model For Business Failure Prediction of Distressed Firms in Colombo Stock Exchanged - Sujeewa - 2014 PDFMd AzimNo ratings yet

- Accountability of Accounting StakeholdersDocument7 pagesAccountability of Accounting StakeholdersMd AzimNo ratings yet

- Between Population: Difference MeansDocument2 pagesBetween Population: Difference MeansMd AzimNo ratings yet

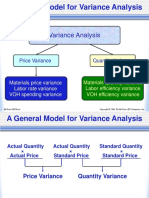

- Standard Costing and Variance AnalysisDocument11 pagesStandard Costing and Variance AnalysisMd AzimNo ratings yet

- Chapter: Amalgamation: Q: 1. What Is Amalgamation?Document4 pagesChapter: Amalgamation: Q: 1. What Is Amalgamation?Md AzimNo ratings yet

- Chapter - Goodwill ValuationDocument6 pagesChapter - Goodwill ValuationMd AzimNo ratings yet

- Bts 2Document262 pagesBts 2Chris DurellNo ratings yet

- Flexible Instruction Delivery Plan Template Group5 AutosavedDocument5 pagesFlexible Instruction Delivery Plan Template Group5 AutosavedMark Vincent Doria100% (1)

- Shapiro Wilks TestDocument8 pagesShapiro Wilks TestHadia Azhar2558No ratings yet

- MatlabDocument7 pagesMatlabamberriesNo ratings yet

- Relaksasi Nafas Dalam Menurunkan Kecemasan Pasien Pre Operasi Bedah AbdomenDocument6 pagesRelaksasi Nafas Dalam Menurunkan Kecemasan Pasien Pre Operasi Bedah AbdomenPian SaoryNo ratings yet

- The Optimization of Injection Molding Processes Using DOEDocument8 pagesThe Optimization of Injection Molding Processes Using DOECamila MatheusNo ratings yet

- أثر وحدات تعليمية بالقصص الحركية ممزوجة بالألعاب الصغيرة لتنمية بعض المهارات الحركية الأساسية الإنتقالية لدى تلاميذ السنة الثانية إبتدائي (6 7سنوات) .Document20 pagesأثر وحدات تعليمية بالقصص الحركية ممزوجة بالألعاب الصغيرة لتنمية بعض المهارات الحركية الأساسية الإنتقالية لدى تلاميذ السنة الثانية إبتدائي (6 7سنوات) .sattammashanNo ratings yet

- (AMALEAKS - BLOGSPOT.COM) Research (RSCH-121) 2nd QuarterDocument29 pages(AMALEAKS - BLOGSPOT.COM) Research (RSCH-121) 2nd QuarterJeff SongcayawonNo ratings yet

- Full Download PDF of (Ebook PDF) Statistics For Business Decision Making Analysis 2nd All ChapterDocument43 pagesFull Download PDF of (Ebook PDF) Statistics For Business Decision Making Analysis 2nd All Chaptergnanrinda8100% (7)

- 21s2 Prob Stats Assignment 2 TemplateDocument6 pages21s2 Prob Stats Assignment 2 Templateapi-647827239No ratings yet

- Handout 7Document20 pagesHandout 7Anum Nadeem GillNo ratings yet

- Instructor Materials Chapter 4: Advanced Data Analytics and Machine LearningDocument35 pagesInstructor Materials Chapter 4: Advanced Data Analytics and Machine Learningabdulaziz doroNo ratings yet

- Planning and Conducting SurveysDocument29 pagesPlanning and Conducting Surveys'Riomer G. Gonzales0% (1)

- Forecasting Steel Prices Using ARIMAX Model: A Case Study of TurkeyDocument7 pagesForecasting Steel Prices Using ARIMAX Model: A Case Study of TurkeyThe IjbmtNo ratings yet

- Open Research Paper - eWOM Influence On Customer's Purchasing DecisionDocument22 pagesOpen Research Paper - eWOM Influence On Customer's Purchasing DecisionKartavya YadavNo ratings yet

- Full Chapter Introduction To Data Analysis With R For Forensic Scientists International Forensic Science and Investigation 1St Edition Curran PDFDocument54 pagesFull Chapter Introduction To Data Analysis With R For Forensic Scientists International Forensic Science and Investigation 1St Edition Curran PDFbill.harris627100% (9)

- The Sample and Sampling ProceduresDocument30 pagesThe Sample and Sampling ProceduresDea ReanzaresNo ratings yet

- Understanding Normal Curve DistributionDocument5 pagesUnderstanding Normal Curve DistributionÇhärlöttë Çhrístíñë Dë ÇöldëNo ratings yet

- Msa PDFDocument35 pagesMsa PDFRajesh SharmaNo ratings yet

- Averages (Chapter 12) QuestionsDocument17 pagesAverages (Chapter 12) Questionssecret studentNo ratings yet

- Quantitative TechiniquesDocument38 pagesQuantitative TechiniquesJohn Nowell Diestro100% (3)

- Pengaruh Rekrutmen Terhadap Kinerja Karyawan: Roidah LinaDocument10 pagesPengaruh Rekrutmen Terhadap Kinerja Karyawan: Roidah LinaRanthika HanumNo ratings yet

- Jurnal Aset (Akuntansi Riset)Document14 pagesJurnal Aset (Akuntansi Riset)Oriana FannyNo ratings yet

- 2009 Central Limit TheoremDocument3 pages2009 Central Limit Theoremfabremil7472No ratings yet

- 12 Endogeneity, Instrumental Variables, Two Stage Least Squares, Treatment EffectsDocument70 pages12 Endogeneity, Instrumental Variables, Two Stage Least Squares, Treatment EffectsahportillaNo ratings yet

- SIM StatisticsDocument15 pagesSIM StatisticsMarife CulabaNo ratings yet

- SOL Tutorial-8Document35 pagesSOL Tutorial-8kevin dudhatNo ratings yet

- The Influence of Electronic Tax Filing SDocument24 pagesThe Influence of Electronic Tax Filing SAberaNo ratings yet

- The Growth of Mealworms On Two Different Substrates2Document8 pagesThe Growth of Mealworms On Two Different Substrates2mjfphotoNo ratings yet

- Research PaperDocument8 pagesResearch Paperapi-606937440No ratings yet